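There is a discussion on Stack Overflow about fetching random records with Django.

The gist of it:

Fetching 2 records with Record.objects.order_by('?')[:2] causes performance problems, for the following reasons: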

“

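For tables with a substantial number of rows, this approach is extremely bad. It produces an ORDER BY RAND() SQL query. As an example, here is how MySQL handles that query (other databases behave much the same); imagine a table with a billion rows: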

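- To do ORDER BY RAND(), it needs a RAND() column to sort on.

- To have a RAND() column, it needs a new table, because the existing table has no such column.

- For that new table, MySQL creates a new temporary table with the new column and copies all billion existing rows into it.

- Once that is built, it does as you asked and runs RAND() on every row to fill in the value. Yes, you have just told MySQL to generate a billion random numbers. That takes a while :)

- Hours or days later, when that is done, it has to sort. Yes, you have told MySQL to sort a billion-row table, the worst kind of table there is (worst because the sort key is random).

- Days or weeks later, when the sort finishes, it dutifully grabs the two measly rows you actually wanted and hands them back. Nice job. ;)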

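Note: in passing, MySQL first tries to build the temporary table in memory. When memory runs out, it puts everything on disk, so an I/O bottleneck for nearly the whole process adds insult to injury.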

Skeptics can look at the query the Python code generates to confirm it is ORDER BY RAND(), then Google "order by rand()" (with the quotes).

A better approach is to replace this one expensive query with three cheap ones:

    from random import randint

    last = MyModel.objects.count() - 1
    # A simple way to get two distinct random indices
    index1 = randint(0, last)
    index2 = randint(0, last - 1)
    if index2 == index1:
        index2 = last
    MyObj1 = MyModel.objects.all()[index1]
    MyObj2 = MyModel.objects.all()[index2]

”
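The index trick in the quoted answer can be checked on its own, without a database. The sketch below is written for Python 3, and the function name `two_distinct_indices` is made up for illustration. It reproduces the three lines that pick `index1` and `index2`, and shows that the two indices always differ and that every ordered pair of distinct indices turns up.

```python
import random
from collections import Counter


def two_distinct_indices(last, rng=random):
    """Pick two different indices in [0, last], as in the quoted answer.

    `last` is count - 1, so the table must hold at least two rows.
    """
    index1 = rng.randint(0, last)
    # Draw the second index from one fewer slot...
    index2 = rng.randint(0, last - 1)
    # ...and if it collides with the first, move it to the slot left out of the draw.
    if index2 == index1:
        index2 = last
    return index1, index2


if __name__ == "__main__":
    rng = random.Random(0)
    last = 3  # a 4-row table
    counts = Counter(two_distinct_indices(last, rng) for _ in range(120000))
    # 12 ordered pairs of distinct indices, each seen roughly 10,000 times.
    print(sorted(counts))
    print(min(counts.values()), max(counts.values()))
```

Because the second draw has one slot fewer and a collision is redirected to exactly that missing slot, each ordered pair of distinct indices is equally likely.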

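Following the approach Manganeez describes above, the corresponding code for fetching n records would be:

    import random

    sample = random.sample(xrange(Record.objects.count()), n)
    result = [Record.objects.all()[i] for i in sample]

and the simpler version that samples the whole QuerySet is:

    result = random.sample(Record.objects.all(), n)

I asked the experts on Stack Overflow about the performance of these (and got downvoted and lectured for it =。=):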

“

Record.objects.count() gets translated into a fairly lightweight SQL query:

SELECT COUNT(*) FROM TABLE

Record.objects.all()[0] also gets translated into a very lightweight SQL query:

SELECT * FROM TABLE LIMIT 1

SELECT * FROM TABLE

SELECT * FROM table LIMIT 20; // or something similar

Any time you convert a QuerySet into a list, that is when it becomes expensive.

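If I'm not mistaken, the sample method in this example will convert the QuerySet into a list.

So if you do result = random.sample(Record.objects.all(), n), the entire QuerySet gets converted into a list and then sampled from.

Imagine you have a billion rows. Would you rather hold them in a list with a billion elements, or query them one at a time?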

”
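The point about converting the QuerySet into a list can be illustrated without a database. In the Python 3 sketch below, `FakeQuerySet`, `method2` and `method3` are hypothetical names made up for illustration; the fake does no real I/O and only counts how many rows become Python objects. Its one assumption about Django is that calling `len()` on an unevaluated QuerySet loads the entire result set (which is what `random.sample` triggers), while indexing an unevaluated QuerySet with an int fetches a single row.

```python
import random
from collections.abc import Sequence


class FakeQuerySet(Sequence):
    """Hypothetical stand-in for a lazy QuerySet over a table of n_rows rows.

    It does no real I/O; it only counts how many rows become Python objects.
    """

    def __init__(self, n_rows):
        self.n_rows = n_rows
        self.rows_materialized = 0
        self._cache = None

    def count(self):
        # Like SELECT COUNT(*): the database does the counting, Python builds nothing.
        return self.n_rows

    def _fetch_all(self):
        # Like evaluating the whole QuerySet: every row becomes an object.
        if self._cache is None:
            self._cache = [{"id": i + 1} for i in range(self.n_rows)]
            self.rows_materialized += self.n_rows

    def __len__(self):
        # Assumption mirrored from Django: len() evaluates the full result set.
        self._fetch_all()
        return len(self._cache)

    def __getitem__(self, index):
        if self._cache is not None:
            return self._cache[index]
        if not 0 <= index < self.n_rows:
            raise IndexError(index)
        # Unevaluated and indexed with an int: one row, like LIMIT 1 OFFSET index.
        self.rows_materialized += 1
        return {"id": index + 1}


def method2(qs, n):
    # One COUNT(*), then n single-row lookups at random offsets.
    offsets = random.sample(range(qs.count()), n)
    return [qs[i] for i in offsets]


def method3(qs, n):
    # random.sample calls len(qs) first, which loads every row.
    return random.sample(qs, n)


if __name__ == "__main__":
    for method in (method2, method3):
        qs = FakeQuerySet(100000)
        method(qs, 20)
        print(method.__name__, "materialized", qs.rows_materialized, "rows")
```

Run as a script, this reports 20 materialized rows for `method2` and 100000 for `method3`: the second approach pays for the whole table on the Python side, no matter how few records it returns.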

“

How fast .count is depends on the database, and Postgres's .count is notoriously slow.

”

As someone once said: if you want to know the taste of a pear, you must change the pear by eating it yourself.

So I created a new app in an existing test project, with MySQL as the database:

    D:\PyWorkspace\DjangoTest>python manage.py startapp randomrecords

The model (randomrecords/models.py):

    from django.db import models


    class Record(models.Model):
        """A minimal model used for the random-record benchmark."""
        id = models.AutoField(primary_key=True)
        content = models.CharField(max_length=16)

        def __str__(self):
            return "id:%s content:%s" % (self.id, self.content)

        def __unicode__(self):
            return u"id:%s content:%s" % (self.id, self.content)

Add 10,000 rows of data:

    D:\PyWorkspace\DjangoTest>python manage.py syncdb
    Creating tables ...
    Creating table randomrecords_record
    Installing custom SQL ...
    Installing indexes ...
    Installed 0 object(s) from 0 fixture(s)

    D:\PyWorkspace\DjangoTest>python manage.py shell
    Python 2.7.5 (default, May 15 2013, 22:44:16) [MSC v.1500 64 bit (AMD64)] on win32
    Type "help", "copyright", "credits" or "license" for more information.
    (InteractiveConsole)
    >>> from randomrecords.models import Record
    >>> for i in xrange(10000):
    ...     Record.objects.create(content = 'c of %s' % i).save()
    ...

I first wrote a script and ran it in the manage.py shell, and the results shocked me.

I couldn't believe it, so I also wrote some views and turned on logging to show the queries.

Here are the views:

    import datetime
    import random

    from django.http import HttpResponse

    from randomrecords.models import Record


    def test1(request):
        start = datetime.datetime.now()
        result = Record.objects.order_by('?')[:20]
        l = list(result)  # QuerySets are lazy; force evaluation by converting to a list
        end = datetime.datetime.now()
        return HttpResponse("time: <br/> %s" % (end - start))


    def test2(request):
        start = datetime.datetime.now()
        sample = random.sample(xrange(Record.objects.count()), 20)
        # Indexing a QuerySet with an int runs one LIMIT 1 OFFSET i query per element
        result = [Record.objects.all()[i] for i in sample]
        l = list(result)
        end = datetime.datetime.now()
        return HttpResponse("time: <br/> %s" % (end - start))


    def test3(request):
        start = datetime.datetime.now()
        # random.sample calls len() on the QuerySet, which loads every row
        result = random.sample(Record.objects.all(), 20)
        l = list(result)
        end = datetime.datetime.now()
        return HttpResponse("time: <br/> %s" % (end - start))

The results are below. The first line is the time shown on the page; the rest are the SQL statements the QuerySet actually executed:

test1:

    time: 0:00:00.012000
    (0.009) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` ORDER BY RAND() LIMIT 20; args=()
    [05/Dec/2013 17:48:19] "GET /dbtest/test1 HTTP/1.1" 200 775

test2:

    time: 0:00:00.055000
    (0.002) SELECT COUNT(*) FROM `randomrecords_record`; args=()
    (0.002) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 6593; args=()
    (0.001) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 2570; args=()
    (0.001) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 620; args=()
    (0.001) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 5814; args=()
    (0.003) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 6510; args=()
    (0.002) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 3536; args=()
    (0.001) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 3362; args=()
    (0.003) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 8948; args=()
    (0.002) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 7723; args=()
    (0.001) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 2374; args=()
    (0.002) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 8269; args=()
    (0.002) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 4370; args=()
    (0.002) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 6953; args=()
    (0.001) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 1441; args=()
    (0.000) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 772; args=()
    (0.002) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 4323; args=()
    (0.002) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 8139; args=()
    (0.002) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 7441; args=()
    (0.001) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 1306; args=()
    (0.001) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 5462; args=()
    [05/Dec/2013 17:50:34] "GET /dbtest/test2 HTTP/1.1" 200 777

test3:

    time: 0:00:00.156000
    (0.032) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record`; args=()
    [05/Dec/2013 17:51:29] "GET /dbtest/test3 HTTP/1.1" 200 774

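Unbelievably, on a 10,000-row MySQL table, method 1 is the most efficient.

Whether you look at the page time (12 ms) or at the SQL execution time (9 ms), method 1 is far ahead of the other methods.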
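If you want the page timings as milliseconds (as in the `time: 98` output for the 210,000-row run), beware that `timedelta.microseconds` holds only the sub-second part of the difference. An expression such as `(end - start).microseconds / 1000` therefore silently drops whole seconds once a request takes longer than one second, while `total_seconds()` does not. A small Python sketch; `elapsed_ms` is a hypothetical helper name:

```python
from datetime import timedelta


def elapsed_ms(delta):
    """Convert a timedelta into whole milliseconds, including whole seconds."""
    return int(round(delta.total_seconds() * 1000))


if __name__ == "__main__":
    # The 1,066,768-row ORDER BY RAND() run reported 0:00:02.197000.
    d = timedelta(seconds=2, microseconds=197000)
    print(d.microseconds // 1000)  # prints 197: the sub-second part only
    print(elapsed_ms(d))           # prints 2197: the full elapsed time
```

`total_seconds()` is also available on Python 2.7, the version used in this test.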

Even when the data grows to 210,000 rows:

    time: 98
    (0.094) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` ORDER BY RAND() LIMIT 20; args=()
    [05/Dec/2013 19:18:59] "GET /dbtest/test1 HTTP/1.1" 200 14

    time: 0:00:00.668000
    // I missed this at the time: the COUNT query line got dropped here
    (0.045) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 176449; args=()
    (0.016) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 68082; args=()
    (0.036) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 145571; args=()
    (0.033) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 111029; args=()
    (0.043) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 169675; args=()
    (0.046) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 186234; args=()
    (0.043) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 167233; args=()
    (0.015) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 54404; args=()
    (0.036) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 140395; args=()
    (0.004) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 13128; args=()
    (0.039) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 153695; args=()
    (0.034) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 131863; args=()
    (0.021) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 82785; args=()
    (0.015) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 57253; args=()
    (0.021) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 77836; args=()
    (0.049) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 199567; args=()
    (0.002) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 3867; args=()
    (0.027) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 104470; args=()
    (0.026) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 107058; args=()
    (0.043) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 150979; args=()
    [05/Dec/2013 19:21:33] "GET /dbtest/test2 HTTP/1.1" 200 15

    time: 0:00:00.781000
    [05/Dec/2013 19:23:01] "GET /dbtest/test3 HTTP/1.1" 200 15
    (0.703) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record`; args=()
    [05/Dec/2013 19:23:06] "GET /dbtest/test3 HTTP/1.1" 200 15

Next, the data was increased again, into the millions: 1,066,768 rows.

    time: 0:00:02.197000
    (2.193) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` ORDER BY RAND() LIMIT 20; args=()
    [05/Dec/2013 20:00:55] "GET /dbtest/test1 HTTP/1.1" 200 26

    time: 0:00:02.659000
    (0.204) SELECT COUNT(*) FROM `randomrecords_record`; args=()
    (0.180) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 703891; args=()
    (0.038) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 156668; args=()
    (0.013) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 50742; args=()
    (0.031) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 121107; args=()
    (0.033) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 130565; args=()
    (0.017) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 66225; args=()
    (0.234) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 922479; args=()
    (0.267) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 1027166; args=()
    (0.189) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 765499; args=()
    (0.009) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 31569; args=()
    (0.233) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 934055; args=()
    (0.264) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 1052741; args=()
    (0.155) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 621692; args=()
    (0.014) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 52388; args=()
    (0.199) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 759669; args=()
    (0.170) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 655598; args=()
    (0.035) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 139709; args=()
    (0.228) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 919480; args=()
    (0.104) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 422051; args=()
    (0.017) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 67549; args=()
    [05/Dec/2013 20:00:45] "GET /dbtest/test2 HTTP/1.1" 200 26

    time: 0:00:19.651000
    (3.645) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record`; args=()
    [05/Dec/2013 20:02:50] "GET /dbtest/test3 HTTP/1.1" 200 26

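The third method takes unacceptably long, but interestingly the SQL statement itself took "only" 3.6 seconds; most of the time was spent in Python.

Both the second and the third method call random.sample over a population of about a million items, yet the second finishes in under 3 seconds. So the sampling itself is not what is slow: a large share of the third method's time must go into turning the SELECTed rows into Django objects.

From here on, the third method is no longer tested.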
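The reasoning above can be reproduced on the Python side alone. This Python 3 sketch is only an approximation: `Row`, `sample_offsets` and `sample_objects` are hypothetical names, the tuples stand in for rows a database driver might return, and a real Django model instance does more work per row than this tiny class, so the real gap is likely larger. Timings will vary by machine.

```python
import random
import time


class Row(object):
    """Hypothetical stand-in for a model instance with two fields."""

    def __init__(self, row_id, content):
        self.row_id = row_id
        self.content = content


def sample_offsets(n_rows, k):
    # Method 2's Python-side work: pick k distinct offsets from a lazy range.
    return random.sample(range(n_rows), k)


def sample_objects(rows, k):
    # Method 3's Python-side work: wrap every fetched row in an object, then sample.
    objects = [Row(row_id, content) for row_id, content in rows]
    return random.sample(objects, k)


if __name__ == "__main__":
    n_rows = 200000
    # Pretend these tuples are what the database returned for SELECT id, content.
    rows = [(i + 1, "c of %d" % i) for i in range(n_rows)]

    t0 = time.perf_counter()
    sample_offsets(n_rows, 20)
    t1 = time.perf_counter()
    sample_objects(rows, 20)
    t2 = time.perf_counter()

    print("sample offsets from range:  %.4f s" % (t1 - t0))
    print("build objects, then sample: %.4f s" % (t2 - t1))
```

Sampling 20 numbers from a range touches only 20 positions, while the second path has to allocate one object per row before it can sample anything.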

Finally, the data was increased to 5,195,536 rows.

    time: 0:00:22.278000
    (22.275) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` ORDER BY RAND() LIMIT 20; args=()
    [05/Dec/2013 21:46:33] "GET /dbtest/test1 HTTP/1.1" 200 26

    time: 0:00:33.319000
    (1.393) SELECT COUNT(*) FROM `randomrecords_record`; args=()
    (3.201) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 4997880; args=()
    (1.229) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 2169311; args=()
    (0.445) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 1745307; args=()
    (1.306) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 3233861; args=()
    (1.881) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 3946647; args=()
    (1.624) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 3534377; args=()
    (1.068) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 1684337; args=()
    (0.902) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 2607361; args=()
    (2.938) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 4872494; args=()
    (0.493) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 851494; args=()
    (3.275) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 5182414; args=()
    (0.946) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 1684670; args=()
    (0.701) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 1819730; args=()
    (0.915) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 1626221; args=()
    (1.809) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 3638682; args=()
    (3.237) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 4801027; args=()
    (1.187) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 1955843; args=()
    (2.736) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 4835733; args=()
    (1.705) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 2756641; args=()
    (0.286) SELECT `randomrecords_record`.`id`, `randomrecords_record`.`content` FROM `randomrecords_record` LIMIT 1 OFFSET 1117426; args=()

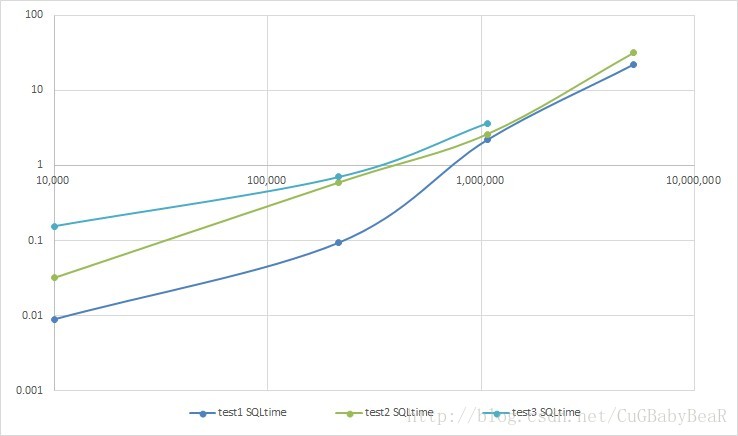

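As the row count grows, both methods become completely unacceptably slow, and their times end up of the same order (22.3 s for method 1 versus 33.3 s for method 2 at about 5.2 million rows).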

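Note that in MySQL, on a large table, the larger the OFFSET, the slower the query, because the server has to step over the first OFFSET rows before it can return the one you asked for. There may be other ways to speed up SELECTs with large offsets, but Django evidently does not use them: it simply emits LIMIT 1 OFFSET n. If this cost could be reduced, method 2 would clearly beat method 1.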
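A back-of-the-envelope estimate shows why method 2 does not pull ahead on the big table. This Python sketch assumes that MySQL reads and discards k rows to answer `LIMIT 1 OFFSET k` (no index shortcut) and that ORDER BY RAND() reads every row once before sorting; the function names are made up for illustration. Under those assumptions, 20 random offsets walk through about 20 × N / 2 = 10 N rows on average, against N rows for a single ORDER BY RAND() pass, which is consistent with the timings above.

```python
import random


def offset_rows_scanned(offsets):
    """Rows read for a batch of `LIMIT 1 OFFSET k` queries, assuming each query
    reads and discards k rows before returning one more."""
    return sum(k + 1 for k in offsets)


def expected_offset_scan(n_rows, k):
    # Each sampled offset is uniform over [0, n_rows - 1], so its mean is (n_rows - 1) / 2.
    return k * ((n_rows - 1) / 2 + 1)


if __name__ == "__main__":
    n_rows, k = 5195536, 20
    offsets = random.sample(range(n_rows), k)
    print("rows read by this draw of method 2:", offset_rows_scanned(offsets))
    print("expected rows read by method 2: %.0f" % expected_offset_scan(n_rows, k))
    print("rows read by one ORDER BY RAND() pass:", n_rows)
```

The estimate ignores the sort that ORDER BY RAND() still has to do, so it only explains why the offset queries are not cheap, not which method wins at a given size.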

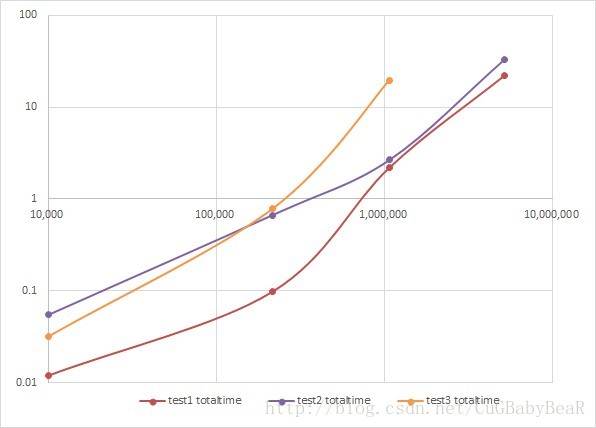

Attached is a chart of SQL time and total time against row count for the three methods.

To sum up: with Django and a MySQL database, when the table holds fewer than about a million rows, fetching random records with

    Record.objects.order_by('?')[:2]

performs no worse than

    sample = random.sample(xrange(Record.objects.count()), n)
    result = [Record.objects.all()[i] for i in sample]
