******** Preparing to install Hadoop ********

1. Configure hosts and hostname

Assume three servers with the following IP addresses:

172.17.253.217

172.17.253.216

172.17.253.67

On all three servers, change /etc/hosts to contain:

172.17.253.217 Master

172.17.253.216 Slave1

172.17.253.67 Slave2

172.17.253.217 namenode

172.17.253.216 datanode1

172.17.253.67 datanode2
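The hosts change can be scripted so that the same command works unchanged on all three servers. This is a minimal sketch, assuming bash and root access; add_cluster_hosts is a hypothetical helper name, and it puts both names of an IP on one line, which /etc/hosts treats the same as the two separate lines above.

```bash
# Hypothetical helper: append this cluster's name mappings to a hosts file.
# Each IP gets one line carrying both of its names; lines that are already
# present are skipped, so the function can be re-run safely.
add_cluster_hosts() {
  local hosts_file="${1:-/etc/hosts}" entry
  for entry in \
    "172.17.253.217 Master namenode" \
    "172.17.253.216 Slave1 datanode1" \
    "172.17.253.67 Slave2 datanode2"; do
    # -x: whole-line match, -F: fixed string; a missing file counts as "not present"
    grep -qxF "$entry" "$hosts_file" 2>/dev/null || echo "$entry" >> "$hosts_file"
  done
}
```

Run add_cluster_hosts with no argument as root on each server; passing a file path instead lets you try it on a scratch copy first.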

On 172.17.253.217, set the hostname in /etc/sysconfig/network to Master

On 172.17.253.216, set the hostname in /etc/sysconfig/network to Slave1

On 172.17.253.67, set the hostname in /etc/sysconfig/network to Slave2

Reboot the servers after making these changes.
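The hostname edit can also be done without an editor. This sketch assumes a RHEL/CentOS 6-style system (as implied by /etc/sysconfig/network) and GNU sed; set_network_hostname is a hypothetical helper.

```bash
# Hypothetical helper: set HOSTNAME= in a RHEL/CentOS 6-style network file,
# replacing an existing HOSTNAME line or appending one if there is none.
set_network_hostname() {
  local name="$1" file="${2:-/etc/sysconfig/network}"
  if grep -q '^HOSTNAME=' "$file"; then
    # GNU sed: edit the file in place
    sed -i "s/^HOSTNAME=.*/HOSTNAME=${name}/" "$file"
  else
    echo "HOSTNAME=${name}" >> "$file"
  fi
}
```

For example, on 172.17.253.216 run set_network_hostname Slave1. Running hostname Slave1 as well applies the name to the running system immediately; the reboot described above has the same effect.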

2. Configure passwordless SSH trust between the Linux servers

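Generate a key pair on each of the three servers and merge the public keys into authorized_keys, so that every machine ends up with the same merged authorized_keys file. All three servers must use the same account when generating the keys, and that account needs permission to operate on the Hadoop and HBase installation directories and on authorized_keys. Steps: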

1) Generate a key pair on each server (the session below is from Master; run the same commands on 172.17.253.216 and 172.17.253.67)

[root@master ~]# mkdir ~/.ssh

mkdir: cannot create directory '/root/.ssh': File exists

[root@master ~]#

[root@master ~]# chmod 700 ~/.ssh

[root@master ~]#

[root@master ~]# cd ~/.ssh

[root@master .ssh]#

[root@master .ssh]# ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

9b:25:ae:71:4e:38:fa:a1:31:3c:02:55:c6:fe:3c:c2 root@master

The key's randomart image is:

+--[ RSA 2048]----+

| .o |

| o. |

| .. |

| . . |

|. . o S . |

| . .E +o = |

| . =.=.* |

| . * O |

| o.o . |

+-----------------+

[root@master .ssh]#

[root@master .ssh]#

[root@master .ssh]# ls

authorized_key id_rsa id_rsa.pub known_hosts

2) Merge the public keys of the three servers and distribute the merged file to every machine. This only needs to be done on Master.

[root@master .ssh]# ll

total 16

-rwxrwxrwx. 1 root root 393 Feb 19 11:46 authorized_key

-rw-r--r--. 1 root root 0 Feb 19 11:38 authorized_keys

-rw-------. 1 root root 1675 Feb 19 11:25 id_rsa

-rw-r--r--. 1 root root 393 Feb 19 11:25 id_rsa.pub

-rw-r--r--. 1 root root 1015 Feb 19 11:43 known_hosts

[root@master .ssh]#

[root@master .ssh]#

[root@master .ssh]# ssh Master cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

The authenticity of host 'master (172.17.253.217)' can't be established.

RSA key fingerprint is 88:9c:28:06:93:c7:18:ce:db:a0:c4:91:77:a1:60:27.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'master,172.17.253.217' (RSA) to the list of known hosts.

root@master's password:

[root@master .ssh]#

[root@master .ssh]# ls

authorized_key authorized_keys id_rsa id_rsa.pub known_hosts

[root@master .ssh]# ll

total 20

-rwxrwxrwx. 1 root root 393 Feb 19 11:46 authorized_key

-rw-r--r--. 1 root root 393 Feb 19 11:55 authorized_keys

-rw-------. 1 root root 1675 Feb 19 11:25 id_rsa

-rw-r--r--. 1 root root 393 Feb 19 11:25 id_rsa.pub

-rw-r--r--. 1 root root 1418 Feb 19 11:55 known_hosts

[root@master .ssh]#

[root@master .ssh]# ssh Slave1 cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

The authenticity of host 'slave1 (172.17.253.67)' can't be established.

RSA key fingerprint is 3c:6f:a0:7f:e0:4b:83:cc:3e:dd:7e:49:f8:21:b2:ab.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'slave1' (RSA) to the list of known hosts.

root@slave1's password:

[root@master .ssh]#

[root@master .ssh]# ll

total 20

-rwxrwxrwx. 1 root root 393 Feb 19 11:46 authorized_key

-rw-r--r--. 1 root root 786 Feb 19 11:56 authorized_keys

-rw-------. 1 root root 1675 Feb 19 11:25 id_rsa

-rw-r--r--. 1 root root 393 Feb 19 11:25 id_rsa.pub

-rw-r--r--. 1 root root 1806 Feb 19 11:55 known_hosts

[root@master .ssh]#

[root@master .ssh]# ssh Slave2 cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

The authenticity of host 'slave2 (172.17.253.216)' can't be established.

RSA key fingerprint is 1e:0b:cd:0d:f6:d4:89:1d:3f:51:be:bb:c6:36:a2:84.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'slave2' (RSA) to the list of known hosts.

root@slave2's password:

[root@master .ssh]#

[root@master .ssh]# ll

total 20

-rwxrwxrwx. 1 root root 393 Feb 19 11:46 authorized_key

-rw-r--r--. 1 root root 1179 Feb 19 11:56 authorized_keys

-rw-------. 1 root root 1675 Feb 19 11:25 id_rsa

-rw-r--r--. 1 root root 393 Feb 19 11:25 id_rsa.pub

-rw-r--r--. 1 root root 2194 Feb 19 11:56 known_hosts

[root@master .ssh]#

[root@master .ssh]#

[root@master .ssh]# scp ~/.ssh/authorized_keys 172.17.253.216:~/.ssh/

root@172.17.253.216's password:

authorized_keys 100% 1179 1.2KB/s 00:00

[root@master .ssh]#

[root@master .ssh]# scp ~/.ssh/authorized_keys 172.17.253.67:~/.ssh/

root@172.17.253.67's password:

authorized_keys 100% 1179 1.2KB/s 00:00

[root@master .ssh]#

[root@master .ssh]#
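The merge in step 2 can be condensed into a helper that combines the collected public keys, drops duplicate lines (useful if the step is repeated) and applies the usual permissions: with sshd's default StrictModes, authorized_keys is ignored if it or ~/.ssh is writable by group or others. This is a sketch; merge_authorized_keys and the /tmp/*.pub file names in the usage note are hypothetical.

```bash
# Hypothetical helper: merge public-key files into <ssh_dir>/authorized_keys,
# keeping each key line only once, then set 700 on the directory and 600 on the file.
merge_authorized_keys() {
  local ssh_dir="$1" target
  shift
  target="$ssh_dir/authorized_keys"
  mkdir -p "$ssh_dir"
  chmod 700 "$ssh_dir"
  touch "$target"
  # Existing keys first, then the new ones; skip blank lines and repeats.
  cat "$target" "$@" | awk 'NF && !seen[$0]++' > "$target.tmp"
  mv "$target.tmp" "$target"
  chmod 600 "$target"
}
```

For example, on Master fetch each host's key with ssh Slave1 cat .ssh/id_rsa.pub > /tmp/Slave1.pub (and likewise for Master and Slave2), run merge_authorized_keys ~/.ssh /tmp/*.pub, and then copy the result to the slaves with scp as shown above.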

3) The three machines can now log in to each other without a password, for example:

[root@master .ssh]# ssh -t -lroot 172.17.253.216

Last login: Wed Feb 19 11:12:49 2014 from 172.18.66.20

[root@Slave1 ~]#
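To check every host at once, ssh can be run with BatchMode=yes, which makes it fail instead of prompting for a password or for confirmation of an unknown host key (an unknown key in batch mode produces the same "Host key verification failed" error that appears in the start-up output later). A sketch; check_passwordless_ssh is a hypothetical helper, and each host should have been connected to once interactively so that its key is already in known_hosts.

```bash
# Hypothetical helper: try a non-interactive login to each host and report the result.
# Returns non-zero if any host fails.
check_passwordless_ssh() {
  local host failed=0
  for host in "$@"; do
    # BatchMode=yes: fail instead of prompting for a password or host-key confirmation.
    if ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" true </dev/null; then
      echo "$host: ok"
    else
      echo "$host: FAILED"
      failed=1
    fi
  done
  return "$failed"
}
```

Use the same names the Hadoop scripts will use, e.g. check_passwordless_ssh Master 172.17.253.216 172.17.253.67, since the slaves file below lists the slaves by IP.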

********* Installing Hadoop *********

Deploy Hadoop (the rest of this guide assumes it is installed under /export/hadoop)

[root@Slave1 hadoop]# tar -xzvf hadoop-2.2.0.tar.gz

[root@Slave1 etc]# cd /export/hadoop/etc/hadoop/

[root@Slave1 hadoop]#

1. Edit /export/hadoop/etc/hadoop/hdfs-site.xml

<configuration>

<property>

<name>dfs.name.dir</name>

<value>/export/hadoop/name</value>

</property>

<property>

<name>dfs.data.dir</name>

<value>/export/hadoop/data</value>

</property>

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

</configuration>

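dfs.name.dir is the directory where the NameNode stores its metadata (in Hadoop 2 the preferred name is dfs.namenode.name.dir; the old name still works)

dfs.data.dir is the directory where the DataNodes store block data (preferred name in Hadoop 2: dfs.datanode.data.dir)

dfs.replication is the number of replicas kept for each block; it must not exceed the number of DataNodes (two slaves here)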
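The same settings can be written with the Hadoop 2 property names and with the directories given as file:// URIs, which is what the "should be specified as a URI" warning in the format output of step 7 asks for. A sketch assuming the /export/hadoop layout used throughout; write_hdfs_site is a hypothetical helper and overwrites its target file.

```bash
# Hypothetical helper: write hdfs-site.xml using the Hadoop 2 property names
# and file:// URIs for the storage directories.
write_hdfs_site() {
  local out="${1:-/export/hadoop/etc/hadoop/hdfs-site.xml}"
  local replication="${2:-2}"   # must not exceed the number of DataNodes
  cat > "$out" <<EOF
<?xml version="1.0"?>
<configuration>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>file:///export/hadoop/name</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>file:///export/hadoop/data</value>
  </property>
  <property>
    <name>dfs.replication</name>
    <value>${replication}</value>
  </property>
</configuration>
EOF
}
```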

2. Edit /export/hadoop/etc/hadoop/hadoop-env.sh

export JAVA_HOME=/opt/jdk1.6.0_24

Set JAVA_HOME to the JDK installation path on the server, and remove the leading # if the line is commented out
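Because the Hadoop scripts source hadoop-env.sh, the change should be a plain assignment that prints nothing. A sketch of making it with GNU sed; set_java_home is a hypothetical helper, and it uses | as the sed delimiter because the JDK path contains slashes.

```bash
# Hypothetical helper: make hadoop-env.sh contain "export JAVA_HOME=<jdk>",
# replacing an existing (possibly commented-out) line or appending one.
set_java_home() {
  local jdk="$1" env_file="${2:-/export/hadoop/etc/hadoop/hadoop-env.sh}"
  if grep -qE '^[[:space:]]*#?[[:space:]]*export JAVA_HOME=' "$env_file"; then
    sed -i -E "s|^[[:space:]]*#?[[:space:]]*export JAVA_HOME=.*|export JAVA_HOME=${jdk}|" "$env_file"
  else
    echo "export JAVA_HOME=${jdk}" >> "$env_file"
  fi
}
```

For this guide: set_java_home /opt/jdk1.6.0_24.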

3. Edit /export/hadoop/etc/hadoop/core-site.xml

<configuration>

<property>

<name>fs.default.name</name>

<value>hdfs://172.17.253.217:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/export/hadoop/tmp</value>

</property>

</configuration>

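fs.default.name is the NameNode address and port (Hadoop 2 prefers the name fs.defaultFS; the old name still works). Because /etc/hosts maps Master to 172.17.253.217, hdfs://Master:9000 works here as well.

hadoop.tmp.dir is the directory for Hadoop's temporary files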
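A sketch of writing core-site.xml with the Hadoop 2 property name fs.defaultFS; write_core_site is a hypothetical helper, the NameNode host is passed as a parameter, and the port 9000 and tmp directory follow the values above.

```bash
# Hypothetical helper: write core-site.xml for this cluster.
# fs.defaultFS is the Hadoop 2 name for the deprecated fs.default.name.
write_core_site() {
  local namenode="$1" out="${2:-/export/hadoop/etc/hadoop/core-site.xml}"
  cat > "$out" <<EOF
<?xml version="1.0"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://${namenode}:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/export/hadoop/tmp</value>
  </property>
</configuration>
EOF
}
```

For example write_core_site 172.17.253.217 or write_core_site Master; once Hadoop is installed, hdfs getconf -confKey fs.defaultFS shows the value the scripts actually see.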

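4. Edit /export/hadoop/etc/hadoop/masters (e.g. with vi) so that it contains:

172.17.253.217

(In Hadoop 2.x, start-dfs.sh does not read this file; it takes the SecondaryNameNode host from dfs.namenode.secondary.http-address, whose default is 0.0.0.0:50090. That is why step 8 starts the secondary namenode on 0.0.0.0.)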

5. Edit /export/hadoop/etc/hadoop/slaves (e.g. with vi), one host per line:

172.17.253.216

172.17.253.67

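6. Once Hadoop is set up on Master, copy it to the slaves with scp. Copying into /export/ avoids ending up with a nested /export/hadoop/hadoop if the target directory already exists:

scp -r /export/hadoop 172.17.253.67:/export/

scp -r /export/hadoop 172.17.253.216:/export/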
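With more slaves, the copy can be driven from the slaves file itself. A sketch; distribute_dir and its DRY_RUN switch are hypothetical, and the host file is read one host per line with # comments and blank lines ignored.

```bash
# Hypothetical helper: copy a directory to the same parent path on every host
# listed in a host file. Set DRY_RUN=1 to print the scp commands instead.
distribute_dir() {
  local src="${1%/}" host_file="$2" parent host
  parent="$(dirname "$src")"
  # Strip comments and blank lines, then copy to each remaining host.
  sed 's/#.*$//;/^[[:space:]]*$/d' "$host_file" | while read -r host; do
    if [ -n "${DRY_RUN:-}" ]; then
      echo scp -r "$src" "${host}:${parent}/"
    else
      scp -r "$src" "${host}:${parent}/" </dev/null
    fi
  done
}
```

For example: distribute_dir /export/hadoop /export/hadoop/etc/hadoop/slaves, after checking the commands with DRY_RUN=1 first.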

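7. On Master, run the following commands to format the NameNode, which initializes its name directory (the DataNodes initialize their data directories when they first start). As the output notes, hdfs namenode -format is the non-deprecated form of this command.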

[root@master ~]# cd /export/hadoop/bin

[root@master bin]# ./hadoop namenode -format

Output:

[root@master bin]# ./hadoop namenode -format

export JAVA_HOME=/opt/jdk1.6.0_24

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

export JAVA_HOME=/opt/jdk1.6.0_24

14/02/20 14:50:16 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = master/172.17.253.217

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 2.2.0

STARTUP_MSG: classpath = /export/hadoop/etc/hadoop:/export/hadoop/share/hadoop/common/lib/zookeeper-3.4.5.jar:... (remaining Hadoop common, hdfs, yarn and mapreduce jar paths omitted)

STARTUP_MSG: build = https://svn.apache.org/repos/asf/hadoop/common -r 1529768; compiled by 'hortonmu' on 2013-10-07T06:28Z

STARTUP_MSG: java = 1.6.0_24

************************************************************/

14/02/20 14:50:16 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]

14/02/20 14:50:17 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

14/02/20 14:50:18 WARN common.Util: Path /export/hadoop/name should be specified as a URI in configuration files. Please update hdfs configuration.

14/02/20 14:50:18 WARN common.Util: Path /export/hadoop/name should be specified as a URI in configuration files. Please update hdfs configuration.

Formatting using clusterid: CID-2238ff0e-5f26-4ffd-9465-5348f772ade5

14/02/20 14:50:18 INFO namenode.HostFileManager: read includes:

HostSet(

)

14/02/20 14:50:18 INFO namenode.HostFileManager: read excludes:

HostSet(

)

14/02/20 14:50:18 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit=1000

14/02/20 14:50:18 INFO util.GSet: Computing capacity for map BlocksMap

14/02/20 14:50:18 INFO util.GSet: VM type = 64-bit

14/02/20 14:50:18 INFO util.GSet: 2.0% max memory = 888.9 MB

14/02/20 14:50:18 INFO util.GSet: capacity = 2^21 = 2097152 entries

14/02/20 14:50:18 INFO blockmanagement.BlockManager: dfs.block.access.token.enable=false

14/02/20 14:50:18 INFO blockmanagement.BlockManager: defaultReplication = 2

14/02/20 14:50:18 INFO blockmanagement.BlockManager: maxReplication = 512

14/02/20 14:50:18 INFO blockmanagement.BlockManager: minReplication = 1

14/02/20 14:50:18 INFO blockmanagement.BlockManager: maxReplicationStreams = 2

14/02/20 14:50:18 INFO blockmanagement.BlockManager: shouldCheckForEnoughRacks = false

14/02/20 14:50:18 INFO blockmanagement.BlockManager: replicationRecheckInterval = 3000

14/02/20 14:50:18 INFO blockmanagement.BlockManager: encryptDataTransfer = false

14/02/20 14:50:18 INFO namenode.FSNamesystem: fsOwner = root (auth:SIMPLE)

14/02/20 14:50:18 INFO namenode.FSNamesystem: supergroup = supergroup

14/02/20 14:50:18 INFO namenode.FSNamesystem: isPermissionEnabled = true

14/02/20 14:50:18 INFO namenode.FSNamesystem: HA Enabled: false

14/02/20 14:50:18 INFO namenode.FSNamesystem: Append Enabled: true

14/02/20 14:50:19 INFO util.GSet: Computing capacity for map INodeMap

14/02/20 14:50:19 INFO util.GSet: VM type = 64-bit

14/02/20 14:50:19 INFO util.GSet: 1.0% max memory = 888.9 MB

14/02/20 14:50:19 INFO util.GSet: capacity = 2^20 = 1048576 entries

14/02/20 14:50:19 INFO namenode.NameNode: Caching file names occuring more than 10 times

14/02/20 14:50:19 INFO namenode.FSNamesystem: dfs.namenode.safemode.threshold-pct = 0.9990000128746033

14/02/20 14:50:19 INFO namenode.FSNamesystem: dfs.namenode.safemode.min.datanodes = 0

14/02/20 14:50:19 INFO namenode.FSNamesystem: dfs.namenode.safemode.extension = 30000

14/02/20 14:50:19 INFO namenode.FSNamesystem: Retry cache on namenode is enabled

14/02/20 14:50:19 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis

14/02/20 14:50:19 INFO util.GSet: Computing capacity for map Namenode Retry Cache

14/02/20 14:50:19 INFO util.GSet: VM type = 64-bit

14/02/20 14:50:19 INFO util.GSet: 0.029999999329447746% max memory = 888.9 MB

14/02/20 14:50:19 INFO util.GSet: capacity = 2^15 = 32768 entries

14/02/20 14:50:19 INFO common.Storage: Storage directory /export/hadoop/name has been successfully formatted.

14/02/20 14:50:19 INFO namenode.FSImage: Saving image file /export/hadoop/name/current/fsimage.ckpt_0000000000000000000 using no compression

14/02/20 14:50:19 INFO namenode.FSImage: Image file /export/hadoop/name/current/fsimage.ckpt_0000000000000000000 of size 196 bytes saved in 0 seconds.

14/02/20 14:50:19 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

14/02/20 14:50:19 INFO util.ExitUtil: Exiting with status 0

14/02/20 14:50:20 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at master/172.17.253.217

************************************************************/

[root@master bin]#

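8. Start all Hadoop services. start-all.sh is deprecated in Hadoop 2, as its first line of output says; running start-dfs.sh and then start-yarn.sh is the recommended equivalent.

In the output below, the repeated "export JAVA_HOME=/opt/jdk1.6.0_24" lines, the attempt to connect to a host named "export", and the sed error appear to share one cause: that line is printed to stdout whenever the Hadoop scripts load their environment, and the start scripts then read the printed words as host names. The daemons still start, but nothing in hadoop-env.sh (or any other file the scripts source) should write to stdout.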

[root@master hadoop]# cd /export/hadoop/sbin/

[root@master sbin]# ./start-all.sh

This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh

export JAVA_HOME=/opt/jdk1.6.0_24

export JAVA_HOME=/opt/jdk1.6.0_24

14/02/20 14:54:18 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Starting namenodes on [export JAVA_HOME=/opt/jdk1.6.0_24

Master]

export JAVA_HOME=/opt/jdk1.6.0_24

export JAVA_HOME=/opt/jdk1.6.0_24

export JAVA_HOME=/opt/jdk1.6.0_24

sed: -e expression #1, char 16: unknown option to `s'

Master: export JAVA_HOME=/opt/jdk1.6.0_24

Master: export JAVA_HOME=/opt/jdk1.6.0_24

Master: starting namenode, logging to /export/hadoop/logs/hadoop-root-namenode-master.out

The authenticity of host 'export (61.50.248.117)' can't be established.

RSA key fingerprint is 63:2d:26:a2:d2:fd:53:d3:3c:5f:1c:25:af:ff:15:01.

Are you sure you want to continue connecting (yes/no)? Master: export JAVA_HOME=/opt/jdk1.6.0_24

export: Host key verification failed.

export JAVA_HOME=/opt/jdk1.6.0_24

export JAVA_HOME=/opt/jdk1.6.0_24

export JAVA_HOME=/opt/jdk1.6.0_24

172.17.253.216: export JAVA_HOME=/opt/jdk1.6.0_24

172.17.253.216: export JAVA_HOME=/opt/jdk1.6.0_24

172.17.253.216: starting datanode, logging to /data/export/hadoop/logs/hadoop-root-datanode-Slave1.out

172.17.253.67: export JAVA_HOME=/opt/jdk1.6.0_24

172.17.253.67: export JAVA_HOME=/opt/jdk1.6.0_24

172.17.253.67: starting datanode, logging to /export/hadoop/logs/hadoop-root-datanode-Slave2.out

172.17.253.216: export JAVA_HOME=/opt/jdk1.6.0_24

172.17.253.67: export JAVA_HOME=/opt/jdk1.6.0_24

Starting secondary namenodes [export JAVA_HOME=/opt/jdk1.6.0_24

0.0.0.0]

export JAVA_HOME=/opt/jdk1.6.0_24

export JAVA_HOME=/opt/jdk1.6.0_24

export JAVA_HOME=/opt/jdk1.6.0_24

sed: -e expression #1, char 16: unknown option to `s'

export: ssh_exchange_identification: Connection closed by remote host

0.0.0.0: export JAVA_HOME=/opt/jdk1.6.0_24

0.0.0.0: export JAVA_HOME=/opt/jdk1.6.0_24

0.0.0.0: starting secondarynamenode, logging to /export/hadoop/logs/hadoop-root-secondarynamenode-master.out

0.0.0.0: export JAVA_HOME=/opt/jdk1.6.0_24

14/02/20 14:54:42 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

starting yarn daemons

export JAVA_HOME=/opt/jdk1.6.0_24

export JAVA_HOME=/opt/jdk1.6.0_24

starting resourcemanager, logging to /export/hadoop/logs/yarn-root-resourcemanager-master.out

export JAVA_HOME=/opt/jdk1.6.0_24

export JAVA_HOME=/opt/jdk1.6.0_24

export JAVA_HOME=/opt/jdk1.6.0_24

export JAVA_HOME=/opt/jdk1.6.0_24

172.17.253.67: export JAVA_HOME=/opt/jdk1.6.0_24

172.17.253.67: starting nodemanager, logging to /export/hadoop/logs/yarn-root-nodemanager-Slave2.out

172.17.253.216: export JAVA_HOME=/opt/jdk1.6.0_24

172.17.253.216: starting nodemanager, logging to /data/export/hadoop/logs/yarn-root-nodemanager-Slave1.out

172.17.253.67: export JAVA_HOME=/opt/jdk1.6.0_24

172.17.253.216: export JAVA_HOME=/opt/jdk1.6.0_24

[root@master sbin]#

[root@master sbin]# echo 'export PATH=/opt/jdk1.6.0_24/bin:$PATH' >> /etc/profile

[root@master sbin]# source /etc/profile

[root@master sbin]#

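Then list the Java processes with jps (available once the JDK's bin directory is on PATH). On Master the Hadoop processes are NameNode, SecondaryNameNode and ResourceManager; the DataNode and NodeManager processes run on the slaves, and the other entries are unrelated programs on the same machine: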

[root@master sbin]# jps

28860 NameNode

5693 ElasticSearch

29250 ResourceManager

12412 jar

12396 jar

30535 Jps

23283 QuorumPeerMain

29089 SecondaryNameNode

[root@master sbin]#

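Check with netstat -ntpl that the corresponding ports are listening:

0.0.0.0:50090 (SecondaryNameNode web UI)

172.17.253.217:9000 (NameNode RPC, as set in fs.default.name)

:::8088 (ResourceManager web UI)

:::8033 (ResourceManager admin interface)

:::8032 (ResourceManager client interface)

:::8031 (ResourceManager resource tracker, used by the NodeManagers)

:::8030 (ResourceManager scheduler, used by application masters)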
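The port check can be automated by parsing netstat's output. A sketch; missing_ports is a hypothetical helper, and it assumes the net-tools netstat layout in which the fourth column is the local address (e.g. 0.0.0.0:50090 or :::8088).

```bash
# Hypothetical helper: read `netstat -ntl` output on stdin and report which of
# the given ports are not listening. Returns non-zero if any are missing.
missing_ports() {
  local listening port missing=0
  # The port is whatever follows the last ':' of the local address (column 4).
  listening=$(awk '$1 ~ /^tcp/ { n = split($4, a, ":"); print a[n] }' | sort -u)
  for port in "$@"; do
    if ! printf '%s\n' "$listening" | grep -qx "$port"; then
      echo "port $port is not listening"
      missing=1
    fi
  done
  return "$missing"
}
```

For example: netstat -ntl | missing_ports 9000 50070 50090 8030 8031 8032 8033 8088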

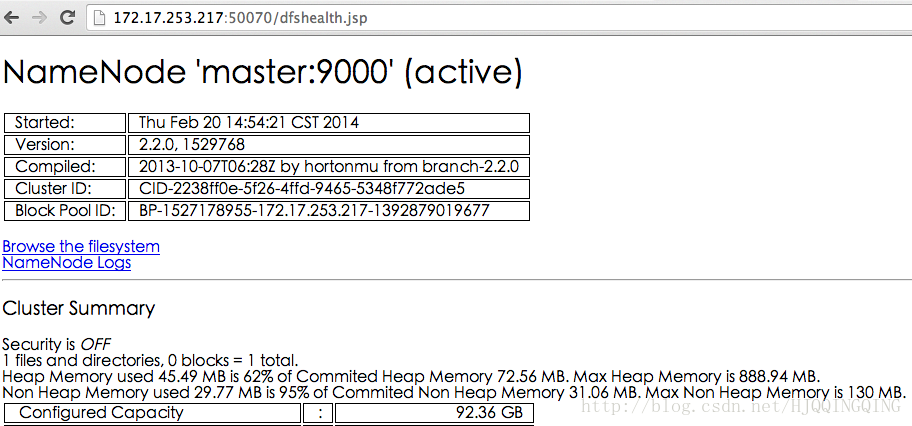

9. Open http://172.17.253.217:50070/dfshealth.jsp (the NameNode web UI); it looks like this:

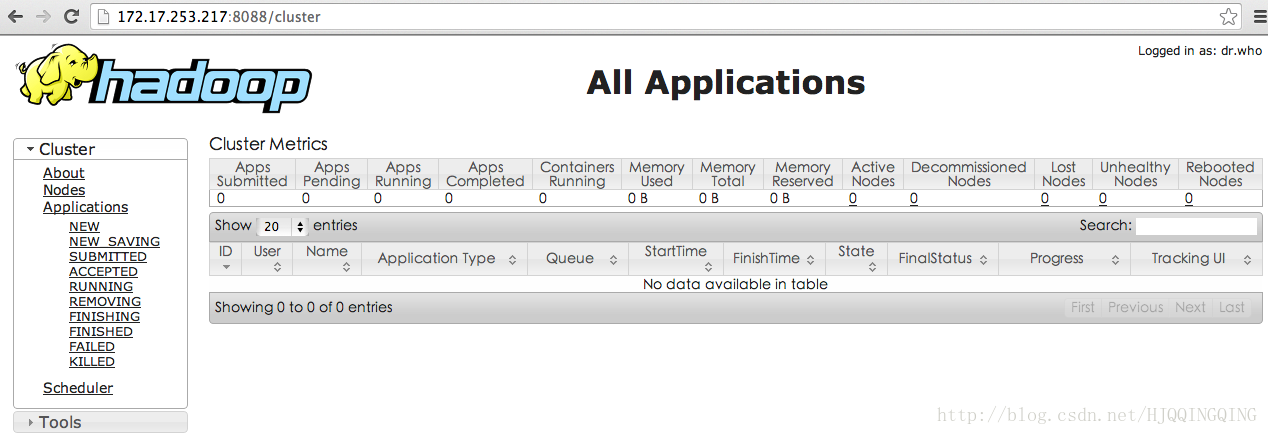

10. Open http://172.17.253.217:8088/cluster (the ResourceManager web UI)

11. A quick Hadoop test: list the (empty) /yibao directory, then the HDFS root

[root@master bin]# ./hadoop fs -ls /yibao

export JAVA_HOME=/opt/jdk1.6.0_24

14/02/20 15:19:34 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

[root@master bin]# ./hadoop fs -ls /

export JAVA_HOME=/opt/jdk1.6.0_24

14/02/20 15:20:41 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Found 1 items

drwxr-xr-x - root supergroup 0 2014-02-20 15:18 /yibao

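********** Installing HBase **********

A distributed HBase deployment needs ZooKeeper. HBase ships with its own ZooKeeper, which can be used directly, or a standalone ZooKeeper can be used instead. In conf/hbase-env.sh, export HBASE_MANAGES_ZK=true makes HBase run its bundled ZooKeeper, while false means a standalone ZooKeeper is used. After HBase starts, jps shows the bundled ZooKeeper as HQuorumPeer and a standalone ZooKeeper as QuorumPeerMain.

This guide uses HBase's bundled ZooKeeper.

1. On Master, edit the configuration file /export/hbase-0.96.1.1/conf/hbase-site.xml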

<configuration>

<property>

<name>hbase.rootdir</name>

<value>hdfs://Master:9000/hbase</value>

</property>

<property>

<name>hbase.master</name>

<value>172.17.253.217:60000</value>

</property>

<property>

<name>hbase.zookeeper.quorum</name>

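<value>172.17.253.217,172.17.253.216,172.17.253.67</value>

</property>

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

</configuration>

hbase.rootdir is the HDFS directory where HBase stores its data; its host and port must match fs.default.name in core-site.xml (Master resolves to 172.17.253.217)

hbase.master is the address and port of the HBase Master

hbase.zookeeper.quorum lists the nodes that run ZooKeeper, here the three cluster nodes, each listed once

hbase.cluster.distributed must be true for a fully distributed cluster; with the default (false), HBase runs in standalone mode in a single JVM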

2. On Master, edit /export/hbase-0.96.1.1/conf/hbase-env.sh to set JAVA_HOME and use HBase's bundled ZooKeeper:

export JAVA_HOME=/opt/jdk1.6.0_24

export HBASE_MANAGES_ZK=true

3. On Master, edit /export/hbase-0.96.1.1/conf/regionservers:

172.17.253.217

172.17.253.216

172.17.253.67

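List every host that should run an HRegionServer, one host per line (like Hadoop's slaves file). The servers listed here are started and stopped together with the cluster.

4. On Master, replace the hadoop-core jar in HBase's lib directory with the hadoop*.jar files of the installed Hadoop: check which Hadoop jar HBase ships with, remove it, and copy in the jars from Hadoop: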
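Since regionservers lists the same three nodes that form the ZooKeeper quorum here, the hbase.zookeeper.quorum value can be derived from it, which avoids listing a node twice. A sketch; zk_quorum is a hypothetical helper. ZooKeeper needs a majority of the quorum to be up, so an odd number of nodes (three here) is the usual choice.

```bash
# Hypothetical helper: print a comma-separated quorum string from a host list,
# ignoring comments, blank lines and duplicates while keeping the original order.
zk_quorum() {
  sed 's/#.*$//;s/[[:space:]]//g;/^$/d' "$1" | awk '!seen[$0]++' | paste -sd, -
}
```

For example: zk_quorum /export/hbase-0.96.1.1/conf/regionservers prints 172.17.253.217,172.17.253.216,172.17.253.67 for the file above.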

[root@master hbase-0.96.1.1]# ls lib|grep hadoop

hadoop-core-1.0.4.jar

[root@master export]# find /export/hadoop/share/hadoop/ -name "hadoop*jar" | xargs -i cp {} /export/hbase-0.96.1.1/lib/
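The find command above only copies the new jars; the old hadoop-core jar still has to be removed. Both steps can be done together by first moving HBase's own hadoop-*.jar files aside. A sketch; replace_hadoop_jars and its backup directory (named lib-hadoop-jars.bak, created next to lib) are hypothetical.

```bash
# Hypothetical helper: move HBase's bundled hadoop-*.jar files into a backup
# directory next to lib/, then copy every hadoop-*.jar from the Hadoop install in.
replace_hadoop_jars() {
  local hbase_lib="${1%/}" hadoop_share="$2"
  local backup="${hbase_lib}-hadoop-jars.bak"
  mkdir -p "$backup"
  find "$hbase_lib" -maxdepth 1 -name 'hadoop-*.jar' -exec mv {} "$backup"/ \;
  find "$hadoop_share" -name 'hadoop-*.jar' -exec cp {} "$hbase_lib"/ \;
}
```

For example: replace_hadoop_jars /export/hbase-0.96.1.1/lib /export/hadoop/share/hadoop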

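5. On Master, copy HBase to the other machines with scp (copying into /export/ avoids a nested /export/hbase/hbase if the target directory already exists):

scp -r /export/hbase 172.17.253.67:/export/

scp -r /export/hbase 172.17.253.216:/export/

6. On Master, start HBase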

[root@master bin]# cd /export/hbase/bin/

[root@Slave2 bin]# sh start-hbase.sh

starting master, logging to /export/hbase/bin/../logs/hbase-root-master-Slave2.out

[root@Slave2 bin]#

[root@Slave2 bin]#

[root@Slave2 bin]# ps -ef|grep hbase

root 21815 1 0 17:55 pts/2 00:00:00 bash /export/hbase/bin/hbase-daemon.sh --config /export/hbase/bin/../conf internal_start master

root 21828 21815 54 17:55 pts/2 00:00:09 /opt/jdk1.6.0_24//bin/java -Dproc_master -XX:OnOutOfMemoryError=kill -9 %p -Xmx1000m -XX:+UseConcMarkSweepGC -Dhbase.log.dir=/export/hbase/bin/../logs -Dhbase.log.file=hbase-root-master-Slave2.log -Dhbase.home.dir=/export/hbase/bin/.. -Dhbase.id.str=root -Dhbase.root.logger=INFO,RFA -Dhbase.security.logger=INFO,RFAS org.apache.hadoop.hbase.master.HMaster start

root 22088 21672 0 17:56 pts/2 00:00:00 grep hbase

[root@Slave2 bin]#

7. Check that HBase is running

[root@Slave2 bin]# ls

get-active-master.rb hbase-config.cmd local-master-backup.sh replication test

graceful_stop.sh hbase-config.sh local-regionservers.sh rolling-restart.sh zookeepers.sh

hbase hbase-daemon.sh master-backup.sh start-hbase.cmd

hbase-cleanup.sh hbase-daemons.sh region_mover.rb start-hbase.sh

hbase.cmd hbase-jruby regionservers.sh stop-hbase.cmd

hbase-common.sh hirb.rb region_status.rb stop-hbase.sh

[root@Slave2 bin]# ./hbase shell

2014-02-21 18:01:49,483 INFO [main] Configuration.deprecation: hadoop.native.lib is deprecated. Instead, use io.native.lib.available

HBase Shell; enter 'help<RETURN>' for list of supported commands.

Type "exit<RETURN>" to leave the HBase Shell

Version 0.96.1.1-hadoop2, rUnknown, Tue Dec 17 12:22:12 PST 2013

hbase(main):001:0>

hbase(main):002:0* list

TABLE

2014-02-21 18:01:57,453 WARN [main] util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

0 row(s) in 3.2270 seconds

=> []

hbase(main):003:0> create 'yibao','usr'

0 row(s) in 0.5930 seconds

=> Hbase::Table - yibao

hbase(main):004:0> list

TABLE

yibao

1 row(s) in 0.0350 seconds

=> ["yibao"]

hbase(main):005:0>
