(ICLR 2017) Learning to Remember Rare Events

Paper: https://openreview.net/pdf?id=SJTQLdqlg

Code: https://github.com/tensorflow/models/tree/master/learning_to_remember_rare_events

This paper proposes a large-scale life-long memory module that enables one-shot learning in a variety of neural networks.

Memory-augmented deep neural networks are still limited when it comes to life-long and one-shot learning, especially in remembering rare events.

We present a large-scale life-long memory module for use in deep learning.

The module exploits fast nearest-neighbor algorithms for efficiency and thus scales to large memory sizes.

It operates in a life-long manner, i.e., without the need to reset it during training.

Our memory module can be easily added to any part of a supervised neural network.

The enhanced network gains the ability to remember and do life-long one-shot learning.

Our module remembers training examples shown many thousands of steps in the past and it can successfully generalize from them.

Introduction

A general problem with current deep learning models: to handle rare or new events, it is necessary to extend the training data and re-train the models.

Motivation: Humans, on the other hand, learn in a life-long fashion, often from single examples.

We present a life-long memory module that enables one-shot learning in a variety of neural networks.

Our memory module consists of key-value pairs. Keys are activations of a chosen layer of a neural network, and values are the ground-truth targets for the given example.

As the network is trained, its memory grows and becomes more useful. Eventually it can give predictions that leverage knowledge from past data with similar activations.

advantages of having a long-term memory:

One-shot learning

Even real-world tasks where we have large training sets, such as translation, can benefit from long-term memory.

Since the memory can be traced back to training examples, it might help explain the decisions that the model is making and thus improve understandability of the model.

We evaluate in a few ways to show that our memory module indeed works:

evaluate on the well-known one-shot learning task Omniglot

devise a synthetic task that requires life-long one-shot learning

train an English-German translation model that has our life-long one-shot learning module.

Memory Module

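Our memory consists of a matrix K of memory keys, a vector V of memory values, and a vector A that tracks the age of the items stored in memory, i.e., M = (K, V, A).

A memory query is a vector q of size key-size which we assume to be normalized, i.e., ∥q∥=1 .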

Given a query q, we define the nearest neighbor of q in M as any key that maximizes the dot product with q:

NN(q, M) = argmax_i q⋅K[i]

Since the keys are normalized, the above notion corresponds to the nearest neighbor with respect to cosine similarity.

When given a query q, the memory M = (K, V, A) will compute the k nearest neighbors n_1, …, n_k (sorted by decreasing cosine similarity):

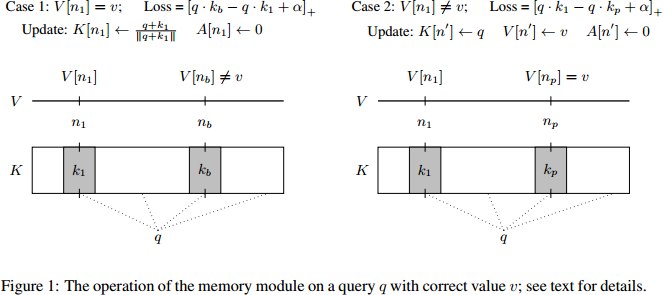
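The lookup described above can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the helper names `normalize` and `query_memory`, the toy keys, and the integer values are made up for the example and are not taken from the paper's released code.

```python
import numpy as np

def normalize(x):
    """Scale each vector (last axis) to unit L2 norm."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)

def query_memory(q, K, V, k):
    """Return the k nearest key indices (n_1..n_k) and the value V[n_1]."""
    sims = K @ q                        # dot products; cosine similarity for unit vectors
    neighbors = np.argsort(-sims)[:k]   # sorted by decreasing similarity
    return neighbors, V[neighbors[0]]

# Toy memory (hypothetical): 4 keys of size 3, values are integer class IDs.
K = normalize(np.array([[1.0, 0.0, 0.0],
                        [0.0, 1.0, 0.0],
                        [0.0, 0.0, 1.0],
                        [1.0, 1.0, 0.0]]))
V = np.array([7, 8, 9, 7])
q = normalize(np.array([0.9, 0.1, 0.0]))

neighbors, prediction = query_memory(q, K, V, k=2)
print(neighbors, prediction)  # [0 3] 7
```

The full `argsort` is fine for a toy memory; for large memories a partial sort or an approximate index would be the natural replacement.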

Memory Loss

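Assume now that in addition to a query q we are also given the correct desired (supervised) value v.

In the case of classification, this v would be the class label.

In a sequence-to-sequence task, v would be the desired output token at the current time step.

After computing the k nearest neighbors n_1, …, n_k, let p be the smallest index such that V[n_p] = v, and b the smallest index such that V[n_b] ≠ v. We call n_p the positive neighbor and n_b the negative neighbor.

We define the memory loss as:

loss(q, v, M) = [q⋅K[n_b] − q⋅K[n_p] + α]+

where [x]+ denotes max(x, 0) and α is the margin: the loss is zero once the query is more similar to the positive key than to the negative key by at least α.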
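A minimal sketch of this margin loss for a single query, assuming unit-norm keys and integer values. The function name `memory_loss`, the toy memory, and the margin `alpha=0.1` are illustrative choices. Returning `None` when the top-k neighbors contain no positive or no negative is a simplification made here, not something these notes specify.

```python
import numpy as np

def memory_loss(q, v, K, V, k, alpha):
    """Hinge loss between the first negative and first positive neighbor."""
    sims = K @ q
    neighbors = np.argsort(-sims)[:k]                  # n_1..n_k, most similar first
    positives = [n for n in neighbors if V[n] == v]    # neighbors with the correct value
    negatives = [n for n in neighbors if V[n] != v]    # neighbors with a wrong value
    if not positives or not negatives:
        return None                                    # assumption: loss left undefined
    n_p, n_b = positives[0], negatives[0]
    return max(0.0, float(sims[n_b] - sims[n_p] + alpha))

# Toy memory (hypothetical): unit-norm keys and integer values.
K = np.array([[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]])
V = np.array([7, 8, 9])
q = np.array([0.8, 0.6])                               # already unit norm

loss_correct = memory_loss(q, 7, K, V, k=3, alpha=0.1)  # positive key is already closest
loss_wrong = memory_loss(q, 8, K, V, k=3, alpha=0.1)    # a wrong-valued key is closer
```

In the first call the positive key beats the negative one by more than the margin, so the loss is zero. In the second call the closest key has the wrong value and the loss is positive.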

Memory Update

If the memory already returns the correct value, i.e., if V[n_1] = v, then we only update the key for n_1 by taking the average of the current key and q and normalizing it:

K[n_1] ← (q + K[n_1]) / ∥q + K[n_1]∥

When doing this, we also reset the age: A[n_1] ← 0.

When V[n_1] ≠ v, we find memory items with maximum age, and write to one of those (randomly chosen).

More formally, we pick n′ = argmax_i (A[i] + r_i), where |r_i| ≪ |M| is a random number that introduces some randomness in the choice so as to avoid race conditions in asynchronous multi-replica training. We then set:

K[n′]←q,V[n′]←v,A[n′]←0

With every memory update we also increment the age of all non-updated indices by 1 .
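The two update cases and the age bookkeeping can be sketched as follows. This is a single-query, in-place illustration. The name `update_memory`, the toy arrays, and the noise range `[0, 0.5)` are assumptions for the example; the noise is kept below 1 here so that it only breaks ties between equally old slots.

```python
import numpy as np

def update_memory(q, v, K, V, A, rng):
    """Update (K, V, A) in place for one (query, value) pair; return the written index."""
    n1 = int(np.argmax(K @ q))                 # nearest neighbor of q
    if V[n1] == v:
        merged = q + K[n1]                     # average direction of old key and query
        K[n1] = merged / np.linalg.norm(merged)
        written = n1
    else:
        r = rng.uniform(0.0, 0.5, size=A.shape)  # small noise (assumed range) to break ties
        written = int(np.argmax(A + r))          # oldest slot
        K[written] = q
        V[written] = v
    A += 1                                     # every other slot gets one step older
    A[written] = 0                             # the updated slot is fresh again
    return written

# Toy memory (hypothetical values).
rng = np.random.default_rng(0)
K = np.array([[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]])
V = np.array([1, 2, 3])
A = np.array([5, 2, 9])
q = np.array([0.8, 0.6])

first = update_memory(q, 1, K, V, A, rng)    # correct value: key 0 moves toward q
second = update_memory(q, 5, K, V, A, rng)   # wrong value: oldest slot is overwritten
```

After the two calls, slot 0 holds a re-normalized key between its old direction and `q`, and slot 2 (the oldest) holds the new pair `(q, 5)` with age 0.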

Efficient nearest neighbor computation

The most expensive operation in our memory module is the computation of the k nearest neighbors.

In the exact mode, to calculate the nearest neighbors in K to a mini-batch of queries Q = (q_1, …, q_b), we perform a single matrix multiplication: Q × Kᵀ.
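For the exact mode, a whole mini-batch of queries can be compared against every key with one matrix product, followed by a top-k selection per row. The sketch below assumes unit-norm rows in both matrices. The function name, memory size, key size, and noise level are illustrative, and `np.argpartition` is used only to avoid sorting the full similarity row.

```python
import numpy as np

def batch_nearest_neighbors(Q, K, k):
    """Return, for each query row, the indices of its k most similar keys (best first)."""
    sims = Q @ K.T                                          # (batch, memory_size) dot products
    top = np.argpartition(-sims, k - 1, axis=1)[:, :k]      # unordered top-k per row
    top_sims = np.take_along_axis(sims, top, axis=1)
    order = np.argsort(-top_sims, axis=1)                   # order the k candidates
    return np.take_along_axis(top, order, axis=1)

# Random toy memory (hypothetical sizes): 1000 keys of size 16.
rng = np.random.default_rng(0)
K = rng.normal(size=(1000, 16))
K /= np.linalg.norm(K, axis=1, keepdims=True)

# Queries are slightly perturbed copies of keys 3 and 42.
Q = K[[3, 42]] + 0.01 * rng.normal(size=(2, 16))
Q /= np.linalg.norm(Q, axis=1, keepdims=True)

nn = batch_nearest_neighbors(Q, K, k=5)
```

Each row of `nn` lists key indices by decreasing similarity, so `nn[:, 0]` recovers the keys the queries were derived from.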

Using The Memory Module

The memory module presented above can be added to any classification network.

In the simplest case, we use the final layer of a network as query and the output of the module is directly used for classification.

It is also possible to embed the module's output again into a dense representation and mix it with other predictions made by the network. To study this setting, we add the memory module to sequence-to-sequence recurrent neural networks.

Convolutional Network with Memory

To test our memory module in a simple setting, we first add it to a basic convolutional network for image classification.

The output of the final layer is used as query to our memory module and the nearest neighbor returned by the memory is used as the final network prediction.

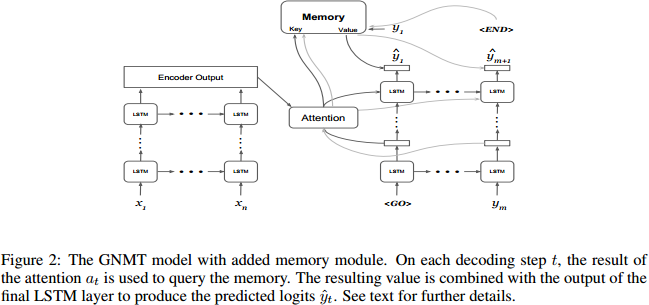

Sequence-to-sequence with Memory

We add the memory module to the Google Neural Machine Translation (GNMT) model (Wu et al., 2016).

This model consists of an encoder RNN, which creates a representation of the source language sentence, and a decoder RNN that outputs the target language sentence.

We left the encoder RNN unmodified.

In the decoder RNN, we use the vector retrieved by the attention mechanism as query to the memory module.

In the GNMT model, the attention vector is used in all LSTM layers beyond the second one, so the computation of the other layers and the memory can happen in parallel.

Before the final softmax layer, we combine the embedded memory output with the output of the final LSTM layer using an additional linear layer, as depicted in Figure 2.

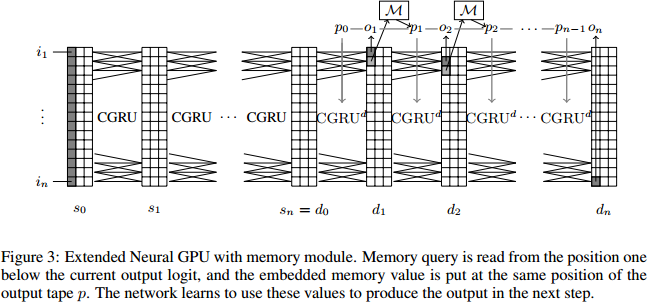
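The mixing step before the softmax can be sketched roughly as below. These notes give no layer sizes or parameter names for the GNMT setup, so every shape, weight matrix, and the function name `combine` are hypothetical. The sketch only shows the general pattern: embed the retrieved value, concatenate it with the final LSTM output, and apply one linear layer.

```python
import numpy as np

rng = np.random.default_rng(0)
vocab_size, hidden, embed_dim = 50, 8, 4           # hypothetical sizes

# Hypothetical parameters; in a real model these would be learned.
value_embedding = rng.normal(size=(vocab_size, embed_dim))
W_mix = rng.normal(size=(hidden + embed_dim, hidden)) * 0.1
b_mix = np.zeros(hidden)
W_softmax = rng.normal(size=(hidden, vocab_size)) * 0.1

def combine(lstm_out, memory_value):
    """Mix the final LSTM output with the embedded memory value, then apply softmax."""
    mem_vec = value_embedding[memory_value]                   # dense embedding of the token ID
    mixed = np.concatenate([lstm_out, mem_vec]) @ W_mix + b_mix
    logits = mixed @ W_softmax
    exp = np.exp(logits - logits.max())                       # numerically stable softmax
    return exp / exp.sum()

probs = combine(rng.normal(size=hidden), memory_value=17)
```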

Extended Neural GPU with Memory

We also add the memory module to the Extended Neural GPU, a convolutional-recurrent model introduced by Kaiser & Bengio (2016).

The Extended Neural GPU is a sequence-to-sequence model too, but its decoder is convolutional and the size of its state changes depending on the size of the input.

Again, we leave the encoder part of the model intact, and extend the decoder part by a memory query.

This time, we use the position one step ahead to query memory, and we write the embedded result to the output tape, as shown in Figure 3.

Related Work

Memory in Neural Networks

One-shot Learning

Experiments

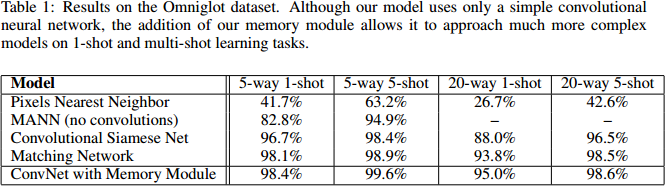

Omniglot

The Omniglot dataset (Lake et al., 2011) consists of 1623 characters from 50 different alphabets, each hand-drawn by 20 different people. The large number of classes (characters) with relatively few examples per class (20) makes this an ideal data set for testing one-shot classification.

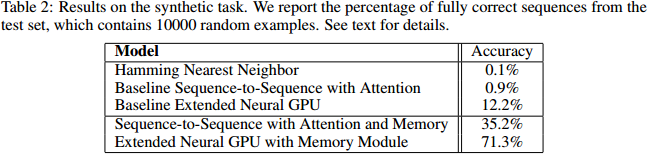

Synthetic task

To better understand the memory module operation and to test what it can remember, we devise a synthetic task and train the Extended Neural GPU with and without memory.

To create training and test data for our synthetic task, we use symbols from the set S={2,⋯,16000} and first fix a random function f:S→S .

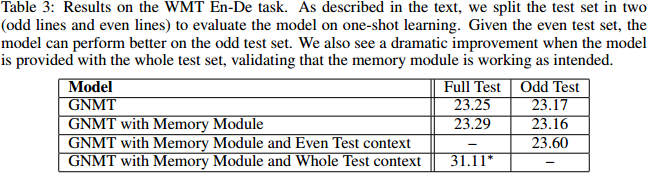
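Fixing the random function f from the synthetic task can be sketched as a lookup table. Drawing each f(s) uniformly and independently from S is an assumption made here, as are the seed and the example sequence. How training and test examples are then sampled from f is not covered in these notes, so the sketch stops at constructing and applying f.

```python
import numpy as np

rng = np.random.default_rng(0)                      # arbitrary seed
symbols = np.arange(2, 16001)                       # S = {2, ..., 16000}
f_table = rng.choice(symbols, size=symbols.size)    # f_table[s - 2] holds f(s)

def f(s):
    """Apply the fixed random function element-wise to symbols from S."""
    return f_table[np.asarray(s) - 2]

sequence = np.array([2, 16000, 137])                # hypothetical input symbols
targets = f(sequence)
```

Because the table is drawn once and then reused, the same symbol always maps to the same output, which is what makes remembering a rarely seen symbol meaningful.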

Translation

To evaluate the memory module in a large-scale setting we use the GNMT model (Wu et al., 2016) extended with our memory module on the WMT14 English-to-German translation task.

Discussion

We presented a long-term memory module that can be used for life-long learning.
