Linux Kernel Rhapsody

@CopyLeft by ICANTH, I Can do ANy THing that I CAN THink! ~

Author: WenHui, WuHan University, 2012-5-12

Version: v1.0

Last Modified Time: 2012-5-20

SLOB Overview

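The SLOB (Simple List Of Blocks) allocator is a traditional K&R/UNIX-style heap allocator that sits at the same layer as the SLAB allocator and provides the same interface. Its code is much smaller than that of Linux's original SLAB allocator, and it is more space-efficient. However, it is more prone to memory fragmentation than SLAB, so it is meant only for small systems, especially embedded ones.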

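The SLOB allocator has only three partial free lists: free_slob_small, free_slob_medium, and free_slob_large. Each list consists of pages that have already been handed to SLOB and still have free space. free_slob_small serves blocks smaller than 256 bytes (SLOB_BREAK1), free_slob_medium serves blocks smaller than 1024 bytes (SLOB_BREAK2), and free_slob_large serves all remaining blocks smaller than a page.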
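The choice of list is a simple threshold test on the request size. The sketch below reproduces that test in userspace; the enum `slob_list_id` and the function `pick_slob_list` are hypothetical stand-ins for the three `LIST_HEAD`s, and only the two thresholds come from mm/slob.c.

```c
#include <assert.h>
#include <stddef.h>

/* Thresholds as defined in mm/slob.c. */
#define SLOB_BREAK1 256
#define SLOB_BREAK2 1024

/* Hypothetical enum standing in for the three list heads. */
enum slob_list_id { FREE_SLOB_SMALL, FREE_SLOB_MEDIUM, FREE_SLOB_LARGE };

/* Mirrors the if/else chain at the top of slob_alloc(). The size passed in
 * already includes any kmalloc size header. */
static enum slob_list_id pick_slob_list(size_t size)
{
	if (size < SLOB_BREAK1)
		return FREE_SLOB_SMALL;
	else if (size < SLOB_BREAK2)
		return FREE_SLOB_MEDIUM;
	return FREE_SLOB_LARGE;
}
```

Because the thresholds use `<`, a request of exactly 256 bytes already goes to the medium list, and one of exactly 1024 bytes goes to the large list.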

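When kmalloc is asked for an object of roughly a page or more (precisely: when size >= PAGE_SIZE - align, so the size header would no longer fit), SLOB bypasses its free lists. It calls the zone page allocator (alloc_pages) directly, requesting physically contiguous compound pages (__GFP_COMP), and returns them. The head page's flags then mark it as a compound page, and its private field stores the requested size.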
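The order passed to the page allocator for such a request is the smallest order whose block covers the size; in the kernel, `get_order()` computes it. A minimal userspace sketch, assuming 4 KB pages (`sketch_get_order` is a hypothetical name, not the kernel's implementation):

```c
#include <assert.h>
#include <stddef.h>

/* Assumption: 4 KB pages, as in the examples throughout this article. */
#define SKETCH_PAGE_SHIFT 12
#define SKETCH_PAGE_SIZE  ((size_t)1 << SKETCH_PAGE_SHIFT)

/* Smallest order such that (PAGE_SIZE << order) >= size, which is what
 * get_order() yields for the large-object path. */
static int sketch_get_order(size_t size)
{
	int order = 0;

	assert(size > 0);
	while ((SKETCH_PAGE_SIZE << order) < size)
		order++;
	return order;
}
```

For example, with 4 KB pages a 5000-byte kmalloc gets order 1 (8 KB). ksize() on that object still reports 5000, because the exact size was saved in the head page's private field.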

SLOB reuses the SLAB-related fields of struct page. To avoid needless confusion, it uses a C union to overlay struct page with its own type, struct slob_page. The key fields of slob_page are:

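| Field | Meaning |
|---|---|
| `slobidx_t units` | Number of free units left in the slob page. A unit (SLOB_UNIT) is the size of one slob_t, i.e. of one slobidx_t. That size depends on PAGE_SIZE: 16 bits when PAGE_SIZE = 4 KB. SLOB divides each page into units of sizeof(slobidx_t) bytes. |
| `slob_t *free` | Head of the page's free-block list. SLOB chains the free blocks within a slob page in ascending address order, and free points to the first one. |
| `struct list_head list` | Links the slob page into its partial free list (free_slob_small, free_slob_medium, or free_slob_large). |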
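The unit arithmetic behind the `units` field can be checked in userspace. The sketch below copies the `slobidx_t` selection rule and the `SLOB_UNIT`/`SLOB_UNITS` macros from mm/slob.c. `SKETCH_PAGE_SIZE` (4 KB) is an assumption, and `int16_t`/`int32_t` replace the kernel's `s16`/`s32`.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define SKETCH_PAGE_SIZE 4096	/* assumption: 4 KB pages */

/* Same selection rule as mm/slob.c: 16-bit offsets suffice while a page
 * holds at most 32767 two-byte units. */
#if SKETCH_PAGE_SIZE <= (32767 * 2)
typedef int16_t slobidx_t;
#else
typedef int32_t slobidx_t;
#endif

typedef struct { slobidx_t units; } slob_t;

/* Bytes per unit, and bytes rounded up to whole units. */
#define SLOB_UNIT sizeof(slob_t)
#define SLOB_UNITS(size) (((size) + SLOB_UNIT - 1) / SLOB_UNIT)
```

With 4 KB pages a unit is 2 bytes, so a page holds 2048 units and a 100-byte request occupies 50 units.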

The SLOB data structures are shown in the figure below:

Figure: SLOB allocator data structures

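The physical page frame behind a slob page is divided into fixed-size UNITs. A BLOCK is SLOB's basic allocation object and is either free or allocated. A block's size is always a whole number of UNITs, and every block starts on a UNIT boundary. A free block is described by a slob_t header stored at its start; each slob_t is exactly one UNIT.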

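A free block of exactly 1 UNIT has room for only one slob_t. That slob_t stores the negated offset of the next free block, measured in UNITs from the start of the page (-offset); the negative sign is what marks a 1-unit block. A free block larger than 1 UNIT has two slob_t headers: slob_t[0] holds BLOCK_SIZE (in UNITs) and slob_t[1] holds the next block's +offset. An offset that points past the end of the page (to the start of the next page) means there is no next block, i.e. the next pointer is NULL.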
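The encoding can be exercised outside the kernel on a page-aligned buffer. The sketch below is a userspace re-implementation, not the kernel code. It assumes 4 KB pages and uses C11 `aligned_alloc` to obtain an aligned "page". The `SK_*` macros and `sk_base` are hypothetical helpers, while `set_slob`, `slob_units`, `slob_next`, and `slob_last` follow the logic of the kernel functions of the same names.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

#define SK_PAGE_SIZE 4096u			/* assumption: 4 KB pages */
#define SK_PAGE_MASK (~(uintptr_t)(SK_PAGE_SIZE - 1))

typedef int16_t slobidx_t;
typedef struct { slobidx_t units; } slob_t;

#define SK_UNITS_PER_PAGE ((slobidx_t)(SK_PAGE_SIZE / sizeof(slob_t)))

/* Start of the page containing s, found by masking the address. */
static slob_t *sk_base(const slob_t *s)
{
	return (slob_t *)((uintptr_t)s & SK_PAGE_MASK);
}

/* Encode size and next link into free block s, as set_slob() does. */
static void set_slob(slob_t *s, slobidx_t size, slob_t *next)
{
	slobidx_t offset = (slobidx_t)(next - sk_base(s));

	if (size > 1) {
		s[0].units = size;	/* first unit: block size */
		s[1].units = offset;	/* second unit: +offset of next */
	} else {
		s[0].units = -offset;	/* 1-unit block: only -offset fits */
	}
}

static slobidx_t slob_units(const slob_t *s)
{
	return s->units > 0 ? s->units : 1;
}

static slob_t *slob_next(const slob_t *s)
{
	slobidx_t next = s[0].units < 0 ? -s[0].units : s[1].units;

	return sk_base(s) + next;
}

/* Last block: its next link is page-aligned (the start of the next page). */
static int slob_last(const slob_t *s)
{
	return !((uintptr_t)slob_next(s) & ~SK_PAGE_MASK);
}
```

A fresh page is a single 2048-unit block whose next link is `page + 2048`, which `slob_last` recognises as the end of the list.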

Allocated blocks are laid out differently depending on whether they come from kmalloc or from a kmem_cache. An allocated kmalloc block is laid out as follows:

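A kmalloc block begins with an align-byte header that stores the object's size, and the caller receives the address of the object just past this header. A block from a kmem_cache created with SLAB_DESTROY_BY_RCU instead carries a struct slob_rcu footer at its end, used to free the block through RCU. The footer's size field records the size of the whole block (object plus footer).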
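The kmalloc header trick can be sketched in userspace. In this sketch `malloc`/`free` stand in for `slob_alloc`/`slob_free`, the 8-byte `SK_ALIGN` is an assumed value for `max(ARCH_KMALLOC_MINALIGN, ARCH_SLAB_MINALIGN)`, and the `sk_*` names are hypothetical.

```c
#include <assert.h>
#include <stdlib.h>

/* Assumption: an 8-byte header, standing in for
 * max(ARCH_KMALLOC_MINALIGN, ARCH_SLAB_MINALIGN). */
#define SK_ALIGN 8

/* Allocate size + SK_ALIGN bytes, store the size in the first unsigned int,
 * and hand out the address after the header, as __kmalloc_node() does. */
static void *sk_kmalloc(size_t size)
{
	unsigned int *m = malloc(size + SK_ALIGN);

	if (!m)
		return NULL;
	*m = (unsigned int)size;
	return (char *)m + SK_ALIGN;
}

/* Step back from the object to the header, as kfree() and ksize() do. */
static unsigned int sk_kmalloc_size(const void *obj)
{
	return *(const unsigned int *)((const char *)obj - SK_ALIGN);
}

static void sk_kfree(void *obj)
{
	if (obj)
		free((char *)obj - SK_ALIGN);
}
```

In the kernel, the free path hands `slob_free()` the header address and `*m + align` as the block size, so the header is released together with the object.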

SLOB Functions

The main SLOB functions are:

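| SLOB core function | Purpose |
|---|---|
| set_slob | Write the slob_t header of a free block: its size (in units, not bytes) and the offset of the next free block relative to the start of its page. |
| slob_page | Convert a virtual address to the struct page of its page frame, cast to struct slob_page. |
| slob_last | Decide whether a free block is the last one on its page's free list by checking whether its next offset points beyond the page. |
| slob_page_alloc | Walk a given page's free list and allocate a suitably sized free block from it. |
| slob_new_pages | Allocate 2^order contiguous pages from the buddy allocator, using alloc_pages or, when a node is specified, alloc_pages_exact_node. |
| slob_alloc | Allocator entry point: allocate a block from SLOB. |
| slob_free | Allocator entry point: return a block to SLOB. |

| kmalloc interface | Purpose |
|---|---|
| __kmalloc_node | Wrapper around slob_alloc. If the request plus the align header does not fit in a page, allocate 2^order contiguous pages directly from the buddy allocator; otherwise allocate a block with slob_alloc and use the header to store the object size. |
| kfree | Free a block; a wrapper around slob_free (page-sized allocations are released with put_page). |
| ksize | Return the space usable by a block: for page allocations, the requested size stored in page->private; for slob blocks, the size rounded up to whole units. |

| kmem_cache interface | Purpose |
|---|---|
| kmem_cache_create | Allocate a struct kmem_cache from SLOB and initialize it. |
| kmem_cache_destroy | Return a struct kmem_cache to SLOB. |
| kmem_cache_alloc_node | Allocate an object as described by the kmem_cache: if its size is below a page, take a block from slob_alloc; otherwise allocate directly from the buddy allocator. |
| __kmem_cache_free | Free a block allocated through a kmem_cache. |
| kmem_rcu_free | RCU callback that frees a block allocated through a kmem_cache. |
| kmem_cache_free | kmem_cache free interface: based on the cache's flags, free the block via RCU (kmem_rcu_free) or directly (__kmem_cache_free). |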

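SLOB exposes two interfaces: kmem_cache and kmalloc. kmalloc takes an object size and allocates a block directly from the three partial free lists. With kmem_cache, one first creates a struct kmem_cache, which records the fixed block size, the constructor ctor, and similar parameters.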
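The practical difference between the two interfaces is that a cache remembers the object size and constructor, so neither has to be supplied per call. This userspace sketch models only that idea. `struct sk_cache`, `sk_cache_alloc`, `sk_cache_free`, and the `point` example are all hypothetical, and `malloc`/`free` stand in for the SLOB calls.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical stand-in for SLOB's struct kmem_cache: the cache remembers
 * the object size and an optional constructor. */
struct sk_cache {
	size_t size;
	void (*ctor)(void *);
};

static void *sk_cache_alloc(const struct sk_cache *c)
{
	void *obj = malloc(c->size);	/* stands in for slob_alloc() */

	if (obj && c->ctor)
		c->ctor(obj);		/* kmem_cache_alloc_node() runs the ctor */
	return obj;
}

/* No size argument: the cache already knows it. */
static void sk_cache_free(const struct sk_cache *c, void *obj)
{
	(void)c;	/* real SLOB passes c->size on to slob_free() */
	free(obj);
}

struct point { int x, y; };

static void point_ctor(void *p)
{
	struct point *pt = p;

	pt->x = -1;
	pt->y = -1;
}
```

Every object handed out by such a cache arrives already initialized by its constructor, something plain kmalloc cannot offer.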

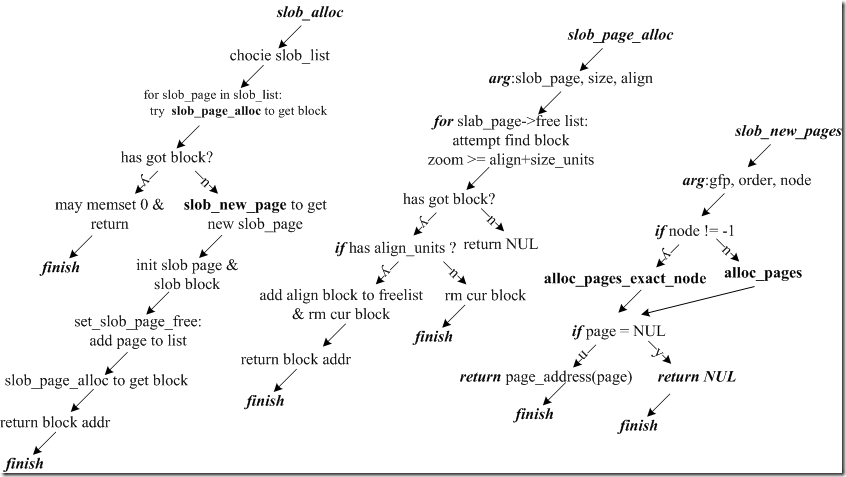

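SLOB's two core entry points are slob_alloc and slob_free, whose flows are shown in the figures below. Requests of a page or more never reach slob_alloc: the front ends (__kmalloc_node and kmem_cache_alloc_node) call alloc_pages() directly, and kmalloc asks for compound pages. For smaller requests, slob_alloc picks the free list for the size class, skips pages with too few free units, and within a page looks for the first free block with BLOCK_SIZE >= REQUIRED_UNITS (plus any alignment padding). It carves REQUIRED_UNITS off that block. On an exact fit the block is unlinked from the page's free-block list; otherwise the remainder stays on the list as a smaller free block. If the page has become completely full, it is removed from its partial free list. If no page can satisfy the request, a new page is allocated and added to the list.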
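The search inside a single page can be modelled in userspace. The sketch below is a hypothetical model, not kernel code. The `sk_*` names, the index-based page, and the use of `SK_UNITS` as the end-of-list marker are all assumptions. It applies the slob_t encoding described earlier together with the first-fit, split-or-unlink logic of slob_page_alloc(), and it leaves out alignment, locking, and the page lists.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical model of one 4 KB slob page: 2048 units of int16_t,
 * addressed by unit index. SK_UNITS doubles as "no next block", playing
 * the role of the kernel's pointer to the following page. */
#define SK_UNITS 2048

struct sk_page {
	int16_t u[SK_UNITS];	/* page contents, viewed as units */
	int free;		/* index of the first free block */
	int units;		/* free units left in the page */
};

/* Same encoding as set_slob(): {size, next} or, for 1 unit, {-next}. */
static void sk_set(struct sk_page *p, int s, int size, int next)
{
	if (size > 1) {
		p->u[s] = (int16_t)size;
		p->u[s + 1] = (int16_t)next;
	} else {
		p->u[s] = (int16_t)-next;
	}
}

static int sk_size(const struct sk_page *p, int s)
{
	return p->u[s] > 0 ? p->u[s] : 1;
}

static int sk_next(const struct sk_page *p, int s)
{
	return p->u[s] < 0 ? -p->u[s] : p->u[s + 1];
}

/* A fresh page is a single free block covering all units. */
static void sk_init(struct sk_page *p)
{
	p->free = 0;
	p->units = SK_UNITS;
	sk_set(p, 0, SK_UNITS, SK_UNITS);
}

/* First-fit allocation of n units, following slob_page_alloc() minus the
 * alignment step. Returns the block index, or -1 if nothing fits. */
static int sk_alloc(struct sk_page *p, int n)
{
	int prev = -1;

	for (int cur = p->free; cur < SK_UNITS; prev = cur, cur = sk_next(p, cur)) {
		int avail = sk_size(p, cur);
		int next, repl;

		if (avail < n)
			continue;
		next = sk_next(p, cur);
		if (avail == n) {
			repl = next;			/* exact fit: unlink cur */
		} else {
			repl = cur + n;			/* split: the tail stays free */
			sk_set(p, repl, avail - n, next);
		}
		if (prev < 0)
			p->free = repl;
		else
			sk_set(p, prev, sk_size(p, prev), repl);
		p->units -= n;
		return cur;
	}
	return -1;
}
```

Splitting always keeps the tail of the chosen block on the free list, so the list stays in ascending address order without any extra sorting.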

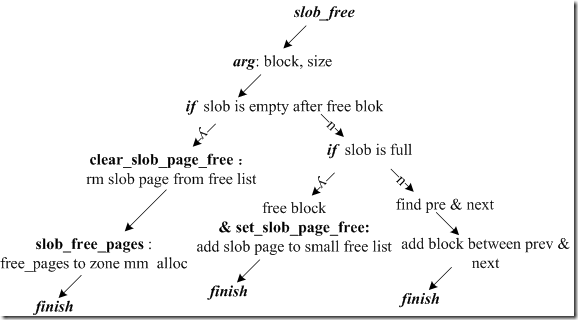

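slob_free does not see page-sized allocations either: kfree releases those with put_page(), and __kmem_cache_free releases them with free_pages(). Otherwise, slob_free inserts the block into the page's address-ordered free-block list and coalesces it with any adjacent free blocks. If the page becomes completely free, it is returned to the page allocator with free_pages(). If the page was previously full (and therefore on no list), it is put back on the free_slob_small partial list, regardless of its original size class.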

Figure: flowchart of the SLOB alloc function

Figure: flowchart of the SLOB slob_free function
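The reinsertion-and-coalescing step of slob_free can be modelled the same way. The sketch below is a self-contained, hypothetical userspace model (the `sk_*` names, the index-based page, and `SK_UNITS` as the end marker are assumptions). It follows the structure of slob_free(): a new list head, or an insertion between prev and next, merging with the following block and then with the preceding one. Unlike the kernel, it keeps a page that becomes entirely free instead of releasing it.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical one-page model: 2048 int16_t units, blocks addressed by
 * unit index, SK_UNITS meaning "no next block". */
#define SK_UNITS 2048

struct sk_page {
	int16_t u[SK_UNITS];
	int free;	/* index of first free block, SK_UNITS if the page is full */
	int units;	/* free units left */
};

static void sk_set(struct sk_page *p, int s, int size, int next)
{
	if (size > 1) {
		p->u[s] = (int16_t)size;
		p->u[s + 1] = (int16_t)next;
	} else {
		p->u[s] = (int16_t)-next;
	}
}

static int sk_size(const struct sk_page *p, int s)
{
	return p->u[s] > 0 ? p->u[s] : 1;
}

static int sk_next(const struct sk_page *p, int s)
{
	return p->u[s] < 0 ? -p->u[s] : p->u[s + 1];
}

/* Free n units at index b, keeping the list address-ordered and merging
 * with free neighbours. */
static void sk_free(struct sk_page *p, int b, int n)
{
	int prev, next;

	p->units += n;
	if (b < p->free) {
		/* b becomes the new list head (this also covers a full page). */
		next = p->free;
		if (next < SK_UNITS && b + n == next) {
			n += sk_size(p, next);
			next = sk_next(p, next);
		}
		sk_set(p, b, n, next);
		p->free = b;
		return;
	}

	/* Find prev < b < next. */
	prev = p->free;
	next = sk_next(p, prev);
	while (b > next) {
		prev = next;
		next = sk_next(p, prev);
	}

	if (next < SK_UNITS && b + n == next) {	/* absorb the following block */
		n += sk_size(p, next);
		next = sk_next(p, next);
	}
	if (prev + sk_size(p, prev) == b) {	/* merge into the preceding block */
		sk_set(p, prev, sk_size(p, prev) + n, next);
	} else {
		sk_set(p, b, n, next);
		sk_set(p, prev, sk_size(p, prev), b);
	}
}
```

Freeing the middle of three adjacent allocated blocks last, after its two neighbours, merges everything back into one block that spans the whole page. Address ordering is what makes these neighbour checks a constant-time comparison.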

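For kmem_cache, the allocation and free entry points are kmem_cache_alloc_node and kmem_cache_free. kmem_cache_alloc_node takes the block's parameters from the kmem_cache and, if a constructor is set, initializes the object. If the cache's flags request RCU freeing (SLAB_DESTROY_BY_RCU), each block reserves room for a struct slob_rcu footer. kmem_cache_free then frees such a block asynchronously: it registers the callback kmem_rcu_free with call_rcu, and the block is released only after an RCU grace period.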
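The RCU footer is located purely by pointer arithmetic: forward from the block start in kmem_cache_free, and backward from the footer in kmem_rcu_free. The userspace sketch below reproduces only that arithmetic. `struct sk_rcu_footer` is a hypothetical stand-in for `struct slob_rcu`: two pointers approximate the size of a `struct rcu_head`, which is an assumption. The `sk_*` helpers are likewise hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-in for struct slob_rcu; the kernel's first member is
 * a struct rcu_head, approximated here by two pointers. */
struct sk_rcu_footer {
	void *rcu_head_placeholder[2];
	int size;		/* whole block size: object + footer */
};

/* kmem_cache_create() enlarges the cache size to make room for the footer. */
static size_t sk_cache_size(size_t object_size)
{
	return object_size + sizeof(struct sk_rcu_footer);
}

/* kmem_cache_free(): the footer sits at the very end of the block. */
static struct sk_rcu_footer *sk_footer_of(void *b, size_t cache_size)
{
	return (struct sk_rcu_footer *)((char *)b +
			(cache_size - sizeof(struct sk_rcu_footer)));
}

/* kmem_rcu_free(): step back from the footer to the block start. */
static void *sk_block_of(struct sk_rcu_footer *f)
{
	return (char *)f - (f->size - sizeof(struct sk_rcu_footer));
}
```

Because the footer stores the full block size, the RCU callback needs no pointer to the kmem_cache to find the block start or to know how much to free.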

SLOB Allocator Source Code

```c
/*
 * SLOB Allocator: Simple List Of Blocks
 *
 * Matt Mackall <mpm@selenic.com> 12/30/03
 *
 * NUMA support by Paul Mundt, 2007.
 *
 * How SLOB works:
 *
 * The core of SLOB is a traditional K&R style heap allocator, with
 * support for returning aligned objects. The granularity of this
 * allocator is as little as 2 bytes, however typically most architectures
 * will require 4 bytes on 32-bit and 8 bytes on 64-bit.
 *
 * The slob heap is a set of linked list of pages from alloc_pages(),
 * and within each page, there is a singly-linked list of free blocks
 * (slob_t). The heap is grown on demand. To reduce fragmentation,
 * heap pages are segregated into three lists, with objects less than
 * 256 bytes, objects less than 1024 bytes, and all other objects.
 *
 * Allocation from heap involves first searching for a page with
 * sufficient free blocks (using a next-fit-like approach) followed by
 * a first-fit scan of the page. Deallocation inserts objects back
 * into the free list in address order, so this is effectively an
 * address-ordered first fit.
 *
 * Above this is an implementation of kmalloc/kfree. Blocks returned
 * from kmalloc are prepended with a 4-byte header with the kmalloc size.
 * If kmalloc is asked for objects of PAGE_SIZE or larger, it calls
 * alloc_pages() directly, allocating compound pages so the page order
 * does not have to be separately tracked, and also stores the exact
 * allocation size in page->private so that it can be used to accurately
 * provide ksize(). These objects are detected in kfree() because slob_page()
 * is false for them.
 *
 * SLAB is emulated on top of SLOB by simply calling constructors and
 * destructors for every SLAB allocation. Objects are returned with the
 * 4-byte alignment unless the SLAB_HWCACHE_ALIGN flag is set, in which
 * case the low-level allocator will fragment blocks to create the proper
 * alignment. Again, objects of page-size or greater are allocated by
 * calling alloc_pages(). As SLAB objects know their size, no separate
 * size bookkeeping is necessary and there is essentially no allocation
 * space overhead, and compound pages aren't needed for multi-page
 * allocations.
 *
 * NUMA support in SLOB is fairly simplistic, pushing most of the real
 * logic down to the page allocator, and simply doing the node accounting
 * on the upper levels. In the event that a node id is explicitly
 * provided, alloc_pages_exact_node() with the specified node id is used
 * instead. The common case (or when the node id isn't explicitly provided)
 * will default to the current node, as per numa_node_id().
 *
 * Node aware pages are still inserted in to the global freelist, and
 * these are scanned for by matching against the node id encoded in the
 * page flags. As a result, block allocations that can be satisfied from
 * the freelist will only be done so on pages residing on the same node,
 * in order to prevent random node placement.
 */

/*
 * SLOB is suited to embedded systems and is usually counted among the
 * slab-class allocators. Its code is small and simple, and its management
 * is much less involved than that of Linux's SLAB allocator; for example,
 * it has no per-CPU caches. To reduce fragmentation, SLOB keeps its pages
 * on three lists by object size: < 256 bytes, < 1024 bytes, and everything
 * else. kmalloc requests of PAGE_SIZE or more go straight to alloc_pages(),
 * and the exact allocation size is stored in the head page's private
 * field. Within a page, all free blocks are chained into a free list, each
 * described by a slob_t header.
 */

#include <linux/kernel.h>
#include <linux/slab.h>
#include <linux/mm.h>
#include <linux/swap.h> /* struct reclaim_state */
#include <linux/cache.h>
#include <linux/init.h>
#include <linux/module.h>
#include <linux/rcupdate.h>
#include <linux/list.h>
#include <linux/kmemtrace.h>
#include <linux/kmemleak.h>
#include <asm/atomic.h>

/*
 * slob_block has a field 'units', which indicates size of block if +ve,
 * or offset of next block if -ve (in SLOB_UNITs).
 *
 * Free blocks of size 1 unit simply contain the offset of the next block.
 * Those with larger size contain their size in the first SLOB_UNIT of
 * memory, and the offset of the next free block in the second SLOB_UNIT.
 */
#if PAGE_SIZE <= (32767 * 2)
typedef s16 slobidx_t;
#else
typedef s32 slobidx_t;
#endif

struct slob_block {
	slobidx_t units;
};
typedef struct slob_block slob_t;
```

```c
/*
 * struct slob_page is a reinterpretation of struct page: comparing the two
 * structures shows how their fields map onto each other, and the excerpt
 * of struct page below is annotated with the corresponding slob_page
 * fields. With 4 KB pages slobidx_t is only 2 bytes, so units covers just
 * part of the _mapcount union. This is harmless: the bytes involved belong
 * to a union whose other members (inuse/objects) are used only by the SLUB
 * allocator, and free_slob_page() resets _mapcount before the page is
 * returned.
 */
#if 0	/* excerpt of struct page (include/linux/mm_types.h), not compiled */
struct page {
	unsigned long flags;		/* slob_page: flags */
	atomic_t _count;		/* slob_page: _count */
	union {				/* slob_page: units (slobidx_t, 2 bytes with 4 KB pages) */
		atomic_t _mapcount;
		struct {
			u16 inuse;
			u16 objects;
		};
	};
	union {				/* slob_page: pad[2] */
		struct {
			unsigned long private;
			struct address_space *mapping;
		};
		struct kmem_cache *slab;
		struct page *first_page;
	};
	union {				/* slob_page: free, first free slob_t in page */
		pgoff_t index;
		void *freelist;
	};
	struct list_head lru;		/* slob_page: list, linked list of free pages */
};
#endif

/*
 * We use struct page fields to manage some slob allocation aspects,
 * however to avoid the horrible mess in include/linux/mm_types.h, we'll
 * just define our own struct page type variant here.
 */
struct slob_page {
	union {
		struct {
			unsigned long flags;	/* mandatory */
			atomic_t _count;	/* mandatory */
			slobidx_t units;	/* free units left in page */
			unsigned long pad[2];
			slob_t *free;		/* first free slob_t in page */
			struct list_head list;	/* linked list of free pages */
		};
		struct page page;
	};
};
static inline void struct_slob_page_wrong_size(void)
{ BUILD_BUG_ON(sizeof(struct slob_page) != sizeof(struct page)); }
```

```c
/*
 * free_slob_page: call before a slob_page is returned to the page allocator.
 */
static inline void free_slob_page(struct slob_page *sp)
{
	reset_page_mapcount(&sp->page);
	sp->page.mapping = NULL;
}

/*
 * All partially free slob pages go on these lists.
 */
#define SLOB_BREAK1 256
#define SLOB_BREAK2 1024
static LIST_HEAD(free_slob_small);
static LIST_HEAD(free_slob_medium);
static LIST_HEAD(free_slob_large);

/*
 * is_slob_page: True for all slob pages (false for bigblock pages)
 */
static inline int is_slob_page(struct slob_page *sp)
{
	return PageSlab((struct page *)sp);
}

static inline void set_slob_page(struct slob_page *sp)
{
	__SetPageSlab((struct page *)sp);
}

static inline void clear_slob_page(struct slob_page *sp)
{
	__ClearPageSlab((struct page *)sp);
}

/* Convert a virtual address to its struct page, viewed as a slob_page. */
static inline struct slob_page *slob_page(const void *addr)
{
	return (struct slob_page *)virt_to_page(addr);
}

/*
 * slob_page_free: true for pages on free_slob_pages list.
 */
static inline int slob_page_free(struct slob_page *sp)
{
	return PageSlobFree((struct page *)sp);
}

/* Put the slob page on the given partial free list and set its SlobFree flag. */
static void set_slob_page_free(struct slob_page *sp, struct list_head *list)
{
	list_add(&sp->list, list);
	__SetPageSlobFree((struct page *)sp);
}

static inline void clear_slob_page_free(struct slob_page *sp)
{
	list_del(&sp->list);
	__ClearPageSlobFree((struct page *)sp);
}

#define SLOB_UNIT sizeof(slob_t)
#define SLOB_UNITS(size) (((size) + SLOB_UNIT - 1)/SLOB_UNIT)
#define SLOB_ALIGN L1_CACHE_BYTES
```

```c
/*
 * struct slob_rcu is inserted at the tail of allocated slob blocks, which
 * were created with a SLAB_DESTROY_BY_RCU slab. slob_rcu is used to free
 * the block using call_rcu.
 */
struct slob_rcu {
	struct rcu_head head;
	int size;
};

/*
 * slob_lock protects all slob allocator structures.
 */
static DEFINE_SPINLOCK(slob_lock);

/*
 * Encode the given size and next info into a free slob block s.
 */
static void set_slob(slob_t *s, slobidx_t size, slob_t *next)
{
	/*
	 * base is the start of the page containing s; next is stored as an
	 * offset from base, in units. Why not store the pointer itself? On a
	 * 32-bit machine a pointer takes 32 bits, whereas with 4 KB pages an
	 * offset fits in a 16-bit slobidx_t, saving 2 bytes.
	 */
	slob_t *base = (slob_t *)((unsigned long)s & PAGE_MASK);
	slobidx_t offset = next - base;

	if (size > 1) {
		/* Larger than 1 unit: s[0] = size, s[1] = +offset of next. */
		s[0].units = size;
		s[1].units = offset;
	} else
		/* Exactly 1 unit: only -offset fits; the sign marks a 1-unit block. */
		s[0].units = -offset;
}

/*
 * Return the size of a slob block.
 */
static slobidx_t slob_units(slob_t *s)
{
	if (s->units > 0)
		return s->units;
	return 1;
}

/*
 * Return the next free slob block pointer after this one.
 */
static slob_t *slob_next(slob_t *s)
{
	slob_t *base = (slob_t *)((unsigned long)s & PAGE_MASK);
	slobidx_t next;

	if (s[0].units < 0)
		next = -s[0].units;
	else
		next = s[1].units;
	return base+next;
}

/*
 * Returns true if s is the last free block in its page.
 */
/*
 * The last block's next offset points just past the page. With 4 KB pages
 * ~PAGE_MASK = 2^12 - 1. An offset of SLOB_UNITS(PAGE_SIZE) (4096 bytes)
 * makes next the start of the following page, whose low 12 bits are zero,
 * so s is the tail of the block free list.
 */
static int slob_last(slob_t *s)
{
	return !((unsigned long)slob_next(s) & ~PAGE_MASK);
}
```

```c
static void *slob_new_pages(gfp_t gfp, int order, int node)
{
	void *page;

#ifdef CONFIG_NUMA
	if (node != -1)
		page = alloc_pages_exact_node(node, gfp, order);
	else
#endif
		page = alloc_pages(gfp, order);

	if (!page)
		return NULL;

	/* Low memory only: page_address() must yield a usable kernel address. */
	return page_address(page);
}

static void slob_free_pages(void *b, int order)
{
	if (current->reclaim_state)
		current->reclaim_state->reclaimed_slab += 1 << order;
	free_pages((unsigned long)b, order);
}
```

```c
/*
 * Allocate a slob block within a given slob_page sp.
 */
static void *slob_page_alloc(struct slob_page *sp, size_t size, int align)
{
	slob_t *prev, *cur, *aligned = NULL;
	/*
	 * units: the request converted from bytes to units. While a block is
	 * free, its slob_t header reuses the first units of the block itself.
	 */
	int delta = 0, units = SLOB_UNITS(size);

	for (prev = NULL, cur = sp->free; ; prev = cur, cur = slob_next(cur)) {
		slobidx_t avail = slob_units(cur);

		if (align) {
			/* Round cur up to an align-byte boundary. */
			aligned = (slob_t *)ALIGN((unsigned long)cur, align);
			/* Subtracting slob_t pointers gives the distance in units. */
			delta = aligned - cur;
		}
		/* Is there room for the object after the alignment padding? */
		if (avail >= units + delta) { /* room enough? */
			slob_t *next;

			/*
			 * If alignment skips delta units, split the block: the
			 * head fragment [cur, aligned) stays free and links to
			 * aligned, and the block at aligned links to the old
			 * next. Then advance prev/cur so cur is the aligned block.
			 */
			if (delta) { /* need to fragment head to align? */
				next = slob_next(cur);
				set_slob(aligned, avail - delta, next);
				set_slob(cur, delta, aligned);
				prev = cur;
				cur = aligned;
				avail = slob_units(cur);
			}

			next = slob_next(cur);
			/* Exact fit: unlink cur from the free list. */
			if (avail == units) { /* exact fit? unlink. */
				if (prev)
					set_slob(prev, slob_units(prev), next);
				else
					sp->free = next;
			} else { /* fragment */
				/*
				 * The block is larger than needed: the remainder at
				 * cur + units takes cur's place on the free list.
				 */
				if (prev)
					set_slob(prev, slob_units(prev), cur + units);
				else
					sp->free = cur + units;
				set_slob(cur + units, avail - units, next);
			}

			sp->units -= units;
			if (!sp->units)
				clear_slob_page_free(sp);
			return cur;
		}
		/* End of the free list and nothing large enough: give up. */
		if (slob_last(cur))
			return NULL;
	}
}
```

```c
/*
 * slob_alloc: entry point into the slob allocator.
 */
static void *slob_alloc(size_t size, gfp_t gfp, int align, int node)
{
	struct slob_page *sp;
	struct list_head *prev;
	struct list_head *slob_list;
	slob_t *b = NULL;
	unsigned long flags;

	if (size < SLOB_BREAK1)
		slob_list = &free_slob_small;
	else if (size < SLOB_BREAK2)
		slob_list = &free_slob_medium;
	else
		slob_list = &free_slob_large;

	spin_lock_irqsave(&slob_lock, flags);
	/* Iterate through each partially free page, try to find room */
	/* Walk the pages on slob_list and try slob_page_alloc() on each one. */
	list_for_each_entry(sp, slob_list, list) {
#ifdef CONFIG_NUMA
		/*
		 * If there's a node specification, search for a partial
		 * page with a matching node id in the freelist.
		 */
		if (node != -1 && page_to_nid(&sp->page) != node)
			continue;
#endif
		/* Enough room on this page? */
		if (sp->units < SLOB_UNITS(size))
			continue;

		/* Attempt to alloc */
		prev = sp->list.prev;
		b = slob_page_alloc(sp, size, align);
		if (!b)
			continue;

		/* Improve fragment distribution and reduce our average
		 * search time by starting our next search here. (see
		 * Knuth vol 1, sec 2.5, pg 449) */
		/*
		 * A block has been allocated from sp. Unless prev is the last
		 * entry or prev->next is already first, move the list head so
		 * the next search starts at prev->next (sp itself, or its
		 * successor if sp just became full and was removed). This is
		 * the next-fit idea from Knuth.
		 */
		if (prev != slob_list->prev &&
				slob_list->next != prev->next)
			list_move_tail(slob_list, prev->next);
		break;
	}
	spin_unlock_irqrestore(&slob_lock, flags);

	/* No page on slob_list had a suitable free block: create a new slob page. */
	/* Not enough space: must allocate a new page */
	if (!b) {
		b = slob_new_pages(gfp & ~__GFP_ZERO, 0, node);
		if (!b)
			return NULL;
		/* View the new page frame as a slob_page and set PG_slab (__SetPageSlab). */
		sp = slob_page(b);
		set_slob_page(sp);

		spin_lock_irqsave(&slob_lock, flags);
		sp->units = SLOB_UNITS(PAGE_SIZE);
		sp->free = b;
		INIT_LIST_HEAD(&sp->list);
		/*
		 * The whole page is one free block. Its next offset points to
		 * the start of the following page, marking b as the last
		 * block on sp's free list.
		 */
		set_slob(b, SLOB_UNITS(PAGE_SIZE), b + SLOB_UNITS(PAGE_SIZE));
		/* Add the new slob page to slob_list. */
		set_slob_page_free(sp, slob_list);
		b = slob_page_alloc(sp, size, align);
		BUG_ON(!b);
		spin_unlock_irqrestore(&slob_lock, flags);
	}
	if (unlikely((gfp & __GFP_ZERO) && b))
		memset(b, 0, size);
	return b;
}
```
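The `list_move_tail(slob_list, prev->next)` call does not move a page; it moves the list head, so the next `list_for_each_entry` walk begins at `prev->next`. The userspace sketch below shows that rotation on a circular doubly linked list with a sentinel. The `node_*` helpers and `rotate_head_to` are hypothetical, modelled on `<linux/list.h>` rather than taken from it.

```c
#include <assert.h>

/* Minimal circular doubly linked list with a sentinel head, modelled on
 * <linux/list.h>; these helpers are illustrative, not the kernel's. */
struct node {
	struct node *prev, *next;
};

static void node_init(struct node *h)
{
	h->prev = h->next = h;
}

/* Insert n just before pos (list_add_tail semantics when pos is the head). */
static void node_insert_before(struct node *n, struct node *pos)
{
	n->prev = pos->prev;
	n->next = pos;
	pos->prev->next = n;
	pos->prev = n;
}

static void node_unlink(struct node *n)
{
	n->prev->next = n->next;
	n->next->prev = n->prev;
}

/* What list_move_tail(slob_list, prev->next) achieves in slob_alloc():
 * re-insert the head just before pos, so pos becomes the first entry. */
static void rotate_head_to(struct node *head, struct node *pos)
{
	node_unlink(head);
	node_insert_before(head, pos);
}
```

Starting from head, a, b, c, rotating to b gives head, b, c, a: the pages themselves keep their cyclic order, and only the starting point of the next scan changes.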

```c
/*
 * slob_free: entry point into the slob allocator.
 */
static void slob_free(void *block, int size)
{
	struct slob_page *sp;
	slob_t *prev, *next, *b = (slob_t *)block;
	slobidx_t units;
	unsigned long flags;

	if (unlikely(ZERO_OR_NULL_PTR(block)))
		return;
	BUG_ON(!size);

	sp = slob_page(block);
	units = SLOB_UNITS(size);

	spin_lock_irqsave(&slob_lock, flags);

	/* If freeing this block leaves the whole page free, release the page. */
	if (sp->units + units == SLOB_UNITS(PAGE_SIZE)) {
		/* Go directly to page allocator. Do not pass slob allocator */
		/*
		 * If the page is on a partial free list, unlink it and clear
		 * its SlobFree flag (__ClearPageSlobFree).
		 */
		if (slob_page_free(sp))
			clear_slob_page_free(sp);
		spin_unlock_irqrestore(&slob_lock, flags);
		/* __ClearPageSlab */
		clear_slob_page(sp);
		/* reset_page_mapcount() and clear page->mapping */
		free_slob_page(sp);
		/* Credit current->reclaim_state, then free_pages() back to the zone allocator. */
		slob_free_pages(b, 0);
		return;
	}

	/* Other blocks on this page are still allocated: the page is partially or completely full. */

	/*
	 * If the page is on no free list, it was completely full: put it on
	 * free_slob_small.
	 */
	if (!slob_page_free(sp)) {
		/* This slob page is about to become partially free. Easy! */
		sp->units = units;
		sp->free = b;
		/* b becomes the only free block on the page's free list. */
		set_slob(b, units,
			(void *)((unsigned long)(b +
					SLOB_UNITS(PAGE_SIZE)) & PAGE_MASK));
		set_slob_page_free(sp, &free_slob_small);
		goto out;
	}

	/*
	 * Otherwise the page is already partially free, so find reinsertion
	 * point.
	 */
	sp->units += units;

	/*
	 * b lies before the first free block: make it the new list head,
	 * merging with the old head if the two are adjacent.
	 */
	if (b < sp->free) {
		if (b + units == sp->free) {
			units += slob_units(sp->free);
			sp->free = slob_next(sp->free);
		}
		set_slob(b, units, sp->free);
		sp->free = b;
	} else {
		/* The free list is address-ordered, so first find the insertion point. */
		prev = sp->free;
		next = slob_next(prev);
		while (b > next) {
			prev = next;
			next = slob_next(prev);
		}

		/* Now prev < b < next (next may be the end-of-page marker). */

		/*
		 * If next is a real block adjacent to b, merge next into b and
		 * link b to next's successor.
		 */
		if (!slob_last(prev) && b + units == next) {
			units += slob_units(next);
			set_slob(b, units, slob_next(next));
		} else
			/* Otherwise just link b to next. */
			set_slob(b, units, next);

		/*
		 * If prev is adjacent to b, merge b into prev and link prev to
		 * b's successor.
		 */
		if (prev + slob_units(prev) == b) {
			units = slob_units(b) + slob_units(prev);
			set_slob(prev, units, slob_next(b));
		} else
			/* Otherwise just link prev to b. */
			set_slob(prev, slob_units(prev), b);
	}
out:
	spin_unlock_irqrestore(&slob_lock, flags);
}
```

```c
/*
 * End of slob allocator proper. Begin kmem_cache_alloc and kmalloc frontend.
 */

void *__kmalloc_node(size_t size, gfp_t gfp, int node)
{
	unsigned int *m;
	int align = max(ARCH_KMALLOC_MINALIGN, ARCH_SLAB_MINALIGN);
	void *ret;

	lockdep_trace_alloc(gfp);

	/*
	 * If the request plus the align-byte header still fits in a page,
	 * allocate a block with slob_alloc(). The header stores the object
	 * size, so block_size >= align + object_size.
	 */
	if (size < PAGE_SIZE - align) {
		if (!size)
			return ZERO_SIZE_PTR;

		m = slob_alloc(size + align, gfp, align, node);

		if (!m)
			return NULL;
		/*
		 * Store the object size in the first sizeof(unsigned int)
		 * bytes of the block and return the address just past the
		 * header: block = [align header | object].
		 */
		*m = size;
		ret = (void *)m + align;

		trace_kmalloc_node(_RET_IP_, ret,
				   size, size + align, gfp, node);
	} else {
		/*
		 * Otherwise allocate 2^order contiguous pages from the buddy
		 * allocator as a compound page (__GFP_COMP) and record the
		 * requested size in the head page's private field.
		 */
		unsigned int order = get_order(size);

		ret = slob_new_pages(gfp | __GFP_COMP, get_order(size), node);
		if (ret) {
			struct page *page;
			page = virt_to_page(ret);
			page->private = size;
		}

		trace_kmalloc_node(_RET_IP_, ret,
				   size, PAGE_SIZE << order, gfp, node);
	}

	kmemleak_alloc(ret, size, 1, gfp);
	return ret;
}
EXPORT_SYMBOL(__kmalloc_node);
```

```c
void kfree(const void *block)
{
	struct slob_page *sp;

	trace_kfree(_RET_IP_, block);

	if (unlikely(ZERO_OR_NULL_PTR(block)))
		return;
	kmemleak_free(block);

	sp = slob_page(block);
	/*
	 * A slob block: the real block starts align bytes before the object
	 * (block_addr = object_addr - align), because __kmalloc_node()
	 * prepended an align-byte size header.
	 */
	if (is_slob_page(sp)) {
		int align = max(ARCH_KMALLOC_MINALIGN, ARCH_SLAB_MINALIGN);
		unsigned int *m = (unsigned int *)(block - align);
		slob_free(m, *m + align);
	} else
		put_page(&sp->page);
}
EXPORT_SYMBOL(kfree);

/* can't use ksize for kmem_cache_alloc memory, only kmalloc */
size_t ksize(const void *block)
{
	struct slob_page *sp;

	BUG_ON(!block);
	if (unlikely(block == ZERO_SIZE_PTR))
		return 0;

	sp = slob_page(block);
	/*
	 * Return the space the object actually occupies, which may exceed
	 * the requested size because SLOB allocates in whole SLOB_UNITs.
	 */
	if (is_slob_page(sp)) {
		int align = max(ARCH_KMALLOC_MINALIGN, ARCH_SLAB_MINALIGN);
		unsigned int *m = (unsigned int *)(block - align);
		return SLOB_UNITS(*m) * SLOB_UNIT;
	} else
		/* Page-sized kmalloc: page->private holds the requested size in bytes. */
		return sp->page.private;
}
EXPORT_SYMBOL(ksize);
```

```c
/*
 * __kmalloc_node() already makes SLOB usable, so why kmem_cache? Blocks
 * from a kmem_cache all have the same size, so no per-block header is
 * needed to record the object size (with SLAB_DESTROY_BY_RCU, though,
 * block_size = object_size + sizeof(struct slob_rcu)). A kmem_cache can
 * also specify a constructor that initializes each object. The advantage
 * of __kmalloc_node(), on the other hand, is that it serves objects of
 * any size.
 */
struct kmem_cache {
	unsigned int size, align;
	unsigned long flags;
	const char *name;
	void (*ctor)(void *);
};

/* Allocate a struct kmem_cache from SLOB and initialize it. */
struct kmem_cache *kmem_cache_create(const char *name, size_t size,
	size_t align, unsigned long flags, void (*ctor)(void *))
{
	struct kmem_cache *c;

	c = slob_alloc(sizeof(struct kmem_cache),
		GFP_KERNEL, ARCH_KMALLOC_MINALIGN, -1);

	if (c) {
		c->name = name;
		c->size = size;
		/*
		 * With SLAB_DESTROY_BY_RCU, block_size = object_size +
		 * sizeof(struct slob_rcu); at free time the footer's size
		 * field records this whole block size.
		 */
		if (flags & SLAB_DESTROY_BY_RCU) {
			/* leave room for rcu footer at the end of object */
			c->size += sizeof(struct slob_rcu);
		}
		c->flags = flags;
		c->ctor = ctor;
		/* ignore alignment unless it's forced */
		c->align = (flags & SLAB_HWCACHE_ALIGN) ? SLOB_ALIGN : 0;
		if (c->align < ARCH_SLAB_MINALIGN)
			c->align = ARCH_SLAB_MINALIGN;
		if (c->align < align)
			c->align = align;
	} else if (flags & SLAB_PANIC)
		panic("Cannot create slab cache %s\n", name);

	kmemleak_alloc(c, sizeof(struct kmem_cache), 1, GFP_KERNEL);
	return c;
}
EXPORT_SYMBOL(kmem_cache_create);

/* Return a struct kmem_cache to SLOB. */
void kmem_cache_destroy(struct kmem_cache *c)
{
	kmemleak_free(c);
	if (c->flags & SLAB_DESTROY_BY_RCU)
		rcu_barrier();
	slob_free(c, sizeof(struct kmem_cache));
}
EXPORT_SYMBOL(kmem_cache_destroy);
```

```c
/*
 * Allocate one object as described by the kmem_cache. If c->size is below
 * a page, take a block from slob_alloc(); otherwise allocate pages
 * directly from the buddy allocator.
 */
void *kmem_cache_alloc_node(struct kmem_cache *c, gfp_t flags, int node)
{
	void *b;

	if (c->size < PAGE_SIZE) {
		/*
		 * Only c->size is requested: unlike __kmalloc_node() there is
		 * no align-byte size header, because the object size is
		 * already recorded in the kmem_cache.
		 */
		b = slob_alloc(c->size, flags, c->align, node);
		trace_kmem_cache_alloc_node(_RET_IP_, b, c->size,
					    SLOB_UNITS(c->size) * SLOB_UNIT,
					    flags, node);
	} else {
		b = slob_new_pages(flags, get_order(c->size), node);
		trace_kmem_cache_alloc_node(_RET_IP_, b, c->size,
					    PAGE_SIZE << get_order(c->size),
					    flags, node);
	}

	/*
	 * Run the constructor. __kmalloc_node() cannot initialize objects
	 * this way, which is one advantage of kmem_cache_alloc_node().
	 */
	if (c->ctor)
		c->ctor(b);

	kmemleak_alloc_recursive(b, c->size, 1, c->flags, flags);
	return b;
}
EXPORT_SYMBOL(kmem_cache_alloc_node);
```

```c
static void __kmem_cache_free(void *b, int size)
{
	/* The object pointer is the block start; no offset adjustment is needed. */
	if (size < PAGE_SIZE)
		slob_free(b, size);
	else
		slob_free_pages(b, get_order(size));
}

/*
 * RCU callback. An RCU block is laid out as [object | slob_rcu], and
 * slob_rcu->size holds the whole block size, so the block start is found
 * by stepping back from the footer.
 */
static void kmem_rcu_free(struct rcu_head *head)
{
	struct slob_rcu *slob_rcu = (struct slob_rcu *)head;
	void *b = (void *)slob_rcu - (slob_rcu->size - sizeof(struct slob_rcu));

	__kmem_cache_free(b, slob_rcu->size);
}

/*
 * Return a block to SLOB through the kmem_cache interface. If the cache
 * was created with SLAB_DESTROY_BY_RCU, the block is freed via RCU.
 */
void kmem_cache_free(struct kmem_cache *c, void *b)
{
	kmemleak_free_recursive(b, c->flags);
	if (unlikely(c->flags & SLAB_DESTROY_BY_RCU)) {
		struct slob_rcu *slob_rcu;
		slob_rcu = b + (c->size - sizeof(struct slob_rcu));
		INIT_RCU_HEAD(&slob_rcu->head);
		slob_rcu->size = c->size;
		/*
		 * Queue kmem_rcu_free() as an RCU callback and return at once.
		 * After a grace period (once every CPU has left its pre-existing
		 * read-side critical sections), kmem_rcu_free() runs and frees
		 * the block, which is no longer in use.
		 */
		call_rcu(&slob_rcu->head, kmem_rcu_free);
	} else {
		__kmem_cache_free(b, c->size);
	}

	trace_kmem_cache_free(_RET_IP_, b);
}
EXPORT_SYMBOL(kmem_cache_free);
```

```c
unsigned int kmem_cache_size(struct kmem_cache *c)
{
	return c->size;
}
EXPORT_SYMBOL(kmem_cache_size);

const char *kmem_cache_name(struct kmem_cache *c)
{
	return c->name;
}
EXPORT_SYMBOL(kmem_cache_name);

int kmem_cache_shrink(struct kmem_cache *d)
{
	return 0;
}
EXPORT_SYMBOL(kmem_cache_shrink);

int kmem_ptr_validate(struct kmem_cache *a, const void *b)
{
	return 0;
}

static unsigned int slob_ready __read_mostly;

int slab_is_available(void)
{
	return slob_ready;
}

void __init kmem_cache_init(void)
{
	slob_ready = 1;
}

void __init kmem_cache_init_late(void)
{
	/* Nothing to do */
}
```

