一、创建数据库

create database hive;二、创建表

- 创建员工表

create table t_emp ( id int, name string, age int, dept_name string ) ROW FORMAT DELIMITED FIELDS TERMINATED BY ',';

- 创建员工文件 emp.txt

1,张三,30,销售部 2,李四,31,市场部 3,王五,32,研发部 4,孙六,33,行政部 - 导入数据

LOAD DATA [LOCAL] INPATH 'filepath' [OVERWRITE] INTO TABLE tablename [PARTITION (partcol1=val1, partcol2=val2 ...)]LOAD DATA LOCAL INPATH '/root/emp.txt' INTO TABLE t_emp; - 查询员工总数

hive> select count(*) from t_emp; Query ID = root_20160531150116_fa9fcc80-eb98-4e84-ba50-646e4e56d9aa Total jobs = 1 Launching Job 1 out of 1 Number of reduce tasks determined at compile time: 1 In order to change the average load for a reducer (in bytes): set hive.exec.reducers.bytes.per.reducer=<number> In order to limit the maximum number of reducers: set hive.exec.reducers.max=<number> In order to set a constant number of reducers: set mapreduce.job.reduces=<span style="font-family: Arial, Helvetica, sans-serif;"><number></span> Starting Job = job_1464666884311_0002, Tracking URL = http://node1:8088/proxy/application_1464666884311_0002/ Kill Command = /home/hadoop-2.5/bin/hadoop job -kill job_1464666884311_0002 Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 1 2016-05-31 15:03:09,162 Stage-1 map = 0%, reduce = 0% 2016-05-31 15:04:09,510 Stage-1 map = 0%, reduce = 0% 2016-05-31 15:04:39,292 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 16.43 sec 2016-05-31 15:05:39,300 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 16.43 sec 2016-05-31 15:05:46,423 Stage-1 map = 100%, reduce = 67%, Cumulative CPU 19.93 sec 2016-05-31 15:05:49,925 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 23.47 sec MapReduce Total cumulative CPU time: 23 seconds 470 msec Ended Job = job_1464666884311_0002 MapReduce Jobs Launched: Stage-Stage-1: Map: 1 Reduce: 1 Cumulative CPU: 25.84 sec HDFS Read: 6793 HDFS Write: 2 SUCCESS Total MapReduce CPU Time Spent: 25 seconds 840 msec OK 4 Time taken: 281.847 seconds, Fetched: 1 row(s)

三、数据类型

参考文档:https://cwiki.apache.org/confluence/display/Hive/LanguageManual+Types

四、导入数据 LOAD

LOCAL:导入本地数据LOAD DATA [LOCAL] INPATH 'filepath' [OVERWRITE] INTO TABLE tablename [PARTITION (partcol1=val1, partcol2=val2 ...)]

filepath: 相对路径project/data1、绝对路径/user/hive/project/data1、完整url hdfs://namenode:9000/user/hive/project/data1等

OVERWRITE: 覆盖文件

PARTITION: 分区,即按名称保存数据的文件夹

五、插入数据 INSERT

Standard syntax:

INSERT OVERWRITE TABLE tablename1 [PARTITION (partcol1=val1, partcol2=val2 ...) [IF NOT EXISTS]] select_statement1 FROM from_statement;

INSERT INTO TABLE tablename1 [PARTITION (partcol1=val1, partcol2=val2 ...)] select_statement1 FROM from_statement;

Hive extension (multiple inserts):

FROM from_statement

INSERT OVERWRITE TABLE tablename1 [PARTITION (partcol1=val1, partcol2=val2 ...) [IF NOT EXISTS]] select_statement1

[INSERT OVERWRITE TABLE tablename2 [PARTITION ... [IF NOT EXISTS]] select_statement2]

[INSERT INTO TABLE tablename2 [PARTITION ...] select_statement2] ...;

FROM from_statement

INSERT INTO TABLE tablename1 [PARTITION (partcol1=val1, partcol2=val2 ...)] select_statement1

[INSERT INTO TABLE tablename2 [PARTITION ...] select_statement2]

[INSERT OVERWRITE TABLE tablename2 [PARTITION ... [IF NOT EXISTS]] select_statement2] ...;

Hive extension (dynamic partition inserts):

INSERT OVERWRITE TABLE tablename PARTITION (partcol1[=val1], partcol2[=val2] ...) select_statement FROM from_statement;

INSERT INTO TABLE tablename PARTITION (partcol1[=val1], partcol2[=val2] ...) select_statement FROM from_statement;

例如:

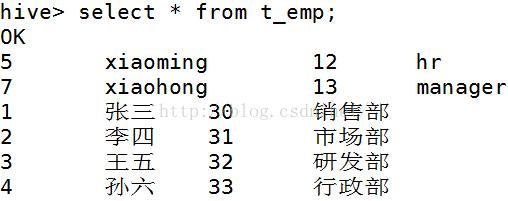

hive> insert into table t_emp values (5,'xiaoming',12,'hr'),(7,'xiaohong',13,'manager');- 创建数据库

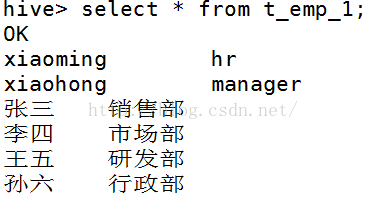

插入数据到t_emp_1hive> create table t_emp_1 ( > name varchar(50), > dname varchar(50));

hive> insert into t_emp_1 select name, dept_name from t_emp group dept_name;

六、导出 Export

EXPORT TABLE tablename [PARTITION (part_column="value"[, ...])]

TO 'export_target_path' [ FOR replication('eventid') ]hive> export table t_emp to '/usr/file/emp.txt';七、导入 Import

IMPORT [[EXTERNAL] TABLE new_or_original_tablename [PARTITION (part_column="value"[, ...])]]

FROM 'source_path'

[LOCATION 'import_target_path']

https://cwiki.apache.org/confluence/display/Hive/LanguageManual+DML#LanguageManualDML-InsertingdataintoHiveTablesfromqueries

126

126

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?