Overview

What Is Apache Hadoop?

The Apache™ Hadoop® project develops open-source software for reliable, scalable, distributed computing.

The Apache Hadoop software library is a framework that allows for the distributed processing of large data sets across clusters of computers using simple programming models. It is designed to scale up from single servers to thousands of machines, each offering local computation and storage. Rather than rely on hardware to deliver high-availability, the library itself is designed to detect and handle failures at the application layer, so delivering a highly-available service on top of a cluster of computers, each of which may be prone to failures.


The core of the Hadoop 2 framework consists of HDFS, MapReduce, and YARN, which together provide storage and computation for very large data sets.

HDFS: distributed file system (storage)

MapReduce: distributed computation

YARN: resource (memory + CPU) management and job scheduling/monitoring

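1. Deployment Modes

1) Standalone mode: a single Java process; rarely used.

2) Pseudo-distributed mode: multiple Java processes on one machine; for development and learning.

3) Cluster mode: multiple Java processes across multiple machines; for production.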

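2. Pseudo-Distributed Deployment

There are two ways to obtain the Hadoop package:

1) Build it from source with Maven (the approach used in this walkthrough)

2) Download a prebuilt binary package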

3. Planning

| System | IP address | Hostname | Software |
|---|---|---|---|
| CentOS-6.8-x86_64 | 192.168.10.10 | hadoop | hadoop-2.8.3.tar.gz |

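4. Preparation

4.1 Configure the hostname and a static IP address (omitted)

4.2 Configure the Aliyun yum mirror (omitted)

4.3 Install Oracle JDK 1.8 (avoid OpenJDK where possible); download link omitted

4.4 Set the global Java environment variables and verify them (omitted)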

5. Hadoop Installation

5.1 Create the hadoop user and group

[root@hadoop ~]# useradd -u 515 -m hadoop -s /bin/bash

[root@hadoop ~]# vim /etc/sudoers

hadoop ALL=(root) NOPASSWD:ALL

5.2 Extract the Hadoop package built from source

[root@hadoop ~]# tar -xf /tmp/hadoop-rel-release-2.8.3/hadoop-dist/target/hadoop-2.8.3.tar.gz -C /usr/local/

[root@hadoop ~]# cd /usr/local/

[root@hadoop local]# ln -s hadoop-2.8.3/ hadoop

[root@hadoop local]# chown hadoop.hadoop -R /usr/local/hadoop

[root@hadoop local]# chown hadoop.hadoop -R /usr/local/hadoop-2.8.3/

5.3 Set global environment variables for the Hadoop home directory

[root@hadoop local]# vim /etc/profile

JAVA_HOME=/usr/java/jdk
MAVEN_HOME=/usr/local/apache-maven
FINDBUGS_HOME=/usr/local/findbugs
FORREST_HOME=/usr/local/apache-forrest
HADOOP_HOME=/usr/local/hadoop
PATH=$JAVA_HOME/bin:$MAVEN_HOME/bin:$FINDBUGS_HOME/bin:$FORREST_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH
CLASSPATH=$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar
export JAVA_HOME
export MAVEN_HOME
export FINDBUGS_HOME
export FORREST_HOME
export HADOOP_HOME
export PATH
export CLASSPATH

[root@hadoop local]# source /etc/profile

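####Verify the global environment variables (type hdfs and press Tab; the completions come from $HADOOP_HOME/bin)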

[root@hadoop local]# hdfs

hdfs hdfs.cmd hdfs-config.cmd hdfs-config.sh
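The same settings can be kept in a separate file and verified in a subshell. A minimal sketch, assuming the paths used in this walkthrough; write_hadoop_profile and check_hadoop_path are hypothetical helpers. The snippet keeps only the variables Hadoop needs at run time (MAVEN_HOME, FINDBUGS_HOME and FORREST_HOME above are presumably only needed for the source build). On CentOS, /etc/profile sources every *.sh file in /etc/profile.d/, so the snippet can live there instead of in /etc/profile itself.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write a profile snippet exporting the Hadoop variables.
# Paths follow this walkthrough; adjust them to your installation.
write_hadoop_profile() {
  local target="$1"
  # Quoted 'EOF' keeps $JAVA_HOME etc. literal so they expand when sourced.
  cat > "$target" <<'EOF'
export JAVA_HOME=/usr/java/jdk
export HADOOP_HOME=/usr/local/hadoop
export PATH=$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH
EOF
}

# Hypothetical helper: source the snippet in a subshell and check that both
# $HADOOP_HOME/bin and $HADOOP_HOME/sbin end up on PATH. Prints "ok" on success.
check_hadoop_path() {
  local profile="$1"
  (
    # shellcheck source=/dev/null
    . "$profile"
    case ":$PATH:" in
      *":$HADOOP_HOME/bin:"*) ;;
      *) echo "missing $HADOOP_HOME/bin"; exit 1 ;;
    esac
    case ":$PATH:" in
      *":$HADOOP_HOME/sbin:"*) echo "ok" ;;
      *) echo "missing $HADOOP_HOME/sbin"; exit 1 ;;
    esac
  )
}
```

As root, `write_hadoop_profile /etc/profile.d/hadoop.sh && check_hadoop_path /etc/profile.d/hadoop.sh` should print ok; in a new login shell, `command -v hdfs` should then print /usr/local/hadoop/bin/hdfs.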

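5.4 Configuration files

Official documentation for the Hadoop core configuration file

Official documentation for the HDFS service configuration file

####Configure core-site.xml (Hadoop core configuration)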

[root@hadoop hadoop]# vim etc/hadoop/core-site.xml

<configuration>
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://localhost:9000</value>
    </property>
</configuration>

####Configure hdfs-site.xml (HDFS service configuration)

[root@hadoop hadoop]# vim etc/hadoop/hdfs-site.xml

<configuration>
    <property>
        <name>dfs.replication</name>
        <value>1</value>
    </property>
</configuration>
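Both files can also be written non-interactively, which helps when the setup is repeated on another machine. A minimal sketch; write_pseudo_dist_conf is a hypothetical helper, and it overwrites the two files, dropping the license header the shipped versions carry.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write the pseudo-distributed core-site.xml and
# hdfs-site.xml shown above into a Hadoop configuration directory.
# Usage: write_pseudo_dist_conf /usr/local/hadoop/etc/hadoop
write_pseudo_dist_conf() {
  local conf_dir="$1"
  mkdir -p "$conf_dir"

  # core-site.xml: default file system URI (the NameNode RPC address)
  cat > "$conf_dir/core-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://localhost:9000</value>
    </property>
</configuration>
EOF

  # hdfs-site.xml: with a single DataNode, only one replica per block is possible
  cat > "$conf_dir/hdfs-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
    <property>
        <name>dfs.replication</name>
        <value>1</value>
    </property>
</configuration>
EOF
}
```

After writing the files, `hdfs getconf -confKey fs.defaultFS` should print hdfs://localhost:9000, which confirms that Hadoop actually reads the intended directory.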

####Configure hadoop-env.sh (Hadoop environment)
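Setting JAVA_HOME explicitly here matters because start-dfs.sh launches the daemons over SSH, and that non-interactive shell does not necessarily read /etc/profile. The shipped line `export JAVA_HOME=${JAVA_HOME}` can then expand to an empty value, and the daemons fail with an error that JAVA_HOME is not set. A minimal sketch of the same edit done non-interactively; set_hadoop_java_home is a hypothetical helper and relies on GNU sed's `-i`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: replace (or append) the JAVA_HOME export in hadoop-env.sh.
# Usage: set_hadoop_java_home /usr/local/hadoop/etc/hadoop/hadoop-env.sh /usr/java/jdk
set_hadoop_java_home() {
  local env_file="$1" java_home="$2"
  if grep -q '^export JAVA_HOME=' "$env_file"; then
    # Rewrite the existing line in place; '|' as delimiter avoids escaping '/'.
    sed -i "s|^export JAVA_HOME=.*|export JAVA_HOME=${java_home}|" "$env_file"
  else
    # No export line yet: append one.
    echo "export JAVA_HOME=${java_home}" >> "$env_file"
  fi
}
```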

[root@hadoop hadoop]# vim etc/hadoop/hadoop-env.sh

export JAVA_HOME=/usr/java/jdk

5.5 Configure SSH trust (passwordless login) for the hadoop user

[root@hadoop hadoop]# su - hadoop

[hadoop@hadoop ~]$ ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/home/hadoop/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/hadoop/.ssh/id_rsa.

Your public key has been saved in /home/hadoop/.ssh/id_rsa.pub.

The key fingerprint is:

41:f4:8c:83:77:7f:1b:05:59:7f:cb:dd:11:7f:0b:e0 hadoop@hadoop

The key's randomart image is:

+--[ RSA 2048]----+

| .o . .+.|

| o +. . ..+|

| . = +E . .*|

| . + . o.O|

| S . o+o|

| . o |

| . |

| |

| |

+-----------------+

[hadoop@hadoop ~]$ cd .ssh/

[hadoop@hadoop .ssh]$ mv id_rsa.pub authorized_keys

[hadoop@hadoop .ssh]$ chmod 600 authorized_keys

####Verify passwordless login

[hadoop@hadoop .ssh]$ ssh hadoop

RSA key fingerprint is 8b:a9:2e:6a:0c:5a:db:b0:d9:26:65:36:39:fd:8c:6f.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'hadoop,192.168.10.10' (RSA) to the list of known hosts.

[hadoop@hadoop ~]$ exit

logout

Connection to 10.70.193.215 closed.
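The interactive key setup above can be scripted. A minimal sketch: `-N ""` sets an empty passphrase and `-f` the key path, so ssh-keygen asks no questions; appending to authorized_keys (instead of `mv`) keeps id_rsa.pub and any keys that are already authorized. With sshd's default StrictModes, keys are rejected if ~/.ssh or authorized_keys are writable by others, hence the chmod calls. setup_passwordless_ssh is a hypothetical helper; the directory is a parameter so it can be tried on a scratch directory first.

```bash
#!/usr/bin/env bash
# Hypothetical helper: create an RSA key pair without prompts and authorize
# it for the same user. Safe to run twice.
# Usage: setup_passwordless_ssh "$HOME/.ssh"
setup_passwordless_ssh() {
  local ssh_dir="$1"
  mkdir -p "$ssh_dir"
  chmod 700 "$ssh_dir"
  # Generate a key only if none exists (-q: quiet, -N "": empty passphrase).
  if [ ! -f "$ssh_dir/id_rsa" ]; then
    ssh-keygen -q -t rsa -N "" -f "$ssh_dir/id_rsa"
  fi
  # Append the public key unless that exact line is already authorized.
  touch "$ssh_dir/authorized_keys"
  if ! grep -qxF "$(cat "$ssh_dir/id_rsa.pub")" "$ssh_dir/authorized_keys"; then
    cat "$ssh_dir/id_rsa.pub" >> "$ssh_dir/authorized_keys"
  fi
  chmod 600 "$ssh_dir/authorized_keys"
}
```

Once the host key has been accepted, `ssh -o BatchMode=yes localhost true` fails instead of prompting for a password, which makes it a convenient non-interactive check.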

5.6 Format the HDFS file system

[hadoop@hadoop .ssh]$ hdfs namenode -format

17/12/23 14:57:08 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

17/12/23 14:57:10 INFO namenode.FSImage: Allocated new BlockPoolId: BP-1241967460-127.0.0.1-1514012230107

17/12/23 14:57:10 INFO common.Storage: Storage directory /tmp/hadoop-hadoop/dfs/name has been successfully formatted.

17/12/23 14:57:10 INFO namenode.FSImageFormatProtobuf: Saving image file /tmp/hadoop-hadoop/dfs/name/current/fsimage.ckpt_0000000000000000000 using no compression

17/12/23 14:57:10 INFO namenode.FSImageFormatProtobuf: Image file /tmp/hadoop-hadoop/dfs/name/current/fsimage.ckpt_0000000000000000000 of size 323 bytes saved in 0 seconds.

17/12/23 14:57:10 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

17/12/23 14:57:10 INFO util.ExitUtil: Exiting with status 0

17/12/23 14:57:10 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at localhost/127.0.0.1

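************************************************************/

1. Which setting determines the default storage path?

Storage directory: /tmp/hadoop-hadoop/dfs/name

2. What does hadoop-hadoop mean?

core-default.xml (overridable in core-site.xml):

hadoop.tmp.dir: /tmp/hadoop-${user.name}

A base for other temporary directories.

hdfs-default.xml (overridable in hdfs-site.xml):

dfs.namenode.name.dir: file://${hadoop.tmp.dir}/dfs/name

So hadoop-hadoop is hadoop-${user.name} expanded for the hadoop user, and the NameNode metadata is stored under ${hadoop.tmp.dir}/dfs/name by default. Outside of throwaway environments this directory should not be under /tmp (on Ubuntu, for example, /tmp is cleared on reboot).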
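The default expansion can be mimicked in a few lines of shell to see which directories a given user's NameNode and DataNode use when nothing is overridden. default_dfs_dirs is a hypothetical helper, not part of Hadoop: it only reproduces the documented default values and does not read the actual configuration.

```bash
#!/usr/bin/env bash
# Hypothetical helper reproducing Hadoop's documented defaults:
#   hadoop.tmp.dir        = /tmp/hadoop-${user.name}
#   dfs.namenode.name.dir = file://${hadoop.tmp.dir}/dfs/name
#   dfs.datanode.data.dir = file://${hadoop.tmp.dir}/dfs/data
default_dfs_dirs() {
  local user="$1"
  local tmp_dir="/tmp/hadoop-${user}"
  echo "name=${tmp_dir}/dfs/name"
  echo "data=${tmp_dir}/dfs/data"
}
```

For the hadoop user, `default_dfs_dirs hadoop` prints name=/tmp/hadoop-hadoop/dfs/name, the same path reported as "Storage directory" in the format log. To move both directories out of /tmp, set hadoop.tmp.dir in core-site.xml to a persistent location before formatting.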

5.7 Start HDFS and verify

[hadoop@hadoop ~]$ start-dfs.sh

Starting namenodes on [localhost]

localhost: starting namenode, logging to /usr/local/hadoop-2.8.3/logs/hadoop-hadoop-namenode-hadoop.out

localhost: starting datanode, logging to /usr/local/hadoop-2.8.3/logs/hadoop-hadoop-datanode-hadoop.out

Starting secondary namenodes [0.0.0.0]

0.0.0.0: Warning: Permanently added '0.0.0.0' (RSA) to the list of known hosts.

0.0.0.0: starting secondarynamenode, logging to /usr/local/hadoop-2.8.3/logs/hadoop-hadoop-secondarynamenode-hadoop.out

[hadoop@hadoop ~]$ jps

2615 Jps

1817 NameNode

2153 SecondaryNameNode

1962 DataNode

5.8 Configure MapReduce and YARN

Hadoop MapReduce configuration file

Hadoop YARN service configuration file
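In Hadoop 2.x, etc/hadoop ships mapred-site.xml only as mapred-site.xml.template, so `vim etc/hadoop/mapred-site.xml` starts a new file (copying the template first works too). The two files configured below can also be written in one step. A minimal sketch; write_yarn_conf is a hypothetical helper, and existing files are backed up with a .bak suffix before being overwritten.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write mapred-site.xml and yarn-site.xml so that
# MapReduce jobs run on YARN.
# Usage: write_yarn_conf /usr/local/hadoop/etc/hadoop
write_yarn_conf() {
  local conf_dir="$1" f
  # Keep a copy of any existing file before overwriting it.
  for f in mapred-site.xml yarn-site.xml; do
    if [ -f "$conf_dir/$f" ]; then
      cp "$conf_dir/$f" "$conf_dir/$f.bak"
    fi
  done

  # Submit MapReduce jobs to YARN instead of the local job runner.
  cat > "$conf_dir/mapred-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
    <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
    </property>
</configuration>
EOF

  # Enable the NodeManager shuffle service that serves map output to reducers.
  cat > "$conf_dir/yarn-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
    <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
    </property>
</configuration>
EOF
}
```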

####Configure mapred-site.xml (MapReduce configuration)

[root@hadoop hadoop]# vim etc/hadoop/mapred-site.xml

<configuration>
    <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
    </property>
</configuration>

####Configure yarn-site.xml (YARN service configuration)

[root@hadoop hadoop]# vim etc/hadoop/yarn-site.xml

<configuration>
    <!-- Site specific YARN configuration properties -->
    <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
    </property>
</configuration>

5.9 Start YARN and verify

[hadoop@hadoop-master ~]$ start-yarn.sh

starting yarn daemons

starting resourcemanager, logging to /usr/local/hadoop-2.6.0-cdh5.7.0/logs/yarn-hadoop-resourcemanager-hadoop-master.out

localhost: starting nodemanager, logging to /usr/local/hadoop-2.6.0-cdh5.7.0/logs/yarn-hadoop-nodemanager-hadoop-master.out

[hadoop@hadoop-master ~]$ jps

15141 NodeManager

5592 SecondaryNameNode

15177 Jps

5321 NameNode

15050 ResourceManager

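5406 DataNode

6. Change fs.defaultFS in core-site.xml

Change the host part of fs.defaultFS (the default file system URI) from the hostname to the IP address 192.168.10.10, so that the NameNode RPC service (port 9000) listens on that address.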
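The same edit can be scripted with sed. A minimal sketch that rewrites the host in every hdfs://HOST:PORT value of the given file, assuming fs.defaultFS is the only such URI in core-site.xml; set_default_fs_host is a hypothetical helper. sed treats the file as plain text rather than XML, and `-i` assumes GNU sed.

```bash
#!/usr/bin/env bash
# Hypothetical helper: point fs.defaultFS at a new host, keeping the port.
# Usage: set_default_fs_host /usr/local/hadoop/etc/hadoop/core-site.xml 192.168.10.10
set_default_fs_host() {
  local core_site="$1" host="$2"
  # Capture the port in group 1 and reuse it after the new host.
  sed -i -E "s|hdfs://[^:/<]+:([0-9]+)|hdfs://${host}:\1|" "$core_site"
}
```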

6.1 Edit core-site.xml

[hadoop@hadoop-master hadoop]$ cd /usr/local/hadoop/etc/hadoop

[hadoop@hadoop-master hadoop]$ vim core-site.xml

<configuration>
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://192.168.10.10:9000</value>
    </property>
</configuration>

6.2 Re-format the file system

[hadoop@hadoop-master hadoop]$ bin/hdfs namenode -format

18/01/01 17:25:25 INFO namenode.FSNamesystem: Retry cache on namenode is enabled

18/01/01 17:25:25 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis

18/01/01 17:25:25 INFO util.GSet: Computing capacity for map NameNodeRetryCache

18/01/01 17:25:25 INFO util.GSet: VM type = 64-bit

18/01/01 17:25:25 INFO util.GSet: 0.029999999329447746% max memory 889 MB = 273.1 KB

18/01/01 17:25:25 INFO util.GSet: capacity = 2^15 = 32768 entries

18/01/01 17:25:25 INFO namenode.NNConf: ACLs enabled? false

18/01/01 17:25:25 INFO namenode.NNConf: XAttrs enabled? true

18/01/01 17:25:25 INFO namenode.NNConf: Maximum size of an xattr: 16384

Re-format filesystem in Storage Directory /tmp/hadoop-hadoop/dfs/name ? (Y or N) Y <-- type Y to confirm re-formatting

18/01/01 17:25:26 INFO namenode.FSImage: Allocated new BlockPoolId: BP-1676650874-192.168.10.10-1514798726625

18/01/01 17:25:26 INFO common.Storage: Storage directory /tmp/hadoop-hadoop/dfs/name has been successfully formatted.

18/01/01 17:25:27 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

18/01/01 17:25:27 INFO util.ExitUtil: Exiting with status 0

18/01/01 17:25:27 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at hadoop-master/192.168.10.10

************************************************************/

[hadoop@hadoop-master hadoop]$ jps

2464 DataNode

3459 Jps

2823 ResourceManager

2925 NodeManager

2669 SecondaryNameNode

2367 NameNode
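Rather than reading the jps listing by eye, it can be checked against the five daemons a pseudo-distributed HDFS + YARN node should run. A minimal sketch; check_daemons is a hypothetical helper that reads jps output on standard input and returns non-zero if anything is missing.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `jps` output on stdin and report missing daemons.
check_daemons() {
  local running missing=0 d
  # jps prints "<pid> <MainClass>"; keep only the class names.
  running=$(awk '{print $2}')
  for d in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    # -x: whole-line match, so "NameNode" does not match "SecondaryNameNode".
    if ! grep -qx "$d" <<< "$running"; then
      echo "missing: $d"
      missing=1
    fi
  done
  return "$missing"
}
```

Usage: `jps | check_daemons && echo "all daemons running"`.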

[hadoop@hadoop-master hadoop]$ netstat -tulnp | grep 9000

(Not all processes could be identified, non-owned process info

will not be shown, you would have to be root to see it all.)

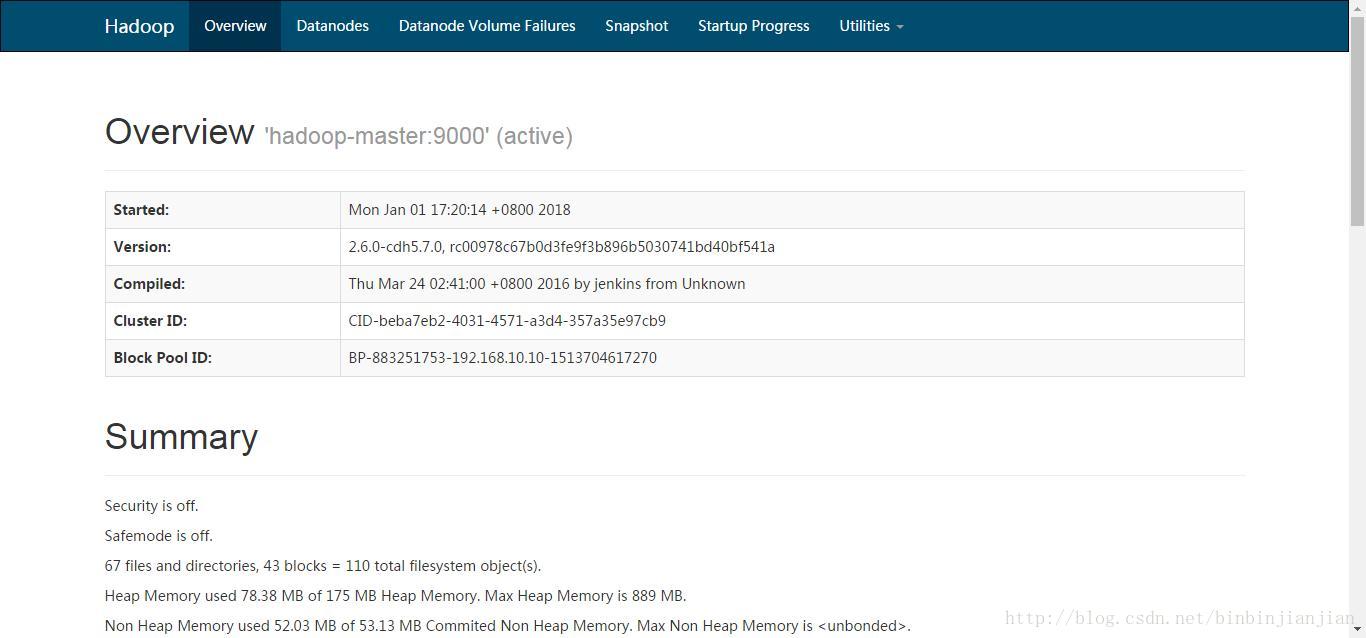

tcp 0 0 192.168.10.10:9000 0.0.0.0:* LISTEN 2367/java

6.3 Browse the NameNode web UI (http://192.168.10.10:50070, the Hadoop 2.x default port)

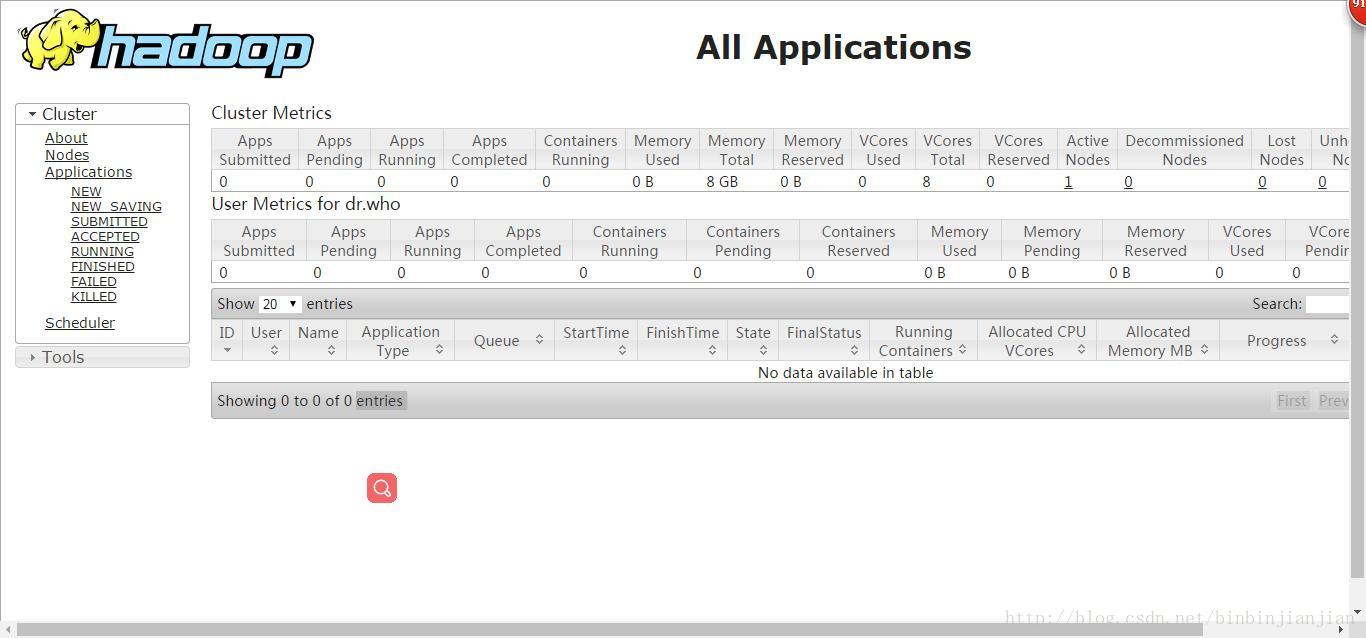

6.4 Browse the ResourceManager web UI (http://192.168.10.10:8088 by default)
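Before opening the web UIs in a browser, reachability can be probed from the shell. A minimal sketch using bash's /dev/tcp redirection and coreutils `timeout`; port_open and check_hadoop_ports are hypothetical helpers, and the ports are assumed to be the Hadoop 2.x defaults (9000 from fs.defaultFS, 50070 for the NameNode UI, 8088 for the ResourceManager UI).

```bash
#!/usr/bin/env bash
# Hypothetical helper: return 0 if a TCP connection to HOST:PORT succeeds
# within 2 seconds (uses bash's built-in /dev/tcp redirection).
port_open() {
  local host="$1" port="$2"
  timeout 2 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

# Hypothetical helper: probe the NameNode RPC port and the two web UIs.
check_hadoop_ports() {
  local host="$1" port
  for port in 9000 50070 8088; do
    if port_open "$host" "$port"; then
      echo "$port open"
    else
      echo "$port closed"
    fi
  done
}
```

Usage: `check_hadoop_ports 192.168.10.10`. A port reported closed from another machine but open locally usually points at a firewall (iptables on CentOS 6) rather than at Hadoop.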

7. Configure the Secondary NameNode and the slave nodes

####Configure the Secondary NameNode

[hadoop@hadoop-master ~]$ vim /usr/local/hadoop/etc/hadoop/hdfs-site.xml

<configuration>
    <property>
        <name>dfs.replication</name>
        <value>1</value>
    </property>
    <property>
        <name>dfs.namenode.secondary.http-address</name>
        <value>hadoop-master:50090</value>
    </property>
    <property>
        <name>dfs.namenode.secondary.https-address</name>
        <value>hadoop-master:50091</value>
    </property>
</configuration>
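When *-site.xml files are edited by hand, a property block is easily pasted twice, which leaves it unclear which value is intended. A minimal sketch that lists duplicated property names; duplicate_properties is a hypothetical helper that scans the text rather than parsing XML, so commented-out properties are counted too. `xmllint --noout hdfs-site.xml` (from libxml2) can additionally confirm the file is well-formed.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print property names that occur more than once
# in a Hadoop *-site.xml file (plain-text scan, not an XML parser).
duplicate_properties() {
  grep -o '<name>[^<]*</name>' "$1" \
    | sed 's|</\?name>||g' \
    | sort \
    | uniq -d
}
```

Usage: `duplicate_properties /usr/local/hadoop/etc/hadoop/hdfs-site.xml` prints nothing when every name is unique.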

####Configure the slaves file

[hadoop@hadoop-master ~]$ vim /usr/local/hadoop/etc/hadoop/slaves

hadoop-master
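The hostname hadoop-master used in the slaves file and in the Secondary NameNode addresses must resolve to this machine, typically through /etc/hosts (an assumption; DNS works as well). A minimal sketch that looks a name up in a hosts-format file; host_ip is a hypothetical helper. `getent hosts hadoop-master` performs the same lookup through the system resolver.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the first IP address mapped to NAME in a
# hosts-format file.
# Usage: host_ip hadoop-master /etc/hosts
host_ip() {
  local name="$1" hosts_file="${2:-/etc/hosts}"
  # Skip comment lines; fields 2..NF are the hostname and its aliases.
  awk -v n="$name" '
    $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) { print $1; exit } }
  ' "$hosts_file"
}
```

On this machine, `host_ip hadoop-master` should print 192.168.10.10.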

####Restart HDFS so the configuration takes effect (stop it with stop-dfs.sh first if it is already running)

[hadoop@hadoop-master ~]$ start-dfs.sh

Starting namenodes on [hadoop-master]

hadoop-master: starting namenode, logging to /usr/local/hadoop-2.6.0-cdh5.7.0/logs/hadoop-hadoop-namenode-hadoop-master.out

hadoop-master: starting datanode, logging to /usr/local/hadoop-2.6.0-cdh5.7.0/logs/hadoop-hadoop-datanode-hadoop-master.out

Starting secondary namenodes [hadoop-master]

hadoop-master: starting secondarynamenode, logging to /usr/local/hadoop-2.6.0-cdh5.7.0/logs/hadoop-hadoop-secondarynamenode-hadoop-master.out

[hadoop@hadoop-master ~]$ start-yarn.sh

starting yarn daemons

starting resourcemanager, logging to /usr/local/hadoop-2.6.0-cdh5.7.0/logs/yarn-hadoop-resourcemanager-hadoop-master.out

hadoop-master: starting nodemanager, logging to /usr/local/hadoop-2.6.0-cdh5.7.0/logs/yarn-hadoop-nodemanager-hadoop-master.out
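start-dfs.sh and start-yarn.sh leave daemons that are already running untouched (they report that the daemon is running and skip it), so edited configuration is only picked up after a stop. A minimal sketch of a full restart; restart_hadoop is a hypothetical helper, and it assumes $HADOOP_HOME/sbin is on PATH as set in /etc/profile earlier. YARN is stopped before HDFS and started after it, since YARN jobs read and write HDFS.

```bash
#!/usr/bin/env bash
# Hypothetical helper: restart HDFS and YARN so edited *-site.xml files are
# re-read. Stops at the first script that fails.
restart_hadoop() {
  stop-yarn.sh  || return 1
  stop-dfs.sh   || return 1
  start-dfs.sh  || return 1
  start-yarn.sh || return 1
}
```

Usage (as the hadoop user): `restart_hadoop && jps`.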
