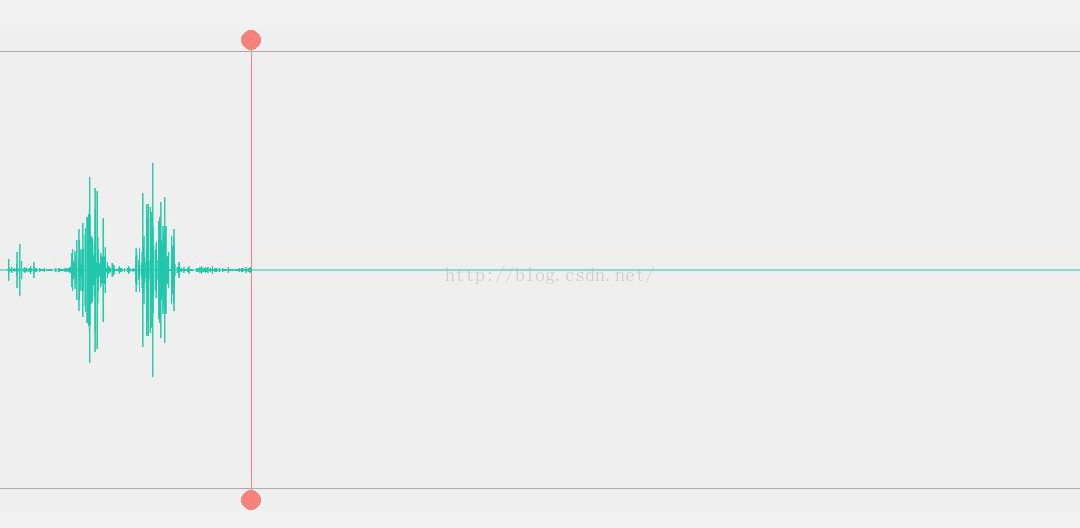

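Today I'd like to share how to record audio on Android and draw its waveform. If it isn't clear what feature I mean, look at the image above. I searched online and most of what I found was poorly written, and some authors had dropped the code straight into their projects without even understanding the principle behind it. Our local "gurus" really are something else.

The image above shows the waveform being drawn while recording is in progress. Let's start with the principle, which touches on some audio basics. If my explanation isn't clear, you'll have to work it out yourself (in the end, you can only rely on yourself).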

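A waveform audio device captures sound through the microphone, converts it to numeric values, and stores them in memory or on disk as a waveform file with the extension .WAV, which can then be played back. Digitized waveform audio is a serial bit stream in binary form, encoded according to a standard, with the data organized in time order.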
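To make "converting sound to numeric values" concrete, here is a minimal sketch that generates the 16-bit samples of a pure sine tone in time order. The class and method names (`SineSketch`, `tone`) are made up for illustration and are not part of the article's project.

```java
/** Hypothetical helper: produces 16-bit PCM samples of a sine tone. */
final class SineSketch {
    /**
     * @param freqHz     tone frequency in Hz
     * @param sampleRate samples per second
     * @param numSamples how many samples to generate
     */
    static short[] tone(double freqHz, int sampleRate, int numSamples) {
        short[] out = new short[numSamples];
        for (int n = 0; n < numSamples; n++) {
            double t = (double) n / sampleRate;               // time of sample n, in seconds
            double v = Math.sin(2 * Math.PI * freqHz * t);    // amplitude in [-1, 1]
            out[n] = (short) Math.round(v * Short.MAX_VALUE); // quantize to 16 bits
        }
        return out;
    }
}
```

Each array element is one sample; written out in order as little-endian bytes, this is exactly the kind of raw PCM stream discussed below.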

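Some readers will object at this point: doesn't Android record raw PCM? Why are you talking about WAV? In fact, PCM data with a WAVE header in front of it is a WAV file. I'll go through the header format shortly; first, here is the code that converts PCM to WAV.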

```java
import java.io.FileInputStream;
import java.io.FileOutputStream;

// Note: WaveHeader is the author's helper class; it is not shown in this post.
public class Pcm2Wav {

    public void convertAudioFiles(String src, String target) throws Exception {
        FileInputStream fis = new FileInputStream(src);
        FileOutputStream fos = new FileOutputStream(target);

        // First pass: compute the length of the PCM data
        byte[] buf = new byte[1024 * 4];
        int size = fis.read(buf);
        int PCMSize = 0;
        while (size != -1) {
            PCMSize += size;
            size = fis.read(buf);
        }
        fis.close();

        // Fill in the parameters (sample rate and so on). Here: 16-bit mono at 16000 Hz
        WaveHeader header = new WaveHeader();
        // Length field = payload size (PCMSize) + header size, excluding the
        // 4-byte "RIFF" identifier and the 4 bytes of fileLength itself
        header.fileLength = PCMSize + (44 - 8);
        header.FmtHdrLeth = 16;
        header.BitsPerSample = 16;
        header.Channels = 1;
        header.FormatTag = 0x0001;
        header.SamplesPerSec = 16000;
        header.BlockAlign = (short) (header.Channels * header.BitsPerSample / 8);
        header.AvgBytesPerSec = header.BlockAlign * header.SamplesPerSec;
        header.DataHdrLeth = PCMSize;

        byte[] h = header.getHeader();
        assert h.length == 44; // the canonical WAV header is 44 bytes

        // Write the header
        fos.write(h, 0, h.length);

        // Second pass: write the data stream
        fis = new FileInputStream(src);
        size = fis.read(buf);
        while (size != -1) {
            fos.write(buf, 0, size);
            size = fis.read(buf);
        }
        fis.close();
        fos.close();
    }
}
```

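Anyone who has done this before will say: just add a header; the algorithms floating around online simply prepend 44 bytes in the right format. (That 44-byte layout is the most common one. Other header layouts exist as well; this is just a reminder.)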

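| Offset | Size (bytes) | Type | Description |
| --- | --- | --- | --- |
| 00H | 4 | char | "RIFF" marker |
| 04H | 4 | long int | File length (total file size minus 8 bytes) |
| 08H | 4 | char | "WAVE" marker |
| 0CH | 4 | char | "fmt " marker (with a trailing space) |
| 10H | 4 | long int | Size of the format chunk (10H, i.e. 16, for PCM) |
| 14H | 2 | int | Format tag (1 means PCM audio data) |
| 16H | 2 | int | Number of channels: 1 for mono, 2 for stereo |
| 18H | 4 | long int | Sample rate (samples per second), i.e. the playback speed of each channel |
| 1CH | 4 | long int | Data transfer rate in bytes per second: channels × samples per second × bits per sample / 8. Players use this value to estimate the buffer size. |
| 20H | 2 | int | Block alignment in bytes: channels × bits per sample / 8. Players process data in multiples of this size and use it to align their buffers. |
| 22H | 2 | int | Bits per sample for each channel. With multiple channels, the sample size is the same for every channel. |
| 24H | 4 | char | "data" marker |
| 28H | 4 | long int | Length of the audio data |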
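The `WaveHeader` class used by `Pcm2Wav` is not shown in this post. As a rough stand-in, the following sketch writes the 44-byte layout from the table using `java.nio.ByteBuffer` in little-endian order. `WavHeaderSketch` and `build` are hypothetical names, and this is not the author's implementation.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.charset.StandardCharsets;

/** Hypothetical helper: builds the canonical 44-byte PCM WAV header. */
final class WavHeaderSketch {
    static byte[] build(int pcmSize, int sampleRate, int channels, int bitsPerSample) {
        int blockAlign = channels * bitsPerSample / 8;
        int byteRate = blockAlign * sampleRate;
        ByteBuffer b = ByteBuffer.allocate(44).order(ByteOrder.LITTLE_ENDIAN);
        b.put("RIFF".getBytes(StandardCharsets.US_ASCII)); // 00H marker
        b.putInt(pcmSize + (44 - 8));                      // 04H file length minus 8
        b.put("WAVE".getBytes(StandardCharsets.US_ASCII)); // 08H marker
        b.put("fmt ".getBytes(StandardCharsets.US_ASCII)); // 0CH marker (trailing space)
        b.putInt(16);                                      // 10H format chunk size
        b.putShort((short) 1);                             // 14H format tag: PCM
        b.putShort((short) channels);                      // 16H channel count
        b.putInt(sampleRate);                              // 18H samples per second
        b.putInt(byteRate);                                // 1CH bytes per second
        b.putShort((short) blockAlign);                    // 20H block alignment
        b.putShort((short) bitsPerSample);                 // 22H bits per sample
        b.put("data".getBytes(StandardCharsets.US_ASCII)); // 24H marker
        b.putInt(pcmSize);                                 // 28H audio data length
        return b.array();
    }
}
```

For the settings used in the conversion code, `WavHeaderSketch.build(pcmSize, 16000, 1, 16)` would give the bytes to write before the PCM data.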

That is the WAV header. So which of these fields matter for our waveform? Got a headache again? Haha, let cokus walk you through it.

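The bit depth is the number of bits used to describe each sample of the digital signal. 8 bits gives 2^8 = 256 levels, and 16 bits gives 2^16 = 65536 levels (65536 / 1024 = 64K).

The sample rate is the number of times per second the sound signal is sampled. The higher the sample rate, the more faithful the sound is in theory, and the larger the audio file.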
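The arithmetic behind these two parameters is easy to check in code. This is a small sketch with made-up names (`PcmMath` and its methods), not something taken from the project:

```java
/** Hypothetical helper: basic arithmetic on PCM parameters. */
final class PcmMath {
    /** Number of distinct amplitude levels for a given bit depth. */
    static long levels(int bitsPerSample) {
        return 1L << bitsPerSample;
    }

    /** Bytes of raw PCM produced per second of audio. */
    static int bytesPerSecond(int sampleRate, int channels, int bitsPerSample) {
        return sampleRate * channels * bitsPerSample / 8;
    }

    /** Raw PCM size in bytes for a recording of the given duration. */
    static long pcmBytes(int sampleRate, int channels, int bitsPerSample, int seconds) {
        return (long) bytesPerSecond(sampleRate, channels, bitsPerSample) * seconds;
    }
}
```

With 16-bit mono at 16000 Hz, `bytesPerSecond` gives 32000, so one minute of raw PCM comes to 1,920,000 bytes.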

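The sharper readers have probably figured it out already: we can get the sampling parameters from the WAV header, read the values from the recording buffer one by one in time order, and draw a line for each value.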
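Reading the sampling parameters back out of a header could look roughly like this. The sketch assumes the canonical 44-byte layout described above (real files may carry extra chunks, which this does not handle), and `WavHeaderReader` is a hypothetical name.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

/** Hypothetical helper: reads the fields relevant to drawing from a 44-byte WAV header. */
final class WavHeaderReader {
    final int channels;
    final int sampleRate;
    final int bitsPerSample;
    final int dataLength;

    WavHeaderReader(byte[] header) {
        if (header.length < 44) {
            throw new IllegalArgumentException("expected at least 44 header bytes");
        }
        ByteBuffer b = ByteBuffer.wrap(header).order(ByteOrder.LITTLE_ENDIAN);
        channels = b.getShort(0x16);      // 16H: channel count
        sampleRate = b.getInt(0x18);      // 18H: samples per second
        bitsPerSample = b.getShort(0x22); // 22H: bits per sample
        dataLength = b.getInt(0x28);      // 28H: audio data length in bytes
    }

    /** Number of samples per channel contained in the data section. */
    int sampleCount() {
        return dataLength / (channels * bitsPerSample / 8);
    }
}
```

Dividing `sampleCount()` by `sampleRate` gives the duration in seconds, which is what ties a sample index to a position on the time axis.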

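Our project uses 16-bit samples, so I can read directly into a short array (a byte array works too, with two bytes per sample). If you record 8-bit samples you have to use byte. Here is the code: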

```java
short[] buffer = new short[recBufSize];
audioRecord.startRecording(); // start recording
while (isRecording) {
    // Read samples from the microphone into the buffer
    int readsize = audioRecord.read(buffer, 0, recBufSize);
    synchronized (inBuf) {
        // Append data: walk the first readsize / rateX samples,
        // keeping every rateX-th one for drawing
        int len = readsize / rateX;
        for (int i = 0; i < len; i += rateX) {
            inBuf.add(buffer[i]);
        }
    }
}
```
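The loop above reads straight into a `short[]`. If you take the `byte[]` route instead, each pair of bytes has to be combined into one 16-bit sample, low byte first, since WAV PCM is little-endian. A minimal sketch follows; `PcmSamples` and its method names are invented for illustration.

```java
/** Hypothetical helper: converts raw PCM bytes to sample values. */
final class PcmSamples {
    /** Combines little-endian byte pairs into signed 16-bit samples. */
    static short[] toShorts(byte[] pcm, int length) {
        short[] out = new short[length / 2];
        for (int i = 0; i < out.length; i++) {
            int lo = pcm[2 * i] & 0xFF; // low byte, treated as unsigned
            int hi = pcm[2 * i + 1];    // high byte, keeps the sign
            out[i] = (short) ((hi << 8) | lo);
        }
        return out;
    }

    /** 8-bit WAV PCM is unsigned and centered on 128; shift it to a signed range. */
    static int unsigned8ToSigned(byte b) {
        return (b & 0xFF) - 128;
    }
}
```

The 8-bit case is included because 8-bit samples are stored unsigned, so they need re-centering before they can be drawn around a zero line.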

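That covers drawing while recording. The next step is generating the complete waveform image. The principle is the same as above; only a few parameters need to be configured dynamically. I wrapped the two separately: the live drawing during recording uses a SurfaceView, and the overall waveform uses a View.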
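Before looking at the View, it may help to see the two small calculations any waveform drawing needs: thinning out the samples, and mapping a sample value to a vertical pixel position. This is a simplified sketch with hypothetical names (`WaveformMath`, `downsample`, `sampleToY`). In particular, `downsample` strides over the whole range it is given, which is an assumption of this sketch and not necessarily what the recording loop does.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper: sample thinning and sample-to-pixel mapping. */
final class WaveformMath {
    /** Keeps every step-th sample among the first count samples. */
    static List<Short> downsample(short[] samples, int count, int step) {
        List<Short> out = new ArrayList<>();
        for (int i = 0; i < count; i += step) {
            out.add(samples[i]);
        }
        return out;
    }

    /**
     * Maps a 16-bit sample to a y coordinate in a view of the given height.
     * Silence (0) lands on the center line; positive samples go up, negative down.
     */
    static int sampleToY(short sample, int viewHeight) {
        float half = viewHeight / 2f;
        return Math.round(half - sample / 32768f * half);
    }
}
```

Drawing a vertical line from the center to `sampleToY(...)` for each kept sample, at increasing x, gives the basic waveform shape.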

package com.cokus.audio;

import com.datatang.client.R;

import android.annotation.SuppressLint;

import android.annotation.TargetApi;

import android.content.Context;

import android.graphics.Canvas;

import android.graphics.Color;

import android.graphics.DashPathEffect;

import android.graphics.Paint;

import android.os.Build;

import android.util.AttributeSet;

import android.util.Log;

import android.view.GestureDetector;

import android.view.MotionEvent;

import android.view.ScaleGestureDetector;

import android.view.View;

public class WaveformView extends View {

public interface WaveformListener {

public void waveformTouchStart(float x);

public void waveformTouchMove(float x);

public void waveformTouchEnd();

public void waveformFling(float x);

public void waveformDraw();

public void waveformZoomIn();

public void waveformZoomOut();

};

// Colors

private int line_offset;

private Paint mGridPaint;

private Paint mSelectedLinePaint;

private Paint mUnselectedLinePaint;

private Paint mUnselectedBkgndLinePaint;

private Paint mBorderLinePaint;

private Paint mPlaybackLinePaint;

private Paint mTimecodePaint;

Paint paintLine;

private int playFinish;

private SoundFile mSoundFile;

private int[] mLenByZoomLevel;

private double[][] mValuesByZoomLevel;

private double[] mZoomFactorByZoomLevel;

private int[] mHeightsAtThisZoomLevel;

private int mZoomLevel;

private int mNumZoomLevels;

private int mSampleRate;

private int mSamplesPerFrame;

private int mOffset;

private int mSelectionStart;

private int mSelectionEnd;

private int mPlaybackPos;

private float mDensity;

private float mInitialScaleSpan;

private WaveformListener mListener;

private GestureDetector mGestureDetector;

private ScaleGestureDetector mScaleGestureDetector;

private boolean mInitialized;

private int state=0;

public int getPlayFinish() {

return playFinish;

}

public void setPlayFinish(int playFinish) {

this.playFinish = playFinish;

}

public int getState() {

return state;

}

public void setState(int state) {

this.state = state;

}

public int getLine_offset() {

return line_offset;

}

public void setLine_offset(int line_offset) {

this.line_offset = line_offset;

}

public WaveformView(Context context, AttributeSet attrs) {

super(context, attrs);

// We don't want keys, the markers get these

setFocusable(false);

mGridPaint = new Paint();

mGridPaint.setAntiAlias(false);

mGridPaint.setColor(

getResources().getColor(R.drawable.grid_line));

mSelectedLinePaint = new Paint();

mSelectedLinePaint.setAntiAlias(false);

mSelectedLinePaint.setColor(

getResources().getColor(R.drawable.waveform_selected));

mUnselectedLinePaint = new Paint();

mUnselectedLinePaint.setAntiAlias(false);

mUnselectedLinePaint.setColor(

getResources().getColor(R.drawable.waveform_unselected));

mUnselectedBkgndLinePaint = new Paint();

mUnselectedBkgndLinePaint.setAntiAlias(false);

mUnselectedBkgndLinePaint.setColor(

getResources().getColor(

R.drawable.waveform_unselected_bkgnd_overlay));

mBorderLinePaint = new Paint();

mBorderLinePaint.setAntiAlias(true);

mBorderLinePaint.setStrokeWidth(1.5f);

mBorderLinePaint.setPathEffect(

new DashPathEffect(new float[] { 3.0f, 2.0f }, 0.0f));

mBorderLinePaint.setColor(

getResources().getColor(R.drawable.selection_border));

mPlaybackLinePaint = new Paint();

mPlaybackLinePaint.setAntiAlias(false);

mPlaybackLinePaint.setColor(

getResources().getColor(R.drawable.playback_indicator));

mTimecodePaint = new Paint();

mTimecodePaint.setTextSize(12);

mTimecodePaint.setAntiAlias(true);

mTimecodePaint.setColor(

getResources().getColor(R.drawable.timecode));

mTimecodePaint.setShadowLayer(

2, 1, 1,

getResources().getColor(R.drawable.timecode_shadow));

mGestureDetector = new GestureDetector(

context,

new GestureDetector.SimpleOnGestureListener() {

public boolean onFling(MotionEvent e1, MotionEvent e2, float vx, float vy) {

// mListener.waveformFling(vx);

return true;

}

}

);

mScaleGestureDetector = new ScaleGestureDetector(

context,

new ScaleGestureDetector.SimpleOnScaleGestureListener() {

@SuppressLint("NewApi")

public boolean onScaleBegin(ScaleGestureDetector d) {

Log.v("Ringdroid", "ScaleBegin " + d.getCurrentSpanX());

mInitialScaleSpan = Math.abs(d.getCurrentSpanX());

return true;

}

@SuppressLint("NewApi")

public boolean onScale(ScaleGestureDetector d) {

float scale = Math.abs(d.getCurrentSpanX());

Log.v("Ringdroid", "Scale " + (scale - mInitialScaleSpan));

if (scale - mInitialScaleSpan > 40) {

mListener.waveformZoomIn();

mInitialScaleSpan = scale;

}

if (scale - mInitialScaleSpan < -40) {

mListener.waveformZoomOut();

mInitialScaleSpan = scale;

}

return true;

}

@TargetApi(Build.VERSION_CODES.HONEYCOMB)

public void onScaleEnd(ScaleGestureDetector d) {

Log.v("Ringdroid", "ScaleEnd " + d.getCurrentSpanX());

}

}

);

mSoundFile = null;

mLenByZoomLevel = null;

mValuesByZoomLevel = null;

mHeightsAtThisZoomLevel = null;

mOffset = 0;

mPlaybackPos = -1;

mSelectionStart = 0;

mSelectionEnd = 0;

mDensity = 1.0f;

mInitialized = false;

}

public boolean hasSoundFile() {

return mSoundFile != null;

}

public void setSoundFile(SoundFile soundFile) {

mSoundFile = soundFile;

mSampleRate = mSoundFile.getSampleRate();

mSamplesPerFrame = mSoundFile.getSamplesPerFrame();

computeDoublesForAllZoomLevels();

mHeightsAtThisZoomLevel = null;

}

public boolean isInitialized() {

return mInitialized;

}

public int getZoomLevel() {

return mZoomLevel;

}

public void setZoomLevel(int zoomLevel) {

while (mZoomLevel > zoomLevel) {

zoomIn();

}

while (mZoomLevel < zoomLevel) {

zoomOut();

}

}

public boolean canZoomIn() {

//System.out.println("heihie"+mZoomLevel);

return (mZoomLevel > 0);

}

public void zoomIn() {

if (canZoomIn()) {

mZoomLevel--;

mSelectionStart *= 2;

mSelectionEnd *= 2;

mHeightsAtThisZoomLevel = null;

int offsetCenter = mOffset + getMeasuredWidth() / 2;

offsetCenter *= 2;

mOffset = offsetCenter - getMeasuredWidth() / 2;

if (mOffset < 0)

mOffset = 0;

invalidate();

}

}

public boolean canZoomOut() {

return (mZoomLevel < mNumZoomLevels - 1);

}

public void zoomOut() {

if (canZoomOut()) {

mZoomLevel++;

mSelectionStart /= 2;

mSelectionEnd /= 2;

int offsetCenter = mOffset + getMeasuredWidth() / 2;

offsetCenter /= 2;

mOffset = offsetCenter - getMeasuredWidth() / 2;

if (mOffset < 0)

mOffset = 0;

mHeightsAtThisZoomLevel = null;

invalidate();

}

}

public int maxPos() {

return mLenByZoomLevel[mZoomLevel];

}

public int secondsToFrames(double seconds) {

return (int)(1.0 * seconds * mSampleRate / mSamplesPerFrame + 0.5);

}

public int secondsToPixels(double seconds) {

double z = mZoomFactorByZoomLevel[mZoomLevel];

return (int)(z * seconds * mSampleRate / mSamplesPerFrame + 0.5);

}

public double pixelsToSeconds(int pixels) {

double z = mZoomFactorByZoomLevel[mZoomLevel];

return (mSoundFile.getmNumFramesFloat()*2 * (double)mSamplesPerFrame / (mSampleRate * z));

}

public int millisecsToPixels(int msecs) {

double z = mZoomFactorByZoomLevel[mZoomLevel];

return (int)((msecs * 1.0 * mSampleRate * z) /

(1000.0 * mSamplesPerFrame) + 0.5);

}

public int pixelsToMillisecs(int pixels) {

double z = mZoomFactorByZoomLevel[mZoomLevel];

return (int)(pixels * (1000.0 * mSamplesPerFrame) /

(mSampleRate * z) + 0.5);

}

public int pixelsToMillisecsTotal() {

double z = mZoomFactorByZoomLevel[mZoomLevel];

return (int)(mSoundFile.getmNumFramesFloat()*2 * (1000.0 * mSamplesPerFrame) /

(mSampleRate * z) + 0.5);

}

public void setParameters(int start, int end, int offset) {

mSelectionStart = start;

mSelectionEnd = end;

mOffset = offset;

}

public int getStart() {

return mSelectionStart;

}

public int getEnd() {

return mSelectionEnd;

}

public int getOffset() {

return mOffset;

}

public void setPlayback(int pos) {

mPlaybackPos = pos;

}

public void setListener(WaveformListener listener) {

mListener = listener;

}

public void recomputeHeights(float density) {

mHeightsAtThisZoomLevel = null;

mDensity = density;

mTimecodePaint.setTextSize((int)(12 * density));

invalidate();

}

protected void drawWaveformLine(Canvas canvas,

int x, int y0, int y1,

Paint paint) {

int pos = maxPos();

float rat =((float)getMeasuredWidth()/pos);

canvas.drawLine((int)(x*rat), y0*3/4+getMeasuredHeight()/8, (int)(x*rat), y1*3/4+getMeasuredHeight()/8, paint);

}

@Override

protected void onDraw(Canvas canvas) {

super.onDraw(canvas);

int measuredWidth = getMeasuredWidth();

int measuredHeight = getMeasuredHeight();

int height =measuredHeight-line_offset;

Paint centerLine = new Paint();

centerLine.setColor(Color.rgb(39, 199, 175));

canvas.drawLine(0, height*0.5f+line_offset/2, measuredWidth, height*0.5f+line_offset/2, centerLine);// center line

paintLine =new Paint();

paintLine.setColor(Color.rgb(169, 169, 169));

canvas.drawLine(0, line_offset/2,measuredWidth, line_offset/2, paintLine);// top line

// canvas.drawLine(0, height*0.25f+20, measuredWidth, height*0.25f+20, paintLine);// second line

// canvas.drawLine(0, height*0.75f+20, measuredWidth, height*0.75f+20, paintLine);// third line

canvas.drawLine(0, measuredHeight-line_offset/2-1, measuredWidth, measuredHeight-line_offset/2-1, paintLine);// bottom line

// }

if(state == 1){

mSoundFile = null;

state = 0;

return;

}

if (mSoundFile == null){

height =measuredHeight-line_offset;

centerLine = new Paint();

centerLine.setColor(Color.rgb(39, 199, 175));

canvas.drawLine(0, height*0.5f+line_offset/2, measuredWidth, height*0.5f+line_offset/2, centerLine);// center line

paintLine =new Paint();

paintLine.setColor(Color.rgb(169, 169, 169));

canvas.drawLine(0, line_offset/2,measuredWidth, line_offset/2, paintLine);// top line

// canvas.drawLine(0, height*0.25f+20, measuredWidth, height*0.25f+20, paintLine);// second line

// canvas.drawLine(0, height*0.75f+20, measuredWidth, height*0.75f+20, paintLine);// third line

canvas.drawLine(0, measuredHeight-line_offset/2-1, measuredWidth, measuredHeight-line_offset/2-1, paintLine);// bottom line

return;

}

if (mHeightsAtThisZoomLevel == null)

computeIntsForThisZoomLevel();

// Draw waveform

int start = mOffset;

int width = mHeightsAtThisZoomLevel.length - start;

int ctr = measuredHeight / 2;

if (width > measuredWidth)

width = measuredWidth;

// Draw grid

double onePixelInSecs = pixelsToSeconds(1);

boolean onlyEveryFiveSecs = (onePixelInSecs > 1.0 / 50.0);

double fractionalSecs = mOffset * onePixelInSecs;

int integerSecs = (int) fractionalSecs;

int i = 0;

while (i < width) {

i++;

fractionalSecs += onePixelInSecs;

int integerSecsNew = (int) fractionalSecs;

if (integerSecsNew != integerSecs) {

integerSecs = integerSecsNew;

if (!onlyEveryFiveSecs || 0 == (integerSecs % 5)) {

// canvas.drawLine(i, 0, i, measuredHeight, mGridPaint);

}

}

}

// Draw waveform

for (i = 0; i < width; i++) {

Paint paint;

if (i + start >= mSelectionStart &&

i + start < mSelectionEnd) {

paint = mSelectedLinePaint;

} else {

// drawWaveformLine(canvas, i, 0, measuredHeight,

// mUnselectedBkgndLinePaint);

paint = mUnselectedLinePaint;

}

paint.setColor(Color.rgb(39, 199, 175));

paint.setStrokeWidth(2);

drawWaveformLine(

canvas, i,

(ctr - mHeightsAtThisZoomLevel[start + i]),

(ctr + 1 + mHeightsAtThisZoomLevel[start + i]),

paint);

if (i + start == mPlaybackPos && playFinish != 1) {

Paint circlePaint = new Paint();// paint for the playback marker circles

circlePaint.setColor(Color.rgb(246, 131, 126));

circlePaint.setAntiAlias(true);

canvas.drawCircle(i*getMeasuredWidth()/maxPos(), line_offset/4, line_offset/4, circlePaint);// top circle

canvas.drawCircle(i*getMeasuredWidth()/maxPos(), getMeasuredHeight()-line_offset/4, line_offset/4, circlePaint);// bottom circle

canvas.drawLine(i*getMeasuredWidth()/maxPos(), 0, i*getMeasuredWidth()/maxPos(), getMeasuredHeight(), circlePaint);// vertical line

//System.out.println("current"+mPlaybackPos);

// draw the line at the current playback position

//canvas.drawLine(i*getMeasuredWidth()/maxPos(), 0, i*getMeasuredWidth()/maxPos(), measuredHeight, paint);

}

}

// Draw timecode

double timecodeIntervalSecs = 1.0;

if (timecodeIntervalSecs / onePixelInSecs < 50) {

timecodeIntervalSecs = 5.0;

}

if (timecodeIntervalSecs / onePixelInSecs < 50) {

timecodeIntervalSecs = 15.0;

}

if (mListener != null) {

mListener.waveformDraw();

}

}

public void initWaveView(View view){

}

/**

* Called once when a new sound file is added

*/

private void computeDoublesForAllZoomLevels() {

int numFrames = mSoundFile.getNumFrames();

int[] frameGains = mSoundFile.getFrameGains();

double[] smoothedGains = new double[numFrames];

if (numFrames == 1) {

smoothedGains[0] = frameGains[0];

} else if (numFrames == 2) {

smoothedGains[0] = frameGains[0];

smoothedGains[1] = frameGains[1];

} else if (numFrames > 2) {

smoothedGains[0] = (double)(

(frameGains[0] / 2.0) +

(frameGains[1] / 2.0));

for (int i = 1; i < numFrames - 1; i++) {

smoothedGains[i] = (double)(

(frameGains[i - 1] / 3.0) +

(frameGains[i ] / 3.0) +

(frameGains[i + 1] / 3.0));

}

smoothedGains[numFrames - 1] = (double)(

(frameGains[numFrames - 2] / 2.0) +

(frameGains[numFrames - 1] / 2.0));

}

// Make sure the range is no more than 0 - 255

double maxGain = 1.0;

for (int i = 0; i < numFrames; i++) {

if (smoothedGains[i] > maxGain) {

maxGain = smoothedGains[i];

}

}

double scaleFactor = 1.0;

if (maxGain > 255.0) {

scaleFactor = 255 / maxGain;

}

// Build histogram of 256 bins and figure out the new scaled max

maxGain = 0;

int gainHist[] = new int[256];

for (int i = 0; i < numFrames; i++) {

int smoothedGain = (int)(smoothedGains[i] * scaleFactor);

if (smoothedGain < 0)

smoothedGain = 0;

if (smoothedGain > 255)

smoothedGain = 255;

if (smoothedGain > maxGain)

maxGain = smoothedGain;

gainHist[smoothedGain]++;

}

// Re-calibrate the min to be 5%

double minGain = 0;

int sum = 0;

while (minGain < 255 && sum < numFrames / 20) {

sum += gainHist[(int)minGain];

minGain++;

}

// Re-calibrate the max to be 99%

sum = 0;

while (maxGain > 2 && sum < numFrames / 100) {

sum += gainHist[(int)maxGain];

maxGain--;

}

if(maxGain <=50){

maxGain = 80;

}else if(maxGain>50 && maxGain < 120){

maxGain = 142;

}else{

maxGain+=10;

}

// Compute the heights

double[] heights = new double[numFrames];

double range = maxGain - minGain;

for (int i = 0; i < numFrames; i++) {

double value = (smoothedGains[i] * scaleFactor - minGain) / range;

if (value < 0.0)

value = 0.0;

if (value > 1.0)

value = 1.0;

heights[i] = value * value;

}

mNumZoomLevels = 5;

mLenByZoomLevel = new int[5];

mZoomFactorByZoomLevel = new double[5];

mValuesByZoomLevel = new double[5][];

// Level 0 is doubled, with interpolated values

mLenByZoomLevel[0] = numFrames * 2;

System.out.println("ssnum"+numFrames);

mZoomFactorByZoomLevel[0] = 2.0;

mValuesByZoomLevel[0] = new double[mLenByZoomLevel[0]];

if (numFrames > 0) {

mValuesByZoomLevel[0][0] = 0.5 * heights[0];

mValuesByZoomLevel[0][1] = heights[0];

}

for (int i = 1; i < numFrames; i++) {

mValuesByZoomLevel[0][2 * i] = 0.5 * (heights[i - 1] + heights[i]);

mValuesByZoomLevel[0][2 * i + 1] = heights[i];

}

// Level 1 is normal

mLenByZoomLevel[1] = numFrames;

mValuesByZoomLevel[1] = new double[mLenByZoomLevel[1]];

mZoomFactorByZoomLevel[1] = 1.0;

for (int i = 0; i < mLenByZoomLevel[1]; i++) {

mValuesByZoomLevel[1][i] = heights[i];

}

// 3 more levels are each halved

for (int j = 2; j < 5; j++) {

mLenByZoomLevel[j] = mLenByZoomLevel[j - 1] / 2;

mValuesByZoomLevel[j] = new double[mLenByZoomLevel[j]];

mZoomFactorByZoomLevel[j] = mZoomFactorByZoomLevel[j - 1] / 2.0;

for (int i = 0; i < mLenByZoomLevel[j]; i++) {

mValuesByZoomLevel[j][i] =

0.5 * (mValuesByZoomLevel[j - 1][2 * i] +

mValuesByZoomLevel[j - 1][2 * i + 1]);

}

}

if (numFrames > 5000) {

mZoomLevel = 3;

} else if (numFrames > 1000) {

mZoomLevel = 2;

} else if (numFrames > 300) {

mZoomLevel = 1;

} else {

mZoomLevel = 0;

}

mInitialized = true;

}

/**

* Called the first time we need to draw when the zoom level has changed

* or the screen is resized

*/

private void computeIntsForThisZoomLevel() {

int halfHeight = (getMeasuredHeight() / 2) - 1;

mHeightsAtThisZoomLevel = new int[mLenByZoomLevel[mZoomLevel]];

for (int i = 0; i < mLenByZoomLevel[mZoomLevel]; i++) {

mHeightsAtThisZoomLevel[i] =

(int)(mValuesByZoomLevel[mZoomLevel][i] * halfHeight);

}

}

}
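Two of the numeric steps inside `computeDoublesForAllZoomLevels()` don't depend on Android at all, so they can be tried out on a plain JVM: the three-point smoothing of the frame gains, and the pairwise averaging that produces each coarser zoom level. The sketch below pulls them out into a standalone class. `GainSketch`, `smooth`, and `halve` are names invented here, and the histogram-based rescaling in between is left out.

```java
/** Hypothetical helper: isolates two numeric steps from WaveformView. */
final class GainSketch {
    /** Three-point moving average; the two end points average with their one neighbor. */
    static double[] smooth(int[] gains) {
        int n = gains.length;
        double[] s = new double[n];
        if (n <= 2) {
            for (int i = 0; i < n; i++) {
                s[i] = gains[i]; // too short to smooth
            }
            return s;
        }
        s[0] = gains[0] / 2.0 + gains[1] / 2.0;
        for (int i = 1; i < n - 1; i++) {
            s[i] = gains[i - 1] / 3.0 + gains[i] / 3.0 + gains[i + 1] / 3.0;
        }
        s[n - 1] = gains[n - 2] / 2.0 + gains[n - 1] / 2.0;
        return s;
    }

    /** Averages adjacent pairs, halving the length (one zoom-out step). */
    static double[] halve(double[] values) {
        double[] out = new double[values.length / 2];
        for (int i = 0; i < out.length; i++) {
            out[i] = 0.5 * (values[2 * i] + values[2 * i + 1]);
        }
        return out;
    }
}
```

Applying `halve` repeatedly to the level 1 values mirrors how levels 2 to 4 are filled in the class above.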

That's all for now. If anything is unclear, ask me and I'll add more. If you're interested, you can join the AndroidE族E家 QQ group: 222894364

Written by cokus
