K-means算法

- opencv中文版原文描述是:K均值是一种非监督的聚类方法,使用K个均值来表示数据的分布,其中K由用户定义。该方法跟期望最大化方法的区别是K均值的中心不是高斯分布,而且因为各个中心竞争去“俘获”最近的点,所以聚类更像肥皂泡。该方法由Steinhaus发明,并由Lloyd推广使用。

- K均值有以下3个问题.

1.它不能保证找到定位聚类中心的最佳方案,但是它能保证能收敛到某个解决方案。

2.K均值无法指出应该使用多少个类别。

3.K均值假设空间的协方差矩阵不会影响结果,或者已经归一化。 解决办法:

1.多运行几次K均值,每次聚类中心不一样,最后选择方差最小的那个结果。

2.首先将类别设为1,逐渐提高类别数,总方差会很快下降,直到出现一个拐点(不是极值点),选择拐点处对应的类别数。

3.将数据乘以协方差矩阵。即点之间的距离不是欧式距离而是Mahalanobis距离。

opencv2.4.9中代码如下:

double cv::kmeans( InputArray _data, int K,

InputOutputArray _bestLabels,

TermCriteria criteria, int attempts,

int flags, OutputArray _centers )

{

const int SPP_TRIALS = 3;//用于产生类中心时尝试的次数

Mat data = _data.getMat();//数据矩阵

bool isrow = data.rows == 1 && data.channels() > 1;

int N = !isrow ? data.rows : data.cols;

int dims = (!isrow ? data.cols : 1)*data.channels();

int type = data.depth();//要求数据个数能用32bit表示

attempts = std::max(attempts, 1);//聚类的次数

CV_Assert( data.dims <= 2 && type == CV_32F && K > 0 );//只能处理2维及以下的数据

CV_Assert( N >= K );//样本数必须大于类中心数

_bestLabels.create(N, 1, CV_32S, -1, true);//每个样本最合适的类别,初始时为-1

Mat _labels, best_labels = _bestLabels.getMat();//best_labels用于保存每次聚类时最好的类别,开始时可能为空

if( flags & CV_KMEANS_USE_INITIAL_LABELS )

{

CV_Assert( (best_labels.cols == 1 || best_labels.rows == 1) &&

best_labels.cols*best_labels.rows == N &&

best_labels.type() == CV_32S &&

best_labels.isContinuous());

best_labels.copyTo(_labels);//如果开始指定了类别,则放到_labels里

}

else

{

if( !((best_labels.cols == 1 || best_labels.rows == 1) &&

best_labels.cols*best_labels.rows == N &&

best_labels.type() == CV_32S &&

best_labels.isContinuous()))

best_labels.create(N, 1, CV_32S);

_labels.create(best_labels.size(), best_labels.type());//如果没有指定类别,则创建类别空间

}

int* labels = _labels.ptr<int>();//用于临时保存样本的类别编号

//centers用于临时保存每次迭代后类中心,old_centers用于临时保存每次迭代之前的类中心;开始时都为空

Mat centers(K, dims, type), old_centers(K, dims, type), temp(1, dims, type);

vector<int> counters(K);//保存类别样本数

vector<Vec2f> _box(dims);//包含dims个值初始化的元素,每个元素是个Vec2f

Vec2f* box = &_box[0];

double best_compactness = DBL_MAX, compactness = 0;

RNG& rng = theRNG();

int a, iter, i, j, k;

if( criteria.type & TermCriteria::EPS )

criteria.epsilon = std::max(criteria.epsilon, 0.);

else

criteria.epsilon = FLT_EPSILON;

criteria.epsilon *= criteria.epsilon;

if( criteria.type & TermCriteria::COUNT )

criteria.maxCount = std::min(std::max(criteria.maxCount, 2), 100);

else

criteria.maxCount = 100;

if( K == 1 )

{

attempts = 1;

criteria.maxCount = 2;

}

const float* sample = data.ptr<float>(0);//得到第一个样本(x,y)

for( j = 0; j < dims; j++ )//box[0] = (x,x);box[1]=(y,y)

box[j] = Vec2f(sample[j], sample[j]);

for( i = 1; i < N; i++ )

{

sample = data.ptr<float>(i);

for( j = 0; j < dims; j++ )

{

float v = sample[j];

box[j][0] = std::min(box[j][0], v);//保存第j维的最小值

box[j][1] = std::max(box[j][1], v);//保存第j维的最大值

}

}

for( a = 0; a < attempts; a++ )

{

double max_center_shift = DBL_MAX;

for( iter = 0;; )

{

swap(centers, old_centers);//迭代old_centers = centers,centers=0或centers=随机中心

if( iter == 0 && (a > 0 || !(flags & KMEANS_USE_INITIAL_LABELS)) )

{

if( flags & KMEANS_PP_CENTERS )

generateCentersPP(data, centers, K, rng, SPP_TRIALS);//尝试SPP_TRIALS次用随机中心批量填充centers

else

{

for( k = 0; k < K; k++ )

generateRandomCenter(_box, centers.ptr<float>(k), rng);//随机中心填充第k个类中心

}

}

else

{

if( iter == 0 && a == 0 && (flags & KMEANS_USE_INITIAL_LABELS) )

{//判断输入参数是否合法

for( i = 0; i < N; i++ )

CV_Assert( (unsigned)labels[i] < (unsigned)K );//确保样本i的类别号从0开始小于K。

}

// compute centers

centers = Scalar(0);

for( k = 0; k < K; k++ )

counters[k] = 0;

for( i = 0; i < N; i++ )

{

sample = data.ptr<float>(i);

k = labels[i];

float* center = centers.ptr<float>(k);

j=0;

#if CV_ENABLE_UNROLLED

for(; j <= dims - 4; j += 4 )

{

float t0 = center[j] + sample[j];

float t1 = center[j+1] + sample[j+1];

center[j] = t0;

center[j+1] = t1;

t0 = center[j+2] + sample[j+2];

t1 = center[j+3] + sample[j+3];

center[j+2] = t0;

center[j+3] = t1;

}

#endif

for( ; j < dims; j++ )

center[j] += sample[j];//第k类的第j列和,用于计算新的类中心

counters[k]++;//每类的样本数

}

if( iter > 0 )

max_center_shift = 0;

for( k = 0; k < K; k++ )

{//for 循环确保每个类都有至少一个样本

if( counters[k] != 0 )

continue;

// if some cluster appeared to be empty then:

// 1. find the biggest cluster

// 2. find the farthest from the center point in the biggest cluster

// 3. exclude the farthest point from the biggest cluster and form a new 1-point cluster.

int max_k = 0;

for( int k1 = 1; k1 < K; k1++ )

{

if( counters[max_k] < counters[k1] )

max_k = k1;//得到1,2,...K-1中样本数最大的类别

}

double max_dist = 0;

int farthest_i = -1;

float* new_center = centers.ptr<float>(k);

float* old_center = centers.ptr<float>(max_k);//样本数最大的类别的类中心

float* _old_center = temp.ptr<float>(); // normalized

float scale = 1.f/counters[max_k];

for( j = 0; j < dims; j++ )

_old_center[j] = old_center[j]*scale;//用个数归一化类中心

for( i = 0; i < N; i++ )

{

if( labels[i] != max_k )//样本i不在最大组里

continue;

sample = data.ptr<float>(i);

double dist = normL2Sqr_(sample, _old_center, dims);//样本i到最大组的欧式距离

if( max_dist <= dist )

{//得到离最大组最远的点和距离

max_dist = dist;

farthest_i = i;

}

}

//将样本i从最大组转到空组中

counters[max_k]--;

counters[k]++;

labels[farthest_i] = k;

sample = data.ptr<float>(farthest_i);

for( j = 0; j < dims; j++ )

{

old_center[j] -= sample[j];

new_center[j] += sample[j];

}

}

for( k = 0; k < K; k++ )

{

float* center = centers.ptr<float>(k);

CV_Assert( counters[k] != 0 );

float scale = 1.f/counters[k];

for( j = 0; j < dims; j++ )

center[j] *= scale; //第k类的第j列平均值

if( iter > 0 )

{

double dist = 0;

const float* old_center = old_centers.ptr<float>(k);

for( j = 0; j < dims; j++ )

{//计算k个类别新旧类中心点的欧式距离和

double t = center[j] - old_center[j];

dist += t*t;

}

max_center_shift = std::max(max_center_shift, dist);//得到最大的中心移动距离,用于确定算法是否收敛

}

}

}

if( ++iter == MAX(criteria.maxCount, 2) || max_center_shift <= criteria.epsilon )

break;

// assign labels

Mat dists(1, N, CV_64F);

double* dist = dists.ptr<double>(0);

parallel_for_(Range(0, N),

KMeansDistanceComputer(dist, labels, data, centers));//每次迭代得到样本到类中心的距离和label

compactness = 0;//compactness是逐渐减少的

for( i = 0; i < N; i++ )

{

compactness += dist[i];

}

}

if( compactness < best_compactness )

{//一次聚类完成后,计算各个样本到其类中心距离和

best_compactness = compactness;

if( _centers.needed() )

centers.copyTo(_centers);//把最终类中心结果保存到输入参数_centers

_labels.copyTo(best_labels);//把最终的样本类别保存到best_labels

}

}

return best_compactness;//返回分类的代价函数,即距离和

}

其中如果函数中flag可取如下三个:

enum

{

KMEANS_RANDOM_CENTERS=0, // Chooses random centers for k-Means initialization

KMEANS_PP_CENTERS=2, // Uses k-Means++ algorithm for initialization

KMEANS_USE_INITIAL_LABELS=1 // Uses the user-provided labels for K-Means initialization

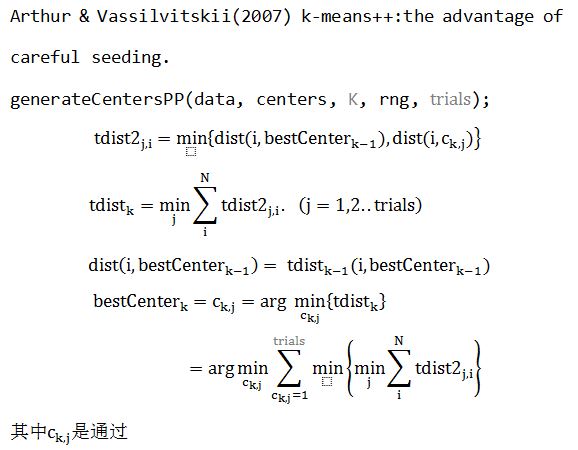

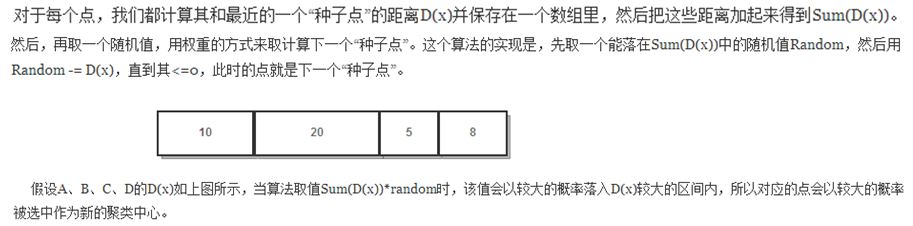

};K-means++算法的计算如下:

这个方法得到的。更详细的关于k-means的说明在http://blog.csdn.net/chlele0105/article/details/12997391。

opencv2.4.9中k-means++的代码如下:

static void generateCentersPP(const Mat& _data, Mat& _out_centers,

int K, RNG& rng, int trials)

{

int i, j, k, dims = _data.cols, N = _data.rows;

const float* data = _data.ptr<float>(0);

size_t step = _data.step/sizeof(data[0]);

vector<int> _centers(K);

int* centers = &_centers[0];

vector<float> _dist(N*3);

/*tdist2临时保存每个点到类中心的距离,tdist保存sum(tdist2)最小的tdist2,用于确定一个类中心

dist用于在确定第k个类中心时保存上一次类中心的tdist

*/

float* dist = &_dist[0], *tdist = dist + N, *tdist2 = tdist + N;

double sum0 = 0;

centers[0] = (unsigned)rng % N;//样本编号

for( i = 0; i < N; i++ )

{//计算每个样本i到样本centers[0]的欧式距离和

dist[i] = normL2Sqr_(data + step*i, data + step*centers[0], dims);

sum0 += dist[i];

}

for( k = 1; k < K; k++ )

{//根据通过随机猜的方法确定K个类中心

double bestSum = DBL_MAX;

int bestCenter = -1;

for( j = 0; j < trials; j++ )

{//从trials个候选中心选出一个作为第k个类中心

//根据距离用随机数方法确定样本i,作为类中心候选者,一共有trials个候选者

double p = (double)rng*sum0, s = 0;

for( i = 0; i < N-1; i++ )

if( (p -= dist[i]) <= 0 )

break;

int ci = i;

parallel_for_(Range(0, N),

KMeansPPDistanceComputer(tdist2, data, dist, dims, step, step*ci));

for( i = 0; i < N; i++ )

{

s += tdist2[i];

}

if( s < bestSum )

{//得到每个样本到可选类中心(随机产生)的最小距离及对应的样本

bestSum = s;

bestCenter = ci;

std::swap(tdist, tdist2);//tdist =tdist2

}

}

centers[k] = bestCenter;

sum0 = bestSum;

std::swap(dist, tdist);//dist =tdist

}

for( k = 0; k < K; k++ )

{

const float* src = data + step*centers[k];

float* dst = _out_centers.ptr<float>(k);//得到类中心的坐标值,作为输出参数

for( j = 0; j < dims; j++ )

dst[j] = src[j];

}

}

使用K-means示例代码在samples/cpp/kmeans.cpp中,不过只能对2维数据聚类。

(转载请注明作者和出处:http://blog.csdn.net/CHIERYU 未经允许请勿用于商业用途)

本文详细介绍了K-means算法的基本原理、存在的问题以及OpenCV中K-means函数的使用方法。重点阐述了算法的数学背景、优化策略和OpenCV源代码解析,帮助读者掌握如何在实际项目中应用K-means进行数据聚类。

本文详细介绍了K-means算法的基本原理、存在的问题以及OpenCV中K-means函数的使用方法。重点阐述了算法的数学背景、优化策略和OpenCV源代码解析,帮助读者掌握如何在实际项目中应用K-means进行数据聚类。

1687

1687

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?