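The previous posts briefly introduced several network models. Although, unlike some other blogs, I did not explain the underlying networking theory in depth, you should by now have a rough idea of the networking modes OpenStack supports. The installation described earliest in this blog used the FlatDHCP network mode; this post switches that deployment to GRE.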

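For OpenStack, and for cloud computing and virtualization in general, the core idea is software-defined hardware; for networking this is software-defined networking, the SDN you often see mentioned. Switching to a different network model therefore does not require reinstalling anything in an existing environment; only the configuration files change. Below I show the configuration files of each node. If you configure them as shown, you should be able to switch the original FlatDHCP setup to a GRE network.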

Note: this guide modifies the earlier deployment; it is not meant for building a GRE setup from scratch.

Controller node

In /etc/neutron/neutron.conf, make sure the following settings are present:

[DEFAULT]

core_plugin = ml2

service_plugins = router

allow_overlapping_ips = True
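To double-check that these three settings are in effect, a small helper can be run against the file. This is a minimal sketch using Python's standard configparser; the function name missing_settings and the sample text are illustrative, not part of OpenStack.

```python
import configparser


def missing_settings(text, section, expected):
    """Return {option: (expected, actual)} for options that differ from `expected`.

    Values are compared case-insensitively, so "True" and "true" match.
    """
    # interpolation=None leaves values such as $state_path/lock untouched;
    # strict=False tolerates an option repeated within one section.
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.read_string(text)
    problems = {}
    for option, want in expected.items():
        got = parser.get(section, option, fallback=None)
        if got is None or got.lower() != want.lower():
            problems[option] = (want, got)
    return problems


REQUIRED = {
    "core_plugin": "ml2",
    "service_plugins": "router",
    "allow_overlapping_ips": "True",
}

SAMPLE = """[DEFAULT]
core_plugin = ml2
service_plugins = router
allow_overlapping_ips = True
"""

# On a real node, pass open("/etc/neutron/neutron.conf").read() instead of SAMPLE.
print(missing_settings(SAMPLE, "DEFAULT", REQUIRED))  # {}
```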

root@controller:~# grep '^[a-z]' /etc/neutron/neutron.conf

state_path = /var/lib/neutron

lock_path = $state_path/lock

core_plugin = ml2

service_plugins = router

auth_strategy = keystone

dhcp_agent_notification = True

allow_overlapping_ips = True

rpc_backend = neutron.openstack.common.rpc.impl_kombu

control_exchange = neutron

rabbit_host = 192.168.3.180

rabbit_password = mq4smtest

rabbit_port = 5672

rabbit_userid = guest

notification_driver = neutron.openstack.common.notifier.rpc_notifier

notify_nova_on_port_status_changes = True

notify_nova_on_port_data_changes = True

nova_url = http://192.168.3.180:8774/v2

nova_admin_username =nova

nova_admin_tenant_id =2b71b7f509124584a0a891aae2d58f78

nova_admin_password =nova4smtest

nova_admin_auth_url = http://192.168.3.180:35357/v2.0

root_helper = sudo /usr/bin/neutron-rootwrap /etc/neutron/rootwrap.conf

auth_uri=http://192.168.3.180:5000

auth_host = 192.168.3.180

auth_port = 35357

auth_protocol = http

signing_dir = $state_path/keystone-signing

admin_tenant_name = service

admin_user = neutron

admin_password = neutron4smtest

signing_dir = $state_path/keystone-signing

connection = mysql://neutrondbadmin:neutron4smtest@192.168.3.180/neutron

service_provider=VPN:openswan:neutron.services.vpn.service_drivers.ipsec.IPsecVPNDriver:default

Next, configure the Modular Layer 2 (ML2) plugin. ML2 uses the Open vSwitch (OVS) mechanism driver to build the virtual network framework for instances.

Edit /etc/neutron/plugins/ml2/ml2_conf.ini:

[ml2]

type_drivers = gre

tenant_network_types = gre

mechanism_drivers = openvswitch

[ml2_type_gre]

tunnel_id_ranges = 1:1000

[securitygroup]

firewall_driver = neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver

enable_security_group = True

The updated settings:

root@controller:~# grep '^[a-z]' /etc/neutron/plugins/ml2/ml2_conf.ini

type_drivers = gre

tenant_network_types = gre

mechanism_drivers =openvswitch

tunnel_id_ranges = 1:1000
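tunnel_id_ranges = 1:1000 reserves GRE tunnel IDs 1 through 1000 for tenant networks, and each GRE tenant network takes one ID from that pool, so this setting caps the deployment at 1000 GRE tenant networks. As I understand it, neutron-server also refuses to start if a type listed in tenant_network_types has no matching entry in type_drivers. The sketch below checks both; the helper names are hypothetical.

```python
def parse_tunnel_ranges(value):
    """Parse "min:max[,min:max...]" (the tunnel_id_ranges format) into tuples."""
    ranges = []
    for item in value.split(","):
        item = item.strip()
        if not item:
            continue
        low, high = (int(part) for part in item.split(":"))
        if low > high:
            raise ValueError(f"invalid range {item!r}: min > max")
        ranges.append((low, high))
    return ranges


def count_tunnel_ids(ranges):
    """Total number of IDs, i.e. the maximum number of GRE tenant networks."""
    return sum(high - low + 1 for low, high in ranges)


def unsupported_tenant_types(type_drivers, tenant_network_types):
    """Tenant network types that have no loaded type driver."""
    loaded = {t.strip() for t in type_drivers.split(",") if t.strip()}
    wanted = [t.strip() for t in tenant_network_types.split(",") if t.strip()]
    return [t for t in wanted if t not in loaded]


print(count_tunnel_ids(parse_tunnel_ranges("1:1000")))  # 1000
print(unsupported_tenant_types("gre", "gre"))            # []
```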

Configure Nova to use Neutron in /etc/nova/nova.conf:

[DEFAULT]

network_api_class = nova.network.neutronv2.api.API

neutron_url = http://controller:9696

neutron_auth_strategy = keystone

neutron_admin_tenant_name = service

neutron_admin_username = neutron

neutron_admin_password = NEUTRON_PASS

neutron_admin_auth_url = http://controller:35357/v2.0

linuxnet_interface_driver = nova.network.linux_net.LinuxOVSInterfaceDriver

firewall_driver = nova.virt.firewall.NoopFirewallDriver

security_group_api = neutron
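The neutron_admin_* values are the Neutron service account Nova uses when calling the Neutron API, so they have to match the neutron user registered in Keystone. A cheap consistency check is to compare them with Neutron's own service credentials in neutron.conf. The sketch assumes those live in the [keystone_authtoken] section, as in the Icehouse packaging; pass a different section if yours differ. The function names and sample strings are illustrative.

```python
import configparser

# (nova.conf [DEFAULT] option, neutron.conf option) pairs that should agree.
PAIRS = [
    ("neutron_admin_tenant_name", "admin_tenant_name"),
    ("neutron_admin_username", "admin_user"),
    ("neutron_admin_password", "admin_password"),
]


def load(text):
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.read_string(text)
    return parser


def credential_mismatches(nova_text, neutron_text, section="keystone_authtoken"):
    """Return the option pairs whose values differ (or are missing on one side)."""
    nova, neutron = load(nova_text), load(neutron_text)
    mismatches = []
    for nova_key, neutron_key in PAIRS:
        a = nova.get("DEFAULT", nova_key, fallback=None)
        b = neutron.get(section, neutron_key, fallback=None)  # section is an assumption
        if a is None or a != b:
            mismatches.append((nova_key, neutron_key))
    return mismatches


NOVA = """[DEFAULT]
neutron_admin_tenant_name = service
neutron_admin_username = neutron
neutron_admin_password = NEUTRON_PASS
"""
NEUTRON = """[keystone_authtoken]
admin_tenant_name = service
admin_user = neutron
admin_password = NEUTRON_PASS
"""
print(credential_mismatches(NOVA, NEUTRON))  # []
```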

root@controller:~# grep '^[a-z]' /etc/nova/nova.conf

dhcpbridge_flagfile=/etc/nova/nova.conf

dhcpbridge=/usr/bin/nova-dhcpbridge

logdir=/var/log/nova

state_path=/var/lib/nova

lock_path=/var/lock/nova

force_dhcp_release=True

iscsi_helper=tgtadm

libvirt_use_virtio_for_bridges=True

connection_type=libvirt

root_helper=sudo nova-rootwrap /etc/nova/rootwrap.conf

verbose=True

ec2_private_dns_show_ip=True

api_paste_config=/etc/nova/api-paste.ini

volumes_path=/var/lib/nova/volumes

enabled_apis=ec2,osapi_compute,metadata

rpc_backend = rabbit

rabbit_host = 192.168.3.180

rabbit_userid = guest

rabbit_password = mq4smtest

rabbit_port = 5672

my_ip = 192.168.3.180

vncserver_listen = 192.168.3.180

vncserver_proxyclient_address = 192.168.3.180

auth_strategy = keystone

network_api_class = nova.network.neutronv2.api.API

neutron_url = http://192.168.3.180:9696

neutron_auth_strategy = keystone

neutron_admin_tenant_name = service

neutron_admin_username = neutron

neutron_admin_password = neutron4smtest

neutron_admin_auth_url = http://192.168.3.180:35357/v2.0

linuxnet_interface_driver = nova.network.linux_net.LinuxOVSInterfaceDriver

firewall_driver = nova.virt.firewall.NoopFirewallDriver

security_group_api = neutron

service_neutron_metadata_proxy = True

neutron_metadata_proxy_shared_secret = neutron4smtest

auth_uri = http://192.168.3.180:5000

auth_host = 192.168.3.180

auth_port = 35357

auth_protocol = http

admin_tenant_name = service

admin_user = nova

admin_password = nova4smtest

connection = mysql://novadbadmin:nova4smtest@192.168.3.180/nova

enable_security_group = True

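firewall_driver = neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver

Note: my file may look like it contains more settings than listed above. That is because configuring the network node later also requires updating some settings on the controller node (for example, the metadata proxy settings).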

Then restart the services:

# service nova-api restart

# service nova-scheduler restart

# service nova-conductor restart

# service neutron-server restart

=============================================================

Network node

1. In /etc/neutron/neutron.conf, add or modify the following:

[DEFAULT]

core_plugin = ml2

service_plugins = router

allow_overlapping_ips = True

For more detailed logs, you can optionally add verbose = True to the [DEFAULT] section.

All active settings:

sm@network:~$ sudo grep '^[a-z]' /etc/neutron/neutron.conf

state_path = /var/lib/neutron

lock_path = $state_path/lock

core_plugin = ml2

service_plugins = router

auth_strategy = keystone

allow_overlapping_ips = True

rpc_backend = neutron.openstack.common.rpc.impl_kombu

rabbit_host = 192.168.3.180

rabbit_password = mq4smtest

rabbit_port = 5672

rabbit_userid = guest

notification_driver = neutron.openstack.common.notifier.rpc_notifier

root_helper = sudo /usr/bin/neutron-rootwrap /etc/neutron/rootwrap.conf

auth_host = 192.168.3.180

auth_port = 35357

auth_protocol = http

auth_uri = http://192.168.3.180:5000

admin_tenant_name = service

admin_user = neutron

admin_password = neutron4smtest

signing_dir = $state_path/keystone-signing

connection = sqlite:var/lib/neutron/neutron.sqlite

2. For the L3 (routing) agent, edit /etc/neutron/l3_agent.ini:

[DEFAULT]

interface_driver = neutron.agent.linux.interface.OVSInterfaceDriver

use_namespaces = True

sm@network:~$ sudo cat /etc/neutron/l3_agent.ini

[DEFAULT]

# Show debugging output in log (sets DEBUG log level output)

debug = True

# L3 requires that an interface driver be set. Choose the one that best

# matches your plugin.

# interface_driver =

# Example of interface_driver option for OVS based plugins (OVS, Ryu, NEC)

# that supports L3 agent

interface_driver = neutron.agent.linux.interface.OVSInterfaceDriver

# Use veth for an OVS interface or not.

# Support kernels with limited namespace support

# (e.g. RHEL 6.5) so long as ovs_use_veth is set to True.

# ovs_use_veth = False

# Example of interface_driver option for LinuxBridge

# interface_driver = neutron.agent.linux.interface.BridgeInterfaceDriver

# Allow overlapping IP (Must have kernel build with CONFIG_NET_NS=y and

# iproute2 package that supports namespaces).

use_namespaces = True

# If use_namespaces is set as False then the agent can only configure one router.

# This is done by setting the specific router_id.

# router_id =

# When external_network_bridge is set, each L3 agent can be associated

# with no more than one external network. This value should be set to the UUID

# of that external network. To allow L3 agent support multiple external

# networks, both the external_network_bridge and gateway_external_network_id

# must be left empty.

# gateway_external_network_id =

# Indicates that this L3 agent should also handle routers that do not have

# an external network gateway configured. This option should be True only

# for a single agent in a Neutron deployment, and may be False for all agents

# if all routers must have an external network gateway

# handle_internal_only_routers = True

# Name of bridge used for external network traffic. This should be set to

# empty value for the linux bridge. when this parameter is set, each L3 agent

# can be associated with no more than one external network.

external_network_bridge = br-ex

# TCP Port used by Neutron metadata server

# metadata_port = 9697

# Send this many gratuitous ARPs for HA setup. Set it below or equal to 0

# to disable this feature.

# send_arp_for_ha = 0

# seconds between re-sync routers' data if needed

# periodic_interval = 40

# seconds to start to sync routers' data after

# starting agent

# periodic_fuzzy_delay = 5

# enable_metadata_proxy, which is true by default, can be set to False

# if the Nova metadata server is not available

# enable_metadata_proxy = True

# Location of Metadata Proxy UNIX domain socket

# metadata_proxy_socket = $state_path/metadata_proxy

# router_delete_namespaces, which is false by default, can be set to True if

# namespaces can be deleted cleanly on the host running the L3 agent.

# Do not enable this until you understand the problem with the Linux iproute

# utility mentioned in https://bugs.launchpad.net/neutron/+bug/1052535 and

# you are sure that your version of iproute does not suffer from the problem.

# If True, namespaces will be deleted when a router is destroyed.

router_delete_namespaces = True

# Timeout for ovs-vsctl commands.

# If the timeout expires, ovs commands will fail with ALARMCLOCK error.

# ovs_vsctl_timeout = 10

3. Configure the DHCP agent in /etc/neutron/dhcp_agent.ini:

[DEFAULT]

interface_driver = neutron.agent.linux.interface.OVSInterfaceDriver

dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq

use_namespaces = True

sm@network:~$ sudo cat /etc/neutron/dhcp_agent.ini

[DEFAULT]

# Show debugging output in log (sets DEBUG log level output)

# debug = False

verbose = True

# The DHCP agent will resync its state with Neutron to recover from any

# transient notification or rpc errors. The interval is number of

# seconds between attempts.

# resync_interval = 5

# The DHCP agent requires an interface driver be set. Choose the one that best

# matches your plugin.

# interface_driver =

# Example of interface_driver option for OVS based plugins(OVS, Ryu, NEC, NVP,

# BigSwitch/Floodlight)

interface_driver = neutron.agent.linux.interface.OVSInterfaceDriver

# Name of Open vSwitch bridge to use

# ovs_integration_bridge = br-int

# Use veth for an OVS interface or not.

# Support kernels with limited namespace support

# (e.g. RHEL 6.5) so long as ovs_use_veth is set to True.

# ovs_use_veth = False

# Example of interface_driver option for LinuxBridge

# interface_driver = neutron.agent.linux.interface.BridgeInterfaceDriver

# The agent can use other DHCP drivers. Dnsmasq is the simplest and requires

# no additional setup of the DHCP server.

dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq

# Allow overlapping IP (Must have kernel build with CONFIG_NET_NS=y and

# iproute2 package that supports namespaces).

use_namespaces = True

# The DHCP server can assist with providing metadata support on isolated

# networks. Setting this value to True will cause the DHCP server to append

# specific host routes to the DHCP request. The metadata service will only

# be activated when the subnet does not contain any router port. The guest

# instance must be configured to request host routes via DHCP (Option 121).

# enable_isolated_metadata = False

# Allows for serving metadata requests coming from a dedicated metadata

# access network whose cidr is 169.254.169.254/16 (or larger prefix), and

# is connected to a Neutron router from which the VMs send metadata

# request. In this case DHCP Option 121 will not be injected in VMs, as

# they will be able to reach 169.254.169.254 through a router.

# This option requires enable_isolated_metadata = True

# enable_metadata_network = False

# Number of threads to use during sync process. Should not exceed connection

# pool size configured on server.

# num_sync_threads = 4

# Location to store DHCP server config files

# dhcp_confs = $state_path/dhcp

# Domain to use for building the hostnames

# dhcp_domain = openstacklocal

# Override the default dnsmasq settings with this file

#dnsmasq_config_file = /etc/neutron/dnsmasq-neutron.conf

# Comma-separated list of DNS servers which will be used by dnsmasq

# as forwarders.

# dnsmasq_dns_servers =

# Limit number of leases to prevent a denial-of-service.

# dnsmasq_lease_max = 16777216

# Location to DHCP lease relay UNIX domain socket

# dhcp_lease_relay_socket = $state_path/dhcp/lease_relay

# Location of Metadata Proxy UNIX domain socket

# metadata_proxy_socket = $state_path/metadata_proxy

# dhcp_delete_namespaces, which is false by default, can be set to True if

# namespaces can be deleted cleanly on the host running the dhcp agent.

# Do not enable this until you understand the problem with the Linux iproute

# utility mentioned in https://bugs.launchpad.net/neutron/+bug/1052535 and

# you are sure that your version of iproute does not suffer from the problem.

# If True, namespaces will be deleted when a dhcp server is disabled.

#dhcp_delete_namespaces = True

# Timeout for ovs-vsctl commands.

# If the timeout expires, ovs commands will fail with ALARMCLOCK error.

# ovs_vsctl_timeout = 10
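Because both l3_agent.ini and dhcp_agent.ini set use_namespaces = True, each router runs in a network namespace named qrouter-<router ID>, and each DHCP-enabled network gets one named qdhcp-<network ID>. The sketch below groups the output of ip netns list by those prefixes. classify_namespaces and list_namespaces are hypothetical helpers; list_namespaces needs root on the network node, so the example only feeds sample text with made-up IDs.

```python
import subprocess


def classify_namespaces(netns_output):
    """Group namespace names from `ip netns list` output by Neutron prefix."""
    groups = {"qrouter": [], "qdhcp": [], "other": []}
    for line in netns_output.splitlines():
        fields = line.split()
        if not fields:
            continue
        name = fields[0]  # newer iproute2 appends " (id: N)"; keep only the name
        prefix, sep, resource_id = name.partition("-")
        if sep and prefix in ("qrouter", "qdhcp"):
            groups[prefix].append(resource_id)
        else:
            groups["other"].append(name)
    return groups


def list_namespaces():
    """Run `ip netns list` on this host (requires root); not called below."""
    out = subprocess.run(["ip", "netns", "list"], capture_output=True, text=True, check=True)
    return classify_namespaces(out.stdout)


SAMPLE = "qdhcp-1a2b\nqrouter-3c4d (id: 0)\n"  # made-up IDs
print(classify_namespaces(SAMPLE))
# {'qrouter': ['3c4d'], 'qdhcp': ['1a2b'], 'other': []}
```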

4. Configure the metadata agent in /etc/neutron/metadata_agent.ini:

[DEFAULT]

...

auth_url = http://controller:5000/v2.0

auth_region = regionOne

admin_tenant_name = service

admin_user = neutron

admin_password = NEUTRON_PASS

nova_metadata_ip = controller

metadata_proxy_shared_secret = METADATA_SECRET

The complete file on my network node:

sm@network:~$ sudo cat /etc/neutron/metadata_agent.ini

[DEFAULT]

# Show debugging output in log (sets DEBUG log level output)

# debug = True

verbose=True

# The Neutron user information for accessing the Neutron API.

auth_url = http://192.168.3.180:5000/v2.0

auth_region = RegionOne

# Turn off verification of the certificate for ssl

# auth_insecure = False

# Certificate Authority public key (CA cert) file for ssl

# auth_ca_cert =

admin_tenant_name = service

admin_user = neutron

admin_password = neutron4smtest

# Network service endpoint type to pull from the keystone catalog

# endpoint_type = adminURL

# IP address used by Nova metadata server

nova_metadata_ip = 192.168.3.180

# TCP Port used by Nova metadata server

# nova_metadata_port = 8775

# When proxying metadata requests, Neutron signs the Instance-ID header with a

# shared secret to prevent spoofing. You may select any string for a secret,

# but it must match here and in the configuration used by the Nova Metadata

# Server. NOTE: Nova uses a different key: neutron_metadata_proxy_shared_secret

metadata_proxy_shared_secret = neutron4smtest

# Location of Metadata Proxy UNIX domain socket

# metadata_proxy_socket = $state_path/metadata_proxy

# Number of separate worker processes for metadata server

# metadata_workers = 0

# Number of backlog requests to configure the metadata server socket with

# metadata_backlog = 128

# URL to connect to the cache backend.

# Example of URL using memory caching backend

# with ttl set to 5 seconds: cache_url = memory://?default_ttl=5

# default_ttl=0 parameter will cause cache entries to never expire.

# Otherwise default_ttl specifies time in seconds a cache entry is valid for.

# No cache is used in case no value is passed.

# cache_url =

Next, on the controller node, add the following to /etc/nova/nova.conf:

[DEFAULT]

...

service_neutron_metadata_proxy = true

neutron_metadata_proxy_shared_secret = METADATA_SECRET

The two metadata secrets must be identical.

Then restart nova-api on the controller node:

service nova-api restart
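The secrets must match because the metadata agent signs the instance ID it forwards to Nova, and Nova recomputes that signature with its own copy of the secret (the comment in metadata_agent.ini above describes this). The sketch models the check with HMAC-SHA256, which, as far as I know, is what the Neutron metadata agent of this era uses; the function names are hypothetical and the instance ID is a placeholder.

```python
import hashlib
import hmac


def sign_instance_id(shared_secret, instance_id):
    """Hex HMAC-SHA256 of the instance ID, keyed with the shared secret.

    Assumed to correspond to the X-Instance-ID-Signature header value.
    """
    return hmac.new(shared_secret.encode(), instance_id.encode(), hashlib.sha256).hexdigest()


def nova_accepts(nova_secret, instance_id, signature):
    """Model of the Nova side: recompute and compare in constant time."""
    expected = sign_instance_id(nova_secret, instance_id)
    return hmac.compare_digest(expected, signature)


INSTANCE_ID = "00000000-0000-0000-0000-000000000000"      # placeholder UUID
sig = sign_instance_id("METADATA_SECRET", INSTANCE_ID)      # metadata_agent.ini side
print(nova_accepts("METADATA_SECRET", INSTANCE_ID, sig))    # True: secrets match
print(nova_accepts("some-other-secret", INSTANCE_ID, sig))  # False: request rejected
```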

5. Edit /etc/neutron/plugins/ml2/ml2_conf.ini on the network node:

[ml2]

type_drivers = gre

tenant_network_types = gre

mechanism_drivers = openvswitch

[ml2_type_gre]

tunnel_id_ranges = 1:1000

[ovs]

local_ip = INSTANCE_TUNNELS_INTERFACE_IP_ADDRESS

tunnel_type = gre

enable_tunneling = True

[securitygroup]

firewall_driver = neutron.agent.linux.iptables_firewall.

OVSHybridIptablesFirewallDriver

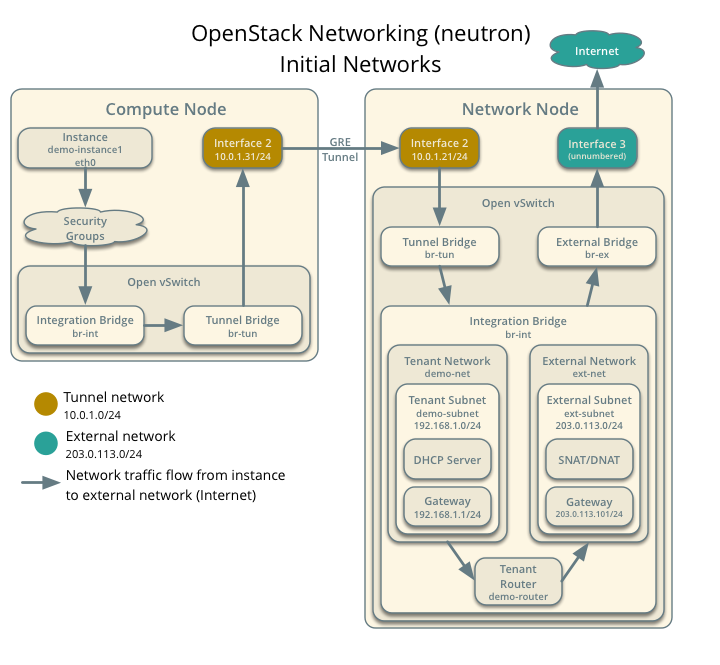

enable_security_group = True我们可以看一下如下所示,计算节点和网络节点就是通过GRE隧道来进行连通的。

The complete file on my machine:

sm@network:~$ sudo cat /etc/neutron/plugins/ml2/ml2_conf.ini

[ml2]

# (ListOpt) List of network type driver entrypoints to be loaded from

# the neutron.ml2.type_drivers namespace.

#

type_drivers =gre

# Example: type_drivers = flat,vlan,gre,vxlan

# (ListOpt) Ordered list of network_types to allocate as tenant

# networks. The default value 'local' is useful for single-box testing

# but provides no connectivity between hosts.

#

tenant_network_types = gre

# Example: tenant_network_types = vlan,gre,vxlan

# (ListOpt) Ordered list of networking mechanism driver entrypoints

# to be loaded from the neutron.ml2.mechanism_drivers namespace.

mechanism_drivers = openvswitch

# Example: mechanism_drivers = openvswitch,mlnx

# Example: mechanism_drivers = arista

# Example: mechanism_drivers = cisco,logger

# Example: mechanism_drivers = openvswitch,brocade

# Example: mechanism_drivers = linuxbridge,brocade

[ml2_type_flat]

# (ListOpt) List of physical_network names with which flat networks

# can be created. Use * to allow flat networks with arbitrary

# physical_network names.

#

#flat_networks = external

# Example:flat_networks = physnet1,physnet2

# Example:flat_networks = *

[ml2_type_vlan]

# (ListOpt) List of <physical_network>[:<vlan_min>:<vlan_max>] tuples

# specifying physical_network names usable for VLAN provider and

# tenant networks, as well as ranges of VLAN tags on each

# physical_network available for allocation as tenant networks.

#

# network_vlan_ranges =

# Example: network_vlan_ranges = physnet1:1000:2999,physnet2

[ml2_type_gre]

# (ListOpt) Comma-separated list of <tun_min>:<tun_max> tuples enumerating ranges of GRE tunnel IDs that are available for tenant network allocation

tunnel_id_ranges = 1:1000

[ml2_type_vxlan]

# (ListOpt) Comma-separated list of <vni_min>:<vni_max> tuples enumerating

# ranges of VXLAN VNI IDs that are available for tenant network allocation.

#

# vni_ranges =

# (StrOpt) Multicast group for the VXLAN interface. When configured, will

# enable sending all broadcast traffic to this multicast group. When left

# unconfigured, will disable multicast VXLAN mode.

#

# vxlan_group =

# Example: vxlan_group = 239.1.1.1

[securitygroup]

# Controls if neutron security group is enabled or not.

# It should be false when you use nova security group.

enable_security_group = True

firewall_driver = neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver

[ovs]

local_ip = 10.0.1.21

tunnel_type = gre

enable_tunneling = True
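local_ip must be this node's address on the network that carries the GRE tunnels (here 10.0.1.21 on the network node and 10.0.1.31 on the compute node). The sketch parses [ovs] local_ip and checks it against a tunnel subnet. The 10.0.1.0/24 default is my assumption, inferred from those two addresses; leaving the INSTANCE_TUNNELS_INTERFACE_IP_ADDRESS placeholder unreplaced makes it raise ValueError.

```python
import configparser
import ipaddress


def tunnel_local_ip(ml2_text):
    """Return [ovs] local_ip from ml2_conf.ini text as an ipaddress object."""
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.read_string(ml2_text)
    # Raises ValueError if the value is not an IP address, e.g. the
    # placeholder INSTANCE_TUNNELS_INTERFACE_IP_ADDRESS was never replaced.
    return ipaddress.ip_address(parser.get("ovs", "local_ip"))


def on_tunnel_network(ml2_text, tunnel_cidr="10.0.1.0/24"):
    """True if local_ip lies inside tunnel_cidr (the default CIDR is an assumption)."""
    return tunnel_local_ip(ml2_text) in ipaddress.ip_network(tunnel_cidr)


print(on_tunnel_network("[ovs]\nlocal_ip = 10.0.1.21\n"))      # True
print(on_tunnel_network("[ovs]\nlocal_ip = 192.168.3.181\n"))  # False
```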

6. Add the external bridge:

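ovs-vsctl add-br br-ex

ovs-vsctl add-port br-ex eth2

(eth2 is the NIC on my network node that connects to the external network; substitute your own interface.)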
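The bridge name must match external_network_bridge = br-ex in l3_agent.ini. If you script this step, the --may-exist option of ovs-vsctl makes add-br and add-port succeed silently when the bridge or port already exists, so the script can be re-run safely. A minimal sketch; external_bridge_commands and apply are hypothetical helpers, and run is injectable so the example can be exercised without touching Open vSwitch.

```python
import subprocess


def external_bridge_commands(bridge="br-ex", nic="eth2"):
    """ovs-vsctl invocations for this step; --may-exist makes them idempotent."""
    return [
        ["ovs-vsctl", "--may-exist", "add-br", bridge],
        ["ovs-vsctl", "--may-exist", "add-port", bridge, nic],
    ]


def apply(commands, run=subprocess.run):
    """Run each command in order; check=True stops at the first failure."""
    for argv in commands:
        run(argv, check=True)


# Dry run: print the commands instead of executing them (needs no root).
apply(external_bridge_commands(), run=lambda argv, check: print(" ".join(argv)))
```

Dropping the run argument executes the commands for real, which requires root on the network node.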

You can also inspect the bridges and ports with the following command (the output reflects resources that have already been created):

sm@network:~$ sudo ovs-vsctl show

1dbfa213-3eed-4aa2-accc-c429ec586687
    Bridge br-ex
        Port "qg-a50c5be1-6d"
            Interface "qg-a50c5be1-6d"
                type: internal
        Port "eth2"
            Interface "eth2"
        Port phy-br-ex
            Interface phy-br-ex
        Port br-ex
            Interface br-ex
                type: internal
    Bridge br-int
        fail_mode: secure
        Port int-br-ex
            Interface int-br-ex
        Port "tap8595720b-76"
            tag: 3
            Interface "tap8595720b-76"
                type: internal
        Port "qr-1d4d38d4-25"
            tag: 3
            Interface "qr-1d4d38d4-25"
                type: internal
        Port br-int
            Interface br-int
                type: internal
        Port patch-tun
            Interface patch-tun
                type: patch
                options: {peer=patch-int}
    Bridge br-tun
        Port "gre-c0a80db5"
            Interface "gre-c0a80db5"
                type: gre
                options: {in_key=flow, local_ip="10.0.1.21", out_key=flow, remote_ip="192.168.13.181"}
        Port br-tun
            Interface br-tun
                type: internal
        Port "gre-c0a803b5"
            Interface "gre-c0a803b5"
                type: gre
                options: {in_key=flow, local_ip="10.0.1.21", out_key=flow, remote_ip="192.168.3.181"}
        Port "gre-0a00011f"
            Interface "gre-0a00011f"
                type: gre
                options: {in_key=flow, local_ip="10.0.1.21", out_key=flow, remote_ip="10.0.1.31"}
        Port patch-int
            Interface patch-int
                type: patch
                options: {peer=patch-tun}
        Port "gre-c0a803b6"
            Interface "gre-c0a803b6"
                type: gre
                options: {in_key=flow, local_ip="10.0.1.21", out_key=flow, remote_ip="192.168.3.182"}
    ovs_version: "2.0.2"
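Each tunnel port on br-tun is named gre- followed by the remote endpoint's IPv4 address in hexadecimal; in the output above, gre-0a00011f corresponds to remote_ip 10.0.1.31. The sketch decodes these names from ovs-vsctl show text. Only gre-0a00011f points at an address on the tunnel network (the compute node's local_ip configured below); the others point at 192.168.x.x addresses, which may be tunnel endpoints registered under earlier settings, so this is a quick way to spot them. The helper names are hypothetical.

```python
import ipaddress
import re

# Matches the Port lines, e.g.  Port "gre-c0a803b5"
GRE_PORT = re.compile(r'^\s*Port "?(gre-[0-9a-f]{8})"?\s*$', re.MULTILINE)


def remote_ip_from_port(port_name):
    """gre-0a00011f -> '10.0.1.31': the suffix is the remote IPv4 in hex."""
    return str(ipaddress.IPv4Address(int(port_name.split("-", 1)[1], 16)))


def gre_peers(ovs_show_output):
    """Map each GRE port name found in `ovs-vsctl show` output to its remote IP."""
    return {name: remote_ip_from_port(name) for name in GRE_PORT.findall(ovs_show_output)}


SAMPLE = '''
    Bridge br-tun
        Port "gre-c0a803b5"
            Interface "gre-c0a803b5"
        Port "gre-0a00011f"
            Interface "gre-0a00011f"
'''
print(gre_peers(SAMPLE))
# {'gre-c0a803b5': '192.168.3.181', 'gre-0a00011f': '10.0.1.31'}
```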

Restart the services:

service neutron-plugin-openvswitch-agent restart

service neutron-l3-agent restart

service neutron-dhcp-agent restart

service neutron-metadata-agent restart

service openvswitch-switch restart

=============================================================

Compute node

1. Edit /etc/neutron/neutron.conf:

[DEFAULT]

core_plugin = ml2

service_plugins = router

allow_overlapping_ips = True

sm@computer:~$ sudo grep '^[a-z]' /etc/neutron/neutron.conf

verbose = True

state_path = /var/lib/neutron

lock_path = $state_path/lock

core_plugin = ml2

service_plugins = router

auth_strategy = keystone

allow_overlapping_ips = True

rpc_backend = neutron.openstack.common.rpc.impl_kombu

rabbit_host = 192.168.3.180

rabbit_password = mq4smtest

rabbit_port = 5672

rabbit_userid = guest

notification_driver = neutron.openstack.common.notifier.rpc_notifier

root_helper = sudo /usr/bin/neutron-rootwrap /etc/neutron/rootwrap.conf

auth_host = 192.168.3.180

auth_port = 35357

auth_protocol = http

admin_tenant_name = service

admin_user = neutron

admin_password = neutron4smtest

signing_dir = $state_path/keystone-signing

auth_uri=http://192.168.3.180:5000

connection = sqlite:var/lib/neutron/neutron.sqlite

service_provider=LOADBALANCER:Haproxy:neutron.services.loadbalancer.drivers.haproxy.plugin_driver.HaproxyOnHostPluginDriver:default

service_provider=VPN:openswan:neutron.services.vpn.service_drivers.ipsec.IPsecVPNDriver:default

2. Edit /etc/neutron/plugins/ml2/ml2_conf.ini on the compute node; it is similar to the network node's:

[ml2]

type_drivers = gre

tenant_network_types = gre

mechanism_drivers = openvswitch

[ml2_type_gre]

tunnel_id_ranges = 1:1000

[ovs]

local_ip = INSTANCE_TUNNELS_INTERFACE_IP_ADDRESS

tunnel_type = gre

enable_tunneling = True

[securitygroup]

firewall_driver = neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver

enable_security_group = True

The complete file:

sm@computer:~$ sudo cat /etc/neutron/plugins/ml2/ml2_conf.ini

[ml2]

# (ListOpt) List of network type driver entrypoints to be loaded from

# the neutron.ml2.type_drivers namespace.

#

type_drivers = gre

# Example: type_drivers = flat,vlan,gre,vxlan

# (ListOpt) Ordered list of network_types to allocate as tenant

# networks. The default value 'local' is useful for single-box testing

# but provides no connectivity between hosts.

#

tenant_network_types = gre

# Example: tenant_network_types = vlan,gre,vxlan

# (ListOpt) Ordered list of networking mechanism driver entrypoints

# to be loaded from the neutron.ml2.mechanism_drivers namespace.

mechanism_drivers = openvswitch

# Example: mechanism_drivers = openvswitch,mlnx

# Example: mechanism_drivers = arista

# Example: mechanism_drivers = cisco,logger

# Example: mechanism_drivers = openvswitch,brocade

# Example: mechanism_drivers = linuxbridge,brocade

[ml2_type_flat]

# (ListOpt) List of physical_network names with which flat networks

# can be created. Use * to allow flat networks with arbitrary

# physical_network names.

#

# flat_networks =

# Example:flat_networks = physnet1,physnet2

# Example:flat_networks = *

[ml2_type_vlan]

# (ListOpt) List of <physical_network>[:<vlan_min>:<vlan_max>] tuples

# specifying physical_network names usable for VLAN provider and

# tenant networks, as well as ranges of VLAN tags on each

# physical_network available for allocation as tenant networks.

#

# network_vlan_ranges =

# Example: network_vlan_ranges = physnet1:1000:2999,physnet2

[ml2_type_gre]

# (ListOpt) Comma-separated list of <tun_min>:<tun_max> tuples enumerating ranges of GRE tunnel IDs that are available for tenant network allocation

tunnel_id_ranges =1:1000

[ml2_type_vxlan]

# (ListOpt) Comma-separated list of <vni_min>:<vni_max> tuples enumerating

# ranges of VXLAN VNI IDs that are available for tenant network allocation.

#

# vni_ranges =

# (StrOpt) Multicast group for the VXLAN interface. When configured, will

# enable sending all broadcast traffic to this multicast group. When left

# unconfigured, will disable multicast VXLAN mode.

#

# vxlan_group =

# Example: vxlan_group = 239.1.1.1

[securitygroup]

# Controls if neutron security group is enabled or not.

# It should be false when you use nova security group.

enable_security_group = True

firewall_driver = neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver

[ovs]

local_ip = 10.0.1.31

tunnel_type = gre

enable_tunneling = True
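Since the compute node's ml2_conf.ini mirrors the network node's, diffing the settings this post sets identically on both nodes catches typos early. local_ip is the one value that must differ, because it is each node's own tunnel endpoint. A hedged sketch; the option list simply mirrors this post, and compare_nodes is a hypothetical helper.

```python
import configparser

# Options this post sets to the same value on the network and compute nodes.
SHARED = [
    ("ml2", "type_drivers"), ("ml2", "tenant_network_types"),
    ("ml2", "mechanism_drivers"), ("ml2_type_gre", "tunnel_id_ranges"),
    ("ovs", "tunnel_type"), ("ovs", "enable_tunneling"),
]


def _parse(text):
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.read_string(text)
    return parser


def _norm(value):
    # Ignore spacing and case, so "=1:1000" equals "= 1:1000" and "True" equals "true".
    return None if value is None else value.replace(" ", "").lower()


def compare_nodes(network_text, compute_text):
    net, comp = _parse(network_text), _parse(compute_text)
    problems = []
    for section, key in SHARED:
        a = _norm(net.get(section, key, fallback=None))
        b = _norm(comp.get(section, key, fallback=None))
        if a != b:
            problems.append(f"{section}.{key}: {a!r} != {b!r}")
    ip_net = net.get("ovs", "local_ip", fallback=None)
    ip_comp = comp.get("ovs", "local_ip", fallback=None)
    if ip_net is None or ip_comp is None or ip_net == ip_comp:
        problems.append("ovs.local_ip must be set and differ between nodes")
    return problems


NETWORK = """[ml2]
type_drivers = gre
tenant_network_types = gre
mechanism_drivers = openvswitch
[ml2_type_gre]
tunnel_id_ranges = 1:1000
[ovs]
local_ip = 10.0.1.21
tunnel_type = gre
enable_tunneling = True
"""
COMPUTE = NETWORK.replace("10.0.1.21", "10.0.1.31").replace("= 1:1000", "=1:1000")
print(compare_nodes(NETWORK, COMPUTE))  # []
```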

3. Configure /etc/nova/nova.conf on the compute node:

[DEFAULT]

network_api_class = nova.network.neutronv2.api.API

neutron_url = http://controller:9696

neutron_auth_strategy = keystone

neutron_admin_tenant_name = service

neutron_admin_username = neutron

neutron_admin_password = NEUTRON_PASS

neutron_admin_auth_url = http://controller:35357/v2.0

linuxnet_interface_driver = nova.network.linux_net.LinuxOVSInterfaceDriver

firewall_driver = nova.virt.firewall.NoopFirewallDriver

security_group_api = neutron

sm@computer:~$ sudo cat /etc/nova/nova.conf

[DEFAULT]

dhcpbridge_flagfile=/etc/nova/nova.conf

dhcpbridge=/usr/bin/nova-dhcpbridge

logdir=/var/log/nova

state_path=/var/lib/nova

lock_path=/var/lock/nova

force_dhcp_release=True

iscsi_helper=tgtadm

libvirt_use_virtio_for_bridges=True

connection_type=libvirt

root_helper=sudo nova-rootwrap /etc/nova/rootwrap.conf

verbose=True

ec2_private_dns_show_ip=True

api_paste_config=/etc/nova/api-paste.ini

volumes_path=/var/lib/nova/volumes

enabled_apis=ec2,osapi_compute,metadata

rpc_backend = rabbit

rabbit_host = 192.168.3.180

rabbit_userid = guest

rabbit_password = mq4smtest

rabbit_port = 5672

my_ip = 192.168.3.181

vnc_enabled = True

vncserver_listen = 0.0.0.0

vncserver_proxyclient_address = 192.168.3.181

vnc_enabled = True

novncproxy_base_url = http://192.168.3.180:6080/vnc_auto.html

vif_plugging_timeout = 10

vif_plugging_is_fatal = False

auth_strategy = keystone

glance_host = 192.168.3.180

network_api_class = nova.network.neutronv2.api.API

neutron_url = http://192.168.3.180:9696

neutron_auth_strategy = keystone

neutron_admin_tenant_name = service

neutron_admin_username = neutron

neutron_admin_password = neutron4smtest

neutron_admin_auth_url = http://192.168.3.180:35357/v2.0

linuxnet_interface_driver = nova.network.linux_net.LinuxOVSInterfaceDriver

firewall_driver = nova.virt.firewall.NoopFirewallDriver

security_group_api = neutron

[keystone_authtoken]

auth_uri = http://192.168.3.180:5000

auth_host = 192.168.3.180

auth_port = 35357

auth_protocol = http

admin_tenant_name = service

admin_user = nova

admin_password = nova4smtest

[database]

connection = mysql://novadbadmin:nova4smtest@192.168.3.180/nova

4. Restart the services:

service nova-compute restart

service neutron-plugin-openvswitch-agent restart
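After the restarts, a quick sanity check is to confirm the expected bridges exist. On the network node, br-ex, br-int and br-tun all appear in the ovs-vsctl show output earlier in this post; the compute node gets no external bridge here, so br-int and br-tun should be enough. The sketch parses ovs-vsctl show text; the role table is my assumption based on this post's layout, and the helper names are hypothetical.

```python
import re
import subprocess

# Expected bridges per node role (an assumption based on this post's layout).
EXPECTED_BRIDGES = {
    "network": {"br-int", "br-tun", "br-ex"},
    "compute": {"br-int", "br-tun"},
}


def bridges(ovs_show_output):
    """Names from the 'Bridge <name>' lines of `ovs-vsctl show` output."""
    return set(re.findall(r'^\s*Bridge "?([^"\s]+)"?', ovs_show_output, re.MULTILINE))


def missing_bridges(role, ovs_show_output):
    return EXPECTED_BRIDGES[role] - bridges(ovs_show_output)


def check_this_node(role):
    """Run `ovs-vsctl show` locally (requires root); not called below."""
    out = subprocess.run(["ovs-vsctl", "show"], capture_output=True, text=True, check=True)
    return missing_bridges(role, out.stdout)


SAMPLE = """1dbfa213-3eed-4aa2-accc-c429ec586687
    Bridge br-int
        fail_mode: secure
    Bridge br-tun
    ovs_version: "2.0.2"
"""
print(missing_bridges("compute", SAMPLE))  # set()
print(missing_bridges("network", SAMPLE))  # {'br-ex'}
```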
