If you have configured nothing at all and have only imported the Hadoop jar files, running the program produces the following errors:

2016-03-25 15:38:17,015 WARN [main] util.NativeCodeLoader (NativeCodeLoader.java:<clinit>(62)) - Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2016-03-25 15:38:17,024 ERROR [main] util.Shell (Shell.java:getWinUtilsPath(336)) - Failed to locate the winutils binary in the hadoop binary path

java.io.IOException: Could not locate executable null\bin\winutils.exe in the Hadoop binaries.

at org.apache.hadoop.util.Shell.getQualifiedBinPath(Shell.java:318)

at org.apache.hadoop.util.Shell.getWinUtilsPath(Shell.java:333)

at org.apache.hadoop.util.Shell.<clinit>(Shell.java:326)

at org.apache.hadoop.util.StringUtils.<clinit>(StringUtils.java:76)

at org.apache.hadoop.security.Groups.parseStaticMapping(Groups.java:93)

at org.apache.hadoop.security.Groups.<init>(Groups.java:77)

at org.apache.hadoop.security.Groups.getUserToGroupsMappingService(Groups.java:240)

at org.apache.hadoop.security.UserGroupInformation.initialize(UserGroupInformation.java:255)

at org.apache.hadoop.security.UserGroupInformation.ensureInitialized(UserGroupInformation.java:232)

at org.apache.hadoop.security.UserGroupInformation.loginUserFromSubject(UserGroupInformation.java:718)

at org.apache.hadoop.security.UserGroupInformation.getLoginUser(UserGroupInformation.java:703)

at org.apache.hadoop.security.UserGroupInformation.getCurrentUser(UserGroupInformation.java:605)

at org.apache.hadoop.mapreduce.task.JobContextImpl.<init>(JobContextImpl.java:72)

at org.apache.hadoop.mapreduce.Job.<init>(Job.java:133)

at org.apache.hadoop.mapreduce.Job.getInstance(Job.java:176)

at tjut.edu.dcx.hadoop.mapreduce.wordcount.WordCountRunner.main(WordCountRunner.java:26)

Exception in thread "main" java.lang.IllegalArgumentException: java.net.UnknownHostException: master

at org.apache.hadoop.security.SecurityUtil.buildTokenService(SecurityUtil.java:377)

at org.apache.hadoop.hdfs.NameNodeProxies.createNonHAProxy(NameNodeProxies.java:240)

at org.apache.hadoop.hdfs.NameNodeProxies.createProxy(NameNodeProxies.java:144)

at org.apache.hadoop.hdfs.DFSClient.<init>(DFSClient.java:579)

at org.apache.hadoop.hdfs.DFSClient.<init>(DFSClient.java:524)

at org.apache.hadoop.hdfs.DistributedFileSystem.initialize(DistributedFileSystem.java:146)

at org.apache.hadoop.fs.FileSystem.createFileSystem(FileSystem.java:2397)

at org.apache.hadoop.fs.FileSystem.access$200(FileSystem.java:89)

at org.apache.hadoop.fs.FileSystem$Cache.getInternal(FileSystem.java:2431)

at org.apache.hadoop.fs.FileSystem$Cache.get(FileSystem.java:2413)

at org.apache.hadoop.fs.FileSystem.get(FileSystem.java:368)

at org.apache.hadoop.fs.Path.getFileSystem(Path.java:296)

at org.apache.hadoop.mapreduce.lib.input.FileInputFormat.setInputPaths(FileInputFormat.java:485)

at org.apache.hadoop.mapreduce.lib.input.FileInputFormat.setInputPaths(FileInputFormat.java:454)

at tjut.edu.dcx.hadoop.mapreduce.wordcount.WordCountRunner.main(WordCountRunner.java:43)

Caused by: java.net.UnknownHostException: master

... 15 more

1. Solution:

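WARN [main] util.NativeCodeLoader (NativeCodeLoader.java:<clinit>(62)) - Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

This is only a warning: Hadoop falls back to its built-in Java classes. To get rid of it, overwrite the hadoop/native folder with native libraries built for your platform.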

Download link for the native files:

Link: http://pan.baidu.com/s/1dFdOrex Password: 1vte
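A small diagnostic like the one below can confirm whether the native library is actually visible to your program. It is a minimal sketch. It assumes, as in Hadoop 2.x, that the native code is loaded with System.loadLibrary("hadoop"). The JVM then looks for that library on java.library.path, as hadoop.dll on Windows or libhadoop.so on Linux. The class name NativeLibCheck is hypothetical. The check only tests that the file exists, so a library built for the wrong platform or bitness can still fail to load.

```java
import java.io.File;
import java.util.ArrayList;
import java.util.List;

public class NativeLibCheck {
    // Platform-specific file name that System.loadLibrary("hadoop") searches for,
    // e.g. "hadoop.dll" on Windows or "libhadoop.so" on Linux.
    static final String LIB_FILE = System.mapLibraryName("hadoop");

    /** Returns every file named LIB_FILE found directly inside the directories of searchPath. */
    static List<File> findNativeHadoop(String searchPath) {
        List<File> hits = new ArrayList<>();
        if (searchPath == null) {
            return hits;
        }
        for (String dir : searchPath.split(File.pathSeparator)) {
            if (dir.isEmpty()) {
                continue; // skip empty entries such as a trailing separator
            }
            File candidate = new File(dir, LIB_FILE);
            if (candidate.isFile()) {
                hits.add(candidate);
            }
        }
        return hits;
    }

    public static void main(String[] args) {
        List<File> hits = findNativeHadoop(System.getProperty("java.library.path"));
        if (hits.isEmpty()) {
            System.out.println(LIB_FILE + " not found on java.library.path; "
                    + "Hadoop will fall back to builtin-java classes.");
        } else {
            System.out.println("Found: " + hits);
        }
    }
}
```

Run it with the same Run Configuration as the job so that it sees the same java.library.path.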

Solution:

2016-03-25 15:38:17,024 ERROR [main] util.Shell (Shell.java:getWinUtilsPath(336)) - Failed to locate the winutils binary in the hadoop binary path

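java.io.IOException: Could not locate executable null\bin\winutils.exe in the Hadoop binaries.

First, make sure the HADOOP_HOME environment variable is set. The null at the start of the path means Hadoop could not determine its home directory.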

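winutils.exe is a helper binary that Hadoop uses on Windows to perform Unix-style file operations such as setting permissions. Download a copy and place it in the %HADOOP_HOME%\bin directory.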

Download link for the bin files:

Link: http://pan.baidu.com/s/1dFdOrex Password: 1vte
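The null in null\bin\winutils.exe is the unresolved Hadoop home directory. The sketch below reproduces that lookup so you can see which value your program picks up. It assumes the Hadoop 2.x behavior of checking the hadoop.home.dir system property before the HADOOP_HOME environment variable. WinutilsCheck is a hypothetical class name and is not part of Hadoop.

```java
import java.io.File;

public class WinutilsCheck {
    /**
     * Simplified model of the Hadoop 2.x home-directory lookup (assumed order):
     * the "hadoop.home.dir" system property first, then the HADOOP_HOME environment variable.
     * Returns null when neither is set, which produces the "null\bin\winutils.exe" message.
     */
    static String resolveHadoopHome(String sysProp, String envVar) {
        return sysProp != null ? sysProp : envVar;
    }

    /** Builds <home>/bin/winutils.exe using the host's path separator. */
    static File winutilsFile(String home) {
        return new File(new File(home, "bin"), "winutils.exe");
    }

    public static void main(String[] args) {
        String home = resolveHadoopHome(System.getProperty("hadoop.home.dir"),
                                        System.getenv("HADOOP_HOME"));
        if (home == null) {
            System.out.println("Neither hadoop.home.dir nor HADOOP_HOME is set.");
            return;
        }
        File exe = winutilsFile(home);
        System.out.println(exe + (exe.isFile() ? " found" : " is missing"));
    }
}
```

An environment variable changed after Eclipse has started is not seen by programs launched from it, so restart Eclipse after setting HADOOP_HOME. Another commonly used option is to call System.setProperty("hadoop.home.dir", ...) as the first statement in main, before any Hadoop class is used. Point it at the directory that contains bin\winutils.exe.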

2. Solution:

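java.lang.IllegalArgumentException: java.net.UnknownHostException: master means the host name master cannot be resolved on the Windows machine. Open the hosts file under Windows\System32 (C:\Windows\System32\drivers\etc\hosts) and add a mapping from the cluster's IP address to the host name.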

For example:

192.168.0.120 master
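After editing the hosts file, you can confirm that the JVM resolves the name before rerunning the job. This is a minimal sketch that uses only java.net.InetAddress. The class HostsCheck and its parseHostsLine helper are hypothetical. The parser only handles the simple "IP name [aliases...]" format shown above, plus # comments.

```java
import java.net.InetAddress;
import java.net.UnknownHostException;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

public class HostsCheck {
    /**
     * Parses one hosts-file line such as "192.168.0.120 master".
     * Returns [ip, name, aliases...], or an empty list for blank or comment-only lines.
     */
    static List<String> parseHostsLine(String line) {
        int hash = line.indexOf('#');
        String content = (hash >= 0 ? line.substring(0, hash) : line).trim();
        if (content.isEmpty()) {
            return Collections.emptyList();
        }
        return Arrays.asList(content.split("\\s+"));
    }

    /** Returns true if the JVM can resolve the host name (via the hosts file or DNS). */
    static boolean canResolve(String host) {
        try {
            InetAddress addr = InetAddress.getByName(host);
            System.out.println(host + " -> " + addr.getHostAddress());
            return true;
        } catch (UnknownHostException e) {
            System.out.println(host + " cannot be resolved: " + e.getMessage());
            return false;
        }
    }

    public static void main(String[] args) {
        System.out.println(parseHostsLine("192.168.0.120 master")); // [192.168.0.120, master]
        canResolve("master");
    }
}
```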

3. Running again produces:

Caused by: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.security.AccessControlException): Permission denied: user=BayMax, access=WRITE, inode="/upload":ding:supergroup:drwxr-xr-x

at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkFsPermission(FSPermissionChecker.java:265)

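This is a permissions problem. My Windows user (BayMax) is not the same as the Linux user (ding). The /upload directory is owned by ding with mode drwxr-xr-x, so only ding may write to it.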
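The error message already contains everything needed to see why the write is refused: owner ding, group supergroup, mode drwxr-xr-x, and requesting user BayMax. The sketch below is a deliberately simplified owner/group/other check in the spirit of the HDFS permission model. It is not Hadoop's FSPermissionChecker, and it ignores the superuser, ACLs and the sticky bit. PermissionSketch is a hypothetical name.

```java
import java.util.Collections;
import java.util.Set;

public class PermissionSketch {
    /**
     * Simplified POSIX-style write check: choose the owner, group or other triplet,
     * then test its 'w' bit. mode is the 9 characters after the type flag, e.g. "rwxr-xr-x".
     */
    static boolean canWrite(String user, Set<String> userGroups,
                            String owner, String group, String mode) {
        int offset;
        if (user.equals(owner)) {
            offset = 0;   // owner triplet
        } else if (userGroups.contains(group)) {
            offset = 3;   // group triplet
        } else {
            offset = 6;   // other triplet
        }
        return mode.charAt(offset + 1) == 'w';
    }

    public static void main(String[] args) {
        // Values from the error: inode="/upload":ding:supergroup:drwxr-xr-x
        String mode = "rwxr-xr-x";
        Set<String> noGroups = Collections.emptySet();
        System.out.println(canWrite("BayMax", noGroups, "ding", "supergroup", mode)); // false
        System.out.println(canWrite("ding", noGroups, "ding", "supergroup", mode));   // true
    }
}
```

Because BayMax is neither the owner nor (in this sketch) a member of supergroup, the "other" triplet r-x applies. It has no w bit, so the write is denied.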

Solution:

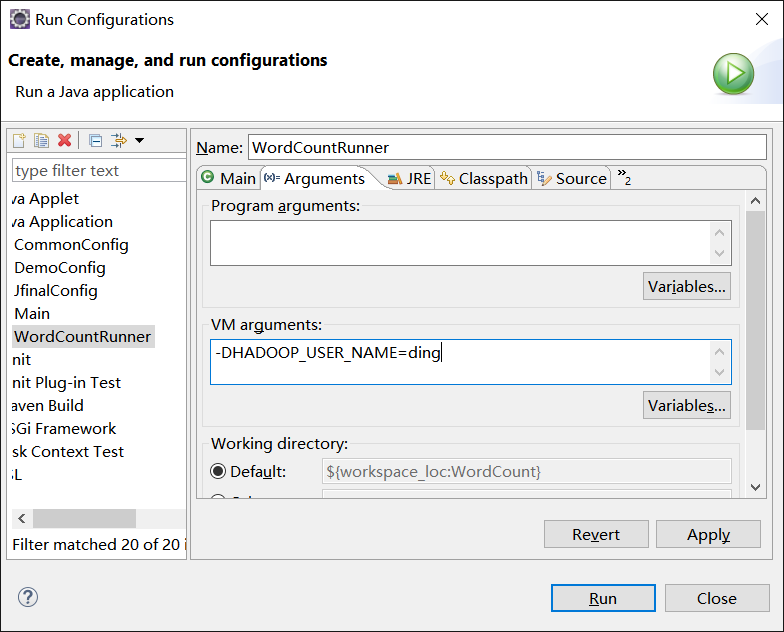

Right-click main, choose Run As > Run Configurations, and on the Arguments tab enter your Linux host user in the VM arguments box:

-DHADOOP_USER_NAME=ding
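Why does -DHADOOP_USER_NAME work? As far as I know, Hadoop 2.x without Kerberos chooses the client user in roughly the order shown below. This is a simplified sketch, not Hadoop's UserGroupInformation code. HadoopUserSketch is a hypothetical name, and the OS login user is approximated here with the user.name system property.

```java
public class HadoopUserSketch {
    /**
     * Assumed order (simplified, non-Kerberos):
     * 1. the HADOOP_USER_NAME environment variable,
     * 2. the HADOOP_USER_NAME Java system property (what -DHADOOP_USER_NAME=ding sets),
     * 3. the operating-system login user.
     */
    static String effectiveUser(String envValue, String propValue, String osUser) {
        if (envValue != null) {
            return envValue;
        }
        if (propValue != null) {
            return propValue;
        }
        return osUser;
    }

    public static void main(String[] args) {
        String user = effectiveUser(System.getenv("HADOOP_USER_NAME"),
                                    System.getProperty("HADOOP_USER_NAME"),
                                    System.getProperty("user.name")); // approximation of the OS user
        System.out.println("HDFS requests will be made as: " + user);
    }
}
```

Two consequences follow from this order. First, if a HADOOP_USER_NAME environment variable is set, it overrides the -D option. Second, the option has to go in VM arguments, not program arguments, because only VM arguments become Java system properties. Calling System.setProperty("HADOOP_USER_NAME", "ding") at the start of main, before Job.getInstance, should have the same effect, since the login user is determined once and then cached.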

Running again now succeeds:

2016-03-25 11:12:04,040 INFO [main] Configuration.deprecation (Configuration.java:warnOnceIfDeprecated(1009)) - session.id is deprecated. Instead, use dfs.metrics.session-id

2016-03-25 11:12:04,044 INFO [main] jvm.JvmMetrics (JvmMetrics.java:init(76)) - Initializing JVM Metrics with processName=JobTracker, sessionId=

2016-03-25 11:12:04,416 WARN [main] mapreduce.JobSubmitter (JobSubmitter.java:copyAndConfigureFiles(150)) - Hadoop command-line option parsing not performed. Implement the Tool interface and execute your application with ToolRunner to remedy this.

2016-03-25 11:12:04,419 WARN [main] mapreduce.JobSubmitter (JobSubmitter.java:copyAndConfigureFiles(259)) - No job jar file set. User classes may not be found. See Job or Job#setJar(String).

2016-03-25 11:12:04,586 INFO [main] input.FileInputFormat (FileInputFormat.java:listStatus(280)) - Total input paths to process : 1

2016-03-25 11:12:04,996 INFO [main] mapreduce.JobSubmitter (JobSubmitter.java:submitJobInternal(396)) - number of splits:1

2016-03-25 11:12:05,100 INFO [main] mapreduce.JobSubmitter (JobSubmitter.java:printTokens(479)) - Submitting tokens for job: job_local593778342_0001

2016-03-25 11:12:05,147 WARN [main] conf.Configuration (Configuration.java:loadProperty(2358)) - file:/tmp/hadoop-BayMax/mapred/staging/ding593778342/.staging/job_local593778342_0001/job.xml:an attempt to override final parameter: mapreduce.job.end-notification.max.retry.interval; Ignoring.

2016-03-25 11:12:05,150 WARN [main] conf.Configuration (Configuration.java:loadProperty(2358)) - file:/tmp/hadoop-BayMax/mapred/staging/ding593778342/.staging/job_local593778342_0001/job.xml:an attempt to override final parameter: mapreduce.job.end-notification.max.attempts; Ignoring.

2016-03-25 11:12:05,355 WARN [main] conf.Configuration (Configuration.java:loadProperty(2358)) - file:/tmp/hadoop-BayMax/mapred/local/localRunner/ding/job_local593778342_0001/job_local593778342_0001.xml:an attempt to override final parameter: mapreduce.job.end-notification.max.retry.interval; Ignoring.

2016-03-25 11:12:05,357 WARN [main] conf.Configuration (Configuration.java:loadProperty(2358)) - file:/tmp/hadoop-BayMax/mapred/local/localRunner/ding/job_local593778342_0001/job_local593778342_0001.xml:an attempt to override final parameter: mapreduce.job.end-notification.max.attempts; Ignoring.

2016-03-25 11:12:05,393 INFO [main] mapreduce.Job (Job.java:submit(1289)) - The url to track the job: http://localhost:8080/

2016-03-25 11:12:05,399 INFO [main] mapreduce.Job (Job.java:monitorAndPrintJob(1334)) - Running job: job_local593778342_0001

2016-03-25 11:12:05,405 INFO [Thread-4] mapred.LocalJobRunner (LocalJobRunner.java:createOutputCommitter(471)) - OutputCommitter set in config null

2016-03-25 11:12:05,431 INFO [Thread-4] mapred.LocalJobRunner (LocalJobRunner.java:createOutputCommitter(489)) - OutputCommitter is org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter

2016-03-25 11:12:05,647 INFO [Thread-4] mapred.LocalJobRunner (LocalJobRunner.java:runTasks(448)) - Waiting for map tasks

2016-03-25 11:12:05,647 INFO [LocalJobRunner Map Task Executor #0] mapred.LocalJobRunner (LocalJobRunner.java:run(224)) - Starting task: attempt_local593778342_0001_m_000000_0

2016-03-25 11:12:05,926 INFO [LocalJobRunner Map Task Executor #0] util.ProcfsBasedProcessTree (ProcfsBasedProcessTree.java:isAvailable(182)) - ProcfsBasedProcessTree currently is supported only on Linux.

2016-03-25 11:12:06,035 INFO [LocalJobRunner Map Task Executor #0] mapred.Task (Task.java:initialize(581)) - Using ResourceCalculatorProcessTree : org.apache.hadoop.yarn.util.WindowsBasedProcessTree@104288bf

2016-03-25 11:12:06,039 INFO [LocalJobRunner Map Task Executor #0] mapred.MapTask (MapTask.java:runNewMapper(733)) - Processing split: hdfs://master:9000/upload/test.txt:0+81

2016-03-25 11:12:06,117 INFO [LocalJobRunner Map Task Executor #0] mapred.MapTask (MapTask.java:createSortingCollector(388)) - Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer

2016-03-25 11:12:06,163 INFO [LocalJobRunner Map Task Executor #0] mapred.MapTask (MapTask.java:setEquator(1182)) - (EQUATOR) 0 kvi 26214396(104857584)

2016-03-25 11:12:06,164 INFO [LocalJobRunner Map Task Executor #0] mapred.MapTask (MapTask.java:init(975)) - mapreduce.task.io.sort.mb: 100

2016-03-25 11:12:06,164 INFO [LocalJobRunner Map Task Executor #0] mapred.MapTask (MapTask.java:init(976)) - soft limit at 83886080

2016-03-25 11:12:06,164 INFO [LocalJobRunner Map Task Executor #0] mapred.MapTask (MapTask.java:init(977)) - bufstart = 0; bufvoid = 104857600

2016-03-25 11:12:06,164 INFO [LocalJobRunner Map Task Executor #0] mapred.MapTask (MapTask.java:init(978)) - kvstart = 26214396; length = 6553600

2016-03-25 11:12:06,413 INFO [main] mapreduce.Job (Job.java:monitorAndPrintJob(1355)) - Job job_local593778342_0001 running in uber mode : false

2016-03-25 11:12:06,481 INFO [main] mapreduce.Job (Job.java:monitorAndPrintJob(1362)) - map 0% reduce 0%

2016-03-25 11:12:06,920 INFO [LocalJobRunner Map Task Executor #0] mapred.LocalJobRunner (LocalJobRunner.java:statusUpdate(591)) -

2016-03-25 11:12:06,935 INFO [LocalJobRunner Map Task Executor #0] mapred.MapTask (MapTask.java:flush(1437)) - Starting flush of map output

2016-03-25 11:12:06,935 INFO [LocalJobRunner Map Task Executor #0] mapred.MapTask (MapTask.java:flush(1455)) - Spilling map output

2016-03-25 11:12:06,935 INFO [LocalJobRunner Map Task Executor #0] mapred.MapTask (MapTask.java:flush(1456)) - bufstart = 0; bufend = 173; bufvoid = 104857600

2016-03-25 11:12:06,935 INFO [LocalJobRunner Map Task Executor #0] mapred.MapTask (MapTask.java:flush(1458)) - kvstart = 26214396(104857584); kvend = 26214352(104857408); length = 45/6553600

2016-03-25 11:12:07,051 INFO [LocalJobRunner Map Task Executor #0] mapred.MapTask (MapTask.java:sortAndSpill(1641)) - Finished spill 0

2016-03-25 11:12:07,057 INFO [LocalJobRunner Map Task Executor #0] mapred.Task (Task.java:done(995)) - Task:attempt_local593778342_0001_m_000000_0 is done. And is in the process of committing

2016-03-25 11:12:07,068 INFO [LocalJobRunner Map Task Executor #0] mapred.LocalJobRunner (LocalJobRunner.java:statusUpdate(591)) - map

2016-03-25 11:12:07,068 INFO [LocalJobRunner Map Task Executor #0] mapred.Task (Task.java:sendDone(1115)) - Task 'attempt_local593778342_0001_m_000000_0' done.

2016-03-25 11:12:07,069 INFO [LocalJobRunner Map Task Executor #0] mapred.LocalJobRunner (LocalJobRunner.java:run(249)) - Finishing task: attempt_local593778342_0001_m_000000_0

2016-03-25 11:12:07,069 INFO [Thread-4] mapred.LocalJobRunner (LocalJobRunner.java:runTasks(456)) - map task executor complete.

2016-03-25 11:12:07,081 INFO [Thread-4] mapred.LocalJobRunner (LocalJobRunner.java:runTasks(448)) - Waiting for reduce tasks

2016-03-25 11:12:07,082 INFO [pool-6-thread-1] mapred.LocalJobRunner (LocalJobRunner.java:run(302)) - Starting task: attempt_local593778342_0001_r_000000_0

2016-03-25 11:12:07,093 INFO [pool-6-thread-1] util.ProcfsBasedProcessTree (ProcfsBasedProcessTree.java:isAvailable(182)) - ProcfsBasedProcessTree currently is supported only on Linux.

2016-03-25 11:12:07,167 INFO [pool-6-thread-1] mapred.Task (Task.java:initialize(581)) - Using ResourceCalculatorProcessTree : org.apache.hadoop.yarn.util.WindowsBasedProcessTree@4b786354

2016-03-25 11:12:07,202 INFO [pool-6-thread-1] mapred.ReduceTask (ReduceTask.java:run(362)) - Using ShuffleConsumerPlugin: org.apache.hadoop.mapreduce.task.reduce.Shuffle@b7dc4cf

2016-03-25 11:12:07,212 INFO [pool-6-thread-1] reduce.MergeManagerImpl (MergeManagerImpl.java:<init>(193)) - MergerManager: memoryLimit=1323407744, maxSingleShuffleLimit=330851936, mergeThreshold=873449152, ioSortFactor=10, memToMemMergeOutputsThreshold=10

2016-03-25 11:12:07,216 INFO [EventFetcher for fetching Map Completion Events] reduce.EventFetcher (EventFetcher.java:run(61)) - attempt_local593778342_0001_r_000000_0 Thread started: EventFetcher for fetching Map Completion Events

2016-03-25 11:12:07,276 INFO [localfetcher#1] reduce.LocalFetcher (LocalFetcher.java:copyMapOutput(140)) - localfetcher#1 about to shuffle output of map attempt_local593778342_0001_m_000000_0 decomp: 199 len: 203 to MEMORY

2016-03-25 11:12:07,324 INFO [localfetcher#1] reduce.InMemoryMapOutput (InMemoryMapOutput.java:shuffle(100)) - Read 199 bytes from map-output for attempt_local593778342_0001_m_000000_0

2016-03-25 11:12:07,326 INFO [localfetcher#1] reduce.MergeManagerImpl (MergeManagerImpl.java:closeInMemoryFile(307)) - closeInMemoryFile -> map-output of size: 199, inMemoryMapOutputs.size() -> 1, commitMemory -> 0, usedMemory ->199

2016-03-25 11:12:07,327 INFO [EventFetcher for fetching Map Completion Events] reduce.EventFetcher (EventFetcher.java:run(76)) - EventFetcher is interrupted.. Returning

2016-03-25 11:12:07,328 INFO [pool-6-thread-1] mapred.LocalJobRunner (LocalJobRunner.java:statusUpdate(591)) - 1 / 1 copied.

2016-03-25 11:12:07,329 INFO [pool-6-thread-1] reduce.MergeManagerImpl (MergeManagerImpl.java:finalMerge(667)) - finalMerge called with 1 in-memory map-outputs and 0 on-disk map-outputs

2016-03-25 11:12:07,399 INFO [pool-6-thread-1] mapred.Merger (Merger.java:merge(591)) - Merging 1 sorted segments

2016-03-25 11:12:07,399 INFO [pool-6-thread-1] mapred.Merger (Merger.java:merge(690)) - Down to the last merge-pass, with 1 segments left of total size: 192 bytes

2016-03-25 11:12:07,403 INFO [pool-6-thread-1] reduce.MergeManagerImpl (MergeManagerImpl.java:finalMerge(742)) - Merged 1 segments, 199 bytes to disk to satisfy reduce memory limit

2016-03-25 11:12:07,403 INFO [pool-6-thread-1] reduce.MergeManagerImpl (MergeManagerImpl.java:finalMerge(772)) - Merging 1 files, 203 bytes from disk

2016-03-25 11:12:07,404 INFO [pool-6-thread-1] reduce.MergeManagerImpl (MergeManagerImpl.java:finalMerge(787)) - Merging 0 segments, 0 bytes from memory into reduce

2016-03-25 11:12:07,404 INFO [pool-6-thread-1] mapred.Merger (Merger.java:merge(591)) - Merging 1 sorted segments

2016-03-25 11:12:07,405 INFO [pool-6-thread-1] mapred.Merger (Merger.java:merge(690)) - Down to the last merge-pass, with 1 segments left of total size: 192 bytes

2016-03-25 11:12:07,405 INFO [pool-6-thread-1] mapred.LocalJobRunner (LocalJobRunner.java:statusUpdate(591)) - 1 / 1 copied.

2016-03-25 11:12:07,489 INFO [main] mapreduce.Job (Job.java:monitorAndPrintJob(1362)) - map 100% reduce 0%

2016-03-25 11:12:07,556 INFO [pool-6-thread-1] Configuration.deprecation (Configuration.java:warnOnceIfDeprecated(1009)) - mapred.skip.on is deprecated. Instead, use mapreduce.job.skiprecords

2016-03-25 11:12:07,935 INFO [pool-6-thread-1] mapred.Task (Task.java:done(995)) - Task:attempt_local593778342_0001_r_000000_0 is done. And is in the process of committing

2016-03-25 11:12:07,939 INFO [pool-6-thread-1] mapred.LocalJobRunner (LocalJobRunner.java:statusUpdate(591)) - 1 / 1 copied.

2016-03-25 11:12:07,939 INFO [pool-6-thread-1] mapred.Task (Task.java:commit(1156)) - Task attempt_local593778342_0001_r_000000_0 is allowed to commit now

2016-03-25 11:12:07,973 INFO [pool-6-thread-1] output.FileOutputCommitter (FileOutputCommitter.java:commitTask(439)) - Saved output of task 'attempt_local593778342_0001_r_000000_0' to hdfs://master:9000/upload/output2/_temporary/0/task_local593778342_0001_r_000000

2016-03-25 11:12:07,974 INFO [pool-6-thread-1] mapred.LocalJobRunner (LocalJobRunner.java:statusUpdate(591)) - reduce > reduce

2016-03-25 11:12:07,974 INFO [pool-6-thread-1] mapred.Task (Task.java:sendDone(1115)) - Task 'attempt_local593778342_0001_r_000000_0' done.

2016-03-25 11:12:07,974 INFO [pool-6-thread-1] mapred.LocalJobRunner (LocalJobRunner.java:run(325)) - Finishing task: attempt_local593778342_0001_r_000000_0

2016-03-25 11:12:07,975 INFO [Thread-4] mapred.LocalJobRunner (LocalJobRunner.java:runTasks(456)) - reduce task executor complete.

2016-03-25 11:12:08,490 INFO [main] mapreduce.Job (Job.java:monitorAndPrintJob(1362)) - map 100% reduce 100%

2016-03-25 11:12:08,490 INFO [main] mapreduce.Job (Job.java:monitorAndPrintJob(1373)) - Job job_local593778342_0001 completed successfully

2016-03-25 11:12:08,500 INFO [main] mapreduce.Job (Job.java:monitorAndPrintJob(1380)) - Counters: 38

File System Counters

FILE: Number of bytes read=738

FILE: Number of bytes written=441697

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=162

HDFS: Number of bytes written=61

HDFS: Number of read operations=13

HDFS: Number of large read operations=0

HDFS: Number of write operations=4

Map-Reduce Framework

Map input records=6

Map output records=12

Map output bytes=173

Map output materialized bytes=203

Input split bytes=99

Combine input records=0

Combine output records=0

Reduce input groups=7

Reduce shuffle bytes=203

Reduce input records=12

Reduce output records=7

Spilled Records=24

Shuffled Maps =1

Failed Shuffles=0

Merged Map outputs=1

GC time elapsed (ms)=41

CPU time spent (ms)=0

Physical memory (bytes) snapshot=0

Virtual memory (bytes) snapshot=0

Total committed heap usage (bytes)=416284672

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=81

File Output Format Counters

Bytes Written=61

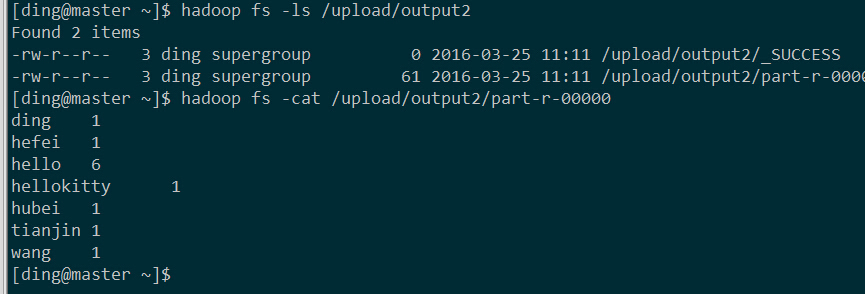

Result:

