1. API

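The API documentation is located in the docs folder of the Hadoop installation directory.

The HDFS-related APIs are in the org.apache.hadoop.fs package. Several commonly used classes are listed below:

- FileSystem: the base class that encapsulates a file system (including HDFS). It provides APIs for file operations such as creating, copying, and deleting files and listing directories.

- FileStatus: a class that encapsulates file attributes such as file length, owner and group, and last modification time.

- FSDataInputStream and FSDataOutputStream: the streams for reading and writing files. They extend java.io.DataInputStream and java.io.DataOutputStream, respectively.

- FileUtil: a utility class for file operations that complements the file-related functionality of FileSystem.

- Path: a class that encapsulates a file name or path.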
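Because FSDataInputStream and FSDataOutputStream extend java.io.DataInputStream and java.io.DataOutputStream, they inherit methods such as readFully(byte[]), which keeps reading until the buffer is full, whereas a single read(byte[]) call may return fewer bytes than requested. The following is a minimal JDK-only sketch of the write-then-read pattern used in the examples of this post, assuming an in-memory byte array as a stand-in for the HDFS file; the class RoundTripSketch is hypothetical and does not call Hadoop.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;

public class RoundTripSketch {

    // Writes text through a DataOutputStream (the base class of FSDataOutputStream),
    // then reads it back with readFully, which keeps reading until the buffer is full.
    static String roundTrip(String text) {
        // Explicit UTF-8 instead of the platform default used by getBytes()
        byte[] data = text.getBytes(StandardCharsets.UTF_8);
        try {
            // Stand-in for hdfs.create(path): an in-memory buffer instead of an HDFS file
            ByteArrayOutputStream sink = new ByteArrayOutputStream();
            try (DataOutputStream out = new DataOutputStream(sink)) {
                out.write(data);
            }

            // Stand-in for hdfs.open(path)
            byte[] buffer = new byte[data.length];
            try (DataInputStream in =
                     new DataInputStream(new ByteArrayInputStream(sink.toByteArray()))) {
                in.readFully(buffer); // throws EOFException if the stream ends early
            }
            return buffer.length + ":" + new String(buffer, StandardCharsets.UTF_8);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        // Same text as in example 1; prints "13:Hello world!" followed by a newline
        System.out.print(roundTrip("Hello world!\n"));
    }
}
```

With the real HDFS streams, the equivalent change would be calling readFully(line) on the FSDataInputStream instead of read(line).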

2. Steps for accessing HDFS

Accessing HDFS usually involves the following steps:

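1. Create a Configuration object that holds the HDFS configuration:

```java
Configuration conf = new Configuration();
```

The constructor automatically searches the classpath for Hadoop configuration files (such as core-site.xml). If they are found, subsequent file operations are performed on the HDFS file system they specify; if not, subsequent file operations are performed on the local file system.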

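2. Create a FileSystem object from the Configuration object:

```java
FileSystem hdfs = FileSystem.get(conf);
```

3. Call the relevant FileSystem methods to perform file operations. For example, the following code retrieves the listing of the HDFS root directory:

```java
Path path = new Path("/");
FileStatus[] files = hdfs.listStatus(path);
```

Example program 1 provided by Hadoop (HDFSTest):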

```java
import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.fs.FSDataOutputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

public class AccessFile {

    public static void main(String[] args) throws IOException {
        // Loads the Hadoop configuration files found on the classpath
        Configuration conf = new Configuration();
        FileSystem hdfs = FileSystem.get(conf);

        // Default file system URI (deprecated alias of fs.defaultFS in Hadoop 2.x)
        System.out.println("fs.default.name=" +
                conf.get("fs.default.name") + "\n");

        // Relative path: resolved against the working directory (the user's HDFS home)
        Path path = new Path("./text");

        System.out.println("----------create file-----------");
        String text = "Hello world!\n";
        // create(path) overwrites the file if it already exists
        FSDataOutputStream fos = hdfs.create(path);
        fos.write(text.getBytes());
        fos.close();

        System.out.println("----------read file-----------");
        byte[] line = new byte[text.length()];
        FSDataInputStream fis = hdfs.open(path);
        // read() returns the number of bytes actually read, which may be fewer
        // than line.length; readFully(line) would guarantee a full buffer
        int len = fis.read(line);
        fis.close();
        System.out.println(len + ":" + new String(line));
    }
}
```

Output:

fs.default.name=hdfs://winstar:9000

----------create file-----------

----------read file-----------

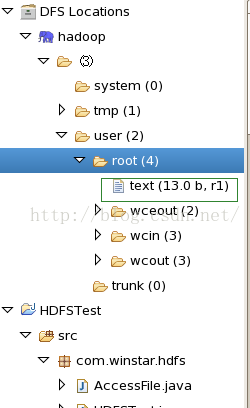

13:Hello world!然后观察HDFS上:

打开后里面就是写入的“Hello world!”。
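The fs.default.name=hdfs://winstar:9000 line in the output comes from the Hadoop configuration files that new Configuration() loads from the classpath. A minimal core-site.xml that would produce it might look like the sketch below; only the key and value are taken from the output above, and where the file lives (for example, a source folder of the Eclipse project) is an assumption. In Hadoop 2.x the preferred key is fs.defaultFS, and fs.default.name is kept as a deprecated alias.

```xml
<?xml version="1.0"?>
<!-- core-site.xml: must be on the client program's classpath to be picked up -->
<configuration>
  <property>
    <!-- Default file system URI; without it, the client falls back to the local file system (file:///) -->
    <name>fs.default.name</name>
    <value>hdfs://winstar:9000</value>
  </property>
</configuration>
```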

Example program 2 provided by Hadoop:

```java
import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileStatus;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

public class HDFSTest {

    public static void main(String[] args) throws IOException {
        Configuration conf = new Configuration();
        FileSystem hdfs = FileSystem.get(conf);
        copyFile(hdfs);
        listFiles(hdfs);
        renameFile(hdfs);
        deleteFile(hdfs);
        existsFile(hdfs);
    }

    // Copies a local file into HDFS; /user/root is an existing directory,
    // so the file is stored as /user/root/install.log
    public static void copyFile(FileSystem hdfs) throws IOException {
        System.out.println("--------------copyFile--------------");
        Path src = new Path("/root/install.log");
        Path dest = new Path("/user/root");
        hdfs.copyFromLocalFile(src, dest);
    }

    // Lists the entries directly under the HDFS root directory
    public static void listFiles(FileSystem hdfs) throws IOException {
        System.out.println("--------------listFiles--------------");
        Path path = new Path("/");
        FileStatus[] files = hdfs.listStatus(path);
        for (FileStatus file : files) {
            System.out.println(file.getPath());
        }
    }

    // On HDFS, rename() returns false (rather than throwing) if, e.g., the source does not exist
    public static void renameFile(FileSystem hdfs) throws IOException {
        System.out.println("--------------renameFile--------------");
        Path path = new Path("/user/root/install.log");
        Path toPath = new Path("/user/root/install.log.bak");
        boolean result = hdfs.rename(path, toPath);
        System.out.println("Rename file success?" + result);
    }

    // delete(path, false) is non-recursive and returns false if the path does not exist
    public static void deleteFile(FileSystem hdfs) throws IOException {
        System.out.println("--------------deleteFile--------------");
        Path path = new Path("/user/root/install.log.bak");
        boolean result = hdfs.delete(path, false);
        System.out.println("Delete file success? " + result);
    }

    // Checks whether the (now deleted) file still exists
    public static void existsFile(FileSystem hdfs) throws IOException {
        System.out.println("--------------existsFile--------------");
        Path path = new Path("/user/root/install.log.bak");
        boolean result = hdfs.exists(path);
        System.out.println("File exist? " + result);
    }
}
```

Output:

```
--------------copyFile--------------
--------------listFiles--------------
hdfs://winstar:9000/system
hdfs://winstar:9000/tmp
hdfs://winstar:9000/user
--------------renameFile--------------
Rename file success?true
--------------deleteFile--------------
Delete file success? true
--------------existsFile--------------
File exist? false
```

2.1 Hands-on coding practice

```
[root@winstar ~]# cd Desktop/
[root@winstar Desktop]# ls
ceshi.sh~  org          temp~      大数据处理技术教学课件-详细标签.pdf
eclipse    softWare     test.txt   新文件~
HDFSTest   SubProjects  test.txt~  运行脚本记录
```

As you can see, the test.txt file created earlier has been placed on the desktop.

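```
[root@winstar Desktop]# hadoop fs -ls
Found 1 items
drwxr-xr-x   - root supergroup          0 2016-11-22 09:28 /user/root/wcin
```

This is what is on HDFS: without a path argument, hadoop fs -ls lists the home directory /user/root, which contains only the wcin directory.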
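Since /user/root/ceshi does not exist yet, it matters where copyFromLocalFile puts the file. As far as I understand Hadoop's copy logic, if the destination is an existing directory the file is placed inside it under its own name (which is how example 2 ended up with /user/root/install.log); if the destination does not exist, the destination path itself becomes the new file. The JDK-only sketch below models just that rule, assuming a Set of existing directory paths in place of a real file system; CopyDestSketch and targetPath are hypothetical names, and no Hadoop call is made.

```java
import java.util.Set;

public class CopyDestSketch {

    // Models where copyFromLocalFile(src, dst) stores the file:
    // an existing directory receives the file under its original name,
    // otherwise dst itself becomes the path of the new file.
    static String targetPath(String srcPath, String dstPath, Set<String> existingDirs) {
        String fileName = srcPath.substring(srcPath.lastIndexOf('/') + 1);
        if (existingDirs.contains(dstPath)) {
            return dstPath.endsWith("/") ? dstPath + fileName : dstPath + "/" + fileName;
        }
        return dstPath;
    }

    public static void main(String[] args) {
        // Directories assumed to exist on HDFS, based on the listings in this post
        Set<String> dirs = Set.of("/", "/user", "/user/root", "/user/root/wcin");

        // Example 2: /user/root is a directory -> /user/root/install.log
        System.out.println(targetPath("/root/install.log", "/user/root", dirs));

        // /user/root/ceshi does not exist -> the file itself is named /user/root/ceshi
        System.out.println(targetPath("/root/Desktop/test.txt", "/user/root/ceshi", dirs));
    }
}
```

Under this model, the FxbTest program that follows would store test.txt as a file named /user/root/ceshi rather than as /user/root/ceshi/test.txt.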

```java
import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileStatus;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

public class FxbTest {

    public static void main(String[] args) throws IOException {
        Configuration conf = new Configuration();
        FileSystem hdfs = FileSystem.get(conf);
        System.out.println("fs.default.name=" + conf.get("fs.default.name")
                + "\n");
        copyFileFromLocalToHDFS(hdfs);
        listFiles(hdfs);
        renameFile(hdfs);
        deleteFile(hdfs);
        existsFile(hdfs);
    }

    // Copies a local file to HDFS, then copies an HDFS file back to the local desktop
    public static void copyFileFromLocalToHDFS(FileSystem hdfs)
            throws IOException {
        System.out.println("----------copy file from local to HDFS----------");
        Path localPath = new Path("/root/Desktop/test.txt");
        // If /user/root/ceshi does not exist yet, the copy is stored as the file /user/root/ceshi
        Path hdfsPath = new Path("/user/root/ceshi");
        hdfs.copyFromLocalFile(localPath, hdfsPath);

        System.out.println("----------copy file from HDFS to local----------");
        Path localPath2 = new Path("/root/Desktop/");
        Path hdfsPath2 = new Path("/user/root/wcin/test1.txt");
        hdfs.copyToLocalFile(hdfsPath2, localPath2);
    }

    // Lists the entries directly under the HDFS root directory
    public static void listFiles(FileSystem hdfs) throws IOException {
        System.out.println("----------listFilesOfHDFS----------");
        Path path = new Path("/");
        FileStatus[] files = hdfs.listStatus(path);
        for (FileStatus fileStatus : files) {
            System.out.println(fileStatus.getPath());
        }
    }

    public static void renameFile(FileSystem hdfs) throws IOException {
        System.out.println("----------renameFileOfHDFS----------");
        // NOTE: no leading "/", so these paths are relative to the working directory
        Path path = new Path("user/root/ceshi/test.txt");
        Path toPath = new Path("user/root/ceshi/test.txt.bak");
        boolean result = hdfs.rename(path, toPath);
        System.out.println("Rename file success?\n" + result);
    }

    public static void deleteFile(FileSystem hdfs) throws IOException {
        System.out.println("-----------deleteFileOfHDFS------------");
        // NOTE: the HDFS file copied above is test1.txt; "test1" may be a different, missing path
        Path path = new Path("/user/root/wcin/test1");
        boolean result = hdfs.delete(path, false);
        System.out.println("Delete file success?\n" + result);
    }

    public static void existsFile(FileSystem hdfs) throws IOException {
        System.out.println("-----------existsFile------------");
        // NOTE: relative path again (no leading "/")
        Path path = new Path("user/root/ceshi/test.txt.bak");
        boolean result = hdfs.exists(path);
        System.out.println("File exist?\n" + result);
    }
}
```

Output on my computer:

```
fs.default.name=hdfs://winstar:9000

----------copy file from local to HDFS----------
----------copy file from HDFS to local----------
16/11/23 17:47:27 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
----------listFilesOfHDFS----------
hdfs://winstar:9000/system
hdfs://winstar:9000/tmp
hdfs://winstar:9000/user
----------renameFileOfHDFS----------
Rename file success?
false
-----------deleteFileOfHDFS------------
Delete file success?
false
-----------existsFile------------
File exist?
false
```

Clearly this output is not right. I followed the examples almost line for line and still got it wrong, so how do I fix it?

I'll sort it out later!
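A few likely causes, offered as a hedged diagnosis rather than a verified fix: (1) renameFile and existsFile use relative paths such as user/root/ceshi/test.txt (no leading slash). Hadoop resolves a relative Path against the file system's working directory, which on HDFS defaults to the user's home directory /user/root, so the program actually looks for /user/root/user/root/ceshi/test.txt. (2) Even with an absolute path, /user/root/ceshi/test.txt probably does not exist: /user/root/ceshi did not exist before the copy, so the local file was most likely stored as the file /user/root/ceshi itself. (3) deleteFile targets /user/root/wcin/test1, while the file copied to the local desktop was /user/root/wcin/test1.txt. The JDK-only sketch below illustrates only point (1), using java.net.URI, whose resolve method follows the same relative-reference rules that Hadoop's Path(parent, child) constructor relies on; RelativePathSketch is a hypothetical name.

```java
import java.net.URI;

public class RelativePathSketch {

    // Resolves a path against a working directory the way a relative Hadoop Path
    // is made absolute: the directory gets a trailing "/" and URI resolution does the rest.
    static String resolve(String workingDir, String path) {
        URI base = URI.create(workingDir.endsWith("/") ? workingDir : workingDir + "/");
        return base.resolve(path).getPath();
    }

    public static void main(String[] args) {
        String home = "/user/root"; // default HDFS working directory for user root

        // Relative path used in renameFile/existsFile -> /user/root/user/root/ceshi/test.txt
        System.out.println(resolve(home, "user/root/ceshi/test.txt"));
        // Absolute path: unaffected by the working directory
        System.out.println(resolve(home, "/user/root/ceshi/test.txt"));
        // Relative path from example 1 -> /user/root/text
        System.out.println(resolve(home, "./text"));
    }
}
```

Under these assumptions, using absolute paths that match what actually exists on HDFS (for example /user/root/ceshi as the rename source, and /user/root/wcin/test1.txt for the delete) would be the first thing to try.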
