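The job ran fine on the company's 6-node test cluster, and the results were correct too. Then I moved it to the 60-node pre-production environment and, oh boy, it ate two whole days. That was mostly because the folks running production wouldn't cooperate, so every single problem dragged on and on.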

First up: security authentication. Here are one or two of the more meaningful errors:

1. Not authenticated

Caused by: java.io.IOException: javax.security.sasl.SaslException: GSS initiate failed [Caused by GSSException: No valid credentials provided (Mechanism level: Failed to find any Kerberos tgt)]

at org.apache.hadoop.ipc.Client$Connection$1.run(Client.java:680)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:415)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1671)

at org.apache.hadoop.ipc.Client$Connection.handleSaslConnectionFailure(Client.java:643)

at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:730)

at org.apache.hadoop.ipc.Client$Connection.access$2800(Client.java:368)

at org.apache.hadoop.ipc.Client.getConnection(Client.java:1521)

at org.apache.hadoop.ipc.Client.call(Client.java:1438)

... 28 more

Caused by: javax.security.sasl.SaslException: GSS initiate failed [Caused by GSSException: No valid credentials provided (Mechanism level: Failed to find any Kerberos tgt)]

at com.sun.security.sasl.gsskerb.GssKrb5Client.evaluateChallenge(GssKrb5Client.java:212)

at org.apache.hadoop.security.SaslRpcClient.saslConnect(SaslRpcClient.java:413)

at org.apache.hadoop.ipc.Client$Connection.setupSaslConnection(Client.java:553)

at org.apache.hadoop.ipc.Client$Connection.access$1800(Client.java:368)

at org.apache.hadoop.ipc.Client$Connection$2.run(Client.java:722)

at org.apache.hadoop.ipc.Client$Connection$2.run(Client.java:718)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:415)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1671)

at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:717)

... 31 more

Caused by: GSSException: No valid credentials provided (Mechanism level: Failed to find any Kerberos tgt)

七匹猫 2016/6/7 10:19:37

[cem@inm15bdm01 datas]$ hadoop fs -lsr /

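The production folks said you have to authenticate every time you open a client session.

I was the one working hands-on in the shell, so I did what they said and ran this every time I logged in:

The command is: `kinit -kt xy.keytab cem/xxxyyyyyy@aaaa.bb` (note: run it from the directory that contains the keytab file, or else give the keytab's absolute path).

Before that, I had uploaded the keytab file (`xy.keytab`) to the server.

Honestly, I was completely lost. For reference, "Failed to find any Kerberos tgt" means the credential cache held no Kerberos ticket-granting ticket (TGT), and `kinit` is the command that obtains one from the keytab.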
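Logging in by hand every session gets tedious. The step can be wrapped in a helper that only requests a ticket when no valid one exists. This is a minimal Python sketch that assumes the MIT Kerberos client tools (`kinit`, `klist`) are on the `PATH`. The function names are hypothetical, and the keytab and principal you pass in are whatever your cluster admins issued.

```python
import os
import subprocess


def kinit_command(keytab_path, principal):
    """Build the kinit call. The keytab path is made absolute so the command
    works regardless of the current working directory."""
    return ["kinit", "-kt", os.path.abspath(keytab_path), principal]


def has_valid_ticket():
    """With MIT Kerberos, `klist -s` prints nothing and exits 0 only when a
    credential cache with unexpired tickets exists."""
    try:
        return subprocess.run(["klist", "-s"]).returncode == 0
    except FileNotFoundError:  # klist is not installed on this machine
        return False


def ensure_ticket(keytab_path, principal):
    """Run kinit only if there is no usable ticket yet. Raises
    CalledProcessError if kinit fails (e.g. wrong principal or keytab)."""
    if not has_valid_ticket():
        subprocess.run(kinit_command(keytab_path, principal), check=True)
```

Calling `ensure_ticket("xy.keytab", "cem/xxxyyyyyy@aaaa.bb")` at the top of a submit script would replace the manual `kinit`. Because the keytab path is made absolute, the working-directory caveat mentioned above no longer matters.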

2. The `--jars` error

Error: Must specify a primary resource (JAR or Python file)

Run with --help for usage help or --verbose for debug output

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/lib/zookeeper/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/lib/flume-ng/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/lib/parquet/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/lib/avro/avro-tools-1.7.6-cdh5.4.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

[cem@inm15bdm01 ~]$

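At first glance you'd think it's an SLF4J error, with all those conflicting bindings. It isn't: the SLF4J lines are only warnings, and SLF4J still picks one binding ("Actual binding is of type ..."). The real cause was an extra `--jars` option in my script. Don't get me started. I even pulled my team lead in to debug this with me. What a nasty trap.

The fix: simply remove `--jars`.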
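Here is one plausible way an extra `--jars` produces this error. This is an assumption, since the original script isn't shown. `spark-submit` expects `[options] <app jar> [app arguments]`, and `--jars` takes the next argument as its value. If `--jars` sits right before the application jar, the jar is swallowed as the option's value and no primary resource is left.

The Python sketch below assembles the arguments so that `--jars` is only emitted together with a non-empty, comma-separated list. The function name, its defaults and the class/jar names in the test are hypothetical.

```python
def build_spark_submit(main_class, app_jar, extra_jars=(), app_args=(),
                       master="yarn", deploy_mode="cluster", queue=None):
    """Assemble a spark-submit argument list in the order spark-submit expects:
    options first, then the application jar (the "primary resource"), then the
    arguments passed to the application's main method."""
    cmd = ["spark-submit",
           "--master", master,
           "--deploy-mode", deploy_mode,
           "--class", main_class]
    if queue:
        cmd += ["--queue", queue]  # YARN queue to submit to
    if extra_jars:
        # --jars consumes exactly one value: a comma-separated list of jars.
        # Emitting it without a list would swallow the next argument.
        cmd += ["--jars", ",".join(extra_jars)]
    cmd.append(app_jar)    # primary resource must come after all options
    cmd.extend(app_args)   # everything after the jar goes to main()
    return cmd
```

Passing the result to `subprocess.run(cmd, check=True)` runs the job. If the extra jars were never needed, as turned out to be the case here, leaving `extra_jars` empty drops `--jars` entirely.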

3. Write-path (permission) problem

Exception in thread "main" org.apache.hadoop.security.AccessControlException: Permission denied: user=cem, access=WRITE, inode="/user":hdfs:supergroup:drwxr-xr-x

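To be honest, I don't know how they fixed this one either; they never told me. I'd have bet it wasn't my problem, since everything worked on the test nodes. Then again, it may really have had something to do with me: a different user means a different home directory. The error points the same way. User `cem` needs WRITE access on `/user`, but `/user` is owned by `hdfs:supergroup` with mode `drwxr-xr-x`. Only `hdfs` may create entries there, presumably including the missing home directory `/user/cem`.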
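The permission check behind this message can be reproduced in a few lines. This Python sketch follows the HDFS permissions model as I understand it:

- the owner bits apply if you own the inode;
- otherwise the group bits apply if you are in its group;
- otherwise the "other" bits apply;
- the superuser bypasses all of this.

Two assumptions are built in: the NameNode runs as `hdfs`, and the superuser group is the default `supergroup`. The function itself is illustrative, not an HDFS API.

```python
def can_write(user, user_groups, owner, group, mode,
              superuser="hdfs", supergroup="supergroup"):
    """Mimic the HDFS WRITE check on one inode.

    mode is the ls-style string, e.g. "drwxr-xr-x". Exactly one class of
    bits is tested: owner, else group, else other.
    """
    # Assumption: the NameNode user and members of the superuser group bypass checks.
    if user == superuser or supergroup in user_groups:
        return True
    perms = mode[1:]  # drop the file-type character ('d' or '-')
    if user == owner:
        bits = perms[0:3]
    elif group in user_groups:
        bits = perms[3:6]
    else:
        bits = perms[6:9]
    return bits[1] == "w"
```

With the values from the error, and assuming `cem` is not in `supergroup`, `can_write("cem", ["cem"], "hdfs", "supergroup", "drwxr-xr-x")` returns `False`, because the `r-x` "other" bits apply. The usual remedy is for an administrator to create the home directory as the superuser, e.g. `hdfs dfs -mkdir /user/cem` followed by `hdfs dfs -chown cem /user/cem`. On a Kerberized cluster, the administrator first needs a `kinit` as the superuser principal.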

4. Adding a queue:

ERROR KeyProviderCache: Could not find uri with key [dfs.encryption.key.provider.uri] to create a keyProvider !!

I honestly don't understand this one.

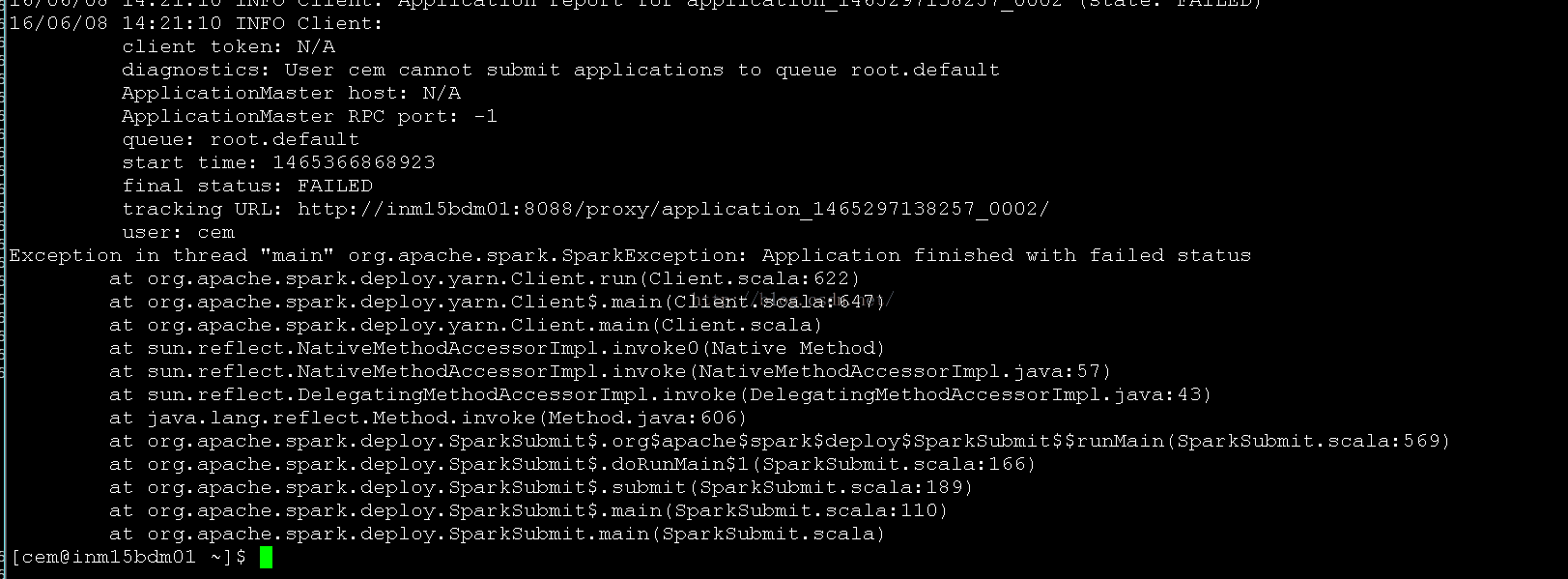

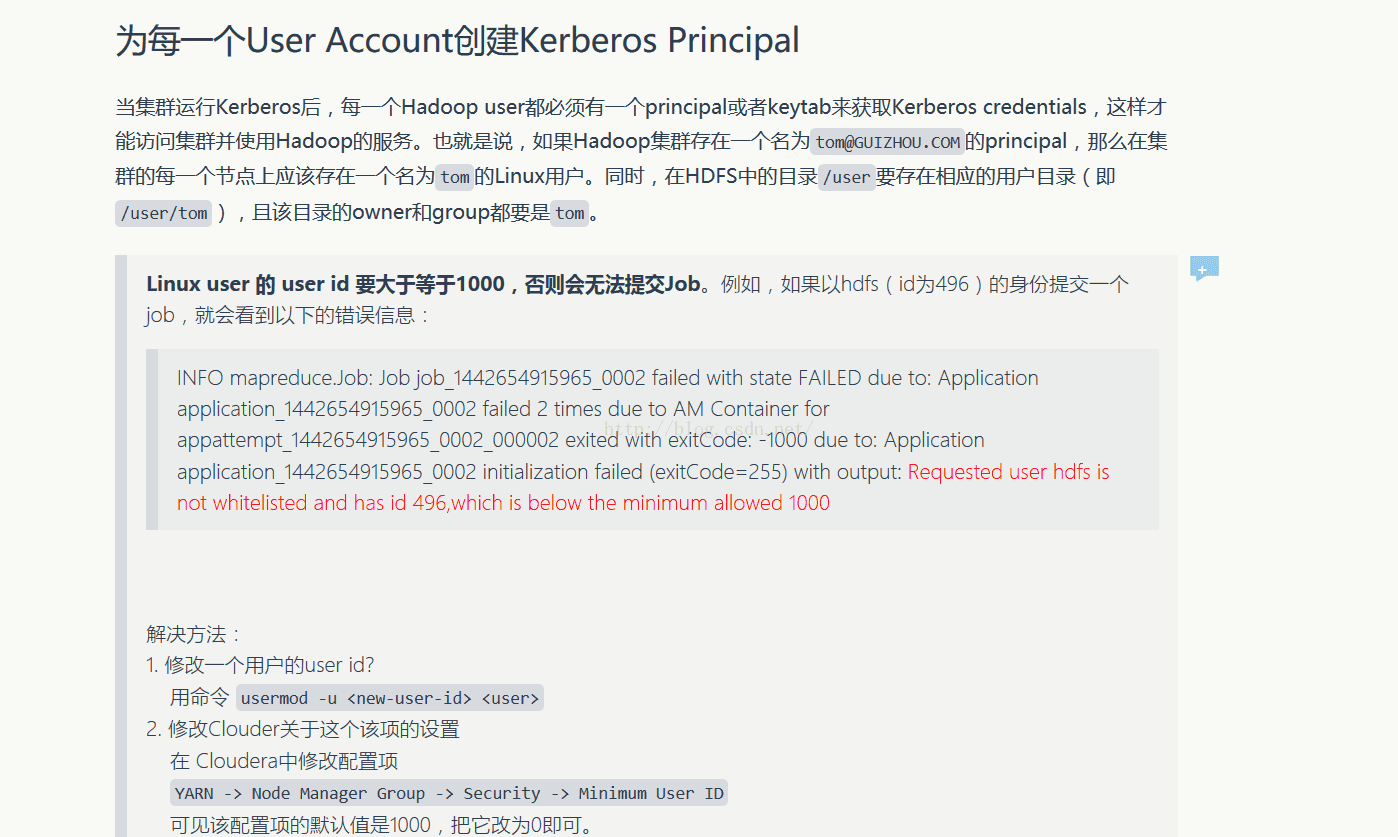
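The key named in the message, `dfs.encryption.key.provider.uri`, is the HDFS client setting that points at a key provider (KMS) for transparent encryption. As far as I know, when encryption isn't in use, this message is usually noise rather than the cause of a failure. Treat that as an assumption to verify on your own cluster.

The Python sketch below checks whether a Hadoop `*-site.xml` defines a given property. The function name is hypothetical. So is the file location: on CDH it is often something like `/etc/hadoop/conf/hdfs-site.xml`, but check your client configuration.

```python
import xml.etree.ElementTree as ET


def hadoop_conf_value(xml_text, key):
    """Return the <value> of property `key` in a Hadoop *-site.xml document,
    or None if the property is not defined at all."""
    root = ET.fromstring(xml_text)
    for prop in root.iter("property"):
        if prop.findtext("name", "").strip() == key:
            # An empty <value/> is returned as "" rather than None.
            return (prop.findtext("value") or "").strip()
    return None
```

Feeding it the text of the client's `hdfs-site.xml` shows whether the key is defined. A result of `None` means it is unset, which is the situation the message complains about.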

5.

For this issue, the solution is described here:

6. Using the `yarn logs -applicationId` command

The client output:

16/06/08 18:22:24 INFO Client: Application report for application_1459834335246_0045 (state: ACCEPTED)

16/06/08 18:22:25 INFO Client: Application report for application_1459834335246_0045 (state: ACCEPTED)

16/06/08 18:22:26 INFO Client: Application report for application_1459834335246_0045 (state: ACCEPTED)

16/06/08 18:22:27 INFO Client: Application report for application_1459834335246_0045 (state: ACCEPTED)

16/06/08 18:22:28 INFO Client: Application report for application_1459834335246_0045 (state: ACCEPTED)

16/06/08 18:22:29 INFO Client: Application report for application_1459834335246_0045 (state: ACCEPTED)

16/06/08 18:22:30 INFO Client: Application report for application_1459834335246_0045 (state: ACCEPTED)

16/06/08 18:22:31 INFO Client: Application report for application_1459834335246_0045 (state: ACCEPTED)

Note: `application_1459834335246_0045` above is the application ID, and it is extremely useful. Run the following command:

[hadoop@hadoop0002 cemtest]$ yarn logs -applicationId application_1459834335246_0046

This lets you see the detailed execution logs:

[cem@inm15bdm01 ~]$ ./cem.sh

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/lib/zookeeper/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/lib/flume-ng/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/lib/parquet/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/lib/avro/avro-tools-1.7.6-cdh5.4.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

16/06/08 18:43:58 INFO RMProxy: Connecting to ResourceManager at inm15bdm01/172.16.64.100:8032

16/06/08 18:43:58 INFO Client: Requesting a new application from cluster with 48 NodeManagers

16/06/08 18:43:58 INFO Client: Verifying our application has not requested more than the maximum memory capability of the cluster (8192 MB per container)

16/06/08 18:43:58 INFO Client: Will allocate AM container, with 2432 MB memory including 384 MB overhead

16/06/08 18:43:58 INFO Client: Setting up container launch context for our AM

16/06/08 18:43:58 INFO Client: Preparing resources for our AM container

16/06/08 18:43:59 INFO Client: Uploading resource file:/usr/lib/zookeeper/lib/slf4j-log4j12-1.7.5.jar -> hdfs://nameservice1/user/cem/.sparkStaging/application_1465377698932_0011/slf4j-log4j12-1.7.5.jar

16/06/08 18:44:00 INFO Client: Uploading resource file:/home/cem/jars/cem_exp_plugin-jar-with-dependencies.jar -> hdfs://nameservice1/user/cem/.sparkStaging/application_1465377698932_0011/cem_exp_plugin-jar-with-dependencies.jar

16/06/08 18:44:01 INFO Client: Setting up the launch environment for our AM container

16/06/08 18:44:01 INFO SecurityManager: Changing view acls to: cem

16/06/08 18:44:01 INFO SecurityManager: Changing modify acls to: cem

16/06/08 18:44:01 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(cem); users with modify permissions: Set(cem)

16/06/08 18:44:01 INFO Client: Submitting application 11 to ResourceManager

16/06/08 18:44:01 INFO YarnClientImpl: Submitted application application_1465377698932_0011

16/06/08 18:44:02 INFO Client: Application report for application_1465377698932_0011 (state: ACCEPTED)

16/06/08 18:44:02 INFO Client:

client token: N/A

diagnostics: N/A

ApplicationMaster host: N/A

ApplicationMaster RPC port: -1

queue: root.default

start time: 1465382641768

final status: UNDEFINED

tracking URL: http://inm15bdm01:8088/proxy/application_1465377698932_0011/

user: cem

16/06/08 18:44:03 INFO Client: Application report for application_1465377698932_0011 (state: FAILED)

16/06/08 18:44:03 INFO Client:

client token: N/A

diagnostics: Application application_1465377698932_0011 failed 2 times due to AM Container for appattempt_1465377698932_0011_000002 exited with exitCode: -1000

For more detailed output, check application tracking page:http://inm15bdm01:8088/proxy/application_1465377698932_0011/Then, click on links to logs of each attempt.

Diagnostics: Not able to initialize user directories in any of the configured local directories for user cem

Failing this attempt. Failing the application.

ApplicationMaster host: N/A

ApplicationMaster RPC port: -1

queue: root.default

start time: 1465382641768

final status: FAILED

tracking URL: http://inm15bdm01:8088/cluster/app/application_1465377698932_0011

user: cem

Exception in thread "main" org.apache.spark.SparkException: Application finished with failed status

at org.apache.spark.deploy.yarn.Client.run(Client.scala:622)

at org.apache.spark.deploy.yarn.Client$.main(Client.scala:647)

at org.apache.spark.deploy.yarn.Client.main(Client.scala)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:606)

at org.apache.spark.deploy.SparkSubmit$.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:569)

at org.apache.spark.deploy.SparkSubmit$.doRunMain$1(SparkSubmit.scala:166)

at org.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:189)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:110)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

[cem@inm15bdm01 ~]$ yarn logs -applicationId application_1465377698932_0011

16/06/08 18:44:32 INFO client.RMProxy: Connecting to ResourceManager at inm15bdm01/172.16.64.100:8032

/tmp/logs/cem/logs/application_1465377698932_0011does not have any log files.

[cem@inm15bdm01 ~]$ yarn logs -applicationId application_1465377698932_0011

16/06/08 18:45:27 INFO client.RMProxy: Connecting to ResourceManager at inm15bdm01/172.16.64.100:8032

/tmp/logs/cem/logs/application_1465377698932_0011does not have any log files.

[cem@inm15bdm01 ~]$
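Everything in step 6 hinges on the application ID, so a small helper can pull it out of the client output and build the `yarn logs` call. This is a Python sketch with hypothetical function names. The regex assumes IDs of the form `application_<cluster timestamp>_<sequence>`, as seen throughout the logs above.

Keep in mind that `yarn logs` only returns something when two conditions hold: log aggregation is enabled (`yarn.log-aggregation-enable`), and containers actually ran. In the failed run above, the AM container failed with exit code -1000 while initializing the user directories. That would explain the "does not have any log files" message.

```python
import re

# YARN application IDs look like application_<RM start timestamp>_<sequence>.
# No trailing \b: YARN sometimes glues text right after the ID.
APP_ID_RE = re.compile(r"\bapplication_\d+_\d+")


def find_application_ids(client_output):
    """Return the distinct application IDs in spark-submit/YARN client output,
    in the order they first appear."""
    seen = []
    for app_id in APP_ID_RE.findall(client_output):
        if app_id not in seen:
            seen.append(app_id)
    return seen


def yarn_logs_command(app_id):
    """Argument list for fetching the aggregated container logs of one application."""
    return ["yarn", "logs", "-applicationId", app_id]
```

Running `subprocess.run(yarn_logs_command(ids[-1]))` on the last ID found fetches the logs of the most recent submission.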

Now let's look at what `yarn logs -applicationId` prints for a run that did produce logs:

[hadoop@hadoop0002 cemtest]$ yarn logs -applicationId application_1459834335246_0046

16/06/08 18:33:11 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

16/06/08 18:33:14 INFO client.ConfiguredRMFailoverProxyProvider: Failing over to rm2

Container: container_1459834335246_0046_02_000003 on hadoop0004_59686

=======================================================================

LogType:stderr

Log Upload Time:8-六月-2016 18:27:31

LogLength:4094

Log Contents:

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/hadoop/hadoop-2.6.0/nmdata/local/filecache/10/spark-assembly-1.4.1-hadoop2.6.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/hadoop/hadoop-2.6.0/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

16/06/08 18:26:11 INFO executor.CoarseGrainedExecutorBackend: Registered signal handlers for [TERM, HUP, INT]

16/06/08 18:26:12 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

16/06/08 18:26:12 INFO spark.SecurityManager: Changing view acls to: hadoop

16/06/08 18:26:12 INFO spark.SecurityManager: Changing modify acls to: hadoop

16/06/08 18:26:12 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(hadoop); users with modify permissions: Set(hadoop)

16/06/08 18:26:13 INFO slf4j.Slf4jLogger: Slf4jLogger started

16/06/08 18:26:13 INFO Remoting: Starting remoting

16/06/08 18:26:13 INFO Remoting: Remoting started; listening on addresses :[akka.tcp://driverPropsFetcher@hadoop0004:57852]

16/06/08 18:26:13 INFO util.Utils: Successfully started service 'driverPropsFetcher' on port 57852.

16/06/08 18:26:13 INFO spark.SecurityManager: Changing view acls to: hadoop

16/06/08 18:26:13 INFO spark.SecurityManager: Changing modify acls to: hadoop

16/06/08 18:26:13 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(hadoop); users with modify permissions: Set(hadoop)

16/06/08 18:26:13 INFO remote.RemoteActorRefProvider$RemotingTerminator: Shutting down remote daemon.

16/06/08 18:26:13 INFO remote.RemoteActorRefProvider$RemotingTerminator: Remote daemon shut down; proceeding with flushing remote transports.

16/06/08 18:26:13 INFO slf4j.Slf4jLogger: Slf4jLogger started

16/06/08 18:26:13 INFO remote.RemoteActorRefProvider$RemotingTerminator: Remoting shut down.

16/06/08 18:26:13 INFO Remoting: Starting remoting

16/06/08 18:26:13 INFO Remoting: Remoting started; listening on addresses :[akka.tcp://sparkExecutor@hadoop0004:59851]

16/06/08 18:26:13 INFO util.Utils: Successfully started service 'sparkExecutor' on port 59851.

16/06/08 18:26:13 INFO storage.DiskBlockManager: Created local directory at /home/hadoop/hadoop-2.6.0/nmdata/local/usercache/hadoop/appcache/application_1459834335246_0046/blockmgr-d6204b04-929b-4597-9491-57aed064657c

16/06/08 18:26:13 INFO storage.MemoryStore: MemoryStore started with capacity 530.3 MB

16/06/08 18:26:14 INFO executor.CoarseGrainedExecutorBackend: Connecting to driver: akka.tcp://sparkDriver@172.16.0.8:37011/user/CoarseGrainedScheduler

16/06/08 18:26:14 INFO executor.CoarseGrainedExecutorBackend: Successfully registered with driver

16/06/08 18:26:14 INFO executor.Executor: Starting executor ID 2 on host hadoop0004

16/06/08 18:26:14 INFO util.Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 36305.

16/06/08 18:26:14 INFO netty.NettyBlockTransferService: Server created on 36305

16/06/08 18:26:14 INFO storage.BlockManagerMaster: Trying to register BlockManager

16/06/08 18:26:14 INFO storage.BlockManagerMaster: Registered BlockManager

16/06/08 18:26:18 INFO executor.CoarseGrainedExecutorBackend: Driver commanded a shutdown

16/06/08 18:26:18 INFO storage.MemoryStore: MemoryStore cleared

16/06/08 18:26:18 INFO storage.BlockManager: BlockManager stopped

16/06/08 18:26:18 INFO remote.RemoteActorRefProvider$RemotingTerminator: Shutting down remote daemon.

16/06/08 18:26:18 INFO remote.RemoteActorRefProvider$RemotingTerminator: Remote daemon shut down; proceeding with flushing remote transports.

16/06/08 18:26:18 INFO remote.RemoteActorRefProvider$RemotingTerminator: Remoting shut down.

16/06/08 18:26:18 INFO util.Utils: Shutdown hook called

LogType:stdout

Log Upload Time:8-六月-2016 18:27:31

LogLength:0

Log Contents:

Container: container_1459834335246_0046_01_000003 on hadoop0004_59686

=======================================================================

LogType:stderr

Log Upload Time:8-六月-2016 18:27:31

LogLength:4094

Log Contents:

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/hadoop/hadoop-2.6.0/nmdata/local/filecache/10/spark-assembly-1.4.1-hadoop2.6.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/hadoop/hadoop-2.6.0/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

16/06/08 18:25:48 INFO executor.CoarseGrainedExecutorBackend: Registered signal handlers for [TERM, HUP, INT]

16/06/08 18:25:49 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

16/06/08 18:25:49 INFO spark.SecurityManager: Changing view acls to: hadoop

16/06/08 18:25:49 INFO spark.SecurityManager: Changing modify acls to: hadoop

16/06/08 18:25:49 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(hadoop); users with modify permissions: Set(hadoop)

16/06/08 18:25:50 INFO slf4j.Slf4jLogger: Slf4jLogger started

16/06/08 18:25:50 INFO Remoting: Starting remoting

16/06/08 18:25:50 INFO Remoting: Remoting started; listening on addresses :[akka.tcp://driverPropsFetcher@hadoop0004:38468]

16/06/08 18:25:50 INFO util.Utils: Successfully started service 'driverPropsFetcher' on port 38468.

16/06/08 18:25:50 INFO spark.SecurityManager: Changing view acls to: hadoop

16/06/08 18:25:50 INFO spark.SecurityManager: Changing modify acls to: hadoop

16/06/08 18:25:50 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(hadoop); users with modify permissions: Set(hadoop)

16/06/08 18:25:50 INFO remote.RemoteActorRefProvider$RemotingTerminator: Shutting down remote daemon.

16/06/08 18:25:50 INFO remote.RemoteActorRefProvider$RemotingTerminator: Remote daemon shut down; proceeding with flushing remote transports.

16/06/08 18:25:50 INFO slf4j.Slf4jLogger: Slf4jLogger started

16/06/08 18:25:50 INFO Remoting: Starting remoting

16/06/08 18:25:50 INFO remote.RemoteActorRefProvider$RemotingTerminator: Remoting shut down.

16/06/08 18:25:50 INFO Remoting: Remoting started; listening on addresses :[akka.tcp://sparkExecutor@hadoop0004:54890]

16/06/08 18:25:50 INFO util.Utils: Successfully started service 'sparkExecutor' on port 54890.

16/06/08 18:25:50 INFO storage.DiskBlockManager: Created local directory at /home/hadoop/hadoop-2.6.0/nmdata/local/usercache/hadoop/appcache/application_1459834335246_0046/blockmgr-5d6479c1-31fe-4992-9e77-912d2965a0e4

16/06/08 18:25:50 INFO storage.MemoryStore: MemoryStore started with capacity 530.3 MB

16/06/08 18:25:51 INFO executor.CoarseGrainedExecutorBackend: Connecting to driver: akka.tcp://sparkDriver@172.16.0.8:35307/user/CoarseGrainedScheduler

16/06/08 18:25:51 INFO executor.CoarseGrainedExecutorBackend: Successfully registered with driver

16/06/08 18:25:51 INFO executor.Executor: Starting executor ID 2 on host hadoop0004

16/06/08 18:25:51 INFO util.Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 50555.

16/06/08 18:25:51 INFO netty.NettyBlockTransferService: Server created on 50555

16/06/08 18:25:51 INFO storage.BlockManagerMaster: Trying to register BlockManager

16/06/08 18:25:51 INFO storage.BlockManagerMaster: Registered BlockManager

16/06/08 18:25:55 INFO executor.CoarseGrainedExecutorBackend: Driver commanded a shutdown

16/06/08 18:25:55 INFO storage.MemoryStore: MemoryStore cleared

16/06/08 18:25:55 INFO storage.BlockManager: BlockManager stopped

16/06/08 18:25:55 INFO remote.RemoteActorRefProvider$RemotingTerminator: Shutting down remote daemon.

16/06/08 18:25:55 INFO remote.RemoteActorRefProvider$RemotingTerminator: Remote daemon shut down; proceeding with flushing remote transports.

16/06/08 18:25:55 INFO remote.RemoteActorRefProvider$RemotingTerminator: Remoting shut down.

16/06/08 18:25:55 INFO util.Utils: Shutdown hook called

LogType:stdout

Log Upload Time:8-六月-2016 18:27:31

LogLength:0

Log Contents:

Container: container_1459834335246_0046_02_000002 on hadoop0006_54193

=======================================================================

LogType:stderr

Log Upload Time:8-六月-2016 18:27:30

LogLength:6646

Log Contents:

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/hadoop/hadoop-2.6.0/nmdata/local/filecache/10/spark-assembly-1.4.1-hadoop2.6.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/hadoop/hadoop-2.6.0/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

16/06/08 18:28:20 INFO executor.CoarseGrainedExecutorBackend: Registered signal handlers for [TERM, HUP, INT]

16/06/08 18:28:20 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

16/06/08 18:28:20 INFO spark.SecurityManager: Changing view acls to: hadoop

16/06/08 18:28:20 INFO spark.SecurityManager: Changing modify acls to: hadoop

16/06/08 18:28:20 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(hadoop); users with modify permissions: Set(hadoop)

16/06/08 18:28:21 INFO slf4j.Slf4jLogger: Slf4jLogger started

16/06/08 18:28:21 INFO Remoting: Starting remoting

16/06/08 18:28:21 INFO Remoting: Remoting started; listening on addresses :[akka.tcp://driverPropsFetcher@hadoop0006:33493]

16/06/08 18:28:21 INFO util.Utils: Successfully started service 'driverPropsFetcher' on port 33493.

16/06/08 18:28:22 INFO spark.SecurityManager: Changing view acls to: hadoop

16/06/08 18:28:22 INFO spark.SecurityManager: Changing modify acls to: hadoop

16/06/08 18:28:22 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(hadoop); users with modify permissions: Set(hadoop)

16/06/08 18:28:22 INFO remote.RemoteActorRefProvider$RemotingTerminator: Shutting down remote daemon.

16/06/08 18:28:22 INFO remote.RemoteActorRefProvider$RemotingTerminator: Remote daemon shut down; proceeding with flushing remote transports.

16/06/08 18:28:22 INFO slf4j.Slf4jLogger: Slf4jLogger started

16/06/08 18:28:22 INFO remote.RemoteActorRefProvider$RemotingTerminator: Remoting shut down.

16/06/08 18:28:22 INFO Remoting: Starting remoting

16/06/08 18:28:22 INFO Remoting: Remoting started; listening on addresses :[akka.tcp://sparkExecutor@hadoop0006:56072]

16/06/08 18:28:22 INFO util.Utils: Successfully started service 'sparkExecutor' on port 56072.

16/06/08 18:28:22 INFO storage.DiskBlockManager: Created local directory at /home/hadoop/hadoop-2.6.0/nmdata/local/usercache/hadoop/appcache/application_1459834335246_0046/blockmgr-5da0e65e-efec-4cac-9879-628e60b2a77b

16/06/08 18:28:22 INFO storage.MemoryStore: MemoryStore started with capacity 530.3 MB

16/06/08 18:28:22 INFO executor.CoarseGrainedExecutorBackend: Connecting to driver: akka.tcp://sparkDriver@172.16.0.8:37011/user/CoarseGrainedScheduler

16/06/08 18:28:22 INFO executor.CoarseGrainedExecutorBackend: Successfully registered with driver

16/06/08 18:28:22 INFO executor.Executor: Starting executor ID 1 on host hadoop0006

16/06/08 18:28:22 INFO util.Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 34754.

16/06/08 18:28:22 INFO netty.NettyBlockTransferService: Server created on 34754

16/06/08 18:28:22 INFO storage.BlockManagerMaster: Trying to register BlockManager

16/06/08 18:28:22 INFO storage.BlockManagerMaster: Registered BlockManager

16/06/08 18:28:24 INFO executor.CoarseGrainedExecutorBackend: Got assigned task 0

16/06/08 18:28:24 INFO executor.Executor: Running task 0.0 in stage 0.0 (TID 0)

16/06/08 18:28:24 INFO broadcast.TorrentBroadcast: Started reading broadcast variable 1

16/06/08 18:28:24 INFO storage.MemoryStore: ensureFreeSpace(2864) called with curMem=0, maxMem=556038881

16/06/08 18:28:24 INFO storage.MemoryStore: Block broadcast_1_piece0 stored as bytes in memory (estimated size 2.8 KB, free 530.3 MB)

16/06/08 18:28:24 INFO broadcast.TorrentBroadcast: Reading broadcast variable 1 took 197 ms

16/06/08 18:28:24 INFO storage.MemoryStore: ensureFreeSpace(5024) called with curMem=2864, maxMem=556038881

16/06/08 18:28:24 INFO storage.MemoryStore: Block broadcast_1 stored as values in memory (estimated size 4.9 KB, free 530.3 MB)

16/06/08 18:28:25 INFO rdd.HadoopRDD: Input split: hdfs://mycluster/cem/CEM_CIRCUIT_CALC_2016/2016/05/31/part-m-00000:0+43842

16/06/08 18:28:25 INFO broadcast.TorrentBroadcast: Started reading broadcast variable 0

16/06/08 18:28:25 INFO storage.MemoryStore: ensureFreeSpace(21526) called with curMem=7888, maxMem=556038881

16/06/08 18:28:25 INFO storage.MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 21.0 KB, free 530.3 MB)

16/06/08 18:28:25 INFO broadcast.TorrentBroadcast: Reading broadcast variable 0 took 21 ms

16/06/08 18:28:25 INFO storage.MemoryStore: ensureFreeSpace(356096) called with curMem=29414, maxMem=556038881

16/06/08 18:28:25 INFO storage.MemoryStore: Block broadcast_0 stored as values in memory (estimated size 347.8 KB, free 529.9 MB)

16/06/08 18:28:26 INFO lzo.GPLNativeCodeLoader: Loaded native gpl library from the embedded binaries

16/06/08 18:28:26 INFO lzo.LzoCodec: Successfully loaded & initialized native-lzo library [hadoop-lzo rev 826e7d8d3e839964dd9ed2d5f83296254b2c71d3]

16/06/08 18:28:26 INFO Configuration.deprecation: mapred.tip.id is deprecated. Instead, use mapreduce.task.id

16/06/08 18:28:26 INFO Configuration.deprecation: mapred.task.id is deprecated. Instead, use mapreduce.task.attempt.id

16/06/08 18:28:26 INFO Configuration.deprecation: mapred.task.is.map is deprecated. Instead, use mapreduce.task.ismap

16/06/08 18:28:26 INFO Configuration.deprecation: mapred.task.partition is deprecated. Instead, use mapreduce.task.partition

16/06/08 18:28:26 INFO Configuration.deprecation: mapred.job.id is deprecated. Instead, use mapreduce.job.id

16/06/08 18:28:27 INFO executor.Executor: Finished task 0.0 in stage 0.0 (TID 0). 3980 bytes result sent to driver

16/06/08 18:28:27 INFO executor.CoarseGrainedExecutorBackend: Driver commanded a shutdown

16/06/08 18:28:27 INFO storage.MemoryStore: MemoryStore cleared

16/06/08 18:28:27 INFO storage.BlockManager: BlockManager stopped

16/06/08 18:28:27 INFO remote.RemoteActorRefProvider$RemotingTerminator: Shutting down remote daemon.

16/06/08 18:28:27 INFO remote.RemoteActorRefProvider$RemotingTerminator: Remote daemon shut down; proceeding with flushing remote transports.

16/06/08 18:28:27 INFO remote.RemoteActorRefProvider$RemotingTerminator: Remoting shut down.

16/06/08 18:28:27 INFO util.Utils: Shutdown hook called

LogType:stdout

Log Upload Time:8-六月-2016 18:27:30

LogLength:0

Log Contents:

Container: container_1459834335246_0046_01_000002 on hadoop0006_54193

=======================================================================

LogType:stderr

Log Upload Time:8-六月-2016 18:27:30

LogLength:6646

Log Contents:

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/hadoop/hadoop-2.6.0/nmdata/local/filecache/10/spark-assembly-1.4.1-hadoop2.6.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/hadoop/hadoop-2.6.0/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

16/06/08 18:27:56 INFO executor.CoarseGrainedExecutorBackend: Registered signal handlers for [TERM, HUP, INT]

16/06/08 18:27:57 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

16/06/08 18:27:57 INFO spark.SecurityManager: Changing view acls to: hadoop

16/06/08 18:27:57 INFO spark.SecurityManager: Changing modify acls to: hadoop

16/06/08 18:27:57 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(hadoop); users with modify permissions: Set(hadoop)

16/06/08 18:27:57 INFO slf4j.Slf4jLogger: Slf4jLogger started

16/06/08 18:27:57 INFO Remoting: Starting remoting

16/06/08 18:27:58 INFO Remoting: Remoting started; listening on addresses :[akka.tcp://driverPropsFetcher@hadoop0006:56187]

16/06/08 18:27:58 INFO util.Utils: Successfully started service 'driverPropsFetcher' on port 56187.

16/06/08 18:27:58 INFO spark.SecurityManager: Changing view acls to: hadoop

16/06/08 18:27:58 INFO spark.SecurityManager: Changing modify acls to: hadoop

16/06/08 18:27:58 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(hadoop); users with modify permissions: Set(hadoop)

16/06/08 18:27:58 INFO remote.RemoteActorRefProvider$RemotingTerminator: Shutting down remote daemon.

16/06/08 18:27:58 INFO remote.RemoteActorRefProvider$RemotingTerminator: Remote daemon shut down; proceeding with flushing remote transports.

16/06/08 18:27:58 INFO slf4j.Slf4jLogger: Slf4jLogger started

16/06/08 18:27:58 INFO Remoting: Starting remoting

16/06/08 18:27:58 INFO remote.RemoteActorRefProvider$RemotingTerminator: Remoting shut down.

16/06/08 18:27:58 INFO Remoting: Remoting started; listening on addresses :[akka.tcp://sparkExecutor@hadoop0006:34841]

16/06/08 18:27:58 INFO util.Utils: Successfully started service 'sparkExecutor' on port 34841.

16/06/08 18:27:58 INFO storage.DiskBlockManager: Created local directory at /home/hadoop/hadoop-2.6.0/nmdata/local/usercache/hadoop/appcache/application_1459834335246_0046/blockmgr-3ee5b4dc-93a3-40a3-9edc-8bef8eeebdd3

16/06/08 18:27:58 INFO storage.MemoryStore: MemoryStore started with capacity 530.3 MB

16/06/08 18:27:58 INFO executor.CoarseGrainedExecutorBackend: Connecting to driver: akka.tcp://sparkDriver@172.16.0.8:35307/user/CoarseGrainedScheduler

16/06/08 18:27:58 INFO executor.CoarseGrainedExecutorBackend: Successfully registered with driver

16/06/08 18:27:58 INFO executor.Executor: Starting executor ID 1 on host hadoop0006

16/06/08 18:27:58 INFO util.Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 59977.

16/06/08 18:27:58 INFO netty.NettyBlockTransferService: Server created on 59977

16/06/08 18:27:58 INFO storage.BlockManagerMaster: Trying to register BlockManager

16/06/08 18:27:58 INFO storage.BlockManagerMaster: Registered BlockManager

16/06/08 18:28:01 INFO executor.CoarseGrainedExecutorBackend: Got assigned task 0

16/06/08 18:28:01 INFO executor.Executor: Running task 0.0 in stage 0.0 (TID 0)

16/06/08 18:28:01 INFO broadcast.TorrentBroadcast: Started reading broadcast variable 1

16/06/08 18:28:01 INFO storage.MemoryStore: ensureFreeSpace(2864) called with curMem=0, maxMem=556038881

16/06/08 18:28:01 INFO storage.MemoryStore: Block broadcast_1_piece0 stored as bytes in memory (estimated size 2.8 KB, free 530.3 MB)

16/06/08 18:28:01 INFO broadcast.TorrentBroadcast: Reading broadcast variable 1 took 191 ms

16/06/08 18:28:01 INFO storage.MemoryStore: ensureFreeSpace(5024) called with curMem=2864, maxMem=556038881

16/06/08 18:28:01 INFO storage.MemoryStore: Block broadcast_1 stored as values in memory (estimated size 4.9 KB, free 530.3 MB)

16/06/08 18:28:02 INFO rdd.HadoopRDD: Input split: hdfs://mycluster/cem/CEM_CIRCUIT_CALC_2016/2016/05/31/part-m-00000:0+43842

16/06/08 18:28:02 INFO broadcast.TorrentBroadcast: Started reading broadcast variable 0

16/06/08 18:28:02 INFO storage.MemoryStore: ensureFreeSpace(21526) called with curMem=7888, maxMem=556038881

16/06/08 18:28:02 INFO storage.MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 21.0 KB, free 530.3 MB)

16/06/08 18:28:02 INFO broadcast.TorrentBroadcast: Reading broadcast variable 0 took 21 ms

16/06/08 18:28:02 INFO storage.MemoryStore: ensureFreeSpace(356096) called with curMem=29414, maxMem=556038881

16/06/08 18:28:02 INFO storage.MemoryStore: Block broadcast_0 stored as values in memory (estimated size 347.8 KB, free 529.9 MB)

16/06/08 18:28:03 INFO lzo.GPLNativeCodeLoader: Loaded native gpl library from the embedded binaries

16/06/08 18:28:03 INFO lzo.LzoCodec: Successfully loaded & initialized native-lzo library [hadoop-lzo rev 826e7d8d3e839964dd9ed2d5f83296254b2c71d3]

16/06/08 18:28:03 INFO Configuration.deprecation: mapred.tip.id is deprecated. Instead, use mapreduce.task.id

16/06/08 18:28:03 INFO Configuration.deprecation: mapred.task.id is deprecated. Instead, use mapreduce.task.attempt.id

16/06/08 18:28:03 INFO Configuration.deprecation: mapred.task.is.map is deprecated. Instead, use mapreduce.task.ismap

16/06/08 18:28:03 INFO Configuration.deprecation: mapred.task.partition is deprecated. Instead, use mapreduce.task.partition

16/06/08 18:28:03 INFO Configuration.deprecation: mapred.job.id is deprecated. Instead, use mapreduce.job.id

16/06/08 18:28:03 INFO executor.Executor: Finished task 0.0 in stage 0.0 (TID 0). 3980 bytes result sent to driver

16/06/08 18:28:04 INFO executor.CoarseGrainedExecutorBackend: Driver commanded a shutdown

16/06/08 18:28:04 INFO storage.MemoryStore: MemoryStore cleared

16/06/08 18:28:04 INFO storage.BlockManager: BlockManager stopped

16/06/08 18:28:04 INFO remote.RemoteActorRefProvider$RemotingTerminator: Shutting down remote daemon.

16/06/08 18:28:04 INFO remote.RemoteActorRefProvider$RemotingTerminator: Remote daemon shut down; proceeding with flushing remote transports.

16/06/08 18:28:04 INFO remote.RemoteActorRefProvider$RemotingTerminator: Remoting shut down.

16/06/08 18:28:04 INFO util.Utils: Shutdown hook called

LogType:stdout

Log Upload Time:8-六月-2016 18:27:30

LogLength:0

Log Contents:

Container: container_1459834335246_0046_02_000001 on hadoop0008_48786

=======================================================================

LogType:stderr

Log Upload Time:8-六月-2016 18:27:30

LogLength:22462

Log Contents:

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/hadoop/hadoop-2.6.0/nmdata/local/filecache/10/spark-assembly-1.4.1-hadoop2.6.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/hadoop/hadoop-2.6.0/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

16/06/08 18:27:47 INFO yarn.ApplicationMaster: Registered signal handlers for [TERM, HUP, INT]

16/06/08 18:27:47 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

16/06/08 18:27:48 INFO yarn.ApplicationMaster: ApplicationAttemptId: appattempt_1459834335246_0046_000002

16/06/08 18:27:48 INFO spark.SecurityManager: Changing view acls to: hadoop

16/06/08 18:27:48 INFO spark.SecurityManager: Changing modify acls to: hadoop

16/06/08 18:27:48 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(hadoop); users with modify permissions: Set(hadoop)

16/06/08 18:27:48 INFO yarn.ApplicationMaster: Starting the user application in a separate Thread

16/06/08 18:27:48 INFO yarn.ApplicationMaster: Waiting for spark context initialization

16/06/08 18:27:48 INFO yarn.ApplicationMaster: Waiting for spark context initialization ...

16/06/08 18:27:48 INFO spark.SparkContext: Running Spark version 1.4.1

16/06/08 18:27:49 INFO spark.SecurityManager: Changing view acls to: hadoop

16/06/08 18:27:49 INFO spark.SecurityManager: Changing modify acls to: hadoop

16/06/08 18:27:49 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(hadoop); users with modify permissions: Set(hadoop)

16/06/08 18:27:49 INFO slf4j.Slf4jLogger: Slf4jLogger started

16/06/08 18:27:49 INFO Remoting: Starting remoting

16/06/08 18:27:49 INFO Remoting: Remoting started; listening on addresses :[akka.tcp://sparkDriver@172.16.0.8:37011]

16/06/08 18:27:49 INFO util.Utils: Successfully started service 'sparkDriver' on port 37011.

16/06/08 18:27:49 INFO spark.SparkEnv: Registering MapOutputTracker

16/06/08 18:27:49 INFO spark.SparkEnv: Registering BlockManagerMaster

16/06/08 18:27:49 INFO storage.DiskBlockManager: Created local directory at /home/hadoop/hadoop-2.6.0/nmdata/local/usercache/hadoop/appcache/application_1459834335246_0046/blockmgr-fb5861c4-41b2-47c3-91d2-4972c7b378e7

16/06/08 18:27:49 INFO storage.MemoryStore: MemoryStore started with capacity 983.1 MB

16/06/08 18:27:49 INFO spark.HttpFileServer: HTTP File server directory is /home/hadoop/hadoop-2.6.0/nmdata/local/usercache/hadoop/appcache/application_1459834335246_0046/httpd-0f1c30d7-f787-4f5e-8f28-d1bfb7671198

16/06/08 18:27:49 INFO spark.HttpServer: Starting HTTP Server

16/06/08 18:27:49 INFO server.Server: jetty-8.y.z-SNAPSHOT

16/06/08 18:27:49 INFO server.AbstractConnector: Started SocketConnector@0.0.0.0:58637

16/06/08 18:27:49 INFO util.Utils: Successfully started service 'HTTP file server' on port 58637.

16/06/08 18:27:49 INFO spark.SparkEnv: Registering OutputCommitCoordinator

16/06/08 18:27:50 INFO ui.JettyUtils: Adding filter: org.apache.hadoop.yarn.server.webproxy.amfilter.AmIpFilter

16/06/08 18:27:50 INFO server.Server: jetty-8.y.z-SNAPSHOT

16/06/08 18:27:50 INFO server.AbstractConnector: Started SelectChannelConnector@0.0.0.0:59237

16/06/08 18:27:50 INFO util.Utils: Successfully started service 'SparkUI' on port 59237.

16/06/08 18:27:50 INFO ui.SparkUI: Started SparkUI at http://172.16.0.8:59237

16/06/08 18:27:50 INFO cluster.YarnClusterScheduler: Created YarnClusterScheduler

16/06/08 18:27:50 INFO cluster.YarnClusterScheduler: Starting speculative execution thread

16/06/08 18:27:50 INFO util.Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 50216.

16/06/08 18:27:50 INFO netty.NettyBlockTransferService: Server created on 50216

16/06/08 18:27:50 INFO storage.BlockManagerMaster: Trying to register BlockManager

16/06/08 18:27:50 INFO storage.BlockManagerMasterEndpoint: Registering block manager 172.16.0.8:50216 with 983.1 MB RAM, BlockManagerId(driver, 172.16.0.8, 50216)

16/06/08 18:27:50 INFO storage.BlockManagerMaster: Registered BlockManager

16/06/08 18:27:53 INFO scheduler.EventLoggingListener: Logging events to hdfs://mycluster/user/hadoop/spark-container/application_1459834335246_0046_2.snappy

16/06/08 18:27:53 INFO cluster.YarnSchedulerBackend$YarnSchedulerEndpoint: ApplicationMaster registered as AkkaRpcEndpointRef(Actor[akka://sparkDriver/user/YarnAM#1114655653])

16/06/08 18:27:53 INFO yarn.YarnRMClient: Registering the ApplicationMaster

16/06/08 18:27:55 INFO client.ConfiguredRMFailoverProxyProvider: Failing over to rm2

16/06/08 18:27:55 INFO yarn.YarnAllocator: Will request 2 executor containers, each with 1 cores and 1408 MB memory including 384 MB overhead

16/06/08 18:27:55 INFO yarn.YarnAllocator: Container request (host: Any, capability: <memory:1408, vCores:1>)

16/06/08 18:27:55 INFO yarn.YarnAllocator: Container request (host: Any, capability: <memory:1408, vCores:1>)

16/06/08 18:27:55 INFO yarn.ApplicationMaster: Started progress reporter thread - sleep time : 5000

16/06/08 18:28:00 INFO impl.AMRMClientImpl: Received new token for : hadoop0006:54193

16/06/08 18:28:00 INFO impl.AMRMClientImpl: Received new token for : hadoop0004:59686

16/06/08 18:28:00 INFO yarn.YarnAllocator: Launching container container_1459834335246_0046_02_000002 for on host hadoop0006

16/06/08 18:28:00 INFO yarn.YarnAllocator: Launching ExecutorRunnable. driverUrl: akka.tcp://sparkDriver@172.16.0.8:37011/user/CoarseGrainedScheduler, executorHostname: hadoop0006

16/06/08 18:28:00 INFO yarn.YarnAllocator: Launching container container_1459834335246_0046_02_000003 for on host hadoop0004

16/06/08 18:28:00 INFO yarn.ExecutorRunnable: Starting Executor Container

16/06/08 18:28:00 INFO yarn.YarnAllocator: Launching ExecutorRunnable. driverUrl: akka.tcp://sparkDriver@172.16.0.8:37011/user/CoarseGrainedScheduler, executorHostname: hadoop0004

16/06/08 18:28:00 INFO yarn.ExecutorRunnable: Starting Executor Container

16/06/08 18:28:00 INFO yarn.YarnAllocator: Received 2 containers from YARN, launching executors on 2 of them.

16/06/08 18:28:00 INFO impl.ContainerManagementProtocolProxy: yarn.client.max-cached-nodemanagers-proxies : 0

16/06/08 18:28:00 INFO impl.ContainerManagementProtocolProxy: yarn.client.max-cached-nodemanagers-proxies : 0

16/06/08 18:28:00 INFO yarn.ExecutorRunnable: Setting up ContainerLaunchContext

16/06/08 18:28:00 INFO yarn.ExecutorRunnable: Setting up ContainerLaunchContext

16/06/08 18:28:00 INFO yarn.ExecutorRunnable: Preparing Local resources

16/06/08 18:28:00 INFO yarn.ExecutorRunnable: Preparing Local resources

16/06/08 18:28:01 INFO yarn.ExecutorRunnable: Prepared Local resources Map(__app__.jar -> resource { scheme: "hdfs" host: "mycluster" port: -1 file: "/user/hadoop/.sparkStaging/application_1459834335246_0046/cem_exp_plugin-jar-with-dependencies.jar" } size: 105442982 timestamp: 1465381587242 type: FILE visibility: PRIVATE, __spark__.jar -> resource { scheme: "hdfs" host: "mycluster" port: -1 file: "/user/hadoop/spark/lib/spark-assembly-1.4.1-hadoop2.6.0.jar" } size: 162976273 timestamp: 1459824120339 type: FILE visibility: PUBLIC)

16/06/08 18:28:01 INFO yarn.ExecutorRunnable: Prepared Local resources Map(__app__.jar -> resource { scheme: "hdfs" host: "mycluster" port: -1 file: "/user/hadoop/.sparkStaging/application_1459834335246_0046/cem_exp_plugin-jar-with-dependencies.jar" } size: 105442982 timestamp: 1465381587242 type: FILE visibility: PRIVATE, __spark__.jar -> resource { scheme: "hdfs" host: "mycluster" port: -1 file: "/user/hadoop/spark/lib/spark-assembly-1.4.1-hadoop2.6.0.jar" } size: 162976273 timestamp: 1459824120339 type: FILE visibility: PUBLIC)

16/06/08 18:28:01 INFO yarn.ExecutorRunnable: Setting up executor with environment: Map(CLASSPATH -> {{PWD}}<CPS>{{PWD}}/__spark__.jar<CPS>$HADOOP_CONF_DIR<CPS>$HADOOP_COMMON_HOME/share/hadoop/common/*<CPS>$HADOOP_COMMON_HOME/share/hadoop/common/lib/*<CPS>$HADOOP_HDFS_HOME/share/hadoop/hdfs/*<CPS>$HADOOP_HDFS_HOME/share/hadoop/hdfs/lib/*<CPS>$HADOOP_YARN_HOME/share/hadoop/yarn/*<CPS>$HADOOP_YARN_HOME/share/hadoop/yarn/lib/*<CPS>$HADOOP_MAPRED_HOME/share/hadoop/mapreduce/*<CPS>$HADOOP_MAPRED_HOME/share/hadoop/mapreduce/lib/*, SPARK_LOG_URL_STDERR -> http://hadoop0004:8042/node/containerlogs/container_1459834335246_0046_02_000003/hadoop/stderr?start=0, SPARK_YARN_STAGING_DIR -> .sparkStaging/application_1459834335246_0046, SPARK_YARN_CACHE_FILES_FILE_SIZES -> 162976273,105442982, SPARK_USER -> hadoop, SPARK_YARN_CACHE_FILES_VISIBILITIES -> PUBLIC,PRIVATE, SPARK_YARN_MODE -> true, SPARK_YARN_CACHE_FILES_TIME_STAMPS -> 1459824120339,1465381587242, SPARK_LOG_URL_STDOUT -> http://hadoop0004:8042/node/containerlogs/container_1459834335246_0046_02_000003/hadoop/stdout?start=0, SPARK_YARN_CACHE_FILES -> hdfs://mycluster/user/hadoop/spark/lib/spark-assembly-1.4.1-hadoop2.6.0.jar#__spark__.jar,hdfs://mycluster/user/hadoop/.sparkStaging/application_1459834335246_0046/cem_exp_plugin-jar-with-dependencies.jar#__app__.jar)

16/06/08 18:28:01 INFO yarn.ExecutorRunnable: Setting up executor with environment: Map(CLASSPATH -> {{PWD}}<CPS>{{PWD}}/__spark__.jar<CPS>$HADOOP_CONF_DIR<CPS>$HADOOP_COMMON_HOME/share/hadoop/common/*<CPS>$HADOOP_COMMON_HOME/share/hadoop/common/lib/*<CPS>$HADOOP_HDFS_HOME/share/hadoop/hdfs/*<CPS>$HADOOP_HDFS_HOME/share/hadoop/hdfs/lib/*<CPS>$HADOOP_YARN_HOME/share/hadoop/yarn/*<CPS>$HADOOP_YARN_HOME/share/hadoop/yarn/lib/*<CPS>$HADOOP_MAPRED_HOME/share/hadoop/mapreduce/*<CPS>$HADOOP_MAPRED_HOME/share/hadoop/mapreduce/lib/*, SPARK_LOG_URL_STDERR -> http://hadoop0006:8042/node/containerlogs/container_1459834335246_0046_02_000002/hadoop/stderr?start=0, SPARK_YARN_STAGING_DIR -> .sparkStaging/application_1459834335246_0046, SPARK_YARN_CACHE_FILES_FILE_SIZES -> 162976273,105442982, SPARK_USER -> hadoop, SPARK_YARN_CACHE_FILES_VISIBILITIES -> PUBLIC,PRIVATE, SPARK_YARN_MODE -> true, SPARK_YARN_CACHE_FILES_TIME_STAMPS -> 1459824120339,1465381587242, SPARK_LOG_URL_STDOUT -> http://hadoop0006:8042/node/containerlogs/container_1459834335246_0046_02_000002/hadoop/stdout?start=0, SPARK_YARN_CACHE_FILES -> hdfs://mycluster/user/hadoop/spark/lib/spark-assembly-1.4.1-hadoop2.6.0.jar#__spark__.jar,hdfs://mycluster/user/hadoop/.sparkStaging/application_1459834335246_0046/cem_exp_plugin-jar-with-dependencies.jar#__app__.jar)

16/06/08 18:28:01 INFO yarn.ExecutorRunnable: Setting up executor with commands: List({{JAVA_HOME}}/bin/java, -server, -XX:OnOutOfMemoryError='kill %p', -Xms1024m, -Xmx1024m, -Djava.io.tmpdir={{PWD}}/tmp, '-Dspark.ui.port=0', '-Dspark.driver.port=37011', -Dspark.yarn.app.container.log.dir=<LOG_DIR>, org.apache.spark.executor.CoarseGrainedExecutorBackend, --driver-url, akka.tcp://sparkDriver@172.16.0.8:37011/user/CoarseGrainedScheduler, --executor-id, 2, --hostname, hadoop0004, --cores, 1, --app-id, application_1459834335246_0046, --user-class-path, file:$PWD/__app__.jar, 1>, <LOG_DIR>/stdout, 2>, <LOG_DIR>/stderr)

16/06/08 18:28:01 INFO yarn.ExecutorRunnable: Setting up executor with commands: List({{JAVA_HOME}}/bin/java, -server, -XX:OnOutOfMemoryError='kill %p', -Xms1024m, -Xmx1024m, -Djava.io.tmpdir={{PWD}}/tmp, '-Dspark.ui.port=0', '-Dspark.driver.port=37011', -Dspark.yarn.app.container.log.dir=<LOG_DIR>, org.apache.spark.executor.CoarseGrainedExecutorBackend, --driver-url, akka.tcp://sparkDriver@172.16.0.8:37011/user/CoarseGrainedScheduler, --executor-id, 1, --hostname, hadoop0006, --cores, 1, --app-id, application_1459834335246_0046, --user-class-path, file:$PWD/__app__.jar, 1>, <LOG_DIR>/stdout, 2>, <LOG_DIR>/stderr)

16/06/08 18:28:01 INFO impl.ContainerManagementProtocolProxy: Opening proxy : hadoop0006:54193

16/06/08 18:28:01 INFO impl.ContainerManagementProtocolProxy: Opening proxy : hadoop0004:59686

16/06/08 18:28:03 INFO yarn.ApplicationMaster$AMEndpoint: Driver terminated or disconnected! Shutting down. hadoop0006:33493

16/06/08 18:28:03 INFO yarn.ApplicationMaster$AMEndpoint: Driver terminated or disconnected! Shutting down. hadoop0004:57852

16/06/08 18:28:04 INFO cluster.YarnClusterSchedulerBackend: Registered executor: AkkaRpcEndpointRef(Actor[akka.tcp://sparkExecutor@hadoop0004:59851/user/Executor#-99737567]) with ID 2

16/06/08 18:28:04 INFO cluster.YarnClusterSchedulerBackend: Registered executor: AkkaRpcEndpointRef(Actor[akka.tcp://sparkExecutor@hadoop0006:56072/user/Executor#-2025766229]) with ID 1

16/06/08 18:28:04 INFO cluster.YarnClusterSchedulerBackend: SchedulerBackend is ready for scheduling beginning after reached minRegisteredResourcesRatio: 0.8

16/06/08 18:28:04 INFO cluster.YarnClusterScheduler: YarnClusterScheduler.postStartHook done

16/06/08 18:28:04 INFO storage.BlockManagerMasterEndpoint: Registering block manager hadoop0004:36305 with 530.3 MB RAM, BlockManagerId(2, hadoop0004, 36305)

16/06/08 18:28:04 INFO storage.BlockManagerMasterEndpoint: Registering block manager hadoop0006:34754 with 530.3 MB RAM, BlockManagerId(1, hadoop0006, 34754)

16/06/08 18:28:04 INFO storage.MemoryStore: ensureFreeSpace(247280) called with curMem=0, maxMem=1030823608

16/06/08 18:28:04 INFO storage.MemoryStore: Block broadcast_0 stored as values in memory (estimated size 241.5 KB, free 982.8 MB)

16/06/08 18:28:04 INFO storage.MemoryStore: ensureFreeSpace(21526) called with curMem=247280, maxMem=1030823608

16/06/08 18:28:04 INFO storage.MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 21.0 KB, free 982.8 MB)

16/06/08 18:28:04 INFO storage.BlockManagerInfo: Added broadcast_0_piece0 in memory on 172.16.0.8:50216 (size: 21.0 KB, free: 983.0 MB)

16/06/08 18:28:04 INFO spark.SparkContext: Created broadcast 0 from textFile at Test.java:102

16/06/08 18:28:06 INFO lzo.GPLNativeCodeLoader: Loaded native gpl library from the embedded binaries

16/06/08 18:28:06 INFO lzo.LzoCodec: Successfully loaded & initialized native-lzo library [hadoop-lzo rev 826e7d8d3e839964dd9ed2d5f83296254b2c71d3]

16/06/08 18:28:06 INFO mapred.FileInputFormat: Total input paths to process : 4

16/06/08 18:28:06 INFO spark.SparkContext: Starting job: show at Test.java:93

16/06/08 18:28:06 INFO scheduler.DAGScheduler: Got job 0 (show at Test.java:93) with 1 output partitions (allowLocal=false)

16/06/08 18:28:06 INFO scheduler.DAGScheduler: Final stage: ResultStage 0(show at Test.java:93)

16/06/08 18:28:06 INFO scheduler.DAGScheduler: Parents of final stage: List()

16/06/08 18:28:06 INFO scheduler.DAGScheduler: Missing parents: List()

16/06/08 18:28:06 INFO scheduler.DAGScheduler: Submitting ResultStage 0 (MapPartitionsRDD[4] at show at Test.java:93), which has no missing parents

16/06/08 18:28:06 INFO storage.MemoryStore: ensureFreeSpace(5024) called with curMem=268806, maxMem=1030823608

16/06/08 18:28:06 INFO storage.MemoryStore: Block broadcast_1 stored as values in memory (estimated size 4.9 KB, free 982.8 MB)

16/06/08 18:28:06 INFO storage.MemoryStore: ensureFreeSpace(2864) called with curMem=273830, maxMem=1030823608

16/06/08 18:28:06 INFO storage.MemoryStore: Block broadcast_1_piece0 stored as bytes in memory (estimated size 2.8 KB, free 982.8 MB)

16/06/08 18:28:06 INFO storage.BlockManagerInfo: Added broadcast_1_piece0 in memory on 172.16.0.8:50216 (size: 2.8 KB, free: 983.0 MB)

16/06/08 18:28:06 INFO spark.SparkContext: Created broadcast 1 from broadcast at DAGScheduler.scala:874

16/06/08 18:28:06 INFO scheduler.DAGScheduler: Submitting 1 missing tasks from ResultStage 0 (MapPartitionsRDD[4] at show at Test.java:93)

16/06/08 18:28:06 INFO cluster.YarnClusterScheduler: Adding task set 0.0 with 1 tasks

16/06/08 18:28:06 INFO scheduler.TaskSetManager: Starting task 0.0 in stage 0.0 (TID 0, hadoop0006, NODE_LOCAL, 1439 bytes)

16/06/08 18:28:06 INFO storage.BlockManagerInfo: Added broadcast_1_piece0 in memory on hadoop0006:34754 (size: 2.8 KB, free: 530.3 MB)

16/06/08 18:28:07 INFO storage.BlockManagerInfo: Added broadcast_0_piece0 in memory on hadoop0006:34754 (size: 21.0 KB, free: 530.3 MB)

16/06/08 18:28:08 INFO scheduler.TaskSetManager: Finished task 0.0 in stage 0.0 (TID 0) in 2445 ms on hadoop0006 (1/1)

16/06/08 18:28:08 INFO cluster.YarnClusterScheduler: Removed TaskSet 0.0, whose tasks have all completed, from pool

16/06/08 18:28:08 INFO scheduler.DAGScheduler: ResultStage 0 (show at Test.java:93) finished in 2.454 s

16/06/08 18:28:08 INFO scheduler.DAGScheduler: Job 0 finished: show at Test.java:93, took 2.545246 s

16/06/08 18:28:08 ERROR yarn.ApplicationMaster: User class threw exception: java.lang.RuntimeException: path /cem/results already exists.

java.lang.RuntimeException: path /cem/results already exists.

at scala.sys.package$.error(package.scala:27)

at org.apache.spark.sql.json.DefaultSource.createRelation(JSONRelation.scala:91)

at org.apache.spark.sql.sources.ResolvedDataSource$.apply(ddl.scala:309)

at org.apache.spark.sql.DataFrameWriter.save(DataFrameWriter.scala:144)

at org.apache.spark.sql.DataFrameWriter.save(DataFrameWriter.scala:135)

at com.ctsi.cem.Test.main(Test.java:95)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:606)

at org.apache.spark.deploy.yarn.ApplicationMaster$$anon$2.run(ApplicationMaster.scala:483)

16/06/08 18:28:08 INFO yarn.ApplicationMaster: Final app status: FAILED, exitCode: 15, (reason: User class threw exception: java.lang.RuntimeException: path /cem/results already exists.)

16/06/08 18:28:08 INFO spark.SparkContext: Invoking stop() from shutdown hook

16/06/08 18:28:08 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/metrics/json,null}

16/06/08 18:28:08 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/stages/stage/kill,null}

16/06/08 18:28:08 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/api,null}

16/06/08 18:28:08 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/,null}

16/06/08 18:28:08 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/static,null}

16/06/08 18:28:08 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/executors/threadDump/json,null}

16/06/08 18:28:08 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/executors/threadDump,null}

16/06/08 18:28:08 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/executors/json,null}

16/06/08 18:28:08 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/executors,null}

16/06/08 18:28:08 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/environment/json,null}

16/06/08 18:28:08 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/environment,null}

16/06/08 18:28:08 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/storage/rdd/json,null}

16/06/08 18:28:08 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/storage/rdd,null}

16/06/08 18:28:08 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/storage/json,null}

16/06/08 18:28:08 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/storage,null}

16/06/08 18:28:08 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/stages/pool/json,null}

16/06/08 18:28:08 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/stages/pool,null}

16/06/08 18:28:08 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/stages/stage/json,null}

16/06/08 18:28:08 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/stages/stage,null}

16/06/08 18:28:08 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/stages/json,null}

16/06/08 18:28:08 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/stages,null}

16/06/08 18:28:08 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/jobs/job/json,null}

16/06/08 18:28:08 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/jobs/job,null}

16/06/08 18:28:08 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/jobs/json,null}

16/06/08 18:28:08 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/jobs,null}

16/06/08 18:28:08 INFO ui.SparkUI: Stopped Spark web UI at http://172.16.0.8:59237

16/06/08 18:28:08 INFO scheduler.DAGScheduler: Stopping DAGScheduler

16/06/08 18:28:08 INFO cluster.YarnClusterSchedulerBackend: Shutting down all executors

16/06/08 18:28:08 INFO cluster.YarnClusterSchedulerBackend: Asking each executor to shut down

16/06/08 18:28:08 INFO yarn.ApplicationMaster$AMEndpoint: Driver terminated or disconnected! Shutting down. hadoop0004:59851

16/06/08 18:28:08 INFO yarn.ApplicationMaster$AMEndpoint: Driver terminated or disconnected! Shutting down. hadoop0006:56072

16/06/08 18:28:08 INFO spark.MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!

16/06/08 18:28:08 INFO storage.MemoryStore: MemoryStore cleared

16/06/08 18:28:08 INFO storage.BlockManager: BlockManager stopped

16/06/08 18:28:08 INFO storage.BlockManagerMaster: BlockManagerMaster stopped

16/06/08 18:28:08 INFO scheduler.OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!

16/06/08 18:28:08 INFO spark.SparkContext: Successfully stopped SparkContext

16/06/08 18:28:08 INFO yarn.ApplicationMaster: Unregistering ApplicationMaster with FAILED (diag message: User class threw exception: java.lang.RuntimeException: path /cem/results already exists.)

16/06/08 18:28:08 INFO remote.RemoteActorRefProvider$RemotingTerminator: Shutting down remote daemon.

16/06/08 18:28:08 INFO remote.RemoteActorRefProvider$RemotingTerminator: Remote daemon shut down; proceeding with flushing remote transports.

16/06/08 18:28:08 INFO impl.AMRMClientImpl: Waiting for application to be successfully unregistered.

16/06/08 18:28:08 INFO remote.RemoteActorRefProvider$RemotingTerminator: Remoting shut down.

16/06/08 18:28:08 INFO yarn.ApplicationMaster: Deleting staging directory .sparkStaging/application_1459834335246_0046

16/06/08 18:28:09 INFO util.Utils: Shutdown hook called

LogType:stdout

Log Upload Time:8-六月-2016 18:27:30

LogLength:1753

Log Contents:

+----------------+----------+--------------+-------------+-------------+

|businessblockmin|circuit_id|faultbreak_num|mainbreak_dur|mainbreak_num|

+----------------+----------+--------------+-------------+-------------+

| 0.0| 1753918| 0.0| 0.0| 0.0|

| 0.0| 43125269| 0.0| 0.0| 0.0|

| 0.0| 1723398| 0.0| 0.0| 0.0|

| 0.0| 37827246| 0.0| 0.0| 0.0|

| 0.0| 1685217| 0.0| 0.0| 0.0|

| 0.0| 1748618| 0.0| 0.0| 0.0|

| 0.0| 1676242| 0.0| 0.0| 0.0|

| 0.0| 1722308| 0.0| 0.0| 0.0|

| 0.0| 68128356| 0.0| 0.0| 0.0|

| 0.0| 1685024| 0.0| 0.0| 0.0|

| 0.0| 1818239| 0.0| 0.0| 0.0|

| 0.0| 1739621| 0.0| 0.0| 0.0|

| 0.0| 1684225| 0.0| 0.0| 0.0|

| 0.0| 7775571| 0.0| 0.0| 0.0|

| 0.0| 1733242| 0.0| 0.0| 0.0|

| 0.0| 1748803| 0.0| 0.0| 0.0|

| 0.0| 68128515| 0.0| 0.0| 0.0|

| 0.0| 7786947| 0.0| 0.0| 0.0|

| 0.0| 1740268| 0.0| 0.0| 0.0|

| 0.0| 43125269| 0.0| 0.0| 0.0|

+----------------+----------+--------------+-------------+-------------+
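The only real failure in this attempt is `java.lang.RuntimeException: path /cem/results already exists.`, thrown from `DataFrameWriter.save` at `Test.java:95`. Everything after it is just the ApplicationMaster shutting down. Spark's default save mode is `SaveMode.ErrorIfExists`, so writing to an output directory that is already there fails. That directory may have been left by an earlier run, and the log shows two AM attempts against the same path (`appattempt_1459834335246_0046_000001` and `..._000002`). In Spark 1.4 the usual fixes are to choose a mode explicitly, e.g. `df.write().mode(SaveMode.Overwrite).format("json").save("/cem/results")` (where `df` stands for whatever DataFrame the job writes), or to delete the directory first with Hadoop's `FileSystem.delete(path, true)`. The sketch below is only an illustration of those save-mode semantics on the local filesystem with `java.nio`. `OutputDirGuard`, `Mode` and `prepare` are hypothetical names, not Spark API, and the code does not touch HDFS.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Comparator;
import java.util.stream.Stream;

/**
 * Hypothetical helper that mimics a subset of Spark's SaveMode behaviour
 * for a local output directory (java.nio only, no HDFS, no Spark).
 */
final class OutputDirGuard {

    /** Assumed mirror of three Spark SaveMode values; Append is left out. */
    enum Mode { ERROR_IF_EXISTS, OVERWRITE, IGNORE }

    /**
     * Decides whether the caller may write to dir.
     * ERROR_IF_EXISTS: throw if dir exists (the behaviour behind "path ... already exists").
     * OVERWRITE: delete dir recursively, then allow the write.
     * IGNORE: skip the write (return false) if dir exists.
     */
    static boolean prepare(Path dir, Mode mode) {
        if (!Files.exists(dir)) {
            return true; // nothing in the way, any mode may write
        }
        switch (mode) {
            case ERROR_IF_EXISTS:
                throw new IllegalStateException("path " + dir + " already exists.");
            case IGNORE:
                return false;
            case OVERWRITE:
                deleteRecursively(dir);
                return true;
            default:
                throw new AssertionError("unhandled mode " + mode);
        }
    }

    /** Deletes a directory tree, children before parents. */
    private static void deleteRecursively(Path dir) {
        try (Stream<Path> paths = Files.walk(dir)) {
            // Reverse natural order puts "a/b/c" before "a/b" before "a".
            paths.sorted(Comparator.reverseOrder()).forEach(p -> {
                try {
                    Files.delete(p);
                } catch (IOException e) {
                    throw new UncheckedIOException(e);
                }
            });
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

Overwrite removes the whole directory before writing, so it is only safe when nothing else reads `/cem/results` while the job runs. Ignore silently skips the write, which can leave a stale result in place without any error in the log.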

Container: container_1459834335246_0046_01_000001 on hadoop0008_48786

=======================================================================

LogType:stderr

Log Upload Time:8-六月-2016 18:27:30

LogLength:21708

Log Contents:

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/hadoop/hadoop-2.6.0/nmdata/local/filecache/10/spark-assembly-1.4.1-hadoop2.6.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/hadoop/hadoop-2.6.0/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

16/06/08 18:27:19 INFO yarn.ApplicationMaster: Registered signal handlers for [TERM, HUP, INT]

16/06/08 18:27:19 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

16/06/08 18:27:19 INFO yarn.ApplicationMaster: ApplicationAttemptId: appattempt_1459834335246_0046_000001

16/06/08 18:27:20 INFO spark.SecurityManager: Changing view acls to: hadoop

16/06/08 18:27:20 INFO spark.SecurityManager: Changing modify acls to: hadoop

16/06/08 18:27:20 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(hadoop); users with modify permissions: Set(hadoop)

16/06/08 18:27:20 INFO yarn.ApplicationMaster: Starting the user application in a separate Thread

16/06/08 18:27:20 INFO yarn.ApplicationMaster: Waiting for spark context initialization

16/06/08 18:27:20 INFO yarn.ApplicationMaster: Waiting for spark context initialization ...

16/06/08 18:27:20 INFO spark.SparkContext: Running Spark version 1.4.1

16/06/08 18:27:20 INFO spark.SecurityManager: Changing view acls to: hadoop

16/06/08 18:27:20 INFO spark.SecurityManager: Changing modify acls to: hadoop

16/06/08 18:27:20 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(hadoop); users with modify permissions: Set(hadoop)

16/06/08 18:27:21 INFO slf4j.Slf4jLogger: Slf4jLogger started

16/06/08 18:27:21 INFO Remoting: Starting remoting

16/06/08 18:27:21 INFO Remoting: Remoting started; listening on addresses :[akka.tcp://sparkDriver@172.16.0.8:35307]

16/06/08 18:27:21 INFO util.Utils: Successfully started service 'sparkDriver' on port 35307.

16/06/08 18:27:21 INFO spark.SparkEnv: Registering MapOutputTracker

16/06/08 18:27:21 INFO spark.SparkEnv: Registering BlockManagerMaster

16/06/08 18:27:21 INFO storage.DiskBlockManager: Created local directory at /home/hadoop/hadoop-2.6.0/nmdata/local/usercache/hadoop/appcache/application_1459834335246_0046/blockmgr-bc1135dc-6cad-441d-a88e-4da1af10d841

16/06/08 18:27:21 INFO storage.MemoryStore: MemoryStore started with capacity 983.1 MB

16/06/08 18:27:21 INFO spark.HttpFileServer: HTTP File server directory is /home/hadoop/hadoop-2.6.0/nmdata/local/usercache/hadoop/appcache/application_1459834335246_0046/httpd-37766ea9-becc-4c18-b85b-aaf5afc3429d

16/06/08 18:27:21 INFO spark.HttpServer: Starting HTTP Server

16/06/08 18:27:21 INFO server.Server: jetty-8.y.z-SNAPSHOT

16/06/08 18:27:21 INFO server.AbstractConnector: Started SocketConnector@0.0.0.0:33301

16/06/08 18:27:21 INFO util.Utils: Successfully started service 'HTTP file server' on port 33301.

16/06/08 18:27:21 INFO spark.SparkEnv: Registering OutputCommitCoordinator

16/06/08 18:27:21 INFO ui.JettyUtils: Adding filter: org.apache.hadoop.yarn.server.webproxy.amfilter.AmIpFilter

16/06/08 18:27:21 INFO server.Server: jetty-8.y.z-SNAPSHOT

16/06/08 18:27:21 INFO server.AbstractConnector: Started SelectChannelConnector@0.0.0.0:32815

16/06/08 18:27:21 INFO util.Utils: Successfully started service 'SparkUI' on port 32815.

16/06/08 18:27:21 INFO ui.SparkUI: Started SparkUI at http://172.16.0.8:32815

16/06/08 18:27:21 INFO cluster.YarnClusterScheduler: Created YarnClusterScheduler

16/06/08 18:27:21 INFO cluster.YarnClusterScheduler: Starting speculative execution thread

16/06/08 18:27:22 INFO util.Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 40977.

16/06/08 18:27:22 INFO netty.NettyBlockTransferService: Server created on 40977

16/06/08 18:27:22 INFO storage.BlockManagerMaster: Trying to register BlockManager

16/06/08 18:27:22 INFO storage.BlockManagerMasterEndpoint: Registering block manager 172.16.0.8:40977 with 983.1 MB RAM, BlockManagerId(driver, 172.16.0.8, 40977)

16/06/08 18:27:22 INFO storage.BlockManagerMaster: Registered BlockManager

16/06/08 18:27:24 INFO scheduler.EventLoggingListener: Logging events to hdfs://mycluster/user/hadoop/spark-container/application_1459834335246_0046_1.snappy

16/06/08 18:27:24 INFO cluster.YarnSchedulerBackend$YarnSchedulerEndpoint: ApplicationMaster registered as AkkaRpcEndpointRef(Actor[akka://sparkDriver/user/YarnAM#-1056523566])

16/06/08 18:27:24 INFO yarn.YarnRMClient: Registering the ApplicationMaster

16/06/08 18:27:26 INFO client.ConfiguredRMFailoverProxyProvider: Failing over to rm2

16/06/08 18:27:26 INFO yarn.YarnAllocator: Will request 2 executor containers, each with 1 cores and 1408 MB memory including 384 MB overhead

16/06/08 18:27:26 INFO yarn.YarnAllocator: Container request (host: Any, capability: <memory:1408, vCores:1>)

16/06/08 18:27:26 INFO yarn.YarnAllocator: Container request (host: Any, capability: <memory:1408, vCores:1>)

16/06/08 18:27:26 INFO yarn.ApplicationMaster: Started progress reporter thread - sleep time : 5000

16/06/08 18:27:31 INFO impl.AMRMClientImpl: Received new token for : hadoop0006:54193

16/06/08 18:27:31 INFO impl.AMRMClientImpl: Received new token for : hadoop0004:59686

16/06/08 18:27:31 INFO yarn.YarnAllocator: Launching container container_1459834335246_0046_01_000002 for on host hadoop0006

16/06/08 18:27:31 INFO yarn.YarnAllocator: Launching ExecutorRunnable. driverUrl: akka.tcp://sparkDriver@172.16.0.8:35307/user/CoarseGrainedScheduler, executorHostname: hadoop0006

16/06/08 18:27:31 INFO yarn.YarnAllocator: Launching container container_1459834335246_0046_01_000003 for on host hadoop0004

16/06/08 18:27:31 INFO yarn.YarnAllocator: Launching ExecutorRunnable. driverUrl: akka.tcp://sparkDriver@172.16.0.8:35307/user/CoarseGrainedScheduler, executorHostname: hadoop0004

16/06/08 18:27:31 INFO yarn.ExecutorRunnable: Starting Executor Container

16/06/08 18:27:31 INFO yarn.ExecutorRunnable: Starting Executor Container

16/06/08 18:27:31 INFO yarn.YarnAllocator: Received 2 containers from YARN, launching executors on 2 of them.

16/06/08 18:27:31 INFO impl.ContainerManagementProtocolProxy: yarn.client.max-cached-nodemanagers-proxies : 0

16/06/08 18:27:31 INFO impl.ContainerManagementProtocolProxy: yarn.client.max-cached-nodemanagers-proxies : 0

16/06/08 18:27:31 INFO yarn.ExecutorRunnable: Setting up ContainerLaunchContext

16/06/08 18:27:31 INFO yarn.ExecutorRunnable: Setting up ContainerLaunchContext

16/06/08 18:27:31 INFO yarn.ExecutorRunnable: Preparing Local resources

16/06/08 18:27:31 INFO yarn.ExecutorRunnable: Preparing Local resources

16/06/08 18:27:32 INFO yarn.ExecutorRunnable: Prepared Local resources Map(__app__.jar -> resource { scheme: "hdfs" host: "mycluster" port: -1 file: "/user/hadoop/.sparkStaging/application_1459834335246_0046/cem_exp_plugin-jar-with-dependencies.jar" } size: 105442982 timestamp: 1465381587242 type: FILE visibility: PRIVATE, __spark__.jar -> resource { scheme: "hdfs" host: "mycluster" port: -1 file: "/user/hadoop/spark/lib/spark-assembly-1.4.1-hadoop2.6.0.jar" } size: 162976273 timestamp: 1459824120339 type: FILE visibility: PUBLIC)

16/06/08 18:27:32 INFO yarn.ExecutorRunnable: Prepared Local resources Map(__app__.jar -> resource { scheme: "hdfs" host: "mycluster" port: -1 file: "/user/hadoop/.sparkStaging/application_1459834335246_0046/cem_exp_plugin-jar-with-dependencies.jar" } size: 105442982 timestamp: 1465381587242 type: FILE visibility: PRIVATE, __spark__.jar -> resource { scheme: "hdfs" host: "mycluster" port: -1 file: "/user/hadoop/spark/lib/spark-assembly-1.4.1-hadoop2.6.0.jar" } size: 162976273 timestamp: 1459824120339 type: FILE visibility: PUBLIC)

16/06/08 18:27:32 INFO yarn.ExecutorRunnable: Setting up executor with environment: Map(CLASSPATH -> {{PWD}}<CPS>{{PWD}}/__spark__.jar<CPS>$HADOOP_CONF_DIR<CPS>$HADOOP_COMMON_HOME/share/hadoop/common/*<CPS>$HADOOP_COMMON_HOME/share/hadoop/common/lib/*<CPS>$HADOOP_HDFS_HOME/share/hadoop/hdfs/*<CPS>$HADOOP_HDFS_HOME/share/hadoop/hdfs/lib/*<CPS>$HADOOP_YARN_HOME/share/hadoop/yarn/*<CPS>$HADOOP_YARN_HOME/share/hadoop/yarn/lib/*<CPS>$HADOOP_MAPRED_HOME/share/hadoop/mapreduce/*<CPS>$HADOOP_MAPRED_HOME/share/hadoop/mapreduce/lib/*, SPARK_LOG_URL_STDERR -> http://hadoop0006:8042/node/containerlogs/container_1459834335246_0046_01_000002/hadoop/stderr?start=0, SPARK_YARN_STAGING_DIR -> .sparkStaging/application_1459834335246_0046, SPARK_YARN_CACHE_FILES_FILE_SIZES -> 162976273,105442982, SPARK_USER -> hadoop, SPARK_YARN_CACHE_FILES_VISIBILITIES -> PUBLIC,PRIVATE, SPARK_YARN_MODE -> true, SPARK_YARN_CACHE_FILES_TIME_STAMPS -> 1459824120339,1465381587242, SPARK_LOG_URL_STDOUT -> http://hadoop0006:8042/node/containerlogs/container_1459834335246_0046_01_000002/hadoop/stdout?start=0, SPARK_YARN_CACHE_FILES -> hdfs://mycluster/user/hadoop/spark/lib/spark-assembly-1.4.1-hadoop2.6.0.jar#__spark__.jar,hdfs://mycluster/user/hadoop/.sparkStaging/application_1459834335246_0046/cem_exp_plugin-jar-with-dependencies.jar#__app__.jar)

16/06/08 18:27:32 INFO yarn.ExecutorRunnable: Setting up executor with environment: Map(CLASSPATH -> {{PWD}}<CPS>{{PWD}}/__spark__.jar<CPS>$HADOOP_CONF_DIR<CPS>$HADOOP_COMMON_HOME/share/hadoop/common/*<CPS>$HADOOP_COMMON_HOME/share/hadoop/common/lib/*<CPS>$HADOOP_HDFS_HOME/share/hadoop/hdfs/*<CPS>$HADOOP_HDFS_HOME/share/hadoop/hdfs/lib/*<CPS>$HADOOP_YARN_HOME/share/hadoop/yarn/*<CPS>$HADOOP_YARN_HOME/share/hadoop/yarn/lib/*<CPS>$HADOOP_MAPRED_HOME/share/hadoop/mapreduce/*<CPS>$HADOOP_MAPRED_HOME/share/hadoop/mapreduce/lib/*, SPARK_LOG_URL_STDERR -> http://hadoop0004:8042/node/containerlogs/container_1459834335246_0046_01_000003/hadoop/stderr?start=0, SPARK_YARN_STAGING_DIR -> .sparkStaging/application_1459834335246_0046, SPARK_YARN_CACHE_FILES_FILE_SIZES -> 162976273,105442982, SPARK_USER -> hadoop, SPARK_YARN_CACHE_FILES_VISIBILITIES -> PUBLIC,PRIVATE, SPARK_YARN_MODE -> true, SPARK_YARN_CACHE_FILES_TIME_STAMPS -> 1459824120339,1465381587242, SPARK_LOG_URL_STDOUT -> http://hadoop0004:8042/node/containerlogs/container_1459834335246_0046_01_000003/hadoop/stdout?start=0, SPARK_YARN_CACHE_FILES -> hdfs://mycluster/user/hadoop/spark/lib/spark-assembly-1.4.1-hadoop2.6.0.jar#__spark__.jar,hdfs://mycluster/user/hadoop/.sparkStaging/application_1459834335246_0046/cem_exp_plugin-jar-with-dependencies.jar#__app__.jar)

16/06/08 18:27:32 INFO yarn.ExecutorRunnable: Setting up executor with commands: List({{JAVA_HOME}}/bin/java, -server, -XX:OnOutOfMemoryError='kill %p', -Xms1024m, -Xmx1024m, -Djava.io.tmpdir={{PWD}}/tmp, '-Dspark.driver.port=35307', '-Dspark.ui.port=0', -Dspark.yarn.app.container.log.dir=<LOG_DIR>, org.apache.spark.executor.CoarseGrainedExecutorBackend, --driver-url, akka.tcp://sparkDriver@172.16.0.8:35307/user/CoarseGrainedScheduler, --executor-id, 1, --hostname, hadoop0006, --cores, 1, --app-id, application_1459834335246_0046, --user-class-path, file:$PWD/__app__.jar, 1>, <LOG_DIR>/stdout, 2>, <LOG_DIR>/stderr)

16/06/08 18:27:32 INFO yarn.ExecutorRunnable: Setting up executor with commands: List({{JAVA_HOME}}/bin/java, -server, -XX:OnOutOfMemoryError='kill %p', -Xms1024m, -Xmx1024m, -Djava.io.tmpdir={{PWD}}/tmp, '-Dspark.driver.port=35307', '-Dspark.ui.port=0', -Dspark.yarn.app.container.log.dir=<LOG_DIR>, org.apache.spark.executor.CoarseGrainedExecutorBackend, --driver-url, akka.tcp://sparkDriver@172.16.0.8:35307/user/CoarseGrainedScheduler, --executor-id, 2, --hostname, hadoop0004, --cores, 1, --app-id, application_1459834335246_0046, --user-class-path, file:$PWD/__app__.jar, 1>, <LOG_DIR>/stdout, 2>, <LOG_DIR>/stderr)

16/06/08 18:27:32 INFO impl.ContainerManagementProtocolProxy: Opening proxy : hadoop0006:54193

16/06/08 18:27:32 INFO impl.ContainerManagementProtocolProxy: Opening proxy : hadoop0004:59686

16/06/08 18:27:39 INFO yarn.ApplicationMaster$AMEndpoint: Driver terminated or disconnected! Shutting down. hadoop0006:56187

16/06/08 18:27:40 INFO cluster.YarnClusterSchedulerBackend: Registered executor: AkkaRpcEndpointRef(Actor[akka.tcp://sparkExecutor@hadoop0006:34841/user/Executor#1222655465]) with ID 1

16/06/08 18:27:40 INFO storage.BlockManagerMasterEndpoint: Registering block manager hadoop0006:59977 with 530.3 MB RAM, BlockManagerId(1, hadoop0006, 59977)

16/06/08 18:27:40 INFO yarn.ApplicationMaster$AMEndpoint: Driver terminated or disconnected! Shutting down. hadoop0004:38468

16/06/08 18:27:41 INFO cluster.YarnClusterSchedulerBackend: Registered executor: AkkaRpcEndpointRef(Actor[akka.tcp://sparkExecutor@hadoop0004:54890/user/Executor#321092120]) with ID 2

16/06/08 18:27:41 INFO cluster.YarnClusterSchedulerBackend: SchedulerBackend is ready for scheduling beginning after reached minRegisteredResourcesRatio: 0.8
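The backend only starts scheduling once enough of the requested executors have registered. The sketch below models that readiness test. It assumes the test is `registered >= expected × spark.scheduler.minRegisteredResourcesRatio`, with the ratio at 0.8 as reported in the log line. The names are hypothetical. The real scheduler also stops waiting after `spark.scheduler.maxRegisteredResourcesWaitingTime` even if the ratio is not reached.

```java
// Hypothetical model of the "sufficient resources registered" gate.
// ASSUMPTION: readiness means registered >= expected * minRatio.
class RegistrationGate {
    /** True once the number of registered executors reaches expected * minRatio. */
    static boolean ready(int registered, int expected, double minRatio) {
        return registered >= expected * minRatio;
    }

    public static void main(String[] args) {
        int expected = 2;   // "Will request 2 executor containers"
        double ratio = 0.8; // minRegisteredResourcesRatio from the log
        for (int registered = 1; registered <= expected; registered++) {
            System.out.println(registered + " of " + expected + " registered -> ready: "
                    + ready(registered, expected, ratio));
        }
    }
}
```

With two expected executors the threshold is 1.6. The backend therefore reports ready only after executor 2 registers (18:27:41), not after executor 1 (18:27:40), which is the order seen in the log.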

16/06/08 18:27:41 INFO cluster.YarnClusterScheduler: YarnClusterScheduler.postStartHook done

16/06/08 18:27:41 INFO storage.BlockManagerMasterEndpoint: Registering block manager hadoop0004:50555 with 530.3 MB RAM, BlockManagerId(2, hadoop0004, 50555)

16/06/08 18:27:41 INFO storage.MemoryStore: ensureFreeSpace(247280) called with curMem=0, maxMem=1030823608

16/06/08 18:27:41 INFO storage.MemoryStore: Block broadcast_0 stored as values in memory (estimated size 241.5 KB, free 982.8 MB)

16/06/08 18:27:41 INFO storage.MemoryStore: ensureFreeSpace(21526) called with curMem=247280, maxMem=1030823608

16/06/08 18:27:41 INFO storage.MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 21.0 KB, free 982.8 MB)

16/06/08 18:27:41 INFO storage.BlockManagerInfo: Added broadcast_0_piece0 in memory on 172.16.0.8:40977 (size: 21.0 KB, free: 983.0 MB)

16/06/08 18:27:41 INFO spark.SparkContext: Created broadcast 0 from textFile at Test.java:102

16/06/08 18:27:42 INFO lzo.GPLNativeCodeLoader: Loaded native gpl library from the embedded binaries

16/06/08 18:27:42 INFO lzo.LzoCodec: Successfully loaded & initialized native-lzo library [hadoop-lzo rev 826e7d8d3e839964dd9ed2d5f83296254b2c71d3]

16/06/08 18:27:42 INFO mapred.FileInputFormat: Total input paths to process : 4

16/06/08 18:27:42 INFO spark.SparkContext: Starting job: show at Test.java:93

16/06/08 18:27:43 INFO scheduler.DAGScheduler: Got job 0 (show at Test.java:93) with 1 output partitions (allowLocal=false)

16/06/08 18:27:43 INFO scheduler.DAGScheduler: Final stage: ResultStage 0(show at Test.java:93)

16/06/08 18:27:43 INFO scheduler.DAGScheduler: Parents of final stage: List()

16/06/08 18:27:43 INFO scheduler.DAGScheduler: Missing parents: List()

16/06/08 18:27:43 INFO scheduler.DAGScheduler: Submitting ResultStage 0 (MapPartitionsRDD[4] at show at Test.java:93), which has no missing parents

16/06/08 18:27:43 INFO storage.MemoryStore: ensureFreeSpace(5024) called with curMem=268806, maxMem=1030823608

16/06/08 18:27:43 INFO storage.MemoryStore: Block broadcast_1 stored as values in memory (estimated size 4.9 KB, free 982.8 MB)

16/06/08 18:27:43 INFO storage.MemoryStore: ensureFreeSpace(2864) called with curMem=273830, maxMem=1030823608

16/06/08 18:27:43 INFO storage.MemoryStore: Block broadcast_1_piece0 stored as bytes in memory (estimated size 2.8 KB, free 982.8 MB)

16/06/08 18:27:43 INFO storage.BlockManagerInfo: Added broadcast_1_piece0 in memory on 172.16.0.8:40977 (size: 2.8 KB, free: 983.0 MB)

16/06/08 18:27:43 INFO spark.SparkContext: Created broadcast 1 from broadcast at DAGScheduler.scala:874

16/06/08 18:27:43 INFO scheduler.DAGScheduler: Submitting 1 missing tasks from ResultStage 0 (MapPartitionsRDD[4] at show at Test.java:93)

16/06/08 18:27:43 INFO cluster.YarnClusterScheduler: Adding task set 0.0 with 1 tasks

16/06/08 18:27:43 INFO scheduler.TaskSetManager: Starting task 0.0 in stage 0.0 (TID 0, hadoop0006, NODE_LOCAL, 1439 bytes)

16/06/08 18:27:43 INFO storage.BlockManagerInfo: Added broadcast_1_piece0 in memory on hadoop0006:59977 (size: 2.8 KB, free: 530.3 MB)

16/06/08 18:27:44 INFO storage.BlockManagerInfo: Added broadcast_0_piece0 in memory on hadoop0006:59977 (size: 21.0 KB, free: 530.3 MB)

16/06/08 18:27:45 INFO scheduler.TaskSetManager: Finished task 0.0 in stage 0.0 (TID 0) in 2484 ms on hadoop0006 (1/1)

16/06/08 18:27:45 INFO cluster.YarnClusterScheduler: Removed TaskSet 0.0, whose tasks have all completed, from pool

16/06/08 18:27:45 INFO scheduler.DAGScheduler: ResultStage 0 (show at Test.java:93) finished in 2.489 s

16/06/08 18:27:45 INFO scheduler.DAGScheduler: Job 0 finished: show at Test.java:93, took 2.582450 s

16/06/08 18:27:45 ERROR yarn.ApplicationMaster: User class threw exception: java.lang.RuntimeException: path /cem/results already exists.

java.lang.RuntimeException: path /cem/results already exists.

at scala.sys.package$.error(package.scala:27)

at org.apache.spark.sql.json.DefaultSource.createRelation(JSONRelation.scala:91)

at org.apache.spark.sql.sources.ResolvedDataSource$.apply(ddl.scala:309)

at org.apache.spark.sql.DataFrameWriter.save(DataFrameWriter.scala:144)

at org.apache.spark.sql.DataFrameWriter.save(DataFrameWriter.scala:135)

at com.ctsi.cem.Test.main(Test.java:95)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:606)

at org.apache.spark.deploy.yarn.ApplicationMaster$$anon$2.run(ApplicationMaster.scala:483)

16/06/08 18:27:45 INFO yarn.ApplicationMaster: Final app status: FAILED, exitCode: 15, (reason: User class threw exception: java.lang.RuntimeException: path /cem/results already exists.)
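The computation itself succeeded: the `show` job finished. The application failed only at the write in `Test.java:95`. The JSON data source refuses to write into `/cem/results`, which already exists, presumably from an earlier run, because the writer's default save mode is `SaveMode.ErrorIfExists`. In Spark 1.4 there are two usual fixes. You can delete the directory before resubmitting. Alternatively, you can set a different mode on the `DataFrameWriter`, for example adding `.mode(SaveMode.Overwrite)` before `.save(...)`.

The stdlib-only sketch below models that existence check against a local directory so it runs without a cluster. The `Mode` enum and `prepareOutput` method are hypothetical stand-ins, not Spark's API. `Append` is deliberately left out, since its handling depends on the data source.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Comparator;
import java.util.stream.Stream;

// Hypothetical local-filesystem model of a save-mode check; NOT Spark's API.
class SaveModeSketch {
    enum Mode { ERROR_IF_EXISTS, OVERWRITE, IGNORE }

    /** Returns true if the caller should write into outputDir, false to skip. */
    static boolean prepareOutput(Path outputDir, Mode mode) throws IOException {
        if (!Files.exists(outputDir)) {
            return true; // nothing in the way, write normally
        }
        switch (mode) {
            case ERROR_IF_EXISTS:
                // Same message shape as the log: "path ... already exists."
                throw new IllegalStateException("path " + outputDir + " already exists.");
            case OVERWRITE:
                deleteRecursively(outputDir); // clear old output, then write
                return true;
            case IGNORE:
                return false; // keep old output, silently skip the write
            default:
                throw new AssertionError(mode);
        }
    }

    /** Deletes a directory tree, children before parents. */
    static void deleteRecursively(Path root) throws IOException {
        try (Stream<Path> walk = Files.walk(root)) {
            walk.sorted(Comparator.reverseOrder()).forEach(p -> {
                try {
                    Files.delete(p);
                } catch (IOException e) {
                    throw new UncheckedIOException(e);
                }
            });
        }
    }

    public static void main(String[] args) throws IOException {
        Path out = Files.createTempDirectory("results");
        Files.write(out.resolve("part-00000"), "{}".getBytes());
        try {
            prepareOutput(out, Mode.ERROR_IF_EXISTS);
        } catch (IllegalStateException e) {
            System.out.println("ErrorIfExists: " + e.getMessage());
        }
        boolean proceed = prepareOutput(out, Mode.OVERWRITE);
        System.out.println("Overwrite proceeds: " + proceed
                + ", old output removed: " + !Files.exists(out));
    }
}
```

Use `Overwrite` with care on shared paths: it removes whatever is already under the target directory before writing.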

16/06/08 18:27:45 INFO spark.SparkContext: Invoking stop() from shutdown hook

16/06/08 18:27:45 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/metrics/json,null}

16/06/08 18:27:45 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/stages/stage/kill,null}

16/06/08 18:27:45 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/api,null}

16/06/08 18:27:45 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/,null}

16/06/08 18:27:45 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/static,null}

16/06/08 18:27:45 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/executors/threadDump/json,null}

16/06/08 18:27:45 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/executors/threadDump,null}

16/06/08 18:27:45 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/executors/json,null}

16/06/08 18:27:45 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/executors,null}

16/06/08 18:27:45 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/environment/json,null}

16/06/08 18:27:45 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/environment,null}