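Preparation

1. Compile FFmpeg

Download the latest version of FFmpeg. For the detailed build steps, see the article on porting FFmpeg to Android (compilation part).

Readers who are not yet familiar with FFmpeg may want to read Lei Xiaohua's (雷霄骅) FFmpeg blog column first.

2. Development environment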

Windows 10

Android Studio 1.4

android-ndk-r10d

FFmpeg 3.0

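The environment setup is not covered in detail here; see the article on configuring Android Studio with the NDK.

Creating the audioplayer project

This article covers audio playback only. For video playback, see the article on decoding and playing video with Android, FFmpeg and ANativeWindow.

1. Create the Android Studio project

This example plays an audio file read directly from the SD card and also needs the audio permissions, so add the following permissions to AndroidManifest.xml: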

<uses-permission android:name="android.permission.READ_EXTERNAL_STORAGE" />

<uses-permission android:name="android.permission.MODIFY_AUDIO_SETTINGS" />

<uses-permission android:name="android.permission.RECORD_AUDIO" />

This example uses two buttons, play and stop:

<Button

android:id="@+id/play"

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:text="play"/>

<Button

android:id="@+id/stop"

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:text="stop"/>

Implement the two buttons in MainActivity.java:

@Override

protected void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

setContentView(R.layout.activity_main);

... ...

Button playBtn = (Button) findViewById(R.id.play);

Button stopBtn = (Button) findViewById(R.id.stop);

playBtn.setOnClickListener(this);

stopBtn.setOnClickListener(this);

}

@Override

public void onClick(View v) {

switch (v.getId()) {

case R.id.play :

new Thread(new Runnable() {

@Override

public void run() {

AudioPlayer.play();

}

}).start();

break;

case R.id.stop :

new Thread(new Runnable() {

@Override

public void run() {

AudioPlayer.stop();

}

}).start();

break;

}

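}

Audio playback is likewise a time-consuming operation, so it runs on a new thread to avoid blocking the UI thread. The AudioPlayer class is implemented as follows: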

public class AudioPlayer {

public static native int play();

public static native int stop();

static {

System.loadLibrary("AudioPlayer");

}

}

The AudioPlayer class loads the shared library and declares play and stop as native methods. The javah command can now be used to generate the header file jonesx_audioplayer_AudioPlayer.h.
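The header name, and the names of the C functions it declares, follow from JNI's symbol naming rules: the prefix Java_, then the fully qualified class name with each '.' replaced by '_', then '_' and the method name. As a rough illustration only, the sketch below rebuilds such a name in plain C. The helper jni_symbol_name is hypothetical (it is not part of the JNI API), and it handles only the '.', '/' and '_' escapes, not overloaded methods or non-ASCII characters.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical helper: build the JNI symbol name for a non-overloaded
 * native method. '.' and '/' become '_', and a literal '_' becomes "_1".
 * Returns 0 on success, -1 if the output buffer is too small. */
int jni_symbol_name(const char *class_name, const char *method,
                    char *out, size_t out_size)
{
    const char *parts[2];
    const char *c;
    size_t pos;
    int n, p;

    assert(class_name != NULL && method != NULL && out != NULL);

    parts[0] = class_name;
    parts[1] = method;

    n = snprintf(out, out_size, "Java_");
    if (n < 0 || (size_t)n >= out_size)
        return -1;
    pos = (size_t)n;

    for (p = 0; p < 2; p++) {
        if (p == 1) {
            /* separator between the class name and the method name */
            if (pos + 1 >= out_size)
                return -1;
            out[pos++] = '_';
        }
        for (c = parts[p]; *c != '\0'; c++) {
            if (*c == '.' || *c == '/') {
                if (pos + 1 >= out_size)
                    return -1;
                out[pos++] = '_';
            } else if (*c == '_') {
                /* an underscore in the Java name is escaped as "_1" */
                if (pos + 2 >= out_size)
                    return -1;
                out[pos++] = '_';
                out[pos++] = '1';
            } else {
                if (pos + 1 >= out_size)
                    return -1;
                out[pos++] = *c;
            }
        }
    }
    out[pos] = '\0';
    return 0;
}
```

Called with the class name jonesx.audioplayer.AudioPlayer and the method name play, the helper yields Java_jonesx_audioplayer_AudioPlayer_play, which is the function name used in AudioPlayer.c.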

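2. Implement decoding and playback

First, following the article on porting FFmpeg to Android (compilation part), create a jni directory and copy the include and prebuilt folders from the compiled FFmpeg directory into it.

AudioPlayer.c bridges the JNI calls to the audio decoding and playback code: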

#include "log.h"

#include "jonesx_audioplayer_AudioPlayer.h"

#include "AudioDevice.h"

JNIEXPORT jint JNICALL Java_jonesx_audioplayer_AudioPlayer_play

(JNIEnv * env, jclass clazz)

{

LOGD("play");

play();

return 0; // the function is declared to return jint

}

JNIEXPORT jint JNICALL Java_jonesx_audioplayer_AudioPlayer_stop

(JNIEnv * env, jclass clazz)

{

LOGD("stop");

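shutdown();

return 0; // the function is declared to return jint

}

The play and shutdown functions are implemented in AudioDevice.c, based mainly on the native_audio sample that ships with the NDK and on the article about playing PCM data with OpenSL ES on Android.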

#include "AudioDevice.h"

#include "FFmpegAudioPlay.h"

#include <assert.h>

#include <jni.h>

#include <string.h>

#include <SLES/OpenSLES.h>

#include <SLES/OpenSLES_Android.h>

// for native asset manager

#include <sys/types.h>

#include <android/asset_manager.h>

#include <android/asset_manager_jni.h>

#include "log.h"

// engine interfaces

static SLObjectItf engineObject = NULL;

static SLEngineItf engineEngine;

// output mix interfaces

static SLObjectItf outputMixObject = NULL;

static SLEnvironmentalReverbItf outputMixEnvironmentalReverb = NULL;

// buffer queue player interfaces

static SLObjectItf bqPlayerObject = NULL;

static SLPlayItf bqPlayerPlay;

static SLAndroidSimpleBufferQueueItf bqPlayerBufferQueue;

static SLEffectSendItf bqPlayerEffectSend;

static SLMuteSoloItf bqPlayerMuteSolo;

static SLVolumeItf bqPlayerVolume;

// aux effect on the output mix, used by the buffer queue player

static const SLEnvironmentalReverbSettings reverbSettings =

SL_I3DL2_ENVIRONMENT_PRESET_STONECORRIDOR;

static void *buffer;

static size_t bufferSize;

// this callback handler is called every time a buffer finishes playing

void bqPlayerCallback(SLAndroidSimpleBufferQueueItf bq, void *context)

{

LOGD("playerCallback");

assert(bq == bqPlayerBufferQueue);

bufferSize = 0;

//assert(NULL == context);

getPCM(&buffer, &bufferSize);

// for streaming playback, replace this test by logic to find and fill the next buffer

if (NULL != buffer && 0 != bufferSize) {

SLresult result;

// enqueue another buffer

result = (*bqPlayerBufferQueue)->Enqueue(bqPlayerBufferQueue, buffer,

bufferSize);

// the most likely other result is SL_RESULT_BUFFER_INSUFFICIENT,

// which for this code example would indicate a programming error

assert(SL_RESULT_SUCCESS == result);

LOGD("Enqueue:%d", result);

(void)result;

}

}

void createEngine()

{

LOGD("createEngine");

SLresult result;

// create engine

result = slCreateEngine(&engineObject, 0, NULL, 0, NULL, NULL);

LOGD("result:%d", result);

// realize the engine

result = (*engineObject)->Realize(engineObject, SL_BOOLEAN_FALSE);

LOGD("result:%d", result);

// get the engine interface, which is needed in order to create other objects

result = (*engineObject)->GetInterface(engineObject, SL_IID_ENGINE, &engineEngine);

LOGD("result:%d", result);

// create output mix; no optional interfaces are requested here (count 0), so ids and req below are unused

const SLInterfaceID ids[1] = {SL_IID_ENVIRONMENTALREVERB};

const SLboolean req[1] = {SL_BOOLEAN_FALSE};

result = (*engineEngine)->CreateOutputMix(engineEngine, &outputMixObject, 0, 0, 0);

LOGD("result:%d", result);

// realize the output mix

result = (*outputMixObject)->Realize(outputMixObject, SL_BOOLEAN_FALSE);

LOGD("result:%d", result);

// get the environmental reverb interface

// this could fail if the environmental reverb effect is not available,

// either because the feature is not present, excessive CPU load, or

// the required MODIFY_AUDIO_SETTINGS permission was not requested and granted

result = (*outputMixObject)->GetInterface(outputMixObject, SL_IID_ENVIRONMENTALREVERB,

&outputMixEnvironmentalReverb);

if (SL_RESULT_SUCCESS == result) {

result = (*outputMixEnvironmentalReverb)->SetEnvironmentalReverbProperties(

outputMixEnvironmentalReverb, &reverbSettings);

}

LOGD("result:%d", result);

}

// create buffer queue audio player

void createBufferQueueAudioPlayer(int rate, int channel, int bitsPerSample)

{

LOGD("createBufferQueueAudioPlayer");

SLresult result;

// configure audio source

SLDataLocator_AndroidSimpleBufferQueue loc_bufq = {SL_DATALOCATOR_ANDROIDSIMPLEBUFFERQUEUE, 2};

SLDataFormat_PCM format_pcm;

format_pcm.formatType = SL_DATAFORMAT_PCM;

format_pcm.numChannels = channel;

format_pcm.samplesPerSec = rate * 1000; // OpenSL ES expects the rate in milliHertz

format_pcm.bitsPerSample = bitsPerSample;

format_pcm.containerSize = 16;

if (channel == 2)

format_pcm.channelMask = SL_SPEAKER_FRONT_LEFT | SL_SPEAKER_FRONT_RIGHT;

else

format_pcm.channelMask = SL_SPEAKER_FRONT_CENTER;

format_pcm.endianness = SL_BYTEORDER_LITTLEENDIAN;

SLDataSource audioSrc = {&loc_bufq, &format_pcm};

// configure audio sink

SLDataLocator_OutputMix loc_outmix = {SL_DATALOCATOR_OUTPUTMIX, outputMixObject};

SLDataSink audioSnk = {&loc_outmix, NULL};

// create audio player

const SLInterfaceID ids[3] = {SL_IID_BUFFERQUEUE, SL_IID_EFFECTSEND,

/*SL_IID_MUTESOLO,*/ SL_IID_VOLUME};

const SLboolean req[3] = {SL_BOOLEAN_TRUE, SL_BOOLEAN_TRUE,

/*SL_BOOLEAN_TRUE,*/ SL_BOOLEAN_TRUE};

result = (*engineEngine)->CreateAudioPlayer(engineEngine, &bqPlayerObject, &audioSrc, &audioSnk,

3, ids, req);

assert(SL_RESULT_SUCCESS == result);

(void)result;

// realize the player

result = (*bqPlayerObject)->Realize(bqPlayerObject, SL_BOOLEAN_FALSE);

assert(SL_RESULT_SUCCESS == result);

(void)result;

// get the play interface

result = (*bqPlayerObject)->GetInterface(bqPlayerObject, SL_IID_PLAY, &bqPlayerPlay);

assert(SL_RESULT_SUCCESS == result);

(void)result;

// get the buffer queue interface

result = (*bqPlayerObject)->GetInterface(bqPlayerObject, SL_IID_BUFFERQUEUE,

&bqPlayerBufferQueue);

assert(SL_RESULT_SUCCESS == result);

(void)result;

// register callback on the buffer queue

result = (*bqPlayerBufferQueue)->RegisterCallback(bqPlayerBufferQueue, bqPlayerCallback, NULL);

assert(SL_RESULT_SUCCESS == result);

(void)result;

// get the effect send interface

result = (*bqPlayerObject)->GetInterface(bqPlayerObject, SL_IID_EFFECTSEND,

&bqPlayerEffectSend);

assert(SL_RESULT_SUCCESS == result);

(void)result;

// get the volume interface

result = (*bqPlayerObject)->GetInterface(bqPlayerObject, SL_IID_VOLUME, &bqPlayerVolume);

assert(SL_RESULT_SUCCESS == result);

(void)result;

// set the player's state to playing

result = (*bqPlayerPlay)->SetPlayState(bqPlayerPlay, SL_PLAYSTATE_PLAYING);

assert(SL_RESULT_SUCCESS == result);

(void)result;

}

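void play()

{

int rate, channel;

// create the FFmpeg audio decoder; stop here if the file cannot be opened,

// because rate and channel would otherwise be left uninitialized

if (createFFmpegAudioPlay(&rate, &channel) != 0) {

LOGE("createFFmpegAudioPlay failed");

return;

}

// create the playback engine

createEngine();

// create the buffer queue audio player

createBufferQueueAudioPlayer(rate, channel, SL_PCMSAMPLEFORMAT_FIXED_16);

// start playback by enqueueing the first buffer by hand

bqPlayerCallback(bqPlayerBufferQueue, NULL);

}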

// shut down the native audio system

void shutdown()

{

// destroy buffer queue audio player object, and invalidate all associated interfaces

if (bqPlayerObject != NULL) {

(*bqPlayerObject)->Destroy(bqPlayerObject);

bqPlayerObject = NULL;

bqPlayerPlay = NULL;

bqPlayerBufferQueue = NULL;

bqPlayerEffectSend = NULL;

bqPlayerMuteSolo = NULL;

bqPlayerVolume = NULL;

}

// destroy output mix object, and invalidate all associated interfaces

if (outputMixObject != NULL) {

(*outputMixObject)->Destroy(outputMixObject);

outputMixObject = NULL;

outputMixEnvironmentalReverb = NULL;

}

// destroy engine object, and invalidate all associated interfaces

if (engineObject != NULL) {

(*engineObject)->Destroy(engineObject);

engineObject = NULL;

engineEngine = NULL;

}

// release the resources held by the FFmpeg decoder

releaseFFmpegAudioPlay();

}

Next, the audio file is decoded with FFmpeg. The implementation is in FFmpegAudioPlay.c:

#include "log.h"

#include "FFmpegAudioPlay.h"

#include "libavcodec/avcodec.h"

#include "libavformat/avformat.h"

#include "libswscale/swscale.h"

#include "libswresample/swresample.h"

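#include "libavutil/samplefmt.h"

// av_opt_set_int and av_opt_set_sample_fmt are declared in opt.h

#include "libavutil/opt.h"

// malloc, realloc and free

#include <stdlib.h>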

#include <SLES/OpenSLES.h>

#include <SLES/OpenSLES_Android.h>

uint8_t *outputBuffer;

size_t outputBufferSize;

AVPacket packet;

int audioStream;

AVFrame *aFrame;

SwrContext *swr;

AVFormatContext *aFormatCtx;

AVCodecContext *aCodecCtx;

int createFFmpegAudioPlay(int *rate, int *channel) {

av_register_all();

aFormatCtx = avformat_alloc_context();

// local audio file (hard-coded path)

const char *file_name = "/sdcard/Video/00.mp3";

// Open audio file

if (avformat_open_input(&aFormatCtx, file_name, NULL, NULL) != 0) {

LOGE("Couldn't open file:%s\n", file_name);

return -1; // Couldn't open file

}

// Retrieve stream information

if (avformat_find_stream_info(aFormatCtx, NULL) < 0) {

LOGE("Couldn't find stream information.");

return -1;

}

// Find the first audio stream

int i;

audioStream = -1;

for (i = 0; i < aFormatCtx->nb_streams; i++) {

if (aFormatCtx->streams[i]->codec->codec_type == AVMEDIA_TYPE_AUDIO &&

audioStream < 0) {

audioStream = i;

}

}

if (audioStream == -1) {

LOGE("Couldn't find audio stream!");

return -1;

}

// Get a pointer to the codec context for the audio stream

aCodecCtx = aFormatCtx->streams[audioStream]->codec;

// Find the decoder for the audio stream

AVCodec *aCodec = avcodec_find_decoder(aCodecCtx->codec_id);

if (!aCodec) {

LOGE("Unsupported codec!");

return -1;

}

if (avcodec_open2(aCodecCtx, aCodec, NULL) < 0) {

LOGE("Could not open codec.");

return -1; // Could not open codec

}

aFrame = av_frame_alloc();

// set up the sample format conversion

swr = swr_alloc();

av_opt_set_int(swr, "in_channel_layout", aCodecCtx->channel_layout, 0);

av_opt_set_int(swr, "out_channel_layout", aCodecCtx->channel_layout, 0);

av_opt_set_int(swr, "in_sample_rate", aCodecCtx->sample_rate, 0);

av_opt_set_int(swr, "out_sample_rate", aCodecCtx->sample_rate, 0);

av_opt_set_sample_fmt(swr, "in_sample_fmt", aCodecCtx->sample_fmt, 0);

av_opt_set_sample_fmt(swr, "out_sample_fmt", AV_SAMPLE_FMT_S16, 0);

swr_init(swr);

// allocate the PCM data buffer

outputBufferSize = 8196;

outputBuffer = (uint8_t *) malloc(sizeof(uint8_t) * outputBufferSize);

// return the sample rate and channel count

*rate = aCodecCtx->sample_rate;

*channel = aCodecCtx->channels;

return 0;

}

// fetch the next block of PCM data; called from the buffer queue callback

int getPCM(void **pcm, size_t *pcmSize) {

LOGD("getPcm");

while (av_read_frame(aFormatCtx, &packet) >= 0) {

int frameFinished = 0;

// Is this a packet from the audio stream?

if (packet.stream_index == audioStream) {

avcodec_decode_audio4(aCodecCtx, aFrame, &frameFinished, &packet);

if (frameFinished) {

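// data_size is the size in bytes of the converted output (packed S16),

// not of the decoder's native sample format

int data_size = av_samples_get_buffer_size(

NULL, aCodecCtx->channels,

aFrame->nb_samples, AV_SAMPLE_FMT_S16, 1);

if (data_size < 0) {

av_packet_unref(&packet);

return -1;

}

// grow the output buffer if needed; keep the old pointer if realloc fails

if ((size_t) data_size > outputBufferSize) {

uint8_t *grown = (uint8_t *) realloc(outputBuffer, (size_t) data_size);

if (grown == NULL) {

av_packet_unref(&packet);

return -1;

}

outputBuffer = grown;

outputBufferSize = (size_t) data_size;

}

// convert the samples to packed S16

swr_convert(swr, &outputBuffer, aFrame->nb_samples,

(uint8_t const **) (aFrame->extended_data),

aFrame->nb_samples);

// the packet is no longer needed once the frame has been decoded

av_packet_unref(&packet);

// return the PCM data

*pcm = outputBuffer;

*pcmSize = data_size;

return 0;

}

}

// release packets that belong to other streams or produced no frame

av_packet_unref(&packet);

}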

return -1;

}

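// release all resources

int releaseFFmpegAudioPlay()

{

av_packet_unref(&packet);

// outputBuffer came from malloc/realloc, so release it with free, not av_free

free(outputBuffer);

outputBuffer = NULL;

av_frame_free(&aFrame);

swr_free(&swr);

avcodec_close(aCodecCtx);

avformat_close_input(&aFormatCtx);

return 0;

}

The audio conversion step is based on the question: How to convert sample rate from AV_SAMPLE_FMT_FLTP to AV_SAMPLE_FMT_S16?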
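Decoders such as FFmpeg's native MP3 and AAC decoders typically output planar float samples (AV_SAMPLE_FMT_FLTP), while the OpenSL ES player in this example is configured for interleaved 16-bit PCM, which is why the swr_convert step is needed. To make the idea concrete, here is a hand-written sketch of that conversion in plain C. It is not libswresample's implementation, which may round or dither differently, and both function names are made up for illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Convert one float sample to signed 16-bit, clipping to [-1.0, 1.0]. */
int16_t float_to_s16(float s)
{
    if (s > 1.0f)
        s = 1.0f;
    if (s < -1.0f)
        s = -1.0f;
    return (int16_t)(s * 32767.0f);
}

/* Interleave planar float channels into packed S16.
 * planes[c][i] is sample i of channel c.
 * out must hold nb_samples * channels entries.
 * Returns the number of bytes written to out. */
size_t planar_float_to_packed_s16(const float *const *planes, int channels,
                                  int nb_samples, int16_t *out)
{
    int i, c;

    assert(planes != NULL && out != NULL);
    assert(channels > 0 && nb_samples >= 0);

    for (i = 0; i < nb_samples; i++) {
        for (c = 0; c < channels; c++) {
            /* packed layout: L0 R0 L1 R1 ... for stereo */
            out[(size_t)i * channels + c] = float_to_s16(planes[c][i]);
        }
    }
    return (size_t)nb_samples * (size_t)channels * sizeof(int16_t);
}
```

Note that the output size depends only on the sample count, the channel count and the 2-byte output sample, not on the decoder's input format. A stereo frame of 1152 samples, for example, occupies 1152 * 2 * 2 = 4608 bytes after conversion.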

Now write the Android.mk file:

LOCAL_PATH := $(call my-dir)

include $(CLEAR_VARS)

LOCAL_MODULE := avcodec

LOCAL_SRC_FILES := prebuilt/libavcodec-57.so

include $(PREBUILT_SHARED_LIBRARY)

include $(CLEAR_VARS)

LOCAL_MODULE := avformat

LOCAL_SRC_FILES := prebuilt/libavformat-57.so

include $(PREBUILT_SHARED_LIBRARY)

include $(CLEAR_VARS)

LOCAL_MODULE := avutil

LOCAL_SRC_FILES := prebuilt/libavutil-55.so

include $(PREBUILT_SHARED_LIBRARY)

include $(CLEAR_VARS)

LOCAL_MODULE := swresample

LOCAL_SRC_FILES := prebuilt/libswresample-2.so

include $(PREBUILT_SHARED_LIBRARY)

include $(CLEAR_VARS)

LOCAL_MODULE := swscale

LOCAL_SRC_FILES := prebuilt/libswscale-4.so

include $(PREBUILT_SHARED_LIBRARY)

include $(CLEAR_VARS)

LOCAL_SRC_FILES := AudioPlayer.c FFmpegAudioPlay.c AudioDevice.c

LOCAL_LDLIBS += -llog -lz -landroid -lOpenSLES

LOCAL_MODULE := AudioPlayer

LOCAL_C_INCLUDES += $(LOCAL_PATH)/include

LOCAL_SHARED_LIBRARIES:= avcodec avformat avutil swresample swscale

include $(BUILD_SHARED_LIBRARY)

Again, because no functions from the device and filter libraries are used, those two shared libraries are not linked.

Application.mk文件如下:

APP_ABI := armeabi

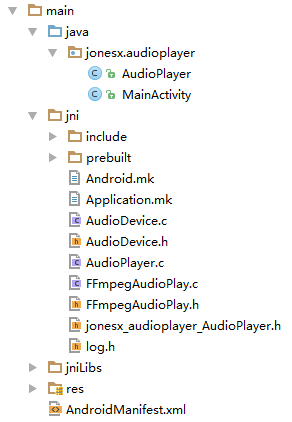

APP_PLATFORM := android-9经过ndk-build之后,整个工程的主要目录结构如下图所示:

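3. Running the app

As before, one more line must be added to build.gradle before the project will run. It stops Gradle from trying to compile the jni sources itself, so that the libraries produced by ndk-build are used: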

android {

... ...

sourceSets.main.jni.srcDirs = []

... ...

}

Because this example plays audio, there is no result that can be shown here.
