Reading Data in Batches

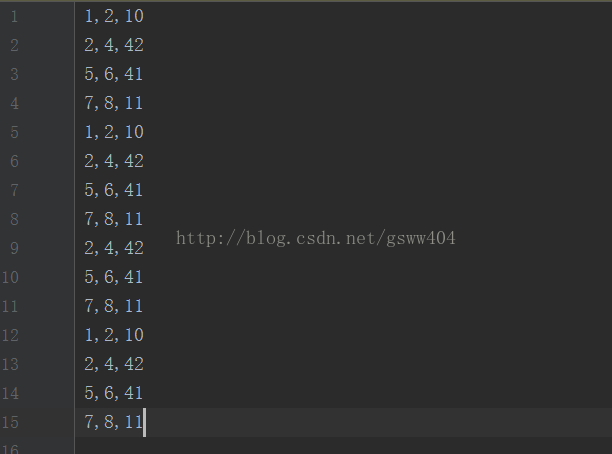

Data template:

Source code

-------------------------------------------------

import tensorflow as tf
import numpy as np


def readMyFileFormat(fileNameQueue):
    # Read one line of text at a time from the files in the queue.
    reader = tf.TextLineReader()
    key, value = reader.read(fileNameQueue)
    # Three float columns; 1.0 is used when a field is empty.
    record_defaults = [[1.0], [1.0], [1.0]]
    x1, x2, x3 = tf.decode_csv(value, record_defaults=record_defaults)
    # The first two columns are the features, the third is the label.
    features = tf.stack([x1, x2])
    label = x3
    return features, label


def inputPipeLine(fileNames=["Test.csv"], batchSize=4, numEpochs=None):
    fileNameQueue = tf.train.string_input_producer(fileNames, num_epochs=numEpochs)
    example, label = readMyFileFormat(fileNameQueue)
    min_after_dequeue = 8
    capacity = min_after_dequeue + 3 * batchSize
    exampleBatch, labelBatch = tf.train.shuffle_batch(
        [example, label], batch_size=batchSize, num_threads=3,
        capacity=capacity, min_after_dequeue=min_after_dequeue)
    return exampleBatch, labelBatch


featureBatch, labelBatch = inputPipeLine(["Test.csv"], batchSize=4)

with tf.Session() as sess:
    # Start populating the filename queue.
    coord = tf.train.Coordinator()
    threads = tf.train.start_queue_runners(coord=coord)
    example, label = sess.run([featureBatch, labelBatch])
    print(example, label)
    coord.request_stop()
    coord.join(threads)
    # No explicit sess.close() is needed: the with block closes the session.

----------------------------------------------------------------------------------------------------------------------------------------------------

Error encountered:

C:/Users/User/PycharmProjects/Test/test.py

2017-09-25 15:04:46.598434: W c:\tf_jenkins\home\workspace\release-win\m\windows\py\35\tensorflow\core\platform\cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use SSE instructions, but these are available on your machine and could speed up CPU computations.

2017-09-25 15:04:46.598711: W c:\tf_jenkins\home\workspace\release-win\m\windows\py\35\tensorflow\core\platform\cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use SSE2 instructions, but these are available on your machine and could speed up CPU computations.

2017-09-25 15:04:46.598974: W c:\tf_jenkins\home\workspace\release-win\m\windows\py\35\tensorflow\core\platform\cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use SSE3 instructions, but these are available on your machine and could speed up CPU computations.

2017-09-25 15:04:46.599224: W c:\tf_jenkins\home\workspace\release-win\m\windows\py\35\tensorflow\core\platform\cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use SSE4.1 instructions, but these are available on your machine and could speed up CPU computations.

2017-09-25 15:04:46.599460: W c:\tf_jenkins\home\workspace\release-win\m\windows\py\35\tensorflow\core\platform\cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use SSE4.2 instructions, but these are available on your machine and could speed up CPU computations.

2017-09-25 15:04:46.599708: W c:\tf_jenkins\home\workspace\release-win\m\windows\py\35\tensorflow\core\platform\cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use AVX instructions, but these are available on your machine and could speed up CPU computations.

2017-09-25 15:04:46.599948: W c:\tf_jenkins\home\workspace\release-win\m\windows\py\35\tensorflow\core\platform\cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use AVX2 instructions, but these are available on your machine and could speed up CPU computations.

2017-09-25 15:04:46.600168: W c:\tf_jenkins\home\workspace\release-win\m\windows\py\35\tensorflow\core\platform\cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use FMA instructions, but these are available on your machine and could speed up CPU computations.

[[ 7. 8.]

[ 2. 4.]

[ 1. 2.]

[ 1. 2.]] [ 11. 42. 10. 10.]

Traceback (most recent call last):

File "C:/Users/JZ/PycharmProjects/Test/test.py", line 30, in <module>

coord.join(threads)

File "C:\Users\JZ\AppData\Local\Programs\Python\Python35\lib\site-packages\tensorflow\python\training\coordinator.py", line 389, in join

six.reraise(*self._exc_info_to_raise)

File "C:\Users\JZ\AppData\Local\Programs\Python\Python35\lib\site-packages\six.py", line 686, in reraise

raise value

File "C:\Users\JZ\AppData\Local\Programs\Python\Python35\lib\site-packages\tensorflow\python\training\queue_runner_impl.py", line 238, in _run

enqueue_callable()

File "C:\Users\JZ\AppData\Local\Programs\Python\Python35\lib\site-packages\tensorflow\python\client\session.py", line 1063, in _single_operation_run

target_list_as_strings, status, None)

File "C:\Users\JZ\AppData\Local\Programs\Python\Python35\lib\contextlib.py", line 66, in __exit__

next(self.gen)

File "C:\Users\JZ\AppData\Local\Programs\Python\Python35\lib\site-packages\tensorflow\python\framework\errors_impl.py", line 466, in raise_exception_on_not_ok_status

pywrap_tensorflow.TF_GetCode(status))

tensorflow.python.framework.errors_impl.InvalidArgumentError: Expect 3 fields but have 0 in record 0

[[Node: DecodeCSV = DecodeCSV[OUT_TYPE=[DT_FLOAT, DT_FLOAT, DT_FLOAT], field_delim=",", _device="/job:localhost/replica:0/task:0/cpu:0"](ReaderReadV2:1, DecodeCSV/record_defaults_0, DecodeCSV/record_defaults_1, DecodeCSV/record_defaults_2)]]

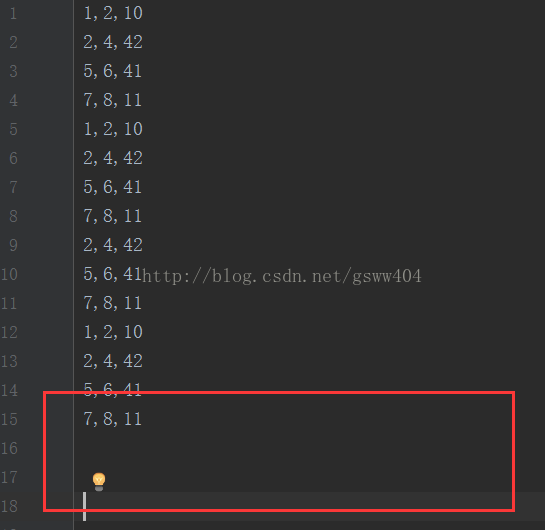

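Cause: the CSV file most likely has an extra line after the last row of data, which is what happened in my case. A blank line is read as a record with 0 fields, so decode_csv fails with "Expect 3 fields but have 0".

Similarly, you might want to check your data file to see if there is anything wrong with it, even something as trivial as "a blank line".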
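One way to check the data file before handing it to TensorFlow is sketched below. It uses only the Python standard library. The helper names `find_bad_rows` and `drop_blank_lines` are hypothetical and are not part of TensorFlow. The sketch assumes a comma-delimited file with 3 columns, as in the example above.

```python
import csv


def find_bad_rows(path, expected_fields=3):
    """Return (line_number, field_count) for each line whose number of
    comma-separated fields differs from expected_fields."""
    bad = []
    with open(path, newline="") as f:
        for line_number, line in enumerate(f, start=1):
            stripped = line.rstrip("\r\n")
            if stripped.strip() == "":
                # A blank line has 0 fields, matching the reported error.
                count = 0
            else:
                count = len(next(csv.reader([stripped])))
            if count != expected_fields:
                bad.append((line_number, count))
    return bad


def drop_blank_lines(src_path, dst_path):
    """Copy src_path to dst_path, skipping empty or whitespace-only lines."""
    with open(src_path, newline="") as src, \
            open(dst_path, "w", newline="") as dst:
        for line in src:
            if line.strip():
                dst.write(line.rstrip("\r\n") + "\n")
```

For example, `find_bad_rows("Test.csv")` lists the offending line numbers. `drop_blank_lines("Test.csv", "Test_clean.csv")` writes a cleaned copy that can be passed to `inputPipeLine` instead (`Test_clean.csv` is just an illustrative file name).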

References:

https://stackoverflow.com/questions/43781143/tensorflow-decode-csv-expect-3-fields-but-have-5-in-record-0-when-given-5-de

http://blog.csdn.net/yiqingyang2012/article/details/68485382

http://wiki.jikexueyuan.com/project/tensorflow-zh/how_tos/reading_data.html

TensorFlow official documentation
