opencv 特征点提取、匹配(二)

demo: http://download.csdn.net/detail/keen_zuxwang/9852587

RANSAC是“RANdom SAmple Consensus随机抽样一致”的缩写。

它可以从一组包含“局外点”的观测数据集中,通过迭代方式估计数学模型的参数。

它是一种不确定的算法——它有一定的概率得出一个合理的结果,为了提高概率必须提高迭代次数。

该算法最早由Fischler和Bolles于1981年提出。

RANSAC的基本假设是:

1、数据由“局内点”组成,如:数据的分布可以用一些模型参数来解释;

2、“局外点”是不能适应该模型的数据;

3、除此之外的数据属于噪声。局外点产生的原因有:噪声的极值;错误的测量方法;对数据的错误假设

//! computes the best-fit perspective transformation mapping srcPoints to dstPoints.

CV_EXPORTS_W Mat findHomography( InputArray srcPoints, InputArray dstPoints,

int method=0, double ransacReprojThreshold=3,

OutputArray mask=noArray());功能寻找两个平面匹配上的关键点的变换

srcPoints:

原始平面中点的坐标,一个CV_32FC2 or vector 型矩阵;

dstPoints:

目标平面中点的坐标,一个CV_32FC2 or vector 型矩阵;

method:

用于计算单应矩阵的方法,是一个枚举值(默认为0,表示使用所有点的常规方法)

CV_RANSAC:基于RANSAC的鲁棒方法;

CV_LMEDS:最不平均方法;

ransacReprojThreshold:

仅当使用CV_RANSAC方法时,把一双点作为内窗的最大投影误差;

mask:

鲁棒方法的可选输出掩码设置;

mask参数,若有N个匹配点用来计算单应矩阵,则该值有N个元素,每个元素的值为0或者1.

值为0时,代表该匹配点事错误的匹配(离群值),只在使用RANSAC和LMeds方法时该值有效,

可以使用该值来删除错误的匹配—指出匹配的点是不是离群值,用来优化匹配结果

//! performs perspective transformation of each element of multi-channel input matrix

CV_EXPORTS_W void perspectiveTransform(InputArray src, OutputArray dst, InputArray m );功能:向量的投影矩阵转换

src:

输入2通道或3通道的浮点阵列,每个元素是一个将被转换的2D/3D向量;

dst:

与输入有相同大小、类型的输出矩阵;

m:

3x3 或4x4的浮点转换矩阵;

// Draws matches of keypints from two images on output image.

CV_EXPORTS void drawMatches( const Mat& img1, const vector<KeyPoint>& keypoints1,

const Mat& img2, const vector<KeyPoint>& keypoints2,

const vector<DMatch>& matches1to2, Mat& outImg,

const Scalar& matchColor=Scalar::all(-1), const Scalar& singlePointColor=Scalar::all(-1),

const vector<char>& matchesMask=vector<char>(), int flags=DrawMatchesFlags::DEFAULT );功能:从两幅图片中画出发现的匹配的关键点

img1:源图像

img2:源图像;

keypoints1:源图像中的关键点

keypoints2:源图像中的关键点;

matches1to2:从第一幅图像来匹配第二幅图像,即从keypoints1[i]中寻找与keypoints2[i]的对应点;

outImg:输出图像;它的值取决于flags–在图像中绘制的内容;

matchColor:

匹配颜色(线和点的颜色),matchColor==Scalar::all(-1),颜色随机生成;

singlePointColor:

单个关键点的颜色(即没有关键点与之匹配),matchColor==Scalar::all(-1),颜色随机生成;

matchesMask:

掩码决定画哪个匹配的关键点,如果掩码为空,画出所有的匹配的关键点;

flags:设置绘图功能,可能标志位的值由“DrawMatchesFlags”确定;

JNI :

JNIEXPORT jlong JNICALL Java_com_example_orbhog_MainActivity_doFeatureMatch(JNIEnv *env, jclass clz, jlong imgObject, jlong imgScene)

{

Mat img_object = Mat(*(Mat*)imgObject);

cvtColor(img_object, img_object, CV_BGRA2BGR);

Mat img_scene = Mat(*(Mat*)imgScene);

cvtColor(img_scene, img_scene, CV_BGRA2BGR);

LOGD(" featureTest %d, %d !",img_object.cols, img_object.rows);

//initModule_nonfree();//only for SIFT or SURF

//#include "opencv2/features2d/features2d.hpp"中声明过了才能有效创建

Ptr<FeatureDetector> detector = FeatureDetector::create("ORB"); // FAST

Ptr<DescriptorExtractor> descriptor = DescriptorExtractor::create("ORB"); // BRIEF

Ptr<DescriptorMatcher> descriptor_matcher = DescriptorMatcher::create("BruteForce");

if( detector.empty() || descriptor.empty()) {

LOGD(" fail to create detector! ");

}

vector<KeyPoint> keypoints_object, keypoints_scene;

detector->detect( img_object, keypoints_object );

detector->detect( img_scene, keypoints_scene );

Mat descriptors_object, descriptors_scene;

descriptor->compute( img_object, keypoints_object, descriptors_object );

descriptor->compute( img_scene, keypoints_scene, descriptors_scene );

//Mat img_keypoints1,img_keypoints2;

//drawKeypoints(img1,keypoints1,img_keypoints1,Scalar::all(-1),0);

//drawKeypoints(img2,keypoints2,img_keypoints2,Scalar::all(-1),0);

vector<DMatch> matches;

descriptor_matcher->match( descriptors_object, descriptors_scene, matches );

double max_dist = 0;

double min_dist = 50;

long length = matches.size();

LOGD(" FlannBasedMatcher %ld \n", length );

//Quick calculation of max and min distances between keypoints

for(int i=0; i<length; i++) { //descriptors_object.rows

double dist = matches[i].distance;

if( dist < min_dist )

min_dist = dist;

if( dist > max_dist )

max_dist = dist;

}

LOGD("-- Max dist : %f \n", max_dist );

LOGD("-- Min dist : %f \n", min_dist );

//-- Draw only "good" matches (i.e. whose distance is less than 3*min_dist )

vector< DMatch > good_matches;

int m_start = length/4;

int m_end = length - m_start;

for(int i=m_start; i<length; i++) { // descriptors_object.rows

good_matches.push_back(matches[i]);

}

/*

double max_d = max_dist*0.9;

for(int i=0; i<length; i++) { // descriptors_object.rows

if( matches[i].distance > min_dist+ && matches[i].distance<max_d){

good_matches.push_back( matches[i]);

}

}

*/

Mat img_matches;

drawMatches(img_object, keypoints_object, img_scene, keypoints_scene,

good_matches,

img_matches,

Scalar::all(-1),

Scalar::all(-1),

vector<char>(),

DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS );

//-- Localize the object

vector<Point2f> obj;

vector<Point2f> scene;

for( int i = 0; i < good_matches.size(); i++ ) {

//-- Get the keypoints from the good matches

obj.push_back(keypoints_object[good_matches[i].queryIdx].pt); // query descriptor index

scene.push_back(keypoints_scene[good_matches[i].trainIdx].pt); // train descriptor index

}

Mat H = findHomography( obj, scene, CV_RANSAC );

// 根据匹配点计算单应矩阵H,并细化匹配结果(矩阵H存储RANSAC得到的单应矩阵,用于求透视矩阵), 寻找两个平面匹配上的关键点的变换

//Get the corners from the img_object ( the object to be "detected" )

vector<Point2f> obj_corners(4);

obj_corners[0] = cvPoint(0,0);

obj_corners[1] = cvPoint( img_object.cols, 0 );

obj_corners[2] = cvPoint( img_object.cols, img_object.rows );

obj_corners[3] = cvPoint( 0, img_object.rows );

vector<Point2f> scene_corners(4);

perspectiveTransform( obj_corners, scene_corners, H); // 向量的投影矩阵转换

//-- Draw lines between the corners (the mapped object in the scene - img_scene )

line( img_matches, scene_corners[0] + Point2f( img_object.cols, 0), scene_corners[1] + Point2f( img_object.cols, 0), Scalar(0, 255, 0), 4 );

line( img_matches, scene_corners[1] + Point2f( img_object.cols, 0), scene_corners[2] + Point2f( img_object.cols, 0), Scalar( 0, 255, 0), 4 );

line( img_matches, scene_corners[2] + Point2f( img_object.cols, 0), scene_corners[3] + Point2f( img_object.cols, 0), Scalar( 0, 255, 0), 4 );

line( img_matches, scene_corners[3] + Point2f( img_object.cols, 0), scene_corners[0] + Point2f( img_object.cols, 0), Scalar( 0, 255, 0), 4 );

Mat *hist = new Mat(img_matches);

return (jlong) hist;

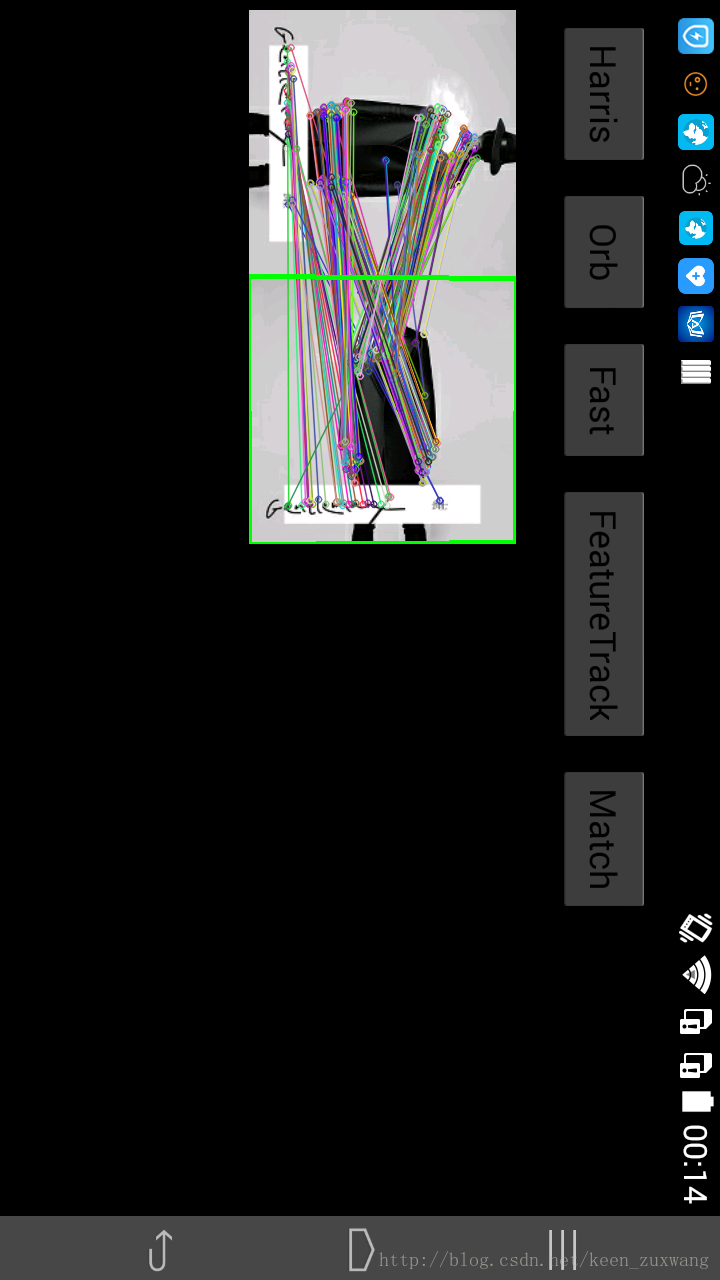

}ORB-ORB 匹配:

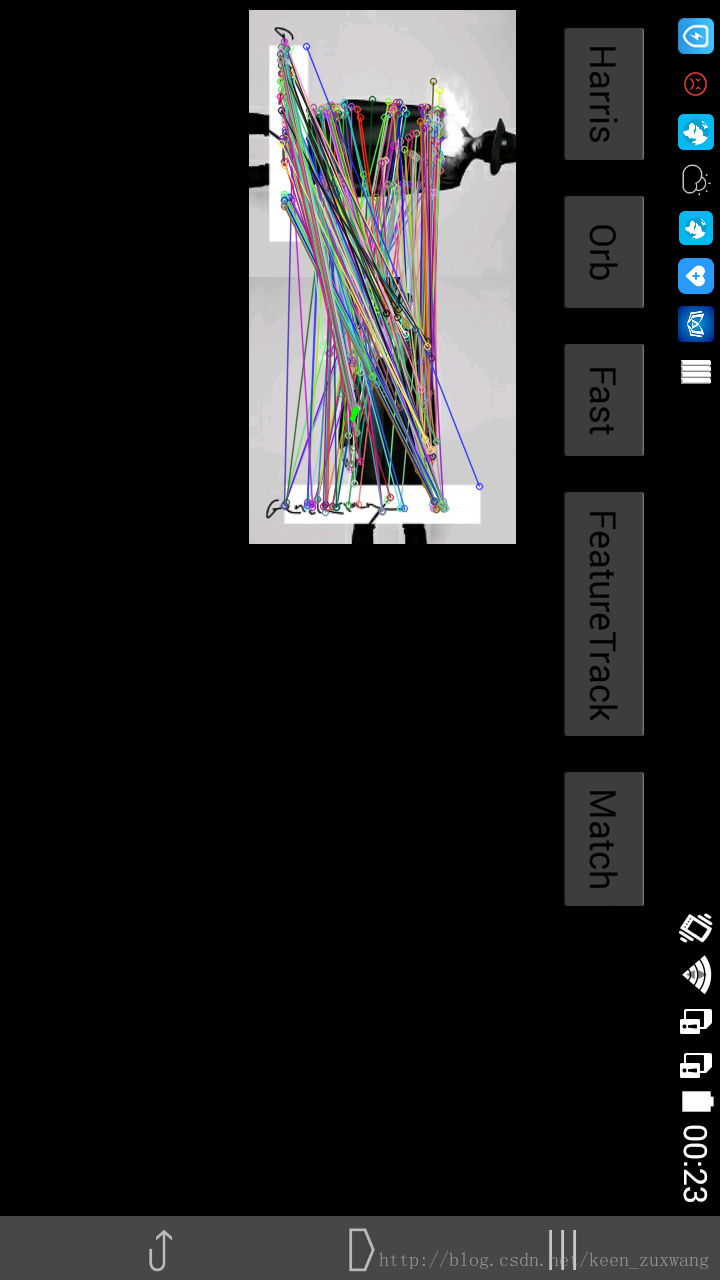

FAST-ORB 匹配:

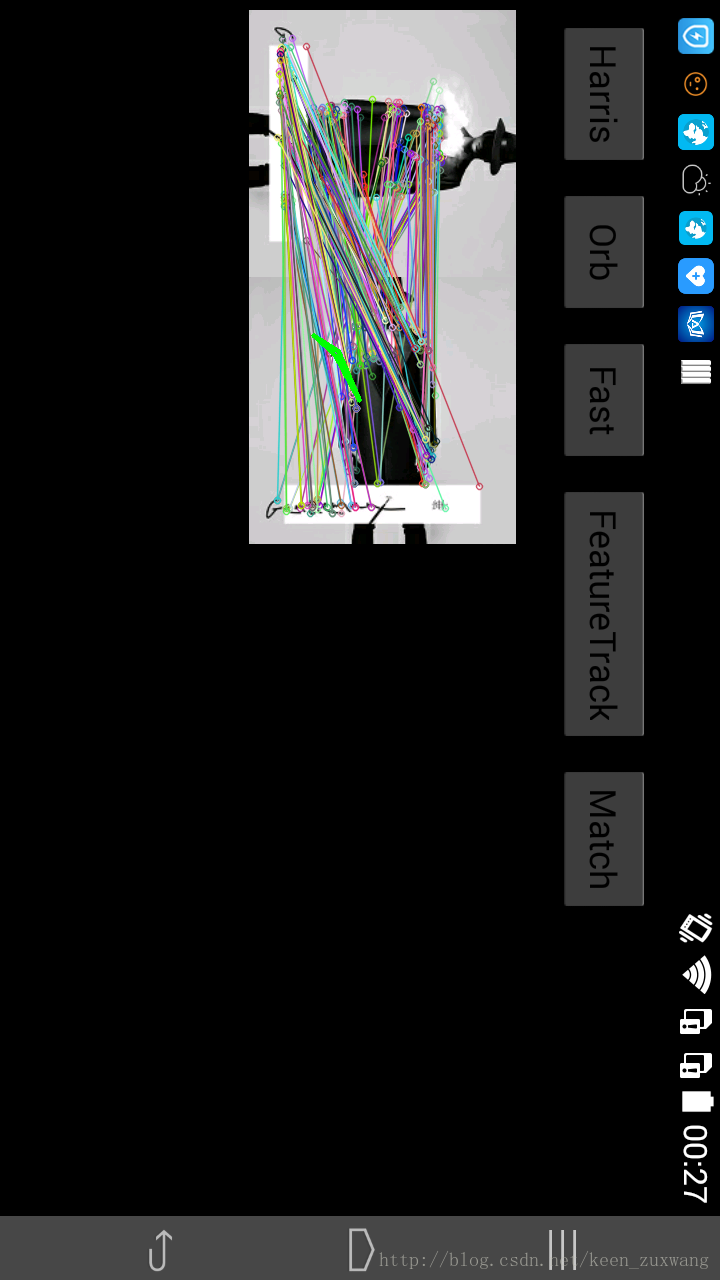

FAST-BRIEF 匹配:

3946

3946

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?