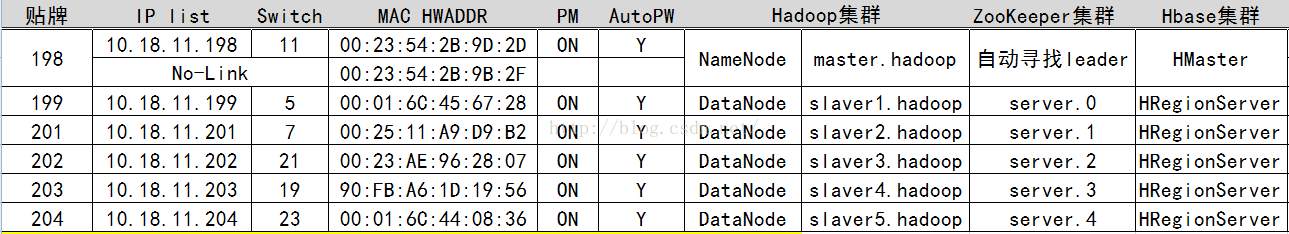

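The table above shows the overall configuration.

The machines were pulled from the bottom of the storeroom and are already obsolete hardware.

In short, machine 198 (10.18.11.198) is the master (master.hadoop), and all the other machines are slaves (slaver1.hadoop through slaver5.hadoop).

In the BIOS power-management settings of every machine, enable power-on when AC power is applied.

The operating system is CentOS 6.5, 32-bit.

Software versions: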

jdk1.8.0_45

hadoop-2.2.0

zookeeper-3.4.5

hbase-0.98.8-hadoop2

apache-hive-0.14.0-bin

apache-mahout-1.0-bin

######################## master 198 ###########################

[root@localhost ~]# ifconfig eth0

eth0 Link encap:Ethernet HWaddr 00:23:54:2B:9D:2D

inet addr:10.18.11.198 Bcast:10.18.11.255 Mask:255.255.255.0

inet6 addr: fdd8:8f0:124b:0:223:54ff:fe2b:9d2d/64 Scope:Global

inet6 addr: fe80::223:54ff:fe2b:9d2d/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:93982 errors:0 dropped:0 overruns:0 frame:0

TX packets:1684 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:8404629 (8.0 MiB) TX bytes:165374 (161.4 KiB)

Interrupt:16

## Set the local hostname and IP address mappings

[root@localhost ~]# vi /etc/hosts

[root@localhost ~]# vi /etc/sysconfig/network

[root@localhost ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

10.18.11.198 master.hadoop

10.18.11.199 slaver1.hadoop

10.18.11.201 slaver2.hadoop

10.18.11.202 slaver3.hadoop

10.18.11.203 slaver4.hadoop

10.18.11.204 slaver5.hadoop

[root@localhost ~]# cat /etc/sysconfig/network

NETWORKING=yes

NETWORKING_IPV6=no

HOSTNAME=master.hadoop

[root@localhost ~]# reboot
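The same six host entries are needed on every node. As a minimal sketch, they could be generated from one list instead of being typed on each machine. The function names `print_cluster_hosts` and `add_cluster_hosts` are made up for illustration; the addresses and names are the ones from the `/etc/hosts` listing above.

```shell
# Hypothetical helper: print the cluster's /etc/hosts entries.
# The IP/hostname pairs are copied from the /etc/hosts listing above.
print_cluster_hosts() {
    cat <<'EOF'
10.18.11.198 master.hadoop
10.18.11.199 slaver1.hadoop
10.18.11.201 slaver2.hadoop
10.18.11.202 slaver3.hadoop
10.18.11.203 slaver4.hadoop
10.18.11.204 slaver5.hadoop
EOF
}

# Hypothetical helper: append only the entries that are not yet in the file.
# $1 is the hosts file to update (/etc/hosts on a real node).
add_cluster_hosts() {
    hosts_file=$1
    print_cluster_hosts | while read -r ip name; do
        # -F fixed string, -x whole-line match, -q quiet
        grep -Fxq "$ip $name" "$hosts_file" || echo "$ip $name" >> "$hosts_file"
    done
}
```

Run as root, `add_cluster_hosts /etc/hosts` would add the missing lines and leave existing ones alone, so it is safe to run more than once.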

## Disable IPv6

[root@master ~]# vim /etc/modprobe.d/anaconda.conf

[root@master ~]# cat /etc/modprobe.d/anaconda.conf

# Module options and blacklists written by anaconda

install ipv6 /bin/true

[root@master ~]# lsmod |grep -i ipv6

nf_conntrack_ipv6 7207 2

nf_defrag_ipv6 8897 1 nf_conntrack_ipv6

nf_conntrack 65661 3 nf_conntrack_ipv4,nf_conntrack_ipv6,xt_state

ipv6 261089 35 ip6t_REJECT,nf_conntrack_ipv6,nf_defrag_ipv6

[root@master modprobe.d]# reboot

[root@master ~]# lsmod |grep -i ipv6

[root@master ~]# ifconfig | grep -i inet6
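The edit to `anaconda.conf` has to be repeated on every node. A small sketch of doing it without an editor, and without adding the rule twice, might look like this. The function name `disable_ipv6_module` is hypothetical; the rule text is the one shown in the file above.

```shell
# Hypothetical helper: add the "install ipv6 /bin/true" rule to a modprobe
# configuration file only if it is not already there.
# $1 is the file to edit (/etc/modprobe.d/anaconda.conf in the log above).
disable_ipv6_module() {
    conf=$1
    rule='install ipv6 /bin/true'
    touch "$conf"                                  # create the file if missing
    grep -Fxq "$rule" "$conf" || echo "$rule" >> "$conf"
}
```

As in the log, the change only takes effect after a reboot; afterwards `lsmod | grep -i ipv6` should print nothing.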

## Disable the iptables firewall

[root@master ~]# /etc/init.d/iptables stop

iptables: Setting chains to policy ACCEPT: filter [ OK ]

iptables: Flushing firewall rules: [ OK ]

iptables: Unloading modules: [ OK ]

[root@master ~]# chkconfig --list |grep iptables

iptables 0:off 1:off 2:on 3:on 4:on 5:on 6:off

[root@master ~]# chkconfig iptables off

[root@master ~]# chkconfig --list |grep iptables

iptables 0:off 1:off 2:off 3:off 4:off 5:off 6:off
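The before/after `chkconfig --list` comparison above can also be checked mechanically. The following is only a sketch; `all_runlevels_off` is a made-up name, and it assumes the `N:on` / `N:off` output format shown in the log.

```shell
# Hypothetical helper: read `chkconfig --list` output for one service on stdin
# and succeed only if no runlevel is marked "on".
all_runlevels_off() {
    # Any "N:on" field means the service still starts in some runlevel.
    ! grep -Eq '[0-6]:on'
}
```

For example, `chkconfig --list | grep iptables | all_runlevels_off && echo "iptables is disabled at boot"`. Note that empty input also counts as "off", so the check says nothing about whether the service exists.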

## Create the user and group

[root@master ~]# groupadd hadoop

[root@master ~]# useradd -g hadoop hduser

[root@master ~]# passwd hduser

Changing password for user hduser.

New password:

BAD PASSWORD: it is based on a dictionary word

Retype new password:

passwd: all authentication tokens updated successfully.

## Grant hduser sudo privileges

[root@master ~]# su - hduser

[hduser@master ~]$ ls

[hduser@master ~]$

[hduser@master ~]$

[hduser@master ~]$ sudo -i

We trust you have received the usual lecture from the local System

Administrator. It usually boils down to these three things:

#1) Respect the privacy of others.

#2) Think before you type.

#3) With great power comes great responsibility.

[sudo] password for hduser:

hduser is not in the sudoers file. This incident will be reported.

[root@master ~]# cat /etc/sudoers |grep -v "#" |grep -v "^$"

Defaults requiretty

Defaults !visiblepw

Defaults always_set_home

Defaults env_reset

Defaults env_keep = "COLORS DISPLAY HOSTNAME HISTSIZE INPUTRC KDEDIR LS_COLORS"

Defaults env_keep += "MAIL PS1 PS2 QTDIR USERNAME LANG LC_ADDRESS LC_CTYPE"

Defaults env_keep += "LC_COLLATE LC_IDENTIFICATION LC_MEASUREMENT LC_MESSAGES "

Defaults env_keep += "LC_MONETARY LC_NAME LC_NUMERIC LC_PAPER LC_TELEPHONE"

Defaults env_keep += "LC_TIME LC_ALL LANGUAGE LINGUAS _XKB_CHARSET XAUTHORITY "

Defaults secure_path = /sbin:/bin:/usr/sbin:/usr/bin

root ALL=(ALL) ALL

[root@master ~]# vi /etc/sudoers

[root@master ~]# cat /etc/sudoers |grep -v "#" |grep -v "^$"

Defaults requiretty

Defaults !visiblepw

Defaults always_set_home

Defaults env_reset

Defaults env_keep = "COLORS DISPLAY HOSTNAME HISTSIZE INPUTRC KDEDIR LS_COLORS"

Defaults env_keep += "MAIL PS1 PS2 QTDIR USERNAME LANG LC_ADDRESS LC_CTYPE"

Defaults env_keep += "LC_COLLATE LC_IDENTIFICATION LC_MEASUREMENT LC_MESSAGES "

Defaults env_keep += "LC_MONETARY LC_NAME LC_NUMERIC LC_PAPER LC_TELEPHONE"

Defaults env_keep += "LC_TIME LC_ALL LANGUAGE LINGUAS _XKB_CHARSET XAUTHORITY "

Defaults secure_path = /sbin:/bin:/usr/sbin:/usr/bin

root ALL=(ALL) ALL

hduser ALL=(ALL) ALL

[root@master ~]# chmod u-w /etc/sudoers

[root@master ~]# ls -l /etc/sudoers

-r--r-----. 1 root root 4024 Jan 19 11:20 /etc/sudoers

[root@master ~]# su - hduser

[hduser@master ~]$ sudo -i

[sudo] password for hduser:

[root@master ~]# ls

anaconda-ks.cfg install.log install.log.syslog

### Install the openssh-clients package

[root@master ~]# yum install -y openssh-clients

### Passwordless SSH login for hduser

login as: hduser

hduser@10.18.11.198's password:

[hduser@master ~]$

[hduser@master ~]$

[hduser@master ~]$

[hduser@master ~]$ ssh-keygen -t rsa -P ""

Generating public/private rsa key pair.

Enter file in which to save the key (/home/hduser/.ssh/id_rsa):

Created directory '/home/hduser/.ssh'.

Your identification has been saved in /home/hduser/.ssh/id_rsa.

Your public key has been saved in /home/hduser/.ssh/id_rsa.pub.

The key fingerprint is:

aa:cc:b1:86:6a:c9:3d:e2:52:15:b6:ae:cf:4b:ad:3a hduser@master.hadoop

The key's randomart image is:

+--[ RSA 2048]----+

| |

| o |

| . o |

| o |

| o S |

| . .. . |

|..ooo o |

|.=EB.= |

|=oo=@. |

+-----------------+

[hduser@master ~]$ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

[hduser@master ~]$ ssh localhost

The authenticity of host 'localhost (127.0.0.1)' can't be established.

RSA key fingerprint is 6c:58:b9:5b:c6:a3:35:4f:49:cf:8b:10:0d:93:ae:81.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'localhost' (RSA) to the list of known hosts.

Last login: Tue Jan 19 11:24:05 2016 from 10.17.74.212

[hduser@master ~]$ exit

logout

Connection to localhost closed.

[hduser@master ~]$ ssh master.hadoop

The authenticity of host 'master.hadoop (10.18.11.198)' can't be established.

RSA key fingerprint is 6c:58:b9:5b:c6:a3:35:4f:49:cf:8b:10:0d:93:ae:81.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'master.hadoop,10.18.11.198' (RSA) to the list of known hosts.

Last login: Tue Jan 19 11:27:12 2016 from localhost

[hduser@master ~]$ exit

logout

Connection to master.hadoop closed.

### Copy hduser's authorized_keys from the master to hduser's home directory on slaver1 through slaver5

scp /home/hduser/.ssh/authorized_keys hduser@slaver1.hadoop:/home/hduser/.ssh/

scp /home/hduser/.ssh/authorized_keys hduser@slaver2.hadoop:/home/hduser/.ssh/

scp /home/hduser/.ssh/authorized_keys hduser@slaver3.hadoop:/home/hduser/.ssh/

scp /home/hduser/.ssh/authorized_keys hduser@slaver4.hadoop:/home/hduser/.ssh/

scp /home/hduser/.ssh/authorized_keys hduser@slaver5.hadoop:/home/hduser/.ssh/

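# If a copy fails, check that /home/hduser/.ssh exists on the target slaver. If it does not, log in as hduser on that machine after creating the user and run ssh localhost once; this creates the directory.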
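The five `scp` commands differ only in the hostname, so they can be written as a loop. This is a sketch rather than the method used in the log: the function name and the `DRY_RUN` switch are assumptions added so the commands can be reviewed before anything is copied.

```shell
# Slave hostnames as listed in /etc/hosts above.
SLAVES="slaver1.hadoop slaver2.hadoop slaver3.hadoop slaver4.hadoop slaver5.hadoop"

# Hypothetical helper: copy hduser's authorized_keys to every slave.
# With DRY_RUN=1 the scp commands are printed instead of executed.
distribute_authorized_keys() {
    for host in $SLAVES; do
        cmd="scp /home/hduser/.ssh/authorized_keys hduser@$host:/home/hduser/.ssh/"
        if [ "${DRY_RUN:-0}" = "1" ]; then
            echo "$cmd"
        else
            $cmd
        fi
    done
}
```

Setting `DRY_RUN=1` before calling `distribute_authorized_keys` prints the five commands; without it, each copy still prompts for hduser's password on the slave, because key-based login is not in place until the file has arrived.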

[hduser@master ~]$ ssh slaver1.hadoop

Last login: Fri Sep 23 15:03:21 2016 from master.hadoop

[hduser@slaver1 ~]$ exit

logout

Connection to slaver1.hadoop closed.

[hduser@master ~]$ ssh slaver2.hadoop

Last login: Fri Sep 23 23:03:55 2016 from localhost

[hduser@slaver2 ~]$ exit

logout

Connection to slaver2.hadoop closed.

[hduser@master ~]$ ssh slaver3.hadoop

Last login: Fri Sep 23 23:04:31 2016 from localhost

[hduser@slaver3 ~]$ exit

logout

Connection to slaver3.hadoop closed.

[hduser@master ~]$ ssh slaver4.hadoop

The authenticity of host 'slaver4.hadoop (10.18.11.203)' can't be established.

RSA key fingerprint is bf:89:d8:50:4b:11:db:91:ca:f7:fd:2a:68:7d:07:74.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'slaver4.hadoop' (RSA) to the list of known hosts.

Last login: Fri Sep 23 23:02:22 2016 from localhost

[hduser@slaver4 ~]$ exit

logout

Connection to slaver4.hadoop closed.

[hduser@master ~]$ ssh slaver5.hadoop

The authenticity of host 'slaver5.hadoop (10.18.11.204)' can't be established.

RSA key fingerprint is a3:b2:b1:2f:76:d7:c4:39:32:3c:ca:b7:c8:54:6d:17.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'slaver5.hadoop' (RSA) to the list of known hosts.

Last login: Fri Sep 23 22:51:19 2016 from localhost

[hduser@slaver5 ~]$ exit

logout

Connection to slaver5.hadoop closed.

### Install the JDK

[hduser@master ~]$ ls -l

total 170892

-rw-r--r--. 1 hduser hadoop 174985642 Jul 13 2015 jdk-8u45-linux-i586.tar.gz

[hduser@master ~]$ tar zxvf jdk-8u45-linux-i586.tar.gz

[hduser@master ~]$ sudo mv jdk1.8.0_45 /usr/local/

[sudo] password for hduser:

[hduser@master ~]$ sudo vim /etc/profile

[hduser@master ~]$ tail -n 4 /etc/profile

export JAVA_HOME=/usr/local/jdk1.8.0_45

export CLASSPATH=$CLASSPATH:$JAVA_HOME/lib:$JAVA_HOME/jre/lib

export HADOOP_HOME=/usr/local/hadoop-2.2.0

export PATH=$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH

[hduser@master ~]$ source /etc/profile

[hduser@master ~]$ java -version

java version "1.8.0_45"

Java(TM) SE Runtime Environment (build 1.8.0_45-b14)

Java HotSpot(TM) Client VM (build 25.45-b02, mixed mode)
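The four `export` lines are identical on the master and on every slave. One possible way to avoid hand-editing `/etc/profile` on each machine is to write them to a separate file. This is a sketch under assumptions: `write_hadoop_env` is a made-up name, and placing the file under `/etc/profile.d/` is a suggestion, not what the log does.

```shell
# Hypothetical helper: write the Java/Hadoop environment variables to a file
# that login shells can source. $1 is the target file; on a real node this
# could be a file under /etc/profile.d/ (an assumption; the log above edits
# /etc/profile directly).
write_hadoop_env() {
    # The quoted 'EOF' keeps $JAVA_HOME etc. literal in the written file.
    cat > "$1" <<'EOF'
export JAVA_HOME=/usr/local/jdk1.8.0_45
export CLASSPATH=$CLASSPATH:$JAVA_HOME/lib:$JAVA_HOME/jre/lib
export HADOOP_HOME=/usr/local/hadoop-2.2.0
export PATH=$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH
EOF
}
```

After sourcing the file, `java -version` should report the same 1.8.0_45 build as in the log.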

### Create directories

[hduser@master ~]$ sudo mkdir -p /usr/local/app/hadoop

[sudo] password for hduser:

[hduser@master ~]$ sudo chown -R hduser:hadoop /usr/local/app/hadoop

[hduser@master ~]$ mkdir -p /usr/local/app/hadoop/dfs/name

[hduser@master ~]$ mkdir -p /usr/local/app/hadoop/dfs/data

[hduser@master ~]$ mkdir -p /usr/local/app/hadoop/tmp/node

[hduser@master ~]$ mkdir -p /usr/local/app/hadoop/tmp/app-logs

[hduser@master ~]$ chmod 750 -R /usr/local/app/hadoop/dfs

[hduser@master ~]$ chmod 750 -R /usr/local/app/hadoop/tmp

[hduser@master ~]$ ls -ld /usr/local/app/hadoop/tmp/

drwxr-x---. 4 hduser hadoop 4096 Jan 19 11:56 /usr/local/app/hadoop/tmp/

[hduser@master ~]$ chmod -R 750 /usr/local/app/hadoop/dfs/

[hduser@master ~]$ ls -dl /usr/local/app/hadoop/dfs

drwxr-x---. 4 hduser hadoop 4096 Jan 19 11:53 /usr/local/app/hadoop/dfs

[root@master hadoop]# ls -l dfs/

total 8

drwxr-x---. 2 hduser hadoop 4096 Jan 19 11:53 data

drwxr-x---. 2 hduser hadoop 4096 Jan 19 11:53 name

[root@master hadoop]# ls -l tmp/

total 8

drwxr-x---. 2 hduser hadoop 4096 Jan 19 11:53 app-logs

drwxr-x---. 2 hduser hadoop 4096 Jan 19 11:53 node
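The directory layout above is needed on every node, so the `mkdir` and `chmod` steps can be collected into one function. This is a minimal sketch; `make_hadoop_dirs` is a hypothetical name, and the base directory is passed in so the function can be tried in a scratch location first.

```shell
# Hypothetical helper: create the HDFS and temp directories under a base
# directory and restrict them to owner and group (mode 750).
# $1 is the base directory (/usr/local/app/hadoop in the log above).
make_hadoop_dirs() {
    base=$1
    mkdir -p "$base/dfs/name" "$base/dfs/data" \
             "$base/tmp/node" "$base/tmp/app-logs"
    chmod -R 750 "$base/dfs" "$base/tmp"
}
```

On a real node the base directory must already belong to hduser, as arranged by the `sudo mkdir` and `sudo chown` commands in the log, before `make_hadoop_dirs /usr/local/app/hadoop` is run as hduser.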

### Install Hadoop

[hduser@master ~]$ ls

hadoop-2.2.0-32bit.tar.gz jdk-8u45-linux-i586.tar.gz

[hduser@master ~]$ tar zxvf hadoop-2.2.0-32bit.tar.gz

[hduser@master ~]$ sudo mv hadoop-2.2.0 /usr/local/

[sudo] password for hduser:

[hduser@master ~]$ sudo chown -R hduser:hadoop /usr/local/hadoop-2.2.0

### Configure the Hadoop environment

[hduser@master ~]$ vi /usr/local/hadoop-2.2.0/etc/hadoop/hadoop-env.sh

[hduser@master ~]$ cat /usr/local/hadoop-2.2.0/etc/hadoop/hadoop-env.sh | grep -rni "JAVA_HOME"

21:# The only required environment variable is JAVA_HOME. All others are

23:# set JAVA_HOME in this file, so that it is correctly defined on

27:#export JAVA_HOME=${JAVA_HOME}

28:export JAVA_HOME=/usr/local/jdk1.8.0_45

[hduser@master ~]$ cat /usr/local/hadoop-2.2.0/etc/hadoop/hadoop-env.sh | grep -rni "HADOOP_HEAPSIZE"

45:#export HADOOP_HEAPSIZE=

46:export HADOOP_HEAPSIZE=100

[hduser@master ~]$ vi /usr/local/hadoop-2.2.0/etc/hadoop/yarn-env.sh

[hduser@master ~]$ cat /usr/local/hadoop-2.2.0/etc/hadoop/yarn-env.sh | grep -rni "JAVA_HOME"

23:# export JAVA_HOME=/home/y/libexec/jdk1.6.0/

24:export JAVA_HOME=/usr/local/jdk1.8.0_45

25:if [ "$JAVA_HOME" != "" ]; then

26: #echo "run java in $JAVA_HOME"

27: JAVA_HOME=$JAVA_HOME

30:if [ "$JAVA_HOME" = "" ]; then

31: echo "Error: JAVA_HOME is not set."

35:JAVA=$JAVA_HOME/bin/java

[hduser@master ~]$ vi /usr/local/hadoop-2.2.0/etc/hadoop/mapred-env.sh

[hduser@master ~]$ cat /usr/local/hadoop-2.2.0/etc/hadoop/mapred-env.sh | grep -rni "JAVA_HOME"

16:# export JAVA_HOME=/home/y/libexec/jdk1.6.0/

17:export JAVA_HOME=/usr/local/jdk1.8.0_45
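The same `JAVA_HOME` line is set by hand in three scripts above. A sketch of doing this with `sed` follows; `set_java_home` is a made-up name and the code assumes GNU `sed`, which CentOS ships. Unlike the manual edit, it rewrites an existing active `export` instead of commenting it out, and otherwise inserts the line at the top so it is set before the script reads it.

```shell
# Hypothetical helper: make sure an env script exports the given JAVA_HOME.
# $1 is the script (e.g. hadoop-env.sh), $2 is the JDK directory.
# Assumes GNU sed (for -i and the one-line "1i" form) and a non-empty file.
set_java_home() {
    file=$1
    jdk=$2
    if grep -q '^export JAVA_HOME=' "$file"; then
        # Rewrite the existing active export in place.
        sed -i "s|^export JAVA_HOME=.*|export JAVA_HOME=$jdk|" "$file"
    else
        # No active export yet: put one on the first line so that it is set
        # before anything later in the script uses it.
        sed -i "1i export JAVA_HOME=$jdk" "$file"
    fi
}
```

For example, `set_java_home /usr/local/hadoop-2.2.0/etc/hadoop/yarn-env.sh /usr/local/jdk1.8.0_45`.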

### The XML files that need to be modified

[hduser@master ~]$ ls -l /usr/local/hadoop-2.2.0/etc/hadoop/*.xml*

-rw-r--r--. 1 hduser hadoop 3560 Oct 7 2013 /usr/local/hadoop-2.2.0/etc/hadoop/capacity-scheduler.xml

-rw-r--r--. 1 hduser hadoop 774 Oct 7 2013 /usr/local/hadoop-2.2.0/etc/hadoop/core-site.xml -----modify

-rw-r--r--. 1 hduser hadoop 9257 Oct 7 2013 /usr/local/hadoop-2.2.0/etc/hadoop/hadoop-policy.xml

-rw-r--r--. 1 hduser hadoop 775 Oct 7 2013 /usr/local/hadoop-2.2.0/etc/hadoop/hdfs-site.xml -----modify

-rw-r--r--. 1 hduser hadoop 620 Oct 7 2013 /usr/local/hadoop-2.2.0/etc/hadoop/httpfs-site.xml

-rw-r--r--. 1 hduser hadoop 4113 Oct 7 2013 /usr/local/hadoop-2.2.0/etc/hadoop/mapred-queues.xml.template

-rw-r--r--. 1 hduser hadoop 758 Oct 7 2013 /usr/local/hadoop-2.2.0/etc/hadoop/mapred-site.xml.template -----modify

-rw-r--r--. 1 hduser hadoop 2316 Oct 7 2013 /usr/local/hadoop-2.2.0/etc/hadoop/ssl-client.xml.example

-rw-r--r--. 1 hduser hadoop 2251 Oct 7 2013 /usr/local/hadoop-2.2.0/etc/hadoop/ssl-server.xml.example

-rw-r--r--. 1 hduser hadoop 690 Oct 7 2013 /usr/local/hadoop-2.2.0/etc/hadoop/yarn-site.xml -----modify

[hduser@master ~]$ vim /usr/local/hadoop-2.2.0/etc/hadoop/core-site.xml

[hduser@master ~]$ cat /usr/local/hadoop-2.2.0/etc/hadoop/core-site.xml

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>hadoop.tmp.dir</name>

<value>/usr/local/app/hadoop/tmp</value>

</property>

<property>

<name>fs.defaultFS</name>

<value>hdfs://master.hadoop:9000</value>

</property>

<property>

<name>io.file.buffer.size</name>

<value>131072</value>

<description></description>

</property>

<property>

<name>hadoop.proxyuser.hduser.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.hduser.groups</name>

<value>*</value>

</property>

</configuration>

[hduser@master ~]$ cp /usr/local/hadoop-2.2.0/etc/hadoop/hdfs-site.xml /usr/local/hadoop-2.2.0/etc/hadoop/hdfs-site.xml.old

[hduser@master ~]$ vim /usr/local/hadoop-2.2.0/etc/hadoop/hdfs-site.xml

[hduser@master ~]$ cat /usr/local/hadoop-2.2.0/etc/hadoop/hdfs-site.xml

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>master.hadoop:50060</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/usr/local/app/hadoop/dfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/usr/local/app/hadoop/dfs/data</value>

</property>

<property>

<name>dfs.http.address</name>

<value>master.hadoop:50070</value>

</property>

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>

<property>

<name>dfs.datanode.du.reserved</name>

<value>1073741824</value>

</property>

<property>

<name>dfs.permissions</name>

<value>false</value>

</property>

<property>

<name>dfs.replication</name>

<value>3</value>

<description>Default block replication.

The actual number of replications can be specified when the file is created.

The default is used if replication is not specified in create time.

</description>

</property>

</configuration>

[hduser@master ~]$ cp /usr/local/hadoop-2.2.0/etc/hadoop/mapred-site.xml.template /usr/local/hadoop-2.2.0/etc/hadoop/mapred-site.xml

[hduser@master ~]$ vim /usr/local/hadoop-2.2.0/etc/hadoop/mapred-site.xml

[hduser@master ~]$ cat /usr/local/hadoop-2.2.0/etc/hadoop/mapred-site.xml

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapreduce.jobhistory.address</name>

<value>master.hadoop:9020</value>

</property>

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>master.hadoop:9888</value>

</property>

</configuration>

[hduser@master ~]$ cp /usr/local/hadoop-2.2.0/etc/hadoop/yarn-site.xml /usr/local/hadoop-2.2.0/etc/hadoop/yarn-site.xml.old

[hduser@master ~]$ vim /usr/local/hadoop-2.2.0/etc/hadoop/yarn-site.xml

[hduser@master ~]$ cat /usr/local/hadoop-2.2.0/etc/hadoop/yarn-site.xml

<configuration>

<!-- Site specific YARN configuration properties -->

<property>

<name>yarn.resourcemanager.address</name>

<value>master.hadoop:9001</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>master.hadoop:9030</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address</name>

<value>master.hadoop:9088</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>master.hadoop:9025</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address</name>

<value>master.hadoop:9040</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

</configuration>
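With four site files and a dozen host:port values, it helps to read a setting back and confirm what a file really contains. The function below is only a sketch: `get_hadoop_property` is a hypothetical name, and it relies on each `<name>` and `<value>` element sitting on its own line, as in the listings above. It is not a general XML parser.

```shell
# Hypothetical helper: print the <value> that follows a given <name> in a
# Hadoop *-site.xml file. $1 is the file, $2 the property name.
# Assumes one element per line, as in the listings above.
get_hadoop_property() {
    awk -v key="$2" '
        # Remember when the wanted <name> line has been seen.
        index($0, "<name>" key "</name>") { found = 1; next }
        # The first <value> line after it holds the setting.
        found && /<value>/ {
            gsub(/.*<value>|<\/value>.*/, "")
            print
            exit
        }
    ' "$1"
}
```

For example, `get_hadoop_property /usr/local/hadoop-2.2.0/etc/hadoop/core-site.xml fs.defaultFS` should print the NameNode URI configured above. Once Hadoop is on the `PATH`, `hdfs getconf -confKey fs.defaultFS` reports the value Hadoop itself resolves.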

[hduser@master ~]$ vim /usr/local/hadoop-2.2.0/etc/hadoop/slaves

[hduser@master ~]$ cat /usr/local/hadoop-2.2.0/etc/hadoop/slaves

slaver1.hadoop

slaver2.hadoop

slaver3.hadoop

slaver4.hadoop

slaver5.hadoop
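Every name in the `slaves` file has to resolve on the master, otherwise `start-dfs.sh` cannot reach that node. A rough cross-check against `/etc/hosts` could look like the sketch below; `check_slaves_resolvable` is a made-up name, and only the hosts file is consulted, not DNS.

```shell
# Hypothetical helper: report every host in a slaves file that has no entry
# in a hosts file. $1 is the slaves file, $2 the hosts file.
# Returns non-zero if at least one host is missing.
check_slaves_resolvable() {
    missing=0
    while read -r host; do
        [ -z "$host" ] && continue          # skip blank lines
        # Look for the name as a whole field after the address column.
        if ! awk -v h="$host" '{ for (i = 2; i <= NF; i++) if ($i == h) ok = 1 }
                               END { exit !ok }' "$2"; then
            echo "not in $2: $host"
            missing=1
        fi
    done < "$1"
    return $missing
}
```

For example, `check_slaves_resolvable /usr/local/hadoop-2.2.0/etc/hadoop/slaves /etc/hosts` prints nothing and succeeds when all five slavers are listed.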

### Copy the modified hadoop-2.2.0.tar.gz to the disk of each slaver

[root@master ~]# scp /usr/local/hadoop-2.2.0.tar.gz root@10.18.11.201:/root/

root@10.18.11.201's password:

hadoop-2.2.0.tar.gz 100% 104MB 11.6MB/s 00:09

[root@master ~]# scp /usr/local/hadoop-2.2.0.tar.gz root@10.18.11.202:/root/

root@10.18.11.202's password:

hadoop-2.2.0.tar.gz 100% 104MB 11.6MB/s 00:09

[root@master ~]# scp /usr/local/hadoop-2.2.0.tar.gz root@10.18.11.203:/root/

root@10.18.11.203's password:

hadoop-2.2.0.tar.gz 100% 104MB 11.6MB/s 00:09

[root@master ~]# scp /usr/local/hadoop-2.2.0.tar.gz root@10.18.11.204:/root/

root@10.18.11.204's password:

hadoop-2.2.0.tar.gz 100% 104MB 10.4MB/s 00:10
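The log does not show how `/usr/local/hadoop-2.2.0.tar.gz` was produced. One plausible way, assuming it is simply the configured `/usr/local/hadoop-2.2.0` directory packed up again, is sketched here; `pack_dir` is a hypothetical helper, not a command from the original session.

```shell
# Hypothetical helper: pack a directory into <dir>.tar.gz next to it, so that
# extracting with -C <parent> recreates the same directory name.
# $1 is the directory to pack (/usr/local/hadoop-2.2.0 on the master).
pack_dir() {
    dir=${1%/}                      # drop a trailing slash, if any
    tar -czf "$dir.tar.gz" -C "$(dirname "$dir")" "$(basename "$dir")"
}
```

Packing relative to the parent directory matters because the slavers later extract with `-C /usr/local/` and expect a top-level `hadoop-2.2.0` directory. The `scp` commands above cover 10.18.11.201 through 10.18.11.204; the slaver1 listing later in this post shows the same file in `/root`, so it was presumably copied to 10.18.11.199 the same way.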

The master configuration is now complete.

Each slaver is configured in the same way as the master. The detailed steps follow:

######################## slaver1 #######################

### Disable IPv6

[root@slaver1 ~]# echo "install ipv6 /bin/true" >> /etc/modprobe.d/anaconda.conf

[root@slaver1 ~]# vim /etc/modprobe.d/anaconda.conf

[root@slaver1 ~]# lsmod |grep -i ipv6

nf_conntrack_ipv6 7207 2

nf_defrag_ipv6 8897 1 nf_conntrack_ipv6

nf_conntrack 65661 3 nf_conntrack_ipv4,nf_conntrack_ipv6,xt_state

ipv6 261089 35 ip6t_REJECT,nf_conntrack_ipv6,nf_defrag_ipv6

[root@slaver1 ~]# ifconfig |grep -i inet6

inet6 addr: fdd8:8f0:124b:0:201:6cff:fe45:6728/64 Scope:Global

inet6 addr: fe80::201:6cff:fe45:6728/64 Scope:Link

inet6 addr: ::1/128 Scope:Host

[root@slaver1 ~]# reboot

### Disable the firewall

[root@slaver1 ~]# chkconfig --list |grep iptables

iptables 0:off 1:off 2:on 3:on 4:on 5:on 6:off

[root@slaver1 ~]# chkconfig iptables off

[root@slaver1 ~]# /etc/init.d/iptables stop

iptables: Setting chains to policy ACCEPT: filter [ OK ]

iptables: Flushing firewall rules: [ OK ]

iptables: Unloading modules: [ OK ]

[root@slaver1 ~]# ls

anaconda-ks.cfg hadoop-2.2.0.tar.gz install.log install.log.syslog jdk-8u45-linux-i586.tar.gz

### Install the JDK

[root@slaver1 ~]# tar zxvf jdk-8u45-linux-i586.tar.gz -C /usr/local/

[root@slaver1 ~]# tail -n 4 /etc/profile

export JAVA_HOME=/usr/local/jdk1.8.0_45

export CLASSPATH=$CLASSPATH:$JAVA_HOME/lib:$JAVA_HOME/jre/lib

export HADOOP_HOME=/usr/local/hadoop-2.2.0

export PATH=$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH

[root@slaver1 ~]# source /etc/profile

[root@slaver1 ~]# java -version

java version "1.8.0_45"

Java(TM) SE Runtime Environment (build 1.8.0_45-b14)

Java HotSpot(TM) Server VM (build 25.45-b02, mixed mode)

### Create the group and user, and install openssh-clients

[root@slaver1 ~]# groupadd hadoop

[root@slaver1 ~]# useradd -g hadoop hduser

[root@slaver1 ~]# passwd hduser

Changing password for user hduser.

New password:

BAD PASSWORD: it is based on a dictionary word

Retype new password:

passwd: all authentication tokens updated successfully.

[root@slaver1 ~]# chmod u+w /etc/sudoers

[root@slaver1 ~]# vi /etc/sudoers

[root@slaver1 ~]# chmod u-w /etc/sudoers

[root@slaver1 ~]# yum install -y openssh-clients

### Log in as hduser

login as: hduser

hduser@10.18.11.199's password:

[hduser@slaver1 ~]$ ls

[hduser@slaver1 ~]$ sudo -i

We trust you have received the usual lecture from the local System

Administrator. It usually boils down to these three things:

#1) Respect the privacy of others.

#2) Think before you type.

#3) With great power comes great responsibility.

[sudo] password for hduser:

[root@slaver1 ~]# exit

logout

[hduser@slaver1 ~]$ sudo -i

[root@slaver1 ~]# exit

logout

### Create directories

[hduser@slaver1 ~]$ sudo mkdir -p /usr/local/app/hadoop

[hduser@slaver1 ~]$ sudo chown -R hduser:hadoop /usr/local/app/hadoop

[hduser@slaver1 ~]$ mkdir -p /usr/local/app/hadoop/dfs/name

[hduser@slaver1 ~]$ mkdir -p /usr/local/app/hadoop/dfs/data

[hduser@slaver1 ~]$ mkdir -p /usr/local/app/hadoop/tmp/node

[hduser@slaver1 ~]$ mkdir -p /usr/local/app/hadoop/tmp/app-logs

[hduser@slaver1 ~]$ chmod 750 -R /usr/local/app/hadoop/dfs

[hduser@slaver1 ~]$ chmod 750 -R /usr/local/app/hadoop/tmp

[hduser@slaver1 ~]$ ls -ld /usr/local/app/hadoop/tmp/app-logs

drwxr-x---. 2 hduser hadoop 4096 Sep 23 13:25 /usr/local/app/hadoop/tmp/app-logs

[hduser@slaver1 ~]$ ls -l /usr/local/app/hadoop/dfs/

total 8

drwxr-x---. 2 hduser hadoop 4096 Sep 23 13:24 data

drwxr-x---. 2 hduser hadoop 4096 Sep 23 13:24 name

[hduser@slaver1 ~]$ ls -l /usr/local/app/hadoop/tmp/

total 8

drwxr-x---. 2 hduser hadoop 4096 Sep 23 13:25 app-logs

drwxr-x---. 2 hduser hadoop 4096 Sep 23 13:25 node

### Install and configure Hadoop

[hduser@slaver1 ~]$ tar zxvf hadoop-2.2.0.tar.gz -C /usr/local/ <--- the configuration files in this package have already been modified, so no further configuration is needed

[hduser@slaver1 ~]$ sudo chown -R hduser:hadoop /usr/local/hadoop-2.2.0

[sudo] password for hduser:

Once the steps above are finished, go to master.hadoop and format HDFS.

######## Format HDFS on the master

[hduser@master ~]$ hdfs namenode -format

16/01/19 16:43:15 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = master.hadoop/10.18.11.198

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 2.2.0

STARTUP_MSG: classpath = /usr/local/hadoop-2.2.0/etc/hadoop:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/commons-compress-1.4.1.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/stax-api-1.0.1.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/log4j-1.2.17.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/jsp-api-2.1.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/commons-el-1.0.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/mockito-all-1.8.5.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/jackson-core-asl-1.8.8.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/commons-beanutils-core-1.8.0.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/commons-httpclient-3.1.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/servlet-api-2.5.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/jersey-json-1.9.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/activation-1.1.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/commons-logging-1.1.1.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/commons-net-3.1.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/jetty-6.1.26.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/commons-digester-1.8.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/jackson-mapper-asl-1.8.8.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/asm-3.2.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/jets3t-0.6.1.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/jetty-util-6.1.26.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/jettison-1.1.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/jersey-server-1.9.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/commons-beanutils-1.7.0.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/xz-1.0.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/paranamer-2.3.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/commons-collections-3.2.1.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/jsch-0.1.42.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/commons-cli-1.2.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/hadoop-annotations-2.2.0.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/hadoop-auth-2.2.0.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/jackson-jaxrs-1.8.8.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/jersey-core-1.9.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/commons-codec-1.4.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/jaxb-impl-2.2.3-1.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/junit-4.8.2.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/commons-lang-2.5.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/commons-math-2.1.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/jaxb-api-2.2.2.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/avro-1.7.4.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/commons-io-2.1.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/jasper-runtime-5.5.23.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/xmlenc-0.52.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/zookeeper-3.4.5.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/netty-3.6.2.Final.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/jackson-xc-1.8.8.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/snappy-java-1.0.4.1.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/jsr305-1.3.9.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/jasper-compiler-5.5.23.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/commons-configuration-1.6.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/guava-11.0.2.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/slf4j-api-1.7.5.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/hadoop-nfs-2.2.0.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/hadoop-common-2.2.0-tests.jar:/usr/local/hadoop-2.2.0/share/hadoop/common/hadoop-common-2.2.0.jar:/usr/local/hadoop-2.2.0/share/hadoop/hdfs:/usr/local/hadoop-2.2.0/share/hadoop/hdfs/lib/log4j-1.2.17.jar:/usr/local/hadoop-2.2.0/share/hadoop/hdfs/lib/jsp-api-2.1.jar:/usr/local/hadoop-2.2.0/share/hadoop/hdfs/lib/commons-el-1.0.jar:/usr/local/hadoop-2.2.0/share/hadoop/hdfs/lib/jackson-core-asl-1.8.8.jar:/usr/local/hadoop-2.2.0/share/hadoop/hdfs/lib/servlet-api-2.5.jar:/usr/local/hadoop-2.2.0/share/hadoop/hdfs/lib/commons-logging-1.1.1.jar:/usr/local/hadoop-2.2.0/share/hadoop/hdfs/lib/jetty-6.1.26.jar:/usr/local/hadoop-2.2.0/share/hadoop/hdfs/lib/jackson-mapper-asl-1.8.8.jar:/usr/local/hadoop-2.2.0/share/hadoop/hdfs/lib/asm-3.2.jar:/usr/local/hadoop-2.2.0/share/hadoop/hdfs/lib/jetty-util-6.1.26.jar:/usr/local/hadoop-2.2.0/share/hadoop/hdfs/lib/jersey-server-1.9.jar:/usr/local/hadoop-2.2.0/share/hadoop/hdfs/lib/commons-cli-1.2.jar:/usr/local/hadoop-2.2.0/share/hadoop/hdfs/lib/commons-daemon-1.0.13.jar:/usr/local/hadoop-2.2.0/share/hadoop/hdfs/lib/jersey-core-1.9.jar:/usr/local/hadoop-2.2.0/share/hadoop/hdfs/lib/commons-codec-1.4.jar:/usr/local/hadoop-2.2.0/share/hadoop/hdfs/lib/commons-lang-2.5.jar:/usr/local/hadoop-2.2.0/share/hadoop/hdfs/lib/commons-io-2.1.jar:/usr/local/hadoop-2.2.0/share/hadoop/hdfs/lib/jasper-runtime-5.5.23.jar:/usr/local/hadoop-2.2.0/share/hadoop/hdfs/lib/xmlenc-0.52.jar:/usr/local/hadoop-2.2.0/share/hadoop/hdfs/lib/netty-3.6.2.Final.jar:/usr/local/hadoop-2.2.0/share/hadoop/hdfs/lib/jsr305-1.3.9.jar:/usr/local/hadoop-2.2.0/share/hadoop/hdfs/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop-2.2.0/share/hadoop/hdfs/lib/guava-11.0.2.jar:/usr/local/hadoop-2.2.0/share/hadoop/hdfs/hadoop-hdfs-nfs-2.2.0.jar:/usr/local/hadoop-2.2.0/share/hadoop/hdfs/hadoop-hdfs-2.2.0.jar:/usr/local/hadoop-2.2.0/share/hadoop/hdfs/hadoop-hdfs-2.2.0-tests.jar:/usr/local/hadoop-2.2.0/share/hadoop/yarn/lib/commons-compress-1.4.1.jar:/usr/local/hadoop-2.2.0/share/hadoop/yarn/lib/junit-4.10.jar:/usr/local/hadoop-2.2.0/share/hadoop/yarn/lib/guice-3.0.jar:/usr/local/hadoop-2.2.0/share/hadoop/yarn/lib/hamcrest-core-1.1.jar:/usr/local/hadoop-2.2.0/share/hadoop/yarn/lib/log4j-1.2.17.jar:/usr/local/hadoop-2.2.0/share/hadoop/yarn/lib/jackson-core-asl-1.8.8.jar:/usr/local/hadoop-2.2.0/share/hadoop/yarn/lib/guice-servlet-3.0.jar:/usr/local/hadoop-2.2.0/share/hadoop/yarn/lib/jackson-mapper-asl-1.8.8.jar:/usr/local/hadoop-2.2.0/share/hadoop/yarn/lib/asm-3.2.jar:/usr/local/hadoop-2.2.0/share/hadoop/yarn/lib/jersey-server-1.9.jar:/usr/local/hadoop-2.2.0/share/hadoop/yarn/lib/xz-1.0.jar:/usr/local/hadoop-2.2.0/share/hadoop/yarn/lib/paranamer-2.3.jar:/usr/local/hadoop-2.2.0/share/hadoop/yarn/lib/javax.inject-1.jar:/usr/local/hadoop-2.2.0/share/hadoop/yarn/lib/hadoop-annotations-2.2.0.jar:/usr/local/hadoop-2.2.0/share/hadoop/yarn/lib/jersey-guice-1.9.jar:/usr/local/hadoop-2.2.0/share/hadoop/yarn/lib/jersey-core-1.9.jar:/usr/local/hadoop-2.2.0/share/hadoop/yarn/lib/avro-1.7.4.jar:/usr/local/hadoop-2.2.0/share/hadoop/yarn/lib/commons-io-2.1.jar:/usr/local/hadoop-2.2.0/share/hadoop/yarn/lib/aopalliance-1.0.jar:/usr/local/hadoop-2.2.0/share/hadoop/yarn/lib/netty-3.6.2.Final.jar:/usr/local/hadoop-2.2.0/share/hadoop/yarn/lib/snappy-java-1.0.4.1.jar:/usr/local/hadoop-2.2.0/share/hadoop/yarn/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop-2.2.0/share/hadoop/yarn/hadoop-yarn-applications-distributedshell-2.2.0.jar:/usr/local/hadoop-2.2.0/share/hadoop/yarn/hadoop-yarn-server-nodemanager-2.2.0.jar:/usr/local/hadoop-2.2.0/share/hadoop/yarn/hadoop-yarn-server-common-2.2.0.jar:/usr/local/hadoop-2.2.0/share/hadoop/yarn/hadoop-yarn-common-2.2.0.jar:/usr/local/hadoop-2.2.0/share/hadoop/yarn/hadoop-yarn-api-2.2.0.jar:/usr/local/hadoop-2.2.0/share/hadoop/yarn/hadoop-yarn-server-web-proxy-2.2.0.jar:/usr/local/hadoop-2.2.0/share/hadoop/yarn/hadoop-yarn-server-tests-2.2.0.jar:/usr/local/hadoop-2.2.0/share/hadoop/yarn/hadoop-yarn-client-2.2.0.jar:/usr/local/hadoop-2.2.0/share/hadoop/yarn/hadoop-yarn-applications-unmanaged-am-launcher-2.2.0.jar:/usr/local/hadoop-2.2.0/share/hadoop/yarn/hadoop-yarn-server-resourcemanager-2.2.0.jar:/usr/local/hadoop-2.2.0/share/hadoop/yarn/hadoop-yarn-site-2.2.0.jar:/usr/local/hadoop-2.2.0/share/hadoop/mapreduce/lib/commons-compress-1.4.1.jar:/usr/local/hadoop-2.2.0/share/hadoop/mapreduce/lib/junit-4.10.jar:/usr/local/hadoop-2.2.0/share/hadoop/mapreduce/lib/guice-3.0.jar:/usr/local/hadoop-2.2.0/share/hadoop/mapreduce/lib/hamcrest-core-1.1.jar:/usr/local/hadoop-2.2.0/share/hadoop/mapreduce/lib/log4j-1.2.17.jar:/usr/local/hadoop-2.2.0/share/hadoop/mapreduce/lib/jackson-core-asl-1.8.8.jar:/usr/local/hadoop-2.2.0/share/hadoop/mapreduce/lib/guice-servlet-3.0.jar:/usr/local/hadoop-2.2.0/share/hadoop/mapreduce/lib/jackson-mapper-asl-1.8.8.jar:/usr/local/hadoop-2.2.0/share/hadoop/mapreduce/lib/asm-3.2.jar:/usr/local/hadoop-2.2.0/share/hadoop/mapreduce/lib/jersey-server-1.9.jar:/usr/local/hadoop-2.2.0/share/hadoop/mapreduce/lib/xz-1.0.jar:/usr/local/hadoop-2.2.0/share/hadoop/mapreduce/lib/paranamer-2.3.jar:/usr/local/hadoop-2.2.0/share/hadoop/mapreduce/lib/javax.inject-1.jar:/usr/local/hadoop-2.2.0/share/hadoop/mapreduce/lib/hadoop-annotations-2.2.0.jar:/usr/local/hadoop-2.2.0/share/hadoop/mapreduce/lib/jersey-guice-1.9.jar:/usr/local/hadoop-2.2.0/share/hadoop/mapreduce/lib/jersey-core-1.9.jar:/usr/local/hadoop-2.2.0/share/hadoop/mapreduce/lib/avro-1.7.4.jar:/usr/local/hadoop-2.2.0/share/hadoop/mapreduce/lib/commons-io-2.1.jar:/usr/local/hadoop-2.2.0/share/hadoop/mapreduce/lib/aopalliance-1.0.jar:/usr/local/hadoop-2.2.0/share/hadoop/mapreduce/lib/netty-3.6.2.Final.jar:/usr/local/hadoop-2.2.0/share/hadoop/mapreduce/lib/snappy-java-1.0.4.1.jar:/usr/local/hadoop-2.2.0/share/hadoop/mapreduce/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop-2.2.0/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.2.0.jar:/usr/local/hadoop-2.2.0/share/hadoop/mapreduce/hadoop-mapreduce-client-app-2.2.0.jar:/usr/local/hadoop-2.2.0/share/hadoop/mapreduce/hadoop-mapreduce-client-shuffle-2.2.0.jar:/usr/local/hadoop-2.2.0/share/hadoop/mapreduce/hadoop-mapreduce-client-core-2.2.0.jar:/usr/local/hadoop-2.2.0/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.2.0-tests.jar:/usr/local/hadoop-2.2.0/share/hadoop/mapreduce/hadoop-mapreduce-client-common-2.2.0.jar:/usr/local/hadoop-2.2.0/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-2.2.0.jar:/usr/local/hadoop-2.2.0/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-plugins-2.2.0.jar:/usr/local/hadoop-2.2.0/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.2.0.jar:/usr/local/hadoop-2.2.0/contrib/capacity-scheduler/*.jar

STARTUP_MSG: build = https://svn.apache.org/repos/asf/hadoop/common -r 1529768; compiled by 'hortonmu' on 2013-10-07T06:28Z

STARTUP_MSG: java = 1.8.0_45

************************************************************/

16/01/19 16:43:15 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]

16/01/19 16:43:16 INFO namenode.FSNamesystem: HA Enabled: false

16/01/19 16:43:16 INFO namenode.FSNamesystem: Append Enabled: true

16/01/19 16:43:17 INFO util.GSet: Computing capacity for map INodeMap

16/01/19 16:43:17 INFO util.GSet: VM type = 32-bit

16/01/19 16:43:17 INFO util.GSet: 1.0% max memory = 96.7 MB

16/01/19 16:43:17 INFO util.GSet: capacity = 2^18 = 262144 entries

16/01/19 16:43:17 INFO namenode.NameNode: Caching file names occuring more than 10 times

(output omitted)

16/01/19 16:43:17 INFO util.GSet: capacity = 2^13 = 8192 entries

16/01/19 16:43:17 INFO common.Storage: Storage directory /usr/local/app/hadoop/dfs/name has been successfully formatted.

16/01/19 16:43:17 INFO util.ExitUtil: Exiting with status 0

16/01/19 16:43:17 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at master.hadoop/10.18.11.198

************************************************************/

[hduser@master ~]$ start-dfs.sh

Starting namenodes on [master.hadoop]

master.hadoop: namenode running as process 3395. Stop it first.

slaver1.hadoop: starting datanode, logging to /usr/local/hadoop-2.2.0/logs/hadoop-hduser-datanode-slaver1.hadoop.out

slaver5.hadoop: starting datanode, logging to /usr/local/hadoop-2.2.0/logs/hadoop-hduser-datanode-slaver5.hadoop.out

slaver3.hadoop: starting datanode, logging to /usr/local/hadoop-2.2.0/logs/hadoop-hduser-datanode-slaver3.hadoop.out

slaver4.hadoop: starting datanode, logging to /usr/local/hadoop-2.2.0/logs/hadoop-hduser-datanode-slaver4.hadoop.out

slaver2.hadoop: starting datanode, logging to /usr/local/hadoop-2.2.0/logs/hadoop-hduser-datanode-slaver2.hadoop.out

Starting secondary namenodes [master.hadoop]

master.hadoop: starting secondarynamenode, logging to /usr/local/hadoop-2.2.0/logs/hadoop-hduser-secondarynamenode-master.hadoop.out

[hduser@master ~]$ jps

3395 NameNode

3863 SecondaryNameNode

3960 Jps

[hduser@slaver2 ~]$ jps

2023 DataNode

2092 Jps

[hduser@slaver3 ~]$ jps

1841 Jps

1774 DataNode

[hduser@master ~]$ stop-all.sh

This script is Deprecated. Instead use stop-dfs.sh and stop-yarn.sh

Stopping namenodes on [master.hadoop]

master.hadoop: stopping namenode

slaver3.hadoop: stopping datanode

slaver4.hadoop: stopping datanode

slaver5.hadoop: stopping datanode

slaver1.hadoop: stopping datanode

slaver2.hadoop: stopping datanode

Stopping secondary namenodes [master.hadoop]

master.hadoop: stopping secondarynamenode

stopping yarn daemons

no resourcemanager to stop

slaver1.hadoop: no nodemanager to stop

slaver5.hadoop: no nodemanager to stop

slaver4.hadoop: no nodemanager to stop

slaver3.hadoop: no nodemanager to stop

slaver2.hadoop: no nodemanager to stop

no proxyserver to stop

[hduser@master ~]$ start-all.sh

This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh

Starting namenodes on [master.hadoop]

master.hadoop: starting namenode, logging to /usr/local/hadoop-2.2.0/logs/hadoop-hduser-namenode-master.hadoop.out

slaver5.hadoop: starting datanode, logging to /usr/local/hadoop-2.2.0/logs/hadoop-hduser-datanode-slaver5.hadoop.out

slaver3.hadoop: starting datanode, logging to /usr/local/hadoop-2.2.0/logs/hadoop-hduser-datanode-slaver3.hadoop.out

slaver4.hadoop: starting datanode, logging to /usr/local/hadoop-2.2.0/logs/hadoop-hduser-datanode-slaver4.hadoop.out

slaver1.hadoop: starting datanode, logging to /usr/local/hadoop-2.2.0/logs/hadoop-hduser-datanode-slaver1.hadoop.out

slaver2.hadoop: starting datanode, logging to /usr/local/hadoop-2.2.0/logs/hadoop-hduser-datanode-slaver2.hadoop.out

Starting secondary namenodes [master.hadoop]

master.hadoop: starting secondarynamenode, logging to /usr/local/hadoop-2.2.0/logs/hadoop-hduser-secondarynamenode-master.hadoop.out

starting yarn daemons

starting resourcemanager, logging to /usr/local/hadoop-2.2.0/logs/yarn-hduser-resourcemanager-master.hadoop.out

slaver3.hadoop: starting nodemanager, logging to /usr/local/hadoop-2.2.0/logs/yarn-hduser-nodemanager-slaver3.hadoop.out

slaver4.hadoop: starting nodemanager, logging to /usr/local/hadoop-2.2.0/logs/yarn-hduser-nodemanager-slaver4.hadoop.out

slaver5.hadoop: starting nodemanager, logging to /usr/local/hadoop-2.2.0/logs/yarn-hduser-nodemanager-slaver5.hadoop.out

slaver1.hadoop: starting nodemanager, logging to /usr/local/hadoop-2.2.0/logs/yarn-hduser-nodemanager-slaver1.hadoop.out

slaver2.hadoop: starting nodemanager, logging to /usr/local/hadoop-2.2.0/logs/yarn-hduser-nodemanager-slaver2.hadoop.out

At this point, slaver1 through slaver5 have all started successfully.

To view the cluster from a Windows machine, make the following change.

########################

Edit the hosts file on the Windows system:

C:\Windows\System32\drivers\etc\hosts

Append the following lines at the end:

10.18.11.198 master.hadoop

10.18.11.199 slaver1.hadoop

10.18.11.201 slaver2.hadoop

10.18.11.202 slaver3.hadoop

10.18.11.203 slaver4.hadoop

10.18.11.204 slaver5.hadoop

View the Hadoop web consoles:

http://master.hadoop:50070/

http://master.hadoop:50060/

http://master.hadoop:9088/cluster

Hadoop is now up and running.

Next, deploy ZooKeeper on top of it.

[hduser@master ~]$ sudo tar zxvf zookeeper-3.4.5.tar.gz -C /usr/local/

[sudo] password for hduser:

[hduser@master ~]$ sudo vim /etc/profile

# set jdk and hadoop environment

export JAVA_HOME=/usr/local/jdk1.8.0_45

export CLASSPATH=$CLASSPATH:$JAVA_HOME/lib:$JAVA_HOME/jre/lib

export HADOOP_HOME=/usr/local/hadoop-2.2.0

# set zookeeper environment

export ZOOKEEPER_HOME=/usr/local/zookeeper-3.4.5

export CLASSPATH=$CLASSPATH:$ZOOKEEPER_HOME/lib

export PATH=$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$ZOOKEEPER_HOME/bin:$PATH

[hduser@master ~]$ source /etc/profile

[hduser@master ~]$ echo $ZOOKEEPER_HOME

/usr/local/zookeeper-3.4.5

[hduser@master ~]$ ls /usr/local/app/hadoop/ -l

total 8

drwxr-x---. 4 hduser hadoop 4096 Jan 19 11:53 dfs

drwxr-x---. 5 hduser hadoop 4096 Jan 19 17:07 tmp

[hduser@master ~]$ mkdir /usr/local/app/hadoop/zookeeper

[hduser@master ~]$ cp /usr/local/zookeeper-3.4.5/conf/zoo_sample.cfg /usr/local/zookeeper-3.4.5/conf/zoo.cfg

[hduser@master ~]$ cat /usr/local/zookeeper-3.4.5/conf/zoo.cfg |grep -v "#"

tickTime=2000

initLimit=10

syncLimit=5

dataDir=/usr/local/app/hadoop/zookeeper

clientPort=2181

server.0=10.18.11.199:2888:3888

server.1=10.18.11.201:2888:3888

server.2=10.18.11.202:2888:3888

server.3=10.18.11.203:2888:3888

server.4=10.18.11.204:2888:3888
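
In each `server.N=host:2888:3888` entry, the first port is used by followers to connect to the leader and the second is used for leader election. The lines can also be generated from the address list instead of typed by hand. The sketch below is only an illustration: the function name `zk_server_lines` is made up here, and ids start at 0 only because this walkthrough numbers its servers 0 to 4 (the ZooKeeper administrator's guide suggests ids between 1 and 255).

```shell
#!/usr/bin/env bash
# Hypothetical helper: print one "server.<id>=<ip>:2888:3888" line per address.
# Ids start at 0 to match the numbering used in this walkthrough.
zk_server_lines() {
  local id=0 ip
  for ip in "$@"; do
    printf 'server.%d=%s:2888:3888\n' "$id" "$ip"
    id=$((id + 1))
  done
}

# Example: print the five entries, then paste or append them to zoo.cfg.
# zk_server_lines 10.18.11.199 10.18.11.201 10.18.11.202 10.18.11.203 10.18.11.204
```

Whatever numbering is used, the id in `server.N` has to match the content of the `myid` file on that host.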

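The configuration on slaver1 through slaver5 follows. The key items are the environment variables on each slave node and the myid value of each ZooKeeper server.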

[hduser@slaver1 ~]$ sudo vi /etc/profile

[sudo] password for hduser:

[hduser@slaver1 ~]$ tail -n 10 /etc/profile

# set jdk and hadoop environment

export JAVA_HOME=/usr/local/jdk1.8.0_45

export CLASSPATH=$CLASSPATH:$JAVA_HOME/lib:$JAVA_HOME/jre/lib

export HADOOP_HOME=/usr/local/hadoop-2.2.0

# set zookeeper environment

export ZOOKEEPER_HOME=/usr/local/zookeeper-3.4.5

export CLASSPATH=$CLASSPATH:$ZOOKEEPER_HOME/lib

export PATH=$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$ZOOKEEPER_HOME/bin:$PATH

[hduser@slaver1 ~]$ sudo chown -R hduser:hadoop /usr/local/zookeeper-3.4.5

[sudo] password for hduser:

[hduser@slaver1 ~]$ mkdir /usr/local/app/hadoop/zookeeper

[hduser@slaver1 ~]$ ls -ld /usr/local/app/hadoop/zookeeper

drwxr-xr-x. 2 hduser hadoop 4096 Sep 23 16:19 /usr/local/app/hadoop/zookeeper

[hduser@slaver1 ~]$ vi /usr/local/app/hadoop/zookeeper/myid

[hduser@slaver1 ~]$ cat /usr/local/app/hadoop/zookeeper/myid

0

[hduser@slaver2 ~]$ sudo vi /etc/profile

[sudo] password for hduser:

[hduser@slaver2 ~]$ tail -n 10 /etc/profile

# set jdk and hadoop environment

export JAVA_HOME=/usr/local/jdk1.8.0_45

export CLASSPATH=$CLASSPATH:$JAVA_HOME/lib:$JAVA_HOME/jre/lib

export HADOOP_HOME=/usr/local/hadoop-2.2.0

# set zookeeper environment

export ZOOKEEPER_HOME=/usr/local/zookeeper-3.4.5

export CLASSPATH=$CLASSPATH:$ZOOKEEPER_HOME/lib

export PATH=$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$ZOOKEEPER_HOME/bin:$PATH

[hduser@slaver2 ~]$ mkdir /usr/local/app/hadoop/zookeeper

[hduser@slaver2 ~]$ vi /usr/local/app/hadoop/zookeeper/myid

[hduser@slaver2 ~]$ cat /usr/local/app/hadoop/zookeeper/myid

1

[hduser@slaver3 ~]$ sudo vi /etc/profile

[sudo] password for hduser:

[hduser@slaver3 ~]$ tail -n 10 /etc/profile

# set jdk and hadoop environment

export JAVA_HOME=/usr/local/jdk1.8.0_45

export CLASSPATH=$CLASSPATH:$JAVA_HOME/lib:$JAVA_HOME/jre/lib

export HADOOP_HOME=/usr/local/hadoop-2.2.0

# set zookeeper environment

export ZOOKEEPER_HOME=/usr/local/zookeeper-3.4.5

export CLASSPATH=$CLASSPATH:$ZOOKEEPER_HOME/lib

export PATH=$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$ZOOKEEPER_HOME/bin:$PATH

[hduser@slaver3 ~]$ sudo chown -R hduser:hadoop /usr/local/zookeeper-3.4.5

[sudo] password for hduser:

[hduser@slaver3 ~]$ mkdir /usr/local/app/hadoop/zookeeper

[hduser@slaver3 ~]$ ls -ld /usr/local/app/hadoop/zookeeper

drwxr-xr-x. 2 hduser hadoop 4096 Sep 24 00:26 /usr/local/app/hadoop/zookeeper

[hduser@slaver3 ~]$ echo '2' >/usr/local/app/hadoop/zookeeper/myid

[hduser@slaver3 ~]$ cat /usr/local/app/hadoop/zookeeper/myid

2

[hduser@slaver4 ~]$ sudo vi /etc/profile

[sudo] password for hduser:

[hduser@slaver4 ~]$ tail -n 10 /etc/profile

# set jdk and hadoop environment

export JAVA_HOME=/usr/local/jdk1.8.0_45

export CLASSPATH=$CLASSPATH:$JAVA_HOME/lib:$JAVA_HOME/jre/lib

export HADOOP_HOME=/usr/local/hadoop-2.2.0

# set zookeeper environment

export ZOOKEEPER_HOME=/usr/local/zookeeper-3.4.5

export CLASSPATH=$CLASSPATH:$ZOOKEEPER_HOME/lib

export PATH=$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$ZOOKEEPER_HOME/bin:$PATH

[hduser@slaver4 ~]$ sudo chown -R hduser:hadoop /usr/local/zookeeper-3.4.5

[sudo] password for hduser:

[hduser@slaver4 ~]$ mkdir /usr/local/app/hadoop/zookeeper

[hduser@slaver4 ~]$ echo '3' >/usr/local/app/hadoop/zookeeper/myid

[hduser@slaver4 ~]$ cat /usr/local/app/hadoop/zookeeper/myid

3

[hduser@master ~]$ ssh slaver5.hadoop

Last login: Fri Sep 23 22:56:41 2016 from master.hadoop

[hduser@slaver5 ~]$ sudo vi /etc/profile

[sudo] password for hduser:

[hduser@slaver5 ~]$ tail -n 10 /etc/profile

# set jdk and hadoop environment

export JAVA_HOME=/usr/local/jdk1.8.0_45

export CLASSPATH=$CLASSPATH:$JAVA_HOME/lib:$JAVA_HOME/jre/lib

export HADOOP_HOME=/usr/local/hadoop-2.2.0

# set zookeeper environment

export ZOOKEEPER_HOME=/usr/local/zookeeper-3.4.5

export CLASSPATH=$CLASSPATH:$ZOOKEEPER_HOME/lib

export PATH=$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$ZOOKEEPER_HOME/bin:$PATH

[hduser@slaver5 ~]$ sudo chown -R hduser:hadoop /usr/local/zookeeper-3.4.5

[sudo] password for hduser:

[hduser@slaver5 ~]$ mkdir /usr/local/app/hadoop/zookeeper

[hduser@slaver5 ~]$ echo '4' >/usr/local/app/hadoop/zookeeper/myid
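
Because the myid values above follow the host names (slaver1 gets 0, slaver5 gets 4), the value can be derived from the host name rather than typed on each node. This is a minimal sketch that assumes the `slaverN.hadoop` naming used in this cluster; `myid_for_host` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: map slaverN.hadoop to the myid used here (N - 1).
myid_for_host() {
  local host=$1 n
  n=${host#slaver}   # drop the leading "slaver"
  n=${n%%.*}         # drop the domain suffix
  case $n in
    ''|*[!0-9]*) echo "unexpected host name: $host" >&2; return 1 ;;
  esac
  echo $((10#$n - 1))
}

# Example, run on each slave:
# myid_for_host "$(hostname)" > /usr/local/app/hadoop/zookeeper/myid
```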

ZooKeeper can now be started.

#### Start in the background (log output is not shown on the terminal)

[hduser@slaver1 ~]$ zkServer.sh start

[hduser@slaver2 ~]$ zkServer.sh start

[hduser@slaver3 ~]$ zkServer.sh start

[hduser@slaver4 ~]$ zkServer.sh start

[hduser@slaver5 ~]$ zkServer.sh start

#### Start in the foreground (log output is shown on the terminal)

[hduser@slaver1 ~]$ zkServer.sh start-foreground

[hduser@slaver2 ~]$ zkServer.sh start-foreground

[hduser@slaver3 ~]$ zkServer.sh start-foreground

[hduser@slaver4 ~]$ zkServer.sh start-foreground

[hduser@slaver5 ~]$ zkServer.sh start-foreground
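
The same command is typed on five hosts above, so it can be looped from the master. The sketch below assumes passwordless SSH from the master to every slave (the later `ssh` transcripts log in without a password prompt) and sources `/etc/profile` explicitly, because a non-interactive SSH session may not load it. `zk_all` and `DRY_RUN` are names invented for this example.

```shell
#!/usr/bin/env bash
ZK_HOSTS="slaver1.hadoop slaver2.hadoop slaver3.hadoop slaver4.hadoop slaver5.hadoop"

# Hypothetical helper: run a zkServer.sh action (start, stop, status) on every
# ZooKeeper host. With DRY_RUN=1 the ssh commands are printed, not executed.
zk_all() {
  local action=$1 host
  for host in $ZK_HOSTS; do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo "ssh $host 'source /etc/profile && zkServer.sh $action'"
    else
      ssh "$host" "source /etc/profile && zkServer.sh $action"
    fi
  done
}

# Example:
# DRY_RUN=1 zk_all start   # preview the commands
# zk_all start             # actually start the ensemble
```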

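##### Problem ##########

Error reported at startup:

Error contacting service. It is probably not running.

One common cause is that zkServer.sh status was run before a majority of the ensemble had started.

#### Check the ZooKeeper running status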

login as: hduser

hduser@10.18.11.198's password:

Last login: Tue Jan 19 18:41:13 2016 from 10.17.74.212

[hduser@master ~]$ ssh slaver2.hadoop

Last login: Sat Sep 24 00:42:57 2016 from master.hadoop

[hduser@slaver2 ~]$ zkServer.sh status

JMX enabled by default

Using config: /usr/local/zookeeper-3.4.5/bin/../conf/zoo.cfg

Mode: follower

[hduser@slaver2 ~]$ exit

logout

Connection to slaver2.hadoop closed.

[hduser@master ~]$ ssh slaver3.hadoop

Last login: Sat Sep 24 00:43:33 2016 from master.hadoop

[hduser@slaver3 ~]$ zkServer.sh status

JMX enabled by default

Using config: /usr/local/zookeeper-3.4.5/bin/../conf/zoo.cfg

Mode: leader

[hduser@slaver3 ~]$ exit

logout

Connection to slaver3.hadoop closed.

[hduser@master ~]$ ssh slaver4.hadoop

Last login: Sat Sep 24 00:42:07 2016 from master.hadoop

[hduser@slaver4 ~]$ zkServer.sh status

JMX enabled by default

Using config: /usr/local/zookeeper-3.4.5/bin/../conf/zoo.cfg

Mode: follower

[hduser@slaver4 ~]$ exit

logout

Connection to slaver4.hadoop closed.

[hduser@master ~]$ ssh slaver5.hadoop

Last login: Sat Sep 24 00:31:02 2016 from master.hadoop

[hduser@slaver5 ~]$ zkServer.sh status

JMX enabled by default

Using config: /usr/local/zookeeper-3.4.5/bin/../conf/zoo.cfg

Mode: follower

[hduser@slaver5 ~]$ exit

logout

Connection to slaver5.hadoop closed.

[hduser@master ~]$ ssh slaver1.hadoop

Last login: Fri Sep 23 16:39:16 2016 from master.hadoop

[hduser@slaver1 ~]$ zkServer.sh status

JMX enabled by default

Using config: /usr/local/zookeeper-3.4.5/bin/../conf/zoo.cfg

Mode: follower

### After stopping slaver3.hadoop, check the running status again

[hduser@master ~]$ ssh slaver1.hadoop

Last login: Fri Sep 23 16:39:16 2016 from master.hadoop

[hduser@slaver1 ~]$ zkServer.sh status

JMX enabled by default

Using config: /usr/local/zookeeper-3.4.5/bin/../conf/zoo.cfg

Mode: follower

[hduser@slaver1 ~]$ zkServer.sh status

JMX enabled by default

Using config: /usr/local/zookeeper-3.4.5/bin/../conf/zoo.cfg

Mode: follower

[hduser@slaver1 ~]$ exit

logout

Connection to slaver1.hadoop closed.

[hduser@master ~]$ ssh slaver5.hadoop

Last login: Sat Sep 24 00:32:16 2016 from master.hadoop

[hduser@slaver5 ~]$ zkServer.sh status

JMX enabled by default

Using config: /usr/local/zookeeper-3.4.5/bin/../conf/zoo.cfg

Mode: leader
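
In the transcripts above, the leader role moved from slaver3 to slaver5 once slaver3 was stopped. An ensemble keeps working as long as a majority of its servers is up, and the arithmetic for this five-node setup can be sketched as follows. The function names are made up for this example.

```shell
#!/usr/bin/env bash
# Smallest number of servers that forms a majority of an n-server ensemble.
zk_majority() { echo $(( $1 / 2 + 1 )); }

# Number of server failures an n-server ensemble can survive.
zk_tolerated_failures() { echo $(( $1 - ($1 / 2 + 1) )); }

# Read collected "zkServer.sh status" output on stdin and count the leaders.
# A healthy ensemble should report exactly one.
count_leaders() { grep -c '^Mode: leader$'; }

# Example:
# zk_majority 5             # prints 3
# zk_tolerated_failures 5   # prints 2
```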

Next, deploy the HBase cluster.

[hduser@master ~]$ ls

hadoop-2.2.0-32bit.tar.gz hbase-0.98.8-hadoop2-bin.tar.gz jdk-8u45-linux-i586.tar.gz zookeeper-3.4.5.tar.gz

[hduser@master ~]$ tar zxvf hbase-0.98.8-hadoop2-bin.tar.gz

[hduser@master ~]$ sudo mv hbase-0.98.8-hadoop2 /usr/local/

[sudo] password for hduser:

[hduser@master ~]$ sudo chown -R hduser:hadoop /usr/local/hbase-0.98.8-hadoop2/

[hduser@master ~]$ vim /usr/local/hbase-0.98.8-hadoop2/conf/hbase-env.sh

[hduser@master ~]$ cat /usr/local/hbase-0.98.8-hadoop2/conf/hbase-env.sh | grep -v "#"|grep -v "^$"

export JAVA_HOME=/usr/local/jdk1.8.0_45

export HBASE_CLASSPATH=/usr/local/hadoop-2.2.0/etc/hadoop

export HBASE_OPTS="-XX:+UseConcMarkSweepGC"

export HBASE_PID_DIR=/usr/local/app/hadoop/hbase/tmp

export HBASE_MANAGES_ZK=false

[hduser@master ~]$ mkdir -p /usr/local/app/hadoop/hbase/tmp

[hduser@master ~]$ ls -ld /usr/local/app/hadoop/hbase

drwxr-xr-x. 3 hduser hadoop 4096 Jan 19 19:10 /usr/local/app/hadoop/hbase

[hduser@master ~]$ ls -l /usr/local/app/hadoop/hbase

total 4

drwxr-xr-x. 2 hduser hadoop 4096 Jan 19 19:10 tmp

[hduser@master ~]$ hdfs dfs -mkdir /hbase

[hduser@master ~]$ vim /usr/local/hbase-0.98.8-hadoop2/conf/hbase-site.xml

<configuration>

<property>

<name>hbase.master</name>

<value>master.hadoop:60000</value>

</property>

<property>

<name>hbase.master.maxclockskew</name>

<value>180000</value>

</property>

<property>

<name>hbase.rootdir</name>

<value>hdfs://master.hadoop:9000/hbase</value>

</property>

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<property>

<name>hbase.zookeeper.quorum</name>

<value>slaver1.hadoop,slaver2.hadoop,slaver3.hadoop,slaver4.hadoop,slaver5.hadoop</value>

</property>

<property>

<name>hbase.zookeeper.property.dataDir</name>

<value>/usr/local/app/hadoop/zookeeper</value>

</property>

<property>

<name>hbase.tmp.dir</name>

<value>/usr/local/app/hadoop/hbase/tmp</value>

</property>

<property>

<name>hbase.zookeeper.property.clientPort</name>

<value>2181</value>

</property>

<property>

<name>hbase.defaults.for.version</name>

<value>0.98.8-hadoop2</value>

</property>

</configuration>

[hduser@master ~]$ vim /usr/local/hbase-0.98.8-hadoop2/conf/regionservers

[hduser@master ~]$ cat /usr/local/hbase-0.98.8-hadoop2/conf/regionservers

slaver1.hadoop

slaver2.hadoop

slaver3.hadoop

slaver4.hadoop

slaver5.hadoop

### Configure the HBase environment variables

[hduser@master ~]$ sudo vim /etc/profile

[sudo] password for hduser:

[hduser@master ~]$ cat /etc/profile |tail -n 12

# set jdk and hadoop environment

export JAVA_HOME=/usr/local/jdk1.8.0_45

export CLASSPATH=$CLASSPATH:$JAVA_HOME/lib:$JAVA_HOME/jre/lib

export HADOOP_HOME=/usr/local/hadoop-2.2.0

# set zookeeper environment

export ZOOKEEPER_HOME=/usr/local/zookeeper-3.4.5

export CLASSPATH=$CLASSPATH:$ZOOKEEPER_HOME/lib

# set hbase environment

export HBASE_HOME=/usr/local/hbase-0.98.8-hadoop2

export PATH=$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$ZOOKEEPER_HOME/bin:$HBASE_HOME/bin:$PATH

### Copy the configured HBase directory to slaver1 through slaver5

[hduser@master ~]$ cd /usr/local

[hduser@master local]$ sudo tar zcvf hbase-0.98.8-hadoop2-to-hbasenode.tar.gz hbase-0.98.8-hadoop2/

[hduser@master local]$ scp hbase-0.98.8-hadoop2-to-hbasenode.tar.gz slaver1.hadoop:/home/hduser/

hbase-0.98.8-hadoop2-to-hbasenode.tar.gz 100% 104MB 11.6MB/s 00:09

[hduser@master local]$ scp hbase-0.98.8-hadoop2-to-hbasenode.tar.gz slaver2.hadoop:/home/hduser/

hbase-0.98.8-hadoop2-to-hbasenode.tar.gz 100% 104MB 11.6MB/s 00:09

[hduser@master local]$ scp hbase-0.98.8-hadoop2-to-hbasenode.tar.gz slaver3.hadoop:/home/hduser/

hbase-0.98.8-hadoop2-to-hbasenode.tar.gz 100% 104MB 11.6MB/s 00:09

[hduser@master local]$ scp hbase-0.98.8-hadoop2-to-hbasenode.tar.gz slaver4.hadoop:/home/hduser/

hbase-0.98.8-hadoop2-to-hbasenode.tar.gz 100% 104MB 11.6MB/s 00:09

[hduser@master local]$ scp hbase-0.98.8-hadoop2-to-hbasenode.tar.gz slaver5.hadoop:/home/hduser/

hbase-0.98.8-hadoop2-to-hbasenode.tar.gz 100% 104MB 11.6MB/s 00:09
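
The five `scp` commands above differ only in the host name, so they can be written as a loop. This is a sketch under the same passwordless-SSH assumption as the transcripts; `push_to_slaves` and `DRY_RUN` are hypothetical names.

```shell
#!/usr/bin/env bash
SLAVES="slaver1.hadoop slaver2.hadoop slaver3.hadoop slaver4.hadoop slaver5.hadoop"

# Hypothetical helper: copy one file to the same directory on every slave.
# With DRY_RUN=1 the scp commands are printed, not executed.
push_to_slaves() {
  local file=$1 dest=$2 host
  for host in $SLAVES; do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo "scp $file $host:$dest"
    else
      scp "$file" "$host:$dest" || return 1   # stop at the first failed copy
    fi
  done
}

# Example:
# push_to_slaves hbase-0.98.8-hadoop2-to-hbasenode.tar.gz /home/hduser/
```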

### Extract the archive, move it to the target directory, and set ownership; the other slave nodes can be configured the same way

[hduser@slaver1 ~]$ tar zxvf hbase-0.98.8-hadoop2-to-hbasenode.tar.gz

[hduser@slaver1 ~]$ sudo mv hbase-0.98.8-hadoop2 /usr/local/

[hduser@slaver1 ~]$ sudo chown -R hduser:hadoop /usr/local/hbase-0.98.8-hadoop2

[hduser@slaver1 ~]$ mkdir -p /usr/local/app/hadoop/hbase/tmp

[hduser@slaver1 ~]$ sudo vim /etc/profile

[hduser@slaver1 ~]$ source /etc/profile

[hduser@slaver1 ~]$ cat /etc/profile |tail -n 12

# set jdk and hadoop environment

export JAVA_HOME=/usr/local/jdk1.8.0_45

export CLASSPATH=$CLASSPATH:$JAVA_HOME/lib:$JAVA_HOME/jre/lib

export HADOOP_HOME=/usr/local/hadoop-2.2.0

# set zookeeper environment

export ZOOKEEPER_HOME=/usr/local/zookeeper-3.4.5

export CLASSPATH=$CLASSPATH:$ZOOKEEPER_HOME/lib

# set hbase environment

export HBASE_HOME=/usr/local/hbase-0.98.8-hadoop2

export PATH=$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$ZOOKEEPER_HOME/bin:$HBASE_HOME/bin:$PATH

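### Start the Hadoop, ZooKeeper, and HBase applications

The three are started in the following order:

1. Log in to slaver1 through slaver5 and start the corresponding processes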

[hduser@master ~]$ ssh slaver1.hadoop

Last login: Mon Sep 26 17:26:52 2016 from master.hadoop

[hduser@slaver1 ~]$ jps

2002 DataNode

2105 NodeManager

13051 Jps

[hduser@slaver1 ~]$ zkServer.sh start

JMX enabled by default

Using config: /usr/local/zookeeper-3.4.5/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[hduser@slaver1 ~]$ jps

13073 QuorumPeerMain <---- ZooKeeper process

2002 DataNode

13108 Jps

2105 NodeManager

[hduser@master ~]$ jps

24049 HMaster

4658 SecondaryNameNode

4786 ResourceManager

4475 NameNode

24381 Jps

[hduser@slaver1 ~]$ jps

2967 DataNode

14472 QuorumPeerMain

15786 Jps

3071 NodeManager

[hduser@master ~]$ hdfs dfs -ls /hbase

Found 5 items

drwxr-xr-x - hduser supergroup 0 2016-01-22 12:05 /hbase/.tmp

drwxr-xr-x - hduser supergroup 0 2016-01-22 11:52 /hbase/data

-rw-r--r-- 3 hduser supergroup 42 2016-01-22 11:52 /hbase/hbase.id

-rw-r--r-- 3 hduser supergroup 7 2016-01-22 11:52 /hbase/hbase.version

drwxr-xr-x - hduser supergroup 0 2016-01-22 11:52 /hbase/oldWALs

########## Fault ################

The HBase HRegionServer processes did not start.

[hduser@master ~]$ date

Fri Jan 22 14:18:41 CST 2016

[hduser@slaver3 ~]$ date

Mon Sep 26 20:24:00 CST 2016

[hduser@slaver1 ~]$ date

Mon Sep 26 12:25:43 CST 2016

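The date output above shows that the node clocks disagree. The HMaster rejects a region server whose clock differs from its own by more than hbase.master.maxclockskew (set to 180000 ms in hbase-site.xml above), so correct the system time on slaver1.hadoop through slaver4.hadoop: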

[root@slaver1 ~]# vim /etc/sysconfig/clock

[root@slaver1 ~]# cat /etc/sysconfig/clock

ZONE="Asia/Shanghai"

UTC=false

ARC=false

[root@slaver1 ~]# clock -w

[root@slaver1 ~]# date -s "2016-09-26 12:36:17"

Mon Sep 26 12:36:17 CST 2016

[root@slaver1 ~]# date

Mon Sep 26 12:36:18 CST 2016
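
Before starting HBase, the skew between two nodes can be compared against the `hbase.master.maxclockskew` value from `hbase-site.xml` (180000 ms in this setup). The sketch below works on epoch seconds as printed by `date +%s`; `skew_within` is a hypothetical helper, not part of HBase.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed when two epoch timestamps (in seconds) differ
# by at most max_ms milliseconds.
skew_within() {
  local a=$1 b=$2 max_ms=$3 diff
  diff=$(( a - b ))
  if [ "$diff" -lt 0 ]; then diff=$(( -diff )); fi
  [ $(( diff * 1000 )) -le "$max_ms" ]
}

# Example, run on the master:
# skew_within "$(date +%s)" "$(ssh slaver1.hadoop date +%s)" 180000 \
#   || echo "slaver1.hadoop clock is outside the allowed skew"
```

Syncing every node against a common NTP server would avoid setting the time by hand. That is a suggestion only, not something this setup did.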

###############################

[hduser@master ~]$ start-hbase.sh

starting master, logging to /usr/local/hbase-0.98.8-hadoop2/logs/hbase-hduser-master-master.hadoop.out

slaver3.hadoop: starting regionserver, logging to /usr/local/hbase-0.98.8-hadoop2/bin/../logs/hbase-hduser-regionserver-slaver3.hadoop.out

slaver1.hadoop: starting regionserver, logging to /usr/local/hbase-0.98.8-hadoop2/bin/../logs/hbase-hduser-regionserver-slaver1.hadoop.out

slaver5.hadoop: starting regionserver, logging to /usr/local/hbase-0.98.8-hadoop2/bin/../logs/hbase-hduser-regionserver-slaver5.hadoop.out

slaver4.hadoop: starting regionserver, logging to /usr/local/hbase-0.98.8-hadoop2/bin/../logs/hbase-hduser-regionserver-slaver4.hadoop.out

slaver2.hadoop: starting regionserver, logging to /usr/local/hbase-0.98.8-hadoop2/bin/../logs/hbase-hduser-regionserver-slaver2.hadoop.out

[hduser@master ~]$ jps

28689 NameNode

30515 HMaster

30644 Jps

29016 ResourceManager

28876 SecondaryNameNode

[hduser@slaver1 ~]$ jps

17141 QuorumPeerMain

16984 NodeManager

16874 DataNode

17836 Jps

17597 HRegionServer
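
The order followed above is Hadoop first, then ZooKeeper on every slave, then HBase. Stopping in the reverse order keeps HBase from losing ZooKeeper or HDFS underneath it. The sketch below only prints the plan, and `cluster_plan` is a made-up name. It uses `start-dfs.sh` and `start-yarn.sh` because the `start-all.sh` output itself reports that script as deprecated.

```shell
#!/usr/bin/env bash
SLAVES="slaver1.hadoop slaver2.hadoop slaver3.hadoop slaver4.hadoop slaver5.hadoop"

# Hypothetical helper: print "<where>: <command>" lines in execution order.
cluster_plan() {
  local action=$1 host
  case $action in
    start)
      echo "master: start-dfs.sh"
      echo "master: start-yarn.sh"
      for host in $SLAVES; do echo "$host: zkServer.sh start"; done
      echo "master: start-hbase.sh"
      ;;
    stop)
      echo "master: stop-hbase.sh"
      for host in $SLAVES; do echo "$host: zkServer.sh stop"; done
      echo "master: stop-yarn.sh"
      echo "master: stop-dfs.sh"
      ;;
    *)
      echo "usage: cluster_plan start|stop" >&2
      return 1
      ;;
  esac
}

# Example:
# cluster_plan start
```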

Some further start-up transcripts follow.

##############################################

Start-up procedure

##############################################

[hduser@master ~]$ start-all.sh

This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh

Starting namenodes on [master.hadoop]

master.hadoop: starting namenode, logging to /usr/local/hadoop-2.2.0/logs/hadoop-hduser-namenode-master.hadoop.out

slaver1.hadoop: starting datanode, logging to /usr/local/hadoop-2.2.0/logs/hadoop-hduser-datanode-slaver1.hadoop.out

slaver2.hadoop: starting datanode, logging to /usr/local/hadoop-2.2.0/logs/hadoop-hduser-datanode-slaver2.hadoop.out

slaver3.hadoop: starting datanode, logging to /usr/local/hadoop-2.2.0/logs/hadoop-hduser-datanode-slaver3.hadoop.out

slaver4.hadoop: starting datanode, logging to /usr/local/hadoop-2.2.0/logs/hadoop-hduser-datanode-slaver4.hadoop.out

slaver5.hadoop: starting datanode, logging to /usr/local/hadoop-2.2.0/logs/hadoop-hduser-datanode-slaver5.hadoop.out

Starting secondary namenodes [master.hadoop]

master.hadoop: starting secondarynamenode, logging to /usr/local/hadoop-2.2.0/logs/hadoop-hduser-secondarynamenode-master.hadoop.out

starting yarn daemons

starting resourcemanager, logging to /usr/local/hadoop-2.2.0/logs/yarn-hduser-resourcemanager-master.hadoop.out

slaver5.hadoop: starting nodemanager, logging to /usr/local/hadoop-2.2.0/logs/yarn-hduser-nodemanager-slaver5.hadoop.out

slaver4.hadoop: starting nodemanager, logging to /usr/local/hadoop-2.2.0/logs/yarn-hduser-nodemanager-slaver4.hadoop.out

slaver3.hadoop: starting nodemanager, logging to /usr/local/hadoop-2.2.0/logs/yarn-hduser-nodemanager-slaver3.hadoop.out

slaver1.hadoop: starting nodemanager, logging to /usr/local/hadoop-2.2.0/logs/yarn-hduser-nodemanager-slaver1.hadoop.out

slaver2.hadoop: starting nodemanager, logging to /usr/local/hadoop-2.2.0/logs/yarn-hduser-nodemanager-slaver2.hadoop.out

[hduser@master ~]$ jps

1796 SecondaryNameNode

2187 Jps

1611 NameNode

1932 ResourceManager

########### slaver1.hadoop

[hduser@master ~]$ ssh slaver1.hadoop

Last login: Mon Sep 26 13:45:48 2016 from master.hadoop

[hduser@slaver1 ~]$ jps

1674 NodeManager

1562 DataNode

1820 Jps

[hduser@slaver1 ~]$ zkServer.sh start

JMX enabled by default

Using config: /usr/local/zookeeper-3.4.5/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[hduser@slaver1 ~]$ jps

1843 QuorumPeerMain

1674 NodeManager

1866 Jps

1562 DataNode

########### slaver2.hadoop

[hduser@master ~]$ ssh slaver2.hadoop

Last login: Mon Sep 26 13:46:05 2016 from master.hadoop

[hduser@slaver2 ~]$ jps

1831 Jps

1692 NodeManager

1582 DataNode

[hduser@slaver2 ~]$ zkServer.sh start

JMX enabled by default

Using config: /usr/local/zookeeper-3.4.5/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[hduser@slaver2 ~]$ jps

1878 Jps

1692 NodeManager

1853 QuorumPeerMain

1582 DataNode

[hduser@master ~]$ ssh slaver3.hadoop

Last login: Mon Sep 26 13:46:17 2016 from master.hadoop

[hduser@slaver3 ~]$ jps

1715 Jps

1573 NodeManager

1464 DataNode

[hduser@slaver3 ~]$ zkServer.sh start

JMX enabled by default

Using config: /usr/local/zookeeper-3.4.5/bin/../conf/zoo.cfg

Starting zookeeper ... already running as process 1504.

[hduser@slaver3 ~]$ zkServer.sh stop

JMX enabled by default

Using config: /usr/local/zookeeper-3.4.5/bin/../conf/zoo.cfg

Stopping zookeeper ... STOPPED

[hduser@slaver3 ~]$ zkServer.sh start

JMX enabled by default

Using config: /usr/local/zookeeper-3.4.5/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[hduser@slaver3 ~]$ jps

1573 NodeManager

1757 QuorumPeerMain

1791 Jps

[hduser@master ~]$ ssh slaver4.hadoop

Last login: Mon Sep 26 13:46:26 2016 from master.hadoop

[hduser@slaver4 ~]$ jps

1704 NodeManager

1593 DataNode

1849 Jps

[hduser@slaver4 ~]$ zkServer.sh start

JMX enabled by default

Using config: /usr/local/zookeeper-3.4.5/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[hduser@slaver4 ~]$ jps

1904 Jps

1704 NodeManager

1593 DataNode

1871 QuorumPeerMain

[hduser@master ~]$ ssh slaver5.hadoop

Last login: Mon Sep 26 13:46:36 2016 from master.hadoop

[hduser@slaver5 ~]$ jps

1840 Jps

1697 NodeManager

1586 DataNode

[hduser@slaver5 ~]$ zkServer.sh start

JMX enabled by default

Using config: /usr/local/zookeeper-3.4.5/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[hduser@slaver5 ~]$ jps

1697 NodeManager

1586 DataNode

1862 QuorumPeerMain

1896 Jps

[hduser@master ~]$ start-hbase.sh

starting master, logging to /usr/local/hbase-0.98.8-hadoop2/logs/hbase-hduser-master-master.hadoop.out

slaver5.hadoop: starting regionserver, logging to /usr/local/hbase-0.98.8-hadoop2/bin/../logs/hbase-hduser-regionserver-slaver5.hadoop.out

slaver2.hadoop: starting regionserver, logging to /usr/local/hbase-0.98.8-hadoop2/bin/../logs/hbase-hduser-regionserver-slaver2.hadoop.out

slaver4.hadoop: starting regionserver, logging to /usr/local/hbase-0.98.8-hadoop2/bin/../logs/hbase-hduser-regionserver-slaver4.hadoop.out

slaver3.hadoop: starting regionserver, logging to /usr/local/hbase-0.98.8-hadoop2/bin/../logs/hbase-hduser-regionserver-slaver3.hadoop.out

slaver1.hadoop: starting regionserver, logging to /usr/local/hbase-0.98.8-hadoop2/bin/../logs/hbase-hduser-regionserver-slaver1.hadoop.out

[hduser@master ~]$ jps

1796 SecondaryNameNode

1611 NameNode

1932 ResourceManager

2349 HMaster

2574 Jps

[hduser@master ~]$ ssh slaver1.hadoop

Last login: Mon Sep 26 13:50:53 2016 from master.hadoop

[hduser@slaver1 ~]$ jps

2131 Jps

1843 QuorumPeerMain

1674 NodeManager

1562 DataNode

1949 HRegionServer

[hduser@slaver1 ~]$ exit

logout

Connection to slaver1.hadoop closed.

[hduser@master ~]$ ssh slaver2.hadoop

Last login: Mon Sep 26 13:51:29 2016 from master.hadoop

[hduser@slaver2 ~]$ jps

2129 Jps

1958 HRegionServer

1692 NodeManager

1853 QuorumPeerMain

1582 DataNode

[hduser@slaver2 ~]$ exit

logout

Connection to slaver2.hadoop closed.

[hduser@master ~]$ ssh slaver3.hadoop

Last login: Mon Sep 26 13:52:20 2016 from master.hadoop

[hduser@slaver3 ~]$ jps

1573 NodeManager

1865 HRegionServer

2027 Jps

1757 QuorumPeerMain

[hduser@slaver3 ~]$ exit

logout

Connection to slaver3.hadoop closed.

[hduser@master ~]$ ssh slaver4.hadoop

Last login: Mon Sep 26 13:53:10 2016 from master.hadoop

[hduser@slaver4 ~]$ jps

2150 Jps

1975 HRegionServer

1704 NodeManager

1593 DataNode

1871 QuorumPeerMain

[hduser@slaver4 ~]$ exit

logout

Connection to slaver4.hadoop closed.

[hduser@master ~]$ ssh slaver5.hadoop

Last login: Mon Sep 26 13:53:35 2016 from master.hadoop

[hduser@slaver5 ~]$ jps

1697 NodeManager

1586 DataNode

1862 QuorumPeerMain

2168 Jps

1966 HRegionServer

[hduser@slaver5 ~]$ exit

logout

Connection to slaver5.hadoop closed.
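
Reading five `jps` listings by eye is error-prone. In the slaver3 listing above, for example, DataNode does not appear even though the other slaves show it. A small filter can report which expected daemons are absent from a `jps` listing. This is a sketch, and `missing_daemons` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read jps output on stdin and print every expected
# daemon name (given as arguments) that does not appear in it.
missing_daemons() {
  local jps_out name
  jps_out=$(cat)
  for name in "$@"; do
    # Match "<pid> <name>" exactly so NameNode does not match SecondaryNameNode.
    printf '%s\n' "$jps_out" | grep -qE "^[0-9]+ $name\$" || echo "$name"
  done
}

# Example, run on the master:
# ssh slaver3.hadoop 'source /etc/profile && jps' \
#   | missing_daemons DataNode NodeManager QuorumPeerMain HRegionServer
```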

[hduser@master ~]$ hadoop dfsadmin -report

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

Configured Capacity: 691105742848 (643.64 GB)

Present Capacity: 647142413354 (602.70 GB)

DFS Remaining: 647141740544 (602.70 GB)

DFS Used: 672810 (657.04 KB)

DFS Used%: 0.00%

Under replicated blocks: 0

Blocks with corrupt replicas: 0

Missing blocks: 0

-------------------------------------------------

Datanodes available: 5 (5 total, 0 dead)

Live datanodes:

Name: 10.18.11.202:50010 (slaver3.hadoop)

Hostname: slaver3.hadoop

Decommission Status : Normal

Configured Capacity: 144660275200 (134.73 GB)

DFS Used: 24576 (24 KB)

Non DFS Used: 9146474496 (8.52 GB)

DFS Remaining: 135513776128 (126.21 GB)

DFS Used%: 0.00%

DFS Remaining%: 93.68%

Last contact: Mon Sep 26 16:09:37 CST 2016

Name: 10.18.11.204:50010 (slaver5.hadoop)

Hostname: slaver5.hadoop

Decommission Status : Normal

Configured Capacity: 51770945536 (48.22 GB)

DFS Used: 167130 (163.21 KB)

Non DFS Used: 4461466406 (4.16 GB)

DFS Remaining: 47309312000 (44.06 GB)

DFS Used%: 0.00%

DFS Remaining%: 91.38%

Last contact: Mon Sep 26 16:09:37 CST 2016

Name: 10.18.11.203:50010 (slaver4.hadoop)

Hostname: slaver4.hadoop

Decommission Status : Normal

Configured Capacity: 51770945536 (48.22 GB)

DFS Used: 168006 (164.07 KB)

Non DFS Used: 4461875130 (4.16 GB)

DFS Remaining: 47308902400 (44.06 GB)

DFS Used%: 0.00%

DFS Remaining%: 91.38%

Last contact: Mon Sep 26 16:09:39 CST 2016

Name: 10.18.11.201:50010 (slaver2.hadoop)

Hostname: slaver2.hadoop

Decommission Status : Normal

Configured Capacity: 391132631040 (364.27 GB)

DFS Used: 166926 (163.01 KB)

Non DFS Used: 21433738226 (19.96 GB)

DFS Remaining: 369698725888 (344.31 GB)

DFS Used%: 0.00%

DFS Remaining%: 94.52%

Last contact: Mon Sep 26 16:09:37 CST 2016

Name: 10.18.11.199:50010 (slaver1.hadoop)

Hostname: slaver1.hadoop

Decommission Status : Normal

Configured Capacity: 51770945536 (48.22 GB)

DFS Used: 146172 (142.75 KB)

Non DFS Used: 4459775236 (4.15 GB)

DFS Remaining: 47311024128 (44.06 GB)

DFS Used%: 0.00%

DFS Remaining%: 91.39%

Last contact: Mon Sep 26 16:09:37 CST 2016
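
The report can also be checked mechanically. In the output above, the five per-node Configured Capacity values add up to the cluster total of 691105742848 bytes, and the summary line reports 0 dead nodes. The two filters below are a sketch that assumes the report layout shown here; both function names are invented for this example.

```shell
#!/usr/bin/env bash
# Read "hdfs dfsadmin -report" output on stdin and print the number of dead
# datanodes from the "Datanodes available: N (T total, D dead)" line.
dead_datanodes() {
  sed -n 's/^Datanodes available: [0-9]* ([0-9]* total, \([0-9]*\) dead)$/\1/p'
}

# Sum the per-node "Configured Capacity" values, i.e. only those that come
# after the "Live datanodes:" marker, and print the total in bytes.
sum_node_capacity() {
  awk '/^Live datanodes:/ {live = 1; next}
       live && /^Configured Capacity:/ {sum += $3}
       END {printf "%.0f\n", sum}'
}

# Example:
# hdfs dfsadmin -report | dead_datanodes
```

The example uses `hdfs dfsadmin -report` because the transcript above reports the `hadoop dfsadmin` form as deprecated.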

#########################

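If a Node.js application needs to call HBase, the HBase REST server must be running; hbase.rest.port sets the port it listens on.

The configuration is as follows: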

[hduser@master ~]$ sed -n '60,63p' /usr/local/hbase-0.98.8-hadoop2/conf/hbase-site.xml

<property>

<name>hbase.rest.port</name>

<value>8090</value>

</property>

Check whether the REST port is already open:

[hduser@master conf]$ netstat -atn |grep 8090

Start it as follows:

[hduser@master conf]$ hbase-daemon.sh start rest

starting rest, logging to /usr/local/hbase-0.98.8-hadoop2/logs/hbase-hduser-rest-master.hadoop.out

[hduser@master conf]$ netstat -atn |grep 8090

tcp 0 0 0.0.0.0:8090 0.0.0.0:* LISTEN
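
`grep 8090` also matches other ports that merely contain those digits, such as 18090. A stricter check compares the port at the end of the local-address column and requires the LISTEN state. This is a minimal sketch that assumes the `netstat -atn` column layout shown above; `port_listening` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "netstat -atn" output on stdin and succeed if some
# socket is in LISTEN state on exactly the given local port.
port_listening() {
  local port=$1
  awk -v p=":$port" '
    $NF == "LISTEN" && substr($4, length($4) - length(p) + 1) == p {found = 1}
    END {exit !found}'
}

# Example:
# netstat -atn | port_listening 8090 && echo "REST server is listening"
```

Once the port is open, a request such as `curl -H "Accept: application/json" http://master.hadoop:8090/version/cluster` should return the HBase version, which is a quick end-to-end test before wiring up a client.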

##########################

What to do when HBase cannot be stopped

##########################

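If ./stop-hbase.sh hangs and prints "stopping hbase" followed by an endless row of dots, run ./start-hbase.sh first. It reports that the HBase components are already running and prints their PIDs. Run kill -9 on each PID to terminate the HBase processes. HBase is then stopped, and ./start-hbase.sh can be run again to restart it.

#### List the files in the HDFS directories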

[hduser@master ~]$ hdfs dfs -ls /

Found 1 items

drwxr-xr-x - hduser supergroup 0 2016-09-26 17:08 /hbase

[hduser@master ~]$ hdfs dfs -ls /hbase

Found 8 items

drwxr-xr-x - hduser supergroup 0 2016-09-26 16:07 /hbase/.tmp

drwxr-xr-x - hduser supergroup 0 2016-09-26 16:07 /hbase/WALs

drwxr-xr-x - hduser supergroup 0 2016-09-26 17:13 /hbase/archive

drwxr-xr-x - hduser supergroup 0 2016-09-26 13:54 /hbase/corrupt

drwxr-xr-x - hduser supergroup 0 2016-09-26 12:39 /hbase/data

-rw-r--r-- 3 hduser supergroup 42 2016-01-22 11:52 /hbase/hbase.id

-rw-r--r-- 3 hduser supergroup 7 2016-01-22 11:52 /hbase/hbase.version

drwxr-xr-x - hduser supergroup 0 2016-09-27 15:18 /hbase/oldWALs

############ Hive installation

[hduser@master ~]$ sudo mv apache-hive-0.14.0-bin /usr/local/

[sudo] password for hduser:

[hduser@master ~]$ sudo chown -R hduser:hadoop /usr/local/apache-hive-0.14.0-bin

[hduser@master ~]$ tar zxvf apache-hive-0.14.0-src.tar.gz

[hduser@master ~]$ jar cvfM0 hive-hwi-0.14.0.war -C apache-hive-0.14.0-src/hwi/web .

[hduser@master ~]$ ls -l hive-hwi-0.14.0.war

-rw-r--r--. 1 hduser hadoop 151343 Sep 28 09:08 hive-hwi-0.14.0.war

[hduser@master ~]$ mv hive-hwi-0.14.0.war /usr/local/apache-hive-0.14.0-bin/lib/

[hduser@master ~]$ sudo vim /etc/profile

[hduser@master ~]$ source /etc/profile

[hduser@master ~]$ tail -n 18 /etc/profile

# set jdk and hadoop environment

export JAVA_HOME=/usr/local/jdk1.8.0_45

export CLASSPATH=$CLASSPATH:$JAVA_HOME/lib:$JAVA_HOME/jre/lib

export HADOOP_HOME=/usr/local/hadoop-2.2.0

# set zookeeper environment

export ZOOKEEPER_HOME=/usr/local/zookeeper-3.4.5

export CLASSPATH=$CLASSPATH:$ZOOKEEPER_HOME/lib

# set hbase environment

export HBASE_HOME=/usr/local/hbase-0.98.8-hadoop2

# set hive environment

export HIVE_HOME=/usr/local/apache-hive-0.14.0-bin

export HCATALOG_HOME=/usr/local/apache-hive-0.14.0-bin/hcatalog

export CLASSPATH=$CLASSPATH:$HIVE_HOME/lib

export PATH=$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$ZOOKEEPER_HOME/bin:$HBASE_HOME/bin:$HIVE_HOME/bin:$HCATALOG_HOME/bin:$HCATALOG_HOME/sbin:$PATH
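
After `source /etc/profile`, it can be worth confirming that every `*_HOME` variable listed above actually points at an existing directory, since a typo here only surfaces later as a "command not found" or a class-loading error. A minimal sketch, assuming the variable names from the profile above; `check_homes` is a hypothetical helper name.

```shell
#!/bin/bash
# Hypothetical helper: report each *_HOME variable from /etc/profile
# and flag the ones that do not point at an existing directory.
check_homes() {
    local var dir rc=0
    for var in JAVA_HOME HADOOP_HOME ZOOKEEPER_HOME HBASE_HOME HIVE_HOME HCATALOG_HOME; do
        # Indirect lookup of the variable named in $var (portable across sh/bash).
        eval "dir=\${$var:-}"
        if [ -d "$dir" ]; then
            echo "ok      $var=$dir"
        else
            echo "MISSING $var=$dir"
            rc=1
        fi
    done
    # Non-zero exit status if at least one directory is missing.
    return "$rc"
}

# Usage, in a shell that has sourced /etc/profile:
#   check_homes
```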

[hduser@master ~]$ cp /usr/local/apache-hive-0.14.0-bin/conf/hive-default.xml.template /usr/local/apache-hive-0.14.0-bin/conf/hive-site.xml

[hduser@master ~]$ vim /usr/local/apache-hive-0.14.0-bin/conf/hive-site.xml

[hduser@master ~]$ hdfs dfs -mkdir /tmp

[hduser@master ~]$ hdfs dfs -ls /

Found 2 items

drwxr-xr-x - hduser supergroup 0 2016-09-26 17:08 /hbase

drwxr-xr-x - hduser supergroup 0 2016-09-27 15:42 /tmp

[hduser@master ~]$ hdfs dfs -mkdir -p /user/hive/warehouse

[hduser@master ~]$ hdfs dfs -chmod g+w /tmp

[hduser@master ~]$ hdfs dfs -chmod g+w /user/hive/warehouse

[hduser@master ~]$ hdfs dfs -ls /

Found 3 items

drwxr-xr-x - hduser supergroup 0 2016-09-26 17:08 /hbase

drwxrwxr-x - hduser supergroup 0 2016-09-27 15:42 /tmp

drwxr-xr-x - hduser supergroup 0 2016-09-27 15:42 /user

[hduser@master ~]$ hdfs dfs -ls -R /user

drwxr-xr-x - hduser supergroup 0 2016-09-28 09:18 /user/hive

drwxrwxr-x - hduser supergroup 0 2016-09-28 09:18 /user/hive/warehouse
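
The directory preparation above can be collected into one repeatable step. The sketch below only prints the commands so they can be reviewed before being run; `hive_dir_cmds` is a hypothetical helper name, and using `-mkdir -p` for `/tmp` as well is a small deviation from the transcript, chosen so that rerunning it does not fail when the directory already exists.

```shell
#!/bin/bash
# Hypothetical helper: print the HDFS commands that prepare the
# scratch (/tmp) and warehouse directories used by Hive in this document.
hive_dir_cmds() {
    local dir
    for dir in /tmp /user/hive/warehouse; do
        echo "hdfs dfs -mkdir -p $dir"    # -p: no error if it already exists
        echo "hdfs dfs -chmod g+w $dir"   # group write access, as above
    done
}

# Review the output first, then execute it on the cluster as hduser:
#   hive_dir_cmds | sh
```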

[hduser@master ~]$ vim /usr/local/apache-hive-0.14.0-bin/conf/hive-site.xml

[hduser@master ~]$ cp /usr/local/apache-hive-0.14.0-bin/conf/hive-log4j.properties.template /usr/local/apache-hive-0.14.0-bin/conf/hive-log4j.properties

[hduser@master ~]$ vim /usr/local/apache-hive-0.14.0-bin/conf/hive-log4j.properties

[hduser@master ~]$ mkdir /usr/local/apache-hive-0.14.0-bin/hcatalog/logs

[hduser@master ~]$ vim /usr/local/apache-hive-0.14.0-bin/hcatalog/sbin/hcat_server.sh

[hduser@master ~]$ grep HCAT_LOG_DIR /usr/local/apache-hive-0.14.0-bin/hcatalog/sbin/hcat_server.sh

HCAT_LOG_DIR=/usr/local/apache-hive-0.14.0-bin/hcatalog/logs

HCAT_PID_DIR=${HCAT_PID_DIR:-$HCAT_LOG_DIR}

export HADOOP_OPTS="${HADOOP_OPTS} -server -XX:+UseConcMarkSweepGC -XX:ErrorFile=${HCAT_LOG_DIR}/hcat_err_pid%p.log -Xloggc:${HCAT_LOG_DIR}/hcat_gc.log-`date +'%Y%m%d%H%M'` -verbose:gc -XX:+PrintGCDetails -XX:+PrintGCTimeStamps -XX:+PrintGCDateStamps"

nohup ${HIVE_HOME}/bin/hive --service metastore >${HCAT_LOG_DIR}/hcat.out 2>${HCAT_LOG_DIR}/hcat.err &

echo "Metastore startup failed, see ${HCAT_LOG_DIR}/hcat.err"

echo "Metastore startup failed, see ${HCAT_LOG_DIR}/hcat.err"

[hduser@master ~]$ vimdiff /usr/local/apache-hive-0.14.0-bin/conf/hive-default.xml.template /usr/local/apache-hive-0.14.0-bin/conf/hive-site.xml

</property> | </property>

<property> | <property>

<name>hive.hwi.war.file</name> | <name>hive.hwi.war.file</name>

<value>${env:HWI_WAR_FILE}</value> | <!--<value>${env:HWI_WAR_FILE}</value> -->

----------------------------------------------------------------------------| <value>lib/hive-hwi-0.14.0.war</value>

<description>This sets the path to the HWI war file, relative to ${HIVE_| <description>This sets the path to the HWI war file, relative to ${HIVE_

</property> | </property>

<property> | <property>

<name>hive.mapred.local.mem</name> | <name>hive.mapred.local.mem</name>

<value>0</value> | <value>0</value>

<description>mapper/reducer memory in local mode</description> | <description>mapper/reducer memory in local mode</description>

+ +--2178 lines: </property>--------------------------------------------------|+ +--2178 lines: </property>--------------------------------------------------
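
Because hive-site.xml is several thousand lines long, a quick way to confirm which value is actually in effect for a property can save a trip through vimdiff. The following is a rough sketch, assuming each `<name>` and `<value>` element sits on its own line and commented-out values are single-line comments, as in the diff above. `get_hive_prop` is a hypothetical helper, not part of Hive.

```shell
#!/bin/bash
# Hypothetical helper: print the first uncommented <value> that follows
# <name>NAME</name> in a Hadoop-style XML configuration file.
get_hive_prop() {
    local file="$1" name="$2"
    awk -v name="$name" '
        index($0, "<name>" name "</name>") { found = 1; next }
        found && /<!--/ { next }              # skip commented-out values
        found && /<value>/ {
            sub(/.*<value>/, "")              # drop everything up to <value>
            sub(/<\/value>.*/, "")            # drop </value> and the rest
            print
            exit
        }
        found && /<\/property>/ { exit }      # property had no value
    ' "$file"
}

# Usage:
#   get_hive_prop /usr/local/apache-hive-0.14.0-bin/conf/hive-site.xml hive.hwi.war.file
```

This is line-based text matching rather than real XML parsing, so it is only suitable for files laid out like the Hive template.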

##################error#######################

[hduser@master ~]$ hive

16/09/28 09:30:12 INFO Configuration.deprecation: mapred.max.split.size is deprecated. Instead, use mapreduce.input.fileinputformat.split.maxsize

16/09/28 09:30:12 INFO Configuration.deprecation: mapred.reduce.tasks.speculative.execution is deprecated. Instead, use mapreduce.reduce.speculative

16/09/28 09:30:12 INFO Configuration.deprecation: mapred.committer.job.setup.cleanup.needed is deprecated. Instead, use mapreduce.job.committer.setup.cleanup.needed

16/09/28 09:30:12 INFO Configuration.deprecation: mapred.min.split.size.per.rack is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize.per.rack

16/09/28 09:30:12 INFO Configuration.deprecation: mapred.min.split.size is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize

16/09/28 09:30:12 INFO Configuration.deprecation: mapred.min.split.size.per.node is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize.per.node

16/09/28 09:30:12 INFO Configuration.deprecation: mapred.reduce.tasks is deprecated. Instead, use mapreduce.job.reduces

16/09/28 09:30:12 INFO Configuration.deprecation: mapred.input.dir.recursive is deprecated. Instead, use mapreduce.input.fileinputformat.input.dir.recursive

Logging initialized using configuration in file:/usr/local/apache-hive-0.14.0-bin/conf/hive-log4j.properties

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/apache-hive-0.14.0-bin/lib/hive-jdbc-0.14.0-standalone.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

Exception in thread "main" java.lang.RuntimeException: java.lang.IllegalArgumentException: java.net.URISyntaxException: Relative path in absolute URI: ${system:java.io.tmpdir%7D/$%7Bsystem:user.name%7D

at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:444)

at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:672)

at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:616)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:497)

at org.apache.hadoop.util.RunJar.main(RunJar.java:212)

Caused by: java.lang.IllegalArgumentException: java.net.URISyntaxException: Relative path in absolute URI: ${system:java.io.tmpdir%7D/$%7Bsystem:user.name%7D

at org.apache.hadoop.fs.Path.initialize(Path.java:206)

at org.apache.hadoop.fs.Path.<init>(Path.java:172)

at org.apache.hadoop.hive.ql.session.SessionState.createSessionDirs(SessionState.java:487)