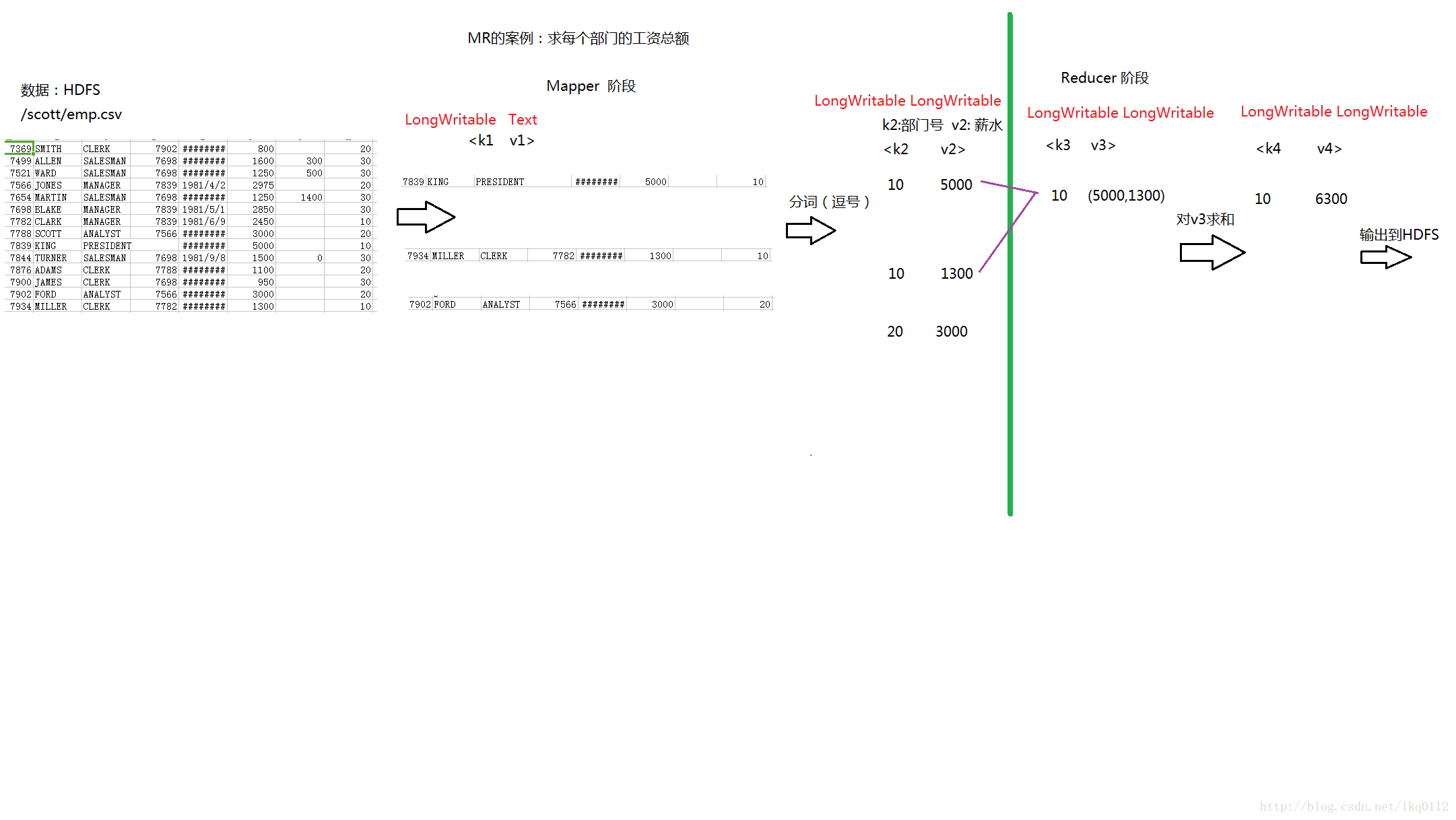

MapReduce example: computing the total salary of each department

1. Table: the employee table `emp`

In SQL the query is `select deptno, sum(sal) from emp group by deptno;`, which returns:

| DEPTNO | SUM(SAL) |
|-------:|---------:|
| 30 | 9400 |
| 20 | 10875 |
| 10 | 8750 |
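To pin down what the job has to compute, here is a minimal plain-Java sketch of the same query using streams instead of SQL. The class and method names (`GroupBySketch`, `totalByDept`) and the sample rows are hypothetical. The MapReduce job below has to produce the same per-department sums.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Collectors;

class GroupBySketch {
    // Hypothetical row type holding only the two columns the query uses
    static class Emp {
        private final long deptno;
        private final long sal;

        Emp(long deptno, long sal) {
            this.deptno = deptno;
            this.sal = sal;
        }

        long getDeptno() { return deptno; }
        long getSal() { return sal; }
    }

    // In-memory equivalent of: select deptno, sum(sal) from emp group by deptno
    // (TreeMap keeps the departments in ascending order)
    static Map<Long, Long> totalByDept(List<Emp> emps) {
        return emps.stream()
                .collect(Collectors.groupingBy(Emp::getDeptno, TreeMap::new,
                        Collectors.summingLong(Emp::getSal)));
    }

    public static void main(String[] args) {
        // Hypothetical rows, not the actual contents of emp
        List<Emp> emps = Arrays.asList(new Emp(30, 1250), new Emp(30, 1600), new Emp(10, 5000));
        System.out.println(totalByDept(emps)); // {10=5000, 30=2850}
    }
}
```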

2. Implementing it with MapReduce

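The job's result can be read back from HDFS (`part-r-00000` is the file written by the reducer):

```shell
[root@111 temp]# hdfs dfs -cat /output/09/s2/part-r-00000
```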
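By default a job writes its result with `TextOutputFormat`: one record per line, laid out as key, a tab, then value. With the default single reduce task, the lines come out sorted by key. If the job runs over the same `emp` data as the SQL query above, `part-r-00000` should hold the same three totals.

The sketch below parses content in that format. The class name `PartFileSketch` and the sample text are illustrative only, not captured output.

```java
import java.util.LinkedHashMap;
import java.util.Map;

class PartFileSketch {
    // Each line is "key<TAB>value", as written by TextOutputFormat's default separator
    static Map<Long, Long> parse(String content) {
        Map<Long, Long> totals = new LinkedHashMap<>(); // preserves file (key) order
        for (String line : content.split("\n")) {
            if (line.trim().isEmpty()) {
                continue;
            }
            String[] kv = line.split("\t");
            totals.put(Long.parseLong(kv[0]), Long.parseLong(kv[1]));
        }
        return totals;
    }

    public static void main(String[] args) {
        // Illustrative content, assuming the job ran over the same emp data as the SQL query
        String content = "10\t8750\n20\t10875\n30\t9400\n";
        System.out.println(parse(content)); // {10=8750, 20=10875, 30=9400}
    }
}
```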

=======================================================================

1. Mapper stage

```java
package demo.saltotal;

import java.io.IOException;

import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

// k1: byte offset of the line, v1: the line itself
// k2: department number,       v2: employee salary
public class SalaryTotalMapper extends Mapper<LongWritable, Text, LongWritable, LongWritable> {

    @Override
    protected void map(LongWritable k1, Text v1, Context context)
            throws IOException, InterruptedException {
        // Sample line: 7654,MARTIN,SALESMAN,7698,1981/9/28,1250,1400,30
        String data = v1.toString();

        // Split the line into fields
        String[] words = data.split(",");

        // Emit k2 = department number (field 7), v2 = salary (field 5)
        context.write(new LongWritable(Long.parseLong(words[7])), new LongWritable(Long.parseLong(words[5])));
    }
}
```
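The mapper's only real work is picking fields by position. Below is a minimal sketch of that parsing in plain Java, with no Hadoop dependency. The hypothetical `MapperSketch.map` returns the `(k2, v2)` pair as a `long[]`.

The second input line is a hypothetical row with empty MGR and COMM fields. It shows that `split(",")` keeps empty fields in the middle of a line, so indices 5 and 7 still line up.

```java
class MapperSketch {
    // Field positions in a comma-separated emp line, e.g.
    // 7654,MARTIN,SALESMAN,7698,1981/9/28,1250,1400,30
    static final int SAL = 5;
    static final int DEPTNO = 7;

    // Returns {k2, v2} = {department number, salary}, the pair the Mapper emits
    static long[] map(String line) {
        String[] words = line.split(",");
        return new long[] {Long.parseLong(words[DEPTNO]), Long.parseLong(words[SAL])};
    }

    public static void main(String[] args) {
        long[] kv = map("7654,MARTIN,SALESMAN,7698,1981/9/28,1250,1400,30");
        System.out.println(kv[0] + " -> " + kv[1]); // 30 -> 1250

        // Hypothetical row with empty MGR and COMM fields: the empty strings
        // between commas are kept, so the field indices are unchanged
        long[] boss = map("7839,KING,PRESIDENT,,1981/11/17,5000,,10");
        System.out.println(boss[0] + " -> " + boss[1]); // 10 -> 5000
    }
}
```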

---------------------------------------------------------------------------------------------------------------

2. Reducer stage

```java
package demo.saltotal;

import java.io.IOException;

import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.mapreduce.Reducer;

public class SalaryTotalReducer extends Reducer<LongWritable, LongWritable, LongWritable, LongWritable> {

    @Override
    protected void reduce(LongWritable k3, Iterable<LongWritable> v3, Context context)
            throws IOException, InterruptedException {
        // v3 holds the salaries of all employees in department k3
        long total = 0;
        for (LongWritable v : v3) {
            total = total + v.get();
        }

        // Emit k4 = department number, v4 = total salary
        context.write(k3, new LongWritable(total));
    }
}
```
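Between map and reduce, the framework groups all `(k2, v2)` pairs that share a key. That grouping is why `v3` contains every salary of one department.

The sketch below imitates the shuffle with a `TreeMap`, then applies the same summing loop as `SalaryTotalReducer`. It is a plain-Java stand-in for illustration, not how Hadoop implements the shuffle. The class name and the input pairs are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

class ShuffleReduceSketch {
    // Shuffle: collect every v2 emitted for the same k2 into one list, giving (k3, v3).
    // TreeMap keeps keys in ascending order, like the sort that precedes reduce.
    static Map<Long, List<Long>> shuffle(List<long[]> mapOutput) {
        Map<Long, List<Long>> groups = new TreeMap<>();
        for (long[] kv : mapOutput) {
            groups.computeIfAbsent(kv[0], k -> new ArrayList<>()).add(kv[1]);
        }
        return groups;
    }

    // Reduce: the same loop as SalaryTotalReducer, summing one department's salaries
    static long reduce(Iterable<Long> v3) {
        long total = 0;
        for (long v : v3) {
            total += v;
        }
        return total;
    }

    public static void main(String[] args) {
        // Hypothetical (deptno, sal) pairs, in the order a Mapper might emit them
        List<long[]> mapOutput = Arrays.asList(
                new long[] {30, 1250}, new long[] {10, 5000}, new long[] {30, 1600});
        for (Map.Entry<Long, List<Long>> e : shuffle(mapOutput).entrySet()) {
            // prints "10\t5000" then "30\t2850"
            System.out.println(e.getKey() + "\t" + reduce(e.getValue()));
        }
    }
}
```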

-----------------------------------------------------------------------------------------------------------------------

3. Main program (job driver)

```java
package demo.saltotal;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class SalaryTotalMain {

    public static void main(String[] args) throws Exception {
        // Create a job (job = map + reduce)
        Job job = Job.getInstance(new Configuration());

        // Tell Hadoop which jar to ship: the one containing this class
        job.setJarByClass(SalaryTotalMain.class);

        // Set the Mapper and its output types (k2, v2)
        job.setMapperClass(SalaryTotalMapper.class);
        job.setMapOutputKeyClass(LongWritable.class);
        job.setMapOutputValueClass(LongWritable.class);

        // Set the Reducer and the job's final output types (k4, v4)
        job.setReducerClass(SalaryTotalReducer.class);
        job.setOutputKeyClass(LongWritable.class);
        job.setOutputValueClass(LongWritable.class);

        // Input and output HDFS paths come from the command line
        // (the output directory must not exist yet)
        FileInputFormat.setInputPaths(job, new Path(args[0]));
        FileOutputFormat.setOutputPath(job, new Path(args[1]));

        // Submit the job, wait for it to finish, and report success through the exit code
        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}
```
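The driver declares `LongWritable` as the map output key class, and that choice affects output order. Keys are sorted before they reach the reducer. `LongWritable` compares numerically, whereas a `Text` key would compare byte by byte.

The sketch below uses plain `TreeSet`s as a stand-in for that sort step; it is an illustration, not Hadoop's sort code. The class name and the department number 100 are hypothetical and only there to expose the difference.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.TreeSet;

class KeyOrderSketch {
    // Numeric keys, like LongWritable, are ordered by value
    static List<Long> numericOrder(Long... keys) {
        return new ArrayList<>(new TreeSet<>(Arrays.asList(keys)));
    }

    // String keys are ordered character by character; for ASCII digits this
    // matches the byte-wise ordering a Text key would get
    static List<String> textOrder(String... keys) {
        return new ArrayList<>(new TreeSet<>(Arrays.asList(keys)));
    }

    public static void main(String[] args) {
        // Hypothetical department numbers; 100 is included only to show the difference
        System.out.println(numericOrder(30L, 100L, 20L, 10L)); // [10, 20, 30, 100]
        System.out.println(textOrder("30", "100", "20", "10")); // [10, 100, 20, 30]
    }
}
```

With the real department numbers 10, 20 and 30 both orderings happen to agree. The numeric key only makes a visible difference once numbers of different lengths appear.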
