Hive是基于Hadoop的一个数据仓库工具,可以将结构化的数据文件映射为一张数据库表,并提供完整的sql查询功能,可以将sql语句转换为MapReduce任务进行运行。 其优点是学习成本低,可以通过类SQL语句快速实现简单的MapReduce统计,不必开发专门的MapReduce应用,十分适合数据仓库的统计分析。

Hive与HBase整合的实现是利用两者本身对外的API接口互相进行通信,相互通信主要是依靠Hive安装包lib/hive-hbase-handler.jar工具类,它负责Hbase和Hive进行通信的。

hadoop,hbase,hive都已经集群正常安装。

hadoop,hbase,hive都已正常启动。

命令行模式连接连接hbase

[root@node1 bin]# ./hbase shell

list 看下table

hbase(main):006:0* list

TABLE

test

user

2 row(s) in 0.4750 seconds

hbase(main):007:0> describe 'user'

Table user is ENABLED

user

COLUMN FAMILIES DESCRIPTION

{NAME => 'account', DATA_BLOCK_ENCODING => 'NONE', BLOOMFILTER => 'ROW', REPLICATION_SCOPE => '0', VERSIONS => '1', COMPRESSION => 'NONE', MIN_VERSIONS => '0', TTL => 'FOREVER', KEE

P_DELETED_CELLS => 'FALSE', BLOCKSIZE => '65536', IN_MEMORY => 'false', BLOCKCACHE => 'true'}

{NAME => 'address', DATA_BLOCK_ENCODING => 'NONE', BLOOMFILTER => 'ROW', REPLICATION_SCOPE => '0', VERSIONS => '1', COMPRESSION => 'NONE', MIN_VERSIONS => '0', TTL => 'FOREVER', KEE

P_DELETED_CELLS => 'FALSE', BLOCKSIZE => '65536', IN_MEMORY => 'false', BLOCKCACHE => 'true'}

{NAME => 'info', DATA_BLOCK_ENCODING => 'NONE', BLOOMFILTER => 'ROW', REPLICATION_SCOPE => '0', VERSIONS => '1', COMPRESSION => 'NONE', MIN_VERSIONS => '0', TTL => 'FOREVER', KEEP_D

ELETED_CELLS => 'FALSE', BLOCKSIZE => '65536', IN_MEMORY => 'false', BLOCKCACHE => 'true'}

{NAME => 'userid', DATA_BLOCK_ENCODING => 'NONE', BLOOMFILTER => 'ROW', REPLICATION_SCOPE => '0', VERSIONS => '1', COMPRESSION => 'NONE', MIN_VERSIONS => '0', TTL => 'FOREVER', KEEP

_DELETED_CELLS => 'FALSE', BLOCKSIZE => '65536', IN_MEMORY => 'false', BLOCKCACHE => 'true'}

4 row(s) in 0.7020 seconds

然后扫描user表数据看看。

hbase(main):004:0> scan 'user'

ROW COLUMN+CELL

lisi column=account:name, timestamp=1495708477345, value=lisi

lisi column=account:passport, timestamp=1495708477353, value=96857123123231

lisi column=account:password, timestamp=1495708477349, value=654321

lisi column=address:city, timestamp=1495708477381, value=\xE6\xB7\xB1\xE5\x9C\xB3

lisi column=address:province, timestamp=1495708477377, value=\xE5\xB9\xBF\xE4\xB8\x9C

lisi column=info:age, timestamp=1495708477358, value=38

lisi column=info:sex, timestamp=1495708477363, value=\xE5\xA5\xB3

lisi column=userid:id, timestamp=1495708477330, value=002

zhangsan column=account:name, timestamp=1495708405658, value=zhangsan

zhangsan column=account:passport, timestamp=1495708405699, value=968574321

zhangsan column=account:password, timestamp=1495708405669, value=123456

zhangsan column=address:city, timestamp=1495708405773, value=\xE6\xB7\xB1\xE5\x9C\xB3

zhangsan column=address:province, timestamp=1495708405764, value=\xE5\xB9\xBF\xE4\xB8\x9C

zhangsan column=info:age, timestamp=1495708405712, value=26

zhangsan column=info:sex, timestamp=1495708405755, value=\xE7\x94\xB7

zhangsan column=userid:id, timestamp=1495708405444, value=001

2 row(s) in 0.2020 seconds

在hive/bin中运行hive命令行模式

[root@master bin]# ./hive

执行建立关联hbase关联语句hbase_user表

会发现有报错:return code 1 from org.apache.hadoop.hive.ql.exec.DDLTask. MetaException(message:org.apache.hadoop.hbase.client.RetriesExhaustedException: Can't get the locations

hive> CREATE EXTERNAL TABLE hbase_user(key string, idcard string,passport string,country string,name string,password string,

> province string,city string,age string,sex string ,id string)

> STORED BY 'org.apache.hadoop.hive.hbase.HBaseStorageHandler'

> WITH SERDEPROPERTIES ("hbase.columns.mapping" = ":key,account:idcard,account:passport,account:country,account:name,account:password,

> address:province,address:city,info:age,info:sex,userid:id")

> TBLPROPERTIES("hbase.table.name" = "user");

FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.DDLTask. MetaException(message:org.apache.hadoop.hbase.client.RetriesExhaustedException: Can't get the locations

at org.apache.hadoop.hbase.client.RpcRetryingCallerWithReadReplicas.getRegionLocations(RpcRetryingCallerWithReadReplicas.java:312)

at org.apache.hadoop.hbase.client.ScannerCallableWithReplicas.call(ScannerCallableWithReplicas.java:153)

at org.apache.hadoop.hbase.client.ScannerCallableWithReplicas.call(ScannerCallableWithReplicas.java:61)

at org.apache.hadoop.hbase.client.RpcRetryingCaller.callWithoutRetries(RpcRetryingCaller.java:200)

at org.apache.hadoop.hbase.client.ClientScanner.call(ClientScanner.java:320)

at org.apache.hadoop.hbase.client.ClientScanner.nextScanner(ClientScanner.java:295)

at org.apache.hadoop.hbase.client.ClientScanner.initializeScannerInConstruction(ClientScanner.java:160)

at org.apache.hadoop.hbase.client.ClientScanner.<init>(ClientScanner.java:155)

at org.apache.hadoop.hbase.client.HTable.getScanner(HTable.java:811)

at org.apache.hadoop.hbase.MetaTableAccessor.fullScan(MetaTableAccessor.java:602)

at org.apache.hadoop.hbase.MetaTableAccessor.tableExists(MetaTableAccessor.java:366)

at org.apache.hadoop.hbase.client.HBaseAdmin.tableExists(HBaseAdmin.java:303)

at org.apache.hadoop.hbase.client.HBaseAdmin.tableExists(HBaseAdmin.java:313)

at org.apache.hadoop.hive.hbase.HBaseStorageHandler.preCreateTable(HBaseStorageHandler.java:205)

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.createTable(HiveMetaStoreClient.java:742)

[root@master conf]# jps

3945 HMaster

18681 RunJar

2699 NameNode

3330 NodeManager

2951 SecondaryNameNode

3226 ResourceManager

3874 HQuorumPeer

18901 Jps

接着查看下hive日志发现: 都是zookeeper连接失败

Opening socket connection to server localhost/127.0.0.1:21812017-05-25T03:06:12,259 INFO [9b1835d1-6488-4521-99d5-88d3e786be46 main-SendThread(localhost:2181)] zookeeper.ClientCnxn: Opening socket connection to server localhost/127.0.0.1:2181. Will not attempt to authenticate using SASL (unknown error)

2017-05-25T03:06:12,260 WARN [9b1835d1-6488-4521-99d5-88d3e786be46 main-SendThread(localhost:2181)] zookeeper.ClientCnxn: Session 0x0 for server null, unexpected error, closing socket connection and attempting reconnect

java.net.ConnectException: Connection refused

at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)

at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:739)

at org.apache.zookeeper.ClientCnxnSocketNIO.doTransport(ClientCnxnSocketNIO.java:361)

at org.apache.zookeeper.ClientCnxn$SendThread.run(ClientCnxn.java:1081)

2017-05-25T03:06:13,362 INFO [9b1835d1-6488-4521-99d5-88d3e786be46 main-SendThread(localhost:2181)] zookeeper.ClientCnxn: Opening socket connection to server localhost/127.0.0.1:2181. Will not attempt to authenticate using SASL (unknown error)

2017-05-25T03:06:13,363 WARN [9b1835d1-6488-4521-99d5-88d3e786be46 main-SendThread(localhost:2181)] zookeeper.ClientCnxn: Session 0x0 for server null, unexpected error, closing socket connection and attempting reconnect

java.net.ConnectException: Connection refused

at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)

at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:739)

at org.apache.zookeeper.ClientCnxnSocketNIO.doTransport(ClientCnxnSocketNIO.java:361)

at org.apache.zookeeper.ClientCnxn$SendThread.run(ClientCnxn.java:1081)

2017-05-25T03:06:13,465 INFO [9b1835d1-6488-4521-99d5-88d3e786be46 main-SendThread(localhost:2181)] zookeeper.ClientCnxn: Opening socket connection to server localhost/127.0.0.1:2181. Will not attempt to authenticate using SASL (unknown error)

2017-05-25T03:06:13,466 WARN [9b1835d1-6488-4521-99d5-88d3e786be46 main-SendThread(localhost:2181)] zookeeper.ClientCnxn: Session 0x0 for server null, unexpected error, closing socket connection and attempting reconnect

java.net.ConnectException: Connection refused

at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)

at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:739)

at org.apache.zookeeper.ClientCnxnSocketNIO.doTransport(ClientCnxnSocketNIO.java:361)

at org.apache.zookeeper.ClientCnxn$SendThread.run(ClientCnxn.java:1081)

2017-05-25T03:06:14,568 INFO [9b1835d1-6488-4521-99d5-88d3e786be46 main-SendThread(localhost:2181)] zookeeper.ClientCnxn: Opening socket connection to server localhost/127.0.0.1:2181. Will not attempt to authenticate using SASL (unknown error)

2017-05-25T03:06:14,569 WARN [9b1835d1-6488-4521-99d5-88d3e786be46 main-SendThread(localhost:2181)] zookeeper.ClientCnxn: Session 0x0 for server null, unexpected error, closing socket connection and attempting reconnect

<property>

<name>hive.zookeeper.quorum</name>

<value>master,node1,node2</value>

<description>

List of ZooKeeper servers to talk to. This is needed for:

1. Read/write locks - when hive.lock.manager is set to

org.apache.hadoop.hive.ql.lockmgr.zookeeper.ZooKeeperHiveLockManager,

2. When HiveServer2 supports service discovery via Zookeeper.

3. For delegation token storage if zookeeper store is used, if

hive.cluster.delegation.token.store.zookeeper.connectString is not set

4. LLAP daemon registry service

</description>

</property><property>

<name>hive.zookeeper.client.port</name>

<value>2181</value>

<description>

The port of ZooKeeper servers to talk to.

If the list of Zookeeper servers specified in hive.zookeeper.quorum

does not contain port numbers, this value is used.

</description>

</property>而 hbase集群中的用zookeeper的默认的端口是2222,所以为了端口统一, 把hbase-site.xml中的改成2181,记得重启服务

或者直接 把hbase-site.xml 复制到hive的conf目录下,hive会读取hbase的zookeeper的 zookeeper.quorum和 zookeeper.port

两种方法都可以解决问题

接着在hive中再次执行 create table语句

hive> CREATE EXTERNAL TABLE hbase_user(key string, idcard string,passport string,country string,name string,password string,

> province string,city string,age string,sex string ,id string)

> STORED BY 'org.apache.hadoop.hive.hbase.HBaseStorageHandler'

> WITH SERDEPROPERTIES ("hbase.columns.mapping" = ":key,account:idcard,account:passport,account:country,account:name,account:password,

> address:province,address:city,info:age,info:sex,userid:id")

> TBLPROPERTIES("hbase.table.name" = "user");

OK

Time taken: 20.323 seconds

hive> show tables;

OK

apachelog

hbase_user

Time taken: 2.75 seconds, Fetched: 2 row(s)

执行成功,

接着用hiveql查询下数据

hive> select * from hbase_user;

OK

lisi NULL 96857123123231 NULL lisi 654321 广东 深圳 38 女 002

zhangsan NULL 968574321 NULL zhangsan 123456 广东 深圳 26 男 001

Time taken: 5.798 seconds, Fetched: 2 row(s)

hive> describe hbase_user;

OK

key string

idcard string

passport string

country string

name string

password string

province string

city string

age string

sex string

id string

Time taken: 3.785 seconds, Fetched: 11 row(s)

hive> select key ,idcard,password,country,name, passport,province,city,age,sex,id from hbase_user;

OK

lisi NULL 654321 NULL lisi 96857123123231 广东 深圳 38 女 002

zhangsan NULL 123456 china zhangsan 968574321 广东 深圳 26 男 001

Time taken: 2.341 seconds, Fetched: 2 row(s)..null 是因为 hbase的column没有设置idcard字段值,和 country的值所以是为null

给hbase 表 user设置country看看,和idcard

./hbase shell

hbase(main):003:0> put 'user','zhangsan','account:idcard','420923156366998855';

hbase(main):004:0* put 'user','lisi','account:idcard','520369856366998855';

hbase(main):005:0* put 'user','lisi','account:country','china';hive> select key ,idcard,password,country,name, passport,province,city,age,sex,id from hbase_user;

OK

lisi 520369856366998855 654321 china lisi 96857123123231 广东 深圳 38 女 002

zhangsan 420923156366998855 123456 china zhangsan 968574321 广东 深圳 26 男 001

Time taken: 2.388 seconds, Fetched: 2 row(s)

hive> select * from hbase_user where name='zhangsan';

OK

zhangsan 420923156366998855 968574321 china zhangsan 123456 广东 深圳 26 男 001

Time taken: 2.651 seconds, Fetched: 1 row(s)

hive> select count(key) from hbase_user;

WARNING: Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. tez, spark) or using Hive 1.X releases.

Query ID = root_20170525040249_f808c765-79f6-43c0-aa94-ebfed7751091

Total jobs = 1

Launching Job 1 out of 1

Number of reduce tasks determined at compile time: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Starting Job = job_1495621107567_0001, Tracking URL = http://master:8088/proxy/application_1495621107567_0001/

Kill Command = /usr/tools/hadoop/bin/hadoop job -kill job_1495621107567_0001

Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 1

FAILED: Execution Error, return code -101 from org.apache.hadoop.hive.ql.exec.mr.MapRedTask. PermGen space

执行count的时候,运行mapreducer,PermGen space了。。。。 也是醉了

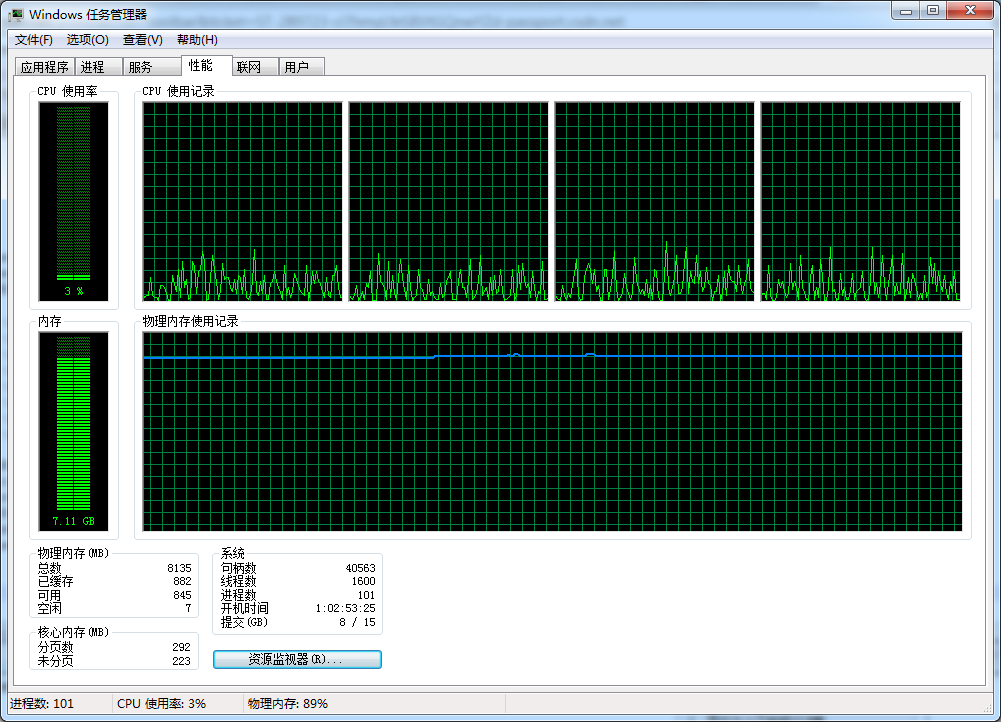

window7机器上了运行了虚拟机,3个linux组成的 hadoop,hbase,hive集群, 8g内存 内存使用率走横线,.....

实际上有时是可以count出来数据的,估计windows开了不少进程

710

710

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?