Setting Up a Spark Streaming + IntelliJ IDEA + Maven Development Environment

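Chinese-language material on Spark stream processing is very scarce, and explanations of the finer details of setting up a development environment are rarer still. This article shows how to use IntelliJ IDEA on Windows to connect to the Spark nodes on a remote server and receive log data collected by FlumeNG for real-time processing. The development language is Scala.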

We assume that a Spark 1.5.2 cluster is already deployed and running in Standalone HA mode, and that a FlumeNG agent is already sending a real-time data stream.

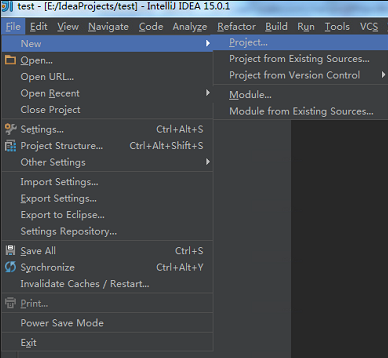

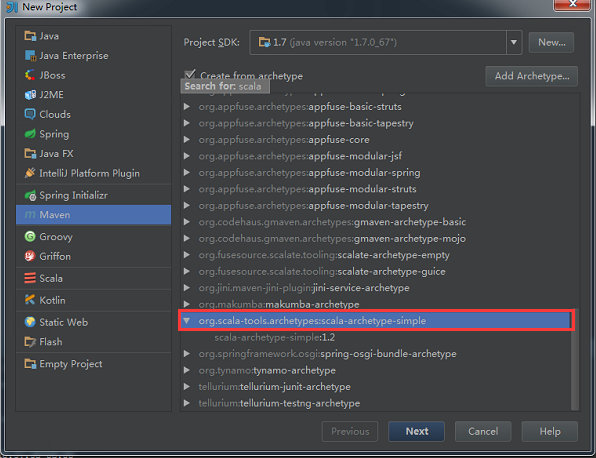

Create a new Maven project

Select Create from archetype > scala-archetype-simple

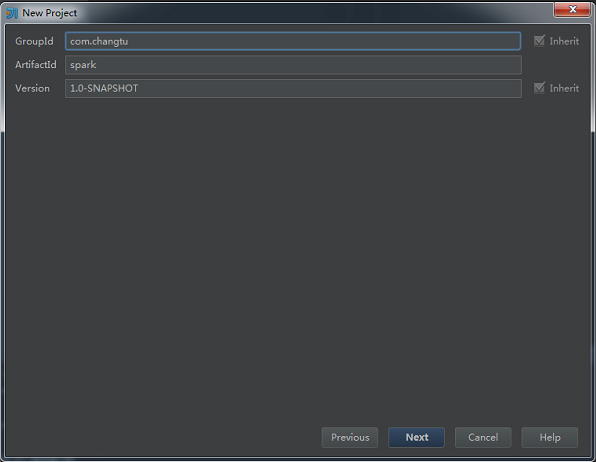

Fill in the GroupId and ArtifactId, then click Next through the remaining pages.

Configure pom.xml as follows. It needs these dependencies: flume-ng-sdk, spark-streaming-flume_2.10, spark-streaming_2.10, jackson-core, jackson-databind and jackson-module-scala_2.10:

```xml
<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/maven-v4_0_0.xsd">
  <modelVersion>4.0.0</modelVersion>
  <groupId>com.changtu</groupId>
  <artifactId>spark</artifactId>
  <version>1.0-SNAPSHOT</version>
  <inceptionYear>2008</inceptionYear>

  <properties>
    <scala.version>2.10.6</scala.version>
  </properties>

  <repositories>
    <repository>
      <id>scala-tools.org</id>
      <name>Scala-Tools Maven2 Repository</name>
      <url>http://scala-tools.org/repo-releases</url>
    </repository>
  </repositories>

  <pluginRepositories>
    <pluginRepository>
      <id>scala-tools.org</id>
      <name>Scala-Tools Maven2 Repository</name>
      <url>http://scala-tools.org/repo-releases</url>
    </pluginRepository>
  </pluginRepositories>

  <dependencies>
    <dependency>
      <groupId>org.scala-lang</groupId>
      <artifactId>scala-library</artifactId>
      <version>${scala.version}</version>
    </dependency>
    <dependency>
      <groupId>junit</groupId>
      <artifactId>junit</artifactId>
      <version>3.8.1</version>
      <scope>test</scope>
    </dependency>
    <dependency>
      <groupId>org.apache.flume</groupId>
      <artifactId>flume-ng-sdk</artifactId>
      <version>1.5.2</version>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-streaming-flume_2.10</artifactId>
      <version>1.5.2</version>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-streaming_2.10</artifactId>
      <version>1.5.2</version>
    </dependency>
    <dependency>
      <groupId>com.fasterxml.jackson.core</groupId>
      <artifactId>jackson-core</artifactId>
      <version>2.4.4</version>
    </dependency>
    <dependency>
      <groupId>com.fasterxml.jackson.core</groupId>
      <artifactId>jackson-databind</artifactId>
      <version>2.4.4</version>
    </dependency>
    <dependency>
      <groupId>com.fasterxml.jackson.module</groupId>
      <artifactId>jackson-module-scala_2.10</artifactId>
      <version>2.4.4</version>
    </dependency>
  </dependencies>

  <build>
    <sourceDirectory>src/main/scala</sourceDirectory>
    <testSourceDirectory>src/test/scala</testSourceDirectory>
    <plugins>
      <plugin>
        <groupId>org.scala-tools</groupId>
        <artifactId>maven-scala-plugin</artifactId>
        <executions>
          <execution>
            <goals>
              <goal>compile</goal>
              <goal>testCompile</goal>
            </goals>
          </execution>
        </executions>
        <configuration>
          <scalaVersion>${scala.version}</scalaVersion>
          <args>
            <arg>-target:jvm-1.7</arg>
          </args>
        </configuration>
      </plugin>
      <plugin>
        <groupId>org.apache.maven.plugins</groupId>
        <artifactId>maven-eclipse-plugin</artifactId>
        <configuration>
          <downloadSources>true</downloadSources>
          <buildcommands>
            <buildcommand>ch.epfl.lamp.sdt.core.scalabuilder</buildcommand>
          </buildcommands>
          <additionalProjectnatures>
            <projectnature>ch.epfl.lamp.sdt.core.scalanature</projectnature>
          </additionalProjectnatures>
          <classpathContainers>
            <classpathContainer>org.eclipse.jdt.launching.JRE_CONTAINER</classpathContainer>
            <classpathContainer>ch.epfl.lamp.sdt.launching.SCALA_CONTAINER</classpathContainer>
          </classpathContainers>
        </configuration>
      </plugin>
    </plugins>
  </build>

  <reporting>
    <plugins>
      <plugin>
        <groupId>org.scala-tools</groupId>
        <artifactId>maven-scala-plugin</artifactId>
        <configuration>
          <scalaVersion>${scala.version}</scalaVersion>
        </configuration>
      </plugin>
    </plugins>
  </reporting>
</project>
```

The log4j.properties configuration is as follows:

```properties
log4j.rootLogger=WARN,stdout
log4j.appender.stdout=org.apache.log4j.ConsoleAppender
log4j.appender.stdout.layout=org.apache.log4j.PatternLayout
log4j.appender.stdout.layout.ConversionPattern=%5p - %m%n
```

Create a new Scala object, IPHandler.scala:

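```scala
package com.changtu

import java.nio.charset.StandardCharsets

import org.apache.spark.{SparkConf, SparkContext}
import org.apache.spark.storage.StorageLevel
import org.apache.spark.streaming.flume.FlumeUtils
import org.apache.spark.streaming.{Seconds, StreamingContext}

// import scala.io.Source  // needed only if getIPJSON below is re-enabled

/**
 * IP processing
 */
object IPHandler {

  /**
   * Fetches IP information from Taobao's REST API and returns it as JSON
   * @param ipAddr IP address
   */
  // def getIPJSON(ipAddr: String): String = {
  //   Source.fromURL("http://ip.taobao.com/service/getIpInfo.php?ip=" + ipAddr).mkString
  // }

  /**
   * Entry point: receives the events pushed by the FlumeNG agent and prints
   * each event's headers and body for every 2-second batch.
   */
  def main(args: Array[String]) {
    val conf = new SparkConf().setAppName("FlumeNG sink")
    val sc = new SparkContext(conf)
    // Batch interval: 2 seconds
    val ssc = new StreamingContext(sc, Seconds(2))
    // "xxx.xxx.xxx": the IP configured for the FlumeNG agent, i.e. the host:port its Avro sink sends to
    val stream = FlumeUtils.createStream(ssc, "xxx.xxx.xxx", 22221, StorageLevel.MEMORY_AND_DISK)
    // getHeaders is the same field the original code read via get(0); decode the body as UTF-8
    stream.map(e => "FlumeNG:header:" + e.event.getHeaders.toString +
      "body: " + new String(e.event.getBody.array, StandardCharsets.UTF_8)).print()
    ssc.start()
    ssc.awaitTermination()
    sc.stop()
  }
}
```

Creating the Scala object in IDEA: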

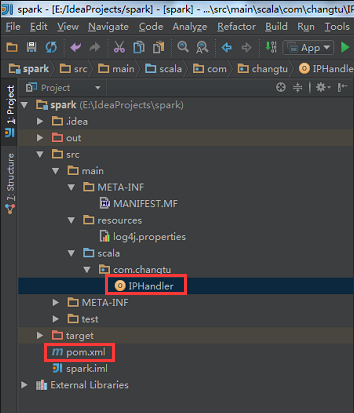
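The `map` closure in IPHandler builds each printed line from the event's header map and its body buffer. The following is a minimal, Spark-free sketch of just that formatting step, so it can be tested without a cluster. It assumes the body is a `java.nio.ByteBuffer` holding UTF-8 text; `FlumeEventFormat`, `render` and `bodyAsString` are hypothetical names for illustration. One design difference from the tutorial code: `ByteBuffer.array` returns the whole backing array regardless of position and limit, and throws for read-only or non-array-backed buffers, whereas `bodyAsString` copies only the readable bytes from a duplicate of the buffer.

```scala
import java.nio.ByteBuffer
import java.nio.charset.StandardCharsets
import java.util.{LinkedHashMap => JLinkedHashMap, Map => JMap}

object FlumeEventFormat {

  /** Decodes the readable bytes of `body` as UTF-8 (an assumption about the payload).
    * Reads from a duplicate, so the caller's buffer position is left untouched,
    * and does not need an accessible backing array. */
  def bodyAsString(body: ByteBuffer): String = {
    val view = body.duplicate()
    val bytes = new Array[Byte](view.remaining())
    view.get(bytes)
    new String(bytes, StandardCharsets.UTF_8)
  }

  /** Builds the same "FlumeNG:header:...body: ..." line that IPHandler prints. */
  def render(headers: JMap[CharSequence, CharSequence], body: ByteBuffer): String =
    "FlumeNG:header:" + headers.toString + "body: " + bodyAsString(body)

  def main(args: Array[String]): Unit = {
    // Hypothetical sample event, shaped like the ones in the run output
    val headers = new JLinkedHashMap[CharSequence, CharSequence]()
    headers.put("flume.client.log4j.message.encoding", "UTF8")
    val body = ByteBuffer.wrap("FlumeNG MESSAGE1".getBytes(StandardCharsets.UTF_8))
    println(render(headers, body))
  }
}
```

Running `main` prints `FlumeNG:header:{flume.client.log4j.message.encoding=UTF8}body: FlumeNG MESSAGE1`. Inside the streaming job the same call would read `render(e.event.getHeaders, e.event.getBody)`.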

The overall project directory structure is shown below:

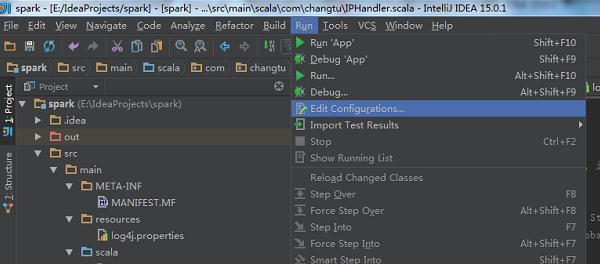

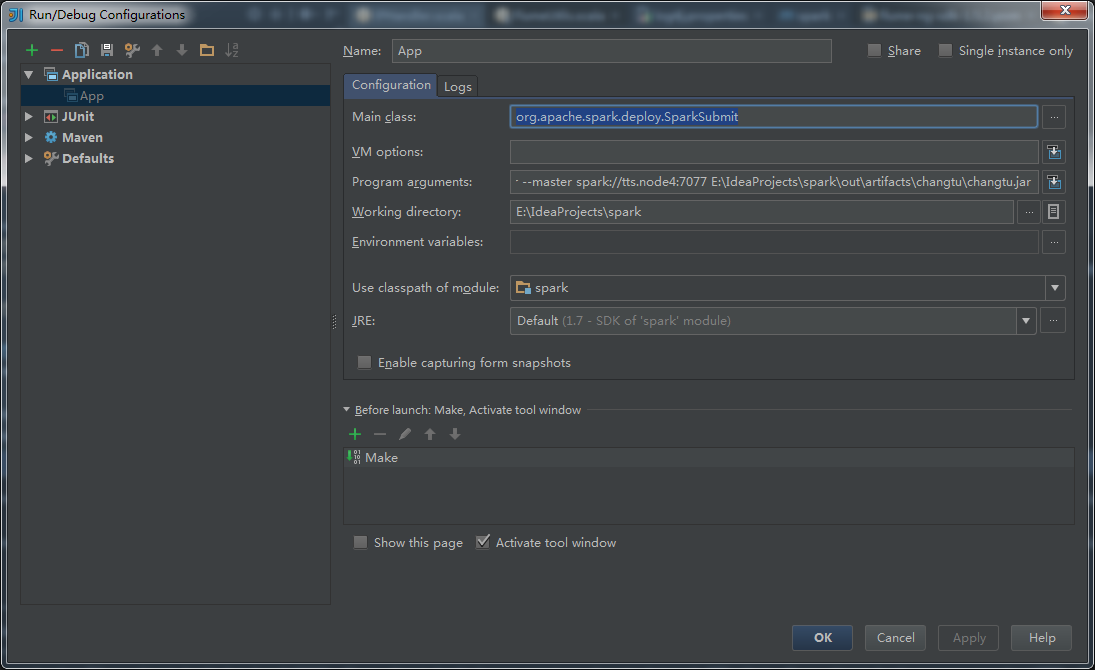

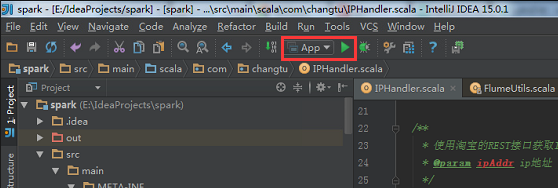

From the menu bar, choose Run > Edit Configurations and add a configuration with the following settings.

Main class:

```
org.apache.spark.deploy.SparkSubmit
```

Program arguments:

```
--class com.changtu.IPHandler
--jars E:\IdeaProjects\jars\spark-streaming-flume_2.10-1.5.2.jar,E:\IdeaProjects\jars\flume-ng-sdk-1.5.2.jar
--master spark://tts.node4:7077
E:\IdeaProjects\spark\out\artifacts\changtu\changtu.jar
```
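Because this run configuration launches `org.apache.spark.deploy.SparkSubmit` directly, the Program arguments are exactly the argument list spark-submit receives, and their order matters: spark-submit's own options (`--class`, `--jars` as a single comma-separated value, `--master`) come first, then the application jar, and anything after the jar is handed to `IPHandler.main`. The sketch below only assembles such a list in that order; `SubmitArgs.build` is a hypothetical helper for illustration, not part of Spark.

```scala
object SubmitArgs {

  /** Hypothetical helper: spark-submit options first, then the application jar,
    * then any arguments meant for the application's own main(). */
  def build(mainClass: String,
            jars: Seq[String],
            master: String,
            appJar: String,
            appArgs: Seq[String] = Nil): Seq[String] = {
    // --jars takes one comma-separated value rather than a repeated flag
    val jarOpts = if (jars.isEmpty) Seq.empty[String] else Seq("--jars", jars.mkString(","))
    Seq("--class", mainClass) ++ jarOpts ++ Seq("--master", master) ++ Seq(appJar) ++ appArgs
  }

  def main(args: Array[String]): Unit = {
    // Values copied from the run configuration above
    val argv = build(
      mainClass = "com.changtu.IPHandler",
      jars = Seq(
        """E:\IdeaProjects\jars\spark-streaming-flume_2.10-1.5.2.jar""",
        """E:\IdeaProjects\jars\flume-ng-sdk-1.5.2.jar"""),
      master = "spark://tts.node4:7077",
      appJar = """E:\IdeaProjects\spark\out\artifacts\changtu\changtu.jar""")
    println(argv.mkString(" "))
  }
}
```

Putting the jar last is what lets the same list double as a spark-submit command line on the server.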

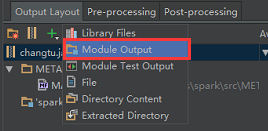

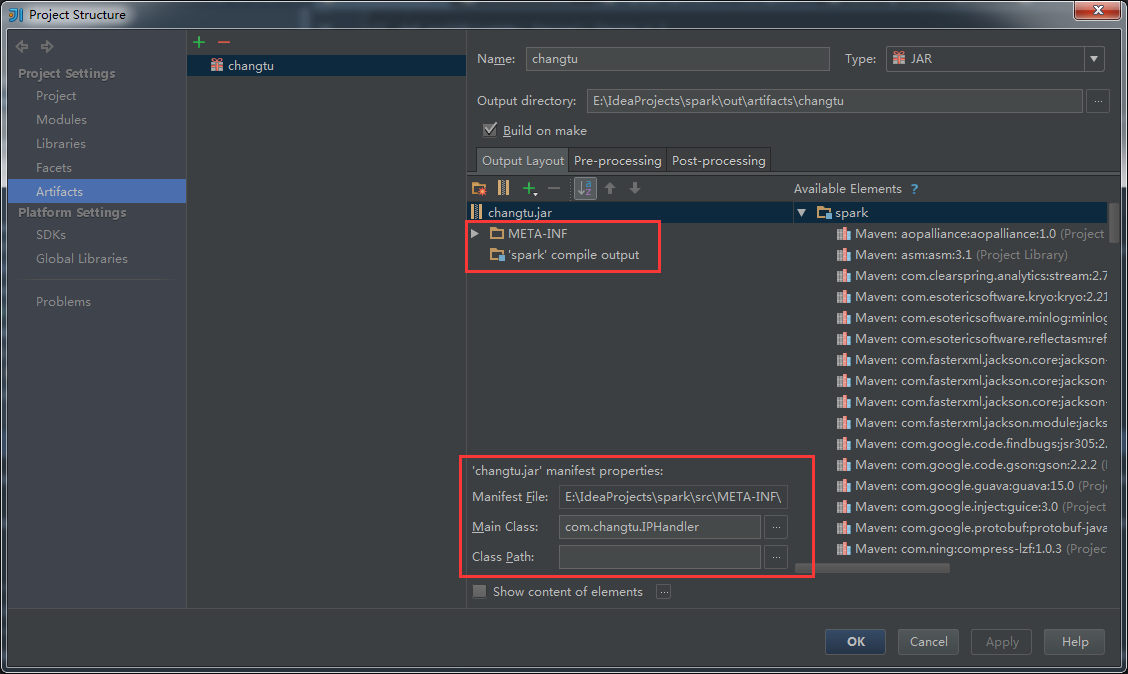

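From the menu bar, choose File > Project Structure to configure packaging.

The main steps are adding the Module Output and the META-INF (manifest) settings to the artifact. Once that is configured, run the configuration.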

Run results:

```
-------------------------------------------
Time: 1457234138000 ms
-------------------------------------------
FlumeNG:header:{flume.client.log4j.log.level=20000, flume.client.log4j.logger.name=com.changtu.datapush.DBToolkit, flume.client.log4j.message.encoding=UTF8, flume.client.log4j.timestamp=1457234136498}body: 2016-03-06 11:15:36 INFO [com.changtu.datapush.DBToolkit:22] - FlumeNG MESSAGES0
FlumeNG:header:{flume.client.log4j.log.level=20000, flume.client.log4j.logger.name=com.changtu.datapush.DBToolkit, flume.client.log4j.message.encoding=UTF8, flume.client.log4j.timestamp=1457234137595}body: 2016-03-06 11:15:37 INFO [com.changtu.datapush.DBToolkit:22] - FlumeNG MESSAGE1
-------------------------------------------
Time: 1457234140000 ms
-------------------------------------------
FlumeNG:header:{flume.client.log4j.log.level=20000, flume.client.log4j.logger.name=com.changtu.datapush.DBToolkit, flume.client.log4j.message.encoding=UTF8, flume.client.log4j.timestamp=1457234138597}body: 2016-03-06 11:15:38 INFO [com.changtu.datapush.DBToolkit:22] - FlumeNG MESSAGE2
FlumeNG:header:{flume.client.log4j.log.level=20000, flume.client.log4j.logger.name=com.changtu.datapush.DBToolkit, flume.client.log4j.message.encoding=UTF8, flume.client.log4j.timestamp=1457234139604}body: 2016-03-06 11:15:39 INFO [com.changtu.datapush.DBToolkit:22] - FlumeNG MESSAGE3
-------------------------------------------
Time: 1457234142000 ms
-------------------------------------------
FlumeNG:header:{flume.client.log4j.log.level=20000, flume.client.log4j.logger.name=com.changtu.datapush.DBToolkit, flume.client.log4j.message.encoding=UTF8, flume.client.log4j.timestamp=1457234140606}body: 2016-03-06 11:15:40 INFO [com.changtu.datapush.DBToolkit:22] - FlumeNG MESSAGE4
FlumeNG:header:{flume.client.log4j.log.level=20000, flume.client.log4j.logger.name=com.changtu.datapush.DBToolkit, flume.client.log4j.message.encoding=UTF8, flume.client.log4j.timestamp=1457234141608}body: 2016-03-06 11:15:41 INFO [com.changtu.datapush.DBToolkit:22] - FlumeNG MESSAGE5
-------------------------------------------
Time: 1457234144000 ms
-------------------------------------------
FlumeNG:header:{flume.client.log4j.log.level=20000, flume.client.log4j.logger.name=com.changtu.datapush.DBToolkit, flume.client.log4j.message.encoding=UTF8, flume.client.log4j.timestamp=1457234142610}body: 2016-03-06 11:15:42 INFO [com.changtu.datapush.DBToolkit:22] - FlumeNG MESSAGE6
FlumeNG:header:{flume.client.log4j.log.level=20000, flume.client.log4j.logger.name=com.changtu.datapush.DBToolkit, flume.client.log4j.message.encoding=UTF8, flume.client.log4j.timestamp=1457234143612}body: 2016-03-06 11:15:43 INFO [com.changtu.datapush.DBToolkit:22] - FlumeNG MESSAGE7
-------------------------------------------
Time: 1457234146000 ms
-------------------------------------------
FlumeNG:header:{flume.client.log4j.log.level=20000, flume.client.log4j.logger.name=com.changtu.datapush.DBToolkit, flume.client.log4j.message.encoding=UTF8, flume.client.log4j.timestamp=1457234144614}body: 2016-03-06 11:15:44 INFO [com.changtu.datapush.DBToolkit:22] - FlumeNG MESSAGE8
FlumeNG:header:{flume.client.log4j.log.level=20000, flume.client.log4j.logger.name=com.changtu.datapush.DBToolkit, flume.client.log4j.message.encoding=UTF8, flume.client.log4j.timestamp=1457234145616}body: 2016-03-06 11:15:45 INFO [com.changtu.datapush.DBToolkit:22] - FlumeNG MESSAGE9
```

To package the job and run it on the server instead, launch it with the following command:

```shell
spark-submit --name "spark-flume" \
  --master spark://tts.node4:7077 \
  --class com.changtu.IPHandler \
  --jars /appl/scripts/spark-streaming-flume_2.10-1.5.2.jar,/appl/scripts/flume-ng-sdk-1.5.2.jar \
  --executor-memory 300m \
  /appl/scripts/changtu.jar
```
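With `Seconds(2)`, each block in the run results is headed by a batch time 2000 ms after the previous one, and the ten events land two per batch. The worked check below reproduces that grouping from the printed timestamps. It is a minimal sketch under stated assumptions: batch boundaries are aligned to multiples of the interval, a batch labeled `T` holds records received in `[T - 2000, T)` ms, and the `flume.client.log4j.timestamp` header stands in for the receive time, which only holds while delivery latency (plus the receiver's block interval) stays small relative to the batch interval. `BatchTime.batchFor` is a hypothetical helper, not a Spark API.

```scala
object BatchTime {

  /** End time (ms) of the batch a record received at `receivedMs` falls into,
    * assuming interval-aligned batches that cover [T - intervalMs, T). */
  def batchFor(receivedMs: Long, intervalMs: Long): Long =
    (receivedMs / intervalMs + 1) * intervalMs

  def main(args: Array[String]): Unit = {
    // flume.client.log4j.timestamp values copied from the run results
    val clientTs = Seq(
      1457234136498L, 1457234137595L, 1457234138597L, 1457234139604L,
      1457234140606L, 1457234141608L, 1457234142610L, 1457234143612L,
      1457234144614L, 1457234145616L)

    // Group by computed batch time and report how many events each batch gets
    clientTs.groupBy(batchFor(_, 2000L)).toSeq.sortBy(_._1).foreach {
      case (batch, ts) => println(s"Time: $batch ms -> ${ts.size} events")
    }
  }
}
```

Running `main` prints five lines, for batch times 1457234138000 through 1457234146000 ms, each reporting 2 events, which matches the `Time:` headers in the output.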

Added by lubinsu

QQ:380732421

Mail:lubinsu@gmail.com

Date:2016-03-06
