The previous post showed how to play media files through live555. This post covers playing a live (real-time) stream through live555.

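Compared with the file-based case, a live stream needs only one additional subclass: a class that inherits from RTSPServer and implements the custom behavior.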

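For example, when a client request arrives, the server first calls lookupServerMediaSession to find the matching session. This is where you can define your own streamName, that is, the string that follows the address in the URL. If no session is found, the override creates whichever session it needs, fills in its own SDP information, and so on.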
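To make the streamName idea concrete, here is a minimal sketch of how such a name could be parsed into a channel number and a stream type. It assumes the naming pattern "streamNameCH<channel>StreamType<type>" used in the example URL of this post; the StreamRequest struct and the parseStreamName function are hypothetical helpers and are not part of live555 or of the original project.

```cpp
#include <cstdio>

// Hypothetical helper type; not part of live555 or of the original project.
struct StreamRequest {
    int channelNo;   // channel number taken from the "CH" field
    int streamType;  // stream type taken from the "StreamType" field
};

// Parses names such as "streamNameCH00StreamType00".
// Returns false for any name that does not match the assumed pattern exactly.
bool parseStreamName(const char* streamName, StreamRequest& out) {
    if (streamName == nullptr) return false;
    int channel = -1;
    int type = -1;
    int consumed = 0;
    // %n stores the number of characters consumed so far, which lets us
    // reject names that carry trailing characters after the second number.
    const int matched = std::sscanf(streamName, "streamNameCH%2dStreamType%2d%n",
                                    &channel, &type, &consumed);
    if (matched != 2 || streamName[consumed] != '\0') return false;
    if (channel < 0 || type < 0) return false;
    out.channelNo = channel;
    out.streamType = type;
    return true;
}
```

A lookupServerMediaSession override could call such a helper first and use the two fields when creating the subsession, rejecting the request when the helper returns false.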

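The subclass of RTSPServer is implemented as follows. For the details you can refer to the implementation of RTSPServer itself; only the part that creates and populates your own session needs to change.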

#include "DemoH264RTSPServer.h"

#include "DemoH264Interface.h"

#include "DemoH264MediaSubsession.h"

DemoH264RTSPServer* DemoH264RTSPServer::createNew(UsageEnvironment& env, Port rtspPort,

UserAuthenticationDatabase* authDatabase, unsigned reclamationTestSeconds)

{

int rtspSock = -1;

rtspSock = setUpOurSocket(env, rtspPort);

if(rtspSock == -1 )

{

DBG_LIVE555_PRINT("setUpOurSocket failed\n");

return NULL;

}

return new DemoH264RTSPServer(env, rtspSock, rtspPort, authDatabase, reclamationTestSeconds);

}

DemoH264RTSPServer::DemoH264RTSPServer(UsageEnvironment& env, int ourSock, Port rtspPort, UserAuthenticationDatabase* authDatabase,

unsigned reclamationTestSeconds):RTSPServer(env, ourSock, rtspPort, authDatabase, reclamationTestSeconds), fRTSPServerState(true)

{

DBG_LIVE555_PRINT("create DemoH264RTSPServer \n");

}

DemoH264RTSPServer::~DemoH264RTSPServer()

{

}

ServerMediaSession* DemoH264RTSPServer::lookupServerMediaSession(const char* streamName)

{

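// streamName is the string that follows the address in the URL, e.g. for

// rtsp://10.0.2.15/streamNameCH00StreamType00, streamName = "streamNameCH00StreamType00";

// When a client sends a URL request, streamName can be parsed to decide which channel and which stream type is requested

// 1. Parsing the URL is not handled here; a callback can be added to do it

int channelNO = 0; // channel number

int streamType = 0; // stream type

int videoType = 1; // video or audio

int requestType = 0; // request type: live preview or playback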

ServerMediaSession* sms = NULL;

switch(requestType)

{

case 0: // realtime

sms = RTSPServer::lookupServerMediaSession(streamName);

if ( NULL == sms )

{

sms = ServerMediaSession::createNew(envir(), streamName, NULL, NULL);

DemoH264MediaSubsession *session = DemoH264MediaSubsession::createNew(envir(), streamType, videoType, channelNO, false);

sms->addSubsession(session);

// Register only a newly created session; a session found by the lookup is already registered

this->addServerMediaSession(sms);

}

break;

case 1:

// play back

DBG_LIVE555_PRINT("play back request !\n");

break;

default:

DBG_LIVE555_PRINT("unknown request type!\n");

break;

}

return sms;

}
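The "look up first, create and register only on a miss" flow used by lookupServerMediaSession can be sketched without live555. The Session and SessionRegistry types below are hypothetical stand-ins written for illustration only; they do not reproduce the live555 classes or their ownership rules.

```cpp
#include <map>
#include <memory>
#include <string>
#include <utility>

// Hypothetical stand-in for a media session; it only records its name.
struct Session {
    explicit Session(const std::string& n) : name(n) {}
    std::string name;
};

// Hypothetical registry: returns the existing session for a name,
// or creates and registers a new one when the name is unknown.
class SessionRegistry {
public:
    Session* lookupOrCreate(const std::string& streamName) {
        auto it = sessions_.find(streamName);
        if (it != sessions_.end()) {
            return it->second.get();  // hit: nothing is created or re-registered
        }
        std::unique_ptr<Session> created(new Session(streamName));
        Session* raw = created.get();
        sessions_[streamName] = std::move(created);  // miss: register exactly once
        ++createdCount_;
        return raw;
    }

    int createdCount() const { return createdCount_; }

private:
    std::map<std::string, std::unique_ptr<Session>> sessions_;
    int createdCount_ = 0;
};
```

Requesting the same name twice returns the same object and creates it only once, which is the behavior a server wants when several clients ask for the same stream.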

DemoH264RTSPServer::DemoH264RTSPClientSession* DemoH264RTSPServer::createNewClientSession(unsigned clientSessionID, int clientSocket, struct sockaddr_in clientAddr)

{

DemoH264RTSPServer::DemoH264RTSPClientSession* client = new DemoH264RTSPClientSession(*this, clientSessionID, clientSocket, clientAddr);

fClientSessionList.push_back(client);

DBG_LIVE555_PRINT("add client session success!\n");

return client;

}

int DemoH264RTSPServer::stopDemoH264RTSPServer()

{

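// Delete all client sessions

std::list<DemoH264RTSPServer::DemoH264RTSPClientSession*> ::iterator pos =

this->fClientSessionList.begin();

for( ; pos != this->fClientSessionList.end(); ++pos )

{

DemoH264RTSPServer::DemoH264RTSPClientSession* tmp = *pos;

delete tmp;

}

this->fClientSessionList.clear(); // drop the now-dangling pointers

delete this; // destroys the server itself; no member may be touched after this line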

return 0;

}

DemoH264RTSPServer::DemoH264RTSPClientSession::DemoH264RTSPClientSession(DemoH264RTSPServer& rtspServer,unsigned clientSessionID, int clientSocket, struct sockaddr_in clientAddr):

RTSPServer::RTSPClientSession(rtspServer, clientSessionID, clientSocket, clientAddr)

{

}

DemoH264RTSPServer::DemoH264RTSPClientSession::~DemoH264RTSPClientSession()

{

/*

std::list<DemoH264RTSPServer::DemoH264RTSPClientSession*> ::iterator pos =

((DemoH264RTSPServer&)fOurServer).fClientSessionList.begin();

for(pos; pos != ((DemoH264RTSPServer&)fOurServer).fClientSessionList.end(); pos ++ )

{

if ((*pos)->fOurSessionId == this->fOurSessionId)

{

((DemoH264RTSPServer&)fOurServer).fClientSessionList.erase(pos);

DBG_LIVE555_PRINT("client session has been delete !\n");

break ;

}

}

*/

}

#include "DemoH264FrameSource.h"

#include "DemoH264Interface.h"

DemoH264FrameSource::DemoH264FrameSource(UsageEnvironment& env, long sourceHandle, int sourceType):

FramedSource(env), fSourceHandle(sourceHandle), fLastBufSize(0), fLeftDataSize(0), fSourceType(sourceType), fFirstFrame(1)

{

// Open the media source; for a live stream this is where the preparation before streaming is done

fDataBuf = (char*)malloc(2*1024*1024);

if(fDataBuf == NULL )

{

DBG_LIVE555_PRINT(" create source data buf failed!\n");

}

}

DemoH264FrameSource::~DemoH264FrameSource()

{

if(fDataBuf)

{

free(fDataBuf);

fDataBuf = NULL;

}

}

DemoH264FrameSource* DemoH264FrameSource::createNew(UsageEnvironment& env, int streamType, int channelNO, int sourceType)

{

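// Create the source from streamType and channelNO, requesting the matching stream from the front-end device

long sourceHandle = openStreamHandle(channelNO, streamType);

if(sourceHandle <= 0) // openStreamHandle returns -1 on failure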

{

DBG_LIVE555_PRINT("open the source stream failed!\n");

return NULL;

}

DBG_LIVE555_PRINT("create H264FrameSource !\n");

return new DemoH264FrameSource(env, sourceHandle, sourceType);

}

/* Get the total length of the file to be read; live555 limits how much data can be sent at a time */

long filesize(FILE *stream)

{

long curpos, length;

curpos = ftell(stream);

fseek(stream, 0L, SEEK_END);

length = ftell(stream);

fseek(stream, curpos, SEEK_SET);

return length;

}

void DemoH264FrameSource::doGetNextFrame()

{

int ret = 0;

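// Call the device interface to fetch one frame of data

if (fLeftDataSize == 0)

{

ret = getStreamData(fSourceHandle, fDataBuf,&fLastBufSize, &fLeftDataSize,fSourceType);

if (ret <= 0)

{

DBG_LIVE555_PRINT("getStreamData failed!\n");

handleClosure(this); // tell the downstream object that the source has ended instead of stalling silently

return;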

}

}

int fNewFrameSize = fLeftDataSize;

if(fNewFrameSize > fMaxSize)

{ // more data than fits: deliver only the first fMaxSize bytes now

fFrameSize = fMaxSize;

fNumTruncatedBytes = fNewFrameSize - fMaxSize;

fLeftDataSize = fNewFrameSize - fMaxSize;

// memmove is used here because it permits overlapping source and destination regions

memmove(fTo, fDataBuf, fFrameSize);

memmove(fDataBuf, fDataBuf+fMaxSize, fLeftDataSize);

}

else

{ //all the data

fFrameSize = fNewFrameSize;

fLeftDataSize = 0;

memmove(fTo, fDataBuf, fFrameSize);

}

gettimeofday(&fPresentationTime, NULL);

if (fFirstFrame)

{

fDurationInMicroseconds = 40000;

nextTask() = envir().taskScheduler().scheduleDelayedTask(100000,

(TaskFunc*)FramedSource::afterGetting, this);

fFirstFrame = 0;

}

else

{

FramedSource::afterGetting(this);

}

}
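The buffering in doGetNextFrame, where a frame larger than fMaxSize is handed out in pieces and the remainder is shifted to the front of the buffer, can be sketched in isolation. PendingFrame below is a hypothetical helper written for illustration; it mirrors the fDataBuf and fLeftDataSize bookkeeping but is not part of live555 or of the original project.

```cpp
#include <cstddef>
#include <cstring>
#include <vector>

// Hypothetical helper that holds one frame and hands it out in bounded chunks.
class PendingFrame {
public:
    // Stores a copy of the frame; all of it is pending afterwards.
    void load(const char* data, std::size_t size) {
        buf_.assign(data, data + size);
        left_ = size;
    }

    // Copies at most maxSize pending bytes into 'to' and returns the count.
    std::size_t deliver(char* to, std::size_t maxSize) {
        const std::size_t n = left_ < maxSize ? left_ : maxSize;
        if (n == 0) return 0;
        std::memcpy(to, buf_.data(), n);
        left_ -= n;
        // Source and destination overlap inside the same buffer,
        // so memmove (not memcpy) is required for the shift.
        std::memmove(buf_.data(), buf_.data() + n, left_);
        return n;
    }

    std::size_t left() const { return left_; }

private:
    std::vector<char> buf_;
    std::size_t left_ = 0;
};
```

Each call to deliver plays the role of one doGetNextFrame invocation: a new frame would be fetched only once left() has dropped back to zero.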

void DemoH264FrameSource::doStopGetFrame()

{

closeStreamHandle(fSourceHandle);

}

#include "DemoH264MediaSubsession.h"

#include "DemoH264FrameSource.h"

#include "DemoH264Interface.h"

#include "H264VideoStreamFramer.hh"

#include "H264VideoRTPSink.hh"

DemoH264MediaSubsession::DemoH264MediaSubsession(UsageEnvironment& env, int streamType, int videoType, int channelNO, bool reuseFirstSource, portNumBits initalNumPort)

:OnDemandServerMediaSubsession(env, reuseFirstSource), fStreamType(streamType), fVideoType(videoType), fChannelNO(channelNO)

{

}

DemoH264MediaSubsession::~DemoH264MediaSubsession()

{

}

DemoH264MediaSubsession* DemoH264MediaSubsession::createNew(UsageEnvironment& env, int streamType, int videoType, int channelNO,

bool reuseFirstSource, portNumBits initalNumPort)

{

DemoH264MediaSubsession* sms = new DemoH264MediaSubsession(env, streamType, videoType, channelNO, reuseFirstSource, initalNumPort);

return sms;

}

FramedSource* DemoH264MediaSubsession::createNewStreamSource(unsigned clientsessionId, unsigned& estBitrate)

{

DBG_LIVE555_PRINT("create new stream source !\n");

// Create a different source object depending on the type that was actually requested

if(fVideoType == 0x01)

{ // H264 video

estBitrate = 2000; // kbps

DemoH264FrameSource * source = DemoH264FrameSource::createNew(envir(), fStreamType, fChannelNO, 0);

if ( source == NULL )

{

DBG_LIVE555_PRINT("create source failed videoType:%d!\n", fVideoType );

return NULL;

}

return H264VideoStreamFramer::createNew(envir(), source);

}

else if ( fVideoType == 0x2)

{// Mpeg-4 video

}

else if( fVideoType == 0x04)

{ // G711 audio

estBitrate = 128; // kbps

DemoH264FrameSource * source = DemoH264FrameSource::createNew(envir(), fStreamType, fChannelNO, 1);

if ( source == NULL )

{

DBG_LIVE555_PRINT("create source failed videoType:%d!\n", fVideoType );

return NULL;

}

return source;

}

else

{ // unknown type

}

return NULL;

}

RTPSink* DemoH264MediaSubsession::createNewRTPSink(Groupsock* rtpGroupsock, unsigned char rtpPayloadTypeIfDynamic, FramedSource* inputSource)

{

// A different sink can be created here depending on the type

// Derive different subclasses as your own development needs require

DBG_LIVE555_PRINT("createNewRTPSink videoType:%d!\n", fVideoType );

if( fVideoType == 0x01)

{

return H264VideoRTPSink::createNew(envir(), rtpGroupsock, rtpPayloadTypeIfDynamic);

}

else if( fVideoType == 0x02)

{ // Mpeg-4

}

else if(fVideoType == 0x04)

{// G711 audio

}

else

{ // unknown type

}

// No sink is implemented yet for MPEG-4 or G.711, so every unhandled type ends up here

return NULL;

}

/* Fill in the SDP information according to your actual situation */

/*

char const* DemoH264MediaSubsession::sdpLines()

{

// create sdp info

return fSDPLines;

}

*/

#include "DemoH264Interface.h"

#include "DemoH264RTSPServer.h"

/* Open the live stream handle */

long openStreamHandle(int channelNO, int streamType)

{

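// Preparation for the live stream: obtain a handle for this stream type so that data can be fetched directly later

// For testing, a self-defined stream file is still read here, but only one frame is read per call

// The file layout is FrameHead_S + H264 + FrameHead_S + H264 ...

FILE* fp = fopen("stream264file.h264", "rb"); // read-only access is sufficient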

if (NULL == fp )

{

DBG_LIVE555_PRINT("open streamhandle failed!\n");

return -1;

}

return (long)fp;

}
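The demo passes the FILE pointer around as a long. That is only safe where long is at least as wide as a pointer: it is 64 bits on 64-bit Linux, but only 32 bits on 64-bit Windows. A minimal sketch of a pointer-sized handle, assuming a C++11 compiler, is shown below; the StreamHandle alias and the toHandle and fromHandle helpers are hypothetical names introduced for illustration.

```cpp
#include <cstdint>
#include <cstdio>

// Hypothetical alias: std::intptr_t is an integer type able to hold a pointer value.
typedef std::intptr_t StreamHandle;

// Converts a FILE pointer into an opaque integer handle.
inline StreamHandle toHandle(std::FILE* fp) {
    return reinterpret_cast<StreamHandle>(fp);
}

// Converts the opaque handle back into the original FILE pointer.
inline std::FILE* fromHandle(StreamHandle h) {
    return reinterpret_cast<std::FILE*>(h);
}
```

With such an alias, a failed open is better reported as 0 (the value of a null pointer) than as -1, so that a single validity check works on every platform.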

/* Fetch one frame of data in real time */

int getStreamData(long lHandle, char* buf, unsigned* bufsize, unsigned* leftbufsize, int sourcetype)

{

if(lHandle <= 0)

{

DBG_LIVE555_PRINT(" lHandle error !\n");

return -1;

}

FrameHead_S stFrameHead;

memset(&stFrameHead, 0, sizeof(FrameHead_S));

FILE* fp = (FILE*)lHandle;

int readlen = 0;

// 1. Read the header of one frame first

readlen = fread(&stFrameHead, 1, sizeof(FrameHead_S), fp);

if( readlen != sizeof(FrameHead_S))

{

DBG_LIVE555_PRINT(" read Frame Header Failed !\n");

return -1;

}

// 2. Read one frame of H264 data

if(stFrameHead.FrameLen > 2*1024*1024) // the source allocates only 2 MB for its data buffer

{

DBG_LIVE555_PRINT("data is too long:framlen=%d\n", stFrameHead.FrameLen);

// TODO: reallocate the buffer to handle larger frames

return 0;

}

readlen = fread(buf, 1, stFrameHead.FrameLen, fp);

if(readlen != stFrameHead.FrameLen)

{

DBG_LIVE555_PRINT("read Frame rawdata Failed!\n");

return -1;

}

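// Report the frame size to the caller: nothing has been consumed yet, so the whole frame is still pending

*bufsize = stFrameHead.FrameLen;

*leftbufsize = stFrameHead.FrameLen;

return stFrameHead.FrameLen;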

}
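The "header, then payload" file layout read by getStreamData can be sketched as a small standalone reader. The real FrameHead_S layout is not shown in this post, so the DemoFrameHead struct below, with a single length field, is an assumption made purely for illustration, as is the readFrame function. Unlike the demo, the sketch skips an oversized payload so that the next header stays aligned.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstdio>
#include <vector>

// Hypothetical header; the real FrameHead_S layout is not shown in the article.
struct DemoFrameHead {
    std::uint32_t frameLen;  // payload size in bytes
};

// Reads the next frame whose payload fits into maxLen bytes.
// Returns 1 on success, 0 on a clean end of file, -1 on a truncated or unreadable file.
int readFrame(std::FILE* fp, std::size_t maxLen, std::vector<char>& payload) {
    for (;;) {
        DemoFrameHead head;
        const std::size_t got = std::fread(&head, 1, sizeof(head), fp);
        if (got == 0 && std::feof(fp)) return 0;  // no more frames
        if (got != sizeof(head)) return -1;       // truncated header
        if (head.frameLen > maxLen) {
            // Skip the oversized payload so that the next header stays aligned.
            if (std::fseek(fp, static_cast<long>(head.frameLen), SEEK_CUR) != 0) return -1;
            continue;
        }
        payload.resize(head.frameLen);
        if (head.frameLen > 0 &&
            std::fread(payload.data(), 1, head.frameLen, fp) != head.frameLen) {
            return -1;  // truncated payload
        }
        return 1;
    }
}
```

Distinguishing a clean end of file from a truncated frame lets the caller decide whether to close the stream normally or to report an error.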

/* Close the stream handle */

void closeStreamHandle(long lHandle)

{

// Cleanup work when closing the stream

fclose((FILE*)lHandle);

}

DemoH264Interface* DemoH264Interface::m_Instance = NULL;

DemoH264Interface* DemoH264Interface::createNew()

{

if(NULL == m_Instance)

{

m_Instance = new DemoH264Interface();

}

return m_Instance;

}

DemoH264Interface::DemoH264Interface()

{

m_liveServerFlag = false;

}

DemoH264Interface::~DemoH264Interface()

{

}

void DemoH264Interface::InitLive555(void *param)

{

// Initialization

DBG_LIVE555_PRINT(" ~~~~Init live555 stream\n");

// Begin by setting up the live555 usage environment

m_scheduler = BasicTaskScheduler::createNew();

m_env = BasicUsageEnvironment::createNew(*m_scheduler);

m_authDB = NULL; // no authentication unless ACCESS_CONTROL is enabled

#if ACCESS_CONTROL // authentication

m_authDB = new UserAuthenticationDatabase;

m_authDB->addUserRecord("username", "password");

#endif

m_rtspServer = NULL;

m_rtspServerPortNum = 554; // can be changed; on Linux, binding a port below 1024 normally requires root privileges

m_liveServerFlag = true;

}

int DemoH264Interface::startLive555()

{

if( !m_liveServerFlag)

{

DBG_LIVE555_PRINT("Not Init the live server !\n");

return -1;

}

DBG_LIVE555_PRINT(" ~~~~Start live555 stream\n");

// Create the RTSP server

m_rtspServer = DemoH264RTSPServer::createNew(*m_env, m_rtspServerPortNum, m_authDB);

if( m_rtspServer == NULL)

{

// *m_env << " create RTSPServer Failed:" << m_env->getResultMsg() << "\n";

DBG_LIVE555_PRINT("create RTSPServer Failed:%s\n", m_env->getResultMsg());

return -1;

}

// loop and not come back~

m_env->taskScheduler().doEventLoop();

return 0;

}
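Because the event loop above does not return, the stop call that follows it in main is never reached as written. live555's doEventLoop accepts an optional watch-variable argument for this purpose, although its exact type depends on the library version. The idea can be sketched with a hypothetical miniature loop; MiniEventLoop below is an illustration only and does not reproduce the live555 scheduler.

```cpp
#include <cstddef>
#include <functional>
#include <utility>
#include <vector>

// Hypothetical miniature event loop that only imitates the "watch variable" idea.
class MiniEventLoop {
public:
    void addTask(std::function<void()> task) { tasks_.push_back(std::move(task)); }

    // Runs the tasks round-robin until *watch becomes non-zero (or no task exists).
    // Returns the number of task invocations that were performed.
    std::size_t run(const volatile char* watch) {
        std::size_t iterations = 0;
        while (*watch == 0 && !tasks_.empty()) {
            tasks_[iterations % tasks_.size()]();
            ++iterations;
        }
        return iterations;
    }

private:
    std::vector<std::function<void()>> tasks_;
};
```

Any code that runs inside the loop, such as a timer task or a signal-driven handler, can set the watch variable to a non-zero value, after which the loop returns and normal shutdown code can run.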

int DemoH264Interface::stopLive555()

{

DBG_LIVE555_PRINT(" ~~~~stop live555 stream\n");

if(m_liveServerFlag)

{

if(m_rtspServer)

m_rtspServer->stopDemoH264RTSPServer();

m_liveServerFlag = false;

}

return 0;

}

#include <stdio.h>

#include "DemoH264Interface.h"

int main(int argc, char* argv[])

{

// Init

// Add the RTSP server settings to configure, such as user name, password and port, passed in through param

void* param = NULL;

DemoH264Interface::createNew()->InitLive555(param);

// start

if( -1 == DemoH264Interface::createNew()->startLive555())

{

DBG_LIVE555_PRINT(" start live555 module failed!\n");

return 1; // report the failure to the caller

}

//stop

DemoH264Interface::createNew()->stopLive555();

return 0;

}

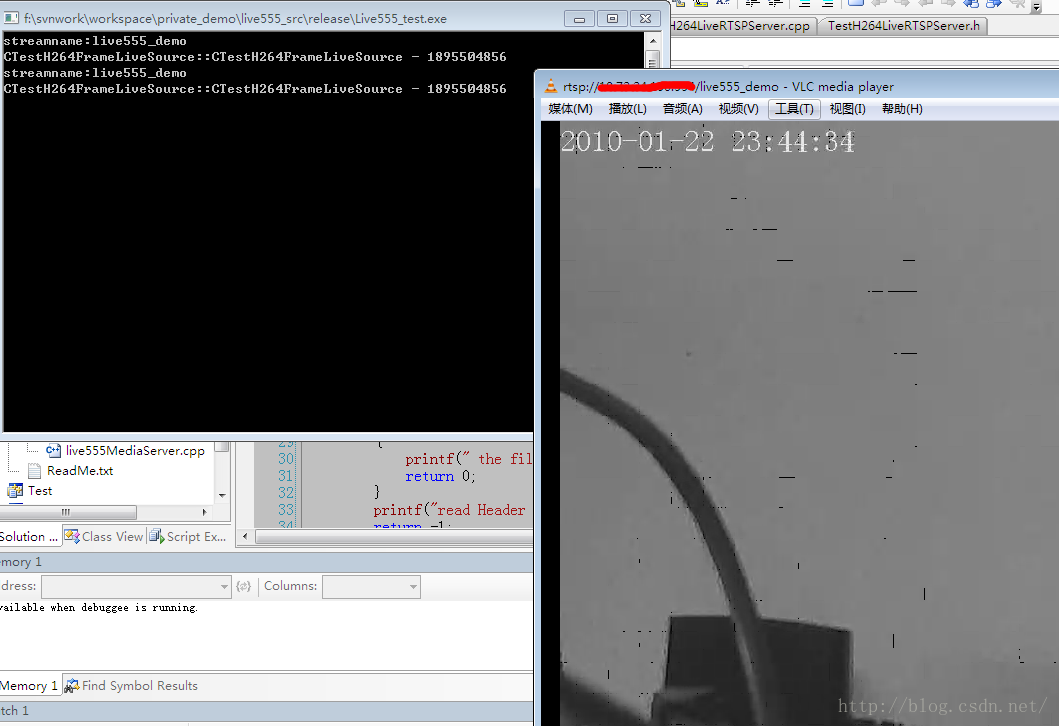

After completing these steps, my test on Windows previews the stream correctly. The result is shown below:

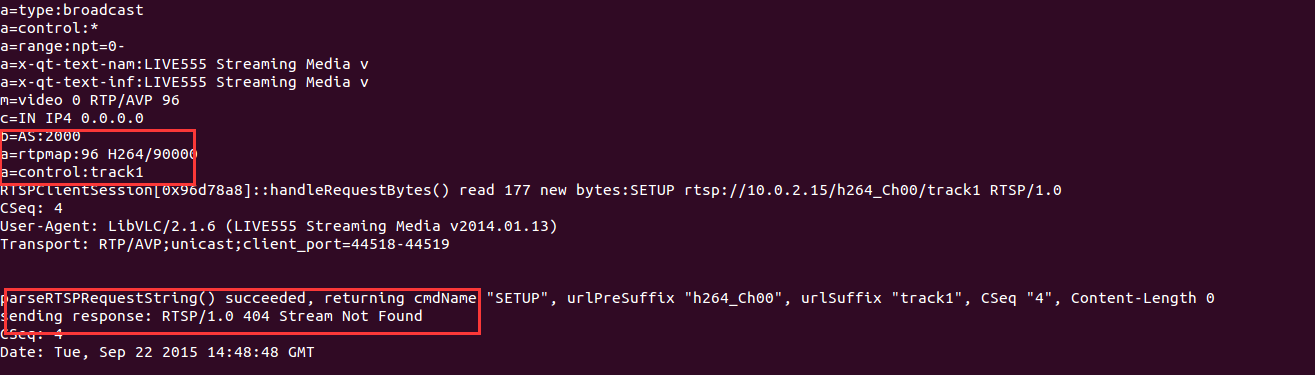

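On Linux, however, it does not seem to work. The error is as follows:

The stream could not be found. I spent several days troubleshooting without finding the cause. Answers from other users online suggest that the trackId is wrong, but there is only one ID here, so that should not cause this problem; the root cause is probably somewhere else.

Because I have not studied the live555 source code, I have not been able to locate the problem yet. If anyone has run into this and knows the cause, please leave a comment; I would be very grateful.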

The complete code shown above can be downloaded here: live555 complete code
