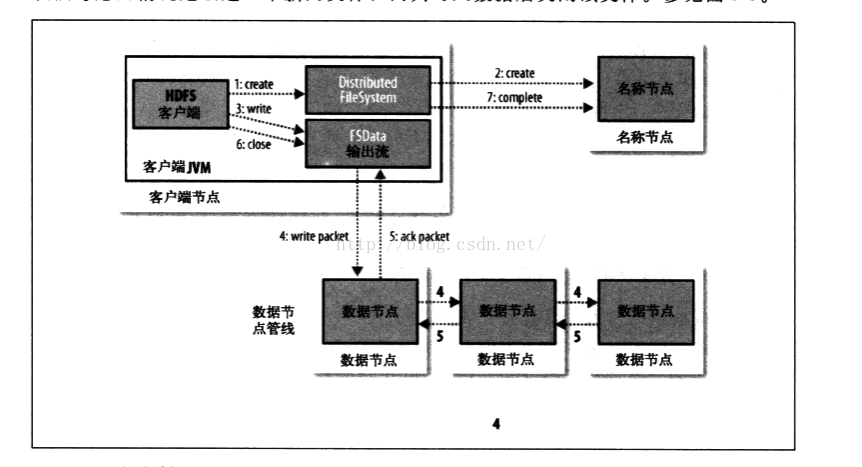

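1. Continuing the walkthrough of the HDFS write path in the source code: the client creates a file through the create method of DistributedFileSystem. The structure mirrors the read path covered in the previous post. The call runs the resolve method of FileSystemLinkResolver, which in turn invokes doCall.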

public FSDataOutputStream doCall(final Path p)

throws IOException, UnresolvedLinkException {

final DFSOutputStream dfsos = dfs.create(getPathName(p), permission,

cflags, replication, blockSize, progress, bufferSize,

checksumOpt);

return dfs.createWrappedOutputStream(dfsos, statistics);

}
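
The resolve/doCall relationship is easier to see in isolation. Below is a minimal sketch of the pattern, not the Hadoop implementation: `LinkResolverSketch`, `LinkEncounteredException`, and the toy symlink map are hypothetical names made up for illustration. As far as I understand it, the real FileSystemLinkResolver also has a `next` method for continuing on a different file system once a link is resolved, which this sketch leaves out.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical stand-in for an "unresolved symlink" error; not the Hadoop class. */
class LinkEncounteredException extends Exception {
    final String target;

    LinkEncounteredException(String target) {
        super("symlink -> " + target);
        this.target = target;
    }
}

/** Template: subclasses supply doCall; resolve() drives it and follows links. */
abstract class LinkResolverSketch<T> {
    private static final int MAX_LINK_DEPTH = 8; // illustrative limit only

    abstract T doCall(String path) throws LinkEncounteredException;

    T resolve(String path) {
        String current = path;
        for (int depth = 0; depth <= MAX_LINK_DEPTH; depth++) {
            try {
                return doCall(current); // common case: succeeds on the first try
            } catch (LinkEncounteredException e) {
                current = e.target;     // follow the link and try again
            }
        }
        throw new IllegalStateException("too many links resolving " + path);
    }
}

class LinkResolverDemo {
    /** "Creates" a file in a toy namespace where some paths are symlinks. */
    static String create(Map<String, String> links, String path) {
        return new LinkResolverSketch<String>() {
            @Override
            String doCall(String p) throws LinkEncounteredException {
                String target = links.get(p);
                if (target != null) {
                    throw new LinkEncounteredException(target);
                }
                return "created " + p;
            }
        }.resolve(path);
    }

    public static void main(String[] args) {
        Map<String, String> links = new HashMap<>();
        links.put("/data/latest", "/data/v2");
        System.out.println(create(links, "/data/latest")); // prints "created /data/v2"
    }
}
```

The point of the pattern is that the caller writes only the happy-path operation in doCall, while the retry-after-link logic lives in one place.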

/**

* Same as {@link #create(String, FsPermission, EnumSet, boolean, short, long,

* Progressable, int, ChecksumOpt)} with the addition of favoredNodes that is

* a hint to where the namenode should place the file blocks.

* The favored nodes hint is not persisted in HDFS. Hence it may be honored

* at the creation time only. HDFS could move the blocks during balancing or

* replication, to move the blocks from favored nodes. A value of null means

* no favored nodes for this create

*/

public DFSOutputStream create(String src,

FsPermission permission,

EnumSet<CreateFlag> flag,

boolean createParent,

short replication,

long blockSize,

Progressable progress,

int buffersize,

ChecksumOpt checksumOpt,

InetSocketAddress[] favoredNodes) throws IOException {

checkOpen();

if (permission == null) {

permission = FsPermission.getFileDefault();

}

FsPermission masked = permission.applyUMask(dfsClientConf.uMask);

if(LOG.isDebugEnabled()) {

LOG.debug(src + ": masked=" + masked);

}

String[] favoredNodeStrs = null;

if (favoredNodes != null) {

favoredNodeStrs = new String[favoredNodes.length];

for (int i = 0; i < favoredNodes.length; i++) {

favoredNodeStrs[i] =

favoredNodes[i].getHostName() + ":"

+ favoredNodes[i].getPort();

}

}

final DFSOutputStream result = DFSOutputStream.newStreamForCreate(this,

src, masked, flag, createParent, replication, blockSize, progress,

buffersize, dfsClientConf.createChecksum(checksumOpt),

favoredNodeStrs);

beginFileLease(result.getFileId(), result);

return result;

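}

DFSClient.create hands off to DFSOutputStream.newStreamForCreate, which sends the create RPC to the NameNode and retries a bounded number of times when it gets a RetryStartFileException.

static DFSOutputStream newStreamForCreate(DFSClient dfsClient, String src,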

FsPermission masked, EnumSet<CreateFlag> flag, boolean createParent,

short replication, long blockSize, Progressable progress, int buffersize,

DataChecksum checksum, String[] favoredNodes) throws IOException {

HdfsFileStatus stat = null;

// Retry the create if we get a RetryStartFileException up to a maximum

// number of times

boolean shouldRetry = true;

int retryCount = CREATE_RETRY_COUNT;

while (shouldRetry) {

shouldRetry = false;

try {

stat = dfsClient.namenode.create(src, masked, dfsClient.clientName,

new EnumSetWritable<CreateFlag>(flag), createParent, replication,

blockSize, SUPPORTED_CRYPTO_VERSIONS);

break;

} catch (RemoteException re) {

IOException e = re.unwrapRemoteException(

AccessControlException.class,

DSQuotaExceededException.class,

FileAlreadyExistsException.class,

FileNotFoundException.class,

ParentNotDirectoryException.class,

NSQuotaExceededException.class,

RetryStartFileException.class,

SafeModeException.class,

UnresolvedPathException.class,

SnapshotAccessControlException.class,

UnknownCryptoProtocolVersionException.class);

if (e instanceof RetryStartFileException) {

if (retryCount > 0) {

shouldRetry = true;

retryCount--;

} else {

throw new IOException("Too many retries because of encryption" +

" zone operations", e);

}

} else {

throw e;

}

}

}

Preconditions.checkNotNull(stat, "HdfsFileStatus should not be null!");

final DFSOutputStream out = new DFSOutputStream(dfsClient, src, stat,

flag, progress, checksum, favoredNodes);

out.start();

return out;

}

The out.start() call above starts the streamer thread. Its run method is the main send loop:

/*

* streamer thread is the only thread that opens streams to datanode,

* and closes them. Any error recovery is also done by this thread.

*/

@Override

<span style="font-size:12px;">public void run() {

long lastPacket = Time.now();

TraceScope traceScope = null;

if (traceSpan != null) {

traceScope = Trace.continueSpan(traceSpan);

}

while (!streamerClosed && dfsClient.clientRunning) {

// if the Responder encountered an error, shutdown Responder

if (hasError && response != null) {

try {

response.close();

response.join();

response = null;

} catch (InterruptedException e) {

DFSClient.LOG.warn("Caught exception ", e);

}

}

Packet one;

try {

// process datanode IO errors if any

boolean doSleep = false;

if (hasError && (errorIndex >= 0 || restartingNodeIndex >= 0)) {

doSleep = processDatanodeError();

}

synchronized (dataQueue) {

// wait for a packet to be sent.

long now = Time.now();

while ((!streamerClosed && !hasError && dfsClient.clientRunning

&& dataQueue.size() == 0 &&

(stage != BlockConstructionStage.DATA_STREAMING ||

stage == BlockConstructionStage.DATA_STREAMING &&

now - lastPacket < dfsClient.getConf().socketTimeout/2)) || doSleep ) {

long timeout = dfsClient.getConf().socketTimeout/2 - (now-lastPacket);

timeout = timeout <= 0 ? 1000 : timeout;

timeout = (stage == BlockConstructionStage.DATA_STREAMING)?

timeout : 1000;

try {

dataQueue.wait(timeout);

} catch (InterruptedException e) {

DFSClient.LOG.warn("Caught exception ", e);

}

doSleep = false;

now = Time.now();

}

if (streamerClosed || hasError || !dfsClient.clientRunning) {

continue;

}

// get packet to be sent.

if (dataQueue.isEmpty()) {

one = createHeartbeatPacket();

} else {

one = dataQueue.getFirst(); // regular data packet

}

}

assert one != null;

// get new block from namenode.

if (stage == BlockConstructionStage.PIPELINE_SETUP_CREATE) {

if(DFSClient.LOG.isDebugEnabled()) {

DFSClient.LOG.debug("Allocating new block");

}

setPipeline(nextBlockOutputStream());

initDataStreaming();

} else if (stage == BlockConstructionStage.PIPELINE_SETUP_APPEND) {

if(DFSClient.LOG.isDebugEnabled()) {

DFSClient.LOG.debug("Append to block " + block);

}

setupPipelineForAppendOrRecovery();

initDataStreaming();

}

long lastByteOffsetInBlock = one.getLastByteOffsetBlock();

if (lastByteOffsetInBlock > blockSize) {

throw new IOException("BlockSize " + blockSize +

" is smaller than data size. " +

" Offset of packet in block " +

lastByteOffsetInBlock +

" Aborting file " + src);

}

if (one.lastPacketInBlock) {

// wait for all data packets have been successfully acked

synchronized (dataQueue) {

while (!streamerClosed && !hasError &&

ackQueue.size() != 0 && dfsClient.clientRunning) {

try {

// wait for acks to arrive from datanodes

dataQueue.wait(1000);

} catch (InterruptedException e) {

DFSClient.LOG.warn("Caught exception ", e);

}

}

}

if (streamerClosed || hasError || !dfsClient.clientRunning) {

continue;

}

stage = BlockConstructionStage.PIPELINE_CLOSE;

}

// send the packet

synchronized (dataQueue) {

// move packet from dataQueue to ackQueue

if (!one.isHeartbeatPacket()) {

dataQueue.removeFirst();

ackQueue.addLast(one);

dataQueue.notifyAll();

}

}

if (DFSClient.LOG.isDebugEnabled()) {

DFSClient.LOG.debug("DataStreamer block " + block +

" sending packet " + one);

}

// write out data to remote datanode

try {

one.writeTo(blockStream);

blockStream.flush();

} catch (IOException e) {

// HDFS-3398 treat primary DN is down since client is unable to

// write to primary DN. If a failed or restarting node has already

// been recorded by the responder, the following call will have no

// effect. Pipeline recovery can handle only one node error at a

// time. If the primary node fails again during the recovery, it

// will be taken out then.

tryMarkPrimaryDatanodeFailed();

throw e;

}

lastPacket = Time.now();

// update bytesSent

long tmpBytesSent = one.getLastByteOffsetBlock();

if (bytesSent < tmpBytesSent) {

bytesSent = tmpBytesSent;

}

if (streamerClosed || hasError || !dfsClient.clientRunning) {

continue;

}

// Is this block full?

if (one.lastPacketInBlock) {

// wait for the close packet has been acked

synchronized (dataQueue) {

while (!streamerClosed && !hasError &&

ackQueue.size() != 0 && dfsClient.clientRunning) {

dataQueue.wait(1000);// wait for acks to arrive from datanodes

}

}

if (streamerClosed || hasError || !dfsClient.clientRunning) {

continue;

}

endBlock();

}

if (progress != null) { progress.progress(); }

// This is used by unit test to trigger race conditions.

if (artificialSlowdown != 0 && dfsClient.clientRunning) {

Thread.sleep(artificialSlowdown);

}

} catch (Throwable e) {

// Log warning if there was a real error.

if (restartingNodeIndex == -1) {

DFSClient.LOG.warn("DataStreamer Exception", e);

}

if (e instanceof IOException) {

setLastException((IOException)e);

} else {

setLastException(new IOException("DataStreamer Exception: ",e));

}

hasError = true;

if (errorIndex == -1 && restartingNodeIndex == -1) {

// Not a datanode issue

streamerClosed = true;

}

}

}

if (traceScope != null) {

traceScope.close();

}

closeInternal();

}</span>
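
The core of the loop above is the hand-off between dataQueue (packets waiting to be sent) and ackQueue (packets sent but not yet acknowledged). Here is a minimal, single-object sketch of that bookkeeping. `PacketQueuesSketch` and its method names are hypothetical, packets are reduced to sequence numbers, and the `requeueUnacked` step is my assumption about what error recovery does with in-flight packets, since processDatanodeError is not shown in the excerpt.

```java
import java.util.ArrayDeque;
import java.util.Deque;

/** Toy model of the dataQueue -> ackQueue hand-off; all names are illustrative. */
class PacketQueuesSketch {
    private final Deque<Long> dataQueue = new ArrayDeque<>(); // waiting to be sent
    private final Deque<Long> ackQueue = new ArrayDeque<>();  // sent, awaiting ack

    /** Writer side: queue a packet, identified here only by its sequence number. */
    synchronized void enqueue(long seqno) {
        dataQueue.addLast(seqno);
        notifyAll(); // wake a streamer that may be waiting for work
    }

    /**
     * Streamer side: move the head packet to ackQueue before writing it out.
     * Returns -1 when nothing is queued (the real loop sends a heartbeat instead).
     */
    synchronized long sendNext() {
        if (dataQueue.isEmpty()) {
            return -1;
        }
        long seqno = dataQueue.removeFirst();
        ackQueue.addLast(seqno);
        return seqno;
    }

    /** Responder side: acks are expected in order, matching the oldest in-flight packet. */
    synchronized void ack(long seqno) {
        Long head = ackQueue.peekFirst();
        if (head == null || head != seqno) {
            throw new IllegalStateException("unexpected ack " + seqno);
        }
        ackQueue.removeFirst();
        notifyAll(); // wake anyone waiting for the ack queue to drain
    }

    /** Assumed recovery step: put unacked packets back at the front, oldest first. */
    synchronized void requeueUnacked() {
        while (!ackQueue.isEmpty()) {
            dataQueue.addFirst(ackQueue.removeLast());
        }
    }

    synchronized int pending() { return dataQueue.size(); }

    synchronized int inFlight() { return ackQueue.size(); }
}
```

Keeping sent packets in a second queue rather than dropping them is what makes resending possible: nothing leaves the client's memory until the pipeline has confirmed it. It is also why the loop waits for ackQueue to drain before closing out a block.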
