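I originally wanted to try integrating Hive and HBase on a single node, but the Hive and HBase versions there were incompatible, and the integration failed with an error saying that the method org.apache.hadoop.hbase.HTableDescriptor.addFamily does not exist. After a long time without getting it to work, I moved the test to a five-node CDH cluster. Hive is not installed on that cluster yet, so this post records the Hive installation first.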

- 1. Uninstall the MySQL that ships with CentOS 6.5 (first list the installed packages)

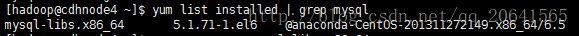

[hadoop@cdhnode4 ~]$ yum list installed | grep mysql

- 2. Remove the bundled MySQL

[hadoop@cdhnode4 ~]$ yum -y remove mysql-libs.x86_64

- 3. Install MySQL with yum

[root@cdhnode4 hadoop]# yum -y install mysql-server mysql mysql-devel

- 4. After the installation finishes, start MySQL and log in

[root@cdhnode4 hadoop]# service mysqld start

# Switch back to the hadoop user; the MySQL account is root and its password is empty by default

[hadoop@cdhnode4 ~]$ mysql -uroot -p

- 5. Create a MySQL user for Hive and grant it privileges

create user 'hive'@'%' identified by 'hive';

grant all on *.* to 'hive'@'%' identified by 'hive';

flush privileges;

- 6. On node cdhnode5, extract the installation archive and set the environment variables

[hadoop@cdhnode5 app]$ tar -xzvf hive-1.1.0-cdh5.4.5.tar.gz

# Add the environment variables

[hadoop@cdhnode5 hive-1.1.0-cdh5.4.5]$ vi /home/hadoop/.bash_profile

# Add the following settings

HIVE_HOME=/home/hadoop/app/hive-1.1.0-cdh5.4.5

PATH=$PATH:$HIVE_HOME/bin

export HIVE_HOME PATH

source /home/hadoop/.bash_profile

- 7. Create a hive-site.xml file with the following configuration

<configuration>

<!-- MySQL metastore connection -->

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://cdhnode4:3306/hive?createDatabaseIfNotExist=true</value>

<description>JDBC connect string for a JDBC metastore</description>

</property>

<!-- MySQL JDBC driver class -->

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

<description>Driver class name for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>hive</value>

<description>username to use against metastore database</description>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>hive</value>

<description>password to use against metastore database</description>

</property>

<!-- Hive Web Interface (HWI) -->

<property>

<name>hive.hwi.war.file</name>

<value>lib/hive-hwi-1.1.0-cdh5.4.5.jar</value>

<description>This sets the path to the HWI war file, relative to ${HIVE_HOME}. </description>

</property>

<property>

<name>hive.hwi.listen.host</name>

<value>0.0.0.0</value>

<description>This is the host address the Hive Web Interface will listen on</description>

</property>

<property>

<name>hive.hwi.listen.port</name>

<value>9999</value>

<description>This is the port the Hive Web Interface will listen on</description>

</property>

<!-- Scratch directories for Hive temporary files -->

<property>

<name>hive.exec.scratchdir</name>

<value>/home/hadoop/data/hive/hive-${user.name}</value>

<description>Scratch space for Hive jobs</description>

</property>

<property>

<name>hive.exec.local.scratchdir</name>

<value>/home/hadoop/data/hive/${user.name}</value>

<description>Local scratch space for Hive jobs</description>

</property>

</configuration>

- 8. Create the directory

[hadoop@cdhnode5 data]$ mkdir /home/hadoop/data/hive

- 9. Upload the MySQL JDBC jar to Hive's lib directory

[hadoop@cdhnode5 lib]$ ll | grep mysql

-rw-r--r-- 1 hadoop hadoop 827942 Apr 7 2016 mysql-connector-java-5.1.21.jar

- 10. Edit hive-env.sh

First copy hive-env.sh.template to hive-env.sh:

[hadoop@cdhnode5 conf]$ cp hive-env.sh.template hive-env.sh

Then change the following settings:

# Set HADOOP_HOME to point to a specific hadoop install directory

HADOOP_HOME=/home/hadoop/app/hadoop-2.6.0-cdh5.4.5

# Hive Configuration Directory can be controlled by:

export HIVE_CONF_DIR=/home/hadoop/app/hive-1.1.0-cdh5.4.5/conf

# Folder containing extra libraries required for hive compilation/execution can be controlled by:

export HIVE_AUX_JARS_PATH=/home/hadoop/app/hive-1.1.0-cdh5.4.5/lib
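Before moving on to the tests, the files produced in steps 7, 9 and 10 can be sanity-checked. The following is only a minimal sketch: `preflight` is a hypothetical helper name, and it assumes that hive-site.xml was placed in the conf directory and that the connector jar name starts with `mysql-connector-java-`, as in the listing from step 9.

```shell
# Hypothetical pre-flight check: returns 0 only if the files from
# steps 7, 9 and 10 exist under the given Hive home directory.
preflight() {
  hive_home=$1
  rc=0
  for f in conf/hive-site.xml conf/hive-env.sh; do
    if [ ! -f "$hive_home/$f" ]; then
      echo "missing: $hive_home/$f"
      rc=1
    fi
  done
  # The connector version may differ, so match the jar by prefix.
  if ! ls "$hive_home"/lib/mysql-connector-java-*.jar >/dev/null 2>&1; then
    echo "missing: MySQL JDBC jar in $hive_home/lib"
    rc=1
  fi
  return "$rc"
}

# Path taken from this post; adjust it for a different install location.
if preflight /home/hadoop/app/hive-1.1.0-cdh5.4.5; then
  echo "ready to start hive"
fi
```

The check only looks at file presence. It does not validate the XML or the MySQL connection, so a passing result is a necessary condition rather than a guarantee.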

- 11. Test Hive

11.1 Create a test table

hive> create table lijietest(

> name string,

> age int

> )

> row format delimited fields terminated by ','

> stored as textfile;

OK

Time taken: 1.047 seconds

11.2 Load data into the test table

hive> load data local inpath '/home/hadoop/test.txt' overwrite into table lijietest;

Loading data to table default.lijietest

Table default.lijietest stats: [numFiles=1, numRows=0, totalSize=29, rawDataSize=0]

OK

Time taken: 0.942 seconds

11.3 Run a test query

hive> select * from lijietest;

OK

lijie 24

zhangsan 42

lisi 88

Time taken: 1.155 seconds, Fetched: 3 row(s)
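The post does not show the contents of test.txt. Given the `','` field delimiter from 11.1, the three rows returned here, and the totalSize=29 reported by the load, the file probably looked like the one generated below. This is an inferred reconstruction, not the original file; `make_test_data` and the /tmp path are illustrative names.

```shell
# Writes three comma-separated rows (name,age), one per line.
# Contents are inferred from the query output, not copied from the original file.
make_test_data() {
  printf 'lijie,24\nzhangsan,42\nlisi,88\n' > "$1"
}

make_test_data /tmp/test.txt   # illustrative path; the post used /home/hadoop/test.txt
wc -c < /tmp/test.txt          # byte count, to compare with totalSize in the load output
```

The rows add up to 9 + 12 + 8 = 29 bytes including newlines, which matches the totalSize value in the load output.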

11.4 Run a left join query as a test

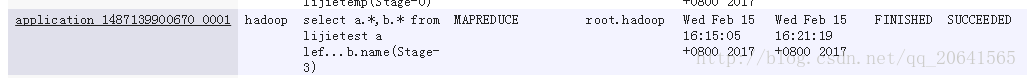

hive> select a.*,b.* from lijietest a left join lijietest b on a.name = b.name;

My machine has very little memory, so the job ran for a long time and even crashed the primary ResourceManager.

The result:

Total MapReduce CPU Time Spent: 5 seconds 780 msec

OK

lijie 24 lijie 24

zhangsan 42 zhangsan 42

lisi 88 lisi 88

Time taken: 584.787 seconds, Fetched: 3 row(s)
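For a table this small, most of the 584 seconds is likely job scheduling overhead on a memory-starved cluster rather than actual work. One thing that may help is Hive's automatic local mode (`hive.exec.mode.local.auto`), which lets small jobs run inside the client process instead of being submitted to YARN. The sketch below was not run on the cluster from this post, and `local_join_sql` is a hypothetical helper name.

```shell
# Prints HiveQL that enables automatic local mode for small inputs,
# followed by the same left join as in 11.4.
local_join_sql() {
  cat <<'EOF'
set hive.exec.mode.local.auto=true;
select a.*, b.* from lijietest a left join lijietest b on a.name = b.name;
EOF
}

# Run the statements only where the hive CLI is available; otherwise just print them.
if command -v hive >/dev/null 2>&1; then
  hive -e "$(local_join_sql)"
else
  local_join_sql
fi
```

Hive decides per query whether the input is small enough to qualify, so larger jobs would still go to the cluster.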
