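Preface: I recently spent some time on speech recognition, trying Baidu's SDK first and then iFlytek's. My take on the two: iFlytek is without doubt the more specialized, and its recognition accuracy is high. However, many developers, like me, want offline recognition, and iFlytek's offline recognition is paid. As for request quotas, both vendors let you apply for a higher quota, so for apps with many users they are nearly equivalent. Because it is free and supports offline use, I chose Baidu's offline speech recognition. The integration itself is fairly simple; most of the work is UI design. The tutorial follows: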

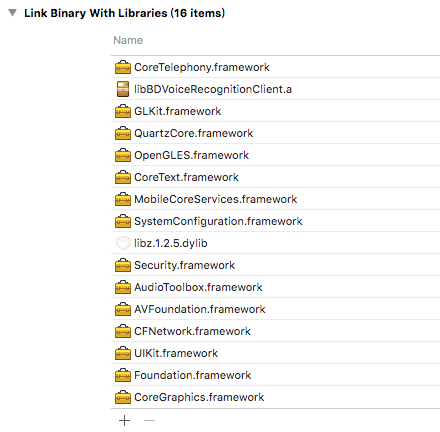

1. First: the required libraries

2. I use a custom UI, so the focus here is on the functionality (header files)

- // Header files

- #import "BDVRCustomRecognitonViewController.h"

- #import "BDVRClientUIManager.h"

- #import "WBVoiceRecordHUD.h"

- #import "BDVRViewController.h"

- #import "MyViewController.h"

- #import "BDVRSConfig.h"

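3. What you need to know: the APIs you will use are as follows:

- //-------------------Class methods------------------------

- // Create the speech recognition client object; it is a singleton

- + (BDVoiceRecognitionClient *)sharedInstance;

- // Release the speech recognition client object

- + (void)releaseInstance;

- //-------------------Recognition methods-----------------------

- // Check whether recording is possible

- - (BOOL)isCanRecorder;

- // Start speech recognition; implement the MVoiceRecognitionClientDelegate methods and pass in the object that will listen for events

- // For return values see TVoiceRecognitionStartWorkResult

- - (int)startVoiceRecognition:(id<MVoiceRecognitionClientDelegate>)aDelegate;

- // Done speaking; call this when the user actively finishes recording

- - (void)speakFinish;

- // End the current speech recognition session

- - (void)stopVoiceRecognition;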

- /**

- * @brief Get the sample rate of the current recognition

- *

- * @return Sample rate (16000/8000)

- */

- - (int)getCurrentSampleRate;

- /**

- * @brief Get the current recognition mode (deprecated)

- *

- * @return The current recognition mode

- */

- - (int)getCurrentVoiceRecognitionMode __attribute__((deprecated));

- /**

- * @brief Set the current recognition mode (deprecated); use -(void)setProperty:(TBDVoiceRecognitionProperty)property; instead

- *

- * @param aMode Recognition mode

- *

- * @return Whether the setting succeeded

- */

- - (void)setCurrentVoiceRecognitionMode:(int)aMode __attribute__((deprecated));

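- // Set the recognition type

- - (void)setProperty:(TBDVoiceRecognitionProperty)property __attribute__((deprecated));

- // Get the current recognition type

- - (int)getRecognitionProperty __attribute__((deprecated));

- // Set the list of recognition types; except for EVoiceRecognitionPropertyInput and EVoiceRecognitionPropertySong,

- // recognition types can be combined

- - (void)setPropertyList: (NSArray*)prop_list;

- // cityID only applies to the EVoiceRecognitionPropertyMap recognition type

- - (void)setCityID: (NSInteger)cityID;

- // Get the current list of recognition types

- - (NSArray*)getRecognitionPropertyList;

- //-------------------Prompt tones-----------------------

- // Play prompt tones; by default both the recording-start and recording-end tones are played

- // BDVoiceRecognitionClientResources/Tone

- // record_start.caf  sound file for recording start

- // record_end.caf  sound file for recording end

- // The sound resources must be added to the project. You may replace the resource files, but the file names must not change. Prompt tones should be short, about 0.5 seconds.

- // For aTone values see TVoiceRecognitionPlayTones; returns NO if the file is not found

- - (BOOL)setPlayTone:(int)aTone isPlay:(BOOL)aIsPlay;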
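To show how the calls listed above might fit together, here is a minimal sketch of starting a recognition session. It only uses methods and constants that appear in this post. The header name `BDVoiceRecognitionClient.h`, the class `MyRecognitionController`, the helper `startRecognitionIfPossible`, and wrapping the property constant in an `NSNumber` are my assumptions, so check them against the SDK headers you actually have. Credential and offline-engine setup are not covered in this post and are left out.

```objective-c
#import <Foundation/Foundation.h>
#import "BDVoiceRecognitionClient.h" // assumed header name for the SDK client

// Hypothetical controller that adopts the SDK delegate protocol.
@interface MyRecognitionController : NSObject <MVoiceRecognitionClientDelegate>
- (BOOL)startRecognitionIfPossible;
@end

@implementation MyRecognitionController

- (BOOL)startRecognitionIfPossible
{
    BDVoiceRecognitionClient *client = [BDVoiceRecognitionClient sharedInstance];

    // Bail out early if the microphone cannot be used right now.
    if (![client isCanRecorder]) {
        return NO;
    }

    // Assumption: the list holds NSNumber-wrapped property constants.
    [client setPropertyList:@[@(EVoiceRecognitionPropertyInput)]];

    // The return value is a TVoiceRecognitionStartWorkResult code.
    int result = [client startVoiceRecognition:self];
    return result == EVoiceRecognitionStartWorking;
}

// Delegate callbacks, reduced to empty stubs for this sketch.
- (void)VoiceRecognitionClientWorkStatus:(int)aStatus obj:(id)aObj {}
- (void)VoiceRecognitionClientNetWorkStatus:(int)aStatus {}

@end
```

Returning a `BOOL` keeps the caller simple: it can show its recording UI only when the session really started.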

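4. Recording button animation (my own custom implementation, feel free to borrow from it)

Follow for daily updates: http://weibo.com/hanjunqiang (Sina Weibo)

- // Recording button

- @property (nonatomic, weak, readonly) UIButton *holdDownButton; // hold-to-talk button

- /**

- * Whether recording has been cancelled

- */

- @property (nonatomic, assign, readwrite) BOOL isCancelled;

- /**

- * Whether recording is in progress

- */

- @property (nonatomic, assign, readwrite) BOOL isRecording;

- /**

- * Fired when the recording button is pressed; recording starts here

- */

- - (void)holdDownButtonTouchDown;

- /**

- * Fired when the finger leaves the screen outside the recording button; recording is cancelled here

- */

- - (void)holdDownButtonTouchUpOutside;

- /**

- * Fired when the finger leaves the screen inside the recording button; recording is finished here

- */

- - (void)holdDownButtonTouchUpInside;

- /**

- * Fired when the finger slides outside the recording button

- */

- - (void)holdDownDragOutside;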

5. Initialize the system UI

- #pragma mark - layout subViews UI

- /**

- * Create a button object from its normal and highlighted images

- *

- * @param image Image for the normal state

- * @param hlImage Image for the highlighted state

- *

- * @return The button object

- */

- - (UIButton *)createButtonWithImage:(UIImage *)image HLImage:(UIImage *)hlImage ;

- - (void)holdDownDragInside;

- - (void)createInitView; // create the initial view; used while the prompt tone plays

- - (void)createRecordView; // create the recording view

- - (void)createRecognitionView; // create the recognition view

- - (void)createErrorViewWithErrorType:(int)aStatus; // show detailed error information in the recognition view

- - (void)createRunLogWithStatus:(int)aStatus; // show detailed status information in the status view

- - (void)finishRecord:(id)sender; // the user tapped Done

- - (void)cancel:(id)sender; // the user tapped Cancel

- - (void)startVoiceLevelMeterTimer;

- - (void)freeVoiceLevelMeterTimerTimer;
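The declarations above do not show how the touch handlers get called. One plausible way, sketched here with standard UIKit target-action wiring, is to attach each handler to the matching control event. The class name `HoldToTalkViewController`, the button frame, and the `strong` property are assumptions for this sketch (the post declares the property as `weak, readonly`), and the handlers are empty stubs.

```objective-c
#import <UIKit/UIKit.h>

// Hypothetical view controller; only the wiring matters here.
@interface HoldToTalkViewController : UIViewController
@property (nonatomic, strong) UIButton *holdDownButton;
@end

@implementation HoldToTalkViewController

- (void)viewDidLoad
{
    [super viewDidLoad];

    UIButton *button = [UIButton buttonWithType:UIButtonTypeCustom];
    button.frame = CGRectMake(0, 0, 72, 72);
    button.center = CGPointMake(CGRectGetMidX(self.view.bounds),
                                CGRectGetMaxY(self.view.bounds) - 84);

    // Map each touch phase to the handler with the matching name.
    [button addTarget:self action:@selector(holdDownButtonTouchDown)
     forControlEvents:UIControlEventTouchDown];
    [button addTarget:self action:@selector(holdDownButtonTouchUpInside)
     forControlEvents:UIControlEventTouchUpInside];
    [button addTarget:self action:@selector(holdDownButtonTouchUpOutside)
     forControlEvents:UIControlEventTouchUpOutside];
    [button addTarget:self action:@selector(holdDownDragOutside)
     forControlEvents:UIControlEventTouchDragOutside];
    [button addTarget:self action:@selector(holdDownDragInside)
     forControlEvents:UIControlEventTouchDragInside];

    [self.view addSubview:button];
    self.holdDownButton = button;
}

// Stubs; the real bodies start, finish, or cancel recognition.
- (void)holdDownButtonTouchDown {}
- (void)holdDownButtonTouchUpInside {}
- (void)holdDownButtonTouchUpOutside {}
- (void)holdDownDragOutside {}
- (void)holdDownDragInside {}

@end
```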

6. The most important part

- // Finish recording

- [[BDVoiceRecognitionClient sharedInstance] speakFinish];

- // Cancel recording

- [[BDVoiceRecognitionClient sharedInstance] stopVoiceRecognition];

7. The two delegate methods

- - (void)VoiceRecognitionClientWorkStatus:(int)aStatus obj:(id)aObj

- {

- switch (aStatus)

- {

- case EVoiceRecognitionClientWorkStatusFlushData: // intermediate results, shown on screen continuously

- {

- NSString *text = [aObj objectAtIndex:0];

- if ([text length] > 0)

- {

- // [clientSampleViewController logOutToContinusManualResut:text];

- UILabel *clientWorkStatusFlushLabel = [[UILabel alloc]initWithFrame:CGRectMake(kScreenWidth/2 - 100,64,200,60)];

- clientWorkStatusFlushLabel.text = text;

- clientWorkStatusFlushLabel.textAlignment = NSTextAlignmentCenter;

- clientWorkStatusFlushLabel.font = [UIFont systemFontOfSize:18.0f];

- clientWorkStatusFlushLabel.numberOfLines = 0;

- clientWorkStatusFlushLabel.backgroundColor = [UIColor whiteColor];

- [self.view addSubview:clientWorkStatusFlushLabel];

- }

- break;

- }

- case EVoiceRecognitionClientWorkStatusFinish: // recognition completed normally and returned a result

- {

- [self createRunLogWithStatus:aStatus];

- if ([[BDVoiceRecognitionClient sharedInstance] getRecognitionProperty] != EVoiceRecognitionPropertyInput)

- {

- // In search mode the result is an array, for example

- // ["公园", "公元"]

- NSMutableArray *audioResultData = (NSMutableArray *)aObj;

- NSMutableString *tmpString = [[NSMutableString alloc] initWithString:@""];

- for (int i=0; i < [audioResultData count]; i++)

- {

- [tmpString appendFormat:@"%@\r\n",[audioResultData objectAtIndex:i]];

- }

- clientSampleViewController.resultView.text = nil;

- [clientSampleViewController logOutToManualResut:tmpString];

- }

- else

- {

- NSString *tmpString = [[BDVRSConfig sharedInstance] composeInputModeResult:aObj];

- [clientSampleViewController logOutToContinusManualResut:tmpString];

- }

- if (self.view.superview)

- {

- [self.view removeFromSuperview];

- }

- break;

- }

- case EVoiceRecognitionClientWorkStatusReceiveData:

- {

- // This status is only used in input mode

- // In input mode the result carries confidence scores, for example:

- // [

- // [

- // {

- // "百度" = "0.6055192947387695";

- // },

- // {

- // "摆渡" = "0.3625582158565521";

- // },

- // ]

- // [

- // {

- // "一下" = "0.7665404081344604";

- // }

- // ],

- // ]

- // Temporarily disabled; otherwise it interferes with the navigation result

- // NSString *tmpString = [[BDVRSConfig sharedInstance] composeInputModeResult:aObj];

- // [clientSampleViewController logOutToContinusManualResut:tmpString];

- break;

- }

- case EVoiceRecognitionClientWorkStatusEnd: // the user has finished speaking; waiting for the server to return the result

- {

- [self createRunLogWithStatus:aStatus];

- if ([BDVRSConfig sharedInstance].voiceLevelMeter)

- {

- [self freeVoiceLevelMeterTimerTimer];

- }

- [self createRecognitionView];

- break;

- }

- case EVoiceRecognitionClientWorkStatusCancel:

- {

- if ([BDVRSConfig sharedInstance].voiceLevelMeter)

- {

- [self freeVoiceLevelMeterTimerTimer];

- }

- [self createRunLogWithStatus:aStatus];

- if (self.view.superview)

- {

- [self.view removeFromSuperview];

- }

- break;

- }

- case EVoiceRecognitionClientWorkStatusStartWorkIng: // the recognition library has started working; the user can speak

- {

- if ([BDVRSConfig sharedInstance].playStartMusicSwitch) // if a prompt tone was played, tell the user now that they can speak

- {

- [self createRecordView];

- }

- if ([BDVRSConfig sharedInstance].voiceLevelMeter) // enable voice level monitoring

- {

- [self startVoiceLevelMeterTimer];

- }

- [self createRunLogWithStatus:aStatus];

- break;

- }

- case EVoiceRecognitionClientWorkStatusNone:

- case EVoiceRecognitionClientWorkPlayStartTone:

- case EVoiceRecognitionClientWorkPlayStartToneFinish:

- case EVoiceRecognitionClientWorkStatusStart:

- case EVoiceRecognitionClientWorkPlayEndToneFinish:

- case EVoiceRecognitionClientWorkPlayEndTone:

- {

- [self createRunLogWithStatus:aStatus];

- break;

- }

- case EVoiceRecognitionClientWorkStatusNewRecordData:

- {

- break;

- }

- default:

- {

- [self createRunLogWithStatus:aStatus];

- if ([BDVRSConfig sharedInstance].voiceLevelMeter)

- {

- [self freeVoiceLevelMeterTimerTimer];

- }

- if (self.view.superview)

- {

- [self.view removeFromSuperview];

- }

- break;

- }

- }

- }

- - (void)VoiceRecognitionClientNetWorkStatus:(int) aStatus

- {

- switch (aStatus)

- {

- case EVoiceRecognitionClientNetWorkStatusStart:

- {

- [self createRunLogWithStatus:aStatus];

- [[UIApplication sharedApplication] setNetworkActivityIndicatorVisible:YES];

- break;

- }

- case EVoiceRecognitionClientNetWorkStatusEnd:

- {

- [self createRunLogWithStatus:aStatus];

- [[UIApplication sharedApplication] setNetworkActivityIndicatorVisible:NO];

- break;

- }

- }

- }
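The input-mode result described in the comments above is a list of segments, each holding candidate words mapped to confidence values. The post relies on `composeInputModeResult:` without showing it, so the following is only a sketch of one way to flatten that structure: keep the highest-confidence candidate of each segment and join them. `BestTextFromInputModeResult` is a hypothetical helper, not the SDK's or the sample project's implementation, and the sample data simply mirrors the comment.

```objective-c
#import <Foundation/Foundation.h>

// Hypothetical helper: for each segment keep the candidate with the highest
// confidence, then concatenate the winners in order.
static NSString *BestTextFromInputModeResult(NSArray *segments)
{
    NSMutableString *text = [NSMutableString string];
    for (id segment in segments) {
        if (![segment isKindOfClass:[NSArray class]]) continue;

        NSString *bestWord = nil;
        double bestScore = -1.0;
        for (id candidate in (NSArray *)segment) {
            if (![candidate isKindOfClass:[NSDictionary class]]) continue;
            NSDictionary *dict = candidate;
            // Each dictionary maps a word to its confidence (string or number).
            for (NSString *word in dict) {
                double score = [dict[word] doubleValue];
                if (score > bestScore) {
                    bestScore = score;
                    bestWord = word;
                }
            }
        }
        if (bestWord) [text appendString:bestWord];
    }
    return text;
}

int main(void)
{
    @autoreleasepool {
        NSArray *sample = @[
            @[@{@"百度": @"0.6055192947387695"}, @{@"摆渡": @"0.3625582158565521"}],
            @[@{@"一下": @"0.7665404081344604"}]
        ];
        // Logs 百度一下 for the sample above.
        NSLog(@"%@", BestTextFromInputModeResult(sample));
    }
    return 0;
}
```

The type checks are there because `aObj` arrives as `id`; a result with an unexpected shape is skipped instead of crashing.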

8. Recording button handlers

- #pragma mark ------ Button actions -------

- - (void)holdDownButtonTouchDown {

- // Start the animation

- _disPlayLink = [CADisplayLink displayLinkWithTarget:self selector:@selector(delayAnimation)];

- _disPlayLink.frameInterval = 40;

- [_disPlayLink addToRunLoop:[NSRunLoop currentRunLoop] forMode:NSDefaultRunLoopMode];

- self.isCancelled = NO;

- self.isRecording = NO;

- // Start speech recognition; the VoiceRecognitionClientWorkStatus:obj: method of the MVoiceRecognitionClientDelegate protocol must already be implemented

- int startStatus = -1;

- startStatus = [[BDVoiceRecognitionClient sharedInstance] startVoiceRecognition:self];

- if (startStatus != EVoiceRecognitionStartWorking) // report an error if starting failed

- {

- NSString *statusString = [NSString stringWithFormat:@"%d",startStatus];

- [self performSelector:@selector(firstStartError:) withObject:statusString afterDelay:0.3]; // delay 0.3 s so the view can be removed cleanly on error

- return;

- }

- // "Hold to talk, release to search" hint

- [voiceImageStr removeFromSuperview];

- voiceImageStr = [[UIImageView alloc]initWithFrame:CGRectMake(kScreenWidth/2 - 40, kScreenHeight - 153, 80, 33)];

- voiceImageStr.backgroundColor = [UIColor colorWithPatternImage:[UIImage imageNamed:@"searchVoice"]];

- [self.view addSubview:voiceImageStr];

- }

- - (void)holdDownButtonTouchUpOutside {

- // Stop the animation

- [self.view.layer removeAllAnimations];

- [_disPlayLink invalidate];

- _disPlayLink = nil;

- // Cancel recording

- [[BDVoiceRecognitionClient sharedInstance] stopVoiceRecognition];

- if (self.view.superview)

- {

- [self.view removeFromSuperview];

- }

- }

- - (void)holdDownButtonTouchUpInside {

- // Stop the animation

- [self.view.layer removeAllAnimations];

- [_disPlayLink invalidate];

- _disPlayLink = nil;

- [[BDVoiceRecognitionClient sharedInstance] speakFinish];

- }

- - (void)holdDownDragOutside {

- // Only handle the drag-outside action if recording has already started; otherwise just set isCancelled so that recording does not start.

- if (self.isRecording) {

- // if ([self.delegate respondsToSelector:@selector(didDragOutsideAction)]) {

- // [self.delegate didDragOutsideAction];

- // }

- } else {

- self.isCancelled = YES;

- }

- }

- #pragma mark - layout subViews UI

- - (UIButton *)createButtonWithImage:(UIImage *)image HLImage:(UIImage *)hlImage {

- UIButton *button = [[UIButton alloc] initWithFrame:CGRectMake(kScreenWidth/2 -36, kScreenHeight - 120, 72, 72)];

- if (image)

- [button setBackgroundImage:image forState:UIControlStateNormal];

- if (hlImage)

- [button setBackgroundImage:hlImage forState:UIControlStateHighlighted];

- return button;

- }

- #pragma mark ----------- Animation -----------

- - (void)startAnimation

- {

- CALayer *layer = [[CALayer alloc] init];

- layer.cornerRadius = [UIScreen mainScreen].bounds.size.width/2;

- layer.frame = CGRectMake(0, 0, layer.cornerRadius * 2, layer.cornerRadius * 2);

- layer.position = CGPointMake([UIScreen mainScreen].bounds.size.width/2,[UIScreen mainScreen].bounds.size.height - 84);

- // self.view.layer.position;

- UIColor *color = [UIColor colorWithRed:arc4random()%10*0.1 green:arc4random()%10*0.1 blue:arc4random()%10*0.1 alpha:1];

- layer.backgroundColor = color.CGColor;

- [self.view.layer addSublayer:layer];

- CAMediaTimingFunction *defaultCurve = [CAMediaTimingFunction functionWithName:kCAMediaTimingFunctionDefault];

- _animaTionGroup = [CAAnimationGroup animation];

- _animaTionGroup.delegate = self;

- _animaTionGroup.duration = 2;

- _animaTionGroup.removedOnCompletion = YES;

- _animaTionGroup.timingFunction = defaultCurve;

- CABasicAnimation *scaleAnimation = [CABasicAnimation animationWithKeyPath:@"transform.scale.xy"];

- scaleAnimation.fromValue = @0.0;

- scaleAnimation.toValue = @1.0;

- scaleAnimation.duration = 2;

- CAKeyframeAnimation *opencityAnimation = [CAKeyframeAnimation animationWithKeyPath:@"opacity"];

- opencityAnimation.duration = 2;

- opencityAnimation.values = @[@0.8,@0.4,@0];

- opencityAnimation.keyTimes = @[@0,@0.5,@1];

- opencityAnimation.removedOnCompletion = YES;

- NSArray *animations = @[scaleAnimation,opencityAnimation];

- _animaTionGroup.animations = animations;

- [layer addAnimation:_animaTionGroup forKey:nil];

- [self performSelector:@selector(removeLayer:) withObject:layer afterDelay:1.5];

- }

- - (void)removeLayer:(CALayer *)layer

- {

- [layer removeFromSuperlayer];

- }

- - (void)delayAnimation

- {

- [self startAnimation];

- }

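With the steps above completed, you are done!

Tips:

1. Because this is speech recognition, it needs microphone permission. Building for the simulator produces 12 errors; running on a real device resolves this.

2. The authorization file is not complicated: the project's Bundle Identifier just has to match the one registered for Baidu's offline authorization, as shown below: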

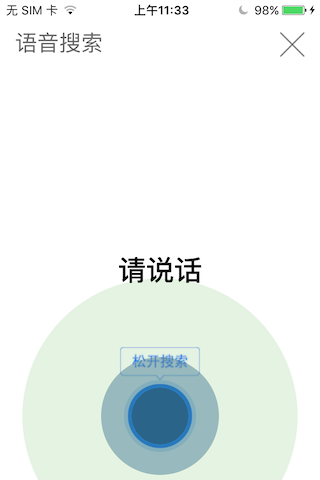

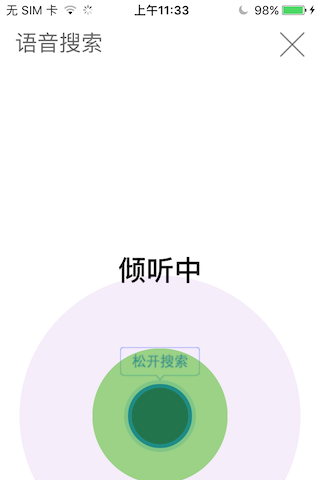

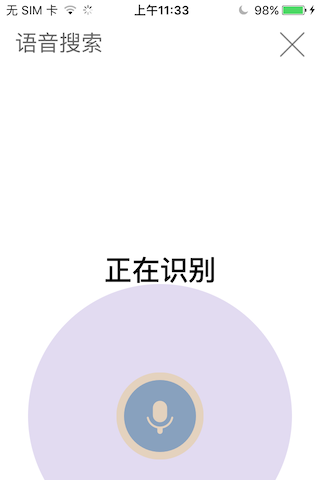
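On the microphone permission mentioned in tip 1: the post does not show how access is requested, so here is a small sketch using the system `AVAudioSession` API. `RequestMicrophoneAccess` is a hypothetical helper name. On recent iOS versions the app also needs an `NSMicrophoneUsageDescription` entry in Info.plist, and Apple has since introduced newer permission APIs, so treat this as one option and not the required approach.

```objective-c
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: ask for microphone access before starting recognition.
static void RequestMicrophoneAccess(void (^completion)(BOOL granted))
{
    [[AVAudioSession sharedInstance] requestRecordPermission:^(BOOL granted) {
        // The callback may arrive on an arbitrary queue; hop to the main
        // queue so the caller can safely update the UI.
        dispatch_async(dispatch_get_main_queue(), ^{
            if (completion) completion(granted);
        });
    }];
}
```

A caller could run this before `startVoiceRecognition:` and show a message when `granted` is `NO`, so the user never sees a recording UI that cannot record.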

The final result looks like this:
