一、前言

池化层的输入来自上一个卷积层的输出,主要作用是提供了平移不变性,并且减少了参数的数量,防止过拟合现象的发生。比如在最大池化中,选择区域内最大的值为采样点,这样在发生平移的时候,采样点不变。

池化层一般没有参数,所以反向传播的时候,只需对输入参数求导,不需要进行权值更新。

平均值效果不佳,一般选择最大池化。

二、源码分析

1、LayerSetUp函数

跟卷积层类似,主要是导入池化层的各参数

template <typename Dtype>

void PoolingLayer<Dtype>::LayerSetUp(const vector<Blob<Dtype>*>& bottom,

const vector<Blob<Dtype>*>& top) {

PoolingParameter pool_param = this->layer_param_.pooling_param();//池化参数

if (pool_param.global_pooling()) {全局池化不需要参数

CHECK(!(pool_param.has_kernel_size() ||

pool_param.has_kernel_h() || pool_param.has_kernel_w()))

<< "With Global_pooling: true Filter size cannot specified";

} else {

CHECK(!pool_param.has_kernel_size() !=

!(pool_param.has_kernel_h() && pool_param.has_kernel_w()))//kernel_size和kernel_h、kernel_w 二选一

<< "Filter size is kernel_size OR kernel_h and kernel_w; not both";

CHECK(pool_param.has_kernel_size() ||

(pool_param.has_kernel_h() && pool_param.has_kernel_w()))

<< "For non-square filters both kernel_h and kernel_w are required.";

}

CHECK((!pool_param.has_pad() && pool_param.has_pad_h()

&& pool_param.has_pad_w())

|| (!pool_param.has_pad_h() && !pool_param.has_pad_w()))

<< "pad is pad OR pad_h and pad_w are required.";

CHECK((!pool_param.has_stride() && pool_param.has_stride_h()

&& pool_param.has_stride_w())

|| (!pool_param.has_stride_h() && !pool_param.has_stride_w()))

<< "Stride is stride OR stride_h and stride_w are required.";

global_pooling_ = pool_param.global_pooling();

if (global_pooling_) {

kernel_h_ = bottom[0]->height();

kernel_w_ = bottom[0]->width();

} else {

if (pool_param.has_kernel_size()) {

kernel_h_ = kernel_w_ = pool_param.kernel_size();

} else {

kernel_h_ = pool_param.kernel_h();

kernel_w_ = pool_param.kernel_w();

}

}

CHECK_GT(kernel_h_, 0) << "Filter dimensions cannot be zero.";

CHECK_GT(kernel_w_, 0) << "Filter dimensions cannot be zero.";

if (!pool_param.has_pad_h()) {

pad_h_ = pad_w_ = pool_param.pad();

} else {

pad_h_ = pool_param.pad_h();

pad_w_ = pool_param.pad_w();

}

if (!pool_param.has_stride_h()) {

stride_h_ = stride_w_ = pool_param.stride();

} else {

stride_h_ = pool_param.stride_h();

stride_w_ = pool_param.stride_w();

}

if (global_pooling_) {

CHECK(pad_h_ == 0 && pad_w_ == 0 && stride_h_ == 1 && stride_w_ == 1)

<< "With Global_pooling: true; only pad = 0 and stride = 1";

}

if (pad_h_ != 0 || pad_w_ != 0) {

CHECK(this->layer_param_.pooling_param().pool()

== PoolingParameter_PoolMethod_AVE

|| this->layer_param_.pooling_param().pool()

== PoolingParameter_PoolMethod_MAX)

<< "Padding implemented only for average and max pooling.";

CHECK_LT(pad_h_, kernel_h_);

CHECK_LT(pad_w_, kernel_w_);

}

}

2、reshape

template <typename Dtype>

void PoolingLayer<Dtype>::Reshape(const vector<Blob<Dtype>*>& bottom,

const vector<Blob<Dtype>*>& top) {

CHECK_EQ(4, bottom[0]->num_axes()) << "Input must have 4 axes, "

<< "corresponding to (num, channels, height, width)";

channels_ = bottom[0]->channels();

height_ = bottom[0]->height();

width_ = bottom[0]->width();

if (global_pooling_) {//全局池化,核的大小和输入图像相同

kernel_h_ = bottom[0]->height();

kernel_w_ = bottom[0]->width();

}//否则按公式计算:( height_ + 2 * pad_h_ - kernel_h_) / stride_h_)) + 1

pooled_height_ = static_cast<int>(ceil(static_cast<float>(

height_ + 2 * pad_h_ - kernel_h_) / stride_h_)) + 1;

pooled_width_ = static_cast<int>(ceil(static_cast<float>(

width_ + 2 * pad_w_ - kernel_w_) / stride_w_)) + 1;

if (pad_h_ || pad_w_) {

//确保在有填充的情况下,采样从图像内开始

if ((pooled_height_ - 1) * stride_h_ >= height_ + pad_h_) {

--pooled_height_;

}

if ((pooled_width_ - 1) * stride_w_ >= width_ + pad_w_) {

--pooled_width_;

}

CHECK_LT((pooled_height_ - 1) * stride_h_, height_ + pad_h_);

CHECK_LT((pooled_width_ - 1) * stride_w_, width_ + pad_w_);

}

top[0]->Reshape(bottom[0]->num(), channels_, pooled_height_,

pooled_width_);//输出形状:(num,channels_,pooled_height_,pooled_width_)

if (top.size() > 1) {

top[1]->ReshapeLike(*top[0]);

}

// If max pooling, we will initialize the vector index part.

if (this->layer_param_.pooling_param().pool() ==

PoolingParameter_PoolMethod_MAX && top.size() == 1) {

max_idx_.Reshape(bottom[0]->num(), channels_, pooled_height_,

pooled_width_);//最大池化,记录取到的最大值的索引的形状

}

// If stochastic pooling, we will initialize the random index part.

if (this->layer_param_.pooling_param().pool() ==

PoolingParameter_PoolMethod_STOCHASTIC) {

rand_idx_.Reshape(bottom[0]->num(), channels_, pooled_height_,

pooled_width_);//随机池化,同样还有记录随机采样的索引的形状

}

}

3、前向计算

前向计算过程中,我们对卷积层输出map的每个不重叠(有时也可以使用重叠的区域进行池化)的n*n区域进行降采样,选取每个区域中的最大值(max-pooling)或是平均值(mean-pooling),也有最小值的降采样,计算过程和最大值的计算类似。

max-pooling:

template <typename Dtype>

void PoolingLayer<Dtype>::Forward_cpu(const vector<Blob<Dtype>*>& bottom,

const vector<Blob<Dtype>*>& top) {

const Dtype* bottom_data = bottom[0]->cpu_data();//输入数据明显只读

Dtype* top_data = top[0]->mutable_cpu_data();

const int top_count = top[0]->count();

// We'll output the mask to top[1] if it's of size >1.

const bool use_top_mask = top.size() > 1;

int* mask = NULL; // 未初始化变量

Dtype* top_mask = NULL;

// 不同的池化方式

switch (this->layer_param_.pooling_param().pool()) {

case PoolingParameter_PoolMethod_MAX://最大池化

// Initialize

if (use_top_mask) {//表示输出大于1,那么就用top_mask记录索引

top_mask = top[1]->mutable_cpu_data();

caffe_set(top_count, Dtype(-1), top_mask);//初始化-1

} else {//输出只有一个的时候

mask = max_idx_.mutable_cpu_data();

caffe_set(top_count, -1, mask);

}

caffe_set(top_count, Dtype(-FLT_MAX), top_data);

// The main loop

for (int n = 0; n < bottom[0]->num(); ++n) {

for (int c = 0; c < channels_; ++c) {

for (int ph = 0; ph < pooled_height_; ++ph) {

for (int pw = 0; pw < pooled_width_; ++pw) {

int hstart = ph * stride_h_ - pad_h_;

int wstart = pw * stride_w_ - pad_w_;

int hend = min(hstart + kernel_h_, height_);//防止核滑到图像外

int wend = min(wstart + kernel_w_, width_);//防止核滑到图像外

hstart = max(hstart, 0);//防止核滑到图像外

wstart = max(wstart, 0);//防止核滑到图像外

const int pool_index = ph * pooled_width_ + pw;//行优先

for (int h = hstart; h < hend; ++h) {

for (int w = wstart; w < wend; ++w) {

const int index = h * width_ + w;/滑动的坐标

if (bottom_data[index] > top_data[pool_index]) {

top_data[pool_index] = bottom_data[index];//每个值相比,取最大值

if (use_top_mask) {

top_mask[pool_index] = static_cast<Dtype>(index);

} else {

mask[pool_index] = index;//记录索引

}

}

}

}

}

}

// compute offset

bottom_data += bottom[0]->offset(0, 1);//地址偏移,每次移动W×H,表明一张图处理完毕

top_data += top[0]->offset(0, 1);

if (use_top_mask) {

top_mask += top[0]->offset(0, 1);

} else {

mask += top[0]->offset(0, 1);

}

}

}

break;

case PoolingParameter_PoolMethod_AVE://平均池化

for (int i = 0; i < top_count; ++i) {

top_data[i] = 0;

}

// The main loop

for (int n = 0; n < bottom[0]->num(); ++n) {

for (int c = 0; c < channels_; ++c) {

for (int ph = 0; ph < pooled_height_; ++ph) {

for (int pw = 0; pw < pooled_width_; ++pw) {

int hstart = ph * stride_h_ - pad_h_;

int wstart = pw * stride_w_ - pad_w_;

int hend = min(hstart + kernel_h_, height_ + pad_h_);

int wend = min(wstart + kernel_w_, width_ + pad_w_);

int pool_size = (hend - hstart) * (wend - wstart);

hstart = max(hstart, 0);

wstart = max(wstart, 0);

hend = min(hend, height_);

wend = min(wend, width_);

for (int h = hstart; h < hend; ++h) {

for (int w = wstart; w < wend; ++w) {

top_data[ph * pooled_width_ + pw] +=

bottom_data[h * width_ + w];/求和

}

}

top_data[ph * pooled_width_ + pw] /= pool_size;//做平均

}

}

// compute offset

bottom_data += bottom[0]->offset(0, 1);

top_data += top[0]->offset(0, 1);

}

}

break;

case PoolingParameter_PoolMethod_STOCHASTIC://随机池化没有实现,将报错

NOT_IMPLEMENTED;

break;

default:

LOG(FATAL) << "Unknown pooling method.";

}

}

GPU版的前向计算

template <typename Dtype>

__global__ void MaxPoolForward(const int nthreads,

const Dtype* const bottom_data, const int num, const int channels,

const int height, const int width, const int pooled_height,

const int pooled_width, const int kernel_h, const int kernel_w,

const int stride_h, const int stride_w, const int pad_h, const int pad_w,

Dtype* const top_data, int* mask, Dtype* top_mask) {

//index是线程索引

//nthreads为线程的总数,为该pooling层top blob的输出神经元总数,也就是说一个线程对应输出的一个结点

CUDA_KERNEL_LOOP(index, nthreads) {

const int pw = index % pooled_width;//线程对应的是输出Feature Map的中的宽

const int ph = (index / pooled_width) % pooled_height;//线程对应的是输出Feature Map的中的高

const int c = (index / pooled_width / pooled_height) % channels;//线程对应的是channels

const int n = index / pooled_width / pooled_height / channels;//线程对应的是num

int hstart = ph * stride_h - pad_h;//输入的坐标起始点

int wstart = pw * stride_w - pad_w;

const int hend = min(hstart + kernel_h, height);//输入的坐标终止点

const int wend = min(wstart + kernel_w, width);

hstart = max(hstart, 0);

wstart = max(wstart, 0);

Dtype maxval = -FLT_MAX;

int maxidx = -1;

const Dtype* const bottom_slice =

bottom_data + (n * channels + c) * height * width;//输入的一个feature的切片

for (int h = hstart; h < hend; ++h) {

for (int w = wstart; w < wend; ++w) {

if (bottom_slice[h * width + w] > maxval) {

maxidx = h * width + w;

maxval = bottom_slice[maxidx];

}

}

}

// index正好是top blob中对应点的索引,这也是为什么线程都是用了一维的维度

// 数据在Blob.data中最后都是一维的形式保存的

top_data[index] = maxval;

if (mask) {

mask[index] = maxidx;

} else {

top_mask[index] = maxidx;

}

}

}

4、反向计算

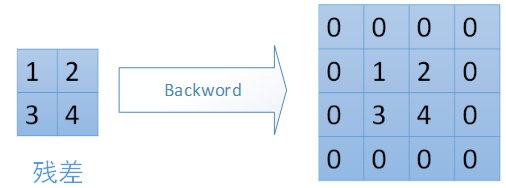

对于max-pooling,在前向计算时,是选取的每个2*2区域中的最大值,这里需要记录下最大值在每个小区域中的位置。在反向传播时,只有那个最大值对下一层有贡献,所以将残差传递到该最大值的位置,区域内其他2*2-1=3个位置置零。具体过程如下图,其中4*4矩阵中非零的位置即为前边计算出来的每个小区域的最大值的位置。

maxpooling层是非线性变换,但有输入与输出的关系可线性表达为bottom_dataj=top_datai(所以需要前向计算时需要记录索引i到索引j的映射max_idx_)

template <typename Dtype>

void PoolingLayer<Dtype>::Backward_cpu(const vector<Blob<Dtype>*>& top,

const vector<bool>& propagate_down, const vector<Blob<Dtype>*>& bottom) {

if (!propagate_down[0]) {

return;

}

const Dtype* top_diff = top[0]->cpu_diff();

Dtype* bottom_diff = bottom[0]->mutable_cpu_diff();

// Different pooling methods. We explicitly do the switch outside the for

// loop to save time, although this results in more codes.

caffe_set(bottom[0]->count(), Dtype(0), bottom_diff);//首先全部初始化为0

// We'll output the mask to top[1] if it's of size >1.

const bool use_top_mask = top.size() > 1;

const int* mask = NULL; // suppress warnings about uninitialized variables

const Dtype* top_mask = NULL;

switch (this->layer_param_.pooling_param().pool()) {

case PoolingParameter_PoolMethod_MAX:

// The main loop

if (use_top_mask) {

top_mask = top[1]->cpu_data();

} else {

mask = max_idx_.cpu_data();

}

for (int n = 0; n < top[0]->num(); ++n) {

for (int c = 0; c < channels_; ++c) {

for (int ph = 0; ph < pooled_height_; ++ph) {

for (int pw = 0; pw < pooled_width_; ++pw) {

const int index = ph * pooled_width_ + pw;

const int bottom_index =

use_top_mask ? top_mask[index] : mask[index];

bottom_diff[bottom_index] += top_diff[index];//在最大值索引处还原最大值,其余地方仍然为0

}

}

bottom_diff += bottom[0]->offset(0, 1);

top_diff += top[0]->offset(0, 1);

if (use_top_mask) {

top_mask += top[0]->offset(0, 1);

} else {

mask += top[0]->offset(0, 1);

}

}

}

break;

case PoolingParameter_PoolMethod_AVE:

// The main loop

for (int n = 0; n < top[0]->num(); ++n) {

for (int c = 0; c < channels_; ++c) {

for (int ph = 0; ph < pooled_height_; ++ph) {

for (int pw = 0; pw < pooled_width_; ++pw) {

int hstart = ph * stride_h_ - pad_h_;

int wstart = pw * stride_w_ - pad_w_;

int hend = min(hstart + kernel_h_, height_ + pad_h_);

int wend = min(wstart + kernel_w_, width_ + pad_w_);

int pool_size = (hend - hstart) * (wend - wstart);

hstart = max(hstart, 0);

wstart = max(wstart, 0);

hend = min(hend, height_);

wend = min(wend, width_);

for (int h = hstart; h < hend; ++h) {

for (int w = wstart; w < wend; ++w) {

bottom_diff[h * width_ + w] +=

top_diff[ph * pooled_width_ + pw] / pool_size;

}

}

}

}

// offset

bottom_diff += bottom[0]->offset(0, 1);

top_diff += top[0]->offset(0, 1);

}

}

break;

case PoolingParameter_PoolMethod_STOCHASTIC:

NOT_IMPLEMENTED;

break;

default:

LOG(FATAL) << "Unknown pooling method.";

}

}

相应的GPU版本:

template <typename Dtype>

__global__ void MaxPoolBackward(const int nthreads, const Dtype* const top_diff,

const int* const mask, const Dtype* const top_mask, const int num,

const int channels, const int height, const int width,

const int pooled_height, const int pooled_width, const int kernel_h,

const int kernel_w, const int stride_h, const int stride_w, const int pad_h,

const int pad_w, Dtype* const bottom_diff) {

CUDA_KERNEL_LOOP(index, nthreads) {

// find out the local index

// find out the local offset

const int w = index % width;

const int h = (index / width) % height;

const int c = (index / width / height) % channels;

const int n = index / width / height / channels;

const int phstart =

(h + pad_h < kernel_h) ? 0 : (h + pad_h - kernel_h) / stride_h + 1;

const int phend = min((h + pad_h) / stride_h + 1, pooled_height);

const int pwstart =

(w + pad_w < kernel_w) ? 0 : (w + pad_w - kernel_w) / stride_w + 1;

const int pwend = min((w + pad_w) / stride_w + 1, pooled_width);

Dtype gradient = 0;

const int offset = (n * channels + c) * pooled_height * pooled_width;

const Dtype* const top_diff_slice = top_diff + offset;

if (mask) {

const int* const mask_slice = mask + offset;

for (int ph = phstart; ph < phend; ++ph) {

for (int pw = pwstart; pw < pwend; ++pw) {

if (mask_slice[ph * pooled_width + pw] == h * width + w) {

gradient += top_diff_slice[ph * pooled_width + pw];

}

}

}

} else {

const Dtype* const top_mask_slice = top_mask + offset;

for (int ph = phstart; ph < phend; ++ph) {

for (int pw = pwstart; pw < pwend; ++pw) {

if (top_mask_slice[ph * pooled_width + pw] == h * width + w) {

gradient += top_diff_slice[ph * pooled_width + pw];

}

}

}

}

bottom_diff[index] = gradient;

}

}

1433

1433

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?