#include "stdafx.h"

#include "opencv2/opencv.hpp"

#include "Kinect.h"

#include "kinect.face.h"

#include "iostream"

#include "fstream"

using namespace cv;

using namespace std;

//安全释放指针

template<class Interface>

inline void SafeRelease(Interface *& pInterfaceToRelease)

{

if (pInterfaceToRelease != NULL)

{

pInterfaceToRelease->Release();

pInterfaceToRelease = NULL;

}

}

//三角片数据结构

struct TRIANGLE_DESC

{

Point pt1, pt2, pt3;

};

//计算三角面片

vector<TRIANGLE_DESC> delaunayAlgorithm(const Rect boundRc, const vector<Point>& points)

{

if (points.empty()||boundRc.width<=0||boundRc.height<=0)

{

return vector<TRIANGLE_DESC>();

}

vector<TRIANGLE_DESC> result;

vector<Vec6f> _temp_result;

Subdiv2D subdiv2d(boundRc);

for (const auto point : points)

{

subdiv2d.insert(Point2f((float)point.x, (float)point.y));

}

subdiv2d.getTriangleList(_temp_result);

for (const auto _tmp_vec : _temp_result)

{

Point pt1((int)_tmp_vec[0], (int)_tmp_vec[1]);

Point pt2((int)_tmp_vec[2], (int)_tmp_vec[3]);

Point pt3((int)_tmp_vec[4], (int)_tmp_vec[5]);

if (boundRc.contains(pt1) && boundRc.contains(pt2) && boundRc.contains(pt3)) //去除最外头的三角形

{

TRIANGLE_DESC vec1;

vec1.pt1 = pt1;

vec1.pt2= pt2;

vec1.pt3= pt3;

result.push_back(vec1);

}

}

return result;

}

//画三角面片

void drawTriangel(Mat &srcImg,Rect rectImg,vector<Point> pointsVec,int index)

{

//每个模型的三角面片

if (pointsVec.size() <= 2) //防止画面片时报错

{

return ;

}

Rect rect1 = boundingRect(pointsVec);

rect1 = rect1&rectImg;

string label = format("Person %d", index);

putText(srcImg, label, rect1.tl(), FONT_HERSHEY_SIMPLEX, 3.0f, Scalar(220, 0, 220), 4);

vector<TRIANGLE_DESC> triangles = delaunayAlgorithm(rect1, pointsVec);

rectangle(srcImg, rect1, Scalar(0, 0, 255), 5);

for (int triangleCount = 0; triangleCount < triangles.size(); triangleCount++)

{

TRIANGLE_DESC triangle = triangles[triangleCount];

line(srcImg, triangle.pt1, triangle.pt2, Scalar(255, 0, 0), 2);

line(srcImg, triangle.pt2, triangle.pt3, Scalar(255, 0, 0), 2);

line(srcImg, triangle.pt3, triangle.pt1, Scalar(255, 0, 0), 2);

}//每个模型的三角面片

}

int _tmain(int argc, _TCHAR* argv[])

{

IKinectSensor *kinectSensor;

HRESULT hr = GetDefaultKinectSensor(&kinectSensor);

kinectSensor->Open();

//开启深度数据获取

IDepthFrameSource* depths = nullptr;

kinectSensor->get_DepthFrameSource(&depths); // 获取深度数据源

int height, width; //图像宽和高

IFrameDescription * myDescription = nullptr; //取得深度数据的分辨率

depths->get_FrameDescription(&myDescription);

myDescription->get_Height(&height);

myDescription->get_Width(&width);

myDescription->Release();

IDepthFrameReader* depthRead = nullptr;

depths->OpenReader(&depthRead); // 打开深度解析器

cout << "width " << width << " height " << height << endl;

Mat img16u(height, width, CV_16UC1);

Mat img8u(height, width, CV_8UC1);

//开启彩色数据获取

IColorFrameSource* colorFramSouce = nullptr;

kinectSensor->get_ColorFrameSource(&colorFramSouce); // 获取图像数据源

IColorFrameReader* colorFramRead = nullptr;

colorFramSouce->OpenReader(&colorFramRead); // 打开解析器

IFrameDescription* frameDs = nullptr;

colorFramSouce->get_FrameDescription(&frameDs); // 获取信息的属性

int heightColor, widthColor;

frameDs->get_Height(&heightColor);

frameDs->get_Width(&widthColor);

cout << "heightColor " << heightColor << " widthColor " << widthColor << endl;

Mat imgColor(heightColor, widthColor, CV_8UC4);

//开启骨骼数据获取

IBodyFrameSource * myBodySource = nullptr; //source

kinectSensor->get_BodyFrameSource(&myBodySource);

int heightBody, widthBody;

IBodyFrameReader * myBodyReader = nullptr; //reader

myBodySource->OpenReader(&myBodyReader);

int myBodyCount = 0;

myBodySource->get_BodyCount(&myBodyCount);

ICoordinateMapper * myMapper = nullptr;

kinectSensor->get_CoordinateMapper(&myMapper);

//高清脸部跟踪

IHighDefinitionFaceFrameSource* hdfacesource[BODY_COUNT];

IHighDefinitionFaceFrameReader* hdfacereader[BODY_COUNT];

IFaceModelBuilder* facemodelbuilder[BODY_COUNT]; // 创建面部特征对齐

bool produce[BODY_COUNT] = { false };

IFaceAlignment* facealignment[BODY_COUNT];

IFaceModel* facemodel[BODY_COUNT];

vector<vector<float>> deformations(BODY_COUNT, vector<float>(FaceShapeDeformations_Count));

for (int count = 0; count < BODY_COUNT; count++)

{

CreateHighDefinitionFaceFrameSource(kinectSensor, &hdfacesource[count]);

hdfacesource[count]->OpenReader(&hdfacereader[count]);

hdfacesource[count]->OpenModelBuilder(FaceModelBuilderAttributes_None, &facemodelbuilder[count]);

facemodelbuilder[count]->BeginFaceDataCollection();

CreateFaceAlignment(&facealignment[count]);

CreateFaceModel(1.0f, FaceShapeDeformations_Count, &deformations[count][0], &facemodel[count]);

}

UINT32 vertex = 0;

GetFaceModelVertexCount(&vertex); //人脸顶点数量,1347

while (true)

{

//获取深度摄像头数据

IDepthFrame* depthFram = nullptr;

if (depthRead->AcquireLatestFrame(&depthFram) == S_OK) //通过Reader尝试获取最新的一帧深度数据,放入深度帧中,并判断是否成功获取

{

depthFram->CopyFrameDataToArray(height * width, (UINT16 *)img16u.data); //先把数据存入16位的图像矩阵中

img16u.convertTo(img8u, CV_8UC1, 255.0 / 4500); //再把16位转换为8位

imshow("深度图像", img8u);

depthFram->Release();

}

//获取RGB摄像头数据

IColorFrame* colorFram = nullptr;

if (colorFramRead->AcquireLatestFrame(&colorFram) == S_OK) //通过Reader尝试获取最新的一帧深度数据,放入深度帧中,并判断是否成功获取

{

colorFram->CopyConvertedFrameDataToArray(heightColor*widthColor * 4, (BYTE*)imgColor.data, ColorImageFormat_Bgra);

colorFram->Release();

}

//获取人骨胳数据

IBodyFrame *myBodyFrame = nullptr;

if (myBodyReader->AcquireLatestFrame(&myBodyFrame) == S_OK)

{

myBodyCount = 0;

IBody ** bodyArr = nullptr;

myBodySource->get_BodyCount(&myBodyCount);

bodyArr = new IBody *[myBodyCount];

for (int i = 0; i < myBodyCount; i++) //bodyArr的初始化

bodyArr[i] = nullptr;

if (myBodyFrame->GetAndRefreshBodyData(myBodyCount, bodyArr) == S_OK)

{

for (int i = 0; i < myBodyCount; i++) //遍历6个人(可能用不完)

{

BOOLEAN result = false;

if (bodyArr[i]->get_IsTracked(&result) == S_OK && result) //判断此人是否被侦测到

{

cout << "Body " << i << " tracked!" << endl;

UINT64 trackingId = _UI64_MAX;

if (bodyArr[i]->get_TrackingId(&trackingId) == S_OK)

{

hdfacesource[i]->put_TrackingId(trackingId); //检测到的人体索映值传入

}

}

}

}

for (int i = 0; i < myBodyCount; i++)

{

IHighDefinitionFaceFrame* hdfaceframe = nullptr;

if (hdfacereader[i]->AcquireLatestFrame(&hdfaceframe) == S_OK && hdfaceframe != nullptr)

{

BOOLEAN tracked = false;

if (SUCCEEDED(hdfaceframe->get_IsTrackingIdValid(&tracked)) && tracked)

{

if (SUCCEEDED(hdfaceframe->GetAndRefreshFaceAlignmentResult(facealignment[i])) && facealignment[i] != nullptr)

{

if (!produce[i])

{

FaceModelBuilderCollectionStatus collection;

facemodelbuilder[i]->get_CollectionStatus(&collection);

if (collection == FaceModelBuilderCollectionStatus::FaceModelBuilderCollectionStatus_Complete)

{

cout << "Status : Complete" << endl;

putText(imgColor, "Status : Complete", Point(50, 50), FONT_HERSHEY_SIMPLEX, 1.0f, Scalar(0, 0, 255, 255), 2);

IFaceModelData* facemodeldata = nullptr;

if (SUCCEEDED(facemodelbuilder[i]->GetFaceData(&facemodeldata)) && facemodeldata != nullptr)

{

if (SUCCEEDED(facemodeldata->ProduceFaceModel(&facemodel[i])) && facemodel[i] != nullptr)

{

produce[i] = true;

}

}

SafeRelease(facemodeldata);

}

vector<CameraSpacePoint> facePoints(vertex); //facePoints(vertex)存的三维点 x,y,z坐标

//vector<Point> points3Dto2D;//全部点有的不在平面上

vector<Point> inImgrectPoints;//在图像内的点

Rect rectImg = Rect(0, 0, widthColor, heightColor);

if (SUCCEEDED(facemodel[i]->CalculateVerticesForAlignment(facealignment[i], vertex, &facePoints[0])))

{

ofstream outfile3D("Face3Ddata.txt");

ofstream outfile2D("Face2Ddata.txt");

//#pragma omp parallel for

for (int point = 0; point < vertex; point+=1)

{

ColorSpacePoint colorSpacePoint;

myMapper->MapCameraPointToColorSpace(facePoints[point], &colorSpacePoint);

circle(imgColor, Point(colorSpacePoint.X, colorSpacePoint.Y), 4, Scalar(0, 255, 0, 255), -1);

//points3Dto2D.push_back(Point(colorSpacePoint.X, colorSpacePoint.Y)); //注意:这里映射的点不一定在彩色图像上

if (rectImg.contains(Point(colorSpacePoint.X, colorSpacePoint.Y)))

{

inImgrectPoints.push_back(Point(colorSpacePoint.X, colorSpacePoint.Y));

outfile2D << colorSpacePoint.X << " \t" << colorSpacePoint.Y << endl;

}

outfile3D << facePoints[point].X << " \t" << facePoints[point].Y <<" \t"<< facePoints[point].Z << endl;

}

outfile2D.close();

outfile3D.close();

//画每个模型的三角面片

//drawTriangel(imgColor,rectImg,inImgrectPoints,i);

}

}

}

}

}

}

Mat imgShow;

//imshow("原图", imgColor);

resize(imgColor,imgShow,Size(800,480));

imshow("彩色图", imgShow);

myBodyFrame->Release();

}

waitKey(1);

}

depthRead->Release(); //释放不用的变量并且关闭感应器

colorFramRead->Release();

kinectSensor->Close();

return 0;

}

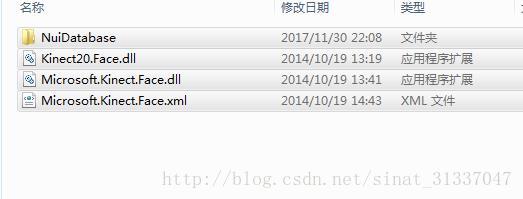

这里注意下Kinect的开发环境配置,win8以上(我的win10),usb3.0接口

安装kinect2.0SDk,安装后一定重启,关机后再开似乎效果不一样

kinect.face.dll文件要复制到工程目录下

需要的库:

6351

6351

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?