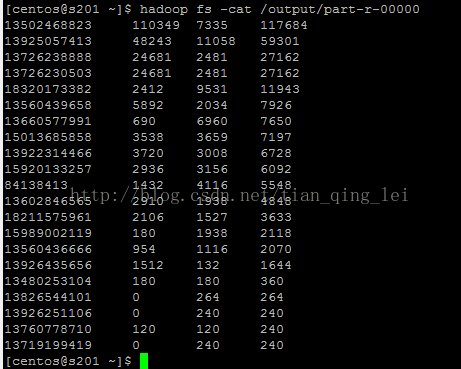

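Aggregate the upstream and downstream traffic recorded in the log data, and output the result sorted by total traffic in descending order.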

The data is as follows:

13480253104 180 180 0

13502468823 110349 7335 0

13560436666 954 1116 0

13560439658 5892 2034 0

13602846565 2910 1938 0

13660577991 690 6960 0

13719199419 0 240 0

13726230503 24681 2481 0

13726238888 24681 2481 0

13760778710 120 120 0

13826544101 0 264 0

13922314466 3720 3008 0

13925057413 48243 11058 0

13926251106 0 240 0

13926435656 1512 132 0

15013685858 3538 3659 0

15920133257 2936 3156 0

15989002119 180 1938 0

18211575961 2106 1527 0

18320173382 2412 9531 0

84138413 1432 4116 0

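Basic idea: implement a custom bean that wraps the traffic information, and use that bean as the key of the map output.

An MR program sorts data while processing it: the key-value pairs emitted by map are sorted before they are passed to reduce, and the sort is based on the map output key.

So, to apply our own sort order, we can put the sort factor into the key, have the key implement the WritableComparable interface, and then override the key's compareTo method.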
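The idea of letting the key define the order can be tried out without a cluster. The following is a minimal sketch in plain Java, not the Hadoop code itself: `TrafficKey` and `KeySortSketch` are hypothetical names, and `Collections.sort` merely stands in for the sort that the framework performs on map output keys. The three rows are taken from the data above.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical stand-in for a map output key: it carries the sort factor
// (total traffic) and defines the ordering through compareTo.
class TrafficKey implements Comparable<TrafficKey> {
    final String phone;
    final long total;

    TrafficKey(String phone, long up, long down) {
        this.phone = phone;
        this.total = up + down;
    }

    @Override
    public int compareTo(TrafficKey other) {
        // Swapping the arguments yields descending order by total traffic.
        return Long.compare(other.total, this.total);
    }
}

class KeySortSketch {
    // Sorts a copy of the keys by their compareTo and returns the phone numbers in that order.
    static List<String> sortedPhones(List<TrafficKey> keys) {
        List<TrafficKey> copy = new ArrayList<>(keys);
        Collections.sort(copy);
        List<String> phones = new ArrayList<>();
        for (TrafficKey key : copy) {
            phones.add(key.phone);
        }
        return phones;
    }

    public static void main(String[] args) {
        List<TrafficKey> keys = new ArrayList<>();
        keys.add(new TrafficKey("13480253104", 180, 180));
        keys.add(new TrafficKey("13502468823", 110349, 7335));
        keys.add(new TrafficKey("13925057413", 48243, 11058));
        // prints [13502468823, 13925057413, 13480253104]
        System.out.println(sortedPhones(keys));
    }
}
```

The caller never states a sort order; it comes entirely from the key's `compareTo`, which is the property the MapReduce job relies on.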

Define a bean

    import java.io.DataInput;
    import java.io.DataOutput;
    import java.io.IOException;

    import org.apache.hadoop.io.WritableComparable;

    /**
     * Wraps the traffic information in an object.
     *
     * @author
     */
    public class FlowBean implements WritableComparable<FlowBean> {

        private long upFlow;
        private long dFlow;
        private long sumFlow;

        // A no-argument constructor is needed so that the framework can
        // create the bean by reflection before calling readFields()
        public FlowBean() {
            super();
        }

        public FlowBean(long upFlow, long dFlow) {
            this.upFlow = upFlow;
            this.dFlow = dFlow;
            this.sumFlow = upFlow + dFlow;
        }

        // Sets both flows and recomputes the total, so one bean can be reused
        public void set(long upFlow, long dFlow) {
            this.upFlow = upFlow;
            this.dFlow = dFlow;
            this.sumFlow = upFlow + dFlow;
        }

        public long getUpFlow() {
            return upFlow;
        }

        public void setUpFlow(long upFlow) {
            this.upFlow = upFlow;
        }

        public long getdFlow() {
            return dFlow;
        }

        public void setdFlow(long dFlow) {
            this.dFlow = dFlow;
        }

        public long getSumFlow() {
            return sumFlow;
        }

        public void setSumFlow(long sumFlow) {
            this.sumFlow = sumFlow;
        }

        // Serialization: write the fields to the output stream
        @Override
        public void write(DataOutput out) throws IOException {
            out.writeLong(upFlow);
            out.writeLong(dFlow);
            out.writeLong(sumFlow);
        }

        // Deserialization: read the fields from the input stream
        // Note: the fields must be read in the same order in which they were written
        @Override
        public void readFields(DataInput in) throws IOException {
            upFlow = in.readLong();
            dFlow = in.readLong();
            sumFlow = in.readLong();
        }

        // Override toString(); this is the format written to the output file
        @Override
        public String toString() {
            return upFlow + "\t" + dFlow + "\t" + sumFlow;
        }

        // Sort by total traffic in descending order.
        // Caveat: this never returns 0, so beans with equal totals are never
        // treated as the same key and each record stays in its own reduce group.
        // It also means the general compareTo contract is not met for equal totals.
        @Override
        public int compareTo(FlowBean o) {
            return this.sumFlow > o.getSumFlow() ? -1 : 1;
        }
    }
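The ordering rule between `write` and `readFields` can be checked with a small round trip. The following is a sketch in plain Java under the assumption that an in-memory byte array is an acceptable stand-in for the stream Hadoop passes in; `RoundTripSketch` is a hypothetical helper, not part of the job.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInputStream;
import java.io.DataOutputStream;
import java.io.IOException;

class RoundTripSketch {
    // Writes the three fields in a fixed order, mirroring FlowBean.write.
    static byte[] write(long upFlow, long dFlow, long sumFlow) throws IOException {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(bytes)) {
            out.writeLong(upFlow);
            out.writeLong(dFlow);
            out.writeLong(sumFlow);
        }
        return bytes.toByteArray();
    }

    // Reads the fields back in the same order, mirroring FlowBean.readFields.
    static long[] read(byte[] data) throws IOException {
        try (DataInputStream in = new DataInputStream(new ByteArrayInputStream(data))) {
            long upFlow = in.readLong();
            long dFlow = in.readLong();
            long sumFlow = in.readLong();
            return new long[] {upFlow, dFlow, sumFlow};
        }
    }

    public static void main(String[] args) throws IOException {
        long[] fields = read(write(5892, 2034, 5892 + 2034));
        // prints 5892, 2034 and 7926 separated by tabs
        System.out.println(fields[0] + "\t" + fields[1] + "\t" + fields[2]);
    }
}
```

The byte stream carries no field names, only three consecutive 8-byte values, so swapping two `readLong` calls would silently assign the values to the wrong fields.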

    import java.io.IOException;

    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Mapper;

    public class FlowCountMapper extends Mapper<LongWritable, Text, FlowBean, Text> {

        // Reused for every record instead of allocating new objects per line
        FlowBean bean = new FlowBean();
        Text v = new Text();

        @Override
        protected void map(LongWritable key, Text values, Context context) throws IOException, InterruptedException {
            // Convert the content of one line to a String
            String value = values.toString();

            // Split the line into fields
            String[] split = value.split("\t");

            // Extract the phone number
            String phoneNum = split[0];

            // Extract the upstream and downstream traffic
            long upFlow = Long.parseLong(split[1]);
            long dFlow = Long.parseLong(split[2]);

            // Equivalent, but allocates two objects per record:
            // context.write(new FlowBean(upFlow, dFlow), new Text(phoneNum));
            bean.set(upFlow, dFlow);
            v.set(phoneNum);

            // Debug output
            System.out.println(bean.toString() + v);

            // The bean is the key, so the shuffle sorts the records by total traffic
            context.write(bean, v);
        }
    }
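The mapper assumes that every line has at least three tab-separated fields and that the second and third are numeric; a malformed line would make the task fail with an exception. The following plain-Java sketch shows one possible way to parse a line defensively. `LineParseSketch` and the skip-on-error policy are assumptions for illustration, not part of the original job.

```java
class LineParseSketch {
    // Parses "phone<TAB>upFlow<TAB>dFlow[<TAB>...]" and returns {upFlow, dFlow, sum},
    // or null when the line is malformed (assumed policy: the caller skips such lines).
    static long[] parseFlows(String line) {
        String[] fields = line.split("\t");
        if (fields.length < 3) {
            return null;
        }
        try {
            long up = Long.parseLong(fields[1].trim());
            long down = Long.parseLong(fields[2].trim());
            return new long[] {up, down, up + down};
        } catch (NumberFormatException e) {
            return null;
        }
    }

    public static void main(String[] args) {
        long[] flows = parseFlows("13560439658\t5892\t2034\t0");
        System.out.println(flows[2]);                        // prints 7926
        System.out.println(parseFlows("not a record") == null); // prints true
    }
}
```

In a mapper, a `null` result would translate into returning from `map` without calling `context.write`.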

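    import java.io.IOException;

    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Reducer;

    public class FlowCountReduce extends Reducer<FlowBean, Text, Text, FlowBean> {

        @Override
        protected void reduce(FlowBean key, Iterable<Text> values, Context context)
                throws IOException, InterruptedException {
            // Debug output
            System.out.println(key);

            // Swap key and value so that each output line starts with the phone number.
            // Every value in the group is written, so no phone number is lost
            // if several records ever share one key.
            for (Text phoneNum : values) {
                context.write(phoneNum, key);
            }
        }
    }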
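Whether two records end up in the same reduce call depends on whether their keys compare as equal. The following plain-Java sketch illustrates what happens when the comparison is purely by total traffic and equal totals compare as 0: a `TreeMap` with a reversed comparator stands in for the sorted, grouped reducer input (an assumption made for illustration only), and `GroupingSketch` is a hypothetical name. The rows are taken from the data above, where two phone numbers have the same total.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

class GroupingSketch {
    // Groups phone numbers by total traffic (descending) and emits one
    // "phone<TAB>total" line per phone number, including phone numbers with equal totals.
    static List<String> emitAll(String[] phones, long[] totals) {
        Map<Long, List<String>> groups =
                new TreeMap<Long, List<String>>(Comparator.reverseOrder());
        for (int i = 0; i < phones.length; i++) {
            groups.computeIfAbsent(totals[i], t -> new ArrayList<>()).add(phones[i]);
        }
        List<String> out = new ArrayList<>();
        for (Map.Entry<Long, List<String>> group : groups.entrySet()) {
            // Iterate over the whole group; taking only the first element
            // would drop every other phone number with the same total.
            for (String phone : group.getValue()) {
                out.add(phone + "\t" + group.getKey());
            }
        }
        return out;
    }

    public static void main(String[] args) {
        String[] phones = {"13480253104", "13726230503", "13726238888"};
        long[] totals = {180 + 180, 24681 + 2481, 24681 + 2481};
        for (String line : emitAll(phones, totals)) {
            System.out.println(line);
        }
    }
}
```

With these three rows the sketch emits three lines, the two tied phone numbers first; a version that emitted only the first element of each group would emit two.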

Define the FlowCount main class

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    public class FlowCount {

        public static void main(String[] args) throws Exception {
            // Exactly two arguments are expected: the input path and the output path.
            // An empty path would make new Path("") throw, so fail early with a usage message.
            if (args.length != 2) {
                System.err.println("Usage: FlowCount <input path> <output path>");
                System.exit(2);
            }
            String inPath = args[0];
            String outPath = args[1];

            Configuration conf = new Configuration();
            Job job = Job.getInstance(conf);

            // Locate the job's jar through the class it contains
            job.setJarByClass(FlowCount.class);

            // Set the Mapper and Reducer classes used by the job
            job.setMapperClass(FlowCountMapper.class);
            job.setReducerClass(FlowCountReduce.class);

            // Set the key/value types of the mapper output
            job.setMapOutputKeyClass(FlowBean.class);
            job.setMapOutputValueClass(Text.class);

            // Set the key/value types of the final output
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(FlowBean.class);

            // Set the directory that holds the job's raw input files
            FileInputFormat.setInputPaths(job, new Path(inPath));

            // Set the output directory; it must not exist before the job runs
            FileOutputFormat.setOutputPath(job, new Path(outPath));

            // Submit the job and wait for it to finish
            boolean res = job.waitForCompletion(true);
            System.exit(res ? 0 : 1);
        }
    }

The sorted result is as follows:
