1. Preparing the database for Oracle GoldenGate

1.1 Preparing constraints for Oracle GoldenGate

The following table attributes must be addressed in an Oracle GoldenGate environment.

1.1.1 Disabling triggers and cascade constraints

You will need to make some changes to the triggers, cascade update, and cascade delete constraints on the target tables. Oracle GoldenGate replicates DML that results from a trigger or a cascade constraint. If the same trigger or constraint gets activated on the target table, it becomes redundant because of the replicated version, and the database returns an error.

--On the target tables, triggers and cascade constraints must be disabled: the changes they cause on the source are already replicated, so running them again on the target raises an error. The following example walks through the whole sequence:

Consider the following example, where the source tables are “emp_src” and “salary_src” and the target tables are “emp_targ” and “salary_targ.”

1. A delete is issued for emp_src.

2. It cascades a delete to salary_src.

3. Oracle GoldenGate sends both deletes to the target.

4. The parent delete arrives first and is applied to emp_targ.

5. The parent delete cascades a delete to salary_targ.

6. The cascaded delete from salary_src is applied to salary_targ.

7. The row cannot be located because it was already deleted in step 5.
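The sequence above can be reproduced in miniature. The following is a sketch only: it uses Python's built-in sqlite3 module as a stand-in for the Oracle target, and the column names (emp_id, amount) are assumptions that do not come from the original example. It replays the two replicated deletes against a target whose cascade constraint is still active and reports how many rows each delete touched.

```python
import sqlite3


def replay_deletes_on_target():
    """Replay the two replicated deletes against a target that still cascades.

    Returns (rows hit by the parent delete, rows hit by the child delete).
    """
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite needs this for cascades
    conn.execute("CREATE TABLE emp_targ (emp_id INTEGER PRIMARY KEY)")
    conn.execute(
        "CREATE TABLE salary_targ ("
        " emp_id INTEGER REFERENCES emp_targ(emp_id) ON DELETE CASCADE,"
        " amount INTEGER)"
    )
    conn.execute("INSERT INTO emp_targ VALUES (1)")
    conn.execute("INSERT INTO salary_targ VALUES (1, 5000)")

    # Step 4: the replicated parent delete is applied to emp_targ.
    # Step 5: the target's own cascade removes the salary_targ row.
    parent = conn.execute("DELETE FROM emp_targ WHERE emp_id = 1").rowcount

    # Steps 6-7: the replicated child delete finds nothing left to delete.
    child = conn.execute("DELETE FROM salary_targ WHERE emp_id = 1").rowcount
    conn.close()
    return parent, child
```

Calling replay_deletes_on_target() returns (1, 0): the parent delete removes one row, and the replicated child delete finds none, which is the condition described in step 7.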

Oracle GoldenGate provides some options to handle triggers or cascade constraints automatically, depending on the Oracle version:

--GG provides options that handle triggers or cascade constraints automatically; the method differs by version.

(1) For Oracle 10.2.0.5 and later patches to 10.2.0.5, and for Oracle 11.2.0.2 and later 11gR2 versions, you can use the Replicat parameter DBOPTIONS with the SUPPRESSTRIGGERS option to cause Replicat to disable the triggers during its session.

(2) For Oracle 9.2.0.7 and later, you can use the Replicat parameter DBOPTIONS with the DEFERREFCONST option to delay the checking and enforcement of cascade update and cascade delete constraints until the Replicat transaction commits.

(3) For earlier Oracle versions, you must disable triggers and integrity constraints or alter them manually to ignore the Replicat database user.

1.1.2 Deferring constraint checking

If constraints are DEFERRABLE on the source, the constraints on the target must also be DEFERRABLE. You can use one of the following parameter statements to defer constraint checking until a Replicat transaction commits:

--If the constraints on the source are deferrable, the constraints on the target must be deferrable as well.

(1) Use SQLEXEC at the root level of the Replicat parameter file to defer the constraints for an entire Replicat session.

--Use SQLEXEC to set the defer attribute for this Replicat session.

SQLEXEC ("alter session set constraint deferred")

(2) For Oracle 9.2.0.7 and later, you can use the Replicat parameter DBOPTIONS with the DEFERREFCONST option to delay constraint checking for each Replicat transaction.

--From 9.2.0.7 onward, the DEFERREFCONST option of the DBOPTIONS parameter can be used to delay constraint checking.

Replicat might need to set constraints to DEFERRED if it is possible that an update transaction could affect the primary keys of multiple rows. Called a transient primary key update in Oracle GoldenGate terminology, this kind of operation typically uses an x+n formula or other form of manipulation that shifts the values and causes a new value to be the same as an old one.

The following illustrates a sequence of value changes that can cause this condition if constraints are not deferred. The example assumes the primary key column is “CODE” and the current key values (before the updates) are 1, 2, and 3.

update item set code = 2 where code = 1;

update item set code = 3 where code = 2;

update item set code = 4 where code = 3;

In this example, when Replicat applies the first update to the target, there is an error because the key value of 2 already exists in the table. The Replicat transaction returns constraint violation errors. By default, Replicat does not handle these violations and abends.
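The failure can be seen with a small experiment. This is a sketch under stated assumptions: Python's sqlite3 module stands in for the target database, and because SQLite always checks primary keys immediately it shows only the non-deferred case, not the DEFERRABLE behavior that Oracle offers. The function applies the three updates in source order and reports which statement first hits a key violation.

```python
import sqlite3


def apply_key_shift():
    """Apply the three updates in source order with immediate key checking.

    Returns the 1-based position of the first failing statement, or None.
    """
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE item (code INTEGER PRIMARY KEY)")
    conn.executemany("INSERT INTO item VALUES (?)", [(1,), (2,), (3,)])
    updates = [
        "UPDATE item SET code = 2 WHERE code = 1",
        "UPDATE item SET code = 3 WHERE code = 2",
        "UPDATE item SET code = 4 WHERE code = 3",
    ]
    for position, statement in enumerate(updates, start=1):
        try:
            conn.execute(statement)
        except sqlite3.IntegrityError:
            conn.close()
            return position  # this statement collided with an existing key
    conn.close()
    return None
```

apply_key_shift() returns 1: the very first update collides with the existing key value 2, even though the set of keys would be valid once all three updates had been applied.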

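--The example above shows why deferral matters: without it, the Replicat transaction fails, and by default Replicat does not handle this kind of error.

To enable Replicat to manage these updates:

--To enable Replicat to manage the updates shown above: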

(1) Use the Replicat parameter HANDLETPKUPDATE to enable Replicat to handle the transient primary key updates.

(2) Create the constraints as DEFERRABLE INITIALLY IMMEDIATE on the target tables. The constraints are checked when Replicat commits the transaction. You can:

1) Use SQLEXEC at the root level of the Replicat parameter file to defer the constraints for an entire Replicat session.

SQLEXEC ("alter session set constraint deferred")

2) For Oracle 9.2.0.7 and later, you can use the Replicat parameter DBOPTIONS with the DEFERREFCONST option to delay constraint checking for each Replicat transaction. If constraints are not DEFERRABLE, Replicat handles the errors according to rules that are specified with the HANDLECOLLISIONS and REPERROR parameters, if they exist, or else it abends.

1.1.3 Assigning row identifiers

Oracle GoldenGate requires some form of unique row identifier on the source and target tables to locate the correct target rows for replicated updates and deletes.

1.1.3.1 How Oracle GoldenGate determines the kind of row identifier to use

Unless a KEYCOLS clause is used in the TABLE or MAP statement, Oracle GoldenGate automatically selects a row identifier to use in the following order of priority:

1. Primary key

2. First unique key alphanumerically with no virtual columns, no UDTs, no function-based columns, and no nullable columns

3. First unique key alphanumerically with no virtual columns, no UDTs, and no function-based columns, but can include nullable columns

4. If none of the preceding key types exist (even though there might be other types of keys defined on the table), Oracle GoldenGate constructs a pseudo key of all columns that the database allows to be used in a unique key, excluding virtual columns, UDTs, function-based columns, and any columns that are explicitly excluded from the Oracle GoldenGate configuration.
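The priority order above can be modeled as a small selection function. This is a simplified illustration, not Oracle GoldenGate's actual implementation: the UniqueKey class, its field names, and the use of Python string ordering for "alphanumerically" are all assumptions made for the sketch.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class UniqueKey:
    name: str
    columns: List[str]
    nullable: bool = False            # at least one key column allows NULL
    has_excluded_types: bool = False  # virtual, UDT, or function-based columns


def choose_row_identifier(primary_key: Optional[List[str]],
                          unique_keys: List[UniqueKey],
                          usable_columns: List[str]) -> List[str]:
    """Return the columns used as row identifier, following priorities 1-4."""
    if primary_key:                                   # priority 1
        return primary_key
    candidates = sorted(
        (k for k in unique_keys if not k.has_excluded_types),
        key=lambda k: k.name,                         # first by name
    )
    for key in candidates:                            # priority 2
        if not key.nullable:
            return key.columns
    if candidates:                                    # priority 3
        return candidates[0].columns
    return usable_columns                             # priority 4: pseudo key
```

For example, given a nullable key UK_A and a non-nullable key UK_B and no primary key, the function picks UK_B, because a key without nullable columns outranks an earlier-named key that has them.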

Depending on whether schema-level or table-level logging was activated, there might be just one key or multiple keys logged to the redo log.

NOTE:

If there are other, non-usable keys on a table or if there are no keys at all on the table, Oracle GoldenGate logs an appropriate message to the report file. Constructing a key from all of the columns impedes the performance of Oracle GoldenGate on the source system. On the target, this key causes Replicat to use a larger, less efficient WHERE clause.

1.1.3.2 Using KEYCOLS to specify a custom key

If a table does not have an appropriate key, or if you prefer the existing key(s) not to be used, you can define a substitute key if the table has columns that always contain unique values. You define this substitute key by including a KEYCOLS clause within the Extract TABLE parameter and the Replicat MAP parameter. The specified key will override any existing primary or unique key that Oracle GoldenGate finds.

1.1.4 Configuring the database to log key values

GGSCI provides commands to configure the source database to log the appropriate key values whenever it logs a row change, so that they are available to Oracle GoldenGate in the redo record. By default, the database only logs column values that are changed. The appropriate command must be issued before you start Oracle GoldenGate processing.

--GGSCI can configure what the source database writes to the log. By default only changed column values are logged, so the appropriate command must be issued before the GG processes are started.

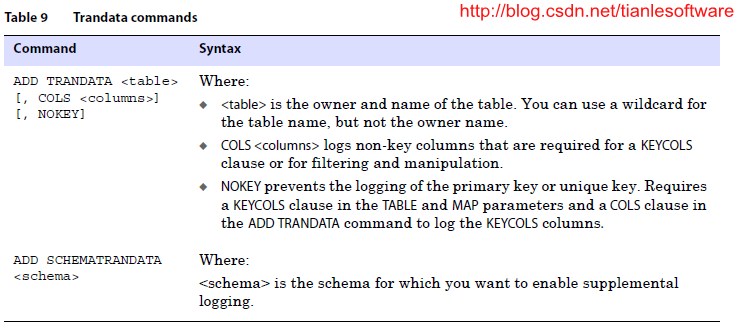

1.1.4.1 ADD TRANDATA

The ADD TRANDATA command enables table-level logging and is generally appropriate if you will not be using the Oracle GoldenGate DDL replication feature, or if you want to use that feature and your data meets certain requirements documented for this command in the Windows and UNIX Reference Guide.

1.1.4.2 ADD SCHEMATRANDATA

The ADD SCHEMATRANDATA command enables schema-level logging. It logs more key values to the redo log than ADD TRANDATA does, and it affects all of the current and future tables of a given schema. Because ADD SCHEMATRANDATA logs key values atomically when each DDL operation occurs, it is the preferred logging method to use if you will be using the Oracle GoldenGate DDL replication feature. (If the database system can tolerate the extra redo data, you can also use ADD SCHEMATRANDATA without using the DDL replication feature.)

To initiate the logging of key values

1. On the source system, run GGSCI from the Oracle GoldenGate directory.

2. In GGSCI, issue the following command to log on to the database.

DBLOGIN USERID <user>, PASSWORD <password>

Where: <user> is a database user who has the privilege to enable table-level or schema-level supplemental logging, depending on the logging command that you will be choosing from Table 9, and <password> is that user’s password.

3. Issue the ADD TRANDATA or ADD SCHEMATRANDATA command.

4. Log in to SQL*Plus as a user with ALTER SYSTEM privilege, and then issue the following command to enable minimal supplemental logging at the database level. This logging is required to process updates to primary keys and chained rows.

ALTER DATABASE ADD SUPPLEMENTAL LOG DATA;

5. To start the supplemental logging, switch the log files.

ALTER SYSTEM SWITCH LOGFILE;

6. Verify that supplemental logging is enabled at the database level with this command:

SELECT SUPPLEMENTAL_LOG_DATA_MIN FROM V$DATABASE;

(1) For Oracle 9i, the output must be YES.

(2) For Oracle 10g, the output must be YES or IMPLICIT.

7. If using ADD TRANDATA with the COLS option, create a unique index for those columns on the target to optimize row retrieval. If you are logging those columns as a substitute key for a KEYCOLS clause, make a note to add the KEYCOLS clause to the TABLE and MAP statements when you configure the Oracle GoldenGate processes.

1.1.5 Limiting row changes in tables that do not have a key

If a target table does not have a primary key or a unique key, duplicate rows can exist. In this case, Oracle GoldenGate could update or delete too many target rows, causing the source and target data to go out of synchronization without error messages to alert you.

To limit the number of rows that are updated, use the DBOPTIONS parameter with the LIMITROWS option in the Replicat parameter file. LIMITROWS can increase the performance of Oracle GoldenGate on the target system because only one row is processed.
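The risk described above, and the effect of limiting a change to one row, can be illustrated with a keyless table that holds duplicates. This is a sketch only: sqlite3 stands in for the target database, the table orders_targ and its columns are hypothetical, and the rowid subquery is merely one way to restrict a delete to a single row in SQLite. It says nothing about how Replicat implements LIMITROWS internally.

```python
import sqlite3


def rows_left_after_delete(limit_one):
    """Delete one logical source row on a keyless target; return rows left."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders_targ (customer TEXT, amount INTEGER)")
    conn.executemany(
        "INSERT INTO orders_targ VALUES (?, ?)",
        [("acme", 100), ("acme", 100), ("zenith", 75)],  # two duplicates
    )
    where = "customer = 'acme' AND amount = 100"
    if limit_one:
        # Restrict the delete to a single matching row.
        sql = (
            "DELETE FROM orders_targ WHERE rowid = "
            f"(SELECT rowid FROM orders_targ WHERE {where} LIMIT 1)"
        )
    else:
        # An all-column WHERE clause matches every duplicate.
        sql = f"DELETE FROM orders_targ WHERE {where}"
    conn.execute(sql)
    remaining = conn.execute("SELECT COUNT(*) FROM orders_targ").fetchone()[0]
    conn.close()
    return remaining
```

rows_left_after_delete(False) leaves 1 row because both duplicates are removed, while rows_left_after_delete(True) leaves 2, matching a source on which only one of the duplicate rows was deleted.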

1.2 Configuring character sets

To ensure accurate character representation from one database to another, the following must be true:

(1) The character set of the target database must be a superset of the character set of the source database.

(2) If your client applications use different character sets, the database character set must be a superset of the character sets of the client applications. In this configuration, every character is represented when converting from a client character set to the database character set.
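The superset requirement can be made concrete with a quick check of whether a piece of text survives conversion into a given character set. This is an illustrative sketch that uses Python codec names ("utf-8", "ascii") as rough stand-ins for Oracle character sets such as AL32UTF8 and US7ASCII; that mapping is an assumption, and Oracle performs its own conversions.

```python
def can_represent(text, charset):
    """Return True if every character of text exists in charset."""
    try:
        text.encode(charset)
        return True
    except UnicodeEncodeError:
        return False


def convert_lossy(text, charset):
    """Convert text into charset, replacing unrepresentable characters."""
    return text.encode(charset, errors="replace").decode(charset)
```

With these helpers, can_represent("café", "utf-8") is True but can_represent("café", "ascii") is False, and convert_lossy("café", "ascii") yields "caf?". A target character set that is not a superset of the source loses data in the same way.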

1.2.1 To view globalization settings

To determine the globalization settings of the database and whether it is using byte or character semantics, use the following commands in SQL*Plus:

SHOW PARAMETER NLS_LANGUAGE

SHOW PARAMETER NLS_TERRITORY

SELECT name,value$ from SYS.PROPS$ WHERE name = 'NLS_CHARACTERSET';

SHOW PARAMETER NLS_LENGTH_SEMANTICS

1.2.2 To view globalization settings from GGSCI

The VIEW REPORT <group> command in GGSCI shows the current database language and character settings and indicates whether or not NLS_LANG is set.

1.2.3 To set NLS_LANG

1. Set the NLS_LANG parameter according to the documentation for your database version and operating system. On UNIX systems, you can set NLS_LANG through the operating system or by using a SETENV parameter in the Extract and Replicat parameter files. For best results, set NLS_LANG from the parameter file, where it is less likely to be changed than at the system level.

NLS_LANG must be set in the format of:

<NLS_LANGUAGE>_<NLS_TERRITORY>.<NLS_CHARACTERSET>

This is an example in UNIX, using the SETENV parameter in the Oracle GoldenGate parameter file:

SETENV (NLS_LANG = "AMERICAN_AMERICA.AL32UTF8")

2. Stop and then start the Oracle GoldenGate Manager process so that the processes recognize the new variable.
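Before placing a value in a parameter file, it can help to confirm that it matches the three-part NLS_LANG format shown above. The helper below is a hypothetical convenience, not part of Oracle GoldenGate; it checks only the shape of the string and does not verify that the language, territory, or character set names are ones Oracle recognizes.

```python
import re

# <NLS_LANGUAGE>_<NLS_TERRITORY>.<NLS_CHARACTERSET>
_NLS_LANG = re.compile(
    r"^(?P<language>[^_.]+)_(?P<territory>[^.]+)\.(?P<charset>.+)$"
)


def parse_nls_lang(value):
    """Split a full NLS_LANG value into its three components."""
    match = _NLS_LANG.match(value)
    if match is None:
        raise ValueError(f"not in LANGUAGE_TERRITORY.CHARSET form: {value!r}")
    return match.groupdict()
```

parse_nls_lang("AMERICAN_AMERICA.AL32UTF8") returns the language AMERICAN, the territory AMERICA, and the character set AL32UTF8, while a value that lacks the character set part, such as "AMERICAN_AMERICA", raises ValueError.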

NOTE:

Oracle GoldenGate reports Oracle error messages in U.S. English (AMERICAN_AMERICA), regardless of the actual character set of the reporting database. Oracle GoldenGate performs any necessary language conversion internally without changing the language configuration of the database.

1.3 Adjusting cursors

The Extract process maintains cursors for queries that fetch data and also for SQLEXEC operations. Without enough cursors, Extract must age more statements. Extract maintains as many cursors as permitted by the Extract MAXFETCHSTATEMENTS parameter. You can increase the value of this parameter as needed. Make an appropriate adjustment to the maximum number of open cursors that are permitted by the database.

1.4 Setting fetch options

To process certain update records from the redo log, Oracle GoldenGate fetches additional row data from the source database. Oracle GoldenGate fetches data for the following:

(1) Operations that contain LOBs. (Fetching of LOBs does not apply to Oracle 10g and later databases, because LOBs are captured from the redo log of those versions.)

(2) User-defined types

(3) Nested tables

(4) XMLType objects

By default, Oracle GoldenGate uses Flashback Query to fetch the values from the undo (rollback) tablespaces. That way, Oracle GoldenGate can reconstruct a read-consistent row image as of a specific time or SCN to match the redo record.

--By default, GG uses Flashback Query to fetch values from undo.

To configure the database for best fetch results

For best fetch results, configure the source database as follows:

--For better fetch results, configure the source database as follows:

1. Set a sufficient amount of undo retention by setting the Oracle initialization parameters UNDO_MANAGEMENT and UNDO_RETENTION as follows (in seconds).

UNDO_MANAGEMENT=AUTO

UNDO_RETENTION=86400

UNDO_RETENTION can be adjusted upward in high-volume environments.

2. Calculate the space that is required in the undo tablespace by using the following formula.

<undo space> = <UNDO_RETENTION> * <UPS> + <overhead>

Where:

(1) <undo space> is the number of undo blocks.

(2) <UNDO_RETENTION> is the value of the UNDO_RETENTION parameter (in seconds).

(3) <UPS> is the number of undo blocks for each second.

(4) <overhead> is the minimal overhead for metadata (transaction tables, etc.).

Use the system view V$UNDOSTAT to estimate <UPS> and <overhead>.

3. For tables that contain LOBs, do one of the following:

(1) Set the LOB storage clause to RETENTION. This is the default for tables that are created when UNDO_MANAGEMENT is set to AUTO.

--If LOB storage is set to RETENTION, UNDO_MANAGEMENT is set to AUTO.

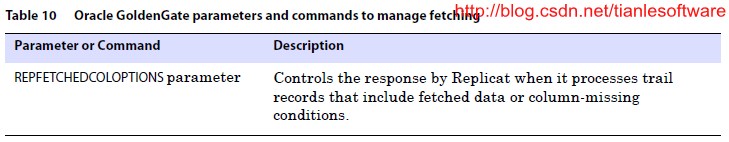

(2) If using PCTVERSION instead of RETENTION, set PCTVERSION to an initial value of 25. You can adjust it based on the fetch statistics that are reported with the STATS EXTRACT command (see Table 10). If the value of the STAT_OPER_ROWFETCH_CURRENTBYROWID or STAT_OPER_ROWFETCH_CURRENTBYKEY field in these statistics is high, increase PCTVERSION in increments of 10 until the statistics show low values.

--If you use PCTVERSION, set it to 25. For the difference between PCTVERSION and RETENTION for LOBs, see my blog:

http://www.cndba.cn/Dave/article/1122

4. Grant the following privileges to the Oracle GoldenGate Extract user:

GRANT FLASHBACK ANY TABLE TO <db_user>

Or ...

GRANT FLASHBACK ON <owner.table> TO <db_user>
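The undo space formula in step 2 is simple arithmetic, and a worked example may help with sizing. The figures used in the usage note (10 undo blocks per second, 24 blocks of overhead, an 8 KB block size) are made-up assumptions for illustration; real values should be estimated from V$UNDOSTAT and the database block size.

```python
def undo_blocks_needed(undo_retention_s, undo_blocks_per_s, overhead_blocks):
    """<undo space> = <UNDO_RETENTION> * <UPS> + <overhead>, in undo blocks."""
    return undo_retention_s * undo_blocks_per_s + overhead_blocks


def blocks_to_bytes(blocks, block_size=8192):
    """Convert a block count to bytes (block_size is an assumed default)."""
    return blocks * block_size
```

With UNDO_RETENTION=86400, an assumed rate of 10 undo blocks per second, and 24 blocks of overhead, undo_blocks_needed(86400, 10, 24) gives 864024 blocks; blocks_to_bytes converts that into a tablespace size for a given block size.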

Oracle GoldenGate provides the following parameters to manage fetching.

1.5 Replicating TDE-encrypted data

Oracle GoldenGate supports the Transparent Data Encryption (TDE) at the column and tablespace level.

(1) Column-level encryption is supported for all versions of 10.2.0.5, 11.1, and 11.2.

(2) Tablespace-level encryption is supported for all versions of 10.2.0.5 and 11.1.0.2.

1.5.1 Required database patches

To support TDE, one of the following Oracle patches must be applied to the database, depending on the version.

(1) Patch 10628966 for 10.2.0.5.2 PSU

(2) Patch 10628963 for 11.1.0.7.6 PSU

(3) Patch 10628961 for 11.2.0.2

1.5.2 Overview of TDE support

TDE support involves two kinds of keys:

(1) The encrypted key can be a table key (column-level encryption), an encrypted redo log key (tablespace-level encryption), or both. A key is shared between the Oracle server and Extract.

(2) The decryption key is a password known as the shared secret that is stored securely in both domains. Only a party that has possession of the shared secret can decrypt the table and redo log keys.

The encrypted keys are delivered to the Extract process by means of built-in PL/SQL code. Extract uses the shared secret to decrypt the data. Extract never handles the wallet Master Key itself, nor is it aware of the Master Key password. Those remain within the Oracle server security framework.

Extract never writes the decrypted data to any file other than a trail file, not even a discard file (specified with the DISCARDFILE parameter). The word “ENCRYPTED” will be written to any discard file that is in use.

The impact of this feature on Oracle GoldenGate performance should mirror the impact of decryption on database performance. Other than a slight increase in Extract startup time, there should be a minimal effect on performance from replicating TDE data.

1.5.3 Requirements for replicating TDE

(1) If DDL will ever be performed on an encrypted table, or if table keys will ever be rekeyed, you must either quiesce the table while the DDL is performed or enable Oracle GoldenGate DDL support. It is more practical to have the DDL environment active so that it is ready, because a re-key usually is a response to a security violation and must be performed immediately. To install the Oracle GoldenGate DDL environment, see the instructions in this guide.

(2) To maintain high security standards, the Oracle GoldenGate Extract process should run as part of the Oracle User (the user that runs the Oracle Server). That way, the keys are protected in memory by the same privileges as the Oracle User.

(3) The Extract process must run on the same machine as the database installation.

1.5.4 Recommendations for replicating TDE

Extract decrypts the TDE data and writes it to the trail as clear text. To maintain data security throughout the path to the target tables, it is recommended that you also deploy Oracle GoldenGate security features to:

(1) encrypt the data in the trails

(2) encrypt the data in transit across TCP/IP

1.5.5 Configuring TDE support

The following outlines the steps that the Oracle Security Officer and the Oracle GoldenGate Administrator take to establish communication between the Oracle server and the Extract process.

1.5.5.1 Oracle Security Officer and Oracle GoldenGate Administrator

Agree on a shared secret (password) that meets or exceeds Oracle password standards. This password must not be known by anyone else.

1.5.5.2 Oracle Security Officer

1. Issue the following MKSTORE command to create an “ORACLEGG” entry in the Oracle wallet. ORACLEGG must be the name of the key. Do not supply the shared secret on the command line; instead, supply it when prompted.

mkstore -wrl ./ -createEntry ORACLE.SECURITY.CL.ENCRYPTION.ORACLEGG

Enter <secret>

2. (Oracle RAC 10gR2 and 11gR1) Copy the wallet that contains the shared secret to each node, and then reopen the wallets.

NOTE:

With Oracle 11gR2, there is one wallet in a shared location, with synchronized access among all nodes.

1.5.5.3 Oracle GoldenGate Administrator

1. Compile the dbms_internal_cklm.plb package that is installed in the Oracle GoldenGate installation directory.

2. Grant EXEC privilege on the dbms_internal_cklm.get_key procedure to the Extract database user. This procedure facilitates sharing of the encrypted keys between the Oracle Server and Extract.

3. Run GGSCI and issue the ENCRYPT PASSWORD command to encrypt the shared secret so that it is obfuscated within the Extract parameter file. This is a security requirement.

ENCRYPT PASSWORD takes the clear-text string as input and provides options for encrypting it with an Oracle GoldenGate-generated default key or a user-defined key that is stored in a secure local ENCKEYS file. For instructions, see the security chapter of the Oracle GoldenGate Windows and UNIX Administrator’s Guide.

4. In the Extract parameter file, use the DBOPTIONS parameter with the DECRYPTPASSWORD option. As input, supply the encrypted shared secret and the Oracle GoldenGate-generated or user-defined decryption key. For syntax options, see the Oracle GoldenGate Windows and UNIX Reference Guide.

5. Close, and then open, the Oracle wallet before you start Extract. This step works around issues with caching that can cause an ORA-28360 (security module) error.

NOTE:

Close and then open the wallet whenever a shared secret is created or changed.

1.5.6 Changing the Oracle shared secret

Use this procedure to change the shared secret that supports Oracle Transparent Data Encryption. You can change the shared secret when needed, but “ORACLEGG” must remain the name of the key.

1. Stop the Extract process.

STOP EXTRACT <group>

2. Issue the following MKSTORE command to modify the “ORACLEGG” entry in the Oracle wallet. Do not supply the new shared secret on the command line; instead, supply it when prompted.

mkstore -wrl ./ -modifyEntry ORACLE.SECURITY.CL.ENCRYPTION.ORACLEGG

Enter <secret>

NOTE:

To change the shared secret, the parameter “modifyEntry” is used instead of “createEntry,” because you are modifying an existing wallet entry.

3. Use the ENCRYPT PASSWORD command in GGSCI to encrypt the new shared secret. For instructions, see the security chapter of the Oracle GoldenGate Windows and UNIX Administrator’s Guide.

4. Replace the old encrypted shared secret and decryption key with the new ones in the Extract parameter file by modifying the DECRYPTPASSWORD option of DBOPTIONS. For syntax options, see the Oracle GoldenGate Windows and UNIX Reference Guide.

5. Close, and then open, the Oracle wallet before you start Extract. This process works around issues with caching that can cause an ORA-28360 error.

6. Start Extract.

START EXTRACT <group>

1.6 Ensuring correct handling of Oracle Spatial objects

To replicate georaster tables (tables that contain one or more columns of SDO_GEORASTER object type), follow these instructions to configure Oracle GoldenGate to process them correctly.

1.6.1 Mapping the georaster tables

You must create a TABLE statement and a MAP statement for the georaster tables and also for the related raster data tables.

1.6.2 Sizing the XML memory buffer

Evaluate your spatial data before starting Oracle GoldenGate processes. If the METADATA attribute of the SDO_GEORASTER data type in any of the values exceeds 1 MB, you must increase the size of the memory buffer that stores the embedded SYS.XMLTYPE attribute of the SDO_GEORASTER data type. If the data exceeds the buffer size, Extract abends. The size of the XML buffer is controlled by the DBOPTIONS parameter with the XMLBUFSIZE option.

1.6.3 Handling triggers on the georaster tables

Every georaster table has a trigger that affects the raster data table. To ensure the integrity of the target georaster tables, do the following:

(1) Keep the trigger enabled on both source and target to ensure consistency of the spatial data.

(2) Use the REPERROR option of the MAP parameter to handle “ORA-01403 No data found” errors.

The error is caused by redundant deletes on the target. When a row in the source georaster table is deleted, the trigger cascades the delete to the raster data table. Both deletes are replicated. The replicated parent delete triggers the cascaded (child) delete on the target. When the replicated child delete arrives, it is redundant and generates the error.

To handle redundant deletes with REPERROR

1. Use a REPERROR statement in each MAP statement that contains a raster data table.

2. Use Oracle error 1403 as the SQL error.

3. Use any of the response options as the error handling.

See the following examples for ways you can configure the error handling.

Example:

A sufficient way to handle the errors is simply to use REPERROR with DISCARD to discard the cascaded delete that triggers them. The trigger on the target georaster table performs the delete to the raster data table, so the replicated one is not needed.

MAP geo.st_rdt, TARGET geo.st_rdt, REPERROR (-1403, DISCARD) ;

Example:

If you need to keep an audit trail of the error handling, use REPERROR with EXCEPTION to invoke exceptions handling. For this, you create an exceptions table and map the source raster data table twice:

(1) once to the actual target raster data table (with REPERROR handling the 1403 errors).

(2) again to the exceptions table, which captures the 1403 error and other relevant information by means of a COLMAP clause.

When using exceptions handling like this, you must use the ALLOWDUPTARGETMAP parameter to keep Replicat from abending on the dual source mapping.

This example provides a Replicat parameter file that contains the required parameters, and it provides a sample script that creates an exceptions table. Note that a macro is used in the parameter file to populate the TARGET and COLMAP portions of the exceptions MAP statements. The required INSERTALLRECORDS and EXCEPTIONSONLY parameters are also included in the macro. The macro eliminates the need to type the same information over again for each of the MAP statements.

Replicat parameter file

REPLICAT rgeoras

SETENV (ORACLE_SID=tgt111)

USERID geo, PASSWORD xxxxx, ENCRYPTKEY DEFAULT

ASSUMETARGETDEFS

DISCARDFILE ./dirrpt/rgeoras.dsc, purge

ALLOWDUPTARGETMAP

-- This starts the macro

MACRO #exception_handling

BEGIN

, TARGET geo.exceptions

, COLMAP ( rep_id = "1"

, table_name = @GETENV ("GGHEADER", "TABLENAME")

, errno = @GETENV ("LASTERR", "DBERRNUM")

, dberrmsg = @GETENV ("LASTERR", "DBERRMSG")

, optype = @GETENV ("LASTERR", "OPTYPE")

, errtype = @GETENV ("LASTERR", "ERRTYPE")

, logrba = @GETENV ("GGHEADER", "LOGRBA")

, logposition = @GETENV ("GGHEADER", "LOGPOSITION")

, committimestamp = @GETENV ("GGHEADER", "COMMITTIMESTAMP")

)

, INSERTALLRECORDS

, EXCEPTIONSONLY ;

END;

-- This ends the macro

EXTTRAIL ./dirdat/eg

-- Mapping of regular and georaster tables. Requires no exception handling.

-- Replicat abends on errors, which is its default error handling.

MAP geo.blob_table, TARGET geo.blob_table ;

MAP geo.georaster_table, TARGET geo.georaster_table ;

MAP geo.georaster_table2, TARGET geo.georaster_table2 ;

MAP geo.georaster_tab1, TARGET geo.georaster_tab1 ;

MAP geo.georaster_tab2, TARGET geo.georaster_tab2 ;

MAP geo.mv_georaster_table1, TARGET geo.mv_georaster_table1 ;

-- Mapping of raster data tables. Requires exception handling for 1403 errors.

MAP geo.st_rdt_3_table, TARGET geo.st_rdt_3_table, REPERROR (-1403, EXCEPTION) ;

MAP geo.st_rdt_3_table #exception_handling()

MAP geo.rdt_1_table, TARGET geo.rdt_1_table, REPERROR (-1403, EXCEPTION) ;

MAP geo.rdt_1_table #exception_handling()

MAP geo.rdt_2_table, TARGET geo.rdt_2_table, REPERROR (-1403, EXCEPTION) ;

MAP geo.rdt_2_table #exception_handling()

MAP geo.mv_rdt_1_table, TARGET geo.mv_rdt_1_table, REPERROR (-1403, EXCEPTION) ;

MAP geo.mv_rdt_1_table #exception_handling()

Sample script that creates an exceptions table

drop table exceptions

/

create table exceptions

( rep_id number

, table_name varchar2(61)

, errno number

, dberrmsg varchar2(4000)

, optype varchar2(20)

, errtype varchar2(20)

, logrba number

, logposition number

, committimestamp timestamp

)

/

NOTE:

When using an exceptions table for numerous tables, someone should monitor its growth.

1.7 Replicating TIMESTAMP with TIME ZONE

Oracle GoldenGate supports the capture and replication of TIMESTAMP WITH TIME ZONE as a UTC offset (TIMESTAMP '2011-01-01 8:00:00 -8:00') but abends on TIMESTAMP WITH TIME ZONE as TZR (TIMESTAMP '2011-01-01 8:00:00 US/Pacific') by default.

To support TIMESTAMP WITH TIME ZONE as TZR, use the Extract parameter TRANLOGOPTIONS with one of the following:

(1) INCLUDEREGIONID to replicate TIMESTAMP WITH TIME ZONE as TZR from an Oracle source to an Oracle target of the same version or later.

(2) INCLUDEREGIONIDWITHOFFSET to replicate TIMESTAMP WITH TIME ZONE as TZR from an Oracle source that is at least v10g to an earlier Oracle target, or to a non-Oracle target.

These options allow replication to Oracle versions that do not support TIMESTAMP WITH TIME ZONE as TZR and to database systems that only support time zone as a UTC offset.

NOTE:

Oracle GoldenGate does not support TIMESTAMP WITH TIME ZONE as TZR for initial loads, situations where the column must be fetched from the database, or for the SQLEXEC feature. In these cases, the region ID is converted to a time offset by the Oracle database when the column is selected. Replicat replicates the column data as date and time data with a time offset value.

1.8 Controlling Replicat COMMIT options on an Oracle target

When an online Replicat group is configured to use a checkpoint table (recommended), it takes advantage of the asynchronous COMMIT feature that was introduced in Oracle 10gR2.

When applying a transaction to the Oracle target, Replicat includes the NOWAIT option in the COMMIT statement. This improves performance by allowing Replicat to continue processing immediately after applying the COMMIT, while the database engine logs the transaction in the background.

The checkpoint table supports data consistency with asynchronous COMMITs because it makes the Replicat checkpoint part of the Replicat transaction itself. The checkpoint either succeeds with, or fails with, that transaction. Asynchronous COMMIT is also the default for initial loads and batch processing.

You can disable the default asynchronous COMMIT behavior by using the DBOPTIONS parameter with the DISABLECOMMITNOWAIT option in the Replicat parameter file. If a checkpoint table is not used for a Replicat group, the checkpoints are maintained in a file on disk, and Replicat uses the synchronous COMMIT option by default (COMMIT with WAIT), which forces Replicat to wait until the transaction is logged before it can continue processing. This prevents inconsistencies that can result after a database failure, where the state of the transaction that is recorded in the checkpoint file might be different than its state after the recovery.

1.9 Supporting delivery to Oracle Exadata with EHCC compressed data

Oracle GoldenGate supports delivery to Oracle Exadata with Hybrid Columnar Compression (EHCC) enabled for insert operations. To ensure that this data is applied correctly, use the INSERTAPPEND parameter in the Replicat parameter file. INSERTAPPEND causes Replicat to use an APPEND hint for inserts. Without this hint, the record will be inserted uncompressed.

NOTE:

Capture from Exadata is not supported at this time.

1.10 Managing LOB caching on a target Oracle database

Replicat writes LOB data to the target database in fragments. To minimize the effect of this I/O on the system, Replicat enables Oracle’s LOB caching mechanism, caches the fragments in a buffer, and performs a write only when the buffer is full. For example, if the buffer is 25,000 bytes in size, Replicat only performs I/O four times given a LOB of 100,000 bytes.
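The arithmetic behind that example is a ceiling division. The helper below is a rough estimate, assuming one write per full buffer plus one final write for any remainder; that flush behavior is an assumption of this sketch rather than a documented detail.

```python
def lob_write_calls(lob_bytes, buffer_bytes):
    """Estimate the number of buffered writes needed for one LOB."""
    if buffer_bytes <= 0:
        raise ValueError("buffer_bytes must be positive")
    # Integer ceiling division: a partly filled last buffer still costs a write.
    return (lob_bytes + buffer_bytes - 1) // buffer_bytes
```

lob_write_calls(100_000, 25_000) gives 4, matching the figure in the text, and doubling the buffer to 50,000 bytes halves the count to 2.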

(1) To optimize the buffer size to the size of your LOB data, use the DBOPTIONS parameter with the LOBWRITESIZE <size> option. The higher the value, the fewer the I/O calls made by Replicat to write one LOB.

(2) To disable Oracle’s LOB caching, use the DBOPTIONS parameter with the DISABLELOBCACHING option. When LOB caching is disabled, whatever is sent by Replicat to Oracle in one I/O call is written directly to the database media.

1.11 Additional requirements for Oracle RAC

This topic covers additional configuration requirements that apply when Oracle GoldenGate will be operating in an Oracle Real Application Clusters (RAC) environment.

1.11.1 General requirements

(1) All nodes in the RAC cluster must have synchronized system clocks. The clocks must be synchronized with the clock on the system where Extract is executed. Oracle GoldenGate compares the time of the local system to the commit timestamps to make critical decisions. For information about synchronizing system clocks, consult www.ntp.org or your systems administrator.

(2) All nodes in the cluster must have the same COMPATIBLE parameter setting.

The following table shows some Oracle GoldenGate parameters that are of specific benefit in Oracle RAC.

1.11.2 Special procedures on RAC

(1) If the primary database instance against which Oracle GoldenGate is running stops or fails for any reason, Extract abends. To resume processing, you can restart the instance or mount the Oracle GoldenGate binaries to another node where the database is running and then restart the Oracle GoldenGate processes. Stop the Manager process on the original node before starting Oracle GoldenGate processes from another node.

(2) Whenever the number of redo threads changes, the Extract group must be dropped and re-created.

(3) To write SQL operations to the trail, Extract must verify that there are no other operations from other RAC nodes that precede the ones in the redo log that it is reading. For example, if the log from Node 1 contains operations that were performed from 1:00 a.m. to 2:00 a.m., and the log from Node 2 contains operations that were performed from 1:30 a.m. to 2:30 a.m., then only the operations up to, and including, the 2:00 a.m. one can be moved to the server where the main Extract is coordinating the redo data. Extract must ensure that there are no more operations between 2:00 a.m. and 2:30 a.m. that need to be captured.

(4) In active-passive environments, the preceding requirement means that you might need to perform some operations and archive log switching on the passive node to ensure that operations from the active node are passed to the passive node. This eliminates any issues that could arise from a slow archiver process, failed network links, and other latency issues caused by moving archive logs from the Oracle nodes to the server where the main Extract is coordinating the redo data.

(5) To process the last transaction in a RAC cluster before shutting down Extract, insert a dummy record into a source table that Oracle GoldenGate is replicating, and then switch log files on all nodes. This updates the Extract checkpoint and confirms that all available archive logs can be read. It also confirms that all transactions in those archive logs are captured and written to the trail in the correct order.
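The ordering rule in item (3) amounts to taking the earliest "end of log" time across all nodes. The sketch below illustrates that idea only; it is not how Extract is implemented, and the function name `safe_capture_point` and the node labels are hypothetical.

```python
from datetime import time


def safe_capture_point(log_end_times: dict) -> time:
    """Return the latest time up to which every node's redo is known.

    Operations later than this point cannot be released yet, because a
    node whose log ends earlier might still hold operations that
    precede them.
    """
    if not log_end_times:
        raise ValueError("at least one node is required")
    return min(log_end_times.values())


# The example from the text: Node 1's log ends at 2:00 a.m. and
# Node 2's log ends at 2:30 a.m.
ends = {"node1": time(2, 0), "node2": time(2, 30)}
print(safe_capture_point(ends))
```

In this model an idle node holds the safe point back, which is why items (4) and (5) call for log switches on all nodes.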

1.12 Additional requirements for ASM

This topic covers additional configuration requirements that apply when Oracle GoldenGate operates in an Oracle Automatic Storage Management (ASM) instance.

1.12.1 Ensuring ASM connectivity

To ensure that the Oracle GoldenGate Extract process can connect to an ASM instance, do the following.

(1) List the ASM instance in the tnsnames.ora file. The recommended method for connecting to an ASM instance when Oracle GoldenGate is running on the database host machine is to use the bequeath (BEQ) protocol.

NOTE:

A BEQ connection does not work when using a remote Extract configuration. In that case, configure TNSNAMES with the TCP/IP protocol.

(2) If using the TCP/IP protocol, verify that the Oracle listener is listening for new connections to the ASM instance. The listener.ora file must contain an entry similar to the following.

SID_LIST_LISTENER_ASM=

(SID_LIST=

(SID_DESC=

(GLOBAL_DBNAME= ASM)

(ORACLE_HOME= /u01/app/grid)

(SID_NAME= +ASM1)

)

)

NOTE:

The BEQ protocol does not require a listener.

1.12.2 Optimizing the ASM connection

Use the TRANLOGOPTIONS parameter with the DBLOGREADER option in the Extract parameter file if the ASM instance is one of the following versions:

(1) Oracle 10.2.0.5 or later 10g R2 versions

(2) Oracle 11.2.0.2 or later 11g R2 versions

A newer ASM API is available in those releases (but not in Oracle 11g R1 versions) that uses the database server to access the redo and archive logs. When used, this API enables Extract to use a read buffer of up to 4 MB. A larger buffer may improve the performance of Extract when the redo rate is high. You can use the DBLOGREADERBUFSIZE option of TRANLOGOPTIONS to specify a buffer size.
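The version rule above can be written as a small check. This is a sketch that encodes only the two release lines named in this section; the helper names `parse_version` and `dblogreader_applies` are hypothetical, and releases that this section does not mention are deliberately reported as not covered.

```python
def parse_version(text: str) -> tuple:
    """Turn a dotted version string such as '11.2.0.3' into a tuple of ints."""
    return tuple(int(part) for part in text.split("."))


def dblogreader_applies(version: str) -> bool:
    """Return True if the ASM version is one that this section lists.

    Covered: 10.2.0.5 or later 10g R2, and 11.2.0.2 or later 11g R2.
    Anything else (including 11g R1) returns False, because this
    section makes no claim about it.
    """
    v = parse_version(version)
    if v[:2] == (10, 2):
        return v >= (10, 2, 0, 5)
    if v[:2] == (11, 2):
        return v >= (11, 2, 0, 2)
    return False


for candidate in ("10.2.0.4", "10.2.0.5", "11.1.0.7", "11.2.0.3"):
    print(candidate, dblogreader_applies(candidate))
```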

二. Preparing DBFS for active-active propagation with Oracle GoldenGate

2.1 Supported operations and prerequisites

Oracle GoldenGate for DBFS supports the following:

(1) Supported DDL (like TRUNCATE or ALTER) on DBFS objects, except for CREATE statements on the DBFS objects. CREATE on DBFS must be excluded from the configuration, as must any schemas that will hold the created DBFS objects. CREATE statements are excluded because the metadata for DBFS must be properly populated in the SYS dictionary tables (which are themselves excluded from Oracle GoldenGate capture by default).

(2) Capture and replication of DML on the tables that underlie the DBFS filesystem.

The procedures that follow assume that Oracle GoldenGate is configured properly to support an active-active configuration. This means that it must be:

(1) Installed according to the instructions in this guide.

(2) Configured according to the instructions in the Oracle GoldenGate Windows and UNIX Administrator’s Guide.

2.2 Applying the required patch

Apply the Oracle DBFS patch for bug-9651229 on both databases. To determine if the patch is installed, run the following query:

connect / as sysdba

select procedure_name from dba_procedures

where object_name = 'DBMS_DBFS_SFS_ADMIN'

and procedure_name = 'PARTITION_SEQUENCE';

The query should return a single row. Anything else indicates that the properly patched version of DBFS is not available on your database.

2.3 Examples used in these procedures

The following procedures assume two systems and configure the environment so that DBFS users on both systems see the same DBFS files, directories, and contents that are kept in synchronization with Oracle GoldenGate. It is possible to extend these concepts to support three or more peer systems.

2.4 Partitioning the DBFS sequence numbers

DBFS uses an internal sequence-number generator to construct unique names and unique IDs. These steps partition the sequences into distinct ranges to ensure that there are no conflicts across the databases. After this is done, further DBFS operations (both creation of new filesystems and subsequent filesystem operations) can be performed without conflicts of names, primary keys, or IDs during DML propagation.

1. Connect to each database as sysdba.

2. Issue the following query on each database.

select last_number

from dba_sequences

where sequence_owner = 'SYS'

and sequence_name = 'DBFS_SFS_$FSSEQ';

3. From this query, choose the maximum value of LAST_NUMBER across both systems, or pick a high value that is significantly larger than the current value of the sequence on either system.

4. Substitute this value (“maxval” is used here as a placeholder) in both of the following procedures. These procedures logically index each system as myid=0 and myid=1.

Node 1

declare

begin

dbms_dbfs_sfs_admin.partition_sequence(nodes => 2, myid => 0,

newstart => :maxval);

commit;

end;

/

Node 2

declare

begin

dbms_dbfs_sfs_admin.partition_sequence(nodes => 2, myid => 1,

newstart => :maxval);

commit;

end;

/

NOTE:

Notice the difference in the value specified for the myid parameter. These are the different index values.

For a multi-way configuration among three or more databases, you could make the following alterations:

(1) Adjust the maximum value that is set for “maxval” upward appropriately, and use that value on all nodes.

(2) Vary the value of “myid” in the procedure from 0 for the first node, 1 for the second node, 2 for the third one, and so on.

5. (Recommended) After (and only after) the DBFS sequence generator is partitioned, create a new DBFS filesystem on each system, and use only these filesystems for DML propagation with Oracle GoldenGate. See “Configuring the DBFS filesystem”.

NOTE:

DBFS filesystems that were created before the patch for bug-9651229 was applied or before the DBFS sequence number was adjusted can be configured for propagation, but that requires additional steps not described in this document. If you must retain old filesystems, open a service request with Oracle Support.
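To see why a shared starting value plus a distinct myid per node avoids collisions, the following sketch models one possible partitioning scheme. The internal behavior of partition_sequence is not described in this document, so the interleaving used here (each node starts at newstart + myid and steps by the number of nodes) is purely an illustrative assumption, and both function names are hypothetical.

```python
from itertools import islice


def choose_newstart(last_numbers):
    """Step 3: take the maximum LAST_NUMBER seen across all systems."""
    return max(last_numbers)


def node_ids(newstart, nodes, myid):
    """Yield IDs for one node under an ASSUMED interleaved scheme.

    This is a model for illustration only, not the documented
    behavior of dbms_dbfs_sfs_admin.partition_sequence.
    """
    if not 0 <= myid < nodes:
        raise ValueError("myid must be in the range 0..nodes-1")
    value = newstart + myid
    while True:
        yield value
        value += nodes


# Hypothetical LAST_NUMBER values returned by the query on two systems.
newstart = choose_newstart([1200, 3400])
node1 = list(islice(node_ids(newstart, nodes=2, myid=0), 5))
node2 = list(islice(node_ids(newstart, nodes=2, myid=1), 5))
print(node1)
print(node2)
print(set(node1).isdisjoint(node2))
```

Under this model, adding a third node only requires nodes=3 and a third myid value, which mirrors the multi-way adjustments listed in step 4.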

2.5 Configuring the DBFS filesystem

To replicate DBFS filesystem operations, use a configuration that is similar to the standard bi-directional configuration for DML.

(1) Use matched pairs of identically structured tables.

(2) Allow each database to have write privileges to opposite tables in a set, and set the other one in the set to read-only. For example:

1) Node 1 writes to local table "t1" and these changes are replicated to t1 on Node 2.

2) Node 2 writes to local table "t2" and these changes are replicated to t2 on Node 1.

3) On Node 1, t2 is read-only. On Node 2, t1 is read-only.

DBFS filesystems make this kind of table pairing simple because:

(1) The tables that underlie the DBFS filesystems have the same structure.

(2) These tables are modified by simple, conventional DML during higher-level filesystem operations.

(3) The DBFS Content API provides a way of unifying the namespace of the individual DBFS stores by means of mount points that can be qualified as read-write or read-only.

The following steps create two DBFS filesystems (in this case named FS1 and FS2) and set them to be read-write or read-only, as appropriate.

1. Run the following procedure to create the two filesystems. (Substitute your store names for “FS1” and “FS2.”)

Example

declare

begin

dbms_dbfs_sfs.createFilesystem('FS1');

dbms_dbfs_sfs.createFilesystem('FS2');

dbms_dbfs_content.registerStore('FS1',

'posix', 'DBMS_DBFS_SFS');

dbms_dbfs_content.registerStore('FS2',

'posix', 'DBMS_DBFS_SFS');

commit;

end;

/

2. Run the following procedure to give each filesystem the appropriate access rights. (Substitute your store names for “FS1” and “FS2.”)

Example Node 1

declare

begin

dbms_dbfs_content.mountStore('FS1', 'local');

dbms_dbfs_content.mountStore('FS2', 'remote', read_only => true);

commit;

end;

/

Example Node 2

declare

begin

dbms_dbfs_content.mountStore('FS1', 'remote', read_only => true);

dbms_dbfs_content.mountStore('FS2', 'local');

commit;

end;

/

In this example, note that on Node 1, store "FS1" is read-write and store "FS2" is read-only, while on Node 2 the converse is true: store "FS1" is read-only and store "FS2" is read-write.

Note also that the read-write store is mounted as "local" and the read-only store is mounted as "remote". This provides users on each system with an identical namespace and identical semantics for read and write operations. Local path names can be modified, but remote path names cannot.

2.6 Mapping local and remote peers correctly

The names of the tables that underlie the DBFS filesystems are generated internally and dynamically. Continuing with the preceding example, there are:

(1) Two nodes (Node 1 and Node 2 in the example).

(2) Four stores: two on each node (FS1 and FS2 in the example).

(3) Eight underlying tables: two for each store (a table and a ptable). These tables must be identified, specified in Extract TABLE statements, and mapped in Replicat MAP statements.

1. To identify the table names that back each filesystem, issue the following query. (Substitute your store names for “FS1” and “FS2.”)

Example

select fs.store_name, tb.table_name, tb.ptable_name

from table(dbms_dbfs_sfs.listTables) tb,

table(dbms_dbfs_sfs.listFilesystems) fs

where fs.schema_name = tb.schema_name

and fs.table_name = tb.table_name

and fs.store_name in ('FS1', 'FS2')

;

The output looks like the following examples.

2. Identify the tables that are locally read-write to Extract by creating the following TABLE statements in the Extract parameter files. (Substitute your owner and table names.)

Example: Node 1

TABLE owner.SFS$_FST_100;

TABLE owner.SFS$_FSTP_100;

Example: Node 2

TABLE owner.SFS$_FST_119;

TABLE owner.SFS$_FSTP_119;

3. Link changes on each remote filesystem to the corresponding local filesystem by creating the following MAP statements in the Replicat parameter files. (Substitute your owner and table names.)

Example: Node 1

MAP owner.SFS$_FST_119,

TARGET owner.SFS$_FST_118;

MAP owner.SFS$_FSTP_119,

TARGET owner.SFS$_FSTP_118;

Example: Node 2

MAP owner.SFS$_FST_100,

TARGET owner.SFS$_FST_101;

MAP owner.SFS$_FSTP_100,

TARGET owner.SFS$_FSTP_101;

This mapping captures and replicates local read-write “source” tables to remote read-only peer tables:

(1) Filesystem changes made to FS1 on Node 1 propagate to FS1 on Node 2.

(2) Filesystem changes made to FS2 on Node 2 propagate to FS2 on Node 1.
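Because the underlying table names are generated dynamically, it can be convenient to generate the TABLE and MAP statements from the query output rather than typing them by hand. The sketch below only formats strings in the shape shown in the examples above; the helper names are hypothetical, and "owner" and the table names are the placeholders used in this section.

```python
def table_statements(owner, tables):
    """Build Extract TABLE statements for the locally read-write tables."""
    return [f"TABLE {owner}.{name};" for name in tables]


def map_statements(owner, pairs):
    """Build Replicat MAP statements from (remote_source, local_target) pairs."""
    return [f"MAP {owner}.{src}, TARGET {owner}.{dst};" for src, dst in pairs]


# Node 1 from the examples: FS1 is local (tables ..._100), and changes
# captured on Node 2 (tables ..._119) are applied to Node 1's read-only
# copy (tables ..._118).
for line in table_statements("owner", ["SFS$_FST_100", "SFS$_FSTP_100"]):
    print(line)
for line in map_statements(
    "owner",
    [("SFS$_FST_119", "SFS$_FST_118"), ("SFS$_FSTP_119", "SFS$_FSTP_118")],
):
    print(line)
```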

Changes to the filesystems can be made through the DBFS Content API (package DBMS_DBFS_CONTENT) of the database or through dbfs_client mounts and conventional filesystem tools.

All changes are propagated in both directions.

(1) A user at the virtual root of theDBFS namespace on each system sees identical content.

(2) For mutable operations, users use the "/local" sub-directory on each system.

(3) For read operations, users can use either the "/local" or the "/remote" sub-directory, depending on whether they want to see local or remote content.

三. Configuring the Oracle redo logs

3.1 Ensuring data availability

When operating in its normal mode, Oracle GoldenGate reads the online logs by default, but will read the archived logs if an online log is not available. Therefore, for best results, enable archive logging. The archives provide a secondary data source should the online logs recycle before Extract is finished with them. The archive logs for open transactions must be retained on the system in case Extract needs to recapture data from them to perform a recovery.

-- By default, GoldenGate reads the online logs to capture data, and falls back to the archived logs when the online redo is not available. Enable archiving wherever possible, and retain the archived logs for some time in case the Extract process needs to recapture data from them.

If you cannot enable archive logging, configure the online logs according to the following guidelines to retain enough data for Extract to capture what it needs before the logs recycle. Allow for Extract backlogs caused by network outages and other external factors, as well as long-running transactions.

-- If archiving is not enabled, configure the online logs as described below so that Extract can capture the data it needs from them before the logs recycle.

In a RAC configuration, Extract must have access to the online and archived logs for all nodes in the cluster, including the one where Oracle GoldenGate is installed.

-- In a RAC environment, Extract must be able to access the online and archived logs of all nodes. In other words, on RAC GoldenGate needs to be installed on a shared file system, as explained in the GoldenGate installation section.

3.1.1 Log retention requirements per Extract recovery mode

The following summarizes the different recovery modes that Extract might use and their log-retention requirements:

(1) By default, the Bounded Recovery mode is in effect, and Extract requires access to the logs only as far back as twice the Bounded Recovery interval that is set with the BR parameter. This interval is an integral multiple of the standard Extract checkpoint interval, as controlled by the CHECKPOINTSECS parameter. These two parameters control the Oracle GoldenGate Bounded Recovery feature, which ensures that Extract can recover in-memory captured data after a failure, no matter how old the oldest open transaction was at the time of failure.

(2) In the unlikely event that the Bounded Recovery mechanism fails when Extract attempts a recovery, Extract reverts to normal recovery mode and must have access to the archived log that contains the beginning of the oldest open transaction in memory at the time of failure and all logs thereafter.
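The "twice the Bounded Recovery interval" rule in item (1) can be turned into a quick calculation of how far back logs must remain available. This is a sketch of the arithmetic only: the function names are hypothetical, and the 4-hour interval and 10-second checkpoint values are example inputs chosen for illustration, not settings taken from this document.

```python
from datetime import datetime, timedelta


def is_valid_br_interval(br_interval, checkpoint_interval):
    """Check that the BR interval is an integral multiple of the checkpoint interval."""
    return br_interval % checkpoint_interval == timedelta(0)


def bounded_recovery_log_horizon(now, br_interval):
    """Return the oldest point in time whose logs Bounded Recovery may need."""
    return now - 2 * br_interval


# Example inputs (assumptions for illustration only).
br = timedelta(hours=4)
checkpoint = timedelta(seconds=10)
now = datetime(2024, 1, 1, 12, 0)

print(is_valid_br_interval(br, checkpoint))
print(bounded_recovery_log_horizon(now, br))
```

Normal recovery, described in item (2), has no such fixed horizon, because it depends on when the oldest open transaction began.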

3.1.2 Log retention options

Depending on the version of Oracle, there are different options for ensuring that the required logs are retained on the system.

(1) Oracle Enterprise Edition 10.2 and later

For these versions, Extract works with Oracle Recovery Manager (RMAN) to retain the logs that Extract needs for recovery. This feature is enabled by default when you add or register an Extract group in GGSCI with ADD EXTRACT (TRANLOG option) or REGISTER EXTRACT.

By default, Extract retains enough logs to perform a Bounded Recovery, but you can configure Extract to retain enough logs through RMAN for a normal recovery by using the TRANLOGOPTIONS parameter with the LOGRETENTION option set to SR. There is also an option to disable the use of RMAN log retention. Review the options of LOGRETENTION in the Oracle GoldenGate Windows and UNIX Reference Guide before you configure Extract. If you set LOGRETENTION to DISABLED, see “Determining how much data to retain”.

NOTE:

To support RMAN log retention on Oracle RAC, you must download and install the database patch that is provided in BUGFIX 11879974 before you add the Extract groups.

The RMAN log retention feature creates an underlying (but non-functioning) Oracle Streams Capture process for each Extract group. The name of the Capture is based on the name of the associated Extract group. The log retention feature can operate concurrently with other local Oracle Streams installations. When you create an Extract group, the logs are retained from the current database SCN.

NOTE:

If the Oracle Flashback storage area is full, RMAN purges the archive logs even when needed by Extract. This limitation exists so that the requirements of Extract (and other Oracle replication components) do not interfere with the availability of redo to the database.

(2) All other Oracle versions

For versions of Oracle other than Enterprise Edition 10.2 and later, you must manage the log retention process yourself with your preferred administrative tools. Follow the directions in “Determining how much data to retain”.

3.1.3 Determining how much data to retain

When managing log retention, try to ensure rapid access to the logs that Extract would require to perform a normal recovery (not a Bounded Recovery). See “Log retention requirements per Extract recovery mode”. If you must move the archives off the database system, the TRANLOGOPTIONS parameter provides a way to specify an alternate location. See “Specifying the archive location”.

The recommended retention period is at least 24 hours’ worth of transaction data, including both online and archived logs. To determine the oldest log that Extract might need at any given point, issue the SEND EXTRACT command with the SHOWTRANS option. You might need to do some testing to determine the best retention time given your data volume and business requirements.

If data that Extract needs during processing was not retained, either in online or archived logs, one of the following corrective actions might be required:

(1) Alter Extract to capture from a later point in time for which log data is available (and accept possible data loss on the target).

(2) Resynchronize the source and target data, and then start the Oracle GoldenGate environment over again.

3.1.4 Purging log archives

Make certain not to use backup or archive options that cause old archive files to be overwritten by new backups. Ideally, new backups should be separate files with different names from older ones. This ensures that if Extract looks for a particular log, it will still exist, and it also ensures that the data is available in case it is needed for a support case.

3.2 Specifying the archive location

If the archived logs reside somewhere other than the Oracle default directory, specify that directory with the ALTARCHIVELOGDEST option of the TRANLOGOPTIONS parameter in the Extract parameter file.

You might also need to use the ALTARCHIVEDLOGFORMAT option of TRANLOGOPTIONS if the format that is specified with the Oracle parameter LOG_ARCHIVE_FORMAT contains sub-directories.

ALTARCHIVEDLOGFORMAT specifies an alternate format that removes the sub-directory from the path. For example, %T/log_%t_%s_%r.arc would be changed to log_%t_%s_%r.arc. As an alternative to using ALTARCHIVEDLOGFORMAT, you can create the sub-directory manually, and then move the log files to it.
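The transformation in that example is simply "drop everything up to the last path separator". The sketch below shows how the alternate format string could be derived from a LOG_ARCHIVE_FORMAT value; the function name `strip_subdirectories` is hypothetical, and the sketch assumes forward-slash separators as in the example.

```python
import posixpath


def strip_subdirectories(log_archive_format: str) -> str:
    """Return the format with any leading sub-directory components removed."""
    return posixpath.basename(log_archive_format)


# The example from the text.
print(strip_subdirectories("%T/log_%t_%s_%r.arc"))
```

The resulting string is the kind of value that would be supplied to ALTARCHIVEDLOGFORMAT; a format without sub-directories passes through unchanged.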

3.3 Mounting logs that are stored on other platforms

If the online and archived redo logs are stored on a different platform from the one that Extract is built for, do the following:

(1) NFS-mount the archive files.

(2) Map the file structure to the structure of the source system by using the LOGSOURCE and PATHMAP options of the Extract parameter TRANLOGOPTIONS.

3.4 Configuring Oracle GoldenGate to read only the archived logs

You can configure Extract to read exclusively from the archived logs. This is known as Archived Log Only (ALO) mode. In this mode, Extract reads exclusively from archived logs that are stored in a specified location. ALO mode enables Extract to use production logs that are shipped to a secondary database (such as a standby) as the data source. The online logs are not used at all. Oracle GoldenGate connects to the secondary database to get metadata and other required data as needed. As an alternative, ALO mode is supported on the production system.

3.4.1 Limitations and requirements of ALO mode

(1) Log resets (RESETLOG) cannot be done on the source database after the standby database is created.

(2) ALO cannot be used on a standby database if the production system is Oracle RAC and the standby database is non-RAC. In addition to both systems being Oracle RAC, the number of nodes on each system must be identical.

(3) ALO on Oracle RAC requires a dedicated connection to the source server. If that connection is lost, Oracle GoldenGate processing will stop.

(4) On Oracle RAC, the directories that contain the archive logs must have unique names across all nodes; otherwise, Extract may return “out of order SCN” errors.

(5) ALO mode does not support archive log files in ASM mode. The archive log files must be outside the ASM environment for Extract to read them.

3.4.2 Configuring Extract for ALO mode

1. Enable supplemental logging at the table level and the database level for the tables in the source database.

2. When Oracle GoldenGate is running on a different server from the source database, make certain that SQL*Net is configured properly to connect to a remote server, such as providing the correct entries in a TNSNAMES file. Extract must have permission to maintain a SQL*Net connection to the source database.

3. Use a SQL*Net connect string in:

(1) The USERID parameter in the parameter file of every Oracle GoldenGate process that connects to that database.

(2) The DBLOGIN command in GGSCI.

Example USERID statement:

USERID ggext@ora10g01, PASSWORD ggs123

NOTE:

If you have a standby server that is local to the server that Oracle GoldenGate is running on, you do not need to use a connect string in USERID. You can just supply the user login name.

4. Use the Extract parameter TRANLOGOPTIONS with the ARCHIVEDLOGONLY option. This option forces Extract to operate in ALO mode against a primary or logical standby database, as determined by a value of PRIMARY or LOGICAL STANDBY in the db_role column of the v$database view. The default is to read the online logs. TRANLOGOPTIONS with ARCHIVEDLOGONLY is not needed if using ALO mode against a physical standby database, as determined by a value of PHYSICAL STANDBY in the db_role column of v$database. Extract automatically operates in ALO mode if it detects that the database is a physical standby.

5. Other TRANLOGOPTIONS options might be required for your environment. For example, depending on the copy program that you use, you might need to use the COMPLETEARCHIVEDLOGONLY option to prevent Extract errors.

6. Use the MAP parameter for Extract to map the table names to the source object IDs.

7. Add the Extract group by issuing the ADD EXTRACT command with a timestamp as the BEGIN option, or by using ADD EXTRACT with the SEQNO and RBA options. It is best to give Extract a known start point at which to begin extracting data, rather than using the NOW argument. The start time of “NOW” corresponds to the time of the current online redo log, but an ALO Extract cannot read the online logs, so it must wait for that log to be archived when Oracle switches logs. The timing of the switch depends on the size of the redo logs and the volume of database activity, so there might be a lag between when you start Extract and when data starts being captured. This can happen in both regular and RAC database configurations.

NOTE:

If Extract appears to stall while operating in ALO mode.
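The rule in step 4 about when ARCHIVEDLOGONLY has to be specified can be summarized as a lookup on the db_role value. This is a sketch of the decision only; the function name `needs_archivedlogonly` is hypothetical, and it assumes you have already read the role from the db_role column of v$database as described above.

```python
def needs_archivedlogonly(db_role: str) -> bool:
    """Return True if ALO mode must be requested explicitly for this role.

    PRIMARY and LOGICAL STANDBY need TRANLOGOPTIONS ARCHIVEDLOGONLY;
    PHYSICAL STANDBY does not, because Extract switches to ALO mode
    automatically when it detects a physical standby.
    """
    role = " ".join(db_role.upper().split())
    if role == "PHYSICAL STANDBY":
        return False
    if role in ("PRIMARY", "LOGICAL STANDBY"):
        return True
    raise ValueError(f"role not covered by this section: {db_role!r}")


for role in ("PRIMARY", "LOGICAL STANDBY", "PHYSICAL STANDBY"):
    print(role, needs_archivedlogonly(role))
```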

3.5 Setting redo parallelism for Oracle 9i sources

If using Oracle GoldenGate for an Oracle 9i source database, set the _LOG_PARALLELISM parameter to 1. Oracle GoldenGate does not support values higher than 1.

3.6 Avoiding log-read bottlenecks

When Oracle GoldenGate captures data from the redo logs, I/O bottlenecks can occur because Extract is reading the same files that are being written by the database logging mechanism. Performance degradation increases with the number of Extract processes that read the same logs. You can:

(1) Try using faster drives and a faster controller. Both Extract and the database logging mechanism will be faster on a faster I/O system.

(2) Store the logs on RAID 0+1. Avoid RAID 5, which performs checksums on every block written and is not a good choice for high levels of continuous I/O. For more information, see the Oracle documentation or search related web sites.

四. Managing the Oracle DDL replication environment

4.1 Enabling and disabling the DDL trigger

You can enable and disable the trigger that captures DDL operations without making any configuration changes within Oracle GoldenGate. The following scripts control the DDL trigger.

(1) ddl_disable: Disables the trigger. No further DDL operations are captured or replicated after you disable the trigger.

(2) ddl_enable: Enables the trigger. When you enable the trigger, Oracle GoldenGate starts capturing current DDL changes, but does not capture DDL that was generated while the trigger was disabled.

Before running these scripts, disconnect all sessions that ever issued DDL, including those of the Oracle GoldenGate processes, SQL*Plus, business applications, and any other software that uses Oracle. Otherwise the database might generate an ORA-04021 error. Do not use these scripts if you intend to maintain consistent DDL on the source and target systems.

4.2 Maintaining the DDL marker table

You can purge rows from the marker table at any time. It does not keep DDL history. To purge the marker table, use the Manager parameter PURGEMARKERHISTORY. Manager gets the name of the marker table from one of the following:

1. The name given with the MARKERTABLE <table> parameter in the GLOBALS file, if specified.

2. The default name of GGS_MARKER.

PURGEMARKERHISTORY provides options to specify maximum and minimum lengths of time to keep a row,based on the last modification date.

4.3 Deleting the DDL marker table

Do not delete the DDL marker table unless you want to discontinue synchronizing DDL.

The marker table and the DDL trigger are interdependent. An attempt to drop the marker table fails if the DDL trigger is enabled. This is a safety measure to prevent the trigger from becoming invalid and missing DDL operations. If you remove the marker table, the following error is generated:

"ORA-04098: trigger 'SYS.GGS_DDL_TRIGGER_BEFORE' is invalid and failed re-validation"

The proper way to remove an Oracle GoldenGate DDL object depends on your plans for the rest of the DDL environment. To choose the correct procedure, see one of the following:

(1) “Changing DDL object names after installation”

(2) “Restoring an existing DDL environment to a clean state”

(3) “Removing the DDL objects from the system”

4.4 Maintaining the DDL history table

You can purge the DDL history table to control its size, but do so carefully. The DDL history table maintains the integrity of the DDL synchronization environment. Purges to this table cannot be recovered through the Oracle GoldenGate interface.

To maintain the DDL history table

1. To prevent any possibility of DDL history loss, make regular full backups of the history table.

2. To ensure the recoverability of purged DDL, enable Oracle Flashback for the history table. Set the flashback retention time well past the point where it could be needed. For example, if your full backups are at most one week old, retain two weeks of flashback. Oracle GoldenGate can be positioned backward into the flashback for reprocessing.

3. If possible, purge the DDL history table manually to ensure that essential rows are not purged accidentally. If you require an automated purging mechanism, use the PURGEDDLHISTORY parameter in the Manager parameter file. You can specify maximum and minimum lengths of time to keep a row.

NOTE:

Temporary tables created by Oracle GoldenGate to increase performance might be purged at the same time as the DDL history table, according to the same rules. The names of these tables are derived from the name of the history table, and their purging is reported in the Manager report file. This is normal behavior.

4.5 Deleting the DDL history table

Do not delete the DDL history table unless you want to discontinue synchronizing DDL. The history table contains a record of DDL operations that were issued.

The history table and the DDL trigger are interdependent. An attempt to drop the history table fails if the DDL trigger is enabled. This is a safety measure to prevent the trigger from becoming invalid and missing DDL operations. If you remove the history table, the following error is generated:

"ORA-04098: trigger 'SYS.GGS_DDL_TRIGGER_BEFORE' is invalid and failed re-validation"

The proper way to remove an Oracle GoldenGate DDL object depends on your plans for the rest of the DDL environment. To choose the correct procedure, see one of the following:

(1) “Changing DDL object names after installation”

(2) “Restoring an existing DDL environment to a clean state”

(3) “Removing the DDL objects from the system”

4.6 Purging the DDL trace file

To prevent the DDL trace file from consuming excessive disk space, run the ddl_cleartrace script on a regular basis. This script deletes the trace file, but Oracle GoldenGate will create it again.

The default name of the DDL trace file is ggs_ddl_trace.log. It is in the USER_DUMP_DEST directory of Oracle. The ddl_cleartrace script is in the Oracle GoldenGate directory.

4.7 Applying database patches and upgrades when DDL support is enabled

Database patches and upgrades usually invalidate the Oracle GoldenGate DDL trigger and other Oracle GoldenGate DDL objects. Before applying a database patch, do the following.

1. Disable the Oracle GoldenGate DDL trigger by running the following script.

@ddl_disable

2. Apply the patch.

3. Enable the DDL trigger by running the following script.

@ddl_enable

NOTE:

Database upgrades and patches generally operate on Oracle objects. Because Oracle GoldenGate filters out those objects automatically, DDL from those procedures is not replicated when replication starts again.

To avoid recompile errors after the patch or upgrade, which are caused if the trigger is not disabled before the procedure, consider adding calls to @ddl_disable and @ddl_enable at the appropriate locations within your scripts.

4.8 Applying Oracle GoldenGate patches and upgrades when DDL support is enabled

NOTE:

If there are instructions like these in the release notes or upgrade instructions that accompany a release, follow those instead of these. Do not use this procedure for an upgrade from an Oracle GoldenGate version that does not support DDL statements that are larger than 30K (pre-version 10.4).

Follow these steps to apply a patch or upgrade to the DDL objects. This procedure may or may not preserve the current DDL synchronization configuration, depending on whether the new build requires a clean installation.

1. Run GGSCI. Keep the session open for theduration of this procedure.

2. Stop Extract to stop DDL capture.

STOP EXTRACT<group>

3. Stop Replicat to stop DDL replication.

STOP REPLICAT<group>

4. Download or extract the patch or upgrade files according to the instructions provided by Oracle GoldenGate.

5. Change directories to the Oracle GoldenGate installation directory.

6. Run SQL*Plus and log in as a user that has SYSDBA privileges.

7. Disconnect all sessions that ever issued DDL, including those of Oracle GoldenGate processes, SQL*Plus, business applications, and any other software that uses Oracle. Otherwise the database might generate an ORA-04021 error.

8. Run the ddl_disable script to disable the DDL trigger.

9. Run the ddl_setup script. You are prompted for:

(1) The name of the Oracle GoldenGate DDL schema. If you changed the schema name, use the new one.

(2) The installation mode: Select either NORMAL or INITIALSETUP mode, depending on what the installation or upgrade instructions require. NORMAL mode recompiles the DDL environment without removing DDL history. INITIALSETUP removes the DDL history.

10. Run the ddl_enable.sql script to enable the DDL trigger.

11. In GGSCI, start Extract to resume DDL capture.

START EXTRACT <group>

12. Start Replicat to start DDL replication.

START REPLICAT <group>
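The procedure above stops and later restarts the same pair of processes, so the GGSCI commands can be generated instead of typed. The sketch below prints them in the order the steps use (Extract first, then Replicat). The function name ggsci_commands and the group names in the usage note are hypothetical.

```shell
#!/bin/sh
# Sketch only: print the GGSCI commands that stop or start one Extract
# group and one Replicat group, Extract first as in the steps above.
ggsci_commands() {
    action="$1"     # STOP or START
    extract="$2"    # Extract group name (hypothetical value supplied by the caller)
    replicat="$3"   # Replicat group name (hypothetical value supplied by the caller)
    case "$action" in
        STOP|START) ;;
        *)
            echo "usage: ggsci_commands STOP|START <extract> <replicat>" >&2
            return 1
            ;;
    esac
    printf '%s EXTRACT %s\n' "$action" "$extract"
    printf '%s REPLICAT %s\n' "$action" "$replicat"
}
```

For example, `ggsci_commands STOP ext1 rep1 > stop_ddl.oby` writes a two-line command file that could then be run from the open GGSCI session with the OBEY command. The file name and group names here are placeholders.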

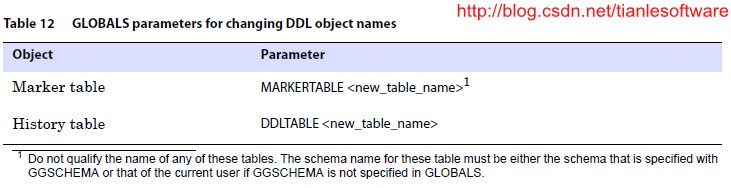

4.9 Changing DDL object names after installation

Follow these steps to change the names of the Oracle GoldenGate DDL schema or other DDL objects after they are installed. This procedure preserves the continuity of source and target DDL operations.

1. Run GGSCI. Keep the session open for the duration of this procedure.

2. Stop Extract to stop DDL capture.

STOP EXTRACT <group>

3. Stop Replicat to stop DDL replication.

STOP REPLICAT <group>

4. Change directories to the Oracle GoldenGate installation directory.

5. Run SQL*Plus and log in as a user that has SYSDBA privileges.

6. Disconnect all sessions that ever issued DDL, including those of Oracle GoldenGate processes, SQL*Plus, business applications, and any other software that uses Oracle. Otherwise the database might generate an ORA-04021 error.

7. Run the ddl_disable script to disable the DDL trigger.

8. To change the DDL schema name, specify the new name in the local GLOBALS file.

GGSCHEMA <new_schema_name>

9. To change the names of any other objects, do the following:

(1) Specify the new names in the params.sql script. Do not run this script.

(2) If changing objects in Table 12, specify the new names in the local GLOBALS file. The correct parameters to use are listed in the Parameter column of this table.

10. If using a new schema for the DDL synchronization objects, create it now.

11. Change directories to the Oracle GoldenGate installation directory.

12. Run SQL*Plus and log in as a user that has SYSDBA privileges.

13. Run the ddl_setup script. You are prompted for:

(1) The name of the Oracle GoldenGate DDL schema. If you changed the schema name, use the new one.

(2) The installation mode: Select the NORMAL mode to recompile the DDL environment without removing the DDL history table.

14. Run the ddl_enable.sql script to enable the DDL trigger.

15. In GGSCI, start Extract to resume DDL capture.

START EXTRACT <group>

16. Start Replicat to start DDL replication.

START REPLICAT <group>
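Step 8 of this procedure edits the GLOBALS file by hand. Where that edit needs to be repeatable, a small helper along the following lines could replace an existing GGSCHEMA entry or add one if none is present. The function name set_ggschema is hypothetical, and the sketch assumes GLOBALS is a plain text file with one parameter per line.

```shell
#!/bin/sh
# Sketch only: set or replace the GGSCHEMA entry in a GLOBALS file.
# Assumption: one parameter per line; all other lines are kept as they are.
set_ggschema() {
    globals_file="$1"   # path to the GLOBALS file
    new_schema="$2"     # new DDL schema name (supplied by the caller)
    tmp_file="${globals_file}.tmp"
    if [ -f "$globals_file" ]; then
        # Copy every line except an existing GGSCHEMA entry (case-insensitive).
        # grep exits non-zero when nothing is left to copy, which is fine here.
        grep -v -i '^[[:space:]]*GGSCHEMA[[:space:]]' "$globals_file" > "$tmp_file" || true
    else
        : > "$tmp_file"
    fi
    # Append the new entry and swap the file into place.
    printf 'GGSCHEMA %s\n' "$new_schema" >> "$tmp_file"
    mv "$tmp_file" "$globals_file"
}
```

A call such as `set_ggschema ./GLOBALS new_gg_schema` rewrites the file in place, so keeping a backup copy of GLOBALS beforehand is advisable.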

4.10 Restoring an existing DDL environment to a clean state

Follow these steps to completely remove, and then reinstall, the Oracle GoldenGate DDL objects. This procedure creates a new DDL environment, but removes DDL history.

NOTE:

Due to object interdependencies, all objects must be removed and reinstalled in this procedure.

1. If you are performing this procedure in conjunction with the installation of a new Oracle GoldenGate version, download and install the Oracle GoldenGate files, and create or update process groups and parameter files as necessary.

2. (Optional) To preserve the continuity of source and target structures, stop DDL activities and then make certain that Replicat has finished processing all of the DDL and DML data in the trail. To determine when Replicat is finished, issue the following command until you see a message that there is no more data to process.

INFO REPLICAT <group>

NOTE:

Instead of using INFO REPLICAT, you can use the EVENTACTIONS option of TABLE and MAP to stop the Extract and Replicat processes after the DDL and DML have been processed.

3. Run GGSCI.

4. Stop Extract to stop DDL capture.

STOP EXTRACT <group>

5. Stop Replicat to stop DDL replication.

STOP REPLICAT <group>

6. Change directories to the Oracle GoldenGate installation directory.

7. Run SQL*Plus and log in as a user that has SYSDBA privileges.

8. Disconnect all sessions that ever issued DDL, including those of Oracle GoldenGate processes, SQL*Plus, business applications, and any other software that uses Oracle. Otherwise the database might generate an ORA-04021 error.

9. Run the ddl_disable script to disable the DDL trigger.

10. Run the ddl_remove script to remove the Oracle GoldenGate DDL trigger, the DDL history and marker tables, and other associated objects. This script produces a ddl_remove_spool.txt file that logs the script output and a ddl_remove_set.txt file that logs environment settings in case they are needed for debugging.

11. Run the marker_remove script to remove the Oracle GoldenGate marker support system. This script produces a marker_remove_spool.txt file that logs the script output and a marker_remove_set.txt file that logs environment settings in case they are needed for debugging.

12. Run the marker_setup script to reinstall the Oracle GoldenGate marker support system. You are prompted for the name of the Oracle GoldenGate schema.

13. Run the ddl_setup script. You are prompted for:

(1) The name of the Oracle GoldenGate DDL schema.

(2) The installation mode. To reinstall DDL objects, use the INITIALSETUP mode. This mode drops and recreates existing DDL objects before creating new objects.

14. Run the role_setup script to recreate the Oracle GoldenGate DDL role.

15. Grant the role to all Oracle GoldenGate users under which the following Oracle GoldenGate processes run: Extract, Replicat, GGSCI, and Manager. You might need to make multiple grants if the processes have different user names.

16. Run the ddl_enable.sql script to enable the DDL trigger.
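Because this procedure removes objects before reinstalling them, it may be worth confirming that every script it calls is actually present before step 9 begins. The following sketch checks for the seven scripts named in the steps above. The function name check_ddl_scripts is hypothetical, and the sketch assumes the scripts sit at the top level of the installation directory with a .sql extension.

```shell
#!/bin/sh
# Sketch only: verify that the SQL scripts used by the clean-reinstall
# procedure exist before any DDL object is removed.
# Assumption: the scripts are stored as <name>.sql directly under gg_home.
check_ddl_scripts() {
    gg_home="$1"   # Oracle GoldenGate installation directory
    missing=0
    for script in ddl_disable ddl_remove marker_remove marker_setup \
                  ddl_setup role_setup ddl_enable; do
        if [ ! -f "$gg_home/$script.sql" ]; then
            echo "missing: $gg_home/$script.sql" >&2
            missing=1
        fi
    done
    # Return 0 only when every script was found.
    return "$missing"
}
```

Running `check_ddl_scripts /path/to/ogg || echo "do not proceed"` before disabling the trigger gives an early warning if the installation is incomplete. The path shown is a placeholder.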

4.11 Removing the DDL objects from the system

This procedure removes the DDL environment and removes the history that maintains continuity between source and target DDL operations.

NOTE:

Due to object interdependencies, all objects must be removed.

1. Run GGSCI.

2. Stop Extract to stop DDL capture.

STOP EXTRACT <group>

3. Stop Replicat to stop DDL replication.

STOP REPLICAT <group>

4. Change directories to the Oracle GoldenGate installation directory.

5. Run SQL*Plus and log in as a user that has SYSDBA privileges.

6. Disconnect all sessions that ever issued DDL, including those of Oracle GoldenGate processes, SQL*Plus, business applications, and any other software that uses Oracle. Otherwise the database might generate an ORA-04021 error.

7. Run the ddl_disable script to disable the DDL trigger.

8. Run the ddl_remove script to remove the Oracle GoldenGate DDL trigger, the DDL history and marker tables, and the associated objects. This script produces a ddl_remove_spool.txt file that logs the script output and a ddl_remove_set.txt file that logs current user environment settings in case they are needed for debugging.

9. Run the marker_remove script to remove the Oracle GoldenGate marker support system. This script produces a marker_remove_spool.txt file that logs the script output and a marker_remove_set.txt file that logs environment settings in case they are needed for debugging.
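The removal scripts leave four log files behind, and keeping them together can help if the removal has to be debugged later. The sketch below copies them into an archive directory and reports any that are absent. The function name archive_remove_logs and both directory arguments are hypothetical, and the sketch assumes the logs were written to the directory from which SQL*Plus was run.

```shell
#!/bin/sh
# Sketch only: copy the log files produced by ddl_remove and
# marker_remove into an archive directory.
# Assumption: the logs are in work_dir, the directory SQL*Plus ran from.
archive_remove_logs() {
    work_dir="$1"      # directory containing the spool and settings files
    archive_dir="$2"   # destination directory, created if necessary
    mkdir -p "$archive_dir" || return 1
    status=0
    for log in ddl_remove_spool.txt ddl_remove_set.txt \
               marker_remove_spool.txt marker_remove_set.txt; do
        if [ -f "$work_dir/$log" ]; then
            cp "$work_dir/$log" "$archive_dir/"
        else
            echo "not found: $work_dir/$log" >&2
            status=1
        fi
    done
    # Non-zero status means at least one expected log was absent.
    return "$status"
}
```

A non-zero return value suggests that one of the two scripts did not run to the point of writing its logs, which is worth checking before treating the removal as complete.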

-------------------------------------------------------------------------------------------------------

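All rights reserved. This article may be reposted, but the source address must be credited with a link; otherwise legal action will be taken.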

QQ:492913789

Email:ahdba@qq.com

Blog: http://www.cndba.cn/dave

Weibo: http://weibo.com/tianlesoftware

Twitter: http://twitter.com/tianlesoftware

Facebook: http://www.facebook.com/tianlesoftware

Linkedin: http://cn.linkedin.com/in/tianlesoftware

