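As the earlier guides to setting up a Kafka environment on Windows and on Linux show, once the environment is in place the following steps always run in the same order, regardless of platform:

Start Zookeeper

Start Kafka

Create a Topic

Start the Producer to send messages

Start the Consumer to consume messages

This post therefore uses the Windows environment as its example (for the Windows setup, see the author's earlier blog post) and shows how Kafka is used from a program: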

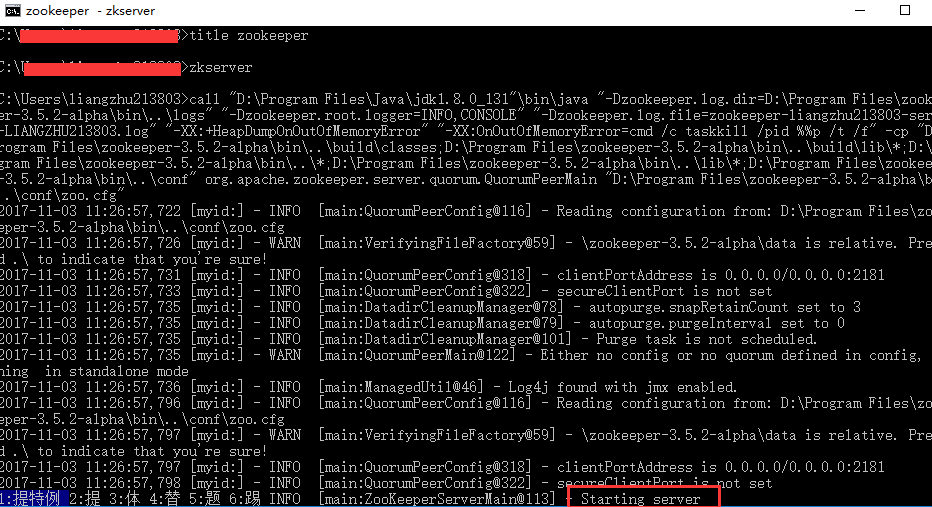

Step 1: Open cmd and start Zookeeper

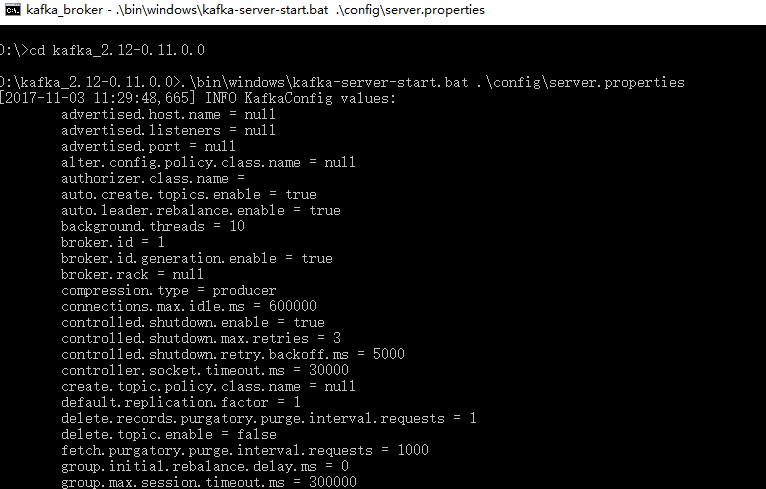

zkserver

Step 2: Go to the Kafka directory and start Kafka

.\bin\windows\kafka-server-start.bat .\config\server.properties

===========================================================

[Approach 1: Create the Topic from the program]

(1) Create a Maven project and add the dependency

<dependencies>

<dependency>

<groupId>org.apache.kafka</groupId>

<artifactId>kafka_2.10</artifactId>

<version>0.8.2.2</version>

</dependency>

</dependencies>

(2) Create the message producer (Producer)

import kafka.javaapi.producer.Producer;

import kafka.producer.KeyedMessage;

import kafka.producer.ProducerConfig;

import java.util.Properties;

public class MsgProducer {

private static Producer<String, String> producer;

private final Properties properties = new Properties();

public MsgProducer(){

//Broker list to connect to

properties.put("metadata.broker.list", "127.0.0.1:9092");

//Serializer class for messages

properties.put("serializer.class", "kafka.serializer.StringEncoder");

producer = new Producer<String, String>(new ProducerConfig(properties));

}

public static void main(String[] args){

MsgProducer msgProducer = new MsgProducer();

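//Topic name (with the broker default auto.create.topics.enable=true, the topic is created on first use)

String topic = "testKafka";

//Message to send

String msg = "2017.11.03,kafka测试";

//Build the message object

KeyedMessage<String, String> data = new KeyedMessage<String, String>(topic, msg);

//Send the message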

producer.send(data);

producer.close();

}

}

(3) Create the message consumer (Consumer)

import kafka.consumer.Consumer;

import kafka.consumer.ConsumerConfig;

import kafka.consumer.ConsumerIterator;

import kafka.consumer.KafkaStream;

import kafka.javaapi.consumer.ConsumerConnector;

import java.util.HashMap;

import java.util.List;

import java.util.Map;

import java.util.Properties;

public class MsgConsumer {

private ConsumerConnector consumer;

private String topic;

public MsgConsumer(String zookeeper, String groupId, String topic){

Properties properties = new Properties();

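//Zookeeper connection string

properties.put("zookeeper.connect", zookeeper);

//Consumer group

properties.put("group.id", groupId);

//Zookeeper session timeout, in milliseconds

properties.put("zookeeper.session.timeout.ms", "500");

//Interval, in milliseconds, at which consumed offsets are auto-committed

properties.put("auto.commit.interval.ms", "1000");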

consumer = Consumer.createJavaConsumerConnector(new ConsumerConfig(properties));

this.topic = topic;

}

public void testConsumer(){

Map<String, Integer> topicCount = new HashMap<String, Integer>();

//Number of streams (consumer threads) to request for this topic

topicCount.put(topic, 1);

//The returned Map holds the streams of every subscribed topic

Map<String, List<KafkaStream<byte[], byte[]>>> consumerStreams = consumer.createMessageStreams(topicCount);

//Pick out the message streams of the topic we need

List<KafkaStream<byte[], byte[]>> streams = consumerStreams.get(topic);

for (final KafkaStream<byte[], byte[]> stream : streams) {

ConsumerIterator<byte[], byte[]> consumerIte = stream.iterator();

while (consumerIte.hasNext()) {

System.out.println(new String(consumerIte.next().message()));

}

}

if (consumer != null) {

consumer.shutdown();

}

}

public static void main(String[] args){

String topic = "testKafka";

MsgConsumer msgConsumer = new MsgConsumer("127.0.0.1:2181", "Msg", topic);

msgConsumer.testConsumer();

}

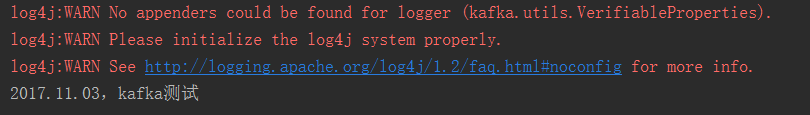

}启动消费者、然后启动消息生产者,程序运行结果如下:

Consumer
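The consumer in this approach turns each payload into text with `new String(bytes)`, which decodes using the platform default charset. Assuming the producer's `kafka.serializer.StringEncoder` is left at its default UTF-8 encoding, a message containing non-ASCII text such as "kafka测试" can come out garbled on a machine whose default charset is not UTF-8. The sketch below shows decoding with an explicit charset; the class and method names (`PayloadDecoder`, `decode`) are hypothetical and only the JDK is used, so the byte array stands in for what `message()` would return.

```java
import java.nio.charset.StandardCharsets;

public class PayloadDecoder {

    // Decode a raw message payload as UTF-8 instead of relying on the platform default charset.
    static String decode(byte[] payload) {
        return new String(payload, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        String original = "2017.11.03,kafka测试";
        // Stand-in for the bytes a UTF-8 encoder would put on the wire (assumption).
        byte[] payload = original.getBytes(StandardCharsets.UTF_8);
        // The round trip preserves the text regardless of the machine's default charset.
        System.out.println(decode(payload).equals(original));
    }
}
```

In the consumer loop this would amount to passing `StandardCharsets.UTF_8` as the second argument when building the `String` from the payload.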

=============================================================

[Approach 2: continue with "Step 3" below, keep all settings in a configuration file, and run the program with multiple threads]

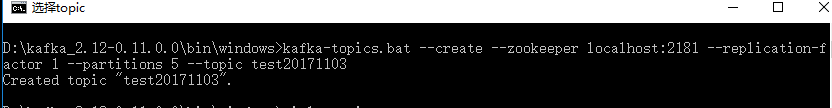

Step 3: Go to the Kafka directory D:\kafka_2.12-0.11.0.0\bin\windows and create the Kafka topic

kafka-topics.bat --create --zookeeper localhost:2181 --replication-factor 1 --partitions 5 --topic test20171103
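The command above creates the topic with 5 partitions, while the consumer code in this post asks for a single stream per topic. As a rough mental model only: when partitions are split into contiguous ranges across the requested streams, one stream ends up reading all 5 partitions, and two streams would split them 3 and 2. The sketch below is a simplified illustration of that range-style split, not the client's actual code; `RangeAssignmentSketch` and `partitionsFor` are hypothetical names, and the real assignment also depends on the client version, its configured strategy, and how many consumers share the group.

```java
import java.util.ArrayList;
import java.util.List;

public class RangeAssignmentSketch {

    /**
     * Returns the partition ids handed to stream number streamIndex (0-based)
     * when partitionCount partitions are split into contiguous ranges
     * across streamCount streams.
     */
    static List<Integer> partitionsFor(int partitionCount, int streamCount, int streamIndex) {
        int perStream = partitionCount / streamCount; // every stream gets at least this many
        int extra = partitionCount % streamCount;     // the first 'extra' streams get one more
        int start = perStream * streamIndex + Math.min(streamIndex, extra);
        int size = perStream + (streamIndex < extra ? 1 : 0);
        List<Integer> assigned = new ArrayList<>();
        for (int p = start; p < start + size; p++) {
            assigned.add(p);
        }
        return assigned;
    }

    public static void main(String[] args) {
        System.out.println(partitionsFor(5, 1, 0)); // [0, 1, 2, 3, 4]
        System.out.println(partitionsFor(5, 2, 0)); // [0, 1, 2]
        System.out.println(partitionsFor(5, 2, 1)); // [3, 4]
    }
}
```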

Step 4: Implement the Producer and Consumer in code to produce and consume messages

(1) Create a Maven project and add the following dependency:

<dependencies>

<dependency>

<groupId>org.apache.kafka</groupId>

<artifactId>kafka_2.10</artifactId>

<version>0.8.2.2</version>

</dependency>

</dependencies>

(2) Put the configuration in a separate file, KafkaConf.java, so that it is easy to maintain and change

public interface KafkaConf {

String zookeeperConnect = "127.0.0.1:2181";

String groupId = "group";

String topic1 = "test20171103";

String brokerList ="127.0.0.1:9092";

String zkSessionTimeout = "20000";

String zkSyncTime = "200";

String reconnectIntervel = "1000";

}

(3) Implement the message producer (Producer)

import kafka.javaapi.producer.Producer;

import kafka.producer.KeyedMessage;

import kafka.producer.ProducerConfig;

import java.util.Properties;

public class KafkaProducer extends Thread{

private Producer<Integer, String> producer;

private String topic;

public KafkaProducer(String topic){

Properties properties = new Properties();

properties.put("serializer.class", "kafka.serializer.StringEncoder");

properties.put("metadata.broker.list", KafkaConf.brokerList);

producer = new Producer<>(new ProducerConfig(properties));

this.topic = topic;

}

@Override

public void run(){

int messageNo = 1;

while(true){

String message = "Message_" + messageNo;

System.out.println("Send: " + message);

producer.send(new KeyedMessage<>(topic, message));

messageNo++;

try{

sleep(3000);

}catch (InterruptedException e){

e.printStackTrace();

}

}

}

}

(4) Implement the message consumer (Consumer)

import kafka.consumer.Consumer;

import kafka.consumer.ConsumerConfig;

import kafka.consumer.ConsumerIterator;

import kafka.consumer.KafkaStream;

import kafka.javaapi.consumer.ConsumerConnector;

import java.util.HashMap;

import java.util.List;

import java.util.Map;

import java.util.Properties;

public class KafkaConsumer extends Thread{

private ConsumerConnector consumer;

private String topic;

public KafkaConsumer(String topic){

Properties properties = new Properties();

properties.put("zookeeper.connect", KafkaConf.zookeeperConnect);

properties.put("group.id", KafkaConf.groupId);

properties.put("zookeeper.session.timeout.ms", KafkaConf.zkSessionTimeout);

properties.put("zookeeper.sync.time.ms", KafkaConf.zkSyncTime);

properties.put("auto.commit.interval.ms", KafkaConf.reconnectIntervel);

consumer = Consumer.createJavaConsumerConnector(new ConsumerConfig(properties));

this.topic = topic;

}

@Override

public void run(){

Map<String, Integer> topicCountMap = new HashMap<>();

topicCountMap.put(topic, 1);

Map<String, List<KafkaStream<byte[], byte[]>>> consumerMap = consumer.createMessageStreams(topicCountMap);

KafkaStream<byte[], byte[]> stream = consumerMap.get(topic).get(0);

ConsumerIterator<byte[], byte[]> it = stream.iterator();

while(it.hasNext()){

System.out.println("Receive: " + new String(it.next().message()));

try{

sleep(1000);

}catch (InterruptedException e){

e.printStackTrace();

}

}

}

}

(5) Start the client program to produce and consume messages

public class Main {

public static void main(String[] args){

KafkaProducer producerThread = new KafkaProducer(KafkaConf.topic1);

producerThread.start();

KafkaConsumer consumerThread = new KafkaConsumer(KafkaConf.topic1);

consumerThread.start();

}

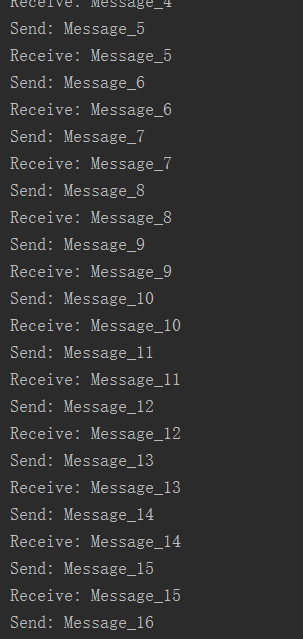

}程序运行结果如下:

消息生产者消费一条“Message+编号”,消息消费者消费该条消息
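The producer thread in this approach loops forever and only prints the stack trace when interrupted, so the program never stops on its own. The sketch below shows the same two-thread pattern with a bounded message count and interrupt-aware loops. It is not Kafka code: a `LinkedBlockingQueue` stands in for the broker so the example runs with the JDK alone, and every name in it (`ThreadedDemo`, `producer`, `consumer`, `run`) is hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;

public class ThreadedDemo {

    // Sends "Message_1" .. "Message_<count>" into the channel, then exits.
    static Thread producer(BlockingQueue<String> channel, int count) {
        return new Thread(() -> {
            for (int messageNo = 1; messageNo <= count; messageNo++) {
                try {
                    channel.put("Message_" + messageNo);
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt(); // restore the flag and stop
                    return;
                }
            }
        });
    }

    // Collects messages until 'count' have arrived or nothing shows up for one second.
    static Thread consumer(BlockingQueue<String> channel, int count, List<String> received) {
        return new Thread(() -> {
            try {
                while (received.size() < count) {
                    String message = channel.poll(1, TimeUnit.SECONDS);
                    if (message == null) {
                        return; // timed out waiting for the next message
                    }
                    received.add(message);
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
    }

    // Starts both threads, waits for them to finish, and returns what was consumed.
    static List<String> run(int count) {
        BlockingQueue<String> channel = new LinkedBlockingQueue<>();
        List<String> received = new ArrayList<>();
        Thread producerThread = producer(channel, count);
        Thread consumerThread = consumer(channel, count, received);
        consumerThread.start();
        producerThread.start();
        try {
            producerThread.join();
            consumerThread.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for the threads", e);
        }
        return received;
    }

    public static void main(String[] args) {
        for (String message : run(3)) {
            System.out.println("Receive: " + message);
        }
    }
}
```

Restoring the interrupt flag and returning lets the caller shut the threads down cleanly, which a `while(true)` loop that swallows `InterruptedException` does not allow.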

