regress.py

Standard regression

#!/usr/bin/python
# -*- coding: utf-8 -*-

from numpy import *

# Load a tab-separated data file.
# All columns except the last are features (x); the last column is the target (y).
def loadDataSet(fileName):
    numFeat = len(open(fileName).readline().split('\t')) - 1
    dataMat = []
    labelMat = []
    fr = open(fileName)
    for line in fr.readlines():
        lineArr = []
        curLine = line.strip().split('\t')
        for i in range(numFeat):
            lineArr.append(float(curLine[i]))
        dataMat.append(lineArr)
        labelMat.append(float(curLine[-1]))
    return dataMat, labelMat
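For comparison, the same tab-separated layout can probably be read in one call with `numpy.loadtxt`. The sketch below is only an illustration: the helper name `load_with_loadtxt` and the two-row sample file are made up for this example and are not part of the original listing.

```python
import os
import tempfile

import numpy as np


def load_with_loadtxt(file_name):
    """Read a tab-separated numeric file; the last column is the target."""
    data = np.loadtxt(file_name, delimiter='\t', ndmin=2)
    return data[:, :-1], data[:, -1]


# Write a tiny made-up sample file so the example is self-contained.
tmp = tempfile.NamedTemporaryFile(mode='w', suffix='.txt', delete=False)
tmp.write("1.0\t0.5\t3.2\n1.0\t0.9\t4.1\n")
tmp.close()

X, y = load_with_loadtxt(tmp.name)
os.remove(tmp.name)
print(X.shape, y.shape)
```

Unlike `loadDataSet`, this returns NumPy arrays rather than nested Python lists.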

# Standard (ordinary least squares) regression
def standRegress(xArr, yArr):
    xMat = mat(xArr)
    yMat = mat(yArr).T
    xTx = xMat.T * xMat
    if linalg.det(xTx) == 0.0:  # if the determinant is 0, the inverse does not exist
        print("This matrix is singular, cannot do inverse")
        return
    ws = xTx.I * (xMat.T * yMat)  # regression coefficients
    return ws
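`standRegress` solves the normal equations by explicitly inverting `xTx`, and its exact comparison of the determinant with 0.0 only catches matrices that are exactly singular. As a rough cross-check, the sketch below fits made-up synthetic data (not `ex0.txt`) both through the normal equations and through `numpy.linalg.lstsq`. The data, seed, and variable names are assumptions for illustration only.

```python
import numpy as np

rng = np.random.RandomState(0)
x = rng.uniform(0.0, 1.0, size=50)
X = np.column_stack([np.ones_like(x), x])  # first column is the constant term
y = 3.0 + 1.7 * x + rng.normal(0.0, 0.05, size=50)

# Normal equations, as in standRegress: solve (X^T X) w = X^T y
w_normal = np.linalg.solve(X.T.dot(X), X.T.dot(y))

# Least-squares solver, which avoids forming an explicit inverse
w_lstsq = np.linalg.lstsq(X, y, rcond=None)[0]

print(w_normal)
print(w_lstsq)
```

On well-conditioned data like this the two results should agree to many decimal places; `lstsq` is the more forgiving choice when the columns of `X` are nearly dependent.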

#测试标准回归函数

def testStandRetress(xArr, yArr):

xArr, yArr = loadDataSet('ex0.txt')

ws = standRegress(xArr, yArr)

print ws

xMat = mat(xArr)

yMat = mat(yArr) #真实值

import matplotlib.pyplot as plt

fig = plt.figure()

ax = fig.add_subplot(111)

#原始图像

ax.scatter(xMat[:,1].flatten().A[0], yMat.T[:,0].flatten().A[0])

xCopy = xMat.copy()

xCopy.sort(0)

yHat = xCopy * ws #预测值

#预测图像

ax.plot(xCopy[:,1], yHat)

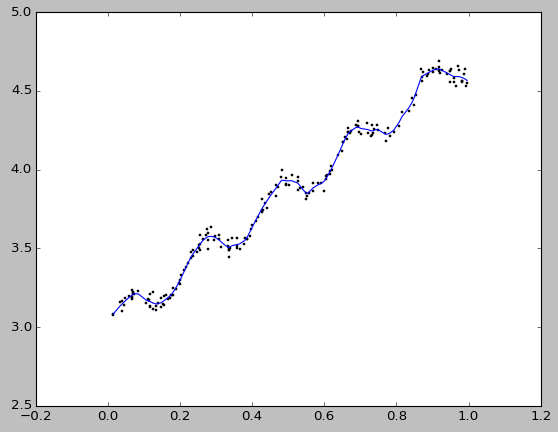

plt.show()测试:

>>> from regress import *
>>> xArr, yArr = loadDataSet('ex0.txt')
>>> testStandRetress(xArr, yArr)
w= [[ 3.00774324]
 [ 1.69532264]]

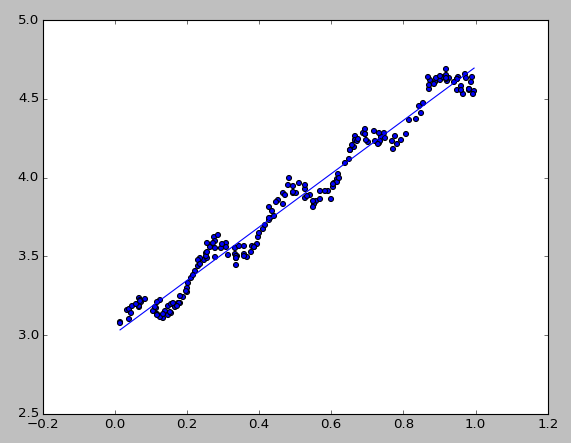
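The session output also reports a correlation matrix between the predictions and the true values, a step the listing itself does not show. A minimal sketch of how such a matrix could be obtained with `numpy.corrcoef` follows. It uses made-up synthetic data rather than `ex0.txt`, so the printed numbers will differ from the ones in this post.

```python
import numpy as np

rng = np.random.RandomState(1)
x = rng.uniform(0.0, 1.0, size=100)
X = np.column_stack([np.ones_like(x), x])
y = 3.0 + 1.7 * x + rng.normal(0.0, 0.1, size=100)

ws = np.linalg.lstsq(X, y, rcond=None)[0]
y_hat = X.dot(ws)  # predicted values

# 2x2 matrix: the diagonal is 1, the off-diagonal entries are corr(y_hat, y)
corr = np.corrcoef(y_hat, y)
print(corr)
```

With the matrix-based code of the listing, both arguments would need to be row vectors, for example `corrcoef(yHat.T, yMat)` with `yHat` a column matrix and `yMat` a row matrix.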

corrcoef= [[ 1. 0.98647356]

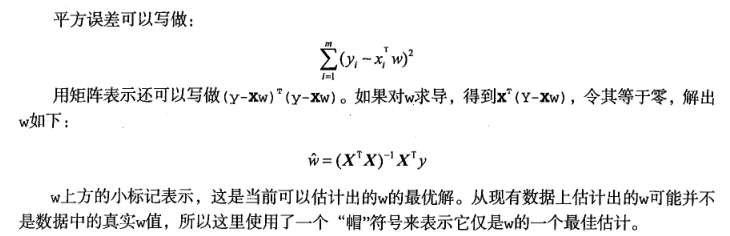

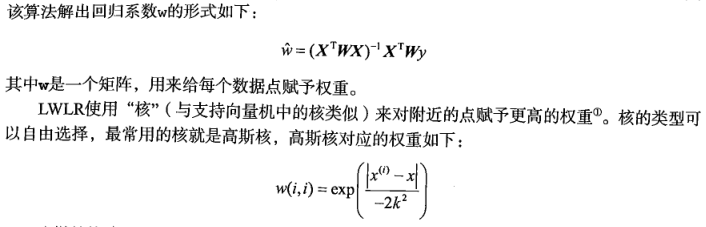

[ 0.98647356 1. ]] #yHat与yMat的相关系数为0.986局部加权线性回归

# Locally weighted linear regression
# k controls how much weight is given to points near the test point
def lwlr(testPoint, xArr, yArr, k=1.0):
    xMat = mat(xArr)
    yMat = mat(yArr).T
    m = shape(xMat)[0]
    weights = mat(eye((m)))  # identity matrix
    for j in range(m):
        diffMat = testPoint - xMat[j, :]
        # the weight decays exponentially with the squared distance from testPoint
        weights[j, j] = exp(diffMat * diffMat.T / (-2.0 * k ** 2))
    xTx = xMat.T * (weights * xMat)
    if linalg.det(xTx) == 0.0:
        print("This matrix is singular, cannot do inverse")
        return
    ws = xTx.I * (xMat.T * (weights * yMat))
    return testPoint * ws
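To get a feel for the role of `k`, the sketch below evaluates the same Gaussian weight formula for a few distances. It is a simplified one-dimensional illustration: the helper name `gaussian_weights` and the sample points are made up here and do not appear in the listing.

```python
import numpy as np


def gaussian_weights(test_x, xs, k):
    """Weight of each training point xs[j] relative to test_x (1-D features)."""
    diff = xs - test_x
    return np.exp(-(diff ** 2) / (2.0 * k ** 2))


xs = np.array([0.0, 0.1, 0.5, 1.0])
for k in (1.0, 0.5, 0.01):
    print(k, gaussian_weights(0.0, xs, k))
```

With `k=1.0` even the farthest point keeps a weight of about 0.6, so the fit behaves much like ordinary regression. With `k=0.01` every point other than the test point itself gets a weight that is practically 0, which is why small `k` follows the data so closely.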

# Test the locally weighted linear regression function
def lwlrTest(xArr, yArr):
    print("yArr[0] %s" % yArr[0])
    print("yHrr[0], k=0.01 %s" % (lwlr(xArr[0], xArr, yArr, 0.01),))
    print("yHrr[0], k=0.5 %s" % (lwlr(xArr[0], xArr, yArr, 0.5),))
    print("yHrr[0], k=1 %s" % (lwlr(xArr[0], xArr, yArr, 1),))
    m = shape(xArr)[0]
    yHat = zeros(m)
    for i in range(m):
        yHat[i] = lwlr(xArr[i], xArr, yArr, k=0.01)
    xMat = mat(xArr)
    srtInd = xMat[:,1].argsort(0)  # sort by x
    xSort = xMat[srtInd][:,0,:]
    import matplotlib.pyplot as plt
    fig = plt.figure()
    ax = fig.add_subplot(111)
    ax.plot(xSort[:,1], yHat[srtInd])  # predicted curve
    ax.scatter(xMat[:,1].flatten().A[0], mat(yArr).T.flatten().A[0], s=2, c='red')  # true data
    plt.show()

Test:

>>> import regress

>>> xArr, yArr = loadDataSet('ex0.txt')

>>> lwlrTest(xArr, yArr)

yArr[0] 3.176513

yHrr[0], k=0.01 [[ 3.20366661]]

yHrr[0], k=0.5 [[ 3.12201662]]

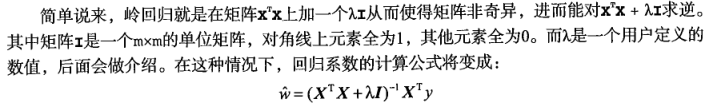

yHrr[0], k=1 [[ 3.12204471]]缩减法:岭回归

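Shrinkage methods penalize large coefficients, so coefficients that contribute little are driven to 0 or very close to it.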

# Shrinkage: ridge regression
def ridgeRegres(xMat, yMat, lam=0.2):
    xTx = xMat.T * xMat
    denom = xTx + eye(shape(xMat)[1]) * lam  # add lam to the diagonal of xTx
    if linalg.det(denom) == 0.0:
        print("This matrix is singular, cannot do inverse")
        return
    ws = denom.I * (xMat.T * yMat)
    return ws
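One practical consequence of adding `lam` to the diagonal is that the system stays solvable even when `xTx` alone is singular. The sketch below illustrates this with a deliberately degenerate, made-up data set in which the same feature appears twice. The data and variable names are assumptions for the example, not taken from the listing.

```python
import numpy as np

rng = np.random.RandomState(0)
x = rng.normal(size=30)
X = np.column_stack([x, x])          # two identical columns: X^T X is singular
y = 2.0 * x + rng.normal(0.0, 0.1, size=30)

xTx = X.T.dot(X)
print(np.linalg.matrix_rank(xTx))    # rank 1, so xTx has no inverse

lam = 0.2
w = np.linalg.solve(xTx + lam * np.eye(2), X.T.dot(y))
print(w)                             # the weight is split evenly across the two columns
```

For any `lam > 0` the matrix `xTx + lam * I` is positive definite, so the determinant check in `ridgeRegres` should only ever trigger when `lam` is 0.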

def ridgeTest(xArr, yArr):
    xMat = mat(xArr)
    yMat = mat(yArr).T
    # standardize the data
    yMean = mean(yMat, 0)  # mean
    xMean = mean(xMat, 0)
    yMat = yMat - yMean
    xVar = var(xMat, 0)  # variance
    xMat = (xMat - xMean) / xVar
    numTestPts = 30
    wMat = zeros((numTestPts, shape(xMat)[1]))
    for i in range(numTestPts):  # call ridgeRegres with 30 different lambda values
        ws = ridgeRegres(xMat, yMat, exp(i - 10))  # lambda varies exponentially
        wMat[i, :] = ws.T  # collect all regression coefficients in one matrix
    print(wMat)
    import matplotlib.pyplot as plt
    fig = plt.figure()
    ax = fig.add_subplot(111)
    ax.plot(wMat)
    plt.show()
    return wMat  # crossValidation needs the coefficient matrix
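The `crossValidation` and `stageWise` functions later in this listing call two helpers, `rssError` and `regularize`, that the listing never defines. The sketch below is one plausible pair of definitions, written on the assumption that `regularize` should apply the same centering and variance scaling that `ridgeTest` performs inline. Treat it as a stand-in rather than the author's code.

```python
import numpy as np


def rssError(yArr, yHatArr):
    """Residual sum of squares between true and predicted values."""
    return ((yArr - yHatArr) ** 2).sum()


def regularize(xMat):
    """Center each column and divide by its variance, as ridgeTest does."""
    inMat = xMat.copy()
    inMeans = np.mean(inMat, 0)
    inVar = np.var(inMat, 0)
    return (inMat - inMeans) / inVar


print(rssError(np.array([1.0, 2.0]), np.array([1.0, 4.0])))
```

Both helpers accept either plain arrays or the matrix objects used in the rest of the file, since they rely only on elementwise arithmetic.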

# Cross-validate ridge regression
# numVal is the number of cross-validation rounds
def crossValidation(xArr, yArr, numVal=10):
    m = len(yArr)
    indexList = list(range(m))
    errorMat = zeros((numVal, 30))
    for i in range(numVal):
        # containers for the training and test sets
        trainX = []
        trainY = []
        testX = []
        testY = []
        random.shuffle(indexList)
        for j in range(m):
            # split the data into a training set and a test set
            if j < m * 0.9:  # 90% of the data for training, 10% for testing
                trainX.append(xArr[indexList[j]])
                trainY.append(yArr[indexList[j]])
            else:
                testX.append(xArr[indexList[j]])
                testY.append(yArr[indexList[j]])
        wMat = ridgeTest(trainX, trainY)  # coefficients for all 30 lambda values
        # standardize the test data with the parameters of the training data
        for k in range(30):
            matTestX = mat(testX)
            matTrainX = mat(trainX)
            meanTrainX = mean(matTrainX, 0)
            varTrainX = var(matTrainX, 0)
            matTestX = (matTestX - meanTrainX) / varTrainX
            yEst = matTestX * mat(wMat[k,:]).T + mean(trainY)
            errorMat[i,k] = rssError(yEst.T.A, array(testY))  # error
    meanErrors = mean(errorMat, 0)
    minMean = float(min(meanErrors))
    bestWeights = wMat[nonzero(meanErrors == minMean)]
    xMat = mat(xArr)
    yMat = mat(yArr).T
    meanX = mean(xMat, 0)
    varX = var(xMat, 0)
    # the data were standardized, so map the weights back to the original scale
    unReg = bestWeights / varX
    print("the best model from Ridge Regression is:\n%s" % (unReg,))
    print("with constant term: %s" % (-1 * sum(multiply(meanX, unReg)) + mean(yMat)))

Test:

>>> from regress import *

>>> xArr, yArr = loadDataSet('abalone.txt')

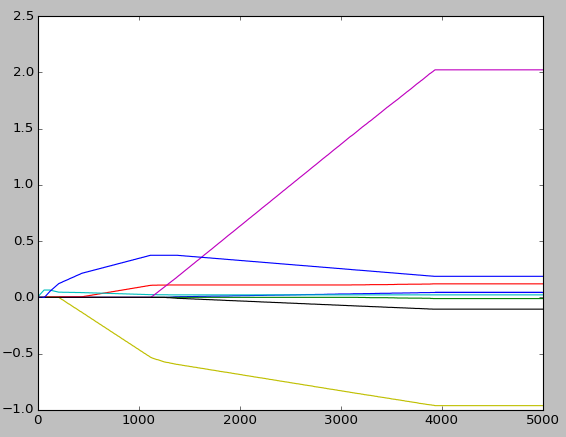

>>> ridgeTest(xArr, yArr)

[[ 4.30441949e-02 -2.27416346e-02 1.32140875e-01 2.07518171e-02 2.22403745e+00 -9.98952980e-01 -1.17254237e-01 1.66229222e-01]

[ 4.30441928e-02 -2.27416370e-02 1.32140878e-01 2.07518175e-02 2.22403626e+00 -9.98952746e-01 -1.17254174e-01 1.66229339e-01]

[ 4.30441874e-02 -2.27416435e-02 1.32140885e-01 2.07518187e-02 2.22403305e+00 -9.98952110e-01 -1.17254003e-01 1.66229656e-01]

... ...

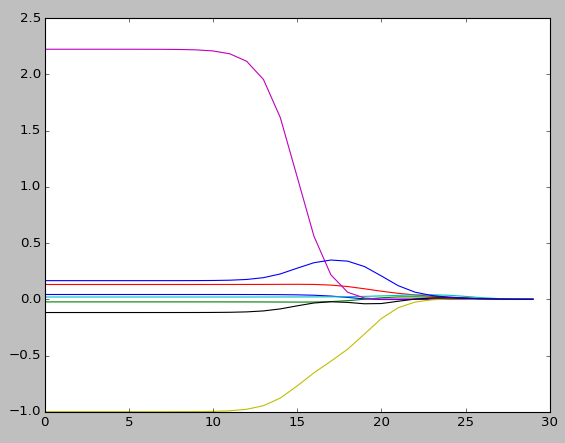

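The plot shows the coefficients of the 8 features as a function of log(lambda). At the far left, where lambda is smallest, all coefficients match those of ordinary linear regression; at the far right, they have all shrunk to 0. Some value of lambda in between gives the best predictive performance.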
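The two ends of that plot can be checked numerically. The sketch below reuses the `exp(i - 10)` schedule from `ridgeTest` on made-up synthetic data (not `abalone.txt`) and compares the first and last rows of the coefficient path with the ordinary least-squares solution and with 0. All names and data here are assumptions for illustration.

```python
import numpy as np

rng = np.random.RandomState(0)
X = rng.normal(size=(60, 4))
X = X - X.mean(axis=0)                      # center the features
y = X.dot(np.array([1.5, 0.0, -2.0, 0.7])) + rng.normal(0.0, 0.1, size=60)
y = y - y.mean()                            # center the target

w_ols = np.linalg.lstsq(X, y, rcond=None)[0]

num_pts = 30
w_path = np.zeros((num_pts, X.shape[1]))
for i in range(num_pts):
    lam = np.exp(i - 10)                    # same lambda schedule as ridgeTest
    w_path[i] = np.linalg.solve(X.T.dot(X) + lam * np.eye(X.shape[1]), X.T.dot(y))

print(np.abs(w_path[0] - w_ols).max())      # gap to OLS at the smallest lambda
print(np.abs(w_path[-1]).max())             # largest coefficient at the biggest lambda
```

Both printed values should be very small, which mirrors the left and right edges of the plot. Picking the lambda in between is what `crossValidation` is for.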

>>> crossValidation(xArr, yArr, numVal = 10)

the best model from Ridge Regression is:

[[ 0.0823653 -3.91472054 16.9506189 10.7538564 8.09628256 -19.35008479 -7.85980015 9.56536757]]

with constant term: 2.94541561804

Shrinkage: forward stagewise linear regression

# Shrinkage: forward stagewise linear regression
def stageWise(xArr, yArr, eps=0.01, numItr=100):
    xMat = mat(xArr)
    yMat = mat(yArr).T
    # standardize the data
    yMean = mean(yMat, 0)
    yMat = yMat - yMean
    xMat = regularize(xMat)
    m, n = shape(xMat)
    returnMat = zeros((numItr, n))
    ws = zeros((n, 1))
    wsTest = ws.copy()
    wsMax = ws.copy()
    for i in range(numItr):
        print(ws.T)
        lowestError = inf
        for j in range(n):  # for each feature
            for sign in [-1, 1]:  # try increasing and decreasing this feature's weight
                wsTest = ws.copy()
                wsTest[j] += eps * sign
                yTest = xMat * wsTest
                rssE = rssError(yMat.A, yTest.A)
                # keep the change that gives the lowest error so far
                if rssE < lowestError:
                    lowestError = rssE
                    wsMax = wsTest
        ws = wsMax.copy()
        returnMat[i, :] = ws.T
    return returnMat
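The greedy loop is easier to follow on a toy problem where the answer is known in advance. The sketch below is an array-based variant written for this illustration (the function name `stagewise`, the synthetic data, and the unit-variance scaling are assumptions, not the listing's code). The target depends only on the first of two features, so the weights should settle near 1 and 0.

```python
import numpy as np


def stagewise(X, y, eps=0.01, num_iter=300):
    """Greedy forward stagewise regression on standardized X and centered y."""
    n = X.shape[1]
    ws = np.zeros(n)
    history = np.zeros((num_iter, n))
    for it in range(num_iter):
        best_err, best_ws = np.inf, ws
        for j in range(n):                  # for each feature
            for sign in (-1.0, 1.0):        # try a step down and a step up
                trial = ws.copy()
                trial[j] += eps * sign
                err = ((y - X.dot(trial)) ** 2).sum()
                if err < best_err:          # keep the best single-step change
                    best_err, best_ws = err, trial
        ws = best_ws
        history[it] = ws
    return history


rng = np.random.RandomState(0)
X = rng.normal(size=(100, 2))
X = (X - X.mean(axis=0)) / X.std(axis=0)    # zero mean, unit variance
y = 1.0 * X[:, 0]                           # only the first feature matters
y = y - y.mean()

path = stagewise(X, y)
print(path[-1])
```

Because every iteration must take a step of size `eps`, the weights keep oscillating within about one step of the optimum once they reach it. That is the same back-and-forth visible in the last rows of the output further down.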

def stageWiseTest():
    xArr, yArr = loadDataSet('abalone.txt')
    wMat = stageWise(xArr, yArr, eps=0.01, numItr=200)
    print("Forward stagewise linear regression, eps=0.01, numItr=200:\n%s" % (wMat,))
    wMat = stageWise(xArr, yArr, eps=0.001, numItr=5000)
    print("Forward stagewise linear regression, eps=0.001, numItr=5000:\n%s" % (wMat,))
    # compare with ordinary least squares
    xMat = mat(xArr)
    yMat = mat(yArr).T
    yMean = mean(yMat, 0)
    xMat = regularize(xMat)
    yMat = yMat - yMean
    wMat = standRegress(xMat, yMat.T)
    print("Standard regression:\n%s" % (wMat.T,))

Test:

>>> from regress import *
>>> stageWiseTest()
Forward stagewise linear regression, eps=0.01, numItr=200:

[[ 0. 0. 0. ..., 0. 0. 0. ]

[ 0. 0. 0. ..., 0. 0. 0. ]

[ 0. 0. 0. ..., 0. 0. 0. ]

...,

[ 0.05 0. 0.09 ..., -0.64 0. 0.36]

[ 0.04 0. 0.09 ..., -0.64 0. 0.36]

[ 0.05 0. 0.09 ..., -0.64 0. 0.36]]

# Columns 2 and 7 are all 0, which suggests those features have no effect on the target

Forward stagewise linear regression, eps=0.001, numItr=5000:

[[ 0. 0. 0. ..., 0. 0. 0. ]

[ 0. 0. 0. ..., 0. 0. 0. ]

[ 0. 0. 0. ..., 0. 0. 0. ]

...,

[ 0.043 -0.011 0.12 ..., -0.963 -0.105 0.187]

[ 0.044 -0.011 0.12 ..., -0.963 -0.105 0.187]

Standard regression:

[[ 0.0430442 -0.02274163 0.13214087 0.02075182 2.22403814 -0.99895312 -0.11725427 0.16622915]]
