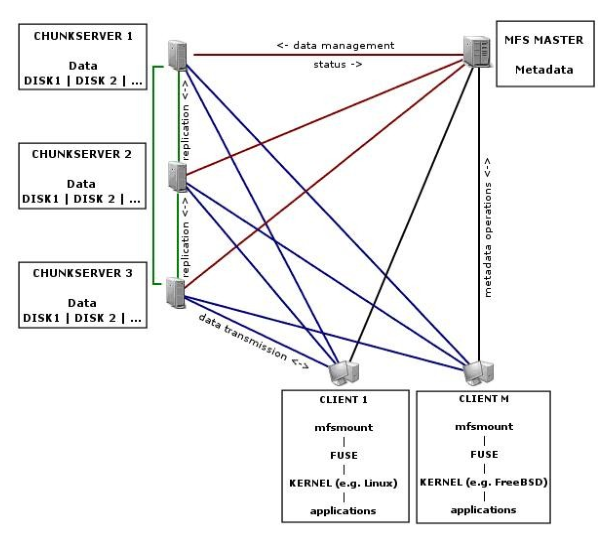

MooseFS (MFS) distributed file system

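vm1 and vm2 provide high availability for the master; vm3 and vm4 are the storage nodes (chunk servers); the physical host is the client.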

192.168.2.199 vm1.example.com

192.168.2.202 vm2.example.com

192.168.2.205 vm3.example.com

192.168.2.175 vm4.example.com

192.168.2.199 mfsmaster

VIP: 192.168.2.213
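
Every node needs the same `mfsmaster` line in /etc/hosts, and that line is later repointed to the virtual IP. A minimal sketch of an idempotent helper for this follows. The function name `set_host_entry` and its arguments are illustrative and not part of MooseFS, and it assumes `mfsmaster` sits on a line of its own, as in this article.

```shell
#!/bin/sh
# Hypothetical helper (not part of MooseFS): make NAME resolve to IP in a
# hosts file, replacing any earlier line that lists NAME.
# Assumption: NAME sits on a line of its own; a line that shares NAME with
# other aliases would be dropped as a whole.
set_host_entry() (
    ip="$1"; name="$2"; file="${3:-/etc/hosts}"
    tmp="$(mktemp)" || exit 1
    # Copy every line that does not list NAME as one of its host fields.
    awk -v n="$name" '{
        keep = 1
        for (i = 2; i <= NF; i++) if ($i == n) keep = 0
        if (keep) print
    }' "$file" > "$tmp" || exit 1
    printf '%s %s\n' "$ip" "$name" >> "$tmp"
    # Overwrite in place so the file keeps its owner and mode.
    cat "$tmp" > "$file"
    rc=$?
    rm -f "$tmp"
    exit "$rc"
)

# Usage (as root, on each node):
#   set_host_entry 192.168.2.199 mfsmaster
#   set_host_entry 192.168.2.213 mfsmaster   # once the VIP is in use
```

Running it twice leaves a single `mfsmaster` line, so it is safe to repeat on every node.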

1. Configure and start the master

lftp i:~> get pub/docs/mfs/mfs-1.6.27-1.tar.gz

[root@vm1 ~]# mv mfs-1.6.27-1.tar.gz mfs-1.6.27.tar.gz

[root@vm1 ~]# yum install -y fuse-devel

[root@vm1 ~]# rpmbuild -tb mfs-1.6.27.tar.gz

[root@vm1 ~]# cd rpmbuild/RPMS/x86_64/

[root@vm1 x86_64]# rpm -ivh mfs-cgi-1.6.27-2.x86_64.rpm mfs-cgiserv-1.6.27-2.x86_64.rpm mfs-master-1.6.27-2.x86_64.rpm

[root@vm1 x86_64]# cd /etc/mfs/

[root@vm1 mfs]# cp mfsmaster.cfg.dist mfsmaster.cfg

[root@vm1 mfs]# cp mfsexports.cfg.dist mfsexports.cfg

[root@vm1 mfs]# cp mfstopology.cfg.dist mfstopology.cfg

[root@vm1 mfs]# cd /var/lib/mfs/

[root@vm1 mfs]# cp metadata.mfs.empty metadata.mfs

[root@vm1 mfs]# chown -R nobody .

[root@vm1 mfs]# vim /etc/hosts

192.168.2.199 mfsmaster

[root@vm1 mfs]# mfsmaster    # start mfsmaster

Start mfscgiserv:

[root@vm1 mfs]# cd /usr/share/mfscgi/

[root@vm1 mfscgi]# chmod +x *.cgi

[root@vm1 mfscgi]# mfscgiserv

From the physical host, browse to 192.168.2.199:9425.

2. Configure the storage nodes (chunk servers)

[root@vm1 ~]# scp rpmbuild/RPMS/x86_64/mfs-chunkserver-1.6.27-2.x86_64.rpm vm3.example.com:

[root@vm1 ~]# scp rpmbuild/RPMS/x86_64/mfs-chunkserver-1.6.27-2.x86_64.rpm vm4.example.com:

Apply the same configuration on both nodes:

[root@vm4 ~]# rpm -ivh mfs-chunkserver-1.6.27-2.x86_64.rpm

[root@vm4 ~]# mkdir /mnt/chunk1

[root@vm4 ~]# mkdir /var/lib/mfs

[root@vm4 ~]# chown nobody /mnt/chunk1/ /var/lib/mfs/

[root@vm4 ~]# cd /etc/mfs/

[root@vm4 mfs]# cp mfschunkserver.cfg.dist mfschunkserver.cfg

[root@vm4 mfs]# cp mfshdd.cfg.dist mfshdd.cfg

[root@vm4 mfs]# vim mfshdd.cfg

/mnt/chunk1

[root@vm4 mfs]# vim /etc/hosts

192.168.2.199 mfsmaster

[root@vm4 mfs]# mfschunkserver

Then refresh the 192.168.2.199:9425 page to see the storage nodes listed.

3. Configure the client

[root@vm1 x86_64]# scp mfs-client-1.6.27-2.x86_64.rpm 192.168.2.168:

[root@ankse ~]# rpm -ivh mfs-client-1.6.27-2.x86_64.rpm

[root@ankse ~]# cd /etc/mfs/

[root@ankse mfs]# cp mfsmount.cfg.dist mfsmount.cfg

[root@ankse mfs]# vim mfsmount.cfg

/mnt/mfs

[root@ankse mfs]# vim /etc/hosts

192.168.2.199 mfsmaster

[root@ankse mfs]# mkdir /mnt/mfs

[root@ankse mfs]# mfsmount    # mounts MFS on /mnt/mfs, as set in mfsmount.cfg

Testing

[root@ankse mfs]# mfssetgoal -r 2 dir2/    # keep 2 copies of every file under dir2

[root@ankse mfs]# mfsgetgoal dir1/

dir1/: 1

[root@ankse mfs]# mfsgetgoal dir2/

dir2/: 2

[root@ankse mfs]# cp /etc/passwd dir1/

[root@ankse mfs]# cp /etc/fstab dir2/

[root@ankse mfs]# mfsfileinfo dir1/passwd

dir1/passwd:

chunk 0: 0000000000000001_00000001 / (id:1 ver:1)

copy 1: 192.168.2.175:9422

[root@ankse mfs]# mfsfileinfo dir2/fstab

dir2/fstab:

chunk 0: 0000000000000003_00000001 / (id:3 ver:1)

copy 1: 192.168.2.175:9422

copy 2: 192.168.2.205:9422

[root@vm3 ~]# mfschunkserver stop    # stop the service on one storage node

[root@ankse mfs]# mfsfileinfo dir2/fstab

dir2/fstab:

chunk 0: 0000000000000003_00000001 / (id:3 ver:1)

copy 1: 192.168.2.175:9422

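Start it again and both copies reappear; keeping two copies is what avoids a single point of failure.

[root@vm4 mfs]# mfschunkserver stop    # stop a chunk server again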

[root@ankse mfs]# mfsfileinfo dir1/passwd

dir1/passwd:

chunk 0: 0000000000000001_00000001 / (id:1 ver:1)

no valid copies !!!    (the file is still listed, but its contents are unavailable)

[root@ankse mfs]# mfsfileinfo dir2/fstab

dir2/fstab:

chunk 0: 0000000000000003_00000001 / (id:3 ver:1)

no valid copies !!!
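
The outputs above can also be checked mechanically. The sketch below reads `mfsfileinfo` output on stdin and prints the smallest number of `copy N:` lines found under any chunk, so `0` corresponds to the "no valid copies" case. The function name `min_copies` is illustrative, and the parsing assumes the output layout shown in this article.

```shell
#!/bin/sh
# Hypothetical helper: read `mfsfileinfo` output on stdin and print the
# lowest copy count over all chunks of the file (0 if a chunk has none,
# and also 0 if the output lists no chunk at all).
min_copies() {
    awk '
        /^[ \t]*chunk [0-9]+:/ {
            # A new chunk starts: fold the previous chunk into the minimum.
            if (seen && (min == "" || n < min)) min = n
            seen = 1
            n = 0
        }
        /^[ \t]*copy [0-9]+:/ { n++ }
        END {
            if (seen && (min == "" || n < min)) min = n
            print (min == "" ? 0 : min)
        }
    '
}

# Usage on the client:
#   mfsfileinfo dir2/fstab | min_copies
```

Comparing the result with the goal set by `mfssetgoal` shows whether a file is currently under-replicated.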

Recovering accidentally deleted files

[root@ankse ~]# mkdir /mnt/meta

[root@ankse ~]# mfsmount -m /mnt/meta/ -H mfsmaster

[root@ankse ~]# cd /mnt/meta/trash/

[root@ankse trash]# mv 0000093F\|etc\|xdg\|autostart\|pulseaudio.desktop undel/
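
Judging from the entry name in the command above, a trash entry appears to be the inode number followed by the original path, with `|` in place of `/`. Under that assumption, a small helper can print the path a trash entry will be restored to. The name `trash_entry_path` is illustrative, and a file name that itself contains `|` would be ambiguous.

```shell
#!/bin/sh
# Hypothetical helper: turn a trash entry name such as
#   0000093F|etc|xdg|autostart|pulseaudio.desktop
# into the path (relative to the MFS root) it stands for.
trash_entry_path() {
    # Drop everything up to the first "|" (the inode number), then turn
    # the remaining "|" separators back into "/".
    printf '%s\n' "${1#*|}" | tr '|' '/'
}

# Usage inside /mnt/meta/trash:
#   for e in *; do [ "$e" = undel ] || trash_entry_path "$e"; done
```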

Recovering the master

[root@vm1 ~]# mfsmetarestore -a

[root@vm1 ~]# mfsmaster

4. Build HA for the master

Stop MFS:

[root@ankse ~]# umount /mnt/mfs/

[root@vm3 chunk1]# mfschunkserver stop

[root@vm4 chunk1]# mfschunkserver stop

[root@vm1 ~]# mfsmaster stop

Create the init script:

[root@vm1 init.d]# vim mfs

#!/bin/bash

#

# Init file for the MooseFS master service

#

# chkconfig: - 92 84

#

# description: MooseFS master

#

# processname: mfsmaster

# Source function library.

. /etc/init.d/functions

# Source networking configuration.

. /etc/sysconfig/network

# Check that networking is up.

[ "${NETWORKING}" == "no" ] && exit 0

[ -x "/usr/sbin/mfsmaster" ] || exit 1

[ -r "/etc/mfs/mfsmaster.cfg" ] || exit 1

[ -r "/etc/mfs/mfsexports.cfg" ] || exit 1

RETVAL=0

prog="mfsmaster"

datadir="/var/lib/mfs"

mfsbin="/usr/sbin/mfsmaster"

mfsrestore="/usr/sbin/mfsmetarestore"

start () {

echo -n $"Starting $prog: "

$mfsbin start >/dev/null 2>&1

if [ $? -ne 0 ];then

$mfsrestore -a >/dev/null 2>&1 && $mfsbin start >/dev/null 2>&1

fi

RETVAL=$?

echo

return $RETVAL

}

stop () {

echo -n $"Stopping $prog: "

$mfsbin stop >/dev/null 2>&1 || killall -9 $prog #>/dev/null 2>&1

RETVAL=$?

echo

return $RETVAL

}

restart () {

stop

start

}

reload () {

echo -n $"reload $prog: "

$mfsbin reload >/dev/null 2>&1

RETVAL=$?

echo

return $RETVAL

}

restore () {

echo -n $"restore $prog: "

$mfsrestore -a >/dev/null 2>&1

RETVAL=$?

echo

return $RETVAL

}

case "$1" in

start)

start

;;

stop)

stop

;;

restart)

restart

;;

reload)

reload

;;

restore)

restore

;;

status)

status $prog

RETVAL=$?

;;

*)

echo $"Usage: $0 {start|stop|restart|reload|restore|status}"

RETVAL=1

esac

exit $RETVAL

[root@vm1 init.d]# chmod +x mfs

[root@vm1 init.d]# /etc/init.d/mfs start    # test the script

[root@vm1 ~]# ps aux | grep mfsmaster

[root@vm1 init.d]# /etc/init.d/mfs stop
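
Because the script is later handed to Pacemaker as an `lsb:` resource, its exit codes matter: Pacemaker expects `status` to return 3 when the service is stopped, and expects `start` on a running service and `stop` on a stopped one to return 0. A `killall` fallback, for instance, may return non-zero when nothing is running. The sketch below walks through that sequence and reports the first unexpected exit code. The function name `lsb_check` is illustrative, and it really starts and stops the service, so run it only while the cluster is not managing it.

```shell
#!/bin/sh
# Hypothetical checker: run the start/stop/status sequence an LSB init
# script is expected to honour and report the first step that deviates.
lsb_check() (
    script="$1"
    step() {   # step EXPECTED_CODE ACTION
        "$script" "$2" >/dev/null 2>&1
        rc=$?
        if [ "$rc" -ne "$1" ]; then
            echo "FAIL: $2 returned $rc, expected $1"
            return 1
        fi
    }
    step 0 stop   &&   # reach a known stopped state
    step 3 status &&   # a stopped service must report 3
    step 0 start  &&
    step 0 status &&   # a running service must report 0
    step 0 start  &&   # start while running must still succeed
    step 0 stop   &&
    step 0 stop   &&   # stop while stopped must still succeed
    echo "OK"
)

# Usage:
#   lsb_check /etc/init.d/mfs
```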

[root@vm1 init.d]# scp mfs vm2.example.com:/etc/init.d/

[root@vm1 x86_64]# scp mfs-master-1.6.27-2.x86_64.rpm mfs-cgi-1.6.27-2.x86_64.rpm mfs-cgiserv-1.6.27-2.x86_64.rpm vm2.example.com:

[root@vm2 ~]# rpm -ivh mfs-master-1.6.27-2.x86_64.rpm mfs-cgi-1.6.27-2.x86_64.rpm mfs-cgiserv-1.6.27-2.x86_64.rpm

[root@vm2 mfs]# cp mfsmaster.cfg.dist mfsmaster.cfg

[root@vm2 mfs]# cp mfsexports.cfg.dist mfsexports.cfg

[root@vm2 mfs]# cp mfstopology.cfg.dist mfstopology.cfg

[root@vm2 mfs]# cd /var/lib/mfs/

[root@vm2 mfs]# cp metadata.mfs.empty metadata.mfs

[root@vm2 mfs]# chown -R nobody .

[root@vm2 mfs]# cd /usr/share/mfscgi/

[root@vm2 mfscgi]# chmod +x *.cgi

[root@ankse ~]# vim /etc/hosts    # on every node, resolve mfsmaster to the virtual IP

192.168.2.213 mfsmaster

Reset the Pacemaker configuration

[root@vm1 ~]# /etc/init.d/corosync start    # start corosync on both nodes first

crm(live)resource# stop vip

crm(live)configure# delete vip

crm(live)configure# delete webdata

crm(live)configure# delete website

crm(live)configure# show

node vm1.example.com

node vm2.example.com

primitive vmfence stonith:fence_xvm \

params pcmk_host_map="vm1.example.com:vm1;vm2.example.com:vm2" \

op monitor interval="60s" \

meta target-role="Started"

property $id="cib-bootstrap-options" \

dc-version="1.1.10-14.el6-368c726" \

cluster-infrastructure="classic openais (with plugin)" \

expected-quorum-votes="2" \

stonith-enabled="true" \

no-quorum-policy="ignore"

crm(live)configure# commit

[root@vm1 ~]# /etc/init.d/corosync stop    # stop corosync

5. Install DRBD to hold the files the master uses

lftp i:~> get pub/docs/drbd/rhel6/drbd-8.4.3.tar.gz

[root@vm1 ~]# tar zxf drbd-8.4.3.tar.gz

[root@vm1 ~]# cd drbd-8.4.3

[root@vm1 drbd-8.4.3]# yum install -y flex kernel-devel

[root@vm1 drbd-8.4.3]# ./configure --enable-spec --with-km

[root@vm1 drbd-8.4.3]# cp ../drbd-8.4.3.tar.gz /root/rpmbuild/SOURCES/

[root@vm1 drbd-8.4.3]# rpmbuild -bb drbd.spec

[root@vm1 drbd-8.4.3]# rpmbuild -bb drbd-km.spec

[root@vm1 ~]# cd rpmbuild/RPMS/x86_64/

[root@vm1 x86_64]# rpm -ivh drbd-*

[root@vm1 x86_64]# scp drbd-* vm2.example.com:

[root@vm2 ~]# rpm -ivh drbd-*

Then add a 2 GB virtual disk to each of vm1 and vm2.

[root@vm1 ~]# vim /etc/drbd.d/mfsdata.res

resource mfsdata {

meta-disk internal;

device /dev/drbd1;

syncer {

verify-alg sha1;

}

on vm1.example.com {

disk /dev/vdb1;

address 192.168.2.199:7789;

}

on vm2.example.com {

disk /dev/vdb1;

address 192.168.2.202:7789;

}

}

[root@vm1 ~]# scp /etc/drbd.d/mfsdata.res vm2.example.com:/etc/drbd.d/

Create the /dev/vdb1 partition. Do the following on both nodes:

[root@vm1 ~]# fdisk -cu /dev/vdb

[root@vm1 ~]# drbdadm create-md mfsdata

[root@vm1 ~]# /etc/init.d/drbd start
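
Before promoting a node it helps to confirm that both sides are connected and still Secondary. A minimal sketch for reading one field out of /proc/drbd follows. It assumes the DRBD 8.4 layout, in which each minor has a line such as ` 1: cs:Connected ro:Secondary/Secondary ds:Inconsistent/Inconsistent C r-----` (an illustrative sample, not captured from these hosts). The function name `drbd_field` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: read /proc/drbd-style text on stdin and print one
# field (cs, ro or ds) of the given minor number.
drbd_field() (   # drbd_field MINOR FIELD
    awk -v m="$1:" -v f="$2:" '
        $1 == m {
            # Fields look like "ro:Primary/Secondary"; print what follows
            # the "name:" prefix of the requested one.
            for (i = 2; i <= NF; i++)
                if (index($i, f) == 1) print substr($i, length(f) + 1)
        }
    '
)

# Usage on either node (minor 1 matches /dev/drbd1):
#   drbd_field 1 cs < /proc/drbd
#   drbd_field 1 ro < /proc/drbd
```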

Make node 1 the primary and format the device:

[root@vm1 ~]# drbdsetup primary /dev/drbd1 --force

[root@vm1 ~]# mkfs.ext4 /dev/drbd1

Move the MFS files onto the DRBD device:

[root@vm1 ~]# mount /dev/drbd1 /mnt/

[root@vm1 ~]# cd /var/lib/mfs/

[root@vm1 mfs]# mv * /mnt/

[root@vm1 mfs]# cd /mnt/

[root@vm1 mnt]# chown nobody .

[root@vm1 ~]# umount /mnt/

[root@vm1 ~]# drbdadm secondary mfsdata

Check from the other node:

[root@vm2 ~]# drbdadm primary mfsdata

[root@vm2 ~]# mount /dev/drbd1 /var/lib/mfs/

[root@vm2 ~]# cd /var/lib/mfs/

[root@vm2 mfs]# ls

changelog.2.mfs changelog.6.mfs metadata.mfs metadata.mfs.empty stats.mfs

changelog.3.mfs lost+found metadata.mfs.back.1 sessions.mfs

[root@vm2 ~]# umount /var/lib/mfs/

Shut down the iSCSI client:

[root@vm1 ~]# iscsiadm -m node -u

[root@vm1 ~]# iscsiadm -m node -o delete

[root@vm1 ~]# /etc/init.d/iscsi stop

[root@vm1 ~]# chkconfig iscsi off

[root@vm1 ~]# chkconfig iscsid off

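6. Add the resources to corosync

DRBD resource used by mfsmaster:

crm(live)configure# primitive MFSDATA ocf:linbit:drbd params drbd_resource=mfsdata

File system resource:

crm(live)configure# primitive MFSfs ocf:heartbeat:Filesystem params device=/dev/drbd1 directory=/var/lib/mfs fstype=ext4

Master/slave set for DRBD:

crm(live)configure# ms mfsdataclone MFSDATA meta master-max=1 master-node-max=1 clone-max=2 clone-node-max=1 notify=true

mfsmaster resource:

crm(live)configure# primitive mfsmaster lsb:mfs op monitor interval=30s

The vip resource was deleted in step 4, so define it again before grouping (parameters as in the configuration listing in this section):

crm(live)configure# primitive vip ocf:heartbeat:IPaddr2 params ip=192.168.2.213 cidr_netmask=32 op monitor interval=30s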

crm(live)configure# group mfsgrp vip MFSfs mfsmaster

crm(live)configure# colocation mfs-with-drbd inf: mfsgrp mfsdataclone:Master

crm(live)configure# order mfs-after-drbd inf: mfsdataclone:promote mfsgrp:start

crm(live)configure# commit

The cluster status then looks like this:

Online: [ vm1.example.com vm2.example.com ]

vmfence (stonith:fence_xvm): Started vm1.example.com

Master/Slave Set: mfsdataclone [MFSDATA]

Masters: [ vm1.example.com ]

Slaves: [ vm2.example.com ]

Resource Group: mfsgrp

vip (ocf::heartbeat:IPaddr2): Started vm1.example.com

MFSfs (ocf::heartbeat:Filesystem): Started vm1.example.com

mfsmaster (lsb:mfs): Started vm1.example.com

The configuration is as follows:

node vm1.example.com

node vm2.example.com

primitive MFSDATA ocf:linbit:drbd \

params drbd_resource="mfsdata"

primitive MFSfs ocf:heartbeat:Filesystem \

params device="/dev/drbd1" directory="/var/lib/mfs" fstype="ext4"

primitive mfsmaster lsb:mfs \

op monitor interval="30s"

primitive vip ocf:heartbeat:IPaddr2 \

params ip="192.168.2.213" cidr_netmask="32" \

op monitor interval="30s"

primitive vmfence stonith:fence_xvm \

params pcmk_host_map="vm1.example.com:vm1;vm2.example.com:vm2" \

op monitor interval="60s" \

meta target-role="Started"

group mfsgrp vip MFSfs mfsmaster

ms mfsdataclone MFSDATA \

meta master-max="1" master-node-max="1" clone-max="2" clone-node-max="1" notify="true"

colocation mfs-with-drbd inf: mfsgrp mfsdataclone:Master

order mfs-after-drbd inf: mfsdataclone:promote mfsgrp:start

property $id="cib-bootstrap-options" \

dc-version="1.1.10-14.el6-368c726" \

cluster-infrastructure="classic openais (with plugin)" \

expected-quorum-votes="2" \

stonith-enabled="true" \

no-quorum-policy="ignore"

Testing high availability

[root@vm1 ~]# /etc/init.d/corosync start

[root@vm2 ~]# /etc/init.d/corosync start

[root@vm3 ~]# mfschunkserver

[root@vm4 ~]# mfschunkserver

[root@ankse ~]# mfsmount

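Stop corosync on vm1: vm2 takes over all resources, and the status shows OFFLINE: [ vm1.example.com ], Masters: [ vm2.example.com ], Stopped: [ vm1.example.com ]. Then start corosync on vm1 again, and stop and restart corosync on vm2 so that the resources run on vm1 once more.

On the client, run a large write:

[root@ankse dir2]# dd if=/dev/zero of=bigfile bs=1M count=300

If the mfs service on vm1 is stopped while this runs, fencing reboots vm1 and the resources move to the other node. (In this run vm3 crashed, possibly because its snapshot was full; a file kept in two copies loses one copy in that case.) If corosync is stopped instead, the resources also move to the other node. In neither case are the client's files affected.

Next, test with reads: keep running cat on fstab from the client while corosync is stopped on the master. There is a brief delay during the switchover.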
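
To put a rough number on that switchover delay, the client can time repeated reads. The sketch below reads a file a fixed number of times and prints the slowest read in whole seconds. The function name `probe_reads` and the `PROBE_INTERVAL` variable are illustrative, and it assumes a read simply blocks while the master is unavailable, so the slowest read approximates the pause.

```shell
#!/bin/sh
# Hypothetical probe: read FILE COUNT times and print the slowest read in
# whole seconds. Resolution is one second, so a result of 0 or 1 means
# no noticeable pause.
probe_reads() (
    file="$1"
    count="${2:-60}"
    worst=0
    i=0
    while [ "$i" -lt "$count" ]; do
        t0=$(date +%s)
        cat "$file" >/dev/null 2>&1
        t1=$(date +%s)
        d=$((t1 - t0))
        if [ "$d" -gt "$worst" ]; then worst=$d; fi
        i=$((i + 1))
        sleep "${PROBE_INTERVAL:-1}"   # pause between reads, in seconds
    done
    echo "$worst"
)

# Usage on the client, started just before stopping corosync on the master:
#   probe_reads /mnt/mfs/dir2/fstab 120
```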

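Fence test: bring down eth0 on vm1 and vm1 is rebooted. DRBD must be running on vm2 while this happens. Once vm1 is back up, start corosync on it; drbd starts at boot here, and corosync can be set to start at boot as well.

Summary: when I repeated this later on my own machine I ran into problems that forced me to reinstall the master: remove it with rpm -e mfs-master, reinstall, then reformat the DRBD device and fix the ownership.

Here corosync and DRBD run on the same nodes. DRBD holds the master's data directory /var/lib/mfs; the master itself acts as the scheduler.

vm3 and vm4 remain the storage nodes:

[root@vm3 ~]# cd /mnt/chunk1/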

[root@vm3 ~]# ls /mnt/chunk1/

00 0D 1A 27 34 41 4E 5B 68 75 82 8F 9C A9 B6 C3 D0 DD EA F7

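The last thing to do is enable drbd and corosync at boot.

7. High availability with heartbeat + mfsmaster

This was done on machines where I had set up heartbeat before, so the host names differ. First shut everything down, minding the order: unmount on the client first.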

[root@ThinkPad ~]# umount /mnt/mfs/

[root@lb1 ~]# mfschunkserver stop

[root@lb2 ~]# mfschunkserver stop

[root@ha1 ~]# /etc/init.d/corosync stop

[root@ha2 ~]# /etc/init.d/corosync stop

[root@ha1 ~]# vim /etc/ha.d/haresources    # edit as follows

vm1.example.com IPaddr::192.168.2.213/24/eth0:0 drbddisk::mfsdata Filesystem::/dev/drbd1::/var/lib/mfs::ext4 mfs

[root@ha1 ~]# /etc/init.d/drbd start    # start drbd; this step matters

[root@ha2 ~]# /etc/init.d/drbd start    # wait until both nodes show Secondary

[root@ha1 ~]# /etc/init.d/heartbeat start    # start heartbeat and watch the log

[root@ha2 ~]# /etc/init.d/heartbeat start

[root@lb1 ~]# mfschunkserver    # start the storage nodes

[root@lb2 ~]# mfschunkserver

[root@ThinkPad ~]# mfsmount    # mount, then check with df

[root@ThinkPad ~]# cat /mnt/mfs/1/fstab    # read a file

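High-availability test

[root@ha1 ~]# /etc/init.d/heartbeat stop    # resources move to the other node without disturbing reads; they move back when heartbeat is started again

[root@ha1 ~]# /etc/init.d/mfs stop    # with MFS stopped, reading files hangs: heartbeat does not monitor the service itself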
