HOG feature vector normalization

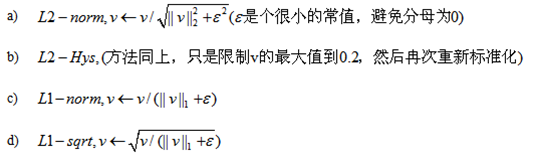

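The HOG feature vector is normalized within each block. Block normalization makes the feature space robust to illumination, shadows and changes in edge contrast. Normalization is done separately for each block, and four normalization functions are commonly used: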

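When HOG is computed for human detection, L2-norm is the usual choice. In Dalal and Triggs' experiments on human detection, L2-Hys, L2-norm and L1-sqrt performed about equally well, while plain L1-norm was clearly worse.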

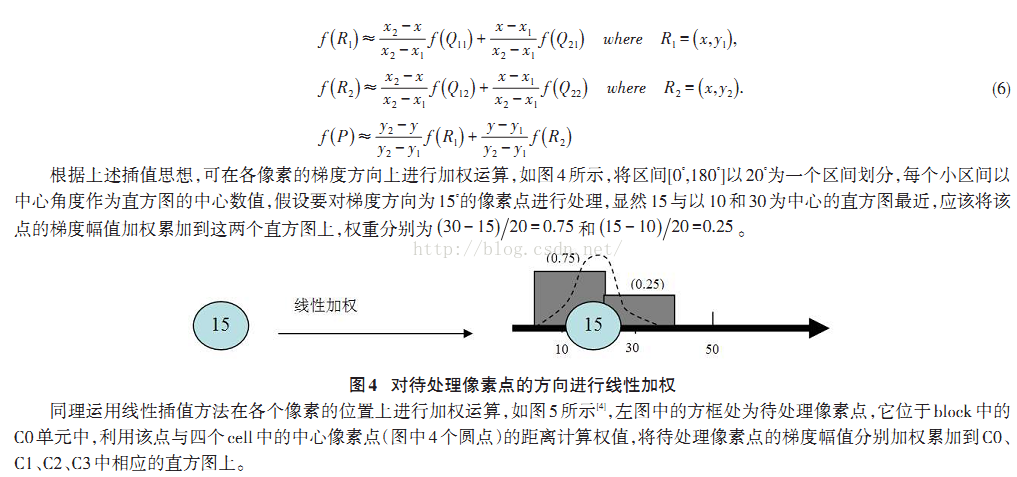

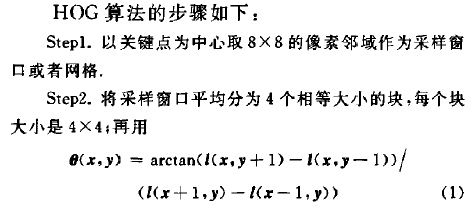

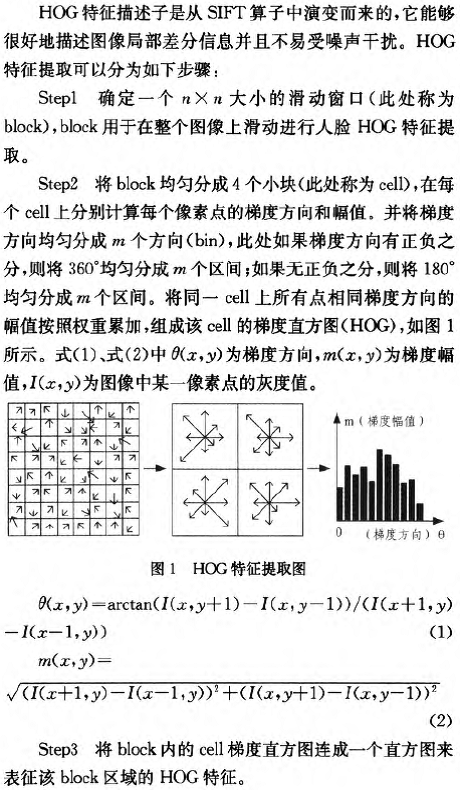
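Dalal and Triggs compare four block-normalization schemes: L2-norm, L2-Hys (L2-norm, then clipping at 0.2 and renormalizing), L1-norm and L1-sqrt. The following is a minimal C++ sketch of them. It assumes a block arrives as a std::vector<double> of non-negative histogram values. The function names and the default eps are illustrative choices and do not come from the original code. The original L2Normalize in Hog.cpp corresponds to l2Norm with eps^2 = FLT_EPSILON.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Block-normalization schemes from Dalal & Triggs. v holds one block's concatenated
// cell histograms (e.g. 2x2 cells x 9 bins = 36 values); eps is a small constant.

// L2-norm: v / sqrt(||v||_2^2 + eps^2)
void l2Norm(std::vector<double>& v, double eps = 1e-3) {
    double s = 0.0;
    for (double x : v) s += x * x;
    const double k = 1.0 / std::sqrt(s + eps * eps);
    for (double& x : v) x *= k;
}

// L2-Hys: L2-norm, clip each value at `clip` (0.2 in the paper), then L2-normalize again
void l2Hys(std::vector<double>& v, double clip = 0.2, double eps = 1e-3) {
    l2Norm(v, eps);
    for (double& x : v) x = std::min(x, clip);
    l2Norm(v, eps);
}

// L1-norm: v / (||v||_1 + eps)
void l1Norm(std::vector<double>& v, double eps = 1e-3) {
    double s = 0.0;
    for (double x : v) s += std::fabs(x);
    for (double& x : v) x /= (s + eps);
}

// L1-sqrt: sqrt(v / (||v||_1 + eps)); histogram entries are non-negative
void l1Sqrt(std::vector<double>& v, double eps = 1e-3) {
    l1Norm(v, eps);
    for (double& x : v) x = std::sqrt(x);
}
```

For example, l2Norm on {3, 4} with eps = 0 gives {0.6, 0.8}. L2-Hys differs only when one bin dominates the block, because clipping limits the influence of single very strong gradients.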

On the trilinear interpolation used when computing the histograms

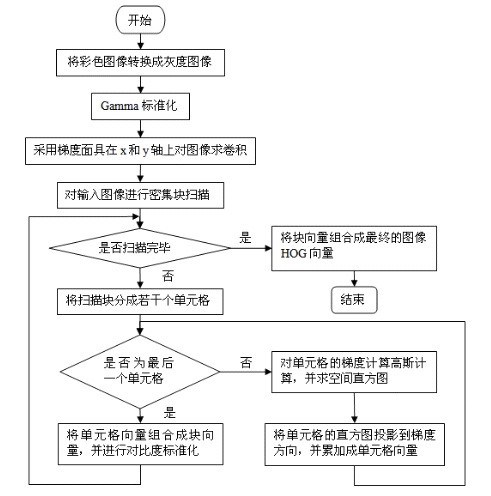
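Trilinear interpolation splits each pixel's vote in two ways. Along the orientation axis, it divides the vote linearly between the two nearest orientation bins. In space, it divides the vote bilinearly between the (up to) four nearest cells of the block. The sketch below shows this voting for a single pixel. It assumes the block histogram is laid out cell by cell, with bin centres at (b + 0.5) * binWidth and cell centres at (c + 0.5) * cellSize. voteTrilinear is a hypothetical helper. Any share of a vote that would fall outside the block is simply dropped.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Trilinear vote of one pixel into a block histogram laid out cell by cell:
// index = (cellY * blkCells + cellX) * nBins + bin, bins covering [0, 180) degrees.
// (px, py): pixel position inside the block; theta in degrees; mag: gradient magnitude.
void voteTrilinear(std::vector<double>& hist, int blkCells, int cellSize, int nBins,
                   int px, int py, double theta, double mag) {
    const double binWidth = 180.0 / nBins;
    // orientation: position relative to the bin centres (b + 0.5) * binWidth
    double tb = theta / binWidth - 0.5;
    int b0 = static_cast<int>(std::floor(tb));
    double wb = tb - b0;                  // share of the upper bin
    int b1 = (b0 + 1) % nBins;            // orientation wraps around at 180 degrees
    b0 = (b0 + nBins) % nBins;
    // space: position relative to the cell centres (c + 0.5) * cellSize
    double cx = (px + 0.5) / cellSize - 0.5;
    double cy = (py + 0.5) / cellSize - 0.5;
    int cx0 = static_cast<int>(std::floor(cx));
    int cy0 = static_cast<int>(std::floor(cy));
    double wx = cx - cx0, wy = cy - cy0;  // shares of the right / lower cell
    for (int dy = 0; dy <= 1; ++dy) {
        int cellY = cy0 + dy;
        if (cellY < 0 || cellY >= blkCells) continue;  // outside the block: dropped
        for (int dx = 0; dx <= 1; ++dx) {
            int cellX = cx0 + dx;
            if (cellX < 0 || cellX >= blkCells) continue;
            double w = mag * (dy ? wy : 1.0 - wy) * (dx ? wx : 1.0 - wx);
            double* h = &hist[(cellY * blkCells + cellX) * nBins];
            h[b0] += w * (1.0 - wb);
            h[b1] += w * wb;
        }
    }
}
```

The weights are products of linear interpolation factors. As a result, a pixel in the interior of the block distributes exactly its magnitude, while a pixel near the block border loses the share that would go to cells outside the block.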

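1. The first step normalizes the image with respect to gamma and colour. Gamma normalization brings pixel values onto a consistent scale, so that later stages can use them directly. Colour normalization tries to remove light intensity while keeping colour information, for example to suppress shadows or pixels affected by lighting changes.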

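At low FPPW (false positives per window), square-root gamma compression improves performance, while log compression is too strong and makes it worse.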

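2. Gradient computation directly affects detection performance, and different gradient operators give different FPPW results. Overall, simpler operators work better: Dalal and Triggs found the plain centred [-1, 0, 1] mask without smoothing to be best. For colour images, the gradient is computed separately for each colour channel, and the one with the largest norm is taken as the pixel's gradient vector. Differentiation captures the contours of people and further weakens differences in illumination.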

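3. In this module each pixel casts a weighted vote for an orientation bin, chosen by its gradient orientation. The votes are accumulated over local spatial regions (cells), and each vote is weighted by the pixel's gradient magnitude.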

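4. The contrast between foreground and background varies, so the histograms are normalized to make their information comparable. Cells are grouped into larger spatial blocks, and the normalized histograms of each block form a vector (this is the most critical part). The blocks overlap, so each cell contributes to several blocks and the descriptor carries more image information. This improves recognition considerably.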

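5. HOG's 64×128 detection window includes a margin of about 16 pixels around the person on all four sides. This context noticeably lowers the error rate. This step collects the block features over the whole detection window into one vector.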

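6. The assembled vector is fed to an SVM classifier (a linear SVM in Dalal and Triggs' detector), which decides whether the window contains a person.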
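The six steps above can be combined into a compact sketch that processes a single window. This is a simplification, not Dalal and Triggs' exact method. Orientation votes use hard binning instead of trilinear interpolation, there is no Gaussian weighting inside blocks, and the SVM weights are assumed to come from elsewhere. hogWindow and linearScore are hypothetical names. With the default 8-pixel cells, 2×2-cell blocks and 9 bins, a 64×128 window yields 3780 values.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

const double kPi = 3.14159265358979323846;

// Simplified single-window HOG. img: grey window of w x h pixels, row-major,
// values >= 0; w and h are assumed to be multiples of cellSize.
std::vector<double> hogWindow(const std::vector<double>& img, int w, int h,
                              int cellSize = 8, int blkCells = 2, int nBins = 9) {
    // Step 1: square-root gamma compression.
    std::vector<double> g(img.size());
    for (std::size_t i = 0; i < img.size(); ++i) g[i] = std::sqrt(img[i]);

    // Steps 2-3: centred [-1, 0, 1] gradients (edge pixels replicated), then a
    // magnitude-weighted vote into the cell histogram at the pixel's orientation bin.
    const int ncx = w / cellSize, ncy = h / cellSize;
    std::vector<double> cells(static_cast<std::size_t>(ncx) * ncy * nBins, 0.0);
    for (int y = 0; y < h; ++y) {
        for (int x = 0; x < w; ++x) {
            double dx = g[y * w + std::min(x + 1, w - 1)] - g[y * w + std::max(x - 1, 0)];
            double dy = g[std::min(y + 1, h - 1) * w + x] - g[std::max(y - 1, 0) * w + x];
            double mag = std::sqrt(dx * dx + dy * dy);
            double ang = std::atan2(dy, dx) * 180.0 / kPi;  // [-180, 180]
            if (ang < 0) ang += 180.0;                       // unsigned: [0, 180]
            int bin = static_cast<int>(ang / (180.0 / nBins)) % nBins;  // 180 -> bin 0
            cells[((y / cellSize) * ncx + x / cellSize) * nBins + bin] += mag;
        }
    }

    // Steps 4-5: overlapping blocks of blkCells x blkCells cells (stride: one cell),
    // each L2-normalized, concatenated into the window descriptor.
    std::vector<double> desc;
    for (int by = 0; by + blkCells <= ncy; ++by) {
        for (int bx = 0; bx + blkCells <= ncx; ++bx) {
            std::vector<double> blk;
            for (int cy = by; cy < by + blkCells; ++cy)
                for (int cx = bx; cx < bx + blkCells; ++cx)
                    for (int b = 0; b < nBins; ++b)
                        blk.push_back(cells[(cy * ncx + cx) * nBins + b]);
            double ss = 1e-6;  // small epsilon avoids division by zero on flat blocks
            for (double v : blk) ss += v * v;
            for (double v : blk) desc.push_back(v / std::sqrt(ss));
        }
    }
    return desc;
}

// Step 6: linear SVM score w . x + b; its sign decides person / non-person.
double linearScore(const std::vector<double>& wts, double bias, const std::vector<double>& x) {
    double s = bias;
    for (std::size_t i = 0; i < x.size(); ++i) s += wts[i] * x[i];
    return s;
}
```

Hard binning keeps the sketch short. However, small shifts in orientation or position then move a whole vote from one bin or cell to another, and this aliasing is exactly what trilinear interpolation avoids.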

Computing the HOG feature dimension

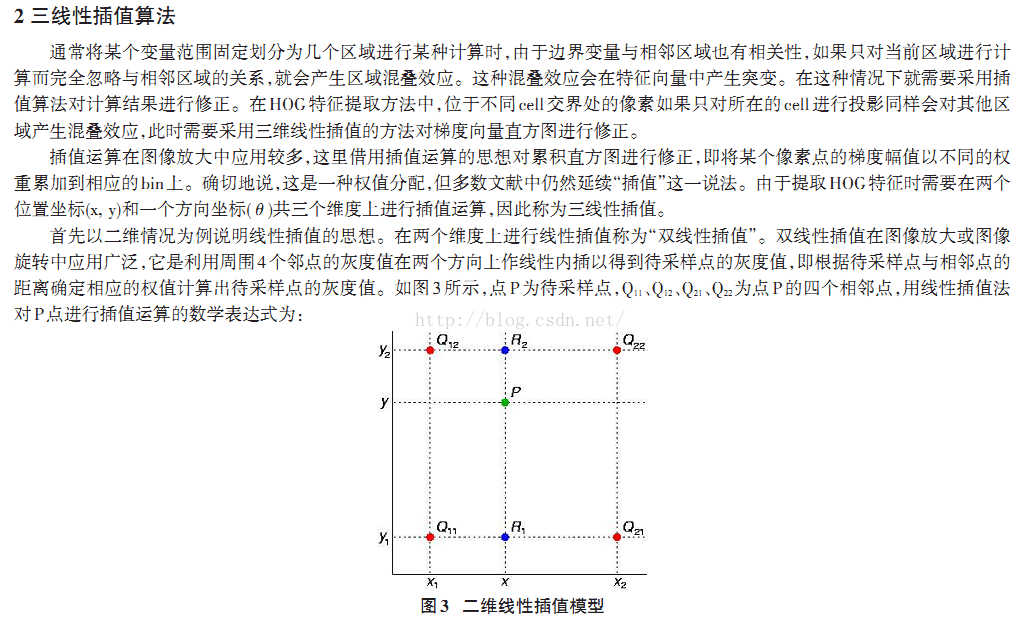

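Divide the sample image into cells of a few pixels each (8×8 here), and split the gradient orientation evenly into 9 intervals (bins). In each cell, build a histogram of the gradient orientations of all its pixels over these bins; this gives a 9-dimensional feature vector. Every 2×2 adjacent cells form a block, and concatenating the cell vectors of a block gives a 36-dimensional vector. The block scans the sample image with a stride of one cell, and concatenating the features of all blocks gives the human descriptor. For example, for a 64×128 image, each block is 2×2 cells (16×16 pixels) with 4×9 = 36 features. With a stride of 8 pixels there are (64 - 16)/8 + 1 = 7 block positions horizontally and (128 - 16)/8 + 1 = 15 vertically. A 64×128 image therefore has 36×7×15 = 3780 features in total.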
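The following small function performs the same count. hogDim is a hypothetical helper, and it assumes square blocks made of square cells.

```cpp
#include <cassert>

// HOG descriptor length of one detection window:
// block positions per axis = (window - block) / stride + 1,
// values per block = (cells per block) * bins.
int hogDim(int winW, int winH, int blockSize, int blockStride, int cellSize, int nBins) {
    int blocksX = (winW - blockSize) / blockStride + 1;
    int blocksY = (winH - blockSize) / blockStride + 1;
    int cellsPerBlock = (blockSize / cellSize) * (blockSize / cellSize);
    return blocksX * blocksY * cellsPerBlock * nBins;
}
```

hogDim(64, 128, 16, 8, 8, 9) gives 7 × 15 × 36 = 3780, and a 64×64 window gives 7 × 7 × 36 = 1764. These are the two values mentioned in the training code.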

Hog.h

#pragma once
#include <vector>
#include <map>
#include <cassert>
#include <cstddef>

#define PI 3.14159265358979
typedef unsigned char BYTE;
// Gradient magnitudes reach sqrt(255^2 + 255^2) ≈ 361, and orientations need fractional
// degrees for interpolation, so neither is stored in a BYTE.
#define GradType float // gradient magnitude
#define MagType float  // gradient orientation in degrees, [0, 180)
#define FeaType double

// Cache of normalized block features keyed by the (y, x) block position, so that blocks
// shared by overlapping detection windows are computed only once. It owns the buffers.
class BlockManager
{
private:
    std::map<std::pair<int, int>, double*> cache;
    int level;
public:
    BlockManager() : level(0) {}
    bool find(int y, int x)
    {
        return cache.find(std::pair<int, int>(y, x)) != cache.end();
    }
    double* GetBlockData(int y, int x)
    {
        return cache[std::pair<int, int>(y, x)];
    }
    void AddBlock(int y, int x, double* data)
    {
        cache.insert(std::pair<std::pair<int, int>, double*>(std::pair<int, int>(y, x), data));
    }
    void SetLevel(const int lev)
    {
        level = lev;
    }
    void deleteBlock(int y, int x)
    {
        delete[] cache[std::pair<int, int>(y, x)];
        cache.erase(std::pair<int, int>(y, x));
    }
    void deleteAllBlocks()
    {
        // free every buffer, then empty the map in one step
        // (erasing entries while iterating over them is easy to get wrong)
        std::map<std::pair<int, int>, double*>::iterator it;
        for (it = cache.begin(); it != cache.end(); ++it)
            delete[] it->second;
        cache.clear();
    }
    ~BlockManager()
    {
        deleteAllBlocks();
    }
};

class Hog
{
public:
    int img_width;       // width of the image being scanned
    int img_height;      // height of the image being scanned
    int window_width;    // detection window width, 64
    int window_height;   // detection window height, 128
    int CellSize;        // cell size in pixels, 8
    int blkcell;         // block size in cells per side (2, i.e. 2x2 cells)
    int blocksize;       // block size in pixels
    int blockSkipStep;   // block stride inside the window, 8: with blocksize = 16 the overlap is 1/2.
                         // A stride of 4 (overlap 3/4) can improve accuracy but costs more computation
    int windowSkipStep;  // sliding-window stride over the image, 8
    int m_histBin;       // orientation bins over 0-180 degrees: 9 bins per cell histogram
    int win_fea_dim;     // descriptor length of one window
    int xblkSkipStepNum; // block positions per window, horizontally
    int yblkSkipStepNum; // block positions per window, vertically
private:
    int max_pyramid_height;     // number of image pyramid levels
    int current_pyramid_height; // current pyramid level
    double ratio;               // scale factor between pyramid levels
    bool isGaussianWeight;      // whether to weight votes with a spatial window
    BYTE* RGBdata;
    BYTE* greydata;
    GradType* grad;             // gradient magnitude matrix
    MagType* theta;             // gradient orientation matrix (degrees)
    std::vector<FeaType*> windowHOGFeature; // block features of the current window (owned by blockmanager)
    BlockManager blockmanager;
    bool GetImgData();
    void Gamma();
    void RGB2Grey();
    double* GetBlkFeature(int offsetY, int offsetX); // row (y) offset first, as in Hog.cpp
    double GaussianKernel(int x, int y, int cent_x, int cent_y, int Hx, int Hy);
    void NextPyramid();
public:
    GradType* get_grad() { return grad; }
    MagType* get_mag() { return theta; } // note: returns the orientation matrix
    void ComputeGradient();
    Hog(const int winW, const int winH, const int CellSize,
        const int blkcell, const int blockSkipStep, const int windowSkipStep,
        const int m_histBin, const double rat) :
        window_width(winW), window_height(winH),
        CellSize(CellSize), blkcell(blkcell), blockSkipStep(blockSkipStep),
        windowSkipStep(windowSkipStep), m_histBin(m_histBin), ratio(rat),
        isGaussianWeight(false), RGBdata(NULL), greydata(NULL), grad(NULL), theta(NULL)
    {
        blocksize = CellSize*blkcell;
        max_pyramid_height = 0;
        current_pyramid_height = 1;
        int ww = img_width = 64;
        int hh = img_height = 128;
        // count how many halvings still leave an image at least as large as the window
        while (ww >= window_width && hh >= window_height)
        {
            ww = ww / 2;
            hh = hh / 2;
            max_pyramid_height++;
        }
        xblkSkipStepNum = (window_width - blkcell * CellSize) / blockSkipStep + 1;
        yblkSkipStepNum = (window_height - blkcell * CellSize) / blockSkipStep + 1;
        win_fea_dim = xblkSkipStepNum*yblkSkipStepNum*blkcell*blkcell*m_histBin;
        assert(max_pyramid_height >= 1); // portable replacement for MSVC's _ASSERTE
    }
    int getwindow_width() { return window_width; }
    int getwindow_height() { return window_height; }
    void GetWindowFeature(const int offsetY_againstImg, const int offsetX_againstImg);
    void L2Normalize(double* vec, int length);
    void set_img_size(const int h, const int w) { img_height = h; img_width = w; }
    void SingleScaleDetect();
    void MultiScaleDetect();
    // Hog takes ownership of src and frees it with delete[] in the destructor
    void setgreyData(BYTE* src) { this->greydata = src; }
    GradType* get_grad_data() { return grad; }
    void writeHogFea2File();
    std::vector<FeaType*> getwindowHOGFeature() { return windowHOGFeature; }
    ~Hog()
    {
        delete[] RGBdata;  // delete[] on NULL is a no-op
        delete[] greydata;
        delete[] grad;
        delete[] theta;
        // windowHOGFeature only borrows pointers owned by blockmanager's cache, which
        // frees them in its own destructor; deleting them here would free them twice.
    }
};

Hog.cpp

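#include "stdafx.h" // MSVC precompiled header; drop this line on other compilers
#include "Hog.h"
#include <cmath>
#include <cfloat>  // FLT_EPSILON
#include <cstring> // memset
#include <fstream>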

void Hog::writeHogFea2File()
{
    std::ofstream myfile("example.txt");
    myfile << "Writing HOG Feature to File.\n";
    // one window = xblkSkipStepNum * yblkSkipStepNum blocks (105 for 64x128),
    // each with blkcell * blkcell * m_histBin values (36)
    const int blkDim = blkcell * blkcell * m_histBin;
    for (int z = 0; z < (int)windowHOGFeature.size(); ++z)
        for (int i = 0; i < blkDim; i++)
            myfile << windowHOGFeature[z][i] << std::endl;
}

void Hog::ComputeGradient()
{
    delete[] grad;  // delete[] on NULL is a no-op
    grad = new GradType[img_height*img_width];
    delete[] theta;
    theta = new MagType[img_height*img_width];
    // interior pixels: centred [-1, 0, 1] differences, so both neighbours must exist
    for (int i = 1; i < img_height - 1; i++)
        for (int j = 1; j < img_width - 1; j++)
        {
            double dx = greydata[i*img_width + j + 1] - greydata[i*img_width + j - 1];
            double dy = greydata[(i + 1)*img_width + j] - greydata[(i - 1)*img_width + j];
            grad[i*img_width + j] = (GradType)sqrt(dx*dx + dy*dy);
            double ang = atan2(dy, dx) * 180.0 / PI; // about [-180, 180]
            if (ang < 0) ang += 180.0;              // unsigned orientation
            if (ang >= 180.0) ang -= 180.0;         // keep [0, 180) so the bin index stays valid
            this->theta[i*img_width + j] = (MagType)ang;
        }
    // border pixels take the value of their nearest interior neighbour
    for (int j = 0; j < img_width; j++) {
        grad[j] = grad[img_width + j];
        theta[j] = theta[img_width + j];
        grad[(img_height - 1)*img_width + j] = grad[(img_height - 2)*img_width + j];
        theta[(img_height - 1)*img_width + j] = theta[(img_height - 2)*img_width + j];
    }
    for (int i = 0; i < img_height; i++) {
        grad[i*img_width] = grad[i*img_width + 1];
        theta[i*img_width] = theta[i*img_width + 1];
        grad[i*img_width + img_width - 1] = grad[i*img_width + img_width - 2];
        theta[i*img_width + img_width - 1] = theta[i*img_width + img_width - 2];
    }
}

void Hog::L2Normalize(double*vec, int length)//L2 normalization: vec / sqrt(||vec||^2 + FLT_EPSILON)

{

double sum = 0;

for (int i = 0; i < length; i++)

sum += vec[i] * vec[i];

sum = (double)1.0 / sqrt(sum + FLT_EPSILON);

for (int i = 0; i < length; i++)

vec[i] = vec[i] * sum;

}

double* Hog::GetBlkFeature(int offsetY_againstImg, int offsetX_againstImg)
{
    // block histogram: blkcell x blkcell cells with m_histBin bins each, stored cell by cell
    const int dim = blkcell * blkcell * m_histBin;
    double* blkHOG = new double[dim];
    memset(blkHOG, 0, dim * sizeof(double));
    const double binWidth = 180.0 / m_histBin;
    for (int y = 0; y < blocksize; y++) {
        for (int x = 0; x < blocksize; x++) {
            int idx = (offsetY_againstImg + y) * img_width + offsetX_againstImg + x;
            double magn = grad[idx];
            if (magn <= 0.0)
                continue; // zero magnitude: no gradient, nothing to vote
            // orientation: split between the two nearest bin centres (b + 0.5) * binWidth;
            // orientations wrap around at 180 degrees
            double tb = this->theta[idx] / binWidth - 0.5;
            int b0 = (int)floor(tb);
            double wb = tb - b0;           // share of the upper bin b1
            int b1 = (b0 + 1) % m_histBin;
            b0 = (b0 + m_histBin) % m_histBin;
            // space: split between the (up to) 2x2 nearest cell centres (c + 0.5) * CellSize;
            // the share that falls outside the block is dropped
            double cx = (x + 0.5) / CellSize - 0.5;
            double cy = (y + 0.5) / CellSize - 0.5;
            int cx0 = (int)floor(cx), cy0 = (int)floor(cy);
            double wx = cx - cx0, wy = cy - cy0;
            for (int dy = 0; dy <= 1; dy++) {
                int cell_y = cy0 + dy;
                if (cell_y < 0 || cell_y >= blkcell) continue;
                for (int dx = 0; dx <= 1; dx++) {
                    int cell_x = cx0 + dx;
                    if (cell_x < 0 || cell_x >= blkcell) continue;
                    // trilinear weight: orientation x horizontal x vertical
                    double w = magn * (dy ? wy : 1.0 - wy) * (dx ? wx : 1.0 - wx);
                    double* hist = blkHOG + (cell_y * blkcell + cell_x) * m_histBin;
                    hist[b0] += w * (1.0 - wb);
                    hist[b1] += w * wb;
                }
            }
        }
    }
    L2Normalize(blkHOG, dim); // normalize per block
    blockmanager.AddBlock(offsetY_againstImg, offsetX_againstImg, blkHOG); // cache to avoid recomputation
    return blkHOG;
}

double Hog::GaussianKernel(int x, int y, int cent_x, int cent_y, int Hx, int Hy)//spatial weighting kernel (a truncated quadratic, not a true Gaussian)

{

int dx = x - cent_x;

int dy = y - cent_y;

double temp = 1 - ((double)(dx*dx) / (Hx*Hx) + (double)(dy*dy) / (Hy*Hy)) / 2;

if (temp >= 0)

{

return (double)(4.0 * temp / (2 * PI));

}

else

{

return 0.0f;

}

}

void Hog::GetWindowFeature(const int offsetY_againstImg, const int offsetX_againstImg)//collect the block features of the detection window at this offset

{

windowHOGFeature.clear();

//double*imgHOGFeature = new double[blkcell*blkcell*m_histBin*xSkipStepNum*ySkipStepNum];

for (int i = 0; i < yblkSkipStepNum; i++)

for (int j = 0; j < xblkSkipStepNum; j++)

{

double*blkFea;

if (blockmanager.find(offsetY_againstImg + i*blockSkipStep, offsetX_againstImg + j*blockSkipStep))

blkFea = blockmanager.GetBlockData(offsetY_againstImg + i*blockSkipStep,

offsetX_againstImg + j*blockSkipStep);

else

blkFea = GetBlkFeature(offsetY_againstImg + i*blockSkipStep, offsetX_againstImg + j*blockSkipStep);

/*memcpy(imgHOGFeature + (i*xSkipStepNum + j)*blkcell*blkcell*m_histBin, blkFea,

blkcell*blkcell*m_histBin);

delete[]blkFea;*/

windowHOGFeature.push_back(blkFea);

}

}

void Hog::RGB2Grey()

{

if (greydata == NULL)

greydata = new BYTE[img_width*img_height];

for (int i = 0; i < img_height; i++)

for (int j = 0; j < img_width; j++)

{

greydata[i*img_width + j] = 0.299*RGBdata[i*img_width * 3 + 3 * j] +

0.587*RGBdata[i*img_width * 3 + 3 * j + 1] +

0.114*RGBdata[i*img_width * 3 + 3 * j + 2];

}

delete[]RGBdata;

RGBdata = NULL;//avoid a second delete[] in the destructor

}

void Hog::NextPyramid()// next (smaller) pyramid level by bilinear interpolation
{
    int new_img_height = (int)(img_height / ratio);
    int new_img_width = (int)(img_width / ratio);
    BYTE* new_greydata = new BYTE[new_img_height*new_img_width];
    for (int i = 0; i < new_img_height; i++)
    {
        double sy = i * ratio;                  // source row, fractional
        int y1 = (int)sy;
        int y2 = (y1 < img_height - 1) ? y1 + 1 : y1;
        double fy = sy - y1;                    // weight of row y2
        for (int j = 0; j < new_img_width; j++)
        {
            double sx = j * ratio;              // source column, fractional
            int x1 = (int)sx;
            int x2 = (x1 < img_width - 1) ? x1 + 1 : x1;
            double fx = sx - x1;                // weight of column x2
            double v = greydata[y1*img_width + x1] * (1.0 - fx) * (1.0 - fy)
                     + greydata[y1*img_width + x2] * fx * (1.0 - fy)
                     + greydata[y2*img_width + x1] * (1.0 - fx) * fy
                     + greydata[y2*img_width + x2] * fx * fy;
            new_greydata[i*new_img_width + j] = (BYTE)(v + 0.5); // round once, after summing
        }
    }
    delete[] greydata;
    greydata = new_greydata;
    img_height = new_img_height;
    img_width = new_img_width;
    current_pyramid_height++;
    blockmanager.deleteAllBlocks(); // cached blocks belong to the previous level
    blockmanager.SetLevel(current_pyramid_height);
}

/*void Hog::SingleScaleDetect()

{

int xSkipStepNum = floor((img_width - window_width) / windowSkipStep + 1);

int ySkipStepNum = floor((img_height - window_height) / windowSkipStep + 1);

//double*imgHOGFeature = new double[blkcell*blkcell*m_histBin*xSkipStepNum*ySkipStepNum];

for (int i = 0; i < ySkipStepNum; i++)

for (int j = 0; j < xSkipStepNum; j++)

{

GetWindowFeature(i*windowSkipStep, j*windowSkipStep);

}

}

void Hog::MultiScaleDetect()

{

while (current_pyramid_height < max_pyramid_height)

{

SingleScaleDetect();

NextPyramid();

}

}*/

Using OpenCV's pre-trained detector

#include <iostream>

#include <string>

#include <opencv2/core/core.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/imgproc/imgproc.hpp>

#include <opencv2/objdetect/objdetect.hpp>

#include <opencv2/ml/ml.hpp>

using namespace std;

using namespace cv;

int main()

{

Mat src = imread("5.png");

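HOGDescriptor hog;//HOG descriptor/detector (default 64x128 window)

hog.setSVMDetector(HOGDescriptor::getDefaultPeopleDetector());//use OpenCV's pre-trained people detector as the SVM coefficients

vector<Rect> found, found_filtered;//detected rectangles

hog.detectMultiScale(src, found, 0, Size(8,8), Size(32,32), 1.05, 2);//multi-scale detection: hit threshold 0, window stride (8,8), padding (32,32), scale step 1.05, grouping threshold 2

cout<<"Number of rectangles: "<<found.size()<<endl;

//keep every rectangle r that is not nested inside another one; of nested rectangles only the outermost goes into found_filtered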

for(int i=0; i < found.size(); i++)

{

Rect r = found[i];

int j=0;

for(; j < found.size(); j++)

if(j != i && (r & found[j]) == r)

break;

if( j == found.size())

found_filtered.push_back(r);

}

cout<<"Rectangles after filtering: "<<found_filtered.size()<<endl;

//draw the boxes; HOG boxes are somewhat larger than the actual person, so shrink them a little

for(int i=0; i<found_filtered.size(); i++)

{

Rect r = found_filtered[i];

r.x += cvRound(r.width*0.1);

r.width = cvRound(r.width*0.8);

r.y += cvRound(r.height*0.07);

r.height = cvRound(r.height*0.8);

rectangle(src, r.tl(), r.br(), Scalar(0,255,0), 3);

}

imwrite("ImgProcessed.jpg",src);

namedWindow("src",0);

imshow("src",src);

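waitKey();//imshow must be followed by waitKey, otherwise the window is never drawn

return 0;

}

Save this as hog.cpp and put it in a folder of its own on Ubuntu. In the same folder, create a CMakeLists.txt with the following content: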

cmake_minimum_required(VERSION 3.5)
project(hog)
find_package(OpenCV REQUIRED)
add_executable(hog hog.cpp)
target_link_libraries(hog ${OpenCV_LIBS})

cd into that directory and run

cmake .

make

Put a test image named 5.png in the same folder and run ./hog to see the detection result.
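The filtering loop in the program above keeps a rectangle only if it is not contained in any other detection. It tests this with cv::Rect's intersection operator: (r & found[j]) == r. Below is a sketch of the same test without OpenCV. Box and the two functions are hypothetical stand-ins, not OpenCV API.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical minimal stand-in for cv::Rect.
struct Box { int x, y, width, height; };

// true if a lies completely inside b (what (r & found[j]) == r checks for cv::Rect)
bool insideOf(const Box& a, const Box& b) {
    return a.x >= b.x && a.y >= b.y &&
           a.x + a.width <= b.x + b.width && a.y + a.height <= b.y + b.height;
}

// Keep only boxes that are not nested inside another detection.
std::vector<Box> dropNested(const std::vector<Box>& found) {
    std::vector<Box> kept;
    for (std::size_t i = 0; i < found.size(); ++i) {
        bool nested = false;
        for (std::size_t j = 0; j < found.size() && !nested; ++j)
            nested = (j != i) && insideOf(found[i], found[j]);
        if (!nested) kept.push_back(found[i]);
    }
    return kept;
}
```

As in the original loop, two identical rectangles each lie inside the other, so both are discarded.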

Training your own detection model

Training

#include <opencv2/opencv.hpp>

#include <iostream>

#include <ctype.h>

#include "cv.h"

#include "highgui.h"

#include <ml.h>

#include <fstream>

#include <math.h>

#include <string>

#include <vector>

#include <stdio.h>

using namespace cv;

using namespace std;

class Mysvm: public CvSVM

{

public:

int get_alpha_count()

{

return this->sv_total;

}

int get_sv_dim()

{

return this->var_all;

}

int get_sv_count()

{

return this->decision_func->sv_count;

}

double* get_alpha()

{

return this->decision_func->alpha;

}

float** get_sv()

{

return this->sv;

}

float get_rho()

{

return this->decision_func->rho;

}

};

int main()

{

int positiveSampleCount = 2772; //number of positive samples

int negativeSampleCount = 8100; //number of negative samples

int totalSampleCount = positiveSampleCount + negativeSampleCount;

FILE* fp = fopen("PeopleDetectResult_INRIA2416.txt","w");

if(fp==NULL)

{

//int n = GetLastError();

exit(1);

}

CvMat *sampleFeaturesMat = cvCreateMat(totalSampleCount , 3780, CV_32FC1); //3780 is the HOG dimension for 64x128 samples; it must equal featureVec.size() (descriptors.size()) computed below

//for 64x64 training samples the matrix would be totalSampleCount x 1764 instead

cvSetZero(sampleFeaturesMat);

CvMat *sampleLabelMat = cvCreateMat(totalSampleCount, 1, CV_32FC1); //label of each sample: 1 for positive, -1 for negative

cvSetZero(sampleLabelMat);

// fprintf(fp, "%s\n","HOG descriptor:\n SVM parameters:");

cout<<"waiting......"<<endl;

cout<<"start to training positive samples..."<<endl;

string buf;

char line[512];

vector<string> img_path;//file names of the positive images

ifstream svm_data( "pos_list.txt");

while (svm_data)

{

if (getline( svm_data, buf ))

{

img_path.push_back( buf );

}

}

svm_data.close();//close the file

//IplImage* src;

IplImage* img;//=cvCreateImage(cvSize(64,128),IPL_DEPTH_8U,1);

//the images are assumed to be 64x128, hence 3780 above; for another size, check descriptors.size() in the debugger first and set the dimension accordingly

//long t1 = GetTickCount();

for(int i=0; i<positiveSampleCount; i++)

{

img=cvLoadImage(img_path[i].c_str(),0); //load a positive sample as greyscale

//cvResize(src,img);

if( img == NULL )

{

cout<<" can not load the image:"<<img_path[i].c_str()<<endl;

continue;

}

cout<<" processing "<<img_path[i].c_str()<<endl;

//namedWindow("src",0);

// cvShowImage("src",img);

// waitKey();

cv::HOGDescriptor hog(cv::Size(64,128), cv::Size(16,16), cv::Size(8,8),cv::Size(8,8), 9);

//HOG descriptor: window 64x128, block 16x16, block stride 8x8, cell 8x8, 9 bins; the window size (first argument) must match the training sample size

vector<float> featureVec;

hog.compute(img, featureVec, cv::Size(8,8)); //featureVec receives the HOG descriptor of this sample

cvReleaseImage( &img); //free each image as soon as its descriptor has been computed

for (int j=0; j<featureVec.size(); j++)

{

CV_MAT_ELEM( *sampleFeaturesMat, float, i, j ) = featureVec[j];

}

sampleLabelMat->data.fl[i] = 1;

}

//cvReleaseImage( &src);

cout<<"end of training for positive samples..."<<endl;

//long t2 = GetTickCount();

//fprintf(fp, "%s%d\n", "training for positive samples timeis:",t2-t1);

cout<<"waiting......"<<endl;

cout<<"start to train negative samples..."<<endl;

string buff;

int m;

vector<string> img_neg_path;//file names of the negative images

ifstream svm_neg_data( "neg_list.txt");

while (svm_neg_data)

{

if (getline( svm_neg_data, buff ))

{

img_neg_path.push_back( buff );

}

}

svm_neg_data.close();//close the file

//IplImage* neg;

IplImage* neg_img;//=cvCreateImage(cvSize(64,128),8,1);

for (int i=0; i<negativeSampleCount; i++)

{

neg_img = cvLoadImage(img_neg_path[i].c_str(),0); //load a negative sample as greyscale

//cvResize(neg,neg_img);

if( neg_img == NULL )

{

cout<<" can not load the image:"<<img_neg_path[i].c_str()<<endl;

continue;

}

cout<<" processing"<<img_neg_path[i].c_str()<<endl;

//namedWindow("src",0);

// cvShowImage("src",neg_img);

// waitKey();

cv::HOGDescriptor hog(cv::Size(64,128), cv::Size(16,16), cv::Size(8,8),cv::Size(8,8), 9); //the first argument must match the sample size, as above

vector<float> featureVec;

hog.compute(neg_img,featureVec,cv::Size(8,8)); //featureVec.size() depends on the size of the input training image

cvReleaseImage( &neg_img);

//cout<<"The featureVector number of negativeSample is:"<<featureVec.size()<<endl; //print featureVec.size() to check the dimension

for ( int j=0; j<featureVec.size(); j ++)

{

CV_MAT_ELEM( *sampleFeaturesMat, float, i + positiveSampleCount, j ) =featureVec[ j ]; //store the HOG descriptor

}

sampleLabelMat->data.fl[ i + positiveSampleCount ] = -1;

m = i+1;

}

cout<<"The count of negativeSample is:"<<m<<endl;//number of negative samples processed

fprintf(fp, "%s%d\n", "The count of negativeSample is:",m);

cout<<"end of training for negative samples..."<<endl;

//long t3 = GetTickCount();

cout<<"waiting......"<<endl;

//fprintf(fp, "%s%d\n", "training for negative samples timeis:",t3-t1);

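//********** (5) SVM training **********

cout<<"start to train for SVM classifier..."<<endl;

// SVM type: CvSVM::C_SVC

// kernel type: CvSVM::LINEAR

// degree: 10.0 (not used here)

// gamma: 8.0 (not used by the linear kernel)

// coef0: 1.0 (not used here)

// C: 0.01

// nu: 0.5 (not used here)

// p: 0.1 (not used here)

// The training data (block-normalized HOG descriptors) are stored in CvMat arrays.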

CvSVMParams params;

params.svm_type = CvSVM::C_SVC;

params.kernel_type = CvSVM::LINEAR;

params.term_crit = cvTermCriteria(CV_TERMCRIT_ITER, 1000, FLT_EPSILON);

params.C = 0.01;

Mysvm svm;

//long t4 = GetTickCount();

svm.train( sampleFeaturesMat, sampleLabelMat, NULL, NULL, params ); //train a linear SVM

//sampleFeaturesMat holds the feature vectors of the samples, sampleLabelMat their labels

//save the trained SVM

//long t5 = GetTickCount();

//fprintf(fp, "%s%d\n", "Time of train for SVM classifier is:",t5-t4);

svm.save( "SVM_DATA.xml" );

cout<<"SVM_DATA.xml saved!"<<endl;

cvReleaseMat(&sampleFeaturesMat);

cvReleaseMat(&sampleLabelMat);

int supportVectorSize = svm.get_support_vector_count();

cout<<"support vector size of SVM:"<<supportVectorSize<<endl;

cout<<"SVM completed"<<endl;

CvMat *sv,*alp,*re; //support vectors, their alpha coefficients, and the resulting weight vector

sv = cvCreateMat(supportVectorSize , 3780, CV_32FC1);

alp = cvCreateMat(1 , supportVectorSize, CV_32FC1);

re = cvCreateMat(1 , 3780, CV_32FC1);

CvMat *res = cvCreateMat(1 , 1, CV_32FC1);

cvSetZero(sv);

cvSetZero(re);

for(int i=0; i<supportVectorSize; i++)

{

memcpy( (float*)(sv->data.fl+i*3780), svm.get_support_vector(i), 3780*sizeof(float));

}

double* alphaArr = svm.get_alpha();

int alphaCount = svm.get_alpha_count();

for(int i=0; i<supportVectorSize; i++)

{

alp->data.fl[i] = alphaArr[i];

}

cvMatMul(alp, sv, re);

int posCount = 0;

for (int i=0; i<3780; i++)

{

re->data.fl[i] *= -1;

}

FILE* fpp = fopen("hogSVMDetector.txt","w");

if(fpp==NULL)

{

//int n = GetLastError();

exit(1);

}

for(int i=0; i<3780; i++)

{

fprintf(fpp,"%f \n",re->data.fl[i]);

}

float rho = svm.get_rho();

fprintf(fpp, "%f", rho);

cout<<"hogSVMDetector.txt saved"<<endl; //detector (weights + rho) usable by HOGDescriptor

fclose(fp);

fclose(fpp);

cvReleaseMat(&sv);

cvReleaseMat(&alp);

cvReleaseMat(&re);

cvReleaseMat(&res);

/*

// //*************************************** test on positive samples ************************************

//

IplImage *test;

vector<string> img_tst_path;

// ifstream img_tst("D:\\program\\matlabprojects\\INRIAPerson\\test_my\\pos_bmp\\train_list.txt");//same as the training list, except that the images need no class labels

// ifstream img_tst("D:\\train_list.txt");

ifstream img_tst( "D:\\program\\matlabprojects\\PeopleSample\\pos1\\train_list2.txt");

while( img_tst )

{

if( getline( img_tst, buf ) )

{

img_tst_path.push_back( buf );

}

}

img_tst.close();

// CvMat *test_hog = cvCreateMat( 1, 1764, CV_32FC1 );//note the 1764, same as above

IplImage* trainImg=cvCreateImage(cvSize(64,128),8,3);

int right=0;

int wrong=0; //used to compute the accuracy

int n;

for( string::size_type j = 0; j != img_tst_path.size(); j++ )//iterate over all test images

{

test = cvLoadImage( img_tst_path[j].c_str(), 1);

if( test == NULL )

{

cout<<" can not load the image:"<<img_tst_path[j].c_str()<<endl;

continue;

}

cvZero(trainImg);

cvResize(test,trainImg); //resize the test image to the 64x128 window

HOGDescriptor *hog=new HOGDescriptor(cvSize(64,128),cvSize(16,16),cvSize(8,8),cvSize(8,8),9); //see references 1 and 2 for the meaning of the parameters

vector<float>descriptors;//result array

hog->compute(trainImg, descriptors,Size(1,1), Size(0,0)); //compute the descriptor

cout<<"HOG dims: "<<descriptors.size()<<endl;

CvMat* SVMtrainMat=cvCreateMat(1,descriptors.size(),CV_32FC1);

n=0;

for(vector<float>::iterator iter=descriptors.begin();iter!=descriptors.end();iter++)

{

cvmSet(SVMtrainMat,0,n,*iter);

n++;

}

int ret = svm.predict(SVMtrainMat);//predicted label; see the OpenCV documentation for predict

if (ret == 1)

{

right += 1;

}

else if (ret == -1)

{

wrong += 1;

}

}

double result ;

result= double(right)/double(right+wrong);

cout<<"The Correct rate is:"<<result*100<<"%"<<endl; //accuracy on the positive samples

fprintf(fp,"%s%f%s\n","The Correct rate is:",result*100,"%");

fclose(fp);

cvReleaseImage( &test );

cvReleaseImage( &trainImg );

*/

//***************************************** load the image to be recognized and detect vehicles ************************************

// IplImage* Img =cvLoadImage("D:\\program\\matlabprojects\\INRIAPerson\\Test\\pos_bmp\\00019.bmp"); //read the image

// IplImage* DetecImg=cvCreateImage(cvSize(320,240),8,3);

// cvResize(Img,DetecImg);

// long t1 = GetTickCount();

//if (DetecImg == NULL)

//{

// cout<<" can not load the image"<<endl;

// exit(-1);

//} //check that the image was loaded

//vector<float> x;

//

//ifstream fileIn("Peopledetector4.txt", ios::in);

//float val = 0.0f;

//while(!fileIn.eof())

//{

// fileIn>>val;

// x.push_back(val);

//}

//fileIn.close();

//vector<cv::Rect> found; //detected vehicles

//vector<cv::Rect> temp; //temporary container

// vector<vector<cv::Rect>> bigfound;

//vector< vector<cv::Rect>> ::iterator bigit; //iterator

//IplImage* img = NULL;

//cv::HOGDescriptor hog(cv::Size(64,128),cv::Size(16,16), cv::Size(8,8), cv::Size(8,8), 9);

//hog.setSVMDetector(x); //classifier given by vector x

//long t3 = GetTickCount();

//cout<<"The process time is:"<<t3-t1<<endl;

//

//

//hog.detectMultiScale(DetecImg, found, 0.7, cv::Size(8,8), cv::Size(5,5),1.06, 1, false); //detect on the current image; found receives the detections

// hog.detectMultiScale(DetecImg, found, 0.3, cv::Size(8,8), cv::Size(8,8),1.05, 2, false);

//long t4 = GetTickCount();

//cout<<"The process time is:"<<t4-t1<<endl;

//cvNamedWindow("img", CV_WINDOW_AUTOSIZE);

//

//int i,j;

//vector<cv::Rect> found_filtered;

//for (i = 0; i<found.size();i++)

//{

// Rect r = found[i];

// //this for loop keeps every rectangle r that is not nested inside another one; if rectangles

// //are nested, only the outermost one goes into found_filtered

// for(j = 0; j <found.size(); j++)

// if(j != i && (r&found[j])==r)

// break;

// if(j == found.size())

// found_filtered.push_back(r);

//}

//cout<<"The number of vehicle is:"<<found_filtered.size()<<endl; //number of detected vehicles

//draw the rectangles on img; HOG boxes are somewhat larger than the actual person,

//so shrink them a little

//for(i = 0; i <found_filtered.size(); i++)

//{

// Rect r = found_filtered[i];

// r.x += cvRound(r.width*0.1);

// r.width = cvRound(r.width*0.8);

// r.y += cvRound(r.height*0.07);

// r.height = cvRound(r.height*0.8);

// cvRectangle(DetecImg,cvPoint(r.x,r.y),cvPoint(r.x+r.width,r.y+r.height),CV_RGB(255,0,0),2);

//}

//cvShowImage("img",DetecImg);

//cvWaitKey(0);

//long t5 = GetTickCount();

//cout<<"The process time is:"<<t5-t1<<endl;

//system("pause");

//cvWaitKey(0);

//

// cvReleaseImage(&DetecImg);

/*

//**********************************处理视频************************************

CvCapture* cap = cvCreateFileCapture("D:\\项目文档\\智能交通\\行人视频\\20120925\\20120925174702950.avi");//open the video

if (!cap)

{

cout<<"avi file load error……"<<endl;

system("pause");

exit(-1);

} //check that the video was opened

vector<float> x;

ifstream fileIn("Peopledetector4.txt",ios::in);

float val = 0.0f;

while(!fileIn.eof())

{

fileIn>>val;

x.push_back(val);

}

fileIn.close();

vector<cv::Rect> found; //detected vehicles

vector<cv::Rect> temp; //temporary container

vector<vector<cv::Rect> > bigfound;

vector< vector<cv::Rect> > ::iterator bigit; //iterator

cvNamedWindow("img",CV_WINDOW_AUTOSIZE);

img = NULL;

IplImage* SizeImg=cvCreateImage(cvSize(320,240),8,3);

//long t1 = GetTickCount();

// cv::HOGDescriptor hog(cv::Size(64,64), cv::Size(16,16), cv::Size(8,8),cv::Size(8,8), 9);

cv::HOGDescriptor hog(cv::Size(64,128), cv::Size(16,16), cv::Size(8,8),cv::Size(8,8), 9);

//long t4 = GetTickCount();

//cout<<"time:"<<t4-t1<<endl;

hog.setSVMDetector(x); //classifier given by vector x

while(1)

{

img=cvQueryFrame(cap);

// img = cvLoadImage("D:\\000120.bmp");

if(!img) break; //end of the video: stop before resizing a NULL frame

cvZero(SizeImg);

cvResize(img,SizeImg);

cvWaitKey(1);

//hog.detectMultiScale(img,found,0.9,cv::Size(2,2),cv::Size(64,64),1.06,1,false);

// hog.detectMultiScale(SizeImg, found, 0, Size(8, 8), Size(32, 32), 1.05, 2);

// hog.detectMultiScale(SizeImg, found, 0, cv::Size(11,11), cv::Size(32,32),0.5,2);

// hog.detectMultiScale(SizeImg, found, 1.0, cv::Size(16,16), cv::Size(32,32),1.05, 2, false);

hog.detectMultiScale(SizeImg, found, 0, Size(8, 8), Size(16, 16), 1.0,2,false);

//detect on the current frame; found receives the detections

int i,j;

vector<cv::Rect> found_filtered;

for (i = 0; i<found.size();i++)

{

Rect r = found[i];

//this for loop keeps every rectangle r that is not nested inside another one; if rectangles

//are nested, only the outermost one goes into found_filtered

for(j = 0; j <found.size(); j++)

if(j != i && (r&found[j])==r)

break;

if(j == found.size())

found_filtered.push_back(r);

}

cout<<"The number of vehicle is:"<<found_filtered.size()<<endl; //number of detected people

//draw the rectangles on img; HOG boxes are somewhat larger than the actual person,

//so shrink them a little

for(i = 0; i <found_filtered.size(); i++)

{

Rect r = found_filtered[i];

r.x += cvRound(r.width*0.1);

r.width = cvRound(r.width*0.7);

r.y += cvRound(r.height*0.1);

r.height = cvRound(r.height*0.7);

// rectangle(img, r.tl(), r.br(), Scalar(0, 255, 0), 3);

cvRectangle(SizeImg,cvPoint(r.x,r.y),cvPoint(r.x+r.width,r.y+r.height),CV_RGB(255,0,0),1);

}

cvShowImage("img",SizeImg);

cvWaitKey(1);

}

*/

return 0;

}

Loading the freshly trained XML file for detection

#include <iostream>

#include <string>

#include <opencv2/core/core.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/imgproc/imgproc.hpp>

#include <opencv2/objdetect/objdetect.hpp>

#include <opencv2/ml/ml.hpp>

using namespace std;

using namespace cv;

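//Class derived from CvSVM: building the detector for setSVMDetector() needs the decision_func parameters of the trained SVM,

//but the CvSVM source shows that decision_func is a protected member, so it can only be reached through a derived class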

class MySVM : public CvSVM

{

public:

//alpha array of the SVM decision function

double * get_alpha_vector()

{

return this->decision_func->alpha;

}

//rho of the SVM decision function, i.e. the offset

float get_rho()

{

return this->decision_func->rho;

}

};

int main()

{

Mat src = imread("5.png");

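HOGDescriptor hog;//HOG descriptor/detector (default 64x128 window)

//hog.setSVMDetector(HOGDescriptor::getDefaultPeopleDetector());//alternative: OpenCV's default people detector

MySVM svm;

svm.load("SVM_DATA.xml");//load the trained SVM model from the XML file

int DescriptorDim;//HOG descriptor dimension, determined by the window size, block size, block stride, cell size and number of bins per cell

DescriptorDim = svm.get_var_count();//feature vector dimension, i.e. the HOG descriptor dimension

int supportVectorNum = svm.get_support_vector_count();//number of support vectors

cout<<"Number of support vectors: "<<supportVectorNum<<endl;

Mat alphaMat = Mat::zeros(1, supportVectorNum, CV_32FC1);//alpha vector, one entry per support vector

Mat supportVectorMat = Mat::zeros(supportVectorNum, DescriptorDim, CV_32FC1);//support vector matrix

Mat resultMat = Mat::zeros(1, DescriptorDim, CV_32FC1);//alpha vector times the support vector matrix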

//copy the support vectors into supportVectorMat

for(int i=0; i<supportVectorNum; i++)

{

const float * pSVData = svm.get_support_vector(i);//pointer to the data of the i-th support vector

for(int j=0; j<DescriptorDim; j++)

{

//cout<<pData[j]<<" ";

supportVectorMat.at<float>(i,j) = pSVData[j];

}

}

//copy the alpha vector into alphaMat

double * pAlphaData = svm.get_alpha_vector();//alpha vector of the SVM decision function

for(int i=0; i<supportVectorNum; i++)

{

alphaMat.at<float>(0,i) = pAlphaData[i];

}

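//compute -(alphaMat * supportVectorMat) into resultMat

//equivalent to gemm(alphaMat, supportVectorMat, -1, 0, 1, resultMat). Why the minus sign: in OpenCV 2.x's two-class CvSVM, a positive value of sum(alpha_i*<sv_i,x>) - rho votes for the first (smaller) label, -1 here, so the weights are negated to make a positive HOG score mean "person"

resultMat = -1 * alphaMat * supportVectorMat;

//this gives a detector usable as the argument of setSVMDetector(const vector<float>& detector)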

vector<float> myDetector;

//copy resultMat into myDetector

for(int i=0; i<DescriptorDim; i++)

{

myDetector.push_back(resultMat.at<float>(0,i));

}

//finally append the offset rho to obtain the detector

myDetector.push_back(svm.get_rho());

cout<<"Detector dimension: "<<myDetector.size()<<endl;

//set the detector of the HOGDescriptor

hog.setSVMDetector(myDetector);

//myHOG.setSVMDetector(HOGDescriptor::getDefaultPeopleDetector());

//save the detector parameters to a file

//ofstream fout("HOGDetectorForOpenCV.txt");

//for(int i=0; i<myDetector.size(); i++)

//{

//fout<<myDetector[i]<<endl;

//}

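vector<Rect> found, found_filtered;//detected rectangles

hog.detectMultiScale(src, found, 0, Size(8,8), Size(32,32), 1.05, 2);//multi-scale detection: hit threshold 0, window stride (8,8), padding (32,32), scale step 1.05, grouping threshold 2

cout<<"Number of rectangles: "<<found.size()<<endl;

//keep every rectangle r that is not nested inside another one; of nested rectangles only the outermost goes into found_filtered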

for(int i=0; i < found.size(); i++)

{

Rect r = found[i];

int j=0;

for(; j < found.size(); j++)

if(j != i && (r & found[j]) == r)

break;

if( j == found.size())

found_filtered.push_back(r);

}

cout<<"Rectangles after filtering: "<<found_filtered.size()<<endl;

//draw the boxes; HOG boxes are somewhat larger than the actual person, so shrink them a little

for(int i=0; i<found_filtered.size(); i++)

{

Rect r = found_filtered[i];

r.x += cvRound(r.width*0.1);

r.width = cvRound(r.width*0.8);

r.y += cvRound(r.height*0.07);

r.height = cvRound(r.height*0.8);

rectangle(src, r.tl(), r.br(), Scalar(0,255,0), 3);

}

imwrite("ImgProcessed.jpg",src);

namedWindow("src",0);

imshow("src",src);

waitKey();//imshow must be followed by waitKey, otherwise the window is never drawn

return 0;

}
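This explains why the detector is built as -Σ α_i·sv_i followed by rho. HOGDescriptor treats the last element of the detector as a bias, which it adds to the dot product of the remaining elements with the window descriptor. The window score therefore equals the negated CvSVM decision value Σ α_i⟨sv_i, x⟩ - rho. As far as I understand OpenCV 2.x's CvSVM, in a two-class problem a positive decision value votes for the first (smaller) label, which is -1 here. Negating the weights therefore makes a positive HOG score mean "person". The following plain C++ sketch checks this identity. All function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Two-class linear SVM decision as read out of CvSVM above:
// d(x) = sum_i alpha_i * <sv_i, x> - rho
double svmDecision(const std::vector<std::vector<float> >& sv, const std::vector<double>& alpha,
                   double rho, const std::vector<float>& x) {
    double d = -rho;
    for (std::size_t i = 0; i < sv.size(); ++i)
        for (std::size_t j = 0; j < x.size(); ++j)
            d += alpha[i] * sv[i][j] * x[j];
    return d;
}

// Detector in the layout built above: w = -sum_i alpha_i * sv_i, then rho appended.
std::vector<float> toHogDetector(const std::vector<std::vector<float> >& sv,
                                 const std::vector<double>& alpha, double rho) {
    std::vector<float> det(sv[0].size(), 0.0f);
    for (std::size_t i = 0; i < sv.size(); ++i)
        for (std::size_t j = 0; j < det.size(); ++j)
            det[j] -= static_cast<float>(alpha[i] * sv[i][j]);
    det.push_back(static_cast<float>(rho));
    return det;
}

// Window score: the last element acts as the bias, added to the dot product with x.
double hogScore(const std::vector<float>& det, const std::vector<float>& x) {
    double s = det.back();
    for (std::size_t j = 0; j < x.size(); ++j) s += det[j] * x[j];
    return s;
}
```

For any descriptor x, hogScore(toHogDetector(sv, alpha, rho), x) equals -svmDecision(sv, alpha, rho, x), up to float rounding.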

Detection results

The result seems slightly better than that of the built-in detector.

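HOG: Histograms of Oriented Gradients for Human Detection

Detecting hard examples in the original negative images with the first-round SVM+HOG classifier

Reading the XML annotation files of the VOC2012 dataset with TinyXML and cropping all person instances into separate files

Pedestrian detection: resources and updates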

http://www.learnopencv.com/histogram-of-oriented-gradients/
