First, the diagram (a modified version):

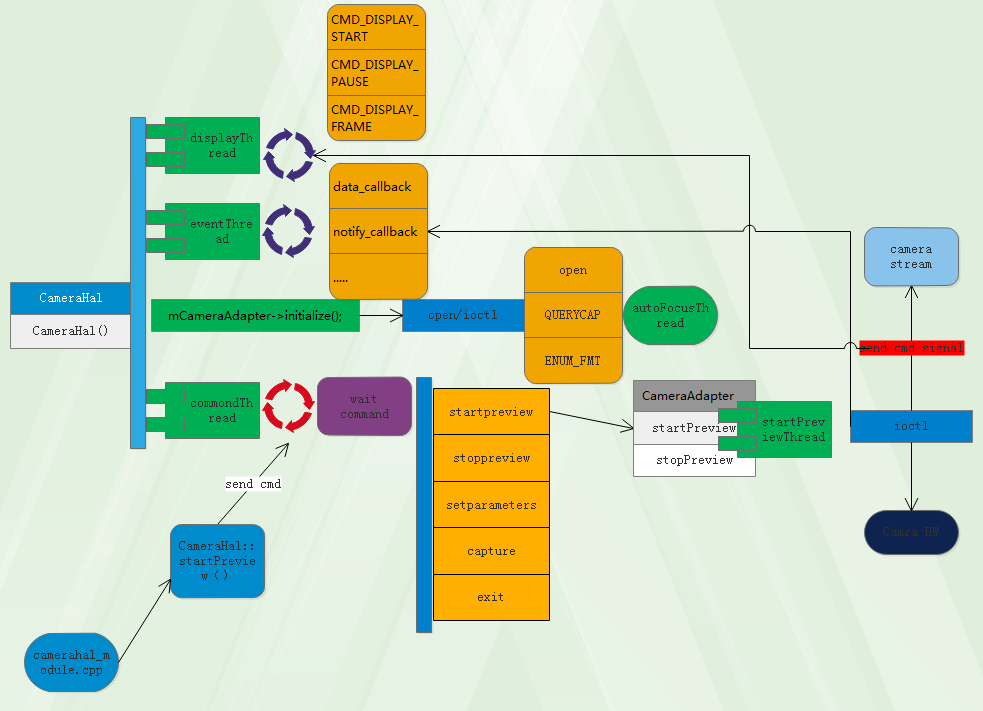

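From the previous articles we know that CameraHal is instantiated during initialize, as part of the open operation. Let's start by looking at what the CameraHal constructor does: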

CameraHal::CameraHal(int cameraId)

:commandThreadCommandQ("commandCmdQ")

{

LOG_FUNCTION_NAME

{

char trace_level[PROPERTY_VALUE_MAX];

int level;

property_get(CAMERAHAL_TRACE_LEVEL_PROPERTY_KEY, trace_level, "0");

sscanf(trace_level,"%d",&level);

setTracerLevel(level);

}

mCamId = cameraId;

mCamFd = -1;

mCommandRunning = -1;

mCameraStatus = 0;

mDisplayAdapter = new DisplayAdapter();

mEventNotifier = new AppMsgNotifier();

#if (CONFIG_CAMERA_MEM == CAMERA_MEM_ION)

mCamMemManager = new IonMemManager();

LOG1("%s(%d): Camera Hal memory is alloced from ION device",__FUNCTION__,__LINE__);

#elif(CONFIG_CAMERA_MEM == CAMERA_MEM_IONDMA)

if((strcmp(gCamInfos[cameraId].driver,"uvcvideo") == 0) //uvc camera

|| (gCamInfos[cameraId].pcam_total_info->mHardInfo.mSensorInfo.mPhy.type == CamSys_Phy_end)// soc cif

) {

gCamInfos[cameraId].pcam_total_info->mIsIommuEnabled = (IOMMU_ENABLED == 1)? true:false;

}

mCamMemManager = new IonDmaMemManager(gCamInfos[cameraId].pcam_total_info->mIsIommuEnabled);

LOG1("%s(%d): Camera Hal memory is alloced from ION device",__FUNCTION__,__LINE__);

#elif(CONFIG_CAMERA_MEM == CAMERA_MEM_PMEM)

if(access(CAMERA_PMEM_NAME, O_RDWR) < 0) {

LOGE("%s(%d): %s isn't registered, CameraHal_Mem current configuration isn't support ION memory!!!",

__FUNCTION__,__LINE__,CAMERA_PMEM_NAME);

} else {

mCamMemManager = new PmemManager((char*)CAMERA_PMEM_NAME);

LOG1("%s(%d): Camera Hal memory is alloced from %s device",__FUNCTION__,__LINE__,CAMERA_PMEM_NAME);

}

#endif

usleep(1000);

mPreviewBuf = new PreviewBufferProvider(mCamMemManager);

mVideoBuf = new BufferProvider(mCamMemManager);

mRawBuf = new BufferProvider(mCamMemManager);

mJpegBuf = new BufferProvider(mCamMemManager);

usleep(1000);

char value[PROPERTY_VALUE_MAX];

property_get(/*CAMERAHAL_TYPE_PROPERTY_KEY*/"sys.cam_hal.type", value, "none");

if (!strcmp(value, "fakecamera")) {

LOGD("it is a fake camera!");

mCameraAdapter = new CameraFakeAdapter(cameraId);

} else {

if((strcmp(gCamInfos[cameraId].driver,"uvcvideo") == 0)) {

LOGD("it is a uvc camera!");

mCameraAdapter = new CameraUSBAdapter(cameraId);

}

else if(gCamInfos[cameraId].pcam_total_info->mHardInfo.mSensorInfo.mPhy.type == CamSys_Phy_Cif){

LOGD("it is a isp soc camera");

if(gCamInfos[cameraId].pcam_total_info->mHardInfo.mSensorInfo.mPhy.info.cif.fmt == CamSys_Fmt_Raw_10b)

mCameraAdapter = new CameraIspSOCAdapter(cameraId);

else

mCameraAdapter = new CameraIspAdapter(cameraId);

}

else if(gCamInfos[cameraId].pcam_total_info->mHardInfo.mSensorInfo.mPhy.type == CamSys_Phy_Mipi){

LOGD("it is a isp camera");

mCameraAdapter = new CameraIspAdapter(cameraId);

}

else{

LOGD("it is a soc camera!");

mCameraAdapter = new CameraSOCAdapter(cameraId);

}

}

//initialize

{

char *call_process = getCallingProcess();

if(strstr(call_process,"com.android.cts.verifier")) {

mCameraAdapter->setImageAllFov(true);

} else {

mCameraAdapter->setImageAllFov(false);

}

}

mCameraAdapter->initialize();

updateParameters(mParameters);

mCameraAdapter->setPreviewBufProvider(mPreviewBuf);

mCameraAdapter->setDisplayAdapterRef(*mDisplayAdapter);

mCameraAdapter->setEventNotifierRef(*mEventNotifier);

mDisplayAdapter->setFrameProvider(mCameraAdapter);

mEventNotifier->setPictureRawBufProvider(mRawBuf);

mEventNotifier->setPictureJpegBufProvider(mJpegBuf);

mEventNotifier->setVideoBufProvider(mVideoBuf);

mEventNotifier->setFrameProvider(mCameraAdapter);

//command thread

mCommandThread = new CommandThread(this);

mCommandThread->run("CameraCmdThread", ANDROID_PRIORITY_URGENT_DISPLAY);

bool dataCbFrontMirror;

bool dataCbFrontFlip;

#if CONFIG_CAMERA_FRONT_MIRROR_MDATACB

if (gCamInfos[cameraId].facing_info.facing == CAMERA_FACING_FRONT) {

#if CONFIG_CAMERA_FRONT_MIRROR_MDATACB_ALL

dataCbFrontMirror = true;

#else

const char* cameraCallProcess = getCallingProcess();

if (strstr(CONFIG_CAMERA_FRONT_MIRROR_MDATACB_APK,cameraCallProcess)) {

dataCbFrontMirror = true;

} else {

dataCbFrontMirror = false;

}

if (strstr(CONFIG_CAMERA_FRONT_FLIP_MDATACB_APK,cameraCallProcess)) {

dataCbFrontFlip = true;

} else {

dataCbFrontFlip = false;

}

#endif

} else {

dataCbFrontMirror = false;

dataCbFrontFlip = false;

}

#else

dataCbFrontMirror = false;

#endif

mEventNotifier->setDatacbFrontMirrorFlipState(dataCbFrontMirror,dataCbFrontFlip);

LOG_FUNCTION_NAME_EXIT

}

1. mDisplayAdapter = new DisplayAdapter(); This starts a thread. Looking into the constructor:

mDisplayThread = new DisplayThread(this);

mDisplayThread->run("DisplayThread",ANDROID_PRIORITY_DISPLAY);

Once started, the thread sits in a loop, waiting for messages from other threads:

void DisplayAdapter::displayThread()

{

int err,stride,i,queue_cnt;

int dequeue_buf_index,queue_buf_index,queue_display_index;

buffer_handle_t *hnd = NULL;

NATIVE_HANDLE_TYPE *phnd;

GraphicBufferMapper& mapper = GraphicBufferMapper::get();

Message msg;

void *y_uv[3];

int frame_used_flag = -1;

Rect bounds;

LOG_FUNCTION_NAME

while (mDisplayRuning != STA_DISPLAY_STOP) {

display_receive_cmd:

if (displayThreadCommandQ.isEmpty() == false ) {

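displayThreadCommandQ.get(&msg);

This thread is dedicated to display. The display target is supplied when the upper layer calls setPreviewTarget, which passes down the display window's buffer producer (an IGraphicBufferProducer).

2. mEventNotifier = new AppMsgNotifier();

Its constructor starts two threads: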

//create thread

mCameraAppMsgThread = new CameraAppMsgThread(this);

mCameraAppMsgThread->run("AppMsgThread",ANDROID_PRIORITY_DISPLAY);

mEncProcessThread = new EncProcessThread(this);

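mEncProcessThread->run("EncProcessThread",ANDROID_PRIORITY_NORMAL);

The first thread is the more important one. It receives the messages sent by the preview thread and is told to call the data back up to the upper layer: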

void AppMsgNotifier::eventThread()

{

bool loop = true;

Message msg;

int index,err = 0;

FramInfo_s *frame = NULL;

int frame_used_flag = -1;

LOG_FUNCTION_NAME

while (loop) {

memset(&msg,0,sizeof(msg));

eventThreadCommandQ.get(&msg);

switch (msg.command)

{

case CameraAppMsgThread::CMD_EVENT_PREVIEW_DATA_CB:

frame = (FramInfo_s*)msg.arg2;

processPreviewDataCb(frame);

//return frame

frame_used_flag = (int)msg.arg3;

mFrameProvider->returnFrame(frame->frame_index,frame_used_flag);

break;

....

....

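....

As for the message-passing mechanism inside the camera HAL, it is fairly simple: it is built on a Linux pipe, so each queue is a one-way channel connecting exactly two ends, a sender and a receiver (here, two threads of the HAL).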
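To make the pipe idea concrete, here is a minimal sketch of such a one-way queue. It is not the HAL's actual MessageQueue class, which is not shown in this article: the `Message` layout and the `PipeMessageQueue` name are assumptions made for illustration only.

```cpp
#include <cassert>
#include <cerrno>
#include <cstddef>
#include <unistd.h>

// Hypothetical message layout, loosely modeled on the Message used in the listings.
struct Message {
    int   command;
    void* arg1;
    void* arg2;
    void* arg3;
};

// One-way queue backed by a pipe: put() writes a message, get() blocks until one arrives.
class PipeMessageQueue {
public:
    PipeMessageQueue() {
        if (pipe(fds_) != 0) {
            fds_[0] = fds_[1] = -1;
        }
    }
    ~PipeMessageQueue() {
        if (fds_[0] >= 0) close(fds_[0]);
        if (fds_[1] >= 0) close(fds_[1]);
    }
    PipeMessageQueue(const PipeMessageQueue&) = delete;
    PipeMessageQueue& operator=(const PipeMessageQueue&) = delete;

    // A message is far smaller than PIPE_BUF, so each write is atomic
    // and messages from different senders never interleave.
    bool put(const Message& msg) {
        return write(fds_[1], &msg, sizeof(msg)) == static_cast<ssize_t>(sizeof(msg));
    }

    // Reads exactly one message, retrying on short reads and EINTR.
    bool get(Message* msg) {
        char* p = reinterpret_cast<char*>(msg);
        std::size_t got = 0;
        while (got < sizeof(*msg)) {
            ssize_t n = read(fds_[0], p + got, sizeof(*msg) - got);
            if (n < 0 && errno == EINTR) continue;
            if (n <= 0) return false;
            got += static_cast<std::size_t>(n);
        }
        return true;
    }

private:
    int fds_[2];
};
```

The sender and the receiver only share the queue object. The payload travels as raw pointers in arg1..arg3, which works because both ends live in the same address space.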

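The second thread handles pictures. When the shutter button is pressed, a cmd is sent to this thread, which performs the JPEG encode/decode work and saves the result locally: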

void AppMsgNotifier::encProcessThread()

{

bool loop = true;

Message msg;

int err = 0;

int frame_used_flag = -1;

LOG_FUNCTION_NAME

while (loop) {

memset(&msg,0,sizeof(msg));

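encProcessThreadCommandQ.get(&msg);

3. Next comes the initialization of the buffer providers (mPreviewBuf, mVideoBuf, mRawBuf and mJpegBuf).

4. Then the camera type is selected, and this is where the UVC camera comes in. Depending on the choice, mCameraAdapter is instantiated as a different camera adapter, so the behaviour behind its interface changes accordingly. After that the camera itself is initialized: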

int CameraAdapter::initialize()

{

int ret = -1;

//create focus thread

LOG_FUNCTION_NAME

if((ret = cameraCreate(mCamId)) < 0)

return ret;

initDefaultParameters(mCamId);

LOG_FUNCTION_NAME_EXIT

return ret;

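}

The first step creates the camera. This talks to the driver: there is a real open call, and the resulting fd is saved so that later ioctl calls can use it to communicate with the driver.

Let's look at create first: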

//talk to driver

//open camera

int CameraAdapter::cameraCreate(int cameraId)

{

int err = 0,iCamFd;

int pmem_fd,i,j,cameraCnt;

char cam_path[20];

char cam_num[3];

char *ptr_tmp;

struct v4l2_fmtdesc fmtdesc;

char *cameraDevicePathCur = NULL;

char decode_name[50];

LOG_FUNCTION_NAME

memset(decode_name,0x00,sizeof(decode_name));

mLibstageLibHandle = dlopen("libstagefright.so", RTLD_NOW);

if (mLibstageLibHandle == NULL) {

LOGE("%s(%d): open libstagefright.so fail",__FUNCTION__,__LINE__);

} else {

mMjpegDecoder.get = (getMjpegDecoderFun)dlsym(mLibstageLibHandle, "get_class_On2JpegDecoder");

}

if (mMjpegDecoder.get == NULL) {

if (mLibstageLibHandle != NULL)

dlclose(mLibstageLibHandle); /* ddl@rock-chips.com: v0.4.0x27 */

mLibstageLibHandle = dlopen("librk_on2.so", RTLD_NOW);

if (mLibstageLibHandle == NULL) {

LOGE("%s(%d): open librk_on2.so fail",__FUNCTION__,__LINE__);

} else {

mMjpegDecoder.get = (getMjpegDecoderFun)dlsym(mLibstageLibHandle, "get_class_On2JpegDecoder");

if (mMjpegDecoder.get == NULL) {

LOGE("%s(%d): dlsym get_class_On2JpegDecoder fail",__FUNCTION__,__LINE__);

} else {

strcat(decode_name,"dec_oneframe_On2JpegDecoder");

}

}

} else {

strcat(decode_name,"dec_oneframe_class_On2JpegDecoder");

}

if (mMjpegDecoder.get != NULL) {

mMjpegDecoder.decoder = mMjpegDecoder.get();

if (mMjpegDecoder.decoder==NULL) {

LOGE("%s(%d): get mjpeg decoder failed",__FUNCTION__,__LINE__);

} else {

mMjpegDecoder.destroy =(destroyMjpegDecoderFun)dlsym(mLibstageLibHandle, "destroy_class_On2JpegDecoder");

if (mMjpegDecoder.destroy == NULL)

LOGE("%s(%d): dlsym destroy_class_On2JpegDecoder fail",__FUNCTION__,__LINE__);

mMjpegDecoder.init = (initMjpegDecoderFun)dlsym(mLibstageLibHandle, "init_class_On2JpegDecoder");

if (mMjpegDecoder.init == NULL)

LOGE("%s(%d): dlsym init_class_On2JpegDecoder fail",__FUNCTION__,__LINE__);

mMjpegDecoder.deInit =(deInitMjpegDecoderFun)dlsym(mLibstageLibHandle, "deinit_class_On2JpegDecoder");

if (mMjpegDecoder.deInit == NULL)

LOGE("%s(%d): dlsym deinit_class_On2JpegDecoder fail",__FUNCTION__,__LINE__);

mMjpegDecoder.decode =(mjpegDecodeOneFrameFun)dlsym(mLibstageLibHandle, decode_name);

if (mMjpegDecoder.decode == NULL)

LOGE("%s(%d): dlsym %s fail",__FUNCTION__,__LINE__,decode_name);

if ((mMjpegDecoder.deInit != NULL) && (mMjpegDecoder.init != NULL) &&

(mMjpegDecoder.destroy != NULL) && (mMjpegDecoder.decode != NULL)) {

mMjpegDecoder.state = mMjpegDecoder.init(mMjpegDecoder.decoder);

}

}

}

cameraDevicePathCur = (char*)&gCamInfos[cameraId].device_path[0];

iCamFd = open(cameraDevicePathCur, O_RDWR);

if (iCamFd < 0) {

LOGE("%s(%d): open camera%d(%s) is failed",__FUNCTION__,__LINE__,cameraId,cameraDevicePathCur);

goto exit;

}

memset(&mCamDriverCapability, 0, sizeof(struct v4l2_capability));

err = ioctl(iCamFd, VIDIOC_QUERYCAP, &mCamDriverCapability);

if (err < 0) {

LOGE("%s(%d): %s query device's capability failed.\n",__FUNCTION__,__LINE__,cam_path);

goto exit1;

}

LOGD("Camera driver: %s Driver version: %d.%d.%d CameraHal version: %d.%d.%d ",mCamDriverCapability.driver,

(mCamDriverCapability.version>>16) & 0xff,(mCamDriverCapability.version>>8) & 0xff,

mCamDriverCapability.version & 0xff,(CONFIG_CAMERAHAL_VERSION>>16) & 0xff,(CONFIG_CAMERAHAL_VERSION>>8) & 0xff,

CONFIG_CAMERAHAL_VERSION & 0xff);

memset(&fmtdesc, 0, sizeof(fmtdesc));

fmtdesc.index = 0;

fmtdesc.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

while (ioctl(iCamFd, VIDIOC_ENUM_FMT, &fmtdesc) == 0) {

mCamDriverSupportFmt[fmtdesc.index] = fmtdesc.pixelformat;

LOGD("mCamDriverSupportFmt: fmt = %d,index = %d",fmtdesc.pixelformat,fmtdesc.index);

fmtdesc.index++;

}

i = 0;

while (CameraHal_SupportFmt[i]) {

LOG1("CameraHal_SupportFmt:fmt = %d,index = %d",CameraHal_SupportFmt[i],i);

j = 0;

while (mCamDriverSupportFmt[j]) {

if (mCamDriverSupportFmt[j] == CameraHal_SupportFmt[i]) {

if ((mCamDriverSupportFmt[j] == V4L2_PIX_FMT_MJPEG) && (mMjpegDecoder.state == -1))

continue;

break;

}

j++;

}

if (mCamDriverSupportFmt[j] == CameraHal_SupportFmt[i]) {

break;

}

i++;

}

if (CameraHal_SupportFmt[i] == 0x00) {

LOGE("%s(%d): all camera driver support format is not supported in CameraHal!!",__FUNCTION__,__LINE__);

j = 0;

while (mCamDriverSupportFmt[j]) {

LOG1("pixelformat = '%c%c%c%c'",

mCamDriverSupportFmt[j] & 0xFF, (mCamDriverSupportFmt[j] >> 8) & 0xFF,

(mCamDriverSupportFmt[j] >> 16) & 0xFF, (mCamDriverSupportFmt[j] >> 24) & 0xFF);

j++;

}

goto exit1;

} else {

mCamDriverPreviewFmt = CameraHal_SupportFmt[i];

LOGD("%s(%d): mCamDriverPreviewFmt(%c%c%c%c) is cameraHal and camera driver is also supported!!",__FUNCTION__,__LINE__,

mCamDriverPreviewFmt & 0xFF, (mCamDriverPreviewFmt >> 8) & 0xFF,

(mCamDriverPreviewFmt >> 16) & 0xFF, (mCamDriverPreviewFmt >> 24) & 0xFF);

LOGD("mCamDriverPreviewFmt = %d",mCamDriverPreviewFmt);

}

LOGD("%s(%d): Current driver is %s, v4l2 memory is %s",__FUNCTION__,__LINE__,mCamDriverCapability.driver,

(mCamDriverV4l2MemType==V4L2_MEMORY_MMAP)?"V4L2_MEMORY_MMAP":"V4L2_MEMORY_OVERLAY");

mCamFd = iCamFd;

//create focus thread for soc or usb camera.

mAutoFocusThread = new AutoFocusThread(this);

mAutoFocusThread->run("AutoFocusThread", ANDROID_PRIORITY_URGENT_DISPLAY);

mExitAutoFocusThread = false;

LOG_FUNCTION_NAME_EXIT

return 0;

exit1:

if (iCamFd > 0) {

close(iCamFd);

iCamFd = -1;

}

exit:

LOGE("%s(%d): exit with error -1",__FUNCTION__,__LINE__);

return -1;

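}

There is a lot of code here, so let's go through it step by step:

1. The first part deals with the MJPEG decoder. I haven't dug into it yet, so I'll skip it for now;

2. Next the camera is actually opened by device name. The name was saved earlier, during initialization: cameraDevicePathCur = (char*)&gCamInfos[cameraId].device_path[0];

1) Once the camera is open, the first step is to query its capabilities:

err = ioctl(iCamFd, VIDIOC_QUERYCAP, &mCamDriverCapability);

2) The second step enumerates the formats the camera supports:

ioctl(iCamFd, VIDIOC_ENUM_FMT, &fmtdesc)

3) Finally the file descriptor is saved in a member variable: mCamFd = iCamFd;

3. Start the autofocus thread: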

mAutoFocusThread = new AutoFocusThread(this);

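mAutoFocusThread->run("AutoFocusThread", ANDROID_PRIORITY_URGENT_DISPLAY);

Back in initialize, the next call initializes the camera parameters:

void CameraUSBAdapter::initDefaultParameters(int camFd)

This function is long, so I won't paste it here. It mainly sets up the camera parameters and saves the current values in an instance, so that later, when the upper layer asks for the parameters and nothing has changed, there is no need to talk to the driver again;

The main parameters it sets are listed below:

1. ioctl(mCamFd, VIDIOC_ENUM_FRAMESIZES, &fsize); enumerates the camera's frame sizes, mainly width and height

On RK, though, the preview size defaults to 640*480. All of this is saved in the params instance, which holds every camera parameter and is initialized here first.

2. /* set framerate */ ret = ioctl(mCamFd, VIDIOC_S_PARM, &setfps); pushes the frame rate down to the driver and saves the setting in params

3. ioctl(mCamFd, VIDIOC_QUERYCTRL, &whiteBalance) queries the white balance control to fill in the white balance parameters

4. ioctl(mCamFd, VIDIOC_QUERYCTRL, &scene) scene setting

5. ioctl(mCamFd, VIDIOC_QUERYCTRL, &anti_banding) /* anti-banding setting */

6. ioctl(mCamFd, VIDIOC_QUERYCTRL, &flashMode) /* flash mode setting */

7. /* focus mode setting */ though this one is hard-coded in params

8. ioctl(mCamFd, VIDIOC_QUERYCTRL, &facedetect) //hardware face detect settings

9. ioctl(mCamFd, VIDIOC_QUERYCTRL, &query_control) /* Exposure setting */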

………..
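As an illustration of item 2, the frame rate request can be sketched as follows. This is only a sketch under assumptions: the helper names `makeFrameRateParm` and `setFrameRate` are made up here, and the real initDefaultParameters is not reproduced in this article.

```cpp
#include <cassert>
#include <cstring>
#include <linux/videodev2.h>
#include <sys/ioctl.h>

// Builds the v4l2_streamparm for a capture stream running at `fps` frames per second.
v4l2_streamparm makeFrameRateParm(unsigned int fps) {
    v4l2_streamparm parm;
    std::memset(&parm, 0, sizeof(parm));
    parm.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
    // V4L2 expresses the rate as a frame interval of numerator/denominator seconds.
    parm.parm.capture.timeperframe.numerator = 1;
    parm.parm.capture.timeperframe.denominator = fps;
    return parm;
}

// Pushes the frame rate to the driver; returns false if the ioctl fails.
bool setFrameRate(int fd, unsigned int fps) {
    v4l2_streamparm parm = makeFrameRateParm(fps);
    return ioctl(fd, VIDIOC_S_PARM, &parm) == 0;
}
```

A driver is free to adjust the interval it is given, so code that cares about the exact rate should read the struct back after the call instead of assuming the request was honoured as-is.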

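At the end, cameraConfig(params,true,isRestartPreview); is called to push all the settings just stored in params down to the driver. Later set commands from the upper layer go through this same function.

Its logic is fairly clear: it checks flags to decide what to set, then talks to the driver accordingly.

For example, this is the check used for white balance:

params.get(CameraParameters::KEY_SUPPORTED_WHITE_BALANCE)

I won't go through it in detail here.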
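For context, CameraParameters stores each "supported" list as a comma-separated string, for example "auto,daylight,incandescent". A check of the kind cameraConfig needs can be sketched like this. The helper name `isValueSupported` is hypothetical, and the actual test inside cameraConfig is not shown in this article.

```cpp
#include <cassert>
#include <sstream>
#include <string>

// Returns true if `value` is one of the comma-separated entries in `supported`.
bool isValueSupported(const std::string& supported, const std::string& value) {
    std::stringstream ss(supported);
    std::string item;
    while (std::getline(ss, item, ',')) {
        if (item == value) {
            return true;
        }
    }
    return false;
}
```

Comparing whole entries matters here: a plain substring search would wrongly accept "day" against a list that only contains "daylight".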

Back to the CameraHal initialization:

5. Finally, the commandThread is started:

mCommandThread = new CommandThread(this);

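mCommandThread->run("CameraCmdThread", ANDROID_PRIORITY_URGENT_DISPLAY);

This thread receives the various cmd messages coming from the upper layer, such as startPreview, stopPreview and setParameters, and acts on each one. For startPreview it starts a preview thread.

That completes the CameraHal initialization. The real thing is fairly complex; I have only covered the few threads that matter most. From here on the HAL waits for the upper layer to act.

The interfaces from libcameraservice into the HAL are all implemented by sending cmds:

In CameraHal_module.cpp: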

int camera_start_preview(struct camera_device * device)

{

int rv = -EINVAL;

rk_camera_device_t* rk_dev = NULL;

LOGV("%s", __FUNCTION__);

if(!device)

return rv;

rk_dev = (rk_camera_device_t*) device;

rv = gCameraHals[rk_dev->cameraid]->startPreview();

return rv;

}

This ends up calling:

int CameraHal::startPreview()

{

LOG_FUNCTION_NAME

Message msg;

Mutex::Autolock lock(mLock);

if ((mCommandThread != NULL)) {

msg.command = CMD_PREVIEW_START;

msg.arg1 = NULL;

setCamStatus(CMD_PREVIEW_START_PREPARE, 1);

commandThreadCommandQ.put(&msg);

}

// mPreviewCmdReceived = true;

setCamStatus(STA_PREVIEW_CMD_RECEIVED, 1);

LOG_FUNCTION_NAME_EXIT

return NO_ERROR ;

}

Here commandThreadCommandQ.put(&msg); is what sends the message to commandThread.

The commandThread function loops on:

commandThreadCommandQ.get(&msg);// receives camera cmds, i.e. upper-layer calls into the interfaces below, converted into cmds

case CMD_PREVIEW_START:

case CMD_PREVIEW_STOP:

case CMD_SET_PREVIEW_WINDOW:

case CMD_SET_PARAMETERS:

case CMD_PREVIEW_CAPTURE_CANCEL:

case CMD_CONTINUOS_PICTURE:

case CMD_AF_START:

case CMD_AF_CANCEL:

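case CMD_EXIT:

For CMD_PREVIEW_START, the handler calls:

err=mCameraAdapter->startPreview(app_previw_w,app_preview_h,drv_w, drv_h, 0, false);

mCameraAdapter is the camera adapter class. The camera type is chosen when the CameraHal constructor runs; here it is usually a standard UVC camera.

err = mDisplayAdapter->startDisplay(app_previw_w, app_preview_h);

This is called after the preview thread has been started, to begin display. It ends up sending a msg to displayThread: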

int DisplayAdapter::startDisplay(int width, int height)

{

int err = NO_ERROR;

Message msg;

Semaphore sem;

LOG_FUNCTION_NAME

mDisplayLock.lock();

if (mDisplayRuning == STA_DISPLAY_RUNNING) {

LOGD("%s(%d): display thread is already run",__FUNCTION__,__LINE__);

goto cameraDisplayThreadStart_end;

}

mDisplayWidth = width;

mDisplayHeight = height;

setDisplayState(CMD_DISPLAY_START_PREPARE);

msg.command = CMD_DISPLAY_START;

sem.Create();

msg.arg1 = (void*)(&sem);

displayThreadCommandQ.put(&msg);

mDisplayCond.signal();

cameraDisplayThreadStart_end:

mDisplayLock.unlock();

if(msg.arg1){

sem.Wait();

if(mDisplayState != CMD_DISPLAY_START_DONE)

err = -1;

}

LOG_FUNCTION_NAME_EXIT

return err;

}

Below is the display thread loop:

void DisplayAdapter::displayThread()

{

int err,stride,i,queue_cnt;

int dequeue_buf_index,queue_buf_index,queue_display_index;

buffer_handle_t *hnd = NULL;

NATIVE_HANDLE_TYPE *phnd;

GraphicBufferMapper& mapper = GraphicBufferMapper::get();

Message msg;

void *y_uv[3];

int frame_used_flag = -1;

Rect bounds;

LOG_FUNCTION_NAME

while (mDisplayRuning != STA_DISPLAY_STOP) {

display_receive_cmd:

if (displayThreadCommandQ.isEmpty() == false ) {

displayThreadCommandQ.get(&msg);

......

......

Now let's continue with the startPreview path, in:

status_t CameraAdapter::startPreview(int preview_w,int preview_h,int w, int h, int fmt,bool is_capture)

{

//create buffer

LOG_FUNCTION_NAME

unsigned int frame_size = 0,i;

struct bufferinfo_s previewbuf;

int ret = 0,buf_count = CONFIG_CAMERA_PREVIEW_BUF_CNT;

LOGD("%s%d:preview_w = %d,preview_h = %d,drv_w = %d,drv_h = %d",__FUNCTION__,__LINE__,preview_w,preview_h,w,h);

mPreviewFrameIndex = 0;

mPreviewErrorFrameCount = 0;

switch (mCamDriverPreviewFmt)

{

case V4L2_PIX_FMT_NV12:

case V4L2_PIX_FMT_YUV420:

frame_size = w*h*3/2;

break;

case V4L2_PIX_FMT_NV16:

case V4L2_PIX_FMT_YUV422P:

default:

frame_size = w*h*2;

break;

}

//for test capture

if(is_capture){

buf_count = 1;

}

if(mPreviewBufProvider->createBuffer(buf_count,frame_size,PREVIEWBUFFER) < 0)

{

LOGE("%s%d:create preview buffer failed",__FUNCTION__,__LINE__);

return -1;

}

//set size

if(cameraSetSize(w, h, mCamDriverPreviewFmt,is_capture)<0){

ret = -1;

goto start_preview_end;

}

mCamDrvWidth = w;

mCamDrvHeight = h;

mCamPreviewH = preview_h;

mCamPreviewW = preview_w;

memset(mPreviewFrameInfos,0,sizeof(mPreviewFrameInfos));

//camera start

if(cameraStart() < 0){

ret = -1;

goto start_preview_end;

}

//new preview thread

mCameraPreviewThread = new CameraPreviewThread(this);

mCameraPreviewThread->run("CameraPreviewThread",ANDROID_PRIORITY_DISPLAY);

mPreviewRunning = 1;

LOGD("%s(%d):OUT",__FUNCTION__,__LINE__);

return 0;

start_preview_end:

mCamDrvWidth = 0;

mCamDrvHeight = 0;

mCamPreviewH = 0;

mCamPreviewW = 0;

return ret;

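}

It does three main things: 1. creates the buffers according to the format and sets the camera size; 2. performs the steps needed to start the camera; 3. starts the preview thread.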
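The buffer size used in step 1 follows directly from the pixel format. The switch in startPreview can be restated as a small standalone function; the name `previewFrameSize` is made up here, and the logic simply mirrors the listing above.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <linux/videodev2.h>

// Bytes needed for one w x h frame in the given V4L2 pixel format.
std::size_t previewFrameSize(std::uint32_t fourcc, unsigned int w, unsigned int h) {
    switch (fourcc) {
    case V4L2_PIX_FMT_NV12:
    case V4L2_PIX_FMT_YUV420:
        // 4:2:0 formats: full-size Y plane plus chroma at a quarter resolution, 12 bits per pixel.
        return static_cast<std::size_t>(w) * h * 3 / 2;
    case V4L2_PIX_FMT_NV16:
    case V4L2_PIX_FMT_YUV422P:
    default:
        // 4:2:2 formats and anything unknown: 16 bits per pixel.
        return static_cast<std::size_t>(w) * h * 2;
    }
}
```

For the default 640*480 preview this gives 460800 bytes per NV12 buffer and 614400 bytes per NV16 buffer.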

In cameraSetSize(w, h, mCamDriverPreviewFmt,is_capture):

int CameraAdapter::cameraSetSize(int w, int h, int fmt, bool is_capture)

{

int err=0;

struct v4l2_format format;

/* Set preview format */

format.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

format.fmt.pix.width = w;

format.fmt.pix.height = h;

format.fmt.pix.pixelformat = fmt;

format.fmt.pix.field = V4L2_FIELD_NONE; /* ddl@rock-chips.com : field must be initialised for Linux kernel in 2.6.32 */

LOGD("%s(%d):IN, w = %d,h = %d",__FUNCTION__,__LINE__,w,h);

if (is_capture) { /* ddl@rock-chips.com: v0.4.1 add capture and preview check */

format.fmt.pix.priv = 0xfefe5a5a;

} else {

format.fmt.pix.priv = 0x5a5afefe;

}

err = ioctl(mCamFd, VIDIOC_S_FMT, &format);

if ( err < 0 ){

LOGE("%s(%d): VIDIOC_S_FMT failed",__FUNCTION__,__LINE__);

} else {

LOG1("%s(%d): VIDIOC_S_FMT %dx%d '%c%c%c%c'",__FUNCTION__,__LINE__,format.fmt.pix.width, format.fmt.pix.height,

fmt & 0xFF, (fmt >> 8) & 0xFF,(fmt >> 16) & 0xFF, (fmt >> 24) & 0xFF);

}

return err;

}

The code above should look familiar: fill in the format struct, then call ioctl to push the setting down to the driver.
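A slightly more defensive variant of the same pattern is sketched below. The helper names are hypothetical, and the RK-specific priv magic values from the listing are left out on purpose. The one real difference is that the struct is zeroed before use, which the original function skips.

```cpp
#include <cassert>
#include <cstring>
#include <linux/videodev2.h>
#include <sys/ioctl.h>

// Fills a v4l2_format for a progressive capture stream of the given size and fourcc.
v4l2_format makeCaptureFormat(unsigned int w, unsigned int h, unsigned int fourcc) {
    v4l2_format format;
    std::memset(&format, 0, sizeof(format));  // avoid passing stack garbage to the driver
    format.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
    format.fmt.pix.width = w;
    format.fmt.pix.height = h;
    format.fmt.pix.pixelformat = fourcc;
    format.fmt.pix.field = V4L2_FIELD_NONE;
    return format;
}

// Applies the format; on success the driver may have adjusted the fields in *format.
bool setCaptureFormat(int fd, v4l2_format* format) {
    return ioctl(fd, VIDIOC_S_FMT, format) == 0;
}
```

Because VIDIOC_S_FMT is allowed to change the requested width and height to something the hardware supports, it is worth comparing the returned values against what was asked for before sizing any buffers.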

cameraStart() is mainly about requesting the buffers and mapping them:

int CameraAdapter::cameraStart()

{

int preview_size,i;

int err;

int nSizeBytes;

int buffer_count;

struct v4l2_format format;

enum v4l2_buf_type type;

struct v4l2_requestbuffers creqbuf;

struct v4l2_buffer buffer;

CameraParameters tmpparams;

LOG_FUNCTION_NAME

buffer_count = mPreviewBufProvider->getBufCount();

creqbuf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

creqbuf.memory = mCamDriverV4l2MemType;

creqbuf.count = buffer_count;

if (ioctl(mCamFd, VIDIOC_REQBUFS, &creqbuf) < 0) {

LOGE ("%s(%d): VIDIOC_REQBUFS Failed. %s",__FUNCTION__,__LINE__, strerror(errno));

goto fail_reqbufs;

}

if (buffer_count == 0) {

LOGE("%s(%d): preview buffer havn't alloced",__FUNCTION__,__LINE__);

goto fail_reqbufs;

}

memset(mCamDriverV4l2Buffer, 0x00, sizeof(mCamDriverV4l2Buffer));

for (int i = 0; i < buffer_count; i++) {

memset(&buffer, 0, sizeof(struct v4l2_buffer));

buffer.type = creqbuf.type;

buffer.memory = creqbuf.memory;

buffer.flags = 0;

buffer.index = i;

if (ioctl(mCamFd, VIDIOC_QUERYBUF, &buffer) < 0) {

LOGE("%s(%d): VIDIOC_QUERYBUF Failed",__FUNCTION__,__LINE__);

goto fail_bufalloc;

}

if (buffer.memory == V4L2_MEMORY_OVERLAY) {

buffer.m.offset = mPreviewBufProvider->getBufPhyAddr(i);

mCamDriverV4l2Buffer[i] = (char*)mPreviewBufProvider->getBufVirAddr(i);

} else if (buffer.memory == V4L2_MEMORY_MMAP) {

mCamDriverV4l2Buffer[i] = (char*)mmap(0 /* start anywhere */ ,

buffer.length, PROT_READ, MAP_SHARED, mCamFd,

buffer.m.offset);

if (mCamDriverV4l2Buffer[i] == MAP_FAILED) {

LOGE("%s(%d): Unable to map buffer(length:0x%x offset:0x%x) %s(err:%d)\n",__FUNCTION__,__LINE__, buffer.length,buffer.m.offset,strerror(errno),errno);

goto fail_bufalloc;

}

}

mCamDriverV4l2BufferLen = buffer.length;

mPreviewBufProvider->setBufferStatus(i, 1,PreviewBufferProvider::CMD_PREVIEWBUF_WRITING);

err = ioctl(mCamFd, VIDIOC_QBUF, &buffer);

if (err < 0) {

LOGE("%s(%d): VIDIOC_QBUF Failed,err=%d[%s]\n",__FUNCTION__,__LINE__,err, strerror(errno));

mPreviewBufProvider->setBufferStatus(i, 0,PreviewBufferProvider::CMD_PREVIEWBUF_WRITING);

goto fail_bufalloc;

}

}

mPreviewErrorFrameCount = 0;

mPreviewFrameIndex = 0;

cameraStream(true);

LOG_FUNCTION_NAME_EXIT

return 0;

fail_bufalloc:

mPreviewBufProvider->freeBuffer();

fail_reqbufs:

LOGE("%s(%d): exit with error(%d)",__FUNCTION__,__LINE__,-1);

return -1;

}

Requesting buffers is a three-step routine:

ioctl(mCamFd, VIDIOC_REQBUFS, &creqbuf)

ioctl(mCamFd, VIDIOC_QUERYBUF, &buffer)

ioctl(mCamFd, VIDIOC_QBUF, &buffer);

Once everything is ready, cameraStream(true); is called to start streaming:

int CameraAdapter::cameraStream(bool on)

{

int err = 0;

int cmd ;

enum v4l2_buf_type type;

LOGD("%s(%d):on = %d",__FUNCTION__,__LINE__,on);

type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

cmd = (on)?VIDIOC_STREAMON:VIDIOC_STREAMOFF;

mCamDriverStreamLock.lock();

mCamDriverStream = on;

err = ioctl(mCamFd, cmd, &type);

if (err < 0) {

LOGE("%s(%d): %s Failed",__FUNCTION__,__LINE__,((on)?"VIDIOC_STREAMON":"VIDIOC_STREAMOFF"));

goto cameraStream_end;

}

//mCamDriverStream = on;

cameraStream_end:

mCamDriverStreamLock.unlock();

return err;

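}

With that, all of the camera's initialization and preparation is done. From here on, getting data only takes queue buffer and dequeue buffer operations against the driver.

Back to startPreview: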

mCameraPreviewThread = new CameraPreviewThread(this);

mCameraPreviewThread->run("CameraPreviewThread",ANDROID_PRIORITY_DISPLAY);

This starts the preview thread:

void CameraAdapter::previewThread(){

bool loop = true;

FramInfo_s* tmpFrame = NULL;

int buffer_log = 0;

int ret = 0;

while(loop){

//get a frame

//fill frame info

//dispatch a frame

tmpFrame = NULL;

mCamDriverStreamLock.lock();

if (mCamDriverStream == false) {

mCamDriverStreamLock.unlock();

break;

}

mCamDriverStreamLock.unlock();

ret = getFrame(&tmpFrame);

// LOG2("%s(%d),frame addr = %p,%dx%d,index(%d)",__FUNCTION__,__LINE__,tmpFrame,tmpFrame->frame_width,tmpFrame->frame_height,tmpFrame->frame_index);

if((ret!=-1) && (!camera_device_error) && (mCamDriverStream == true)){

mPreviewBufProvider->setBufferStatus(tmpFrame->frame_index, 0,PreviewBufferProvider::CMD_PREVIEWBUF_WRITING);

//set preview buffer status

ret = reprocessFrame(tmpFrame);

if(ret < 0){

returnFrame(tmpFrame->frame_index,buffer_log);

continue;

}

buffer_log = 0;

//display ?

if(mRefDisplayAdapter->isNeedSendToDisplay())

buffer_log |= PreviewBufferProvider::CMD_PREVIEWBUF_DISPING;

//video enc ?

if(mRefEventNotifier->isNeedSendToVideo())

buffer_log |= PreviewBufferProvider::CMD_PREVIEWBUF_VIDEO_ENCING;

//picture ?

if(mRefEventNotifier->isNeedSendToPicture())

buffer_log |= PreviewBufferProvider::CMD_PREVIEWBUF_SNAPSHOT_ENCING;

//preview data callback ?

if(mRefEventNotifier->isNeedSendToDataCB())

buffer_log |= PreviewBufferProvider::CMD_PREVIEWBUF_DATACB;

mPreviewBufProvider->setBufferStatus(tmpFrame->frame_index,1,buffer_log);

if(buffer_log & PreviewBufferProvider::CMD_PREVIEWBUF_DISPING){

tmpFrame->used_flag = PreviewBufferProvider::CMD_PREVIEWBUF_DISPING;

mRefDisplayAdapter->notifyNewFrame(tmpFrame);

}

if(buffer_log & (PreviewBufferProvider::CMD_PREVIEWBUF_SNAPSHOT_ENCING)){

tmpFrame->used_flag = PreviewBufferProvider::CMD_PREVIEWBUF_SNAPSHOT_ENCING;

mRefEventNotifier->notifyNewPicFrame(tmpFrame);

}

if(buffer_log & (PreviewBufferProvider::CMD_PREVIEWBUF_VIDEO_ENCING)){

tmpFrame->used_flag = PreviewBufferProvider::CMD_PREVIEWBUF_VIDEO_ENCING;

mRefEventNotifier->notifyNewVideoFrame(tmpFrame);

}

if(buffer_log & (PreviewBufferProvider::CMD_PREVIEWBUF_DATACB)){

tmpFrame->used_flag = PreviewBufferProvider::CMD_PREVIEWBUF_DATACB;

mRefEventNotifier->notifyNewPreviewCbFrame(tmpFrame);

}

if(buffer_log == 0)

returnFrame(tmpFrame->frame_index,buffer_log);

}else if(camera_device_error){

//notify app erro

break;

}else if((ret==-1) && (!camera_device_error)){

if(tmpFrame)

returnFrame(tmpFrame->frame_index,buffer_log);

}

}

LOG_FUNCTION_NAME_EXIT

return;

}

At the start, this function passes an address down in order to fetch a frame from the camera:

ret = getFrame(&tmpFrame);

Inside int CameraAdapter::getFrame(FramInfo_s** tmpFrame){}, ioctl and related calls are used to obtain the frame data.
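The body of getFrame is not shown in this article, but the core of any V4L2 capture loop is a dequeue followed, once the frame has been consumed, by a requeue. The sketch below shows that pair for mmap-style buffers; the helper names are hypothetical, and with other memory types the buffer address would also have to be filled in before VIDIOC_QBUF.

```cpp
#include <cassert>
#include <cstring>
#include <linux/videodev2.h>
#include <sys/ioctl.h>

// Dequeues one filled capture buffer; returns its index, or -1 on failure.
int dequeueFrame(int fd, v4l2_memory memory) {
    v4l2_buffer buf;
    std::memset(&buf, 0, sizeof(buf));
    buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
    buf.memory = memory;
    if (ioctl(fd, VIDIOC_DQBUF, &buf) < 0) {
        return -1;
    }
    return static_cast<int>(buf.index);
}

// Hands buffer `index` back to the driver so it can be filled again.
bool requeueFrame(int fd, v4l2_memory memory, unsigned int index) {
    v4l2_buffer buf;
    std::memset(&buf, 0, sizeof(buf));
    buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
    buf.memory = memory;
    buf.index = index;
    return ioctl(fd, VIDIOC_QBUF, &buf) == 0;
}
```

The index returned by the dequeue is what ties the frame back to the address stored in mCamDriverV4l2Buffer during cameraStart.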

Back in the preview thread, the first check is:

//display ?

if(mRefDisplayAdapter->isNeedSendToDisplay())

buffer_log |= PreviewBufferProvider::CMD_PREVIEWBUF_DISPING;

Since this is the preview path, this if branch is taken. No data is called back upward; instead the display thread initialized earlier is triggered.

//video enc ?

if(mRefEventNotifier->isNeedSendToVideo())

buffer_log |= PreviewBufferProvider::CMD_PREVIEWBUF_VIDEO_ENCING;

This branch is taken while recording video, and it triggers the dataCallbackTimestamp callback.

//picture ?

if(mRefEventNotifier->isNeedSendToPicture())

buffer_log |= PreviewBufferProvider::CMD_PREVIEWBUF_SNAPSHOT_ENCING;

This branch is taken when a picture is being captured. It triggers the EncProcessThread thread, which mainly handles encoding/decoding and saving the picture.

//preview data callback ?

if(mRefEventNotifier->isNeedSendToDataCB())

buffer_log |= PreviewBufferProvider::CMD_PREVIEWBUF_DATACB;

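This branch is taken when preview data needs to be called back. In RK's code, however, preview does not call data back; frames are displayed directly, so this can be changed as needed. I still want to walk through this callback path because it is fairly representative, and the earlier articles also described where the data goes in detail: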

if(buffer_log & (PreviewBufferProvider::CMD_PREVIEWBUF_DATACB)){

tmpFrame->used_flag = PreviewBufferProvider::CMD_PREVIEWBUF_DATACB;

mRefEventNotifier->notifyNewPreviewCbFrame(tmpFrame);

}

This is how the callback is raised toward the upper layer:

void AppMsgNotifier::notifyNewPreviewCbFrame(FramInfo_s* frame)

{

//send to app msg thread

Message msg;

Mutex::Autolock lock(mDataCbLock);

if(mRunningState & STA_RECEIVE_PREVIEWCB_FRAME){

msg.command = CameraAppMsgThread::CMD_EVENT_PREVIEW_DATA_CB;

msg.arg2 = (void*)(frame);

msg.arg3 = (void*)(frame->used_flag);

eventThreadCommandQ.put(&msg);

}else

mFrameProvider->returnFrame(frame->frame_index,frame->used_flag);

}

Again, this just posts a message to a thread, and the message carries the data frame.
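The buffer_log flags set by the preview thread, together with the returnFrame calls made by each consumer, can be pictured as a per-buffer bitmask: every consumer owns one bit, and the buffer may go back to the driver only when all bits are cleared. The sketch below is an assumed model of that bookkeeping. The flag values and the `FrameUseTracker` class are invented for illustration, since the real setBufferStatus and returnFrame are not shown in this article.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <mutex>
#include <vector>

// Hypothetical consumer flags, standing in for the CMD_PREVIEWBUF_* values.
enum : std::uint32_t {
    kDisplaying       = 1u << 0,
    kVideoEncoding    = 1u << 1,
    kSnapshotEncoding = 1u << 2,
    kDataCallback     = 1u << 3,
};

class FrameUseTracker {
public:
    explicit FrameUseTracker(std::size_t bufferCount) : inUse_(bufferCount, 0) {}

    // Records which consumers are about to receive buffer `index`.
    void markInUse(std::size_t index, std::uint32_t consumers) {
        std::lock_guard<std::mutex> lock(mutex_);
        inUse_[index] |= consumers;
    }

    // Clears one consumer's flag; returns true once nobody holds the buffer,
    // which is the point where it could be queued back to the driver.
    bool release(std::size_t index, std::uint32_t consumer) {
        std::lock_guard<std::mutex> lock(mutex_);
        inUse_[index] &= ~consumer;
        return inUse_[index] == 0;
    }

private:
    std::mutex mutex_;
    std::vector<std::uint32_t> inUse_;
};
```

The mutex is there because the consumers run on different threads (display, event and encode threads) and may release the same buffer at nearly the same time.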

Below is the thread loop that receives the message. This thread was started when the CameraHal constructor ran.

void AppMsgNotifier::eventThread()

{

bool loop = true;

Message msg;

int index,err = 0;

FramInfo_s *frame = NULL;

int frame_used_flag = -1;

LOG_FUNCTION_NAME

while (loop) {

memset(&msg,0,sizeof(msg));

eventThreadCommandQ.get(&msg);

switch (msg.command)

{

case CameraAppMsgThread::CMD_EVENT_PREVIEW_DATA_CB:

frame = (FramInfo_s*)msg.arg2;

processPreviewDataCb(frame);

//return frame

frame_used_flag = (int)msg.arg3;

mFrameProvider->returnFrame(frame->frame_index,frame_used_flag);

break;

case CameraAppMsgThread::CMD_EVENT_VIDEO_ENCING:

frame_used_flag = (int)msg.arg3;

frame = (FramInfo_s*)msg.arg2;

LOG2("%s(%d):get new frame , index(%d),useflag(%d)",__FUNCTION__,__LINE__,frame->frame_index,frame_used_flag);

processVideoCb(frame);

//return frame

mFrameProvider->returnFrame(frame->frame_index,frame_used_flag);

break;

case CameraAppMsgThread::CMD_EVENT_PAUSE:

{

Message filter_msg;

LOG1("%s(%d),receive CameraAppMsgThread::CMD_EVENT_PAUSE",__FUNCTION__,__LINE__);

while(!eventThreadCommandQ.isEmpty()){

encProcessThreadCommandQ.get(&filter_msg);

if((filter_msg.command == CameraAppMsgThread::CMD_EVENT_PREVIEW_DATA_CB)

||(filter_msg.command == CameraAppMsgThread::CMD_EVENT_VIDEO_ENCING)){

FramInfo_s *frame = (FramInfo_s*)msg.arg2;

mFrameProvider->returnFrame(frame->frame_index,frame->used_flag);

}

}

if(msg.arg1)

((Semaphore*)(msg.arg1))->Signal();

//wake up waiter

break;

}

case CameraAppMsgThread::CMD_EVENT_EXIT:

{

loop = false;

if(msg.arg1)

((Semaphore*)(msg.arg1))->Signal();

break;

}

default:

break;

}

}

LOG_FUNCTION_NAME_EXIT

return;

}

The data callback goes through the first case, which calls processPreviewDataCb(frame);

int AppMsgNotifier::processPreviewDataCb(FramInfo_s* frame){

int ret = 0;

mDataCbLock.lock();

if ((mMsgTypeEnabled & CAMERA_MSG_PREVIEW_FRAME) && mDataCb) {

//compute request mem size

int tempMemSize = 0;

//request bufer

camera_memory_t* tmpPreviewMemory = NULL;

if (strcmp(mPreviewDataFmt,android::CameraParameters::PIXEL_FORMAT_RGB565) == 0) {

tempMemSize = mPreviewDataW*mPreviewDataH*2;

} else if (strcmp(mPreviewDataFmt,android::CameraParameters::PIXEL_FORMAT_YUV420SP) == 0) {

tempMemSize = mPreviewDataW*mPreviewDataH*3/2;

} else if (strcmp(mPreviewDataFmt,android::CameraParameters::PIXEL_FORMAT_YUV422SP) == 0) {

tempMemSize = mPreviewDataW*mPreviewDataH*2;

} else if(strcmp(mPreviewDataFmt,android::CameraParameters::PIXEL_FORMAT_YUV420P) == 0){

tempMemSize = ((mPreviewDataW+15)&0xfffffff0)*mPreviewDataH

+((mPreviewDataW/2+15)&0xfffffff0)*mPreviewDataH;

}else {

LOGE("%s(%d): pixel format %s is unknow!",__FUNCTION__,__LINE__,mPreviewDataFmt);

}

mDataCbLock.unlock();

tmpPreviewMemory = mRequestMemory(-1, tempMemSize, 1, NULL);

if (tmpPreviewMemory) {

//fill the tmpPreviewMemory

if (strcmp(mPreviewDataFmt,android::CameraParameters::PIXEL_FORMAT_YUV420P) == 0) {

cameraFormatConvert(V4L2_PIX_FMT_NV12,0,mPreviewDataFmt,

(char*)frame->vir_addr,(char*)tmpPreviewMemory->data,0,0,tempMemSize,

frame->frame_width, frame->frame_height,frame->frame_width,

//frame->frame_width,frame->frame_height,frame->frame_width,false);

mPreviewDataW,mPreviewDataH,mPreviewDataW,mDataCbFrontMirror);

}else {

#if 0

//QQ voip need NV21

arm_camera_yuv420_scale_arm(V4L2_PIX_FMT_NV12, V4L2_PIX_FMT_NV21, (char*)(frame->vir_addr),

(char*)tmpPreviewMemory->data,frame->frame_width, frame->frame_height,mPreviewDataW, mPreviewDataH,mDataCbFrontMirror,frame->zoom_value);

#else

rga_nv12_scale_crop(frame->frame_width, frame->frame_height,

(char*)(frame->vir_addr), (short int *)(tmpPreviewMemory->data),

mPreviewDataW,mPreviewDataW,mPreviewDataH,frame->zoom_value,mDataCbFrontMirror,true,true);

#endif

//arm_yuyv_to_nv12(frame->frame_width, frame->frame_height,(char*)(frame->vir_addr), (char*)buf_vir);

}

if(mDataCbFrontFlip) {

LOG1("----------------need flip -------------------");

YuvData_Mirror_Flip(V4L2_PIX_FMT_NV12, (char*) tmpPreviewMemory->data,

(char*)frame->vir_addr,mPreviewDataW, mPreviewDataH);

}

//callback

mDataCb(CAMERA_MSG_PREVIEW_FRAME, tmpPreviewMemory, 0,NULL,mCallbackCookie);

//release buffer

tmpPreviewMemory->release(tmpPreviewMemory);

} else {

LOGE("%s(%d): mPreviewMemory create failed",__FUNCTION__,__LINE__);

}

} else {

mDataCbLock.unlock();

LOG1("%s(%d): needn't to send preview datacb",__FUNCTION__,__LINE__);

}

return ret;

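}

Along the way the frame goes through some conversion, and then the previously registered callback is invoked directly to hand it to the upper layer: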

//callback

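mDataCb(CAMERA_MSG_PREVIEW_FRAME, tmpPreviewMemory, 0,NULL,mCallbackCookie);

The first argument is the type of data being called back, and the second carries the frame itself (a camera_memory_t whose data field is the frame's virtual address).

From here the call travels back up along the path on which the callback was registered, all the way to the APP layer.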
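The size passed to mRequestMemory in processPreviewDataCb depends on the preview format string. The branch logic from the listing can be restated as a standalone function, shown below as a sketch. The function name is made up, and the format strings are the literal values behind the CameraParameters::PIXEL_FORMAT_* constants.

```cpp
#include <cassert>
#include <cstddef>
#include <string>

// Rounds v up to the next multiple of 16.
inline std::size_t alignUp16(std::size_t v) {
    return (v + 15) & ~static_cast<std::size_t>(15);
}

// Bytes needed for one preview callback buffer; returns 0 for an unknown format.
std::size_t previewCallbackSize(const std::string& fmt, std::size_t w, std::size_t h) {
    if (fmt == "rgb565")   return w * h * 2;
    if (fmt == "yuv420sp") return w * h * 3 / 2;
    if (fmt == "yuv422sp") return w * h * 2;
    if (fmt == "yuv420p") {
        // Planar 4:2:0 with a 16-byte aligned stride for both luma and chroma rows.
        return alignUp16(w) * h + alignUp16(w / 2) * h;
    }
    return 0;
}
```

Only the planar yuv420p case pads each row, which is why its size can exceed w*h*3/2 when the width is not a multiple of 32.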
