Week9_3Program_Anomaly Detection and Recommender Systems: Programming Exercise Walkthrough

1. Anomaly detection

1.1 Estimate gaussian parameters 0 / 15

Formulas:

$$\mu_i = \frac{1}{m}\sum_{j=1}^{m} x_i^{(j)}$$

$$\sigma_i^2 = \frac{1}{m}\sum_{j=1}^{m}\left(x_i^{(j)} - \mu_i\right)^2$$

Dimensions: X is m x n; in ex8data1.mat it is 307x2 (307 examples, 2 features), so mu and sigma2 each contain 2 values, one per feature.

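Add the following to estimateGaussian.m:

mu = mean(X);

sigma2 = var(X, 1);    # second argument 1: normalize by N instead of N-1

What Octave's mean function computes:

mean (x) = SUM_i x(i) / N

What Octave's var function computes by default:

var (x) = 1/(N-1) SUM_i (x(i) - mean(x))^2

Because the formula for $\sigma_i^2$ above divides by m rather than m-1, var is called with the second argument set to 1, which switches the normalization to 1/N. Applied to a matrix, both functions work column by column, returning one mean and one variance per feature.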
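A quick sanity check, assuming a small made-up matrix X (3 examples, 2 features; the values are illustrative only): the explicit 1/m formulas agree with mean(X) and var(X, 1), while the default var(X) divides by m-1. The names mu_loop, sigma2_loop and sigma2_default are hypothetical helpers for this sketch.

```octave
% Toy data: rows are examples, columns are features (illustrative values only)
X = [1 2;
     3 6;
     5 4];
m = size(X, 1);

% Explicit formulas from above, computed column-wise (one value per feature)
mu_loop = sum(X, 1) / m;
sigma2_loop = sum(bsxfun(@minus, X, mu_loop) .^ 2, 1) / m;

% Built-in versions
mu = mean(X);              % 1x2 row vector
sigma2 = var(X, 1);        % normalizes by m, matches the formula
sigma2_default = var(X);   % normalizes by m-1, does NOT match

disp([mu; sigma2; sigma2_default]);
```

Both columns have a spread of 8 in squared deviations, so var(X, 1) gives 8/3 per feature while the default var(X) gives 4.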

1.2 Select threshold 0 / 15

Formula:

$$p(x;\mu,\sigma^2) = \frac{1}{\sqrt{2\pi\sigma^2}}\, e^{-\frac{(x-\mu)^2}{2\sigma^2}}$$

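Computing p is already implemented in multivariateGaussian.m, so it can be used directly; in selectThreshold.m the densities of the cross-validation examples are passed in as pval.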
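When sigma2 is a vector, multivariateGaussian.m treats it as a diagonal covariance, which means the features are modeled as independent and p(x) is the product of the per-feature densities from the formula above. A minimal sketch with illustrative parameters (mu, sigma2 and the query points Xq are made up) that evaluates the univariate formula per feature and compares the product with the closed-form multivariate Gaussian using Sigma = diag(sigma2):

```octave
% Illustrative parameters and two query points (not from the exercise data)
mu = [0 1];
sigma2 = [1 4];
Xq = [0  1;    % exactly at the mean
      2 -1];   % away from the mean

% Per-feature univariate densities: 1/sqrt(2*pi*sigma2) * exp(-(x-mu)^2/(2*sigma2))
D = bsxfun(@minus, Xq, mu);
p_each = bsxfun(@times, 1 ./ sqrt(2 * pi * sigma2), ...
                exp(-bsxfun(@rdivide, D .^ 2, 2 * sigma2)));
p_product = prod(p_each, 2);    % independent features: multiply across columns

% Same value from the multivariate formula with a diagonal covariance matrix
k = numel(mu);
Sigma = diag(sigma2);
p_mv = (2 * pi) ^ (-k / 2) * det(Sigma) ^ (-0.5) * ...
       exp(-0.5 * sum((D / Sigma) .* D, 2));   % row-wise d' * inv(Sigma) * d

disp([p_product p_mv]);
```

At the mean the density is 1/sqrt(2*pi) * 1/sqrt(8*pi) = 1/(4*pi); the second point, being farther from the mean, gets a smaller p and is the more "anomalous" of the two.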

The formulas used in selectThreshold.m are:

$$prec = \frac{tp}{tp + fp}$$

$$rec = \frac{tp}{tp + fn}$$

$$F_1 = \frac{2 \cdot prec \cdot rec}{prec + rec}$$

where tp, fp and fn are the numbers of true positives, false positives and false negatives on the cross-validation set.

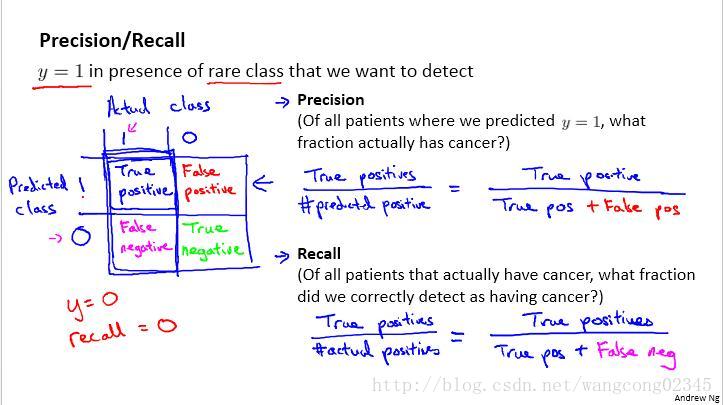

The figure above is a slide screenshot from Lecture 11.
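A compact sketch of the whole epsilon search, assuming made-up pval and yval vectors (6 cross-validation examples, two of which are anomalies). The step size (max - min)/1000 mirrors the loop that selectThreshold.m sets up around the graded lines; the data and the short names tp, fp, fn, prec and rec are illustrative.

```octave
% Illustrative cross-validation densities and labels (1 = anomaly)
pval = [0.30; 0.25; 0.02; 0.28; 0.01; 0.20];
yval = [0;    0;    1;    0;    1;    0];

bestEpsilon = 0;
bestF1 = 0;
stepsize = (max(pval) - min(pval)) / 1000;
for epsilon = min(pval):stepsize:max(pval)
  predictions = (pval < epsilon);               % flag low-density examples
  tp = sum((predictions == 1) & (yval == 1));
  fp = sum((predictions == 1) & (yval == 0));
  fn = sum((predictions == 0) & (yval == 1));
  if tp == 0
    continue;    % nothing correctly flagged: F1 would be 0 or NaN
  end
  prec = tp / (tp + fp);
  rec  = tp / (tp + fn);
  F1 = 2 * prec * rec / (prec + rec);
  if F1 > bestF1
    bestF1 = F1;
    bestEpsilon = epsilon;
  end
end
fprintf('best epsilon = %g, best F1 = %g\n', bestEpsilon, bestF1);
```

Once epsilon exceeds 0.02 both anomalies are flagged with no false positives, so F1 reaches 1; larger thresholds only add false positives and never beat it. The explicit tp == 0 guard is a choice of this sketch.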

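For each candidate epsilon, examples with pval < epsilon are predicted to be anomalies (predictions = 1), and the predictions are compared with the ground-truth labels yval: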

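# This code goes inside the epsilon loop that selectThreshold.m already provides

predictions = (pval < epsilon);    # 1 = flagged as anomaly

truePositives = sum((predictions == 1) & (yval == 1));

falsePositives = sum((predictions == 1) & (yval == 0));

falseNegatives = sum((predictions == 0) & (yval == 1));

precision = truePositives / (truePositives + falsePositives);

recall = truePositives / (truePositives + falseNegatives);

F1 = (2 * precision * recall) / (precision + recall);

If no example is flagged, precision is 0/0 = NaN and so is F1; the comparison F1 > bestF1 in the provided loop is then false, so such an epsilon is simply skipped.

2. Recommender Systems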

2.1 Collaborative filtering cost 0 / 20

Formula:

$$J(x^{(1)},\dots,x^{(n_m)},\theta^{(1)},\dots,\theta^{(n_u)}) = \frac{1}{2}\sum_{(i,j):r(i,j)=1}\left((\theta^{(j)})^T x^{(i)} - y^{(i,j)}\right)^2$$

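Dimensions in the reduced test case set up by ex8_cofi.m (5 movies, 4 users, 3 features): X is 5x3, Theta is 4x3, and Y and R are 5x4.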
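To see why the masked, vectorized expression equals the sum over pairs with r(i,j) = 1, here is a minimal sketch with the same shapes (5 movies, 4 users, 3 features) but made-up values for X, Theta, Y and R. It compares an explicit double loop with the vectorized form; the masked error matrix is called err here to avoid shadowing Octave's built-in error function.

```octave
% Illustrative sizes matching the reduced test case: 5 movies, 4 users, 3 features
num_movies = 5; num_users = 4; num_features = 3;
X     = reshape(1:15, num_movies, num_features) / 10;     % made-up movie features
Theta = reshape(12:-1:1, num_users, num_features) / 10;   % made-up user parameters
Y     = mod(reshape(1:20, num_movies, num_users), 5) + 1; % made-up ratings 1..5
R     = mod(reshape(1:20, num_movies, num_users), 3) ~= 0; % made-up "rated" mask

% Explicit double loop: sum only over pairs (i, j) with r(i, j) = 1
J_loop = 0;
for i = 1:num_movies
  for j = 1:num_users
    if R(i, j)
      J_loop = J_loop + (Theta(j, :) * X(i, :)' - Y(i, j)) ^ 2;
    end
  end
end
J_loop = J_loop / 2;

% Vectorized form: multiplying by R zeroes unrated entries before squaring
err = (X * Theta' - Y) .* R;
J_vec = (1/2) * sum(sum(err .^ 2));

fprintf('J_loop = %.6f, J_vec = %.6f\n', J_loop, J_vec);
```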

Add the following to cofiCostFunc.m to implement the cost function without the regularization term:

error = (X*Theta'-Y) .* R;    # multiplying by R zeroes every entry with r(i,j) = 0

J = (1/2)*sum(sum(error .^ 2));

2.2 Collaborative filtering gradient 0 / 30

Formulas:

$$\frac{\partial J}{\partial x_k^{(i)}} = \sum_{j:r(i,j)=1}\left((\theta^{(j)})^T x^{(i)} - y^{(i,j)}\right)\theta_k^{(j)}$$

$$\frac{\partial J}{\partial \theta_k^{(j)}} = \sum_{i:r(i,j)=1}\left((\theta^{(j)})^T x^{(i)} - y^{(i,j)}\right)x_k^{(i)}$$
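A minimal sketch, again with made-up values in the 5-movie, 4-user, 3-feature shapes, that evaluates the two partial-derivative formulas with explicit loops and checks them against the matrix products err * Theta and err' * X. The loop variables X_grad_loop and Theta_grad_loop are hypothetical names for this illustration.

```octave
% Same illustrative setup: 5 movies, 4 users, 3 features (made-up values)
num_movies = 5; num_users = 4; num_features = 3;
X     = reshape(1:15, num_movies, num_features) / 10;
Theta = reshape(12:-1:1, num_users, num_features) / 10;
Y     = mod(reshape(1:20, num_movies, num_users), 5) + 1;
R     = mod(reshape(1:20, num_movies, num_users), 3) ~= 0;

% Loop version of the two partial-derivative formulas
X_grad_loop = zeros(size(X));
Theta_grad_loop = zeros(size(Theta));
for i = 1:num_movies
  for j = 1:num_users
    if R(i, j)
      d = Theta(j, :) * X(i, :)' - Y(i, j);     % prediction error for (i, j)
      X_grad_loop(i, :) = X_grad_loop(i, :) + d * Theta(j, :);
      Theta_grad_loop(j, :) = Theta_grad_loop(j, :) + d * X(i, :);
    end
  end
end

% Vectorized version: the masked error matrix restricts the sums to r(i, j) = 1
err = (X * Theta' - Y) .* R;    % num_movies x num_users
X_grad = err * Theta;           % (5x4) * (4x3) -> 5x3, same shape as X
Theta_grad = err' * X;          % (4x5) * (5x3) -> 4x3, same shape as Theta

fprintf('max differences: %g, %g\n', ...
        max(abs(X_grad(:) - X_grad_loop(:))), ...
        max(abs(Theta_grad(:) - Theta_grad_loop(:))));
```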

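# The next two lines were added in 2.1 and compute the cost

error = (X*Theta'-Y) .* R;

J = (1/2)*sum(sum(error .^ 2));

# The next two lines are from 2.2 and compute the gradients. Because error is
# zero wherever r(i,j) = 0, the matrix products only sum over rated entries.

X_grad = error * Theta ;     # (num_movies x num_users) * (num_users x num_features)

Theta_grad = error' * X ;    # (num_users x num_movies) * (num_movies x num_features)

2.3 Regularized cost 0 / 10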

Formulas:

$$J_{noReg}(x^{(1)},\dots,x^{(n_m)},\theta^{(1)},\dots,\theta^{(n_u)}) = \frac{1}{2}\sum_{(i,j):r(i,j)=1}\left((\theta^{(j)})^T x^{(i)} - y^{(i,j)}\right)^2$$

$$reg = \frac{\lambda}{2}\sum_{j=1}^{n_u}\sum_{k=1}^{n}\left(\theta_k^{(j)}\right)^2 + \frac{\lambda}{2}\sum_{i=1}^{n_m}\sum_{k=1}^{n}\left(x_k^{(i)}\right)^2$$

$$J = J_{noReg} + reg$$
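The double sums in reg simply add up every squared entry of Theta and of X, i.e. their squared Frobenius norms. A minimal sketch on the same made-up data, with lambda = 1.5 chosen only for illustration, that computes reg both as literal double sums and via norm(..., 'fro'):

```octave
% Illustrative setup (made-up values), 5 movies, 4 users, 3 features
num_movies = 5; num_users = 4; num_features = 3;
X     = reshape(1:15, num_movies, num_features) / 10;
Theta = reshape(12:-1:1, num_users, num_features) / 10;
Y     = mod(reshape(1:20, num_movies, num_users), 5) + 1;
R     = mod(reshape(1:20, num_movies, num_users), 3) ~= 0;
lambda = 1.5;   % illustrative value

err = (X * Theta' - Y) .* R;
J_noReg = (1/2) * sum(sum(err .^ 2));

% reg written as the literal double sums: users j / movies i, features k
reg_loop = 0;
for j = 1:num_users
  for k = 1:num_features
    reg_loop = reg_loop + (lambda / 2) * Theta(j, k) ^ 2;
  end
end
for i = 1:num_movies
  for k = 1:num_features
    reg_loop = reg_loop + (lambda / 2) * X(i, k) ^ 2;
  end
end

% Same term via squared Frobenius norms
reg_fro = (lambda / 2) * (norm(Theta, 'fro') ^ 2 + norm(X, 'fro') ^ 2);

J = J_noReg + reg_fro;
fprintf('reg_loop = %.6f, reg_fro = %.6f, J = %.6f\n', reg_loop, reg_fro, J);
```

With these values the squared entries of Theta sum to 6.5 and those of X to 12.4, so reg = 0.75 * 18.9 = 14.175.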

# Unregularized cost (same as in 2.1)

error = (X*Theta'-Y) .* R;

J_noReg = (1/2)*sum(sum(error .^ 2));

# Unregularized gradients (same as in 2.2)

X_grad = error * Theta ;

Theta_grad = error' * X ;

# Regularized cost

costRegLeft = lambda/2 * sum(sum(Theta.^2));

costRegRight = lambda/2 * sum(sum(X.^2));

Reg = costRegLeft + costRegRight;

J = J_noReg + Reg;

2.4 Gradient with regularization 0 / 10

Formulas:

$$\mathrm{Xgrad}_{noReg} = \frac{\partial J_{noReg}}{\partial x_k^{(i)}} = \sum_{j:r(i,j)=1}\left((\theta^{(j)})^T x^{(i)} - y^{(i,j)}\right)\theta_k^{(j)}$$

$$\mathrm{Thetagrad}_{noReg} = \frac{\partial J_{noReg}}{\partial \theta_k^{(j)}} = \sum_{i:r(i,j)=1}\left((\theta^{(j)})^T x^{(i)} - y^{(i,j)}\right)x_k^{(i)}$$

$$\mathrm{XReg} = \lambda x_k^{(i)}$$

$$\mathrm{ThetaReg} = \lambda \theta_k^{(j)}$$

XReg and ThetaReg are the derivatives of the reg term from 2.3: differentiating $\frac{\lambda}{2}\left(x_k^{(i)}\right)^2$ gives $\lambda x_k^{(i)}$, and likewise for $\theta_k^{(j)}$.

$$\mathrm{XGrad} = \mathrm{Xgrad}_{noReg} + \mathrm{XReg}$$

$$\mathrm{ThetaGrad} = \mathrm{Thetagrad}_{noReg} + \mathrm{ThetaReg}$$
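A way to gain confidence in the regularized gradients is a finite-difference check, similar in spirit to the checkCostFunction.m helper that ships with the exercise. This is a minimal sketch under stated assumptions: made-up data, lambda = 1.5 for illustration, the parameters unrolled as [X(:); Theta(:)], and hypothetical helper names (unpackX, unpackT, costFn, numgrad). It compares central differences of the regularized cost with the analytic gradients.

```octave
% Illustrative setup (made-up values), 5 movies, 4 users, 3 features
num_movies = 5; num_users = 4; num_features = 3;
X     = reshape(1:15, num_movies, num_features) / 10;
Theta = reshape(12:-1:1, num_users, num_features) / 10;
Y     = mod(reshape(1:20, num_movies, num_users), 5) + 1;
R     = mod(reshape(1:20, num_movies, num_users), 3) ~= 0;
lambda = 1.5;   % illustrative value

% Regularized cost as a function of the unrolled parameters p = [X(:); Theta(:)]
nX = num_movies * num_features;
unpackX = @(p) reshape(p(1:nX), num_movies, num_features);
unpackT = @(p) reshape(p(nX+1:end), num_users, num_features);
costFn = @(p) (1/2) * sum(sum(((unpackX(p) * unpackT(p)' - Y) .* R) .^ 2)) ...
              + (lambda / 2) * sum(p .^ 2);   % sum(p.^2) covers both X and Theta

% Analytic gradients (no-reg part plus lambda times the parameters)
err = (X * Theta' - Y) .* R;
X_grad = err * Theta + lambda * X;
Theta_grad = err' * X + lambda * Theta;
grad = [X_grad(:); Theta_grad(:)];

% Central differences, one parameter at a time
params = [X(:); Theta(:)];
numgrad = zeros(size(params));
h = 1e-4;
for n = 1:numel(params)
  step = zeros(size(params));
  step(n) = h;
  numgrad(n) = (costFn(params + step) - costFn(params - step)) / (2 * h);
end

relDiff = norm(numgrad - grad) / norm(numgrad + grad);
fprintf('relative difference = %g\n', relDiff);
```

A tiny relative difference means the analytic formulas agree with the cost; a mistake such as adding lambda * X to the Theta gradient would show up immediately as a large value.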

# Compute the cost in two steps: first J without regularization, then the reg term

error = (X*Theta'-Y) .* R;

J_noReg = (1/2)*sum(sum(error .^ 2));

costRegLeft = lambda/2 * sum(sum(Theta.^2));

costRegRight = lambda/2 * sum(sum(X.^2));

Reg = costRegLeft + costRegRight;

J = J_noReg + Reg;

# Compute the gradients in two steps: first without regularization, then add the reg terms

X_grad_noReg = error * Theta ;

Theta_grad_noReg = error' * X ;

X_grad = X_grad_noReg + lambda * X;

Theta_grad = Theta_grad_noReg + lambda * Theta;

# The starter file then returns grad = [X_grad(:); Theta_grad(:)];

3. Summary

1 Estimate gaussian parameters 0 / 15

2 Select threshold 0 / 15

3 Collaborative filtering cost 0 / 20

4 Collaborative filtering gradient 0 / 30

5 Regularized cost 0 / 10

6 Gradient with regularization 0 / 10
