LVS has three forwarding modes: NAT (LVS/NAT), direct routing (LVS/DR), and IP tunneling (LVS/TUN),

plus a fourth mode, FULL NAT, that was developed later as an extension.

I. DR (Direct Routing) Mode

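How it works: the load balancer (the director, called VS below) and every RS serve the same IP address (the VIP). Only the director answers ARP requests for the VIP; every RS stays silent when asked for that address. The gateway therefore sends all requests for the service IP to the director. The director picks an RS according to the scheduling algorithm, rewrites only the destination MAC address to that RS's MAC (the IP does not change, because both hold the VIP), and forwards the frame to that RS. Since the RS also holds the VIP, it accepts the packet as if it had arrived straight from the client, processes it, and replies directly to the client without going back through the director. Because the director rewrites the layer-2 header, it and the RSs must be in the same broadcast domain, which can be loosely thought of as being on the same switch.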
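The forwarding decision described above can be sketched as a toy model in shell. This is only an illustration, not how the kernel implements IPVS: the MAC addresses and the function names `pick_rs_mac` and `dr_forward` are made up for the example. The point it shows is that the destination IP is left alone and only the destination MAC is swapped for the MAC of the RS chosen by round-robin.

```shell
#!/bin/sh
# Toy model of DR forwarding: the IP header is untouched, only the
# destination MAC is replaced. All MAC addresses here are hypothetical.

VIP="172.25.254.100"
RS_MACS="52:54:00:aa:00:02 52:54:00:aa:00:03"   # assumed MACs of server2, server3

# pick_rs_mac N: MAC of the RS chosen for the N-th request (0-based, round-robin)
pick_rs_mac() {
    n=$1
    set -- $RS_MACS              # split the list into positional parameters
    shift $(( n % $# ))          # rotate to the selected entry
    printf '%s\n' "$1"
}

# dr_forward N DST_IP DST_MAC: print the frame as the director would send it on.
# DST_MAC (the director's own MAC) is discarded; DST_IP is passed through unchanged.
dr_forward() {
    new_mac=$(pick_rs_mac "$1")
    printf 'dst_ip=%s dst_mac=%s\n' "$2" "$new_mac"
}

dr_forward 0 "$VIP" "52:54:00:aa:00:01"
dr_forward 1 "$VIP" "52:54:00:aa:00:01"
```

Both printed frames keep `dst_ip=172.25.254.100`; only `dst_mac` alternates between the two RSs, which is why the RSs must be reachable at layer 2.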

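1. Environment:

Three Red Hat 6.5 virtual machines (server1, server2, server3)

server1 acts as the VS (virtual server, i.e. the director)

server2 and server3 act as RSs (real servers)

server1 (VS)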

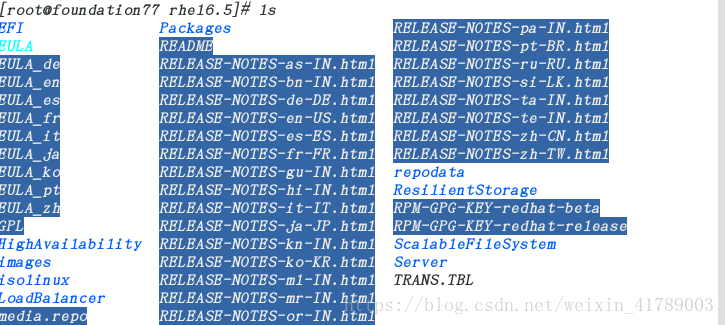

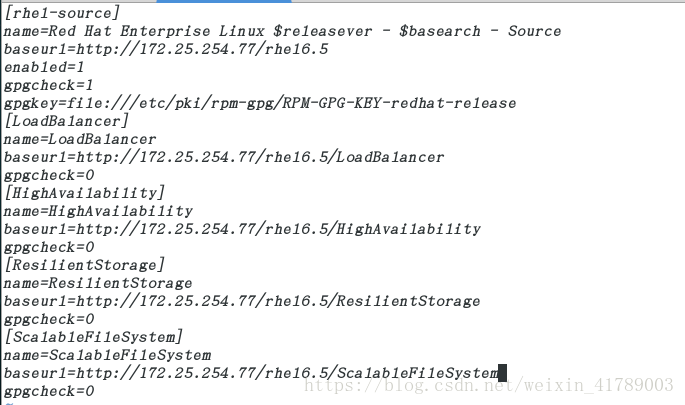

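1. Configure the yum repositories

1. On RHEL 6.5 the default repository definition does not load everything on the installation image, so the add-on repositories on the image (HighAvailability, LoadBalancer, ResilientStorage, ScalableFileSystem) have to be configured as well.

Configure all of them on server1.

Run yum repolist to load the repositories: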

[root@server1 ~]# yum repolist

Loaded plugins: product-id, subscription-manager

This system is not registered to Red Hat Subscription Management. You can use subscription-manager to register.

HighAvailability | 3.9 kB 00:00

HighAvailability/primary_db | 43 kB 00:00

LoadBalancer | 3.9 kB 00:00

LoadBalancer/primary_db | 7.0 kB 00:00

ResilientStorage | 3.9 kB 00:00

ResilientStorage/primary_db | 47 kB 00:00

ScalableFileSystem | 3.9 kB 00:00

ScalableFileSystem/primary_db | 6.8 kB 00:00

rhel-source | 3.9 kB 00:00

repo id repo name status

HighAvailability HighAvailability 56

LoadBalancer LoadBalancer 4

ResilientStorage ResilientStorage 62

ScalableFileSystem ScalableFileSystem 7

rhel-source Red Hat Enterprise Linux 6Server - x86_64 - Source 3,690

repolist: 3,819

2. Install ipvsadm, the user-space management tool for LVS

[root@server1 ~]# yum install ipvsadm -y

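3. Set up the virtual IP (VIP)

[root@server1 ~]# ip addr add 172.25.254.100/24 dev eth0 # add the VIP to eth0

[root@server1 ~]# ipvsadm -A -t 172.25.254.100:80 -s rr # define a virtual service on this IP; rr selects round-robin scheduling

[root@server1 ~]# ipvsadm -l ## the virtual service is now listed, but it has no real servers yet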

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 172.25.254.100:http rr

[root@server1 ~]# ipvsadm -a -t 172.25.254.100:80 -r 172.25.254.2:80 -g ## add a real server; -g selects DR (direct routing) mode

[root@server1 ~]# ipvsadm -a -t 172.25.254.100:80 -r 172.25.254.3:80 -g

[root@server1 ~]# ipvsadm -l

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 172.25.254.100:http rr

-> server2:http Route 1 0 0

-> server3:http Route 1 0 0

[root@server1 ~]# /etc/init.d/ipvsadm save ## save the rules

ipvsadm: Saving IPVS table to /etc/sysconfig/ipvsadm: [ OK ]

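4. Meaning of the ipvsadm options

-C: clear all existing rules.

-A: add a virtual service; followed by the service address (VIP and port).

-t: the service is TCP (use -u for UDP).

-s: scheduling algorithm; rr means round-robin.

-a: add a real server to a virtual service; followed by the virtual service address.

-r: the address of the real server being added.

-g: forwarding mode (-g is direct routing, i.e. DR mode; -i is IPIP encapsulation, i.e. tunneling mode; -m is masquerading, i.e. Network Address Translation, NAT mode).

-p: session persistence; requests from the same client are sent to the same real server for a period of time.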
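To see how these options combine, here is a small dry-run sketch. The helper `build_lvs_cmds` is a hypothetical name introduced for this example; it only prints the `ipvsadm` commands for one virtual service and never executes them, so it can be run without root or without ipvsadm installed. It uses only the options listed above.

```shell
#!/bin/sh
# Print (do not run) the ipvsadm commands for one round-robin virtual service.
# Usage: build_lvs_cmds VIP:PORT MODE_FLAG RS1:PORT [RS2:PORT ...]
# MODE_FLAG is -g (DR), -i (TUN) or -m (NAT).
build_lvs_cmds() {
    vip=$1
    mode=$2
    shift 2
    case "$mode" in
        -g|-i|-m) ;;
        *) echo "unknown forwarding flag: $mode" >&2; return 1 ;;
    esac
    echo "ipvsadm -C"                      # start from an empty table
    echo "ipvsadm -A -t $vip -s rr"        # define the virtual service
    for rs in "$@"; do
        echo "ipvsadm -a -t $vip -r $rs $mode"   # attach each real server
    done
}

# The DR setup from this section, printed as commands:
build_lvs_cmds 172.25.254.100:80 -g 172.25.254.2:80 172.25.254.3:80
```

Piping the output to `sh` on the VS would apply the rules; reviewing the printed commands first is the safer habit.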

server2 (RS)

1. Install the httpd service

[root@server2 ~]# yum install httpd

[root@server2 ~]# /etc/init.d/httpd start

Starting httpd: httpd: Could not reliably determine the server's fully qualified domain name, using 172.25.254.2 for ServerName

[ OK ]

[root@server2 ~]# vim /var/www/html/index.html

[root@server2 ~]# curl localhost

<h1>server2</h1>

2. Add the VIP

[root@server2 ~]# ip addr add 172.25.254.100/32 dev eth0

[root@server2 ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:b9:16:3e brd ff:ff:ff:ff:ff:ff

inet 172.25.254.2/24 brd 172.25.254.255 scope global eth0

inet 172.25.254.100/32 scope global eth0

inet6 fe80::5054:ff:feb9:163e/64 scope link

valid_lft forever preferred_lft forever

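3. Install arptables_jf

The RS now holds 172.25.254.100 as well, so it must not answer ARP for the VIP; otherwise the gateway could learn the RS's MAC for that address and bypass the director. arptables is used to drop ARP requests for the VIP and to rewrite the source IP of outgoing ARP packets to the RS's own IP.

[root@server2 ~]# arptables -A IN -d 172.25.254.100 -j DROP ## drop incoming ARP requests for the VIP

[root@server2 ~]# arptables -A OUT -s 172.25.254.100 -j mangle --mangle-ip-s 172.25.254.2 ## rewrite the source IP of outgoing ARP packets to the RS's own IP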

[root@server2 ~]# arptables -L

Chain IN (policy ACCEPT)

target source-ip destination-ip source-hw destination-hw hlen op hrd pro

DROP anywhere 172.25.254.100 anywhere anywhere any any any any

Chain OUT (policy ACCEPT)

target source-ip destination-ip source-hw destination-hw hlen op hrd pro

mangle 172.25.254.100 anywhere anywhere anywhere any any any any --mangle-ip-s server2

Chain FORWARD (policy ACCEPT)

target source-ip destination-ip source-hw destination-hw hlen op hrd pro

[root@server2 ~]# /etc/init.d/arptables_jf save ##save the rules

Saving current rules to /etc/sysconfig/arptables: [ OK ]
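Each RS needs the same pair of rules, with only its own IP changing in the mangle rule. A minimal sketch, assuming a hypothetical helper named `rs_arp_rules` that prints the two `arptables` commands used above for a given VIP and RS address (it prints them only and does not touch the ARP tables):

```shell
#!/bin/sh
# rs_arp_rules VIP RIP: print the arptables commands one RS needs in DR mode.
rs_arp_rules() {
    vip=$1
    rip=$2
    # Never answer ARP requests that ask for the VIP.
    echo "arptables -A IN -d $vip -j DROP"
    # ARP packets sent from the VIP are rewritten to carry the RS's own IP.
    echo "arptables -A OUT -s $vip -j mangle --mangle-ip-s $rip"
}

# Rules for both real servers in this setup:
for rip in 172.25.254.2 172.25.254.3; do
    echo "# on $rip"
    rs_arp_rules 172.25.254.100 "$rip"
done
```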

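server3 (RS)

server3 is set up like server2 (httpd installed, VIP added to eth0); only its own IP differs in the arptables mangle rule.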

[root@server3 ~]# /etc/init.d/httpd start

Starting httpd: httpd: Could not reliably determine the server's fully qualified domain name, using 172.25.254.3 for ServerName

[ OK ]

[root@server3 ~]# vim /var/www/html/index.html

[root@server3 ~]# curl localhost

<h1>server3</h1>

[root@server3 ~]# yum install arptables_jf -y

[root@server3 ~]# arptables -A IN -d 172.25.254.100 -j DROP

[root@server3 ~]# arptables -A OUT -s 172.25.254.100 -j mangle --mangle-ip-s 172.25.254.3

[root@server3 ~]# /etc/init.d/arptables_jf save

Saving current rules to /etc/sysconfig/arptables: [ OK ]

Test:

Access 172.25.254.100 from a host outside the cluster.

Four requests return the pages of server2 and server3 in turn.

[root@foundation77 ~]# curl 172.25.254.100

<h1>server3</h1>

[root@foundation77 ~]# curl 172.25.254.100

<h1>server2</h1>

[root@foundation77 ~]# curl 172.25.254.100

<h1>server3</h1>

[root@foundation77 ~]# curl 172.25.254.100

<h1>server2</h1>

Check on server1:

The four requests are split evenly, two per RS.

[root@server1 ~]# ipvsadm -l

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 172.25.254.100:http rr

-> server2:http Route 1 0 2

-> server3:http Route 1 0 2
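The manual curl test can be wrapped in a small loop that counts how often each page comes back. This is a sketch: `count_backends` is a hypothetical helper, and its optional third argument (the fetch command, defaulting to `curl -s`) exists only so the function can be tried without network access.

```shell
#!/bin/sh
# count_backends URL N [FETCH_CMD]: request URL N times and count identical replies.
# FETCH_CMD defaults to "curl -s"; any command that prints the page body works.
count_backends() {
    url=$1
    n=$2
    fetch=${3:-"curl -s"}
    i=0
    while [ "$i" -lt "$n" ]; do
        $fetch "$url"
        i=$(( i + 1 ))
    done | sort | uniq -c
}

# On the client host (needs network access to the VIP):
#   count_backends http://172.25.254.100/ 4
```

With round-robin scheduling and two healthy RSs, each page should show up the same number of times for an even request count.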

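II. NAT Mode

How it works: the load balancer rewrites the destination address in the IP header of each client packet to the IP of one RS and forwards the packet to that RS. After processing, the RS hands its reply back to the load balancer, which rewrites the source IP to its own IP and sends the reply on to the client's address. All traffic, inbound and outbound, must therefore pass through the load balancer.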

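Continue from the DR setup above.

server1 (VS)

1. Clear the previous rules (for example with ipvsadm -C); the table should then be empty: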

[root@server1 ~]# ipvsadm -l

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

2. Enable IP forwarding

[root@server1 ~]# vim /etc/sysctl.conf ## set net.ipv4.ip_forward = 1

[root@server1 ~]# echo 1 > /proc/sys/net/ipv4/ip_forward

[root@server1 ~]# cat /proc/sys/net/ipv4/ip_forward

1

[root@server1 ~]# sysctl -p

net.ipv4.ip_forward = 1

net.ipv4.conf.default.rp_filter = 1

net.ipv4.conf.default.accept_source_route = 0

kernel.sysrq = 0

kernel.core_uses_pid = 1

net.ipv4.tcp_syncookies = 1

error: "net.bridge.bridge-nf-call-ip6tables" is an unknown key

error: "net.bridge.bridge-nf-call-iptables" is an unknown key

error: "net.bridge.bridge-nf-call-arptables" is an unknown key

kernel.msgmnb = 65536

kernel.msgmax = 65536

kernel.shmmax = 68719476736

kernel.shmall = 4294967296

3. Add the ipvsadm rules

(In NAT mode it is best to use two NICs: eth0 talks to the external network and eth1 talks to the RSs.)

[root@server1 ~]# ipvsadm -A -t 172.25.254.162:80 -s rr

[root@server1 ~]# ipvsadm -a -t 172.25.254.162:80 -r 172.25.62.2:80 -m

[root@server1 ~]# ipvsadm -a -t 172.25.254.162:80 -r 172.25.62.3:80 -m

[root@server1 ~]# ipvsadm -L

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 172.25.254.162:http rr

-> server2:http Masq 1 0 0

-> server3:http Masq 1 0 0

[root@server1 ~]# modprobe iptable_nat ## load the NAT module; without it the first request succeeds and later requests time out

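server2 (RS)

In NAT mode the only change needed on an RS is to point its default gateway at the VS; nothing else has to be configured.

1. Point the RS's gateway at the VS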

[root@server2 ~]# cat /etc/sysconfig/network-scripts/ifcfg-eth0

DEVICE=eth0

ONBOOT=yes

BOOTPROTO=static

IPADDR=172.25.62.2

PREFIX=24

GATEWAY=172.25.62.1

DNS1=114.114.114.114

2. Check the IP address

[root@server2 ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:b9:16:3e brd ff:ff:ff:ff:ff:ff

inet 172.25.62.2/24 brd 172.25.254.255 scope global eth0

inet6 fe80::5054:ff:feb9:163e/64 scope link

valid_lft forever preferred_lft forever

server3 is configured the same way as server2.
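Since NAT mode only works when replies leave each RS through the VS, it can be worth checking the default gateway before testing. A minimal sketch, assuming the routing table is in the format printed by `ip route`; `default_gw` and `check_rs_gateway` are hypothetical helper names, and 172.25.62.1 is the VS address taken from the GATEWAY line above.

```shell
#!/bin/sh
# default_gw: read `ip route` style output on stdin and print the default gateway.
default_gw() {
    awk '$1 == "default" && $2 == "via" { print $3; exit }'
}

# check_rs_gateway EXPECTED: compare the gateway found on stdin with EXPECTED.
check_rs_gateway() {
    gw=$(default_gw)
    if [ "$gw" = "$1" ]; then
        echo "ok: default gateway is $gw"
    else
        echo "mismatch: default gateway is '$gw', expected $1"
        return 1
    fi
}

# Sample input for illustration; on a real RS use:
#   ip route | check_rs_gateway 172.25.62.1
printf '%s\n' \
    '172.25.62.0/24 dev eth0  proto kernel  scope link  src 172.25.62.2' \
    'default via 172.25.62.1 dev eth0' | check_rs_gateway 172.25.62.1
```

A mismatch here is a common reason for requests that reach the RS but never get an answer back to the client.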

Test:

From the physical host:

[kiosk@oundation62 Desktop]$ curl 172.25.254.162

<h1>server3</h1>

[kiosk@oundation62 Desktop]$ curl 172.25.254.162

<h1>server2</h1>

VS

[root@server1 ~]# ipvsadm -L

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 172.25.254.162:http rr

-> 172.25.62.2:http Masq 1 0 1

-> 172.25.62.3:http Masq 1 0 1

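III. TUN (IP Tunneling) Mode

How it works: most Internet services have short request packets and much larger replies. In tunnel mode the load balancer wraps each client packet in a new IP header (only the destination IP is new, and it points at the RS) and sends it to the RS. The RS strips the outer header to recover the original packet, processes it, and replies directly to the client without going back through the load balancer. Because the RS has to decapsulate the packets sent by the load balancer, it must support the IP tunneling protocol, so the RS kernel must be built with the IPTUNNEL option.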
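One practical consequence of the extra IP header is a smaller usable packet size. A minimal arithmetic sketch, assuming a plain 20-byte outer IPv4 header with no IP options; the helper names `tunnel_mtu` and `fits_in_tunnel` are made up for the example:

```shell
#!/bin/sh
IPIP_OVERHEAD=20   # bytes added by the outer IPv4 header (assumes no IP options)

# tunnel_mtu LINK_MTU: largest inner packet that still fits after encapsulation
tunnel_mtu() {
    echo $(( $1 - IPIP_OVERHEAD ))
}

# fits_in_tunnel PACKET_LEN LINK_MTU: succeeds if no fragmentation is needed
fits_in_tunnel() {
    [ "$1" -le "$(tunnel_mtu "$2")" ]
}

tunnel_mtu 1500    # prints 1480
fits_in_tunnel 1500 1500 || echo "a 1500-byte packet no longer fits once encapsulated"
```

The 1480 figure matches the MTU that ifconfig reports for tunl0 on the RS in this setup.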

server1 (VS)

1. Set up the rules

[root@server1 ~]# ipvsadm -C ## clear the rules

[root@server1 ~]# ip addr add 172.25.62.100/32 dev eth0 ## add the VIP

[root@server1 ~]# ipvsadm -A -t 172.25.62.100:80 -s rr ## define the virtual service on the VIP

[root@server1 ~]# ipvsadm -a -t 172.25.62.100:80 -r 172.25.62.3:80 -i ## add a real server; -i selects tunnel mode

[root@server1 ~]# ipvsadm -a -t 172.25.62.100:80 -r 172.25.62.2:80 -i

[root@server1 ~]# /etc/init.d/httpd start

Starting httpd: httpd: Could not reliably determine the server's fully qualified domain name, using 172.25.62.1 for ServerName

[ OK ]

2. Disable rp_filter and enable IP forwarding

[root@server1 ~]# vim /etc/sysctl.conf ## set net.ipv4.conf.default.rp_filter = 0 and net.ipv4.ip_forward = 1

[root@server1 ~]# sysctl -p

net.ipv4.ip_forward = 1

net.ipv4.conf.default.rp_filter = 0

net.ipv4.conf.default.accept_source_route = 0

kernel.sysrq = 0

kernel.core_uses_pid = 1

net.ipv4.tcp_syncookies = 1

error: "net.bridge.bridge-nf-call-ip6tables" is an unknown key

error: "net.bridge.bridge-nf-call-iptables" is an unknown key

error: "net.bridge.bridge-nf-call-arptables" is an unknown key

kernel.msgmnb = 65536

kernel.msgmax = 65536

kernel.shmmax = 68719476736

kernel.shmall = 4294967296

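server2 (RS)

1. Install arptables_jf

The RS will also hold the VIP 172.25.62.100, so it must not answer ARP for that address. arptables is used to drop ARP requests for the VIP and to rewrite the source IP of outgoing ARP packets to the RS's own IP.

[root@server2 ~]# arptables -A IN -d 172.25.62.100 -j DROP ## drop incoming ARP requests for the VIP

[root@server2 ~]# arptables -A OUT -s 172.25.62.100 -j mangle --mangle-ip-s 172.25.62.2 ## rewrite the source IP of outgoing ARP packets to the RS's own IP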

[root@server2 ~]# arptables -L

Chain IN (policy ACCEPT)

target source-ip destination-ip source-hw destination-hw hlen op hrd pro

DROP anywhere 172.25.62.100 anywhere anywhere any any any any

Chain OUT (policy ACCEPT)

target source-ip destination-ip source-hw destination-hw hlen op hrd pro

mangle 172.25.62.100 anywhere anywhere anywhere any any any any --mangle-ip-s server2

Chain FORWARD (policy ACCEPT)

target source-ip destination-ip source-hw destination-hw hlen op hrd pro

[root@server2 ~]# /etc/init.d/arptables_jf save ## save the rules

Saving current rules to /etc/sysconfig/arptables: [ OK ]


2. Add the tunnel interface tunl0

[root@server2 ~]# ifconfig tunl0 172.25.62.100 netmask 255.255.255.255 up

[root@server2 ~]# ifconfig

eth0 Link encap:Ethernet HWaddr 52:54:00:37:2E:CC

inet addr:172.25.62.2 Bcast:172.25.62.255 Mask:255.255.255.0

inet6 addr: fe80::5054:ff:fe37:2ecc/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:2344 errors:0 dropped:0 overruns:0 frame:0

TX packets:833 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:4586465 (4.3 MiB) TX bytes:103759 (101.3 KiB)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:16436 Metric:1

RX packets:10 errors:0 dropped:0 overruns:0 frame:0

TX packets:10 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:1185 (1.1 KiB) TX bytes:1185 (1.1 KiB)

tunl0 Link encap:IPIP Tunnel HWaddr

inet addr:172.25.62.100 Mask:255.255.255.255

UP RUNNING NOARP MTU:1480 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:0 (0.0 b) TX bytes:0 (0.0 b)

[root@server2 ~]# route add -host 172.25.62.100 dev tunl0 ## add a host route for the VIP on tunl0, so that packets arriving through the tunnel are handled on the tunnel interface

[root@server2 ~]# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

172.25.62.100 0.0.0.0 255.255.255.255 UH 0 0 0 tunl0

172.25.62.0 0.0.0.0 255.255.255.0 U 0 0 0 eth0

169.254.0.0 0.0.0.0 255.255.0.0 U 1002 0 0 eth0

0.0.0.0 172.25.62.250 0.0.0.0 UG 0 0 0 eth0

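server3 is configured the same way as server2.

Test:

Access the VIP from a host on the same network segment as the VIP. If the returned pages rotate between the two servers, the load balancer is working.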

[root@oundation62 rhel6.5]# curl 172.25.62.100

<h1>server2</h1>

[root@oundation62 rhel6.5]# curl 172.25.62.100

<h1>server3</h1>

[root@oundation62 rhel6.5]# curl 172.25.62.100

<h1>server2</h1>

[root@oundation62 rhel6.5]# curl 172.25.62.100

<h1>server3</h1>

[root@oundation62 rhel6.5]# curl 172.25.62.100

<h1>server2</h1>

[root@oundation62 rhel6.5]# curl 172.25.62.100

<h1>server3</h1>

Result on the VS:

[root@server1 ~]# ipvsadm -L

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 172.25.62.100:http rr

-> server2:http Tunnel 1 0 3

-> server3:http Tunnel 1 0 4
