HBase shell works fine, but operations through the Java API fail

This post is mainly based on: http://blog.csdn.net/kangkanglou/article/details/37329811

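I have only just reached the point where I can create, read, update, and delete data through the HBase shell (http://blog.csdn.net/wild46cat/article/details/53284504). Next I wanted to do the same through the Java API, but my first demo threw an error. I'm sharing the error here in the hope that others won't fall into the same pit.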

The error was as follows:

hdfs://192.168.1.221:60000

log4j:WARN No appenders could be found for logger (org.apache.hadoop.security.Groups).

log4j:WARN Please initialize the log4j system properly.

log4j:WARN See http://logging.apache.org/log4j/1.2/faq.html#noconfig for more info.

org.apache.hadoop.hbase.client.RetriesExhaustedException: Failed after attempts=36, exceptions:

Tue Nov 22 16:49:50 CST 2016, null, java.net.SocketTimeoutException: callTimeout=60000, callDuration=79968: row 'test,,' on table 'hbase:meta' at region=hbase:meta,,1.1588230740, hostname=host3,16020,1479802559335, seqNum=0

at org.apache.hadoop.hbase.client.RpcRetryingCallerWithReadReplicas.throwEnrichedException(RpcRetryingCallerWithReadReplicas.java:276)

at org.apache.hadoop.hbase.client.ScannerCallableWithReplicas.call(ScannerCallableWithReplicas.java:207)

at org.apache.hadoop.hbase.client.ScannerCallableWithReplicas.call(ScannerCallableWithReplicas.java:60)

at org.apache.hadoop.hbase.client.RpcRetryingCaller.callWithoutRetries(RpcRetryingCaller.java:210)

at org.apache.hadoop.hbase.client.ClientScanner.call(ClientScanner.java:326)

at org.apache.hadoop.hbase.client.ClientScanner.loadCache(ClientScanner.java:409)

at org.apache.hadoop.hbase.client.ClientScanner.next(ClientScanner.java:370)

at org.apache.hadoop.hbase.MetaTableAccessor.fullScan(MetaTableAccessor.java:604)

at org.apache.hadoop.hbase.MetaTableAccessor.tableExists(MetaTableAccessor.java:366)

at org.apache.hadoop.hbase.client.HBaseAdmin.tableExists(HBaseAdmin.java:406)

at org.apache.hadoop.hbase.client.HBaseAdmin.tableExists(HBaseAdmin.java:416)

at com.xueyoucto.hbasett.Servlet.delete(Servlet.java:126)

at com.xueyoucto.hbasett.Servlet.doGet(Servlet.java:34)

at javax.servlet.http.HttpServlet.service(HttpServlet.java:622)

at javax.servlet.http.HttpServlet.service(HttpServlet.java:729)

at org.apache.catalina.core.ApplicationFilterChain.internalDoFilter(ApplicationFilterChain.java:292)

at org.apache.catalina.core.ApplicationFilterChain.doFilter(ApplicationFilterChain.java:207)

at org.apache.tomcat.websocket.server.WsFilter.doFilter(WsFilter.java:52)

at org.apache.catalina.core.ApplicationFilterChain.internalDoFilter(ApplicationFilterChain.java:240)

at org.apache.catalina.core.ApplicationFilterChain.doFilter(ApplicationFilterChain.java:207)

at org.apache.catalina.core.StandardWrapperValve.invoke(StandardWrapperValve.java:212)

at org.apache.catalina.core.StandardContextValve.invoke(StandardContextValve.java:106)

at org.apache.catalina.authenticator.AuthenticatorBase.invoke(AuthenticatorBase.java:502)

at org.apache.catalina.core.StandardHostValve.invoke(StandardHostValve.java:141)

at org.apache.catalina.valves.ErrorReportValve.invoke(ErrorReportValve.java:79)

at org.apache.catalina.valves.AbstractAccessLogValve.invoke(AbstractAccessLogValve.java:616)

at org.apache.catalina.core.StandardEngineValve.invoke(StandardEngineValve.java:88)

at org.apache.catalina.connector.CoyoteAdapter.service(CoyoteAdapter.java:522)

at org.apache.coyote.http11.AbstractHttp11Processor.process(AbstractHttp11Processor.java:1095)

at org.apache.coyote.AbstractProtocol$AbstractConnectionHandler.process(AbstractProtocol.java:672)

at org.apache.tomcat.util.net.NioEndpoint$SocketProcessor.doRun(NioEndpoint.java:1500)

at org.apache.tomcat.util.net.NioEndpoint$SocketProcessor.run(NioEndpoint.java:1456)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at org.apache.tomcat.util.threads.TaskThread$WrappingRunnable.run(TaskThread.java:61)

at java.lang.Thread.run(Thread.java:745)

Caused by: java.net.SocketTimeoutException: callTimeout=60000, callDuration=79968: row 'test,,' on table 'hbase:meta' at region=hbase:meta,,1.1588230740, hostname=host3,16020,1479802559335, seqNum=0

at org.apache.hadoop.hbase.client.RpcRetryingCaller.callWithRetries(RpcRetryingCaller.java:169)

at org.apache.hadoop.hbase.client.ResultBoundedCompletionService$QueueingFuture.run(ResultBoundedCompletionService.java:65)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

... 1 more

Caused by: java.net.UnknownHostException: host3

at org.apache.hadoop.hbase.ipc.AbstractRpcClient$BlockingRpcChannelImplementation.<init>(AbstractRpcClient.java:315)

at org.apache.hadoop.hbase.ipc.AbstractRpcClient.createBlockingRpcChannel(AbstractRpcClient.java:267)

at org.apache.hadoop.hbase.client.ConnectionManager$HConnectionImplementation.getClient(ConnectionManager.java:1639)

at org.apache.hadoop.hbase.client.ScannerCallable.prepare(ScannerCallable.java:162)

at org.apache.hadoop.hbase.client.ScannerCallableWithReplicas$RetryingRPC.prepare(ScannerCallableWithReplicas.java:372)

at org.apache.hadoop.hbase.client.RpcRetryingCaller.callWithRetries(RpcRetryingCaller.java:134)

... 4 more

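The above is the error printed in the log. The root cause is clearly visible at the bottom: UnknownHostException: host3. So where does this host3 come from? Thinking it over, my environment looks like this: the cluster and HBase are deployed on three Linux virtual machines, while my program runs on Windows. That is where the problem lies: host3 is a name the three Linux machines use to find each other (configured in /etc/hosts), but my Windows machine does not know any of these names. With that, the problem can be analyzed.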
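To make it clearer where host3 enters the picture: the log line contains `hostname=host3,16020,1479802559335`, which follows HBase's server name layout of hostname, port, and start code. The client receives this name from the cluster and then has to resolve it on its own machine. The sketch below only splits that string apart; `ServerNameParts` is a hypothetical helper written for illustration, not a class from the HBase API.

```java
// Hypothetical helper for illustration only; not part of the HBase client library.
class ServerNameParts {
    final String hostname;
    final int port;
    final long startCode;

    ServerNameParts(String hostname, int port, long startCode) {
        this.hostname = hostname;
        this.port = port;
        this.startCode = startCode;
    }

    // Splits a "hostname,port,startcode" string as it appears after "hostname=" in the log.
    static ServerNameParts parse(String serverName) {
        String[] parts = serverName.split(",");
        if (parts.length != 3) {
            throw new IllegalArgumentException("expected host,port,startcode but got: " + serverName);
        }
        return new ServerNameParts(parts[0], Integer.parseInt(parts[1]), Long.parseLong(parts[2]));
    }

    public static void main(String[] args) {
        ServerNameParts p = parse("host3,16020,1479802559335");
        // The client machine must be able to resolve this hostname by itself.
        System.out.println("client must resolve: " + p.hostname + " (port " + p.port + ")");
    }
}
```

The point is that the address in the error is a bare hostname rather than an IP, so connecting by IP in the client configuration alone does not avoid the lookup.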

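When the Java API operates on HBase, it has to reach the individual hosts in the cluster, and the Windows machine cannot resolve host1, host2, or host3. That is what caused the error above. Here is the fix: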

Add the following entries to the file C:\Windows\System32\drivers\etc\hosts:

127.0.0.1 localhost

192.168.1.221 host1

192.168.1.222 host2

192.168.1.223 host3
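Before rerunning the application, it can help to confirm that the client machine now resolves the cluster hostnames. The following is a minimal sketch using only the JDK's `java.net.InetAddress`; the class name `HostCheck` is made up for this example, and the host list assumes the three names used in this post.

```java
import java.net.InetAddress;
import java.net.UnknownHostException;

// Illustrative check; HostCheck is a made-up name, not part of HBase.
class HostCheck {
    // Returns the resolved IP address, or null if the name cannot be resolved.
    static String resolve(String host) {
        try {
            return InetAddress.getByName(host).getHostAddress();
        } catch (UnknownHostException e) {
            return null;
        }
    }

    public static void main(String[] args) {
        // Hostnames assumed from the hosts entries in this post.
        String[] hosts = {"host1", "host2", "host3"};
        for (String h : hosts) {
            String ip = resolve(h);
            System.out.println(h + " -> " + (ip == null ? "UNRESOLVED" : ip));
        }
    }
}
```

If any name prints as UNRESOLVED, the HBase client on this machine would most likely hit the same UnknownHostException again.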

Running the program again:

hdfs://192.168.1.221:60000

log4j:WARN No appenders could be found for logger (org.apache.hadoop.security.Groups).

log4j:WARN Please initialize the log4j system properly.

log4j:WARN See http://logging.apache.org/log4j/1.2/faq.html#noconfig for more info.

Delete Table test success!

************start create table**********

test create successfully!

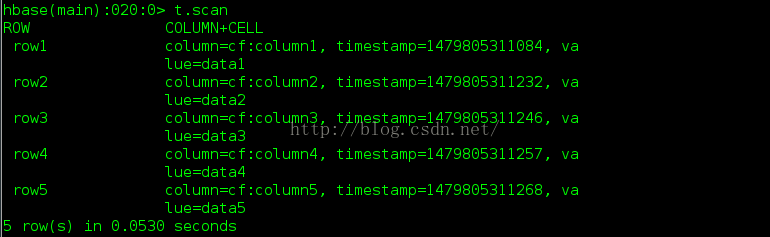

put 'row1', 'cf:column1', 'data1'

put 'row2', 'cf:column2', 'data2'

put 'row3', 'cf:column3', 'data3'

put 'row4', 'cf:column4', 'data4'

put 'row5', 'cf:column5', 'data5'

Get: keyvalues={row1/cf:column1/1479805311084/Put/vlen=5/seqid=0}

Scan: keyvalues={row1/cf:column1/1479805311084/Put/vlen=5/seqid=0}

Scan: keyvalues={row2/cf:column2/1479805311232/Put/vlen=5/seqid=0}

Scan: keyvalues={row3/cf:column3/1479805311246/Put/vlen=5/seqid=0}

Scan: keyvalues={row4/cf:column4/1479805311257/Put/vlen=5/seqid=0}

Scan: keyvalues={row5/cf:column5/1479805311268/Put/vlen=5/seqid=0}

You can then enter the HBase shell and inspect the test table to confirm the rows were written.

Finally, here is the code for this project:

http://blog.csdn.net/wild46cat/article/details/53288537
