- nimbus-data

storm-core/backtype/storm/nimbus.clj

(defn nimbus-data [conf inimbus]

(let [forced-scheduler (.getForcedScheduler inimbus)]

{:conf conf

:inimbus inimbus ; INimbus实现类, standalone-nimbus的返回值

:submitted-count (atom 0) ; 已经提交的计算拓扑的数量, 初始值为原子值0.当提交一个拓扑,这个数字+1

:storm-cluster-state (cluster/mk-storm-cluster-state conf) ; 创建storm集群的状态. 保存在ZooKeeper中!

:submit-lock (Object.) ; 对象锁, 用于各个topology之间的互斥操作, 比如建目录

:heartbeats-cache (atom {}) ; 心跳缓存, 记录各个Topology的heartbeats的cache

:downloaders (file-cache-map conf) ; 文件下载和上传缓存, 是一个TimeCacheMap

:uploaders (file-cache-map conf)

:uptime (uptime-computer) ; 计算上传的时间

:validator (new-instance (conf NIMBUS-TOPOLOGY-VALIDATOR)) ; (map key)等价于(key map)

:timer (mk-timer :kill-fn (fn [t]

(log-error t "Error when processing event")

(halt-process! 20 "Error when processing an event")

))

:scheduler (mk-scheduler conf inimbus)

}))

1) TimeCacheMap

(defn file-cache-map [conf]

(TimeCacheMap.

(int (conf NIMBUS-FILE-COPY-EXPIRATION-SECS))

(reify TimeCacheMap$ExpiredCallback

(expire [this id stream]

(.close stream)

))

))

上面调用的是TimeCacheMap的第二个构造函数:

storm-core/backtype/storm/utils/TimeCacheMap.Java

public class TimeCacheMap<K, V> {

//this default ensures things expire at most 50% past the expiration time

private static final int DEFAULT_NUM_BUCKETS = 3;

public static interface ExpiredCallback<K, V> {

public void expire(K key, V val);

}

public TimeCacheMap(int expirationSecs, ExpiredCallback<K, V> callback) {

this(expirationSecs, DEFAULT_NUM_BUCKETS, callback);

}

public TimeCacheMap(int expirationSecs) {

this(expirationSecs, DEFAULT_NUM_BUCKETS);

}

public TimeCacheMap(int expirationSecs, int numBuckets) {

this(expirationSecs, numBuckets, null);

}

}

Twitter Storm源码分析之TimeCacheMap

TimeCacheMap是Twitter Storm里面一个类, Storm使用它来保存那些最近活跃的对象,并且可以自动删除那些已经过期的对象。

TimeCacheMap里面的数据是保存在内部变量 _bucket 里面的:

private LinkedList<HashMap<K, V>> _buckets;

在这点上跟ConcurrentHashMap有点类似, ConcurrentHashMap是利用多个bucket来缩小锁的粒度, 从而实现高并发的读写。

而TimeCacheMap则是利用多个bucket来使得数据清理线程占用锁的时间最小。

首先来看看TimeCacheMap的构造函数, 它的构造函数首先是生成numBuckets个空的HashMap:

_buckets = new LinkedList<HashMap<K, V>>();

for ( int i=0; i<numBuckets; i++) {

_buckets.add( new HashMap<K, V>());

}

然后就是最关键的清理线程部分,TimeCacheMap使用一个单独的线程来清理那些过期的数据:

private LinkedList<HashMap<K, V>> _buckets;

private final Object _lock = new Object();

private Thread _cleaner;

private ExpiredCallback _callback;

public TimeCacheMap(int expirationSecs, int numBuckets, ExpiredCallback<K, V> callback) {

_buckets = new LinkedList<HashMap<K, V>>();

for(int i=0; i<numBuckets; i++) {

_buckets.add(new HashMap<K, V>());

}

_callback = callback;

final long expirationMillis = expirationSecs * 1000L;

final long sleepTime = expirationMillis / (numBuckets-1);

_cleaner = new Thread(new Runnable() {

public void run() {

try {

while(true) {

Map<K, V> dead = null;

Time.sleep(sleepTime);

synchronized(_lock) {

dead = _buckets.removeLast(); //删除链表中的最后一个bucket

_buckets.addFirst(new HashMap<K, V>()); //往链表头部添加一个空的bucket

}

if(_callback!=null) {

for(Entry<K, V> entry: dead.entrySet()) { //要删除的最后一个bucket的每个Map的条目

_callback.expire(entry.getKey(), entry.getValue()); //这个Map里的每个条目都要被过期!

}

}

}

} catch (InterruptedException ex) {}

}

});

_cleaner.setDaemon(true);

_cleaner.start();

}

这个线程每隔 expirationSecs / (numBuckets - 1) 秒钟的时间去把最后一个bucket里面的数据全部都删除掉

— 这些被删除掉的数据其实就是过期的数据。(为什么不是每隔expirationSecs就来删除一次呢? 我们下面会说)。

这里值得注意的是: 正是因为这种分成多个桶的机制, 清理线程对于 _lock 的占用时间极短。

只要把最后一个bucket从_buckets解下,并且向头上面添加一个新的bucket就好了:

synchronized (_lock) {

dead = _buckets.removeLast();

_buckets.addFirst(new HashMap<K, V>());

}

如果不是这种机制的话, 那我能想到的最傻的办法可能就是给每条数据一个过期时间字段,

然后清理线程就要遍历每条数据来检查数据是否过期了。那显然要HOLD住这个锁很长时间了。

同时对于每条过期的数据TimeCacheMap会执行我们的callback函数:

if (_callback!= null ) {

for (Entry<K, V> entry: dead.entrySet()) {

_callback.expire(entry.getKey(), entry.getValue());

}

}

大致机制就是这样,那么我们现在回过头来看看前面的那个问题: 为什么这个清理线程是每隔 expirationSecs / (numBuckets - 1) 秒的时间来检查,

这样对吗?TimeCacheMap的内部有多个桶, 当你向这个TimeCacheMap里面添加数据的时候,数据总是添加到第一个桶里面去的。

public void put(K key, V value) {

synchronized (_lock) {

Iterator<HashMap<K, V>> it = _buckets.iterator();

HashMap<K, V> bucket = it.next();

bucket.put(key, value); // 在第一个bucket中

while (it.hasNext()) {

bucket = it.next();

bucket.remove(key); // 如果key有在其他的buckets中, 则要从那些bucket中删除该key. 因为map要保证同一个key只出现一次!

}

}

}

我们看个例子就明白了,假设 numBuckets = 3, expirationSecs = 2 。

我们先往里面填一条数据 {1: 1}, 这条数据被加到第一个桶里面去, 现在TimeCacheMap的状态是:

[{1:1}, {}, {}]

1> 过了sleepTime = expirationSecs / (numBuckets - 1) = 2 / (3 - 1) = 1秒钟之后。

清理线程干掉最后一个HashMap, 并且在头上添加一个新的空HashMap, 现在TimeCacheMap的状态是:

[{}, {1:1}, {}]

2> 再过了一秒钟, 同上, TimeCacheMap的状态会变成:

[{}, {}, {1:1}]

3> 再过一秒钟, 现在{1:1}是最后一个TimeCacheMap了,就被干掉了。于是TimeCacheMap的状态变成:

[{}, {}, {}]

所以从 {1:1} 被加入到这个TimeCacheMap到被干掉一共用了3秒,其实这个3秒就等于

3 = expirationSecs * ( 1 + 1 / (numBuckets -- 1)) = 2 * (1 + 1/(3-1)) = 2*(1 + 0.5) = 3

它的注释里面也提到了这一点

Expires keys that have not been updated in the configured number of seconds. 使还没有更新的key在配置的时间内失效

The algorithm used will take between expirationSecs and expirationSecs * (1 + 1 / (numBuckets-1)) to actually expire the message.

get, put, remove, containsKey, and size take O(numBuckets) time to run.

The advantage of this design is that the expiration thread only locks the object for O(1) time, meaning the object is essentially always available for gets/puts.

来看TimeCacheMap的containsKey, get, remove方法. 其实按照通常的Map是只有一个桶的. 分成多个桶的目的是减少锁的占用.

按照Map的语义, 一个Map里只能有相同的key, 如果有多个key,value,则会发生覆盖. 所以在添加元素的时候, 将新元素加到第一个桶.

并判断剩余的桶中是否有这个key, 如果有, 就要从中删除! 接下来在获取Map元素的时候, 只要在某个桶中找到key对应的元素, 则可以立即返回!

public boolean containsKey(K key) {

synchronized(_lock) {

for(HashMap<K, V> bucket: _buckets) {

if(bucket.containsKey(key)) {

return true;

}

}

return false;

}

}

public V get(K key) {

synchronized(_lock) {

for(HashMap<K, V> bucket: _buckets) {

if(bucket.containsKey(key)) {

return bucket.get(key);

}

}

return null;

}

}

public Object remove(K key) {

synchronized(_lock) {

for(HashMap<K, V> bucket: _buckets) {

if(bucket.containsKey(key)) {

return bucket.remove(key);

}

}

return null;

}

}

public int size() {

synchronized(_lock) {

int size = 0;

for(HashMap<K, V> bucket: _buckets) {

size+=bucket.size();

}

return size;

}

}

ExpiredCallback是在失效的时候做的回调函数. 比如清理资源. nimbus-data中的file-cache-map在expire的时候关闭了文件流.

新版本的storm把TimeCacheMap作为废弃的, 转而使用非线程安全版本的RotatingMap: (为啥不用线程安全的版本??)

RotatingMap和TimeCacheMap不同的是不再使用对象锁和单独的清理线程.

storm-core/backtype/storm/utils/RatatingMap.java

public class RotatingMap<K, V> {

private LinkedList<HashMap<K, V>> _buckets;

private ExpiredCallback _callback;

public Map<K, V> rotate() {

Map<K, V> dead = _buckets.removeLast();

_buckets.addFirst(new HashMap<K, V>());

if(_callback!=null) {

for(Entry<K, V> entry: dead.entrySet()) {

_callback.expire(entry.getKey(), entry.getValue());

}

}

return dead;

}

}

由于不使用单独的线程和锁, 把TimeCacheMap清理线程的部分移到了单独的方法rotate中.

2) Scheduler

(defn mk-scheduler [conf inimbus]

(let [forced-scheduler (.getForcedScheduler inimbus) ; standalone-nimbus的INimbus匿名类getForcedScheduler的实现为空

scheduler (cond ; 条件表达式 (cond cond1 exp1 cond2 exp2 :else default)

forced-scheduler ; INimbus指定了调度器的实现

(do (log-message "Using forced scheduler from INimbus " (class forced-scheduler))

forced-scheduler) ; do 表达式类似语句块

(conf STORM-SCHEDULER) ; 使用Storm的配置. defaults.yaml中并没有storm.scheduler这个配置项, 要自定义的话写在storm.yaml中

(do (log-message "Using custom scheduler: " (conf STORM-SCHEDULER))

(-> (conf STORM-SCHEDULER) new-instance)) ; 实例化

:else

(do (log-message "Using default scheduler")

(DefaultScheduler.)))] ; 默认的调度器DefaultScheduler.clj

(.prepare scheduler conf)

scheduler

))

cond表达式有三种情况. 1) standalone-nimbus中getForcedScheduler为nil, 不满足. 2) defaults.yaml中没有配置storm.scheduler配置项,

如果没有在storm.yaml中定义调度器实现, 则也不满足 3) 默认的调度器是DefaultScheduler, 实现了IScheduler接口

storm-core/backtype/storm/scheduler/IScheduler.java

public interface IScheduler {

void prepare(Map conf);

/** Set assignments for the topologies which needs scheduling. The new assignments is available through <code>cluster.getAssignments()</code>

* 为集群中的需要调度的计算拓扑分配任务. 新的任务可以通过cluster.getAssignments()获取

*@param topologies all the topologies in the cluster, some of them need schedule. Topologies object here

* only contain static information about topologies. Information like assignments, slots are all in the <code>cluster</code>object.

*@param cluster the cluster these topologies are running in. <code>cluster</code> contains everything user

* need to develop a new scheduling logic. e.g. supervisors information, available slots, current

* assignments for all the topologies etc. User can set the new assignment for topologies using <code>cluster.setAssignmentById</code> */

void schedule(Topologies topologies, Cluster cluster);

}

IScheduler的几个实现类都在storm-core/backtype/storm/scheduler/*.clj中:

-----IScheduler.java

|--------------DefaultScheduler.clj

|--------------EvenScheduler.clj

|--------------IsolationScheduler.clj

调度器分析到这里, 后面会专门针对调度器进行分析.

调度器是用来干嘛的?? Twitter Storm的新利器Pluggable Scheduler Twitter Storm: How to develop a pluggable scheduler

storm scheduler源码分析: http://www.cnblogs.com/fxjwind/archive/2013/06/14/3136008.html

3) ZooKeeper

我们知道Twitter Storm的所有的状态信息都是保存在Zookeeper里面,nimbus通过在zookeeper上面写状态信息来分配任务,supervisor,task通过从zookeeper中读状态来领取任务,同时supervisor, task也会定义发送心跳信息到zookeeper, 使得nimbus可以监控整个storm集群的状态, 从而可以重启一些挂掉的task。ZooKeeper 使得整个storm集群十分的健壮 — 任何一台工作机器挂掉都没有关系,只要重启然后从zookeeper上面重新获取状态信息就可以了。本文主要介绍Twitter Storm在ZooKeeper中保存的数据目录结构,源代码主要是: backtype.storm.cluster, 废话不多说,直接看下面的结构图:

一个要注意的地方是,作者在代码里面很多地方用到的 storm-id , 其实就是 topology-id 的意思。(以前他把topology叫做storm, 代码里面还没有改过来)

/-{storm--zk--root} storm 在zookeeper 上的根目录

|

|-/assignments topology 的任务分配信息

| |-/{topology--id} 这个下面保存的是每个topology 的assignments

| 信息包括: 对应的nimbus 上的代码目录, 所有task 的启动时间,每个task 与机器、端口的映射

|

|-/tasks 所有的task

| |-/{topology--id} 这个目录下面id 为{topology-id} 的topology所对应的所有的task-id

| |-/{task--id} 这个文件里面保存的是这个task 对应的component-id :可能是spout-id 或者bolt-id

|

|-/storms 这个目录保存所有正在运行的topology 的id

| |-/{topology--id} 保存这个topology的一些信息:topology的名字,topology开始运行的时间,topology的状态(StormBase)

|

|-/supervisors 这个目录保存所有的supervisor的心跳信息

| |-/{supervisor--id} 保存supervisor的心跳信息:心跳时间,主机名,这个supervisor 上worker的端口号运行时间(SupervisorInfo)

|

|-/taskbeats 所有task 的心跳

| |-/{topology--id} 这个目录保存这个topology 的所有的task 的心跳信息

| |-/{task--id} task 的心跳信息,包括心跳的时间,task 运行时间以及一些统计信息

|

|-/taskerrors 所有task 所产生的error 信息

|-/{topology--id} 这个目录保存这个topology 下面每个task 的出错信息

|-/{task--id} 这个task 的出错信息

在0.9.0.1+后的版本, zookeeper目录发生了变化: 没有了tasks. taskbeats更名为workerbeats. taskerrors更名为errors.

这个几个目录定义在cluster.clj. 在mk-storm-cluster-state时进行创建

storm-core/backtype/storm/cluster.clj

(def ASSIGNMENTS-ROOT "assignments")

(def CODE-ROOT "code")

(def STORMS-ROOT "storms")

(def SUPERVISORS-ROOT "supervisors")

(def WORKERBEATS-ROOT "workerbeats")

(def ERRORS-ROOT "errors")

zookeeper.clj中的mk-client函数就是初始化Curator即建立与zookeeperserver的连接。

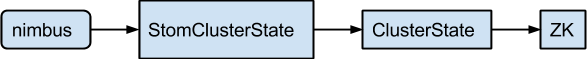

service-handler(nimbus)

|---------nimbus-data(nimbus)

|-------mk-storm-cluster-state(cluster.clj)

|--------------mk-distributed-cluster-state(cluster)

|----------------zk/mk-client(zookeeper.clj)

需要注意的是,nimbus中并没有注册任何回调函数来处理zookeeper送来的notification。???

nimbus是通过定时机制来定期轮询zookeeper中的目录进而感知到supervisor的加入和退出。

storm-core/backtype/storm/cluster.clj

;; Watches should be used for optimization. When ZK is reconnecting, they're not guaranteed to be called.

;; 应该使用Watches来优化. 当ZK重新连接时, 它们(这里)并没有保证被调用.

;; nimbus.clj的nimbus-data调用参数是conf, 是一个Map. 这里定义了一个新的协议接口. satisfies?返回false

;; 什么时候返回true? 参数cluster-state-spec是ClusterState的子类时

(defn mk-storm-cluster-state [cluster-state-spec]

(let [[solo? cluster-state] (if (satisfies? ClusterState cluster-state-spec)

[false cluster-state-spec]

[true (mk-distributed-cluster-state cluster-state-spec)])

… ...

(defn mk-distributed-cluster-state [conf]

(let [zk (zk/mk-client conf (conf STORM-ZOOKEEPER-SERVERS) (conf STORM-ZOOKEEPER-PORT) :auth-conf conf)]

(zk/mkdirs zk (conf STORM-ZOOKEEPER-ROOT)) ; defaults.yaml--> storm.zookeeper.root: "/storm"

(.close zk)) ; 创建完/storms目录后, 关闭zk

… …

storm-core/backtype/storm/zookeeper.clj

(defnk mk-client [conf servers port :root "" :watcher default-watcher :auth-conf nil]

(let [fk (Utils/newCurator conf servers port root (when auth-conf (ZookeeperAuthInfo. auth-conf)))]

(.. fk ; 两个.表示连续调用. fk = CuratorFramework

(getCuratorListenable) ; fk.getCuratorListenable().addListener(new CuratorListener(){...})

(addListener ; 监听器作为匿名类

(reify CuratorListener ; 通过监听器的方式指定Watcher

(^void eventReceived [this ^CuratorFramework _fk ^CuratorEvent e]

(when (= (.getType e) CuratorEventType/WATCHED) ; 事件类型为WATCHED

(let [^WatchedEvent event (.getWatchedEvent e)]

;; watcher即方法中参数:watcher. 默认为default-watcher函数, 接受三个参数[state, type, path]

(watcher (zk-keeper-states (.getState event)) ; zk-keeper-states是个map, event.getState()得到的是确定的key. (map key)

(zk-event-types (.getType event)) ; zk-event-types也是一个map

(.getPath event)))))))) ; watcher(Watcher.Event.KeeperState, Watcher.Event.EventType, path). watcher是一个function!

(.start fk) ; CuratorFramework.start()

fk)) ; 返回值为fk. 因为操作zk的接口使用的就是这个对象

storm-core/backtype/storm/utils/Utils.java

public static CuratorFramework newCurator(Map conf, List<String> servers, Object port, String root, ZookeeperAuthInfo auth) {

List<String> serverPorts = new ArrayList<String>();

for(String zkServer: (List<String>) servers) {

serverPorts.add(zkServer + ":" + Utils.getInt(port)); // storm.yaml中storm.zookeeper.servers可以配置多个选项, port只有一个

}

String zkStr = StringUtils.join(serverPorts, ",") + root; // root: 相对路径. h1:2181,h2:2181/root

try {

CuratorFrameworkFactory.Builder builder = CuratorFrameworkFactory.builder()

.connectString(zkStr) // 连接多个zookeeper servers.

.connectionTimeoutMs(Utils.getInt(conf.get(Config.STORM_ZOOKEEPER_CONNECTION_TIMEOUT)))

.sessionTimeoutMs(Utils.getInt(conf.get(Config.STORM_ZOOKEEPER_SESSION_TIMEOUT)))

.retryPolicy(new BoundedExponentialBackoffRetry( // 尝试策略. 设置时间间隔等参数

Utils.getInt(conf.get(Config.STORM_ZOOKEEPER_RETRY_INTERVAL)),

Utils.getInt(conf.get(Config.STORM_ZOOKEEPER_RETRY_TIMES)),

Utils.getInt(conf.get(Config.STORM_ZOOKEEPER_RETRY_INTERVAL_CEILING))));

if(auth!=null && auth.scheme!=null) builder = builder.authorization(auth.scheme, auth.payload);

return builder.build();

} catch (IOException e) {

throw new RuntimeException(e);

}

}

zk的几个常量都是Map的形式. 获取map中key的方式都使用了这种形式: (map key)

(def zk-keeper-states

{Watcher$Event$KeeperState/Disconnected :disconnected

Watcher$Event$KeeperState/SyncConnected :connected

Watcher$Event$KeeperState/AuthFailed :auth-failed

Watcher$Event$KeeperState/Expired :expired

})

(def zk-event-types

{Watcher$Event$EventType/None :none

Watcher$Event$EventType/NodeCreated :node-created

Watcher$Event$EventType/NodeDeleted :node-deleted

Watcher$Event$EventType/NodeDataChanged :node-data-changed

Watcher$Event$EventType/NodeChildrenChanged :node-children-changed

})

(def zk-create-modes

{:ephemeral CreateMode/EPHEMERAL

:persistent CreateMode/PERSISTENT

:sequential CreateMode/PERSISTENT_SEQUENTIAL})

zookeeper.clj的mk-client的返回值是一个CuratorFramework对象. 这是操作zookeeper接口的对象. zk/mkdirs的第一个参数zk就是这个对象!

(defn mkdirs [^CuratorFramework zk ^String path] ;; 第一个参数zk就是mk-client的返回值CuratorFramework对象

(let [path (normalize-path path)]

(when-not (or (= path "/") (exists-node? zk path false)) ; path不是/ 或者不存在, 才可以新建

(mkdirs zk (parent-path path))

(try-cause

(create-node zk path (barr 7) :persistent) ; 最后一个参数是个keyword, 是参数mode, 因为mode的值是确定的

(catch KeeperException$NodeExistsException e)) ;; this can happen when multiple clients doing mkdir at same time

)))

(defn create-node

([^CuratorFramework zk ^String path ^bytes data mode] ; 假设参数mode=:persistent, 则(zk-create-modes mode)为CreateMode/PERSISTENT

(try

(.. zk (create) (withMode (zk-create-modes mode)) (withACL ZooDefs$Ids/OPEN_ACL_UNSAFE) (forPath (normalize-path path) data))

(catch Exception e (throw (wrap-in-runtime e)))))

([^CuratorFramework zk ^String path ^bytes data] ; 没有mode的处理方式: 递归

(create-node zk path data :persistent)))

curator使用java操作的示例: http://shift-alt-ctrl.iteye.com/blog/1983073

几个处理zookeeper路径的帮助函数在util.clj中

storm-core/backtype/storm/util.clj

;; 分隔路径. zookeeper的节点都是以/开头的绝对路径. 返回一个向量, 包含了某个节点的所有路径的部分

(defn tokenize-path [^String path] ;; path=/storm/storms/topo-id

(let [toks (.split path "/")]

(vec (filter (complement empty?) toks)) ;; 返回值为: #{storm storms topo-id}

))

(defn parent-path [path] ;; path=/storm/storms/topo-id

(let [toks (tokenize-path path)]

(str "/" (str/join "/" (butlast toks))) ;;父路径为: /storm/storms

))

;; 将toks重新组装成路径. 每个元素以/拼接, 最开始也必须是/

(defn toks->path [toks] ;; toks=#{storm storms topo-id}

(str "/" (str/join "/" toks)) ;; 返回值为: /storm/storms/topo-id

)

(defn normalize-path [^String path] ;; 规范化路径

(toks->path (tokenize-path path)))

(defn full-path [parent name] ;; parent=/storm/storms name=topo-id

(let [toks (tokenize-path parent)] ; #{storm storms}

(toks->path (conj toks name)) ; conj的结果为: #{storm storms topo-id}

)) ; toks-path后为: /storm/storms/topo-id

zookeeper.clj是操作zk的接口. cluster.clj的mk-distributed-cluster-state我们只分析到第一个let表达式. 第二个let表达式返回ClusterState匿名对象:

(defprotocol ClusterState

(set-ephemeral-node [this path data])

(delete-node [this path])

(create-sequential [this path data])

(set-data [this path data]) ;; if node does not exist, create persistent with this data

(get-data [this path watch?])

(get-children [this path watch?])

(mkdirs [this path])

(close [this])

(register [this callback])

(unregister [this id])

)

定义集群的状态为一个协议类型. 集群的状态是记录在zookeeper中的. 所以这个协议的方法都是一些zookeeper操作的API.

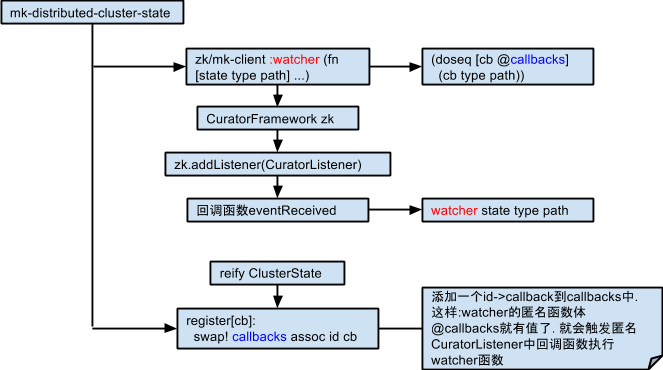

(defn mk-distributed-cluster-state [conf]

(let [zk (zk/mk-client conf (conf STORM-ZOOKEEPER-SERVERS) (conf STORM-ZOOKEEPER-PORT) :auth-conf conf)]

(zk/mkdirs zk (conf STORM-ZOOKEEPER-ROOT)) ; defaults.yaml--> storm.zookeeper.root: "/storm"

(.close zk)) ; 创建完/storms后, 关闭zk

(let [callbacks (atom {})

active (atom true)

zk (zk/mk-client conf ; 因为mk-client是一个defnk函数. :key value提供的是额外的参数,会覆盖方法中的参数

(conf STORM-ZOOKEEPER-SERVERS)

(conf STORM-ZOOKEEPER-PORT)

:auth-conf conf

:root (conf STORM-ZOOKEEPER-ROOT) ; 指定了相对路径为/storm, 则后面的mkdirs等方法均会加上这个相对路径!

:watcher (fn [state type path] ; event.getState(), event.getType(), path. 和default-watcher参数一样

(when @active ; 原子的解引用

(when-not (= :connected state) ; state和type的取值见zookeeper.clj的zk-keeper-states和zk-event-types

(log-warn "Received event " state ":" type ":" path " with disconnected Zookeeper."))

(when-not (= :none type)

(doseq [callback (vals @callbacks)]

(callback type path)))) ; 真正的回调函数只需要两个参数[type path]. state用于条件判断

))]

(reify ClusterState

(register [this callback]

(let [id (uuid)]

(swap! callbacks assoc id callback) ; 给id注册一个回调函数. 添加到callbacks中. 这样前面声明的@callbacks就有数据了!

id ; 返回值为id, 这样接下来的操作就可以使用这个id. 也就能取到对应的callback

))

(unregister [this id] (swap! callbacks dissoc id)) ; 不注册. 参数id就是上面第一个函数register的返回值. 见怪不怪了吧.

(set-ephemeral-node [this path data] ; 设置短暂节点

(zk/mkdirs zk (parent-path path)) ; zk是let中赋值的CuratorFramework操作客户端. zk/mkdirs的第一个参数就是它

(if (zk/exists zk path false) ; 已经存在节点: 直接更新数据

(try-cause

(zk/set-data zk path data) ; should verify that it's ephemeral

(catch KeeperException$NoNodeException e

(log-warn-error e "Ephemeral node disappeared between checking for existing and setting data")

(zk/create-node zk path data :ephemeral)

))

(zk/create-node zk path data :ephemeral) ; 不存在, 直接创建一个mode=:ephemeral的节点

))

(create-sequential [this path data]

(zk/create-node zk path data :sequential)) ; 创建顺序节点

(set-data [this path data] ;; note: this does not turn off any existing watches

(if (zk/exists zk path false) ; if exists (set-data) (mkdirs and create-node-with-data)

(zk/set-data zk path data)

(do

(zk/mkdirs zk (parent-path path)) ; 如果不存在, 先创建该节点的父节点

(zk/create-node zk path data :persistent) ; 然后创建该节点(创建节点的时候指定数据)

)))

(delete-node [this path] (zk/delete-recursive zk path))

(get-data [this path watch?] (zk/get-data zk path watch?)) ; 获取数据的方法都有一个watch?标志位

(get-children [this path watch?] (zk/get-children zk path watch?))

(mkdirs [this path] (zk/mkdirs zk path))

(close [this]

(reset! active false) ; 重置active=false. 在mk-client前初始值为true

(.close zk))

)))

:watcher与callback流程图:

4) mk-storm-cluster-state

现在一步步逆推, 回到mk-storm-cluster-state这条主线上来.

storm-core/backtype/storm/cluster.clj

;; Watches should be used for optimization. When ZK is reconnecting, they're not guaranteed to be called. 应该使用Watches来优化. 当ZK重新连接时, 它们(这里)并没有保证被调用.

;; nimbus.clj的nimbus-data调用参数是conf, 是一个Map. 这里定义了一个新的协议接口. satisfies?返回false. 什么时候返回true? 参数cluster-state-spec是ClusterState的子类时

(defn mk-storm-cluster-state [cluster-state-spec]

; 因为mk-distributed-cluster-state返回的是reify ClusterState, 所以let绑定的cluster-state是一个ClusterState实例!

(let [[solo? cluster-state] (if (satisfies? ClusterState cluster-state-spec)

[false cluster-state-spec]

[true (mk-distributed-cluster-state cluster-state-spec)])

assignment-info-callback (atom {})

supervisors-callback (atom nil)

assignments-callback (atom nil)

storm-base-callback (atom {})

state-id (register ; 调用的ClusterState的register方法. register(this callback), 返回值是一个uuid, 取消注册时根据uuid从map中删除

cluster-state ; 第一个参数为this, 第二个参数为callback匿名函数.

(fn [type path] ; callback方法的参数和mk-distributed-cluster-state的:watcher的函数的(callback type path)一样.

(let [[subtree & args] (tokenize-path path)] ; path为/storms/topo-id, 返回#{storms topo-id}, 则subtree=storms

(condp = subtree

ASSIGNMENTS-ROOT (if (empty? args)

(issue-callback! assignments-callback)

(issue-map-callback! assignment-info-callback (first args)))

SUPERVISORS-ROOT (issue-callback! supervisors-callback)

STORMS-ROOT (issue-map-callback! storm-base-callback (first args))

(halt-process! 30 "Unknown callback for subtree " subtree args) ;; this should never happen

)

)))]

(doseq [p [ASSIGNMENTS-SUBTREE STORMS-SUBTREE SUPERVISORS-SUBTREE WORKERBEATS-SUBTREE ERRORS-SUBTREE]]

(mkdirs cluster-state p)) ; 在zookeeper上创建各个目录. ClusterState.mkdirs -> zk/mkdirs

(reify StormClusterState ; 又定义了一个协议. 不过这个协议都是通过调用ClusterState的方法来达到目的. 当然这两个协议的方法是不一样的

… …

在调用register注册了一个回调函数之后, 循环创建[**-SUBTREE]这些子目录. 注意到这些SUBTREE并不包括最开始的/storm这个路径:

(def ASSIGNMENTS-SUBTREE (str "/" ASSIGNMENTS-ROOT))

(def STORMS-SUBTREE (str "/" STORMS-ROOT))

(def SUPERVISORS-SUBTREE (str "/" SUPERVISORS-ROOT))

(def WORKERBEATS-SUBTREE (str "/" WORKERBEATS-ROOT))

(def ERRORS-SUBTREE (str "/" ERRORS-ROOT))

而zookeeper中创建assignments所在的完整路径是/storm/assignments. 答案在于mk-distributed-cluster-state的第二个let表达式的:root选项

:root (conf STORM-ZOOKEEPER-ROOT) ; 指定了相对路径为/storm, 则后面的mkdirs等方法均会加上这个相对路径!

所以当调用ClusterState的mkdirs时, :root的值会自动作为操作的节点的前缀. 因为zk操作都在:root这个相对路径下进行!

这就不难推断出下面几个获取路径的方法的返回值也是不包含/storm的:

;; 下面的几个路径是否包含最开始的/storm?? NO!

;; /supervisors/supervisor-id

(defn supervisor-path [id] (str SUPERVISORS-SUBTREE "/" id))

;; /assignments/topology-id

(defn assignment-path [id] (str ASSIGNMENTS-SUBTREE "/" id))

;; /storms/topology-id

(defn storm-path [id] (str STORMS-SUBTREE "/" id))

;; /workerbeats/topology-id

(defn workerbeat-storm-root [storm-id] (str WORKERBEATS-SUBTREE "/" storm-id))

;; /workerbeats/topology-id/node-port

(defn workerbeat-path [storm-id node port] (str (workerbeat-storm-root storm-id) "/" node "-" port))

;; /errors/topology-id

(defn error-storm-root [storm-id] (str ERRORS-SUBTREE "/" storm-id))

(defn error-path [storm-id component-id] (str (error-storm-root storm-id) "/" (url-encode component-id)))

前面定义了一个ClusterState, 是操作ZooKeeper API的方法. 比如创建目录, 创建节点, 设置数据等. 而Storm的集群状态则和Storm的内部数据结构相关.

因为Storm的集群状态最终也是写到ZooKeeper中的. 所以这个接口的实现类, 内部会使用ClusterState来操作ZooKeeper API.

言归正传, Storm的集群状态包括了: 任务的分配assignments, supervisors, workers, hearbeat, errors等信息.

(defprotocol StormClusterState

; 任务(get)

(assignments [this callback])

(assignment-info [this storm-id callback])

(active-storms [this])

(storm-base [this storm-id callback])

; worker(get)

(get-worker-heartbeat [this storm-id node port])

(executor-beats [this storm-id executor->node+port])

(supervisors [this callback])

(supervisor-info [this supervisor-id]) ;; returns nil if doesn't exist

; heartbeat and error

(setup-heartbeats! [this storm-id])

(teardown-heartbeats! [this storm-id])

(teardown-topology-errors! [this storm-id])

(heartbeat-storms [this])

(error-topologies [this])

; update!(set and remove)

(worker-heartbeat! [this storm-id node port info])

(remove-worker-heartbeat! [this storm-id node port])

(supervisor-heartbeat! [this supervisor-id info])

(activate-storm! [this storm-id storm-base])

(update-storm! [this storm-id new-elems])

(remove-storm-base! [this storm-id])

(set-assignment! [this storm-id info])

(remove-storm! [this storm-id])

(report-error [this storm-id task-id error])

(errors [this storm-id task-id])

(disconnect [this])

)

看看mk-storm-cluster-state对应的reify StormClusterState的实现

(reify StormClusterState

; 获取集群的所有任务. /storm/assignments下的所有topology-id

(assignments [this callback]

(when callback (reset! assignments-callback callback))

(get-children cluster-state ASSIGNMENTS-SUBTREE (not-nil? callback)))

; 任务信息: 序列化某一个指定的storm-id(即topology-id). /storm/assignments/storm-id

(assignment-info [this storm-id callback]

(when callback (swap! assignment-info-callback assoc storm-id callback))

(maybe-deserialize (get-data cluster-state (assignment-path storm-id) (not-nil? callback))))

; 所有活动的计算拓扑. /storm/storms下的所有topology-id

(active-storms [this] (get-children cluster-state STORMS-SUBTREE false))

; 所有产生心跳的计算拓扑. /storm/workerbeats下的所有topology-id

(heartbeat-storms [this] (get-children cluster-state WORKERBEATS-SUBTREE false))

; 有错误的计算拓扑. /storm/errors下的所有topology-id

(error-topologies [this] (get-children cluster-state ERRORS-SUBTREE false))

; 工作进程worker的心跳信息. 工作进程在supervisor(node)的指定端口(port)上运行

(get-worker-heartbeat [this storm-id node port]

(-> cluster-state

(get-data (workerbeat-path storm-id node port) false)

maybe-deserialize))

;; supervisor > worker > executor > component/task(spout|bolt)

;; 一个supervisor有多个worker

;; 在storm.yaml中配置的每个node+port都组成一个worker

;; 一个worker可以有多个executor

;; 一个executor也可以有多个task

; 执行线程executor的心跳信息

(executor-beats [this storm-id executor->node+port]

;; need to take executor->node+port in explicitly so that we don't run into a situation where a

;; long dead worker with a skewed clock overrides all the timestamps. By only checking heartbeats with an assigned node+port,

;; and only reading executors from that heartbeat that are actually assigned, we avoid situations like that

(let [node+port->executors (reverse-map executor->node+port) ; 反转map. supervisor node上配置的一个端口可以运行多个executor

all-heartbeats (for [[[node port] executors] node+port->executors]

(->> (get-worker-heartbeat this storm-id node port) ; 获取worker的心跳信息

(convert-executor-beats executors) ; 上面函数的返回值作为convert的最后一个参数

))]

(apply merge all-heartbeats)))

; 所有的supervisors. /storm/supervisors下的所有supervisor-id

(supervisors [this callback]

(when callback (reset! supervisors-callback callback))

(get-children cluster-state SUPERVISORS-SUBTREE (not-nil? callback)))

; 序列化指定id的supervisor

(supervisor-info [this supervisor-id] (maybe-deserialize (get-data cluster-state (supervisor-path supervisor-id) false)))

; 更新worker的心跳信息

(worker-heartbeat! [this storm-id node port info] (set-data cluster-state (workerbeat-path storm-id node port) (Utils/serialize info)))

(remove-worker-heartbeat! [this storm-id node port] (delete-node cluster-state (workerbeat-path storm-id node port)))

; 创建一个心跳 /storm/workerbeats/storm-id

(setup-heartbeats! [this storm-id] (mkdirs cluster-state (workerbeat-storm-root storm-id)))

(teardown-heartbeats! [this storm-id]

(try-cause

(delete-node cluster-state (workerbeat-storm-root storm-id))

(catch KeeperException e (log-warn-error e "Could not teardown heartbeats for " storm-id))))

(teardown-topology-errors! [this storm-id]

(try-cause

(delete-node cluster-state (error-storm-root storm-id))

(catch KeeperException e (log-warn-error e "Could not teardown errors for " storm-id))))

(supervisor-heartbeat! [this supervisor-id info]

(set-ephemeral-node cluster-state (supervisor-path supervisor-id) (Utils/serialize info)))

; 激活一个指定id的计算拓扑

(activate-storm! [this storm-id storm-base]

(set-data cluster-state (storm-path storm-id) (Utils/serialize storm-base)))

; 使用new-elems更新一个指定的计算拓扑. 最后会进行序列化

(update-storm! [this storm-id new-elems]

(let [base (storm-base this storm-id nil)

executors (:component->executors base) ; component即task, 包括spout或者bolt. ->表示component从属于executor

new-elems (update new-elems :component->executors (partial merge executors))] ; 偏函数, 因为executors只是其中的一部分

(set-data cluster-state (storm-path storm-id)

(-> base

(merge new-elems)

Utils/serialize))))

; 一个计算拓扑的基本信息

(storm-base [this storm-id callback]

(when callback (swap! storm-base-callback assoc storm-id callback))

(maybe-deserialize (get-data cluster-state (storm-path storm-id) (not-nil? callback))))

(remove-storm-base! [this storm-id] (delete-node cluster-state (storm-path storm-id)))

(set-assignment! [this storm-id info] (set-data cluster-state (assignment-path storm-id) (Utils/serialize info)))

(remove-storm! [this storm-id]

(delete-node cluster-state (assignment-path storm-id))

(remove-storm-base! this storm-id))

(report-error [this storm-id component-id error]

(let [path (error-path storm-id component-id) ; component-id即builder.setSpout("spout",...)

data {:time-secs (current-time-secs) :error (stringify-error error)}

_ (mkdirs cluster-state path) ; /storm/errors/topology-id/spout ...

_ (create-sequential cluster-state (str path "/e") (Utils/serialize data)) ; 创建以e开头的顺序节点(编号递增)

to-kill (->> (get-children cluster-state path false)

(sort-by parse-error-path)

reverse

(drop 10))]

(doseq [k to-kill]

(delete-node cluster-state (str path "/" k)))))

(errors [this storm-id component-id]

(let [path (error-path storm-id component-id)

_ (mkdirs cluster-state path)

children (get-children cluster-state path false)

errors (dofor [c children]

(let [data (-> (get-data cluster-state (str path "/" c) false)

maybe-deserialize)]

(when data

(struct TaskError (:error data) (:time-secs data))

)))

]

(->> (filter not-nil? errors)

(sort-by (comp - :time-secs)))))

(disconnect [this]

(unregister cluster-state state-id)

(when solo?

(close cluster-state)))

)

对比ClusterState和StormCluasterState. nimbus.clj访问的是StormClusterState. 而StormClusterState在实现中访问ClusterState.

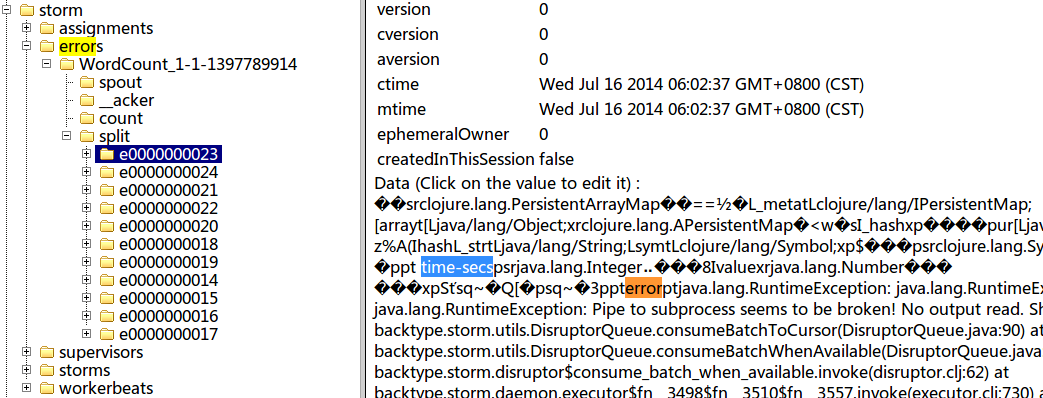

在前面我们验证了assignments, supervisors, workerbeats的三个截图. 现在来看下errors的截图.

/storm/errors下是单词计数的计算拓扑的topology-id. 拓扑下是component-id. 对应的代码:

TopologyBuilder builder = new TopologyBuilder();

builder.setSpout("spout", new RandomSentenceSpout(), 5);

builder.setBolt("split", new SplitSentence(), 8).shuffleGrouping("spout");

builder.setBolt("count", new WordCount(), 12).fieldsGrouping("split", new Fields("word"));

注意到还有一个storm默认的component: __acket. 如果有错误信息, 则会在对应的component下生成以e开头的顺序节点.

这个节点的内容包含了:time-secs和:error的data信息. 这两个信息包含在TaskError这个数据结构中:

(defstruct TaskError :error :time-secs)

是时候从nimbus-data > cluster/mk-storm-cluster-state回头了. 接下来我们重新回到nimbus.clj的service-handler这条重要的主线上去.

service-handler在nimbus-data之后有一个校验过程和transition!过程. 这个先放一边. 来看看和计算拓扑相关的代码Nimbus$Iface.

在cluster.clj的最后有一段注释: 实际上是对mk-storm-cluster-state的StormClusterState的每个方法的说明.

;; daemons have a single thread that will respond to events 进程(nimbus,supervisor..)有一个单独的线程来响应事件

;; start with initialize event 进程启动时有一个初始事件, 比如nimbus初始事件为:startup

;; callbacks add events to the thread's queue 回调函数会添加事件到线程的队列中

;; keeps in memory cache of the state, only for what client subscribes to. 将状态保存在内存缓存中, 只针对订阅的客户端

;; Any subscription is automatically kept in sync, and when there are changes, client is notified. 所有的订阅都是同步的, 当状态编号, 会通知订阅的客户端

;; master gives orders through state, and client records status in state (ephemerally)

;; master tells nodes what workers to launch 主进程将任务信息写到zk的节点上, 来通知工作进行去执行任务

;; master writes this. supervisors and workers subscribe to this to understand complete topology. 主进程写, supervisors和worker是订阅计算拓扑

;; each storm is a map from nodes to workers to tasks to ports whenever topology changes everyone will be notified. 每个计算拓扑的映射链路

;; master includes timestamp of each assignment so that appropriate time can be given to each worker to start up /assignments/{storm-id}

;; which tasks they talk to, etc. (immutable until shutdown)

;; everyone reads this in full to understand structure

;; /tasks/{storm id}/{task id} ; just contains bolt id

;; supervisors send heartbeats here, master doesn't subscribe but checks asynchronously

;; /supervisors/status/{ephemeral node ids} ;; node metadata such as port ranges are kept here

;; tasks send heartbeats here, master doesn't subscribe, just checks asynchronously

;; /taskbeats/{storm id}/{ephemeral task id}

;; contains data about whether it's started or not, tasks and workers subscribe to specific storm here to know when to shutdown

;; master manipulates

;; /storms/{storm id}

;; Zookeeper flows:

;; Master:

;; job submit:

;; 1. read which nodes are available

;; 2. set up the worker/{storm}/{task} stuff (static)

;; 3. set assignments

;; 4. start storm - necessary in case master goes down, when goes back up can remember to take down the storm (2 states: on or off)

;; Monitoring (or by checking when nodes go down or heartbeats aren't received):

;; 1. read assignment

;; 2. see which tasks/nodes are up

;; 3. make new assignment to fix any problems

;; 4. if a storm exists but is not taken down fully, ensure that storm takedown is launched (step by step remove tasks and finally remove assignments)

;; masters only possible watches is on ephemeral nodes and tasks, and maybe not even

;; Supervisor:

;; 1. monitor /storms/* and assignments

;; 2. local state about which workers are local

;; 3. when storm is on, check that workers are running locally & start/kill if different than assignments

;; 4. when storm is off, monitor tasks for workers - when they all die or don't hearbeat, kill the process and cleanup

;; Worker:

;; 1. On startup, start the tasks if the storm is on

;; Task:

;; 1. monitor assignments, reroute when assignments change

;; 2. monitor storm (when storm turns off, error if assignments change) - take down tasks as master turns them off

;; locally on supervisor: workers write pids locally on startup, supervisor deletes it on shutdown (associates pid with worker name)

;; supervisor periodically checks to make sure processes are alive

;; {rootdir}/workers/{storm id}/{worker id} ;; contains pid inside

;; all tasks in a worker share the same cluster state

;; workers, supervisors, and tasks subscribes to storm to know when it's started or stopped

;; on stopped, master removes records in order (tasks need to subscribe to themselves to see if they disappear)

;; when a master removes a worker, the supervisor should kill it (and escalate to kill -9)

;; on shutdown, tasks subscribe to tasks that send data to them to wait for them to die. when node disappears, they can die

3万+

3万+

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?