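How do you upload a local file to Hadoop, and how do you download a Hadoop file to the local file system?

One way to read HDFS is to open an InputStream from a java.net.URL that points at an HDFS address. This only works after the JVM has been taught the hdfs scheme via URL.setURLStreamHandlerFactory(new FsUrlStreamHandlerFactory()). Inside a Hadoop cluster, the usual approach is HDFS's own file system API, FileSystem. You obtain a FileSystem instance from a Configuration with one of these static factory methods:

```java
public static FileSystem get(Configuration conf) throws IOException

public static FileSystem get(URI uri, Configuration conf) throws IOException

public static FileSystem get(URI uri, Configuration conf, String user)
        throws IOException, InterruptedException
```

- The first method returns the default file system. This is set by fs.defaultFS, and is the local file system if nothing is configured.
- The second picks the file system from the scheme and authority of the given URI. It falls back to the default when the URI has no scheme.
- The third does the same as the second, but accesses the file system as the given user.

The following code implements something similar to the Linux `cat` command: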

```java
import java.io.InputStream;
import java.net.URI;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IOUtils;

// Prints the contents of a file on HDFS to standard output, like `cat`.
// Usage: FileSystemCat hdfs://localhost:9000/user/hadoop/cloud.txt
public class FileSystemCat {

    public static void main(String[] args) throws Exception {
        String uri = args[0];
        Configuration conf = new Configuration();
        // The URI's scheme and authority decide which file system is returned.
        FileSystem fs = FileSystem.get(URI.create(uri), conf);
        InputStream in = null;
        try {
            in = fs.open(new Path(uri));
            // 4 KB buffer; false = leave System.out open after copying.
            IOUtils.copyBytes(in, System.out, 4096, false);
        } finally {
            IOUtils.closeStream(in);
        }
    }
}
```

FileSystem.listStatus() returns the status of a file, or the status of every entry in a directory. The overload that takes a Path[] handles several paths in one call. The following program lists every path given on the command line. It uses FileUtil.stat2Paths() to turn the resulting FileStatus[] into a Path[]:

```java
import java.net.URI;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileStatus;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.FileUtil;
import org.apache.hadoop.fs.Path;

// Lists files/directories; all arguments are resolved against the file system of args[0].
public class ListStatus {

    public static void main(String[] args) throws Exception {
        String uri = args[0];
        Configuration conf = new Configuration();
        FileSystem fs = FileSystem.get(URI.create(uri), conf);

        Path[] paths = new Path[args.length];
        for (int i = 0; i < paths.length; i++) {
            paths[i] = new Path(args[i]);
        }

        // One status per file, or one per entry inside each directory.
        FileStatus[] status = fs.listStatus(paths);
        Path[] listedPaths = FileUtil.stat2Paths(status);
        for (Path p : listedPaths) {
            System.out.println(p);
        }
    }
}
```
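Both programs pass their first argument to FileSystem.get(URI, Configuration). That method chooses the file system from the URI's scheme and authority. An hdfs:// URI selects HDFS. A bare path has neither part, so the default file system from fs.defaultFS is used instead.

To make that split concrete, the sketch below takes an HDFS address apart with the JDK's java.net.URI. It only illustrates the pieces FileSystem.get looks at; the class name HdfsUriParts is hypothetical, and no Hadoop code is involved.

```java
import java.net.URI;

// Hypothetical helper: shows the parts of an address that FileSystem.get(URI, conf) inspects.
class HdfsUriParts {
    /** Returns {scheme, authority, path}; a null entry means that part is absent. */
    static String[] split(String address) {
        URI uri = URI.create(address);
        return new String[] { uri.getScheme(), uri.getAuthority(), uri.getPath() };
    }

    public static void main(String[] args) {
        String[] parts = split("hdfs://localhost:9000/user/hadoop/cloud.txt");
        System.out.println("scheme    = " + parts[0]); // hdfs
        System.out.println("authority = " + parts[1]); // localhost:9000
        System.out.println("path      = " + parts[2]); // /user/hadoop/cloud.txt

        // A bare path has no scheme or authority, so Hadoop would fall back to fs.defaultFS.
        String[] bare = split("/user/hadoop/cloud.txt");
        System.out.println("scheme    = " + bare[0]); // null
    }
}
```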

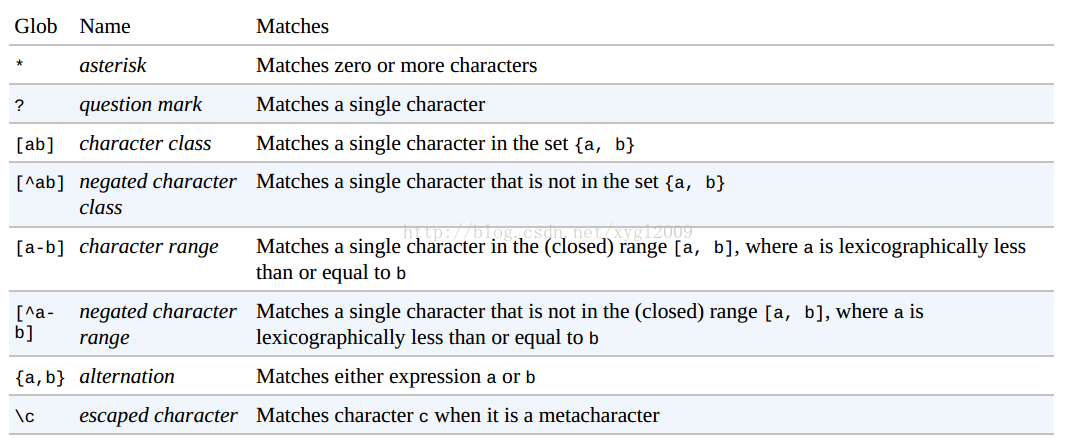

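To work with a whole set of files in one call, FileSystem supports wildcard (glob) patterns through globStatus(). It returns the FileStatus of every path that matches the pattern, sorted by path. The optional PathFilter narrows the matches further:

```java
public FileStatus[] globStatus(Path pathPattern) throws IOException

public FileStatus[] globStatus(Path pathPattern, PathFilter filter)
        throws IOException
```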
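To experiment with glob patterns locally, the JDK's own PathMatcher is a convenient stand-in. Its glob syntax overlaps with Hadoop's for `*`, `?`, `[ab]`, `[a-b]` and `{a,b}`, and in both syntaxes `*` does not cross a `/`. This is an analogy only, not Hadoop's matcher:

- Java negates a character class only with `[!...]` and treats a leading `^` literally, while the Hadoop documentation writes `[^...]`.
- Java's `**` (match across directories) is not part of Hadoop's glob syntax.

The class name GlobDemo and the date-style paths below are hypothetical.

```java
import java.nio.file.FileSystems;
import java.nio.file.PathMatcher;
import java.nio.file.Paths;

// Local glob experiment with java.nio's PathMatcher (an analogy, not Hadoop's globStatus).
// Assumes a Unix-style default file system, where '/' is the separator.
class GlobDemo {
    static boolean matches(String glob, String path) {
        PathMatcher matcher = FileSystems.getDefault().getPathMatcher("glob:" + glob);
        return matcher.matches(Paths.get(path));
    }

    public static void main(String[] args) {
        // Hypothetical date-partitioned layout, for illustration only.
        String[] paths = { "/2007/12/30", "/2007/12/31", "/2008/01/01", "/2008/01/02" };
        String[] globs = { "/2007/*/*", "/{2007,2008}/*/0?", "/2007/12/3[!0]" };
        for (String glob : globs) {
            for (String p : paths) {
                System.out.println(glob + "  " + p + "  " + matches(glob, p));
            }
        }
    }
}
```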

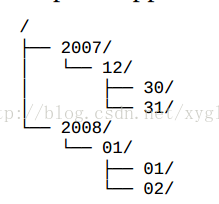

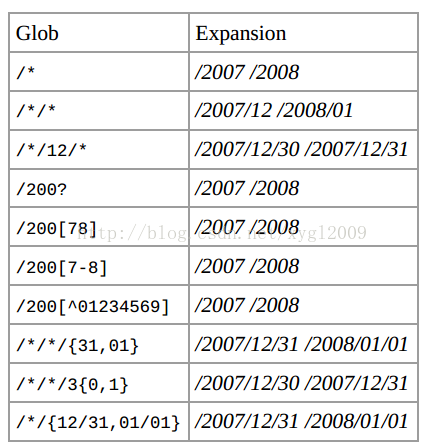

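For example, suppose the cluster contains two directories with the following structure:

Matching against them gives the results below (wildcard pattern on the left, matched paths on the right):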

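To delete data on HDFS, use FileSystem.delete():

```java
public boolean delete(Path f, boolean recursive) throws IOException
```

If f is a file or an empty directory, the value of recursive is ignored. A non-empty directory is deleted, together with its contents, only when recursive is true. Otherwise an IOException is thrown.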
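The same contract can be imitated on the local disk with java.nio.file, which can help when unit-testing code that will later call FileSystem.delete(). The sketch below is a hypothetical helper (LocalDelete is not a Hadoop class). It only mirrors the rule described above: a non-empty directory needs recursive = true, otherwise an IOException is thrown. Returning false for a missing path is this sketch's own choice.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Comparator;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Hypothetical local-disk analogue of FileSystem.delete(Path f, boolean recursive).
class LocalDelete {
    static boolean delete(Path f, boolean recursive) throws IOException {
        if (!Files.exists(f)) {
            return false; // nothing to delete
        }
        if (Files.isDirectory(f) && recursive) {
            List<Path> all;
            try (Stream<Path> walk = Files.walk(f)) {
                // Reverse order puts children before their parent directories.
                all = walk.sorted(Comparator.reverseOrder()).collect(Collectors.toList());
            }
            for (Path p : all) {
                Files.delete(p);
            }
            return true;
        }
        // A file or empty directory is removed directly; a non-empty directory
        // makes Files.delete throw an IOException (DirectoryNotEmptyException).
        Files.delete(f);
        return true;
    }
}
```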

Finally, here is the code for uploading a file to HDFS and downloading it back to the local file system:

```java
import java.io.BufferedInputStream;
import java.io.FileInputStream;
import java.io.FileNotFoundException;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.net.URI;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IOUtils;
import org.apache.hadoop.util.Progressable;

public class UploadAndDown {

    public static void main(String[] args) {
        UploadAndDown uploadAndDown = new UploadAndDown();
        try {
            // Upload the local file local.txt to HDFS as cloud.txt
            uploadAndDown.upLoadToCloud("local.txt", "cloud.txt");
            // Download cloud.txt from HDFS to the local file cloudTolocal.txt
            uploadAndDown.downFromCloud("cloudTolocal.txt", "cloud.txt");
        } catch (IOException e) {
            // Also covers FileNotFoundException, which is a subclass of IOException
            e.printStackTrace();
        }
    }

    private void upLoadToCloud(String srcFileName, String cloudFileName)
            throws FileNotFoundException, IOException {
        // Local and HDFS locations are hard-coded; adjust them to your environment.
        String localSrc = "/home/sina/hbase2/bin/" + srcFileName;
        String cloudDest = "hdfs://localhost:9000/user/hadoop/" + cloudFileName;
        // Open the local file first, so a missing file fails before anything is created on HDFS.
        InputStream in = new BufferedInputStream(new FileInputStream(localSrc));
        try {
            Configuration conf = new Configuration();
            FileSystem fs = FileSystem.get(URI.create(cloudDest), conf);
            // HDFS calls progress() repeatedly while data is being written.
            OutputStream out = fs.create(new Path(cloudDest), new Progressable() {
                @Override
                public void progress() {
                    System.out.println("upload a file to HDFS");
                }
            });
            // true = close both streams when the copy finishes
            IOUtils.copyBytes(in, out, 1024, true);
        } finally {
            IOUtils.closeStream(in); // no-op if copyBytes already closed it
        }
    }

    private void downFromCloud(String localFileName, String cloudFileName)
            throws FileNotFoundException, IOException {
        String cloudSrc = "hdfs://localhost:9000/user/hadoop/" + cloudFileName;
        String localDest = "/home/hadoop/datasrc/" + localFileName;
        Configuration conf = new Configuration();
        FileSystem fs = FileSystem.get(URI.create(cloudSrc), conf);
        FSDataInputStream hdfsIn = fs.open(new Path(cloudSrc));
        try {
            OutputStream localOut = new FileOutputStream(localDest);
            // true = close both streams when the copy finishes
            IOUtils.copyBytes(hdfsIn, localOut, 1024, true);
        } finally {
            IOUtils.closeStream(hdfsIn); // releases the HDFS stream if the local file could not be opened
        }
    }
}
```
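Both transfers above delegate the work to IOUtils.copyBytes(in, out, bufferSize, close). The sketch below is a simplified, stdlib-only stand-in for that kind of buffered copy loop; it is not Hadoop's source. It adds a hypothetical ProgressListener that plays the role Progressable plays in the upload. The difference is where the callback fires. Here it runs once per buffer in the copy loop. In real HDFS, progress() is invoked from inside the HDFS output stream as data is written, not by copyBytes.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;

// Simplified stand-in for a buffered stream copy (not Hadoop's IOUtils implementation).
class CopySketch {
    // Hypothetical callback, loosely mirroring org.apache.hadoop.util.Progressable.
    interface ProgressListener {
        void progress(long bytesSoFar);
    }

    static long copy(InputStream in, OutputStream out, int bufferSize,
                     boolean close, ProgressListener listener) throws IOException {
        byte[] buf = new byte[bufferSize];
        long total = 0;
        try {
            int n;
            while ((n = in.read(buf)) != -1) {
                out.write(buf, 0, n);
                total += n;
                if (listener != null) {
                    listener.progress(total); // one callback per buffer written
                }
            }
            out.flush();
        } finally {
            if (close) {
                // Close both streams even if the copy failed.
                try {
                    out.close();
                } finally {
                    in.close();
                }
            }
        }
        return total;
    }

    public static void main(String[] args) throws IOException {
        ByteArrayOutputStream sink = new ByteArrayOutputStream();
        int[] calls = { 0 };
        long copied = copy(new ByteArrayInputStream(new byte[2500]), sink, 1024, true,
                bytes -> calls[0]++);
        // 2500 bytes with a 1024-byte buffer: reads of 1024, 1024 and 452 bytes.
        System.out.println(copied + " bytes in " + calls[0] + " progress callbacks"); // 2500 bytes in 3 progress callbacks
    }
}
```

The buffer size only affects how many read/write rounds (and, in this sketch, callbacks) occur; the bytes that arrive at the destination are the same.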
