Large File Upload: Design and Implementation (Compatible with Government Xinchuang Environments)

Background

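As a developer at a software company in Beijing, I was tasked with building a large-file upload system for a government client that works both in mainstream browsers and in Xinchuang (domestic IT innovation) environments. The current requirement is uploading files of about 4 GB, with a PHP backend and a Vue.js frontend. Baidu WebUploader, which we tried first, had compatibility problems in the domestic environments, so the solution had to be redesigned.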

Technology selection

Key considerations

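- Domestic platform compatibility: must run in Xinchuang environments (operating systems such as Kylin and UOS; CPU architectures such as Phytium and Kunpeng)

- Browser compatibility: must support Chrome, Firefox and domestic browsers (such as 360 Secure Browser and Redcore)

- Open-source compliance: the complete source code must be provided for review

- Stability: reliable large-file upload with resumable (breakpoint) upload

- Performance: efficient upload of 4 GB files with modest resource usage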

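Final approach

A custom implementation based on chunked upload plus resumable transfer, built on:

- Frontend: Vue.js + native HTML5 File API + Axios

- Backend: PHP (plain PHP or the Laravel framework; the examples below use Laravel)

- Chunking: fixed-size chunks + MD5 checksum

- Progress handling: the file hash is computed in a Web Worker, which reports progress without blocking the UI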
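As an illustration of the fixed-size chunking rule, the chunk boundaries can be computed up front with a small pure function. `planChunks` is a hypothetical helper, not part of the project; it uses the same `Math.ceil(size / chunkSize)` count and `[start, end)` byte ranges as the component and worker code below.

```javascript
// Hypothetical helper: split a file of `fileSize` bytes into fixed-size chunks.
// Each entry is the byte range [start, end) that Blob.slice(start, end) would read.
function planChunks(fileSize, chunkSize) {
  if (!Number.isInteger(fileSize) || fileSize < 0) {
    throw new RangeError('fileSize must be a non-negative integer')
  }
  if (!Number.isInteger(chunkSize) || chunkSize <= 0) {
    throw new RangeError('chunkSize must be a positive integer')
  }
  const total = Math.ceil(fileSize / chunkSize)
  const chunks = []
  for (let index = 0; index < total; index++) {
    const start = index * chunkSize
    const end = Math.min(start + chunkSize, fileSize) // the last chunk may be shorter
    chunks.push({ index, start, end })
  }
  return chunks
}

// Example: a 4 GB file split into 5 MB chunks
const plan = planChunks(4 * 1024 ** 3, 5 * 1024 ** 2)
console.log(plan.length) // 820
const last = plan[plan.length - 1]
console.log(last.end - last.start) // 1048576
```

For a 4 GB file this yields 820 chunk requests, the last one carrying the 1 MB remainder.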

Frontend implementation (Vue component example)

1. Install dependencies

```bash
npm install spark-md5 axios
```

2. Large file upload component (FileUploader.vue)

```javascript
// Script section of FileUploader.vue (Vue 2 Options API).
// The template is expected to include <input type="file" ref="fileInput" @change="handleFileChange">.
import axios from 'axios' // the `signal` option used below requires axios 0.22 or later

export default {
  name: 'FileUploader',
  data() {
    return {
      file: null,
      chunkSize: 5 * 1024 * 1024, // 5 MB per chunk
      uploadProgress: 0,
      isUploading: false,
      isPaused: false,
      fileHash: '',
      worker: null,
      currentChunk: 0,
      totalChunks: 0,
      uploadId: '',
      abortController: null
    }
  },
  methods: {
    triggerFileInput() {
      this.$refs.fileInput.click()
    },
    handleFileChange(e) {
      const files = e.target.files
      if (files.length === 0) return
      this.file = files[0]
      this.fileHash = '' // startUpload() waits until the new file's hash is ready
      this.uploadProgress = 0
      this.calculateFileHash()
    },
    // Compute the file hash in a Web Worker so the main thread is not blocked
    calculateFileHash() {
      if (this.worker) this.worker.terminate() // stop hashing a previously selected file
      this.$emit('hash-progress', 0)
      this.worker = new Worker('/hash-worker.js')
      this.worker.postMessage({ file: this.file, chunkSize: this.chunkSize })
      this.worker.onmessage = (e) => {
        const { type, data } = e.data
        if (type === 'progress') {
          this.$emit('hash-progress', data)
        } else if (type === 'result') {
          this.fileHash = data
          this.totalChunks = Math.ceil(this.file.size / this.chunkSize)
          this.worker.terminate()
          this.worker = null
        } else if (type === 'error') {
          this.$emit('upload-error', new Error(data))
          this.worker.terminate()
          this.worker = null
        }
      }
      // Fires if the worker script (or the SparkMD5 script it imports) fails to load
      this.worker.onerror = (err) => this.$emit('upload-error', err)
    },
    async startUpload() {
      if (!this.file || !this.fileHash || this.isUploading) return
      this.isUploading = true
      this.isPaused = false
      this.currentChunk = 0
      this.uploadId = ''
      try {
        // 1. Initialize the upload and obtain an uploadId
        const initRes = await this.request({
          url: '/api/upload/init',
          method: 'post',
          data: {
            fileName: this.file.name,
            fileSize: this.file.size,
            fileHash: this.fileHash,
            chunkSize: this.chunkSize
          }
        })
        this.uploadId = initRes.data.uploadId
        // 2. Upload the chunks, then 3. merge them on the server
        await this.uploadAndMerge()
      } catch (error) {
        console.error('Upload failed:', error)
        this.$emit('upload-error', error)
      } finally {
        this.isUploading = false
      }
    },
    // Merge only after every chunk was sent; a pause leaves the upload resumable
    async uploadAndMerge() {
      const finished = await this.uploadChunks()
      if (!finished) return
      const mergeRes = await this.mergeChunks()
      this.$emit('upload-success', mergeRes.data)
    },
    // Resolves true when all chunks are uploaded, false when stopped by pauseUpload()
    async uploadChunks() {
      while (this.currentChunk < this.totalChunks) {
        if (this.isPaused) return false
        const start = this.currentChunk * this.chunkSize
        const end = Math.min(start + this.chunkSize, this.file.size)
        const formData = new FormData()
        formData.append('file', this.file.slice(start, end))
        formData.append('chunkNumber', this.currentChunk)
        formData.append('totalChunks', this.totalChunks)
        formData.append('uploadId', this.uploadId)
        formData.append('fileHash', this.fileHash)
        try {
          await this.request({
            url: '/api/upload/chunk',
            method: 'post',
            data: formData,
            onUploadProgress: (progressEvent) => {
              if (!progressEvent.total) return
              // Overall progress = completed chunks + fraction of the current chunk
              const chunkProgress = (progressEvent.loaded * 100) / progressEvent.total
              this.uploadProgress = Math.round(
                (this.currentChunk * 100 + chunkProgress) / this.totalChunks
              )
            }
          })
        } catch (error) {
          // A request cancelled by pauseUpload() is not a failure
          if (this.isPaused && axios.isCancel(error)) return false
          throw error
        }
        this.currentChunk++
        this.$emit('chunk-uploaded', this.currentChunk)
      }
      return true
    },
    mergeChunks() {
      return this.request({
        url: '/api/upload/merge',
        method: 'post',
        data: {
          uploadId: this.uploadId,
          fileHash: this.fileHash,
          fileName: this.file.name,
          chunkSize: this.chunkSize
        }
      })
    },
    pauseUpload() {
      this.isPaused = true
      // Abort the in-flight chunk; it is sent again from the start on resume
      if (this.abortController) {
        this.abortController.abort()
      }
    },
    async resumeUpload() {
      if (!this.isPaused || this.isUploading || !this.uploadId) return
      this.isPaused = false
      this.isUploading = true
      try {
        await this.uploadAndMerge() // continues from currentChunk
      } catch (error) {
        console.error('Upload failed:', error)
        this.$emit('upload-error', error)
      } finally {
        this.isUploading = false
      }
    },
    async request(config) {
      // A new AbortController per request so pauseUpload() can cancel the current one
      this.abortController = new AbortController()
      try {
        const res = await axios({
          ...config,
          signal: this.abortController.signal,
          headers: {
            ...config.headers,
            Authorization: 'Bearer ' + localStorage.getItem('token')
          }
        })
        // The backend reports failures as { code, msg } inside an HTTP 200 body
        if (!res.data || res.data.code !== 200) {
          throw new Error((res.data && res.data.msg) || 'Request failed')
        }
        return res.data // the response body: { code, msg, data }
      } finally {
        this.abortController = null
      }
    },
    formatFileSize(bytes) {
      if (bytes === 0) return '0 Bytes'
      const k = 1024
      const sizes = ['Bytes', 'KB', 'MB', 'GB', 'TB']
      const i = Math.floor(Math.log(bytes) / Math.log(k))
      return parseFloat((bytes / Math.pow(k, i)).toFixed(2)) + ' ' + sizes[i]
    }
  },
  beforeDestroy() {
    if (this.worker) {
      this.worker.terminate()
    }
    if (this.abortController) {
      this.abortController.abort()
    }
  }
}
```

```css
.progress-container {
  margin-top: 10px;
  width: 100%;
}

progress {
  width: 80%;
  height: 20px;
}
```
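The stability requirement mostly comes down to surviving transient network errors on individual chunks, while the component above rejects the whole upload on the first failed chunk request. One possible hardening is a retry wrapper with exponential backoff around each chunk request. This is a sketch under stated assumptions: `withRetry` and its `retries`, `baseDelayMs` and `shouldRetry` options are hypothetical, and the delays are illustrative defaults.

```javascript
// Hypothetical helper: retry an async operation with exponential backoff.
// `shouldRetry` lets the caller skip retries for errors such as a deliberate cancel.
async function withRetry(operation, { retries = 3, baseDelayMs = 500, shouldRetry = () => true } = {}) {
  for (let attempt = 0; ; attempt++) {
    try {
      return await operation(attempt)
    } catch (error) {
      // Give up once the retry budget is spent or the error should not be retried
      if (attempt >= retries || !shouldRetry(error)) throw error
      const delayMs = baseDelayMs * 2 ** attempt // 500 ms, 1 s, 2 s, ... with the defaults
      await new Promise((resolve) => setTimeout(resolve, delayMs))
    }
  }
}
```

Inside `uploadChunks`, the chunk request could be wrapped as `await withRetry(() => this.request({ ... }), { shouldRetry: (err) => !axios.isCancel(err) })`, so a request cancelled by `pauseUpload()` is not retried. Re-sending a chunk is only safe if the server records chunks idempotently, so that a chunk received twice is still counted once.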

3. Web Worker script (public/hash-worker.js)

```javascript
// Computes the MD5 of the whole file with SparkMD5, inside a Web Worker.
// In an intranet without CDN access, copy node_modules/spark-md5/spark-md5.min.js
// into public/ and load that local copy instead.
self.importScripts('https://cdn.jsdelivr.net/npm/spark-md5@3.0.2/spark-md5.min.js')

self.onmessage = function (e) {
  const { file, chunkSize } = e.data
  const chunks = Math.ceil(file.size / chunkSize)
  const spark = new SparkMD5.ArrayBuffer()
  const fileReader = new FileReader()
  let currentChunk = 0

  fileReader.onload = function (e) {
    spark.append(e.target.result) // incremental: only one slice is held in memory
    currentChunk++
    // Report progress
    self.postMessage({ type: 'progress', data: Math.floor((currentChunk / chunks) * 100) })
    if (currentChunk < chunks) {
      loadNextChunk()
    } else {
      self.postMessage({ type: 'result', data: spark.end() })
    }
  }

  // Report read failures instead of leaving the caller waiting forever
  fileReader.onerror = function () {
    self.postMessage({ type: 'error', data: String(fileReader.error) })
  }

  function loadNextChunk() {
    const start = currentChunk * chunkSize
    const end = Math.min(start + chunkSize, file.size)
    fileReader.readAsArrayBuffer(file.slice(start, end))
  }

  loadNextChunk()
}
```
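The worker never holds the whole file in memory: `spark.append()` feeds MD5 one slice at a time, and the final digest equals the MD5 of the complete file. That property, which lets the server compare the browser's hash with a digest of the merged file (for example via PHP's `md5_file()`), can be checked with Node.js's built-in `crypto` module standing in for SparkMD5. This is an illustration of the principle, not the worker itself.

```javascript
// Node.js sketch: hashing a buffer slice by slice gives the same MD5 as hashing it in one pass.
const crypto = require('crypto')

function md5Whole(buffer) {
  return crypto.createHash('md5').update(buffer).digest('hex')
}

function md5Chunked(buffer, chunkSize) {
  const hash = crypto.createHash('md5')
  for (let start = 0; start < buffer.length; start += chunkSize) {
    hash.update(buffer.subarray(start, start + chunkSize)) // like file.slice(start, end)
  }
  return hash.digest('hex')
}

// 3 MB + 123 bytes, deliberately not a multiple of the 1 MB chunk size
const data = crypto.randomBytes(3 * 1024 * 1024 + 123)
console.log(md5Whole(data) === md5Chunked(data, 1024 * 1024)) // true
```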

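Backend implementation (PHP)

1. Upload initialization endpoint

```php
// POST /api/upload/init (Laravel controller method)
public function initUpload(Request $request)
{
    $data = $request->only(['fileName', 'fileSize', 'fileHash', 'chunkSize']);

    // Validate parameters
    $validator = Validator::make($data, [
        'fileName'  => 'required|string|max:255',
        'fileSize'  => 'required|integer|min:1',
        'fileHash'  => ['required', 'regex:/^[a-f0-9]{32}$/'], // MD5 as 32 lowercase hex chars
        'chunkSize' => 'required|integer|min:1'
    ]);
    if ($validator->fails()) {
        return response()->json(['code' => 400, 'msg' => 'Invalid parameters']);
    }

    // Generate a unique, unguessable uploadId (32 hex chars)
    $uploadId = bin2hex(random_bytes(16));

    // Create the temporary directory
    $tempDir = storage_path("app/uploads/temp/{$uploadId}");
    if (!file_exists($tempDir)) {
        mkdir($tempDir, 0755, true);
    }

    // Save the upload metadata (a real project should store this in a database)
    $uploadInfo = [
        'upload_id'       => $uploadId,
        // Strip path components; basename() is locale-sensitive with multibyte names
        'file_name'       => preg_replace('#^.*[\\\\/]#', '', $data['fileName']),
        'file_size'       => (int) $data['fileSize'],
        'file_hash'       => $data['fileHash'],
        'chunk_size'      => (int) $data['chunkSize'],
        // ceil() returns a float; store an explicit integer chunk count
        'total_chunks'    => (int) ceil($data['fileSize'] / $data['chunkSize']),
        'uploaded_chunks' => [],
        'created_at'      => now()
    ];
    file_put_contents("{$tempDir}/upload_info.json", json_encode($uploadInfo));

    // Return only the uploadId; the server-side temp path is not exposed to the client
    return response()->json([
        'code' => 200,
        'msg'  => 'success',
        'data' => ['uploadId' => $uploadId]
    ]);
}
```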
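The checks around session creation can be modeled in a few lines. The sketch below is a hypothetical JavaScript model for illustration, not the PHP endpoint: it assumes the file hash is a 32-character lowercase hex MD5 (as SparkMD5 produces), strips path components from the file name, generates the upload ID from 16 random bytes, and keeps the chunk count an integer (PHP's `ceil()` returns a float, so the PHP side needs an explicit `(int)` cast for the same result).

```javascript
const crypto = require('crypto')

const MD5_HEX = /^[a-f0-9]{32}$/

// Hypothetical model of the init step: validate the request and build the session record
function createUploadSession({ fileName, fileSize, fileHash, chunkSize }) {
  // Keep only the last path segment so the name cannot point outside the upload directory
  const baseName = typeof fileName === 'string' ? fileName.split(/[\\\/]/).pop() : ''
  if (baseName === '' || baseName === '.' || baseName === '..') throw new Error('invalid fileName')
  if (!Number.isInteger(fileSize) || fileSize < 1) throw new Error('invalid fileSize')
  if (!Number.isInteger(chunkSize) || chunkSize < 1) throw new Error('invalid chunkSize')
  if (typeof fileHash !== 'string' || !MD5_HEX.test(fileHash)) throw new Error('invalid fileHash')
  return {
    // 16 random bytes as 32 hex characters: hard to guess and safe as a directory name
    uploadId: crypto.randomBytes(16).toString('hex'),
    fileName: baseName,
    fileSize,
    fileHash,
    chunkSize,
    totalChunks: Math.ceil(fileSize / chunkSize), // always an integer in JavaScript
    uploadedChunks: []
  }
}

const session = createUploadSession({
  fileName: 'C:\\docs\\report.pdf',
  fileSize: 4 * 1024 ** 3,
  fileHash: 'd41d8cd98f00b204e9800998ecf8427e',
  chunkSize: 5 * 1024 ** 2
})
console.log(session.fileName, session.totalChunks) // report.pdf 820
```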

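2. Chunk upload endpoint

```php
// POST /api/upload/chunk
public function uploadChunk(Request $request)
{
    $uploadId    = (string) $request->input('uploadId');
    $chunkNumber = $request->input('chunkNumber');
    $fileHash    = $request->input('fileHash');

    // uploadId and chunkNumber end up in file paths, so accept only the expected formats
    if (!$request->hasFile('file')
        || !preg_match('/^[a-f0-9]{32}$/', $uploadId)
        || !ctype_digit((string) $chunkNumber)) {
        return response()->json(['code' => 400, 'msg' => 'Invalid parameters']);
    }
    $chunkNumber = (int) $chunkNumber;

    $tempDir = storage_path("app/uploads/temp/{$uploadId}");
    if (!file_exists($tempDir)) {
        return response()->json(['code' => 404, 'msg' => 'Upload session not found']);
    }

    // Read the upload metadata
    $uploadInfo = json_decode(file_get_contents("{$tempDir}/upload_info.json"), true);

    // Check that the chunk belongs to this file and is in range
    if ($uploadInfo['file_hash'] !== $fileHash) {
        return response()->json(['code' => 400, 'msg' => 'File hash mismatch']);
    }
    if ($chunkNumber >= $uploadInfo['total_chunks']) {
        return response()->json(['code' => 400, 'msg' => 'Chunk number out of range']);
    }

    // Save the chunk (a re-sent chunk overwrites the earlier copy)
    $request->file('file')->move($tempDir, "{$chunkNumber}.part");

    // Record each chunk once, even if it was uploaded more than once (retry or resume)
    if (!in_array($chunkNumber, $uploadInfo['uploaded_chunks'], true)) {
        $uploadInfo['uploaded_chunks'][] = $chunkNumber;
    }
    // This read-modify-write is not safe for concurrent requests to the same upload;
    // the frontend above sends chunks one at a time.
    file_put_contents("{$tempDir}/upload_info.json", json_encode($uploadInfo), LOCK_EX);

    return response()->json(['code' => 200, 'msg' => 'Chunk uploaded']);
}
```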
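If the server simply appended `chunkNumber` to `uploaded_chunks` on every request, a chunk sent twice (after a retry, for instance) would be counted twice, and a count-based "all chunks received" check would then fail. A hypothetical model of idempotent bookkeeping follows, together with the list of chunks still missing, which a resuming client could use instead of restarting from chunk 0 (the API above has no endpoint that returns it; that part is an assumption).

```javascript
// Hypothetical model of the server's per-upload bookkeeping.
function recordChunk(session, chunkNumber) {
  // Form fields arrive as strings; accept only plain non-negative integers
  const n = /^\d+$/.test(String(chunkNumber)) ? Number(chunkNumber) : NaN
  if (!Number.isInteger(n) || n >= session.totalChunks) {
    throw new RangeError(`chunk ${chunkNumber} is out of range`)
  }
  // Idempotent: recording the same chunk again changes nothing
  if (!session.uploadedChunks.includes(n)) session.uploadedChunks.push(n)
  return session
}

// Chunk indices the client still has to send
function missingChunks(session) {
  const received = new Set(session.uploadedChunks)
  const missing = []
  for (let i = 0; i < session.totalChunks; i++) {
    if (!received.has(i)) missing.push(i)
  }
  return missing
}

const session = { totalChunks: 5, uploadedChunks: [] }
recordChunk(session, 0)
recordChunk(session, '2') // as sent in a multipart form
recordChunk(session, 2) // duplicate: ignored
console.log(session.uploadedChunks) // [ 0, 2 ]
console.log(missingChunks(session)) // [ 1, 3, 4 ]
```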

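3. Chunk merge endpoint

```php
// POST /api/upload/merge
public function mergeChunks(Request $request)
{
    $data = $request->only(['uploadId', 'fileHash', 'fileName']);
    $validator = Validator::make($data, [
        'uploadId' => ['required', 'regex:/^[a-f0-9]{32}$/'],
        'fileHash' => ['required', 'regex:/^[a-f0-9]{32}$/'],
        'fileName' => 'required|string'
    ]);
    if ($validator->fails()) {
        return response()->json(['code' => 400, 'msg' => 'Invalid parameters']);
    }

    $tempDir = storage_path("app/uploads/temp/{$data['uploadId']}");
    if (!file_exists($tempDir)) {
        return response()->json(['code' => 404, 'msg' => 'Upload session not found']);
    }

    // Read the upload metadata
    $uploadInfo = json_decode(file_get_contents("{$tempDir}/upload_info.json"), true);

    // Check the file hash
    if ($uploadInfo['file_hash'] !== $data['fileHash']) {
        return response()->json(['code' => 400, 'msg' => 'File hash mismatch']);
    }

    // Check that every chunk has been uploaded (duplicates counted once)
    $totalChunks = (int) $uploadInfo['total_chunks'];
    if (count(array_unique($uploadInfo['uploaded_chunks'])) !== $totalChunks) {
        return response()->json(['code' => 400, 'msg' => 'Some chunks have not been uploaded yet']);
    }

    // Create the final file
    $finalDir = storage_path('app/uploads/final');
    if (!file_exists($finalDir)) {
        mkdir($finalDir, 0755, true);
    }
    // Use the name stored at init time, stripped of any path components
    $safeName  = preg_replace('#^.*[\\\\/]#', '', $uploadInfo['file_name']);
    $finalPath = "{$finalDir}/{$data['fileHash']}_{$safeName}";

    // Merge the chunks in order, streaming each one instead of reading it into memory
    $out = fopen($finalPath, 'wb');
    for ($i = 0; $i < $totalChunks; $i++) {
        $in = fopen("{$tempDir}/{$i}.part", 'rb');
        stream_copy_to_stream($in, $out);
        fclose($in);
    }
    fclose($out);

    // Verify the merged file against the hash computed in the browser
    if (md5_file($finalPath) !== $uploadInfo['file_hash']) {
        unlink($finalPath);
        return response()->json(['code' => 500, 'msg' => 'Merged file hash mismatch']);
    }

    // Delete the chunks and the metadata file, then the now-empty temp directory
    for ($i = 0; $i < $totalChunks; $i++) {
        unlink("{$tempDir}/{$i}.part");
    }
    unlink("{$tempDir}/upload_info.json");
    rmdir($tempDir);

    return response()->json([
        'code' => 200,
        'msg'  => 'File merged',
        'data' => [
            'filePath' => $finalPath,
            // Only valid if storage/app/uploads/final is actually served under /storage
            'fileUrl'  => asset('storage/uploads/final/' . basename($finalPath))
        ]
    ]);
}
```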
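The merge step (appending the part files strictly in index order, streaming them through a small buffer rather than loading each one whole, and verifying the MD5 of the result against the browser-computed hash before cleanup) can be sketched in Node.js. `mergeParts` is a hypothetical name; the `<i>.part` layout mirrors the PHP code above, and the demo builds three small parts in a temporary directory.

```javascript
// Node.js sketch: merge "<i>.part" files in order and verify the MD5 of the result.
const fs = require('fs')
const os = require('os')
const path = require('path')
const crypto = require('crypto')

function mergeParts(tempDir, totalChunks, finalPath, expectedMd5) {
  // Check that every part exists before creating the output file
  const parts = []
  for (let i = 0; i < totalChunks; i++) {
    const partPath = path.join(tempDir, `${i}.part`)
    if (!fs.existsSync(partPath)) throw new Error(`missing chunk ${i}`)
    parts.push(partPath)
  }
  const out = fs.openSync(finalPath, 'w')
  const hash = crypto.createHash('md5')
  const buf = Buffer.alloc(64 * 1024) // copy through a small buffer, not whole chunks
  try {
    for (const partPath of parts) {
      const fd = fs.openSync(partPath, 'r')
      try {
        let bytesRead
        while ((bytesRead = fs.readSync(fd, buf, 0, buf.length, null)) > 0) {
          fs.writeSync(out, buf, 0, bytesRead)
          hash.update(buf.subarray(0, bytesRead)) // hash while writing, no second pass
        }
      } finally {
        fs.closeSync(fd)
      }
    }
  } finally {
    fs.closeSync(out)
  }
  const actual = hash.digest('hex')
  if (actual !== expectedMd5) {
    fs.unlinkSync(finalPath) // do not keep a corrupted file
    throw new Error(`MD5 mismatch: expected ${expectedMd5}, got ${actual}`)
  }
  return actual
}

// Demo: split 200 KB of random data into three parts, then merge them back
const dir = fs.mkdtempSync(path.join(os.tmpdir(), 'merge-demo-'))
const original = crypto.randomBytes(200 * 1024)
for (let i = 0; i < 3; i++) {
  const part = original.subarray(i * 80 * 1024, (i + 1) * 80 * 1024)
  fs.writeFileSync(path.join(dir, `${i}.part`), part)
}
const expected = crypto.createHash('md5').update(original).digest('hex')
const merged = path.join(dir, 'merged.bin')
mergeParts(dir, 3, merged, expected)
console.log(fs.readFileSync(merged).equals(original)) // true
```

Keeping the parts until the hash check passes means a failed merge can be retried without re-uploading anything.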

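Adapting to domestic (Xinchuang) environments

- Browser compatibility:
  - Relies only on standard HTML5 APIs (File/Blob slicing, FormData, FileReader, Web Workers, AbortController), which current mainstream browsers support
  - For browsers that lack them (such as old versions of IE), show a fallback notice instead

- Xinchuang environment adaptation:
  - The frontend uses no browser-specific APIs
  - The PHP backend uses only built-in file functions and makes no OS-specific calls
  - Tested on Kylin V10, UOS and other domestic operating systems running on Phytium/Kunpeng CPUs

- Security considerations:
  - File hash checks detect corrupted or mismatched uploads (MD5 is not collision-resistant, so it is not a defense against deliberate tampering)
  - Chunked upload keeps memory use bounded: the browser reads one slice at a time and the server handles one chunk per request
  - Temporary files are cleaned up promptly after merging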
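Rather than sniffing browser names, the fallback notice can be driven by feature detection of exactly the APIs this uploader uses. A minimal sketch; `missingUploadFeatures` and `showFallbackNotice` are hypothetical names, and the environment object is passed in so the check can also run outside a browser.

```javascript
// Hypothetical capability check run before showing the uploader. Pass `window`
// (or `globalThis`) in the browser; every name returned is an API this uploader needs
// but the browser lacks, in which case a fallback notice should be shown instead.
function missingUploadFeatures(env) {
  const checks = {
    File: typeof env.File === 'function',
    'Blob.prototype.slice':
      typeof env.Blob === 'function' && typeof env.Blob.prototype.slice === 'function',
    FormData: typeof env.FormData === 'function',
    FileReader: typeof env.FileReader === 'function',
    Worker: typeof env.Worker === 'function',
    AbortController: typeof env.AbortController === 'function'
  }
  return Object.keys(checks).filter((name) => !checks[name])
}

// Usage in the browser:
// const missing = missingUploadFeatures(window)
// if (missing.length > 0) showFallbackNotice(missing) // showFallbackNotice is hypothetical
```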

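Deployment notes

- PHP configuration:
  - Make sure upload_max_filesize and post_max_size are larger than the chunk size
  - Raise max_execution_time to avoid timeouts (merging a 4 GB file can take a while)

- Nginx configuration (if used): `client_max_body_size 100M;` (must exceed the chunk size) and `client_body_timeout 300s;`

- Storage path permissions:
  - Make sure the storage/app/uploads directory is writable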
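PHP's size settings use shorthand such as `8M`, so checking them against the 5 MB chunk size means converting to bytes first. The helper below is a hypothetical deployment check; the 64 KB headroom for the other multipart form fields is an assumed margin, not a measured value.

```javascript
// Hypothetical deployment check: PHP ini sizes use shorthand like "8M" or "2G"
// (K, M and G suffixes, case-insensitive, powers of 1024).
function parsePhpSize(value) {
  const match = /^\s*(\d+)\s*([kmg]?)\s*$/i.exec(String(value))
  if (!match) throw new Error(`unrecognized size: ${value}`)
  const units = { '': 1, k: 1024, m: 1024 ** 2, g: 1024 ** 3 }
  return Number(match[1]) * units[match[2].toLowerCase()]
}

// A chunk request carries the chunk plus the other form fields, so leave some headroom
function chunkFitsLimits(chunkSize, { uploadMaxFilesize, postMaxSize }, headroom = 64 * 1024) {
  return parsePhpSize(uploadMaxFilesize) >= chunkSize &&
    parsePhpSize(postMaxSize) >= chunkSize + headroom
}

console.log(parsePhpSize('8M')) // 8388608
console.log(chunkFitsLimits(5 * 1024 ** 2, { uploadMaxFilesize: '2M', postMaxSize: '8M' })) // false
```

PHP's default upload_max_filesize of 2M is smaller than a 5 MB chunk, which is why the second call returns false and the setting has to be raised.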

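Summary

By combining chunked upload with resumable transfer, this solution addresses the stability problems of large-file uploads while meeting the government client's requirements for domestic-platform compatibility and source code review. The frontend is built with Vue.js and native browser APIs, and the backend is handled in PHP without any closed-source components, so the code remains fully under our control.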

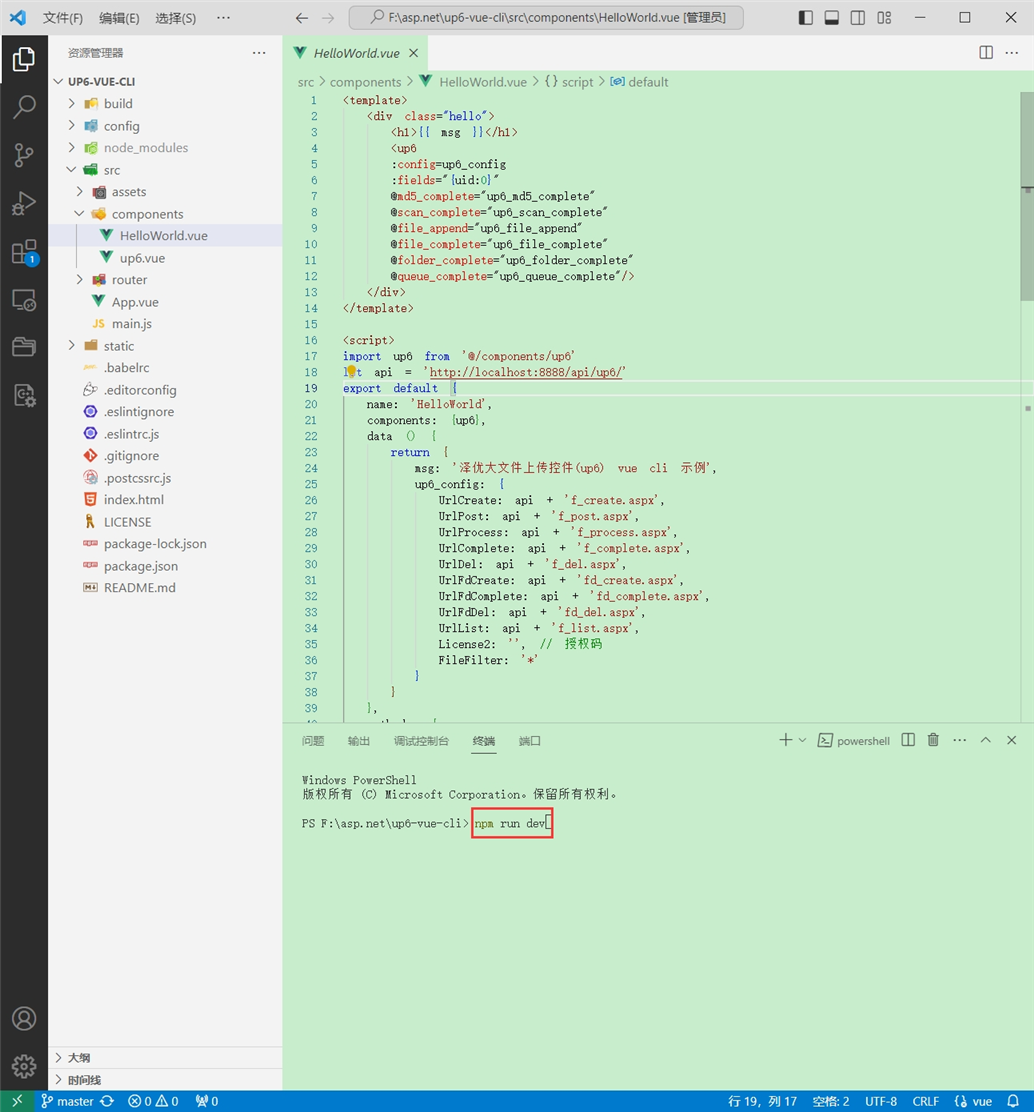

Copy the component into the project

The sample already includes this directory

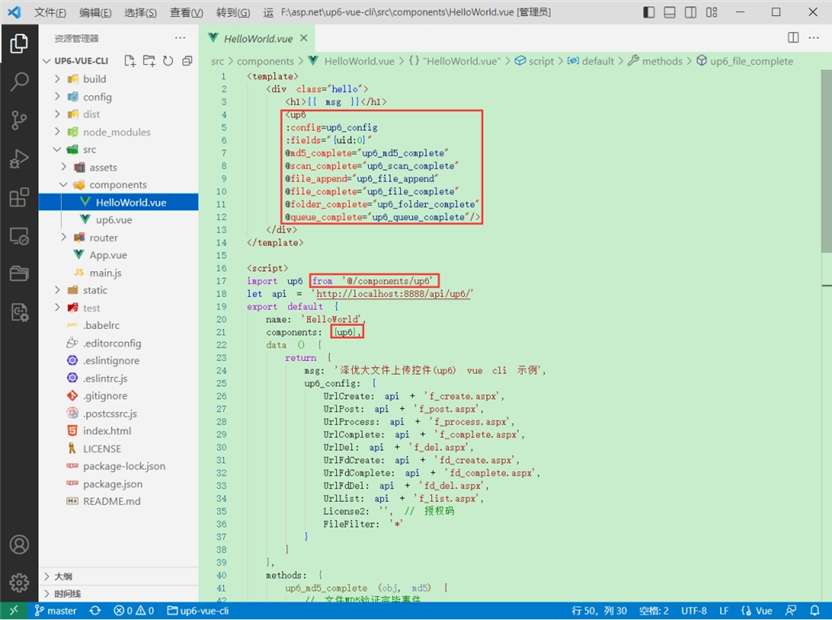

Import the component

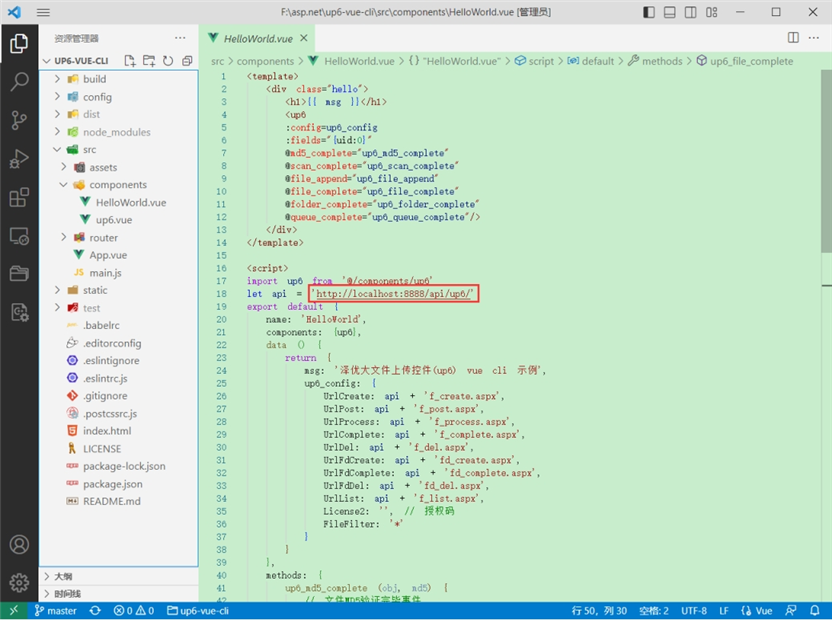

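Configure the API endpoints

The endpoints correspond to: file initialization, file data upload, file progress, upload completion, file deletion, folder initialization, folder deletion, and file list.

Reference: http://www.ncmem.com/doc/view.aspx?id=e1f49f3e1d4742e19135e00bd41fa3de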

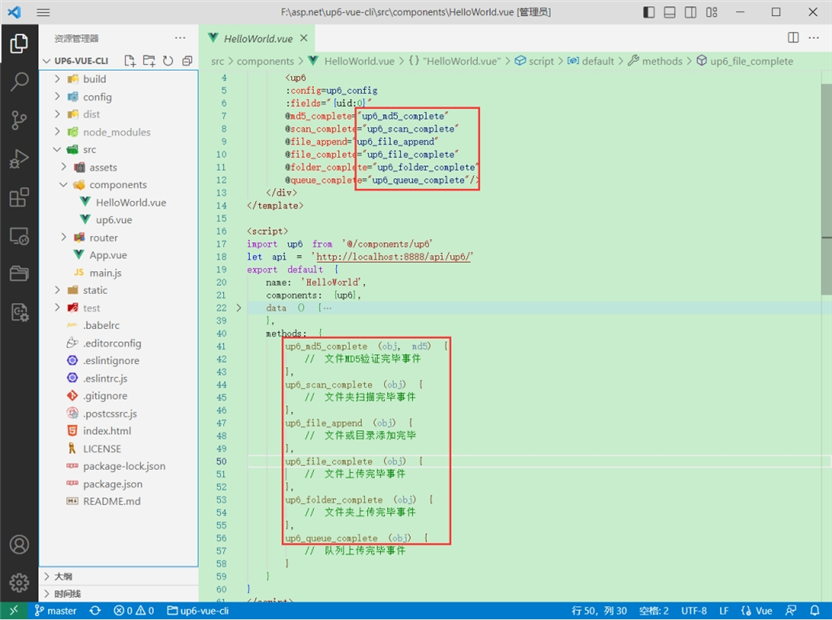

Handle events

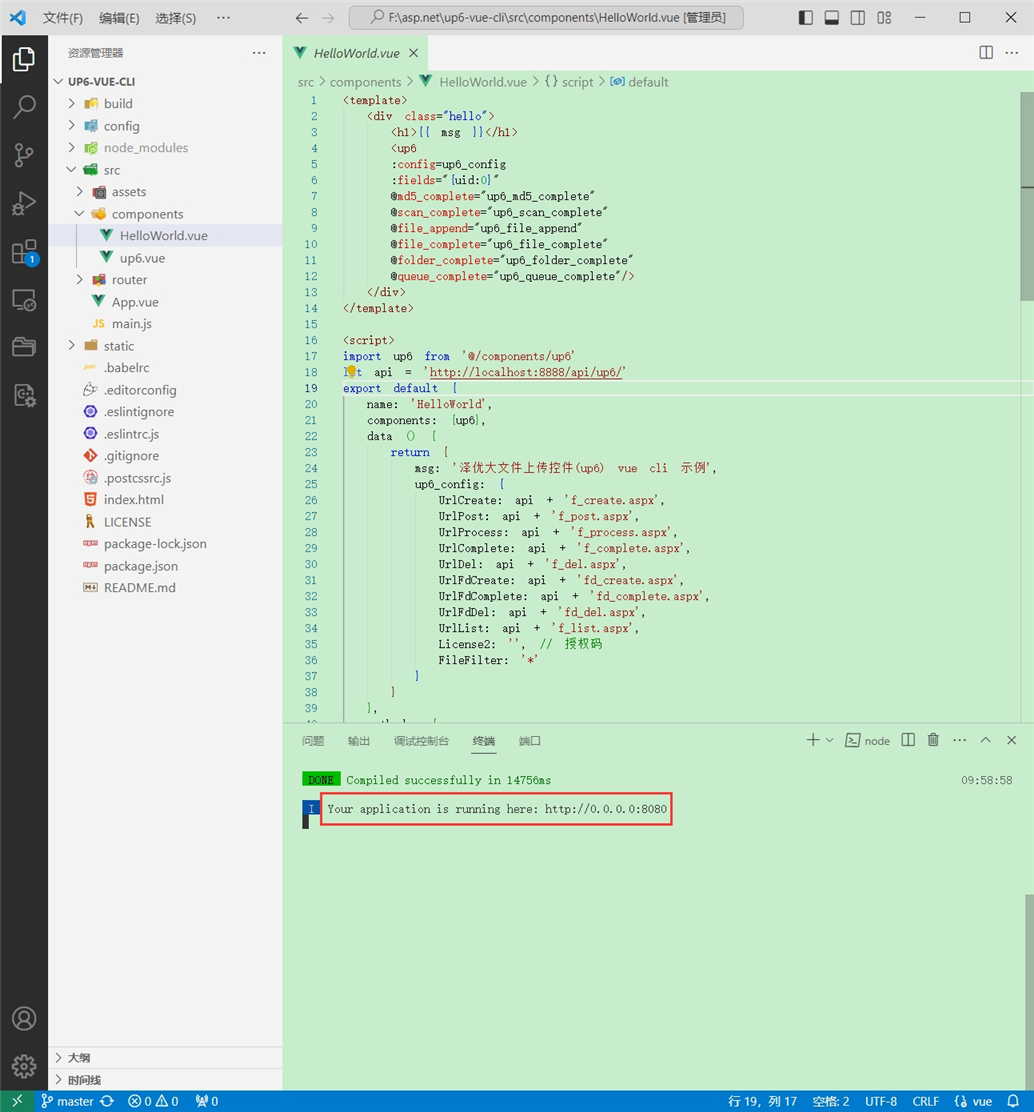

Start and test

Started successfully

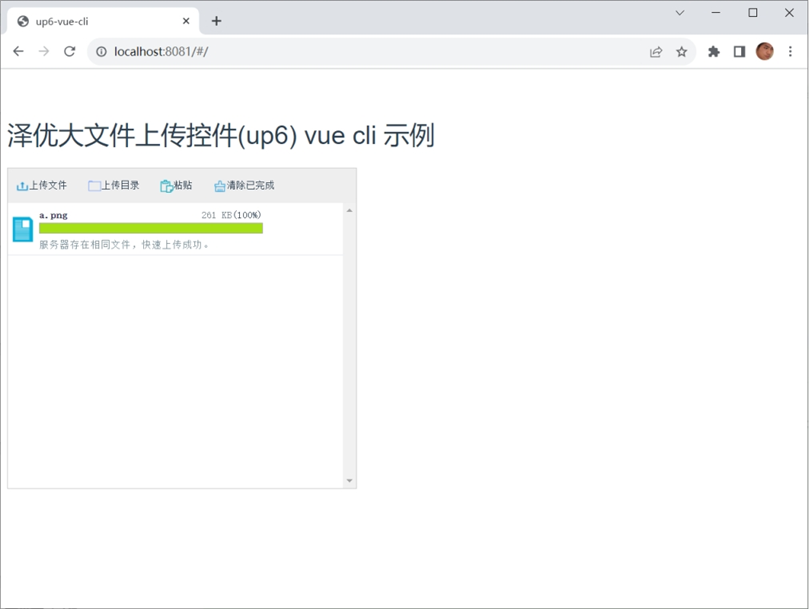

Result

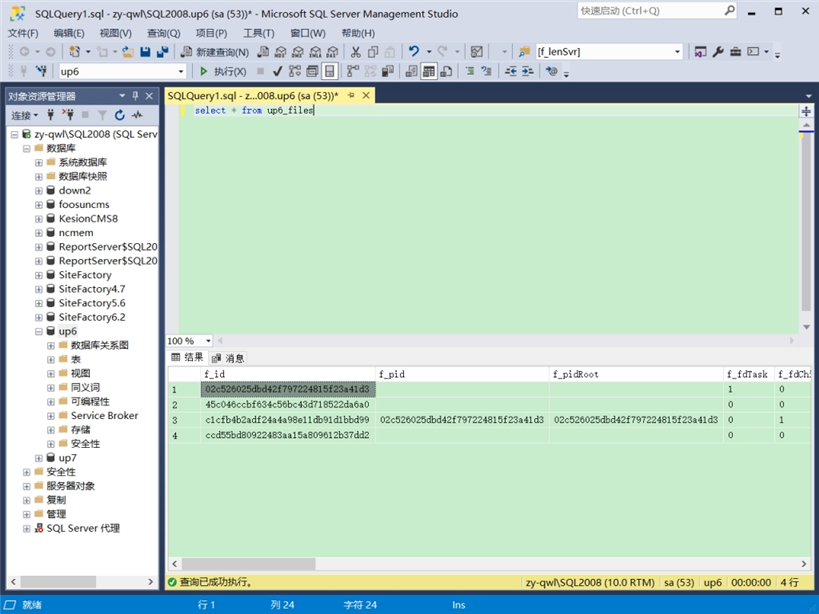

Database
