I. Create the hadoop user and directories

1. Create the user

[root@hadoop000 ~]# useradd hadoop

2. Set the password

[root@hadoop000 ~]# passwd hadoop

3. Switch to the hadoop user

[root@hadoop000 ~]# su - hadoop

[hadoop@hadoop000 ~]$ pwd

/home/hadoop

4. Create the directories

[hadoop@hadoop000 ~]$ mkdir app data software lib source

[hadoop@hadoop000 ~]$ ll

total 20

drwxrwxr-x 2 hadoop hadoop 4096 Sep  6 09:59 app

drwxrwxr-x 2 hadoop hadoop 4096 Sep  6 09:59 data

drwxrwxr-x 2 hadoop hadoop 4096 Sep  6 09:59 lib

drwxrwxr-x 2 hadoop hadoop 4096 Sep  6 09:59 software

drwxrwxr-x 2 hadoop hadoop 4096 Sep  6 09:59 source

II. Install the software required for the build (JDK/Maven/Scala/Git)

http://spark.apache.org/docs/latest/building-spark.html

Building Apache Spark

Apache Maven

The Maven-based build is the build of reference for Apache Spark. Building Spark using Maven requires Maven 3.3.9 or newer and Java 8+. Note that support for Java 7 was removed as of Spark 2.2.0.

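According to the official documentation, building Spark 2.2.0 requires JDK 1.8+ and Maven 3.3.9 or newer. **Note: the JDK, Maven, Scala and other packages uploaded to the server are all installed as the hadoop user, so run chown -R hadoop:hadoop xxx.tar.gz (as root) to give the hadoop user ownership of each package.**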

1. Install JDK 1.8

JDK 1.8 download page:

http://www.oracle.com/technetwork/java/javase/downloads/jdk8-downloads-2133151.html

Extract the JDK into the app directory in the hadoop user's home:

[hadoop@hadoop000 software]$ tar -zxvf jdk-8u144-linux-x64.tar.gz -C ~/app/

[hadoop@hadoop000 jdk1.8.0_144]$ pwd

/home/hadoop/app/jdk1.8.0_144

Edit ~/.bash_profile and add the following two lines:

export JAVA_HOME=/home/hadoop/app/jdk1.8.0_144

export PATH=$JAVA_HOME/bin:$PATH

Run source ~/.bash_profile so that the new environment variables take effect.
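Repeating this edit (for example while redoing a step, or for the Maven and Scala lines later) can leave duplicate export lines in ~/.bash_profile. A minimal sketch, assuming bash and grep with -x/-F support: append_once is a hypothetical helper that appends a line only if the file does not already contain exactly that line.

```shell
#!/usr/bin/env bash
# append_once FILE LINE: append LINE to FILE unless FILE already contains
# exactly that line (hypothetical helper, not part of Hadoop/Spark tooling).
append_once() {
  local file="$1" line="$2"
  # -q: no output, -x: whole-line match, -F: LINE is a fixed string.
  # A missing FILE counts as "not found", so the line gets appended.
  if ! grep -qxF -- "$line" "$file" 2>/dev/null; then
    printf '%s\n' "$line" >> "$file"
  fi
}

# Example (single quotes keep $JAVA_HOME and $PATH literal in the file):
#   append_once ~/.bash_profile 'export JAVA_HOME=/home/hadoop/app/jdk1.8.0_144'
#   append_once ~/.bash_profile 'export PATH=$JAVA_HOME/bin:$PATH'
#   source ~/.bash_profile
```

The single quotes matter: with double quotes the current value of PATH would be expanded and frozen into the file.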

Verify the configuration:

[hadoop@hadoop000 jdk1.8.0_144]$ which java

~/app/jdk1.8.0_144/bin/java

[hadoop@hadoop000 jdk1.8.0_144]$ java -version

java version "1.8.0_144"

Java(TM) SE Runtime Environment (build 1.8.0_144-b01)

Java HotSpot(TM) 64-Bit Server VM (build 25.144-b01, mixed mode)

2. Install Maven 3.3.9

Maven 3.3.9 download page:

https://mirrors.tuna.tsinghua.edu.cn/apache//maven/maven-3/3.3.9/binaries/

Extract the archive:

[hadoop@hadoop000 maven-3.3.9]$ unzip apache-maven-3.3.9-bin.zip

Move it to the app directory:

[hadoop@hadoop000 maven-3.3.9]$ mv apache-maven-3.3.9 ~/app/

Rename the directory (inside ~/app):

[hadoop@hadoop000 maven-3.3.9]$ mv apache-maven-3.3.9/ maven-3.3.9/

Check the path:

[hadoop@hadoop000 maven-3.3.9]$ pwd

/home/hadoop/app/maven-3.3.9

Edit the environment variables (remember to source the file so that they take effect):

[hadoop@hadoop000 maven-3.3.9]$ vim ~/.bash_profile

export MAVEN_HOME=/home/hadoop/app/maven-3.3.9

export PATH=$MAVEN_HOME/bin:$PATH

Verify:

[hadoop@hadoop000 maven-3.3.9]$ which mvn

~/app/maven-3.3.9/bin/mvn

[hadoop@hadoop000 maven-3.3.9]$ mvn -version

Apache Maven 3.3.9 (bb52d8502b132ec0a5a3f4c09453c07478323dc5; 2015-11-11T00:41:47+08:00)

Maven home: /home/hadoop/app/maven-3.3.9

Java version: 1.8.0_144, vendor: Oracle Corporation

Java home: /home/hadoop/app/jdk1.8.0_144/jre

Default locale: zh_CN, platform encoding: UTF-8

OS name: "linux", version: "2.6.32-431.el6.x86_64", arch: "amd64", family: "unix"
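With both the JDK and Maven on the PATH, the minimum versions from the Spark docs (Java 8+, Maven 3.3.9+) can also be checked by a script. A minimal sketch, assuming bash and GNU sort with -V (version sort); version_ge and check_tool are hypothetical helper names, and the parsing relies on the output format of java -version and mvn -version shown above.

```shell
#!/usr/bin/env bash
# version_ge A B: succeed if version A >= version B (uses GNU sort -V).
version_ge() {
  [ "$(printf '%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

# check_tool NAME FOUND_VERSION MIN_VERSION: print one status line.
check_tool() {
  if [ -z "$2" ]; then
    echo "$1: not found"
  elif version_ge "$2" "$3"; then
    echo "$1: $2 OK (>= $3)"
  else
    echo "$1: $2 is too old (need >= $3)"
  fi
}

# Extract the version strings; they stay empty if a tool is not installed.
# java -version prints to stderr, e.g.: java version "1.8.0_144"
java_ver=$(java -version 2>&1 | awk -F'"' '/version/ {print $2; exit}')
# mvn -version prints, e.g.: Apache Maven 3.3.9 (...)
mvn_ver=$(mvn -version 2>/dev/null | awk '/^Apache Maven/ {print $3; exit}')

check_tool java "$java_ver" 1.8
check_tool maven "$mvn_ver" 3.3.9
```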

3. Install Scala

Scala will be needed for working with Spark later, so install it now. Scala 2.11.7 download page:

http://www.scala-lang.org/download/2.11.7.html

Extract it into the app directory:

[hadoop@hadoop000 software]$ tar -zxvf scala-2.11.7.tgz -C ~/app/

Check the path:

[hadoop@hadoop000 app]$ cd scala-2.11.7/

[hadoop@hadoop000 scala-2.11.7]$ pwd

/home/hadoop/app/scala-2.11.7

Configure the environment variables (remember to source the file so that they take effect):

[hadoop@hadoop000 scala-2.11.7]$ vim ~/.bash_profile

export SCALA_HOME=/home/hadoop/app/scala-2.11.7

export PATH=$SCALA_HOME/bin:$PATH

Verify:

[hadoop@hadoop000 scala-2.11.7]$ scala -version

Scala code runner version 2.11.7 -- Copyright 2002-2013, LAMP/EPFL

[hadoop@hadoop000 scala-2.11.7]$ scala

Welcome to Scala version 2.11.7 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_144).

Type in expressions to have them evaluated.

Type :help for more information.

scala>

4. Install Git (optional)

As root, add the line hadoop ALL=(ALL) ALL to /etc/sudoers:

[root@hadoop000 bin]# vim /etc/sudoers

#Allow root to run any commands anywhere

root ALL=(ALL) ALL

hadoop ALL=(ALL) ALL

Save and quit with :wq! (the ! forces the write, because /etc/sudoers is read-only).

As the hadoop user, install git with sudo:

[hadoop@hadoop000 scala-2.11.7]$ sudo yum -y install git

Loaded plugins: fastestmirror, refresh-packagekit, security

Loading mirror speeds from cached hostfile

* base: mirrors.sohu.com

* extras: mirrors.sohu.com

* updates: mirrors.sohu.com

base | 3.7 kB 00:00

base/primary_db | 4.7 MB 00:07

http://192.168.95.10/iso/repodata/repomd.xml: [Errno 14] PYCURL ERROR 7 - "couldn't connect to host"

Trying other mirror.

Error: Cannot retrieve repository metadata (repomd.xml) for repository: centos-iso. Please verify its path and try again

[hadoop@hadoop000 scala-2.11.7]$ sudo yum -y install git

Loaded plugins: fastestmirror, refresh-packagekit, security

Loading mirror speeds from cached hostfile

* base: mirrors.sohu.com

* extras: mirrors.sohu.com

* updates: mirrors.sohu.com

extras | 3.4 kB 00:00

extras/primary_db | 29 kB 00:00

updates | 3.4 kB 00:00

updates/primary_db | 3.1 MB 00:07

Setting up Install Process

Resolving Dependencies

--> Running transaction check

---> Package git.x86_64 0:1.7.1-9.el6_9 will be installed

--> Processing Dependency: perl-Git = 1.7.1-9.el6_9 for package: git-1.7.1-9.el6_9.x86_64

--> Processing Dependency: perl(Git) for package: git-1.7.1-9.el6_9.x86_64

--> Processing Dependency: perl(Error) for package: git-1.7.1-9.el6_9.x86_64

--> Running transaction check

---> Package perl-Error.noarch 1:0.17015-4.el6 will be installed

---> Package perl-Git.noarch 0:1.7.1-9.el6_9 will be installed

--> Finished Dependency Resolution

Dependencies Resolved

========================================================================================================================================

Package Arch Version Repository Size

========================================================================================================================================

Installing:

git x86_64 1.7.1-9.el6_9 updates 4.6 M

Installing for dependencies:

perl-Error noarch 1:0.17015-4.el6 base 29 k

perl-Git noarch 1.7.1-9.el6_9 updates 29 k

Transaction Summary

========================================================================================================================================

Install 3 Package(s)

Total download size: 4.7 M

Installed size: 15 M

Downloading Packages:

(1/3): git-1.7.1-9.el6_9.x86_64.rpm | 4.6 MB 00:08

(2/3): perl-Error-0.17015-4.el6.noarch.rpm | 29 kB 00:00

(3/3): perl-Git-1.7.1-9.el6_9.noarch.rpm | 29 kB 00:00

----------------------------------------------------------------------------------------------------------------------------------------

Total 560 kB/s | 4.7 MB 00:08

warning: rpmts_HdrFromFdno: Header V3 RSA/SHA256 Signature, key ID c105b9de: NOKEY

Retrieving key from file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-6

Importing GPG key 0xC105B9DE:

Userid : CentOS-6 Key (CentOS 6 Official Signing Key) <centos-6-key@centos.org>

Package: centos-release-6-5.el6.centos.11.1.x86_64 (@anaconda-CentOS-201311272149.x86_64/6.5)

From : /etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-6

Running rpm_check_debug

Running Transaction Test

Transaction Test Succeeded

Running Transaction

Installing : 1:perl-Error-0.17015-4.el6.noarch 1/3

Installing : git-1.7.1-9.el6_9.x86_64 2/3

Installing : perl-Git-1.7.1-9.el6_9.noarch 3/3

Verifying : 1:perl-Error-0.17015-4.el6.noarch 1/3

Verifying : git-1.7.1-9.el6_9.x86_64 2/3

Verifying : perl-Git-1.7.1-9.el6_9.noarch 3/3

Installed:

git.x86_64 0:1.7.1-9.el6_9

Dependency Installed:

perl-Error.noarch 1:0.17015-4.el6 perl-Git.noarch 0:1.7.1-9.el6_9

Complete!

[hadoop@hadoop000 scala-2.11.7]$
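In the log above, the first yum run fails because the repository with id centos-iso (http://192.168.95.10/iso) cannot be reached. If that local ISO repository is not needed, one way around the error is to skip it for a single command with yum's --disablerepo option. A minimal sketch; install_git is a hypothetical wrapper, and centos-iso is the repository id on this particular machine, so substitute the id from your own error message.

```shell
#!/usr/bin/env bash
# install_git: install git via yum while skipping the unreachable
# centos-iso repository (hypothetical wrapper; the repo id is machine-specific).
install_git() {
  sudo yum -y --disablerepo=centos-iso install git && git --version
}

# Usage, as the hadoop user with sudo rights:
#   install_git
```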

III. Build Spark

1. Before building, ask yourself: why compile Spark at all? Doesn't the official site already provide Spark packages?

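The official site does offer Spark packages prebuilt for a few Hadoop versions, but production environments differ, and a prebuilt package will not necessarily match yours; there is, for example, no prebuilt package for a CDH release such as 2.6.0-cdh5.7.0. So we build a Spark package for the Hadoop version used in our own production environment.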

2. Download spark-2.2.0.tgz

http://spark.apache.org/downloads.html

Extract the downloaded package into the source directory in the hadoop user's home:

[hadoop@hadoop000 software]$ tar -zxvf spark-2.2.0.tgz -C ~/source/

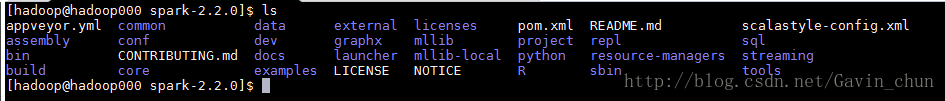

解压后目录如下

3. The build command given in the official documentation (http://spark.apache.org/docs/latest/building-spark.html) is:

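./dev/make-distribution.sh --name custom-spark --pip --r --tgz -Psparkr -Phadoop-2.7 -Phive -Phive-thriftserver -Pmesos -Pyarn

Parameters:

--name: the name of the resulting Spark package (it becomes part of the archive's file name)

--tgz: package the distribution as a .tgz archive

-Psparkr: build Spark with support for the R language (SparkR)

-Phadoop-2.7: build with the hadoop-2.7 profile; the available profiles are listed in pom.xml in the source root directory

-Phive and -Phive-thriftserver: build Spark with support for Hive

-Pmesos: build Spark with support for running on Mesos

-Pyarn: build Spark with support for running on YARN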

We can therefore build Spark to fit our own setup. For example, if we use Hadoop 2.6.0-cdh5.7.0 and need Spark to run on YARN and support Hive, the build command is:

./dev/make-distribution.sh --name 2.6.0-cdh5.7.0 --tgz -Pyarn -Phadoop-2.6 -Phive -Phive-thriftserver -Dhadoop.version=2.6.0-cdh5.7.0

After a successful build, the Spark source root directory contains spark-2.2.0-bin-2.6.0-cdh5.7.0.tgz, and this package can then be used to install Spark.
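The --name value and -Dhadoop.version usually move together, and the archive name follows from --name: make-distribution.sh names its output spark-VERSION-bin-NAME.tgz. A small sketch with two hypothetical helpers (bash assumed) that only print the build command and the expected archive name for a given Hadoop version; they do not run the build.

```shell
#!/usr/bin/env bash
# spark_build_cmd HADOOP_VERSION HADOOP_PROFILE: print the build command used
# in this post for the given Hadoop version (hypothetical helper).
spark_build_cmd() {
  local hadoop_version="$1" hadoop_profile="$2"
  echo ./dev/make-distribution.sh --name "$hadoop_version" --tgz \
    -Pyarn "-P$hadoop_profile" -Phive -Phive-thriftserver \
    "-Dhadoop.version=$hadoop_version"
}

# expected_tarball SPARK_VERSION NAME: archive name spark-<version>-bin-<name>.tgz,
# where <name> is the --name argument (hypothetical helper).
expected_tarball() {
  echo "spark-$1-bin-$2.tgz"
}

spark_build_cmd 2.6.0-cdh5.7.0 hadoop-2.6
# prints: ./dev/make-distribution.sh --name 2.6.0-cdh5.7.0 --tgz -Pyarn -Phadoop-2.6 -Phive -Phive-thriftserver -Dhadoop.version=2.6.0-cdh5.7.0
expected_tarball 2.2.0 2.6.0-cdh5.7.0
# prints: spark-2.2.0-bin-2.6.0-cdh5.7.0.tgz
```

The profile argument still has to name a profile that exists in pom.xml; the helper does not check that.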

4. Changes before building

a. In pom.xml in the Spark 2.2.0 root directory, add the following inside the repositories element (around line 219):

<repository>

<id>cloudera</id>

<name>cloudera repository</name>

<url>https://repository.cloudera.com/artifactory/cloudera-repos/</url>

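</repository>

The repository already declared in pom.xml is https://repo1.maven.org/maven2, i.e. Maven Central, which hosts the Apache Hadoop artifacts but not Cloudera's CDH builds. Our build command passes -Dhadoop.version=2.6.0-cdh5.7.0 so that the resulting Spark package can be integrated with a CDH 5.7.0 cluster, and without the extra repository the build fails because Maven Central has no CDH artifacts (paste the URL into a browser to check for yourself). With the entry above, artifacts that cannot be found in the default repository are fetched from the Cloudera repository.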
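If you would rather not edit pom.xml by hand, the same change can be scripted. A minimal sketch, assuming bash and awk: add_cloudera_repo is a hypothetical helper that keeps a pom.xml.bak copy, inserts the repository entry just before the first closing repositories tag (that is, after the repositories already declared), and does nothing if the Cloudera URL is already present.

```shell
#!/usr/bin/env bash
# add_cloudera_repo POM: insert the Cloudera repository before the first
# </repositories> line of POM (hypothetical helper). Safe to run twice.
add_cloudera_repo() {
  local pom="$1"
  local url='https://repository.cloudera.com/artifactory/cloudera-repos/'
  if grep -qF "$url" "$pom"; then
    echo "Cloudera repository already present in $pom"
    return 0
  fi
  cp "$pom" "$pom.bak" || return 1
  # Every original line is printed unchanged; the new block goes right before
  # the first </repositories>. </pluginRepositories> does not match the pattern.
  awk -v url="$url" '
    !done && /<\/repositories>/ {
      print "    <repository>"
      print "      <id>cloudera</id>"
      print "      <name>cloudera repository</name>"
      print "      <url>" url "</url>"
      print "    </repository>"
      done = 1
    }
    { print }
  ' "$pom.bak" > "$pom"
}

# Usage, from the Spark source root:
#   add_cloudera_repo pom.xml
```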

b. Comment out lines 120-136 of ./dev/make-distribution.sh and add the following:

VERSION=2.2.0

SCALA_VERSION=2.11

SPARK_HADOOP_VERSION=2.6.0-cdh5.7.0

SPARK_HIVE=1

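Lines 120-136 of the script call Maven (help:evaluate) to work out the Spark version, the Scala version, the Hadoop version and whether Hive support is enabled. Commenting them out and stating the values directly skips those Maven invocations and speeds up the build.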
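This edit can be scripted as well. A minimal sketch, assuming bash and awk, and assuming that lines 120-136 of your copy of dev/make-distribution.sh are the version-detection lines described above (check with sed -n '120,136p' dev/make-distribution.sh first, since line numbers differ between Spark releases). patch_make_distribution is a hypothetical helper; the values it writes are the ones used in this post.

```shell
#!/usr/bin/env bash
# patch_make_distribution SCRIPT: comment out lines 120-136 of SCRIPT and
# hard-code the version variables right after them (hypothetical helper;
# the line range and values come from this post).
patch_make_distribution() {
  local script="$1" start=120 end=136
  cp "$script" "$script.bak" || return 1
  awk -v s="$start" -v e="$end" '
    NR >= s && NR <= e { print "#" $0 }   # comment out version detection
    NR < s || NR > e   { print }          # keep all other lines unchanged
    NR == e {                             # then append the fixed values
      print "VERSION=2.2.0"
      print "SCALA_VERSION=2.11"
      print "SPARK_HADOOP_VERSION=2.6.0-cdh5.7.0"
      print "SPARK_HIVE=1"
    }
  ' "$script.bak" > "$script"   # writing into the existing file keeps its execute bit
}

# Usage, from the Spark source root:
#   patch_make_distribution dev/make-distribution.sh
```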

5. Run the build

./dev/make-distribution.sh --name 2.6.0-cdh5.7.0 --tgz -Pyarn -Phadoop-2.6 -Phive -Phive-thriftserver -Dhadoop.version=2.6.0-cdh5.7.0

After a successful build, the file spark-2.2.0-bin-2.6.0-cdh5.7.0.tgz appears in the Spark 2.2.0 root directory.

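Note: some dependencies may take a very long time to download because of network problems. In that case, press Ctrl+C to stop the build and run the command again; a few retries are usually enough. If you can, build with a VPN turned on, which makes the whole process much smoother.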
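When the build exits with an error (for example after a failed download), the rerun can be automated. A minimal sketch with a hypothetical retry helper (bash assumed): it reruns a command until it succeeds or the attempt limit is reached. A download that merely hangs still has to be interrupted by hand. Artifacts fetched by earlier attempts stay in the local Maven repository (~/.m2/repository by default), so later attempts do not download them again.

```shell
#!/usr/bin/env bash
# retry MAX CMD [ARGS...]: run CMD until it succeeds, at most MAX times,
# sleeping RETRY_DELAY seconds (default 5) between attempts (hypothetical helper).
retry() {
  local max="$1"; shift
  local attempt=1
  until "$@"; do
    if [ "$attempt" -ge "$max" ]; then
      echo "retry: giving up after $attempt attempts: $*" >&2
      return 1
    fi
    echo "retry: attempt $attempt failed, retrying..." >&2
    attempt=$((attempt + 1))
    sleep "${RETRY_DELAY:-5}"
  done
  return 0
}

# Usage, from the Spark source root:
#   retry 5 ./dev/make-distribution.sh --name 2.6.0-cdh5.7.0 --tgz -Pyarn \
#     -Phadoop-2.6 -Phive -Phive-thriftserver -Dhadoop.version=2.6.0-cdh5.7.0
```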
