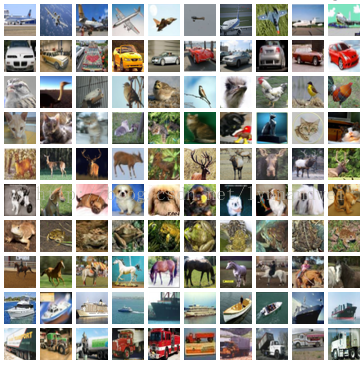

The CIFAR-10 dataset consists of 60,000 32×32 color images in 10 classes, collected by Alex Krizhevsky, Vinod Nair and Geoffrey Hinton. It is split into 50,000 training images and 10,000 test images.

http://www.cs.toronto.edu/~kriz/cifar.html

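In the Python version of the dataset, each batch stores its images in a 10000×3072 numpy array of type uint8. Each row of 3072 values is one 32×32 color image (3072 = 1024×3 = 32×32×3): the first 1024 values are the red channel, the next 1024 the green channel and the last 1024 the blue channel (RGB), each channel stored row by row.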
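The row layout can be illustrated with a small NumPy sketch. It assumes the Python version of the dataset, where each row of a batch's data array is one image; row_to_hwc is a hypothetical helper, not part of any library.

```python
import numpy as np

def row_to_hwc(row: np.ndarray) -> np.ndarray:
    """Convert one 3072-value CIFAR-10 row into a 32x32x3 (height, width, RGB) image."""
    assert row.shape == (3072,) and row.dtype == np.uint8
    # Red plane, then green, then blue; each plane is row-major,
    # so the row reshapes directly to (channel, height, width).
    chw = row.reshape(3, 32, 32)
    # Move the channel axis last, the order most image viewers expect.
    return chw.transpose(1, 2, 0)

# Synthetic row: red plane = 10, green plane = 20, blue plane = 30.
row = np.concatenate([np.full(1024, v, dtype=np.uint8) for v in (10, 20, 30)])
img = row_to_hwc(row)
print(img.shape)  # (32, 32, 3)
```

Caffe itself keeps the channel-first (3, 32, 32) order, as the 100,3,32,32 data shape in the training log shows; the transpose is only needed for tools that expect height × width × channel images.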

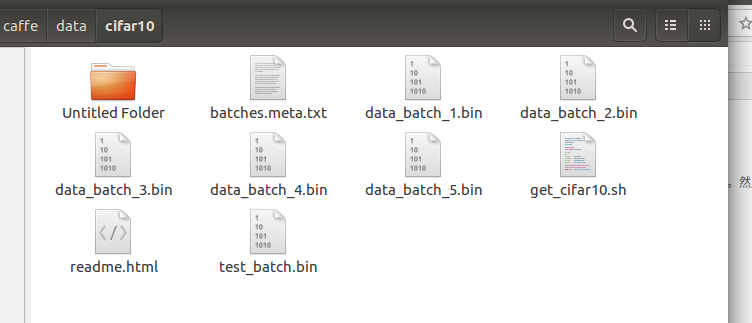

First, prepare the dataset:

```sh
# on this machine $CAFFE_ROOT/data/cifar10 is /home/nvidia/caffe/data/cifar10
cd $CAFFE_ROOT/data/cifar10
./get_cifar10.sh
```

```
nvidia@tegra-ubuntu:~/caffe$ cd /home/nvidia/caffe/data/cifar10
```

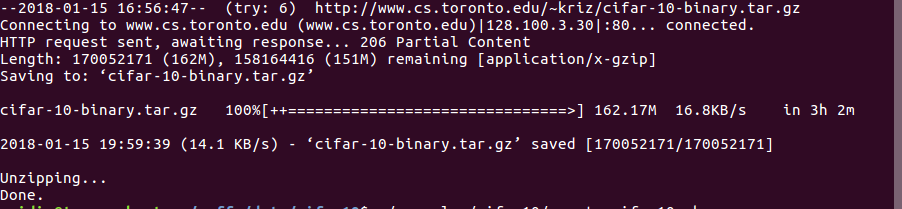

```
nvidia@tegra-ubuntu:~/caffe$ ./get_cifar10.sh
```

```
[root@localhost cifar10]# ./get_cifar10.sh
Downloading...
--2015-03-09 18:38:23-- http://www.cs.toronto.edu/~kriz/cifar-10-binary.tar.gz
Resolving www.cs.toronto.edu... 128.100.3.30
Connecting to www.cs.toronto.edu|128.100.3.30|:80... connected.
HTTP request sent, awaiting response... 200 OK
Length: 170052171 (162M) [application/x-gzip]
Saving to: “cifar-10-binary.tar.gz”
100%[=============================================================>] 170,052,171 1.66M/s in 5m 22s
Unzipping...
Done.
```

```
cifar-10-binary.tar.gz 100%[++===============================>] 162.17M 16.8KB/s in 3h 2m
2018-01-15 19:59:39 (14.1 KB/s) - ‘cifar-10-binary.tar.gz’ saved [170052171/170052171]
Unzipping...
Done.
```
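The archive fetched by get_cifar10.sh is the binary version of the dataset, whose layout differs slightly from the NumPy arrays described earlier: each batch file is a sequence of 3073-byte records, one label byte (0 to 9) followed by the 3072 pixel bytes in the same R, G, B plane order. The sketch below parses such records; it assumes the batch file names shipped in the archive (e.g. data_batch_1.bin), and parse_cifar_records / read_cifar_batch are hypothetical helpers.

```python
import numpy as np

RECORD_BYTES = 1 + 32 * 32 * 3  # 1 label byte + 3072 pixel bytes

def parse_cifar_records(raw: np.ndarray):
    """Split a flat uint8 buffer of CIFAR-10 binary records into (labels, images)."""
    if raw.size % RECORD_BYTES != 0:
        raise ValueError(f"buffer size {raw.size} is not a multiple of {RECORD_BYTES}")
    records = raw.reshape(-1, RECORD_BYTES)
    labels = records[:, 0].astype(np.int64)
    # Channel-first (N, 3, 32, 32), the same order Caffe uses for its data blobs.
    images = records[:, 1:].reshape(-1, 3, 32, 32)
    return labels, images

def read_cifar_batch(path: str):
    """Read one binary batch file, e.g. data/cifar10/data_batch_1.bin (path assumed)."""
    return parse_cifar_records(np.fromfile(path, dtype=np.uint8))

# Usage, run from the Caffe root after get_cifar10.sh:
# labels, images = read_cifar_batch("data/cifar10/data_batch_1.bin")
# each batch file holds 10000 records, so images.shape == (10000, 3, 32, 32)
```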

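Next, build the databases. The conversion script refers to its tools and data with paths relative to the Caffe root (./build/..., data/cifar10, examples/cifar10), so it must be run from $CAFFE_ROOT, not from examples/cifar10:

```sh
cd $CAFFE_ROOT
./examples/cifar10/create_cifar10.sh
```

```
nvidia@tegra-ubuntu:~/caffe$ ./examples/cifar10/create_cifar10.sh
```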

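The script's source is shown below. All it does is convert the image data into a database and compute a binary mean-image file. Older Caffe versions used leveldb (first listing); newer versions, including the one used in this run, default to lmdb through the DBTYPE variable (second listing).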

```sh
#!/usr/bin/env sh
# This script converts the cifar data into leveldb format.

EXAMPLE=examples/cifar10
DATA=data/cifar10

echo "Creating leveldb..."

rm -rf $EXAMPLE/cifar10_train_leveldb $EXAMPLE/cifar10_test_leveldb

./build/examples/cifar10/convert_cifar_data.bin $DATA $EXAMPLE

echo "Computing image mean..."

./build/tools/compute_image_mean $EXAMPLE/cifar10_train_leveldb \
  $EXAMPLE/mean.binaryproto leveldb

echo "Done."
```

```sh
#!/usr/bin/env sh
# This script converts the cifar data into leveldb format.
set -e

EXAMPLE=examples/cifar10
DATA=data/cifar10
DBTYPE=lmdb

echo "Creating $DBTYPE..."

rm -rf $EXAMPLE/cifar10_train_$DBTYPE $EXAMPLE/cifar10_test_$DBTYPE

./build/examples/cifar10/convert_cifar_data.bin $DATA $EXAMPLE $DBTYPE

echo "Computing image mean..."

./build/tools/compute_image_mean -backend=$DBTYPE \
  $EXAMPLE/cifar10_train_$DBTYPE $EXAMPLE/mean.binaryproto

echo "Done."
```


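After it runs, examples/cifar10 contains the databases cifar10_train_lmdb and cifar10_test_lmdb (cifar10_train_leveldb and cifar10_test_leveldb with the older leveldb script) and the binary mean-image file mean.binaryproto.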

```
[root@localhost caffe]# ./examples/cifar10/create_cifar10.sh
Creating leveldb...
Computing image mean...
E0309 18:50:12.026103 18241 compute_image_mean.cpp:114] Processed 10000 files.
E0309 18:50:12.094758 18241 compute_image_mean.cpp:114] Processed 20000 files.
E0309 18:50:12.163048 18241 compute_image_mean.cpp:114] Processed 30000 files.
E0309 18:50:12.231233 18241 compute_image_mean.cpp:114] Processed 40000 files.
E0309 18:50:12.299324 18241 compute_image_mean.cpp:114] Processed 50000 files.
Done.
```
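compute_image_mean averages all 50,000 training images pixel by pixel, and the mean_file given in transform_param is then subtracted from every input image. A NumPy sketch of those two steps, assuming the images are already loaded as an (N, 3, 32, 32) uint8 array; it does not read or write the .binaryproto format, and the function names are hypothetical.

```python
import numpy as np

def compute_mean_image(images: np.ndarray) -> np.ndarray:
    """Per-channel, per-pixel mean over the N axis of an (N, 3, 32, 32) uint8 array."""
    # Accumulate in float64 so the uint8 values cannot overflow.
    return images.astype(np.float64).mean(axis=0)

def subtract_mean(batch: np.ndarray, mean: np.ndarray) -> np.ndarray:
    """Subtract the (3, 32, 32) mean image from every image in the batch (broadcast over N)."""
    return batch.astype(np.float32) - mean.astype(np.float32)

# Three synthetic constant images with values 0, 100 and 200.
images = np.stack([np.full((3, 32, 32), v, dtype=np.uint8) for v in (0, 100, 200)])
mean = compute_mean_image(images)
centered = subtract_mean(images, mean)
print(mean.shape, centered[:, 0, 0, 0])
```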

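The model is described in examples/cifar10/cifar10_quick_solver.prototxt (the solver) and examples/cifar10/cifar10_quick_train_test.prototxt (the network). The listings below come from an older Caffe release: they use the deprecated layers { type: DATA } / blobs_lr syntax, leveldb and solver_mode: CPU, whereas the version trained here uses layer { type: "Data" } / param { lr_mult }, lmdb and solver_mode: GPU, as the training log further down shows.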

```
# reduce the learning rate after 8 epochs (4000 iters) by a factor of 10

# The train/test net protocol buffer definition
net: "examples/cifar10/cifar10_quick_train_test.prototxt"
# test_iter specifies how many forward passes the test should carry out.
# In the case of MNIST, we have test batch size 100 and 100 test iterations,
# covering the full 10,000 testing images.
test_iter: 100
# Carry out testing every 500 training iterations.
test_interval: 500
# The base learning rate, momentum and the weight decay of the network.
base_lr: 0.001
momentum: 0.9
weight_decay: 0.004
# The learning rate policy
lr_policy: "fixed"
# Display every 100 iterations
display: 100
# The maximum number of iterations
max_iter: 4000
# snapshot intermediate results
snapshot: 4000
snapshot_prefix: "examples/cifar10/cifar10_quick"
# solver mode: CPU or GPU
solver_mode: CPU
```
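The solver comments rest on simple arithmetic: with the data layers' batch_size of 100, test_iter: 100 covers exactly the 10,000 CIFAR-10 test images (the comment mentions MNIST, but the numbers are the same here), and 4000 iterations amount to 8 passes over the 50,000 training images. A quick check in Python:

```python
TRAIN_IMAGES = 50_000
TEST_IMAGES = 10_000
batch_size = 100  # batch_size of both data layers in cifar10_quick_train_test.prototxt
test_iter = 100   # from the solver
max_iter = 4000   # from the solver

images_per_test = test_iter * batch_size          # images seen in one test pass
epochs = max_iter * batch_size / TRAIN_IMAGES     # passes over the training set

print(images_per_test)  # 10000
print(epochs)           # 8.0
```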

```
name: "CIFAR10_quick"
layers {
  name: "cifar"
  type: DATA
  top: "data"
  top: "label"
  data_param {
    source: "examples/cifar10/cifar10_train_leveldb"
    batch_size: 100
  }
  transform_param {
    mean_file: "examples/cifar10/mean.binaryproto"
  }
  include: { phase: TRAIN }
}
layers {
  name: "cifar"
  type: DATA
  top: "data"
  top: "label"
  data_param {
    source: "examples/cifar10/cifar10_test_leveldb"
    batch_size: 100
  }
  transform_param {
    mean_file: "examples/cifar10/mean.binaryproto"
  }
  include: { phase: TEST }
}
layers {
  name: "conv1"
  type: CONVOLUTION
  bottom: "data"
  top: "conv1"
  blobs_lr: 1
  blobs_lr: 2
  convolution_param {
    num_output: 32
    pad: 2
    kernel_size: 5
    stride: 1
    weight_filler {
      type: "gaussian"
      std: 0.0001
    }
    bias_filler {
      type: "constant"
    }
  }
}
layers {
  name: "pool1"
  type: POOLING
  bottom: "conv1"
  top: "pool1"
  pooling_param {
    pool: MAX
    kernel_size: 3
    stride: 2
  }
}
layers {
  name: "relu1"
  type: RELU
  bottom: "pool1"
  top: "pool1"
}
layers {
  name: "conv2"
  type: CONVOLUTION
  bottom: "pool1"
  top: "conv2"
  blobs_lr: 1
  blobs_lr: 2
  convolution_param {
    num_output: 32
    pad: 2
    kernel_size: 5
    stride: 1
    weight_filler {
      type: "gaussian"
      std: 0.01
    }
    bias_filler {
      type: "constant"
    }
  }
}
layers {
  name: "relu2"
  type: RELU
  bottom: "conv2"
  top: "conv2"
}
layers {
  name: "pool2"
  type: POOLING
  bottom: "conv2"
  top: "pool2"
  pooling_param {
    pool: AVE
    kernel_size: 3
    stride: 2
  }
}
layers {
  name: "conv3"
  type: CONVOLUTION
  bottom: "pool2"
  top: "conv3"
  blobs_lr: 1
  blobs_lr: 2
  convolution_param {
    num_output: 64
    pad: 2
    kernel_size: 5
    stride: 1
    weight_filler {
      type: "gaussian"
      std: 0.01
    }
    bias_filler {
      type: "constant"
    }
  }
}
layers {
  name: "relu3"
  type: RELU
  bottom: "conv3"
  top: "conv3"
}
layers {
  name: "pool3"
  type: POOLING
  bottom: "conv3"
  top: "pool3"
  pooling_param {
    pool: AVE
    kernel_size: 3
    stride: 2
  }
}
layers {
  name: "ip1"
  type: INNER_PRODUCT
  bottom: "pool3"
  top: "ip1"
  blobs_lr: 1
  blobs_lr: 2
  inner_product_param {
    num_output: 64
    weight_filler {
      type: "gaussian"
      std: 0.1
    }
    bias_filler {
      type: "constant"
    }
  }
}
layers {
  name: "ip2"
  type: INNER_PRODUCT
  bottom: "ip1"
  top: "ip2"
  blobs_lr: 1
  blobs_lr: 2
  inner_product_param {
    num_output: 10
    weight_filler {
      type: "gaussian"
      std: 0.1
    }
    bias_filler {
      type: "constant"
    }
  }
}
layers {
  name: "accuracy"
  type: ACCURACY
  bottom: "ip2"
  bottom: "label"
  top: "accuracy"
  include: { phase: TEST }
}
layers {
  name: "loss"
  type: SOFTMAX_LOSS
  bottom: "ip2"
  bottom: "label"
  top: "loss"
}
```
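The blob shapes that Caffe prints while building this net (32 → 16 → 8 → 4 pixels) follow directly from the layer parameters. A plain-Python sketch, assuming Caffe's output-size rules (convolution rounds down, pooling rounds up, which is why a 3×3/stride-2 pool turns 32 into 16 rather than 15); the helper names are hypothetical. It also reproduces the "Memory required for data" total of the training net.

```python
import math

def conv_out(size: int, kernel: int, stride: int = 1, pad: int = 0) -> int:
    """Output height/width of a Caffe Convolution layer (rounds down)."""
    return (size + 2 * pad - kernel) // stride + 1

def pool_out(size: int, kernel: int, stride: int = 1, pad: int = 0) -> int:
    """Output height/width of a Caffe Pooling layer (rounds up)."""
    return math.ceil((size + 2 * pad - kernel) / stride) + 1

def cifar10_quick_tops(n: int = 100) -> list:
    """(name, shape) of every top blob of the CIFAR10_quick training net, in order."""
    tops = [("data", (n, 3, 32, 32)), ("label", (n,))]
    h = conv_out(32, kernel=5, stride=1, pad=2)                   # conv1
    tops.append(("conv1", (n, 32, h, h)))
    h = pool_out(h, kernel=3, stride=2)                            # pool1 (MAX)
    tops += [("pool1", (n, 32, h, h)), ("relu1", (n, 32, h, h))]  # relu1 runs in place
    h = conv_out(h, kernel=5, stride=1, pad=2)                     # conv2
    tops += [("conv2", (n, 32, h, h)), ("relu2", (n, 32, h, h))]
    h = pool_out(h, kernel=3, stride=2)                            # pool2 (AVE)
    tops.append(("pool2", (n, 32, h, h)))
    h = conv_out(h, kernel=5, stride=1, pad=2)                     # conv3
    tops += [("conv3", (n, 64, h, h)), ("relu3", (n, 64, h, h))]
    h = pool_out(h, kernel=3, stride=2)                            # pool3 (AVE)
    tops.append(("pool3", (n, 64, h, h)))
    # ip1 flattens 64*h*h inputs to 64 outputs; ip2 gives 10 class scores;
    # the loss is a scalar, which counts as one element.
    tops += [("ip1", (n, 64)), ("ip2", (n, 10)), ("loss", ())]
    return tops

tops = cifar10_quick_tops()
for name, shape in tops:
    print(name, shape)
# Caffe's "Memory required for data" counts every top blob, in-place ones
# included, as 4-byte floats.
print(4 * sum(math.prod(shape) for _, shape in tops))  # 31978804
```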

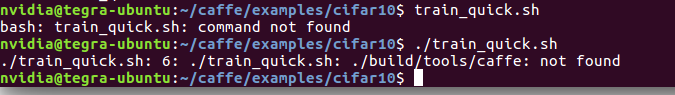

Training is started with train_quick.sh, whose contents are:

```
[root@localhost cifar10]# vi train_quick.sh
```

```sh
#!/usr/bin/env sh

TOOLS=./build/tools

$TOOLS/caffe train \
  --solver=examples/cifar10/cifar10_quick_solver.prototxt

# reduce learning rate by factor of 10 after 8 epochs
$TOOLS/caffe train \
  --solver=examples/cifar10/cifar10_quick_solver_lr1.prototxt \
  --snapshot=examples/cifar10/cifar10_quick_iter_4000.solverstate
```

A complete run ends with:

```
cifar10_quick_iter_5000.solverstate
I0317 22:12:25.592813 2008298256 solver.cpp:81] Optimization Done.
```

The model reaches about 75% test accuracy, and its parameters are stored in binary protobuf format in cifar10_quick_iter_5000.
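train_quick.sh therefore trains in two stages: the first run stops and snapshots at iteration 4000, and the second resumes from that .solverstate with cifar10_quick_solver_lr1.prototxt. The lr1 solver file is not shown in this article; the sketch below assumes, from the script's comment and the cifar10_quick_iter_5000 file name, that it uses a 10× smaller base_lr and max_iter 5000. learning_rate is a hypothetical helper describing the effective schedule.

```python
BASE_LR = 0.001      # base_lr in cifar10_quick_solver.prototxt
STAGE1_END = 4000    # max_iter (and snapshot) of the first run
STAGE2_END = 5000    # assumed max_iter of cifar10_quick_solver_lr1.prototxt
BATCH, TRAIN_IMAGES = 100, 50_000

def learning_rate(iteration: int) -> float:
    """Learning rate in effect at `iteration` across both runs of train_quick.sh."""
    if not 0 <= iteration < STAGE2_END:
        raise ValueError(f"iteration {iteration} is outside the schedule")
    # Stage 1 uses the fixed base rate; stage 2 resumes at 4000 with a 10x smaller rate.
    return BASE_LR if iteration < STAGE1_END else BASE_LR / 10

for it in (0, 3999, 4000, 4999):
    print(it, learning_rate(it))
print(STAGE2_END * BATCH / TRAIN_IMAGES)  # 10.0 epochs in total
```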

Running the script from examples/cifar10, however, fails:

```
bash: train_quick.sh: command not found
nvidia@tegra-ubuntu:~/caffe/examples/cifar10$ ./train_quick.sh
./train_quick.sh: 6: ./train_quick.sh: ./build/tools/caffe: not found
nvidia@tegra-ubuntu:~/caffe/examples/cifar10$
```

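Fixing the "./build/tools/caffe: not found" error when training CIFAR-10 with Caffe

The CIFAR-10 training steps are as follows:

(1) Open a terminal and change to the data directory, e.g. cd ~/caffe/data/cifar10.

(2) Run ./get_cifar10.sh.

(3) Once the dataset has been downloaded, ls lists the downloaded data files.

(4) Change to the example directory with cd ~/caffe/examples/cifar10.

(5) Run ./create_cifar10.sh.

The system now reports ./build/examples/cifar10/convert_cifar_data.bin: not found. This happens because the current directory is ~/caffe/examples/cifar10, while create_cifar10.sh refers to its tools with paths relative to the caffe directory and therefore has to be run from there. Change to the caffe directory and run ./examples/cifar10/create_cifar10.sh instead.

(6) Likewise, run ./examples/cifar10/train_quick.sh from the caffe directory; ./build/tools/caffe is then no longer reported as missing.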
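The underlying rule is that a relative path such as ./build/tools/caffe is resolved against the current working directory, not against the directory containing the script. A small Python illustration, using a throwaway directory tree that mimics the relevant part of a Caffe checkout (the layout is for illustration only):

```python
import os
import tempfile

def resolves_from(cwd: str, rel_path: str) -> bool:
    """True if rel_path, resolved relative to cwd as the shell does, exists."""
    return os.path.exists(os.path.join(cwd, rel_path))

# Stand-in for ~/caffe containing build/tools/caffe and examples/cifar10/.
caffe_root = tempfile.mkdtemp()
os.makedirs(os.path.join(caffe_root, "build", "tools"))
open(os.path.join(caffe_root, "build", "tools", "caffe"), "w").close()
example_dir = os.path.join(caffe_root, "examples", "cifar10")
os.makedirs(example_dir)

tool = "./build/tools/caffe"  # the path written inside train_quick.sh
print(resolves_from(caffe_root, tool))   # True:  <root>/build/tools/caffe exists
print(resolves_from(example_dir, tool))  # False: <root>/examples/cifar10/build/... does not
```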

The output is as follows:

```
nvidia@tegra-ubuntu:~/caffe$ ./examples/cifar10/train_quick.sh
I0116 13:04:53.104024 25452 caffe.cpp:218] Using GPUs 0
I0116 13:04:53.111429 25452 caffe.cpp:223] GPU 0: NVIDIA Tegra X2
I0116 13:04:53.700456 25452 solver.cpp:44] Initializing solver from parameters:
test_iter: 100
test_interval: 500
base_lr: 0.001
display: 100
max_iter: 4000
lr_policy: "fixed"
momentum: 0.9
weight_decay: 0.004
snapshot: 4000
snapshot_prefix: "examples/cifar10/cifar10_quick"
solver_mode: GPU
device_id: 0
net: "examples/cifar10/cifar10_quick_train_test.prototxt"
train_state {
  level: 0
  stage: ""
}
I0116 13:04:53.700881 25452 solver.cpp:87] Creating training net from net file: examples/cifar10/cifar10_quick_train_test.prototxt
I0116 13:04:53.701413 25452 net.cpp:294] The NetState phase (0) differed from the phase (1) specified by a rule in layer cifar
I0116 13:04:53.701463 25452 net.cpp:294] The NetState phase (0) differed from the phase (1) specified by a rule in layer accuracy
I0116 13:04:53.701498 25452 net.cpp:51] Initializing net from parameters:
name: "CIFAR10_quick"
state {
  phase: TRAIN
  level: 0
  stage: ""
}
layer {
  name: "cifar"
  type: "Data"
  top: "data"
  top: "label"
  include {
    phase: TRAIN
  }
  transform_param {
    mean_file: "examples/cifar10/mean.binaryproto"
  }
  data_param {
    source: "examples/cifar10/cifar10_train_lmdb"
    batch_size: 100
    backend: LMDB
  }
}
layer {
  name: "conv1"
  type: "Convolution"
  bottom: "data"
  top: "conv1"
  param {
    lr_mult: 1
  }
  param {
    lr_mult: 2
  }
  convolution_param {
    num_output: 32
    pad: 2
    kernel_size: 5
    stride: 1
    weight_filler {
      type: "gaussian"
      std: 0.0001
    }
    bias_filler {
      type: "constant"
    }
  }
}
layer {
  name: "pool1"
  type: "Pooling"
  bottom: "conv1"
  top: "pool1"
  pooling_param {
    pool: MAX
    kernel_size: 3
    stride: 2
  }
}
layer {
  name: "relu1"
  type: "ReLU"
  bottom: "pool1"
  top: "pool1"
}
layer {
  name: "conv2"
  type: "Convolution"
  bottom: "pool1"
  top: "conv2"
  param {
    lr_mult: 1
  }
  param {
    lr_mult: 2
  }
  convolution_param {
    num_output: 32
    pad: 2
    kernel_size: 5
    stride: 1
    weight_filler {
      type: "gaussian"
      std: 0.01
    }
    bias_filler {
      type: "constant"
    }
  }
}
layer {
  name: "relu2"
  type: "ReLU"
  bottom: "conv2"
  top: "conv2"
}
layer {
  name: "pool2"
  type: "Pooling"
  bottom: "conv2"
  top: "pool2"
  pooling_param {
    pool: AVE
    kernel_size: 3
    stride: 2
  }
}
layer {
  name: "conv3"
  type: "Convolution"
  bottom: "pool2"
  top: "conv3"
  param {
    lr_mult: 1
  }
  param {
    lr_mult: 2
  }
  convolution_param {
    num_output: 64
    pad: 2
    kernel_size: 5
    stride: 1
    weight_filler {
      type: "gaussian"
      std: 0.01
    }
    bias_filler {
      type: "constant"
    }
  }
}
layer {
  name: "relu3"
  type: "ReLU"
  bottom: "conv3"
  top: "conv3"
}
layer {
  name: "pool3"
  type: "Pooling"
  bottom: "conv3"
  top: "pool3"
  pooling_param {
    pool: AVE
    kernel_size: 3
    stride: 2
  }
}
layer {
  name: "ip1"
  type: "InnerProduct"
  bottom: "pool3"
  top: "ip1"
  param {
    lr_mult: 1
  }
  param {
    lr_mult: 2
  }
  inner_product_param {
    num_output: 64
    weight_filler {
      type: "gaussian"
      std: 0.1
    }
    bias_filler {
      type: "constant"
    }
  }
}
layer {
  name: "ip2"
  type: "InnerProduct"
  bottom: "ip1"
  top: "ip2"
  param {
    lr_mult: 1
  }
  param {
    lr_mult: 2
  }
  inner_product_param {
    num_output: 10
    weight_filler {
      type: "gaussian"
      std: 0.1
    }
    bias_filler {
      type: "constant"
    }
  }
}
layer {
  name: "loss"
  type: "SoftmaxWithLoss"
  bottom: "ip2"
  bottom: "label"
  top: "loss"
}
I0116 13:04:53.702026 25452 layer_factory.hpp:77] Creating layer cifar
I0116 13:04:53.702291 25452 db_lmdb.cpp:35] Opened lmdb examples/cifar10/cifar10_train_lmdb
I0116 13:04:53.702373 25452 net.cpp:84] Creating Layer cifar
I0116 13:04:53.702400 25452 net.cpp:380] cifar -> data
I0116 13:04:53.702452 25452 net.cpp:380] cifar -> label
I0116 13:04:53.702494 25452 data_transformer.cpp:25] Loading mean file from: examples/cifar10/mean.binaryproto
I0116 13:04:53.703125 25452 data_layer.cpp:45] output data size: 100,3,32,32
I0116 13:04:53.712062 25452 net.cpp:122] Setting up cifar
I0116 13:04:53.712122 25452 net.cpp:129] Top shape: 100 3 32 32 (307200)
I0116 13:04:53.712155 25452 net.cpp:129] Top shape: 100 (100)
I0116 13:04:53.712179 25452 net.cpp:137] Memory required for data: 1229200
I0116 13:04:53.712219 25452 layer_factory.hpp:77] Creating layer conv1
I0116 13:04:53.712278 25452 net.cpp:84] Creating Layer conv1
I0116 13:04:53.712309 25452 net.cpp:406] conv1 <- data
I0116 13:04:53.712357 25452 net.cpp:380] conv1 -> conv1
I0116 13:04:55.004492 25452 net.cpp:122] Setting up conv1
I0116 13:04:55.004555 25452 net.cpp:129] Top shape: 100 32 32 32 (3276800)
I0116 13:04:55.004581 25452 net.cpp:137] Memory required for data: 14336400
I0116 13:04:55.004644 25452 layer_factory.hpp:77] Creating layer pool1
I0116 13:04:55.004680 25452 net.cpp:84] Creating Layer pool1
I0116 13:04:55.004698 25452 net.cpp:406] pool1 <- conv1
I0116 13:04:55.004719 25452 net.cpp:380] pool1 -> pool1
I0116 13:04:55.004884 25452 net.cpp:122] Setting up pool1
I0116 13:04:55.004904 25452 net.cpp:129] Top shape: 100 32 16 16 (819200)
I0116 13:04:55.004921 25452 net.cpp:137] Memory required for data: 17613200
I0116 13:04:55.004936 25452 layer_factory.hpp:77] Creating layer relu1
I0116 13:04:55.004956 25452 net.cpp:84] Creating Layer relu1
I0116 13:04:55.004969 25452 net.cpp:406] relu1 <- pool1
I0116 13:04:55.004987 25452 net.cpp:367] relu1 -> pool1 (in-place)
I0116 13:04:55.006839 25452 net.cpp:122] Setting up relu1
I0116 13:04:55.006880 25452 net.cpp:129] Top shape: 100 32 16 16 (819200)
I0116 13:04:55.006901 25452 net.cpp:137] Memory required for data: 20890000
I0116 13:04:55.006917 25452 layer_factory.hpp:77] Creating layer conv2
I0116 13:04:55.006959 25452 net.cpp:84] Creating Layer conv2
I0116 13:04:55.006978 25452 net.cpp:406] conv2 <- pool1
I0116 13:04:55.006999 25452 net.cpp:380] conv2 -> conv2
I0116 13:04:55.016883 25452 net.cpp:122] Setting up conv2
I0116 13:04:55.016943 25452 net.cpp:129] Top shape: 100 32 16 16 (819200)
I0116 13:04:55.016966 25452 net.cpp:137] Memory required for data: 24166800
I0116 13:04:55.017004 25452 layer_factory.hpp:77] Creating layer relu2
I0116 13:04:55.017032 25452 net.cpp:84] Creating Layer relu2
I0116 13:04:55.017048 25452 net.cpp:406] relu2 <- conv2
I0116 13:04:55.017068 25452 net.cpp:367] relu2 -> conv2 (in-place)
I0116 13:04:55.019407 25452 net.cpp:122] Setting up relu2
I0116 13:04:55.019454 25452 net.cpp:129] Top shape: 100 32 16 16 (819200)
I0116 13:04:55.019479 25452 net.cpp:137] Memory required for data: 27443600
I0116 13:04:55.019498 25452 layer_factory.hpp:77] Creating layer pool2
I0116 13:04:55.019531 25452 net.cpp:84] Creating Layer pool2
I0116 13:04:55.019546 25452 net.cpp:406] pool2 <- conv2
I0116 13:04:55.019567 25452 net.cpp:380] pool2 -> pool2
I0116 13:04:55.021615 25452 net.cpp:122] Setting up pool2
I0116 13:04:55.021663 25452 net.cpp:129] Top shape: 100 32 8 8 (204800)
I0116 13:04:55.021689 25452 net.cpp:137] Memory required for data: 28262800
I0116 13:04:55.021705 25452 layer_factory.hpp:77] Creating layer conv3
I0116 13:04:55.021750 25452 net.cpp:84] Creating Layer conv3
I0116 13:04:55.021775 25452 net.cpp:406] conv3 <- pool2
I0116 13:04:55.021822 25452 net.cpp:380] conv3 -> conv3
I0116 13:04:55.030450 25452 net.cpp:122] Setting up conv3
I0116 13:04:55.030504 25452 net.cpp:129] Top shape: 100 64 8 8 (409600)
I0116 13:04:55.030531 25452 net.cpp:137] Memory required for data: 29901200
I0116 13:04:55.030571 25452 layer_factory.hpp:77] Creating layer relu3
I0116 13:04:55.030604 25452 net.cpp:84] Creating Layer relu3
I0116 13:04:55.030622 25452 net.cpp:406] relu3 <- conv3
I0116 13:04:55.030643 25452 net.cpp:367] relu3 -> conv3 (in-place)
I0116 13:04:55.033023 25452 net.cpp:122] Setting up relu3
I0116 13:04:55.033071 25452 net.cpp:129] Top shape: 100 64 8 8 (409600)
I0116 13:04:55.033094 25452 net.cpp:137] Memory required for data: 31539600
I0116 13:04:55.033110 25452 layer_factory.hpp:77] Creating layer pool3
I0116 13:04:55.033138 25452 net.cpp:84] Creating Layer pool3
I0116 13:04:55.033205 25452 net.cpp:406] pool3 <- conv3
I0116 13:04:55.033238 25452 net.cpp:380] pool3 -> pool3
I0116 13:04:55.035491 25452 net.cpp:122] Setting up pool3
I0116 13:04:55.035544 25452 net.cpp:129] Top shape: 100 64 4 4 (102400)
I0116 13:04:55.035567 25452 net.cpp:137] Memory required for data: 31949200
I0116 13:04:55.035585 25452 layer_factory.hpp:77] Creating layer ip1
I0116 13:04:55.035616 25452 net.cpp:84] Creating Layer ip1
I0116 13:04:55.035632 25452 net.cpp:406] ip1 <- pool3
I0116 13:04:55.035656 25452 net.cpp:380] ip1 -> ip1
I0116 13:04:55.037981 25452 net.cpp:122] Setting up ip1
I0116 13:04:55.038019 25452 net.cpp:129] Top shape: 100 64 (6400)
I0116 13:04:55.038040 25452 net.cpp:137] Memory required for data: 31974800
I0116 13:04:55.038066 25452 layer_factory.hpp:77] Creating layer ip2
I0116 13:04:55.038094 25452 net.cpp:84] Creating Layer ip2
I0116 13:04:55.038110 25452 net.cpp:406] ip2 <- ip1
I0116 13:04:55.038130 25452 net.cpp:380] ip2 -> ip2
I0116 13:04:55.038504 25452 net.cpp:122] Setting up ip2
I0116 13:04:55.038527 25452 net.cpp:129] Top shape: 100 10 (1000)
I0116 13:04:55.038544 25452 net.cpp:137] Memory required for data: 31978800
I0116 13:04:55.038575 25452 layer_factory.hpp:77] Creating layer loss
I0116 13:04:55.038599 25452 net.cpp:84] Creating Layer loss
I0116 13:04:55.038612 25452 net.cpp:406] loss <- ip2
I0116 13:04:55.038627 25452 net.cpp:406] loss <- label
I0116 13:04:55.038650 25452 net.cpp:380] loss -> loss
I0116 13:04:55.038686 25452 layer_factory.hpp:77] Creating layer loss
I0116 13:04:55.041414 25452 net.cpp:122] Setting up loss
I0116 13:04:55.041461 25452 net.cpp:129] Top shape: (1)
I0116 13:04:55.041484 25452 net.cpp:132] with loss weight 1
I0116 13:04:55.041535 25452 net.cpp:137] Memory required for data: 31978804
I0116 13:04:55.041555 25452 net.cpp:198] loss needs backward computation.
I0116 13:04:55.041586 25452 net.cpp:198] ip2 needs backward computation.
I0116 13:04:55.041601 25452 net.cpp:198] ip1 needs backward computation.
I0116 13:04:55.041615 25452 net.cpp:198] pool3 needs backward computation.
I0116 13:04:55.041630 25452 net.cpp:198] relu3 needs backward computation.
I0116 13:04:55.041642 25452 net.cpp:198] conv3 needs backward computation.
I0116 13:04:55.041657 25452 net.cpp:198] pool2 needs backward computation.
I0116 13:04:55.041672 25452 net.cpp:198] relu2 needs backward computation.
I0116 13:04:55.041685 25452 net.cpp:198] conv2 needs backward computation.
I0116 13:04:55.041699 25452 net.cpp:198] relu1 needs backward computation.
I0116 13:04:55.041712 25452 net.cpp:198] pool1 needs backward computation.
I0116 13:04:55.041730 25452 net.cpp:198] conv1 needs backward computation.
I0116 13:04:55.041744 25452 net.cpp:200] cifar does not need backward computation.
I0116 13:04:55.041756 25452 net.cpp:242] This network produces output loss
I0116 13:04:55.041788 25452 net.cpp:255] Network initialization done.
I0116 13:04:55.042315 25452 solver.cpp:172] Creating test net (#0) specified by net file: examples/cifar10/cifar10_quick_train_test.prototxt
I0116 13:04:55.042404 25452 net.cpp:294] The NetState phase (1) differed from the phase (0) specified by a rule in layer cifar
I0116 13:04:55.042443 25452 net.cpp:51] Initializing net from parameters:
name: "CIFAR10_quick"
state {
  phase: TEST
}
layer {
  name: "cifar"
  type: "Data"
  top: "data"
  top: "label"
  include {
    phase: TEST
  }
  transform_param {
    mean_file: "examples/cifar10/mean.binaryproto"
  }
  data_param {
    source: "examples/cifar10/cifar10_test_lmdb"
    batch_size: 100
    backend: LMDB
  }
}
layer {
  name: "conv1"
  type: "Convolution"
  bottom: "data"
  top: "conv1"
  param {
    lr_mult: 1
  }
  param {
    lr_mult: 2
  }
  convolution_param {
    num_output: 32
    pad: 2
    kernel_size: 5
    stride: 1
    weight_filler {
      type: "gaussian"
      std: 0.0001
    }
    bias_filler {
      type: "constant"
    }
  }
}
layer {
  name: "pool1"
  type: "Pooling"
  bottom: "conv1"
  top: "pool1"
  pooling_param {
    pool: MAX
    kernel_size: 3
    stride: 2
  }
}
layer {
  name: "relu1"
  type: "ReLU"
  bottom: "pool1"
  top: "pool1"
}
layer {
  name: "conv2"
  type: "Convolution"
  bottom: "pool1"
  top: "conv2"
  param {
    lr_mult: 1
  }
  param {
    lr_mult: 2
  }
  convolution_param {
    num_output: 32
    pad: 2
    kernel_size: 5
    stride: 1
    weight_filler {
      type: "gaussian"
      std: 0.01
    }
    bias_filler {
      type: "constant"
    }
  }
}
layer {
  name: "relu2"
  type: "ReLU"
  bottom: "conv2"
  top: "conv2"
}
layer {
  name: "pool2"
  type: "Pooling"
  bottom: "conv2"
  top: "pool2"
  pooling_param {
    pool: AVE
    kernel_size: 3
    stride: 2
  }
}
layer {
  name: "conv3"
  type: "Convolution"
  bottom: "pool2"
  top: "conv3"
  param {
    lr_mult: 1
  }
  param {
    lr_mult: 2
  }
  convolution_param {
    num_output: 64
    pad: 2
    kernel_size: 5
    stride: 1
    weight_filler {
      type: "gaussian"
      std: 0.01
    }
    bias_filler {
      type: "constant"
    }
  }
}
layer {
  name: "relu3"
  type: "ReLU"
  bottom: "conv3"
  top: "conv3"
}
layer {
  name: "pool3"
  type: "Pooling"
  bottom: "conv3"
  top: "pool3"
  pooling_param {
    pool: AVE
    kernel_size: 3
    stride: 2
  }
}
layer {
  name: "ip1"
  type: "InnerProduct"
  bottom: "pool3"
  top: "ip1"
  param {
    lr_mult: 1
  }
  param {
    lr_mult: 2
  }
  inner_product_param {
    num_output: 64
    weight_filler {
      type: "gaussian"
      std: 0.1
    }
    bias_filler {
      type: "constant"
    }
  }
}
layer {
  name: "ip2"
  type: "InnerProduct"
  bottom: "ip1"
  top: "ip2"
  param {
    lr_mult: 1
  }
  param {
    lr_mult: 2
  }
  inner_product_param {
    num_output: 10
    weight_filler {
      type: "gaussian"
      std: 0.1
    }
    bias_filler {
      type: "constant"
    }
  }
}
layer {
  name: "accuracy"
  type: "Accuracy"
  bottom: "ip2"
  bottom: "label"
  top: "accuracy"
  include {
    phase: TEST
  }
}
layer {
  name: "loss"
  type: "SoftmaxWithLoss"
  bottom: "ip2"
  bottom: "label"
  top: "loss"
}
I0116 13:04:55.042997 25452 layer_factory.hpp:77] Creating layer cifar
I0116 13:04:55.043182 25452 db_lmdb.cpp:35] Opened lmdb examples/cifar10/cifar10_test_lmdb
I0116 13:04:55.043238 25452 net.cpp:84] Creating Layer cifar
I0116 13:04:55.043267 25452 net.cpp:380] cifar -> data
I0116 13:04:55.043295 25452 net.cpp:380] cifar -> label
I0116 13:04:55.043323 25452 data_transformer.cpp:25] Loading mean file from: examples/cifar10/mean.binaryproto
I0116 13:04:55.043987 25452 data_layer.cpp:45] output data size: 100,3,32,32
I0116 13:04:55.070179 25452 net.cpp:122] Setting up cifar
I0116 13:04:55.070256 25452 net.cpp:129] Top shape: 100 3 32 32 (307200)
I0116 13:04:55.070305 25452 net.cpp:129] Top shape: 100 (100)
I0116 13:04:55.070324 25452 net.cpp:137] Memory required for data: 1229200
I0116 13:04:55.070346 25452 layer_factory.hpp:77] Creating layer label_cifar_1_split
I0116 13:04:55.070382 25452 net.cpp:84] Creating Layer label_cifar_1_split
I0116 13:04:55.070402 25452 net.cpp:406] label_cifar_1_split <- label
I0116 13:04:55.070427 25452 net.cpp:380] label_cifar_1_split -> label_cifar_1_split_0
I0116 13:04:55.070463 25452 net.cpp:380] label_cifar_1_split -> label_cifar_1_split_1
I0116 13:04:55.070688 25452 net.cpp:122] Setting up label_cifar_1_split
I0116 13:04:55.070716 25452 net.cpp:129] Top shape: 100 (100)
I0116 13:04:55.070736 25452 net.cpp:129] Top shape: 100 (100)
I0116 13:04:55.070750 25452 net.cpp:137] Memory required for data: 1230000
I0116 13:04:55.070762 25452 layer_factory.hpp:77] Creating layer conv1
I0116 13:04:55.070798 25452 net.cpp:84] Creating Layer conv1
I0116 13:04:55.070816 25452 net.cpp:406] conv1 <- data
I0116 13:04:55.070838 25452 net.cpp:380] conv1 -> conv1
I0116 13:04:55.090713 25452 net.cpp:122] Setting up conv1
I0116 13:04:55.090768 25452 net.cpp:129] Top shape: 100 32 32 32 (3276800)
I0116 13:04:55.090797 25452 net.cpp:137] Memory required for data: 14337200
I0116 13:04:55.090842 25452 layer_factory.hpp:77] Creating layer pool1
I0116 13:04:55.090878 25452 net.cpp:84] Creating Layer pool1
I0116 13:04:55.090893 25452 net.cpp:406] pool1 <- conv1
I0116 13:04:55.090960 25452 net.cpp:380] pool1 -> pool1
I0116 13:04:55.091177 25452 net.cpp:122] Setting up pool1
I0116 13:04:55.091202 25452 net.cpp:129] Top shape: 100 32 16 16 (819200)
I0116 13:04:55.091223 25452 net.cpp:137] Memory required for data: 17614000
I0116 13:04:55.091238 25452 layer_factory.hpp:77] Creating layer relu1
I0116 13:04:55.091264 25452 net.cpp:84] Creating Layer relu1
I0116 13:04:55.091279 25452 net.cpp:406] relu1 <- pool1
I0116 13:04:55.091295 25452 net.cpp:367] relu1 -> pool1 (in-place)
I0116 13:04:55.093794 25452 net.cpp:122] Setting up relu1
I0116 13:04:55.093854 25452 net.cpp:129] Top shape: 100 32 16 16 (819200)
I0116 13:04:55.093879 25452 net.cpp:137] Memory required for data: 20890800
I0116 13:04:55.093895 25452 layer_factory.hpp:77] Creating layer conv2
I0116 13:04:55.093940 25452 net.cpp:84] Creating Layer conv2
I0116 13:04:55.093960 25452 net.cpp:406] conv2 <- pool1
I0116 13:04:55.093992 25452 net.cpp:380] conv2 -> conv2
I0116 13:04:55.106508 25452 net.cpp:122] Setting up conv2
I0116 13:04:55.106557 25452 net.cpp:129] Top shape: 100 32 16 16 (819200)
I0116 13:04:55.106582 25452 net.cpp:137] Memory required for data: 24167600
I0116 13:04:55.106617 25452 layer_factory.hpp:77] Creating layer relu2
I0116 13:04:55.106650 25452 net.cpp:84] Creating Layer relu2
I0116 13:04:55.106668 25452 net.cpp:406] relu2 <- conv2
I0116 13:04:55.106689 25452 net.cpp:367] relu2 -> conv2 (in-place)
I0116 13:04:55.109305 25452 net.cpp:122] Setting up relu2
I0116 13:04:55.109350 25452 net.cpp:129] Top shape: 100 32 16 16 (819200)
I0116 13:04:55.109374 25452 net.cpp:137] Memory required for data: 27444400
I0116 13:04:55.109390 25452 layer_factory.hpp:77] Creating layer pool2
I0116 13:04:55.109421 25452 net.cpp:84] Creating Layer pool2
I0116 13:04:55.109439 25452 net.cpp:406] pool2 <- conv2
I0116 13:04:55.109468 25452 net.cpp:380] pool2 -> pool2
I0116 13:04:55.112432 25452 net.cpp:122] Setting up pool2
I0116 13:04:55.112485 25452 net.cpp:129] Top shape: 100 32 8 8 (204800)
I0116 13:04:55.112509 25452 net.cpp:137] Memory required for data: 28263600
I0116 13:04:55.112531 25452 layer_factory.hpp:77] Creating layer conv3
I0116 13:04:55.112586 25452 net.cpp:84] Creating Layer conv3
I0116 13:04:55.112607 25452 net.cpp:406] conv3 <- pool2
I0116 13:04:55.112632 25452 net.cpp:380] conv3 -> conv3
I0116 13:04:55.126611 25452 net.cpp:122] Setting up conv3
I0116 13:04:55.126663 25452 net.cpp:129] Top shape: 100 64 8 8 (409600)
I0116 13:04:55.126687 25452 net.cpp:137] Memory required for data: 29902000
I0116 13:04:55.126722 25452 layer_factory.hpp:77] Creating layer relu3
I0116 13:04:55.126756 25452 net.cpp:84] Creating Layer relu3
I0116 13:04:55.126772 25452 net.cpp:406] relu3 <- conv3
I0116 13:04:55.126794 25452 net.cpp:367] relu3 -> conv3 (in-place)
I0116 13:04:55.129612 25452 net.cpp:122] Setting up relu3
I0116 13:04:55.129658 25452 net.cpp:129] Top shape: 100 64 8 8 (409600)
I0116 13:04:55.129683 25452 net.cpp:137] Memory required for data: 31540400
I0116 13:04:55.129700 25452 layer_factory.hpp:77] Creating layer pool3
I0116 13:04:55.129739 25452 net.cpp:84] Creating Layer pool3
I0116 13:04:55.129760 25452 net.cpp:406] pool3 <- conv3
I0116 13:04:55.129786 25452 net.cpp:380] pool3 -> pool3
I0116 13:04:55.132236 25452 net.cpp:122] Setting up pool3
I0116 13:04:55.132287 25452 net.cpp:129] Top shape: 100 64 4 4 (102400)
I0116 13:04:55.132308 25452 net.cpp:137] Memory required for data: 31950000
I0116 13:04:55.132323 25452 layer_factory.hpp:77] Creating layer ip1
I0116 13:04:55.132359 25452 net.cpp:84] Creating Layer ip1
I0116 13:04:55.132374 25452 net.cpp:406] ip1 <- pool3
I0116 13:04:55.132397 25452 net.cpp:380] ip1 -> ip1
I0116 13:04:55.135041 25452 net.cpp:122] Setting up ip1
I0116 13:04:55.135087 25452 net.cpp:129] Top shape: 100 64 (6400)
I0116 13:04:55.135108 25452 net.cpp:137] Memory required for data: 31975600
I0116 13:04:55.135134 25452 layer_factory.hpp:77] Creating layer ip2
I0116 13:04:55.135164 25452 net.cpp:84] Creating Layer ip2
I0116 13:04:55.135180 25452 net.cpp:406] ip2 <- ip1
I0116 13:04:55.135215 25452 net.cpp:380] ip2 -> ip2
I0116 13:04:55.135763 25452 net.cpp:122] Setting up ip2
I0116 13:04:55.135787 25452 net.cpp:129] Top shape: 100 10 (1000)
I0116 13:04:55.135807 25452 net.cpp:137] Memory required for data: 31979600
I0116 13:04:55.135836 25452 layer_factory.hpp:77] Creating layer ip2_ip2_0_split
I0116 13:04:55.135859 25452 net.cpp:84] Creating Layer ip2_ip2_0_split
I0116 13:04:55.135872 25452 net.cpp:406] ip2_ip2_0_split <- ip2
I0116 13:04:55.135890 25452 net.cpp:380] ip2_ip2_0_split -> ip2_ip2_0_split_0
I0116 13:04:55.135911 25452 net.cpp:380] ip2_ip2_0_split -> ip2_ip2_0_split_1
I0116 13:04:55.136051 25452 net.cpp:122] Setting up ip2_ip2_0_split
I0116 13:04:55.136068 25452 net.cpp:129] Top shape: 100 10 (1000)
I0116 13:04:55.136085 25452 net.cpp:129] Top shape: 100 10 (1000)
I0116 13:04:55.136098 25452 net.cpp:137] Memory required for data: 31987600
I0116 13:04:55.136111 25452 layer_factory.hpp:77] Creating layer accuracy
I0116 13:04:55.136135 25452 net.cpp:84] Creating Layer accuracy
I0116 13:04:55.136157 25452 net.cpp:406] accuracy <- ip2_ip2_0_split_0
I0116 13:04:55.136171 25452 net.cpp:406] accuracy <- label_cifar_1_split_0
I0116 13:04:55.136193 25452 net.cpp:380] accuracy -> accuracy
I0116 13:04:55.136224 25452 net.cpp:122] Setting up accuracy
I0116 13:04:55.136236 25452 net.cpp:129] Top shape: (1)
I0116 13:04:55.136251 25452 net.cpp:137] Memory required for data: 31987604
I0116 13:04:55.136265 25452 layer_factory.hpp:77] Creating layer loss
I0116 13:04:55.136284 25452 net.cpp:84] Creating Layer loss
I0116 13:04:55.136297 25452 net.cpp:406] loss <- ip2_ip2_0_split_1
I0116 13:04:55.136314 25452 net.cpp:406] loss <- label_cifar_1_split_1
I0116 13:04:55.136335 25452 net.cpp:380] loss -> loss
I0116 13:04:55.136365 25452 layer_factory.hpp:77] Creating layer loss
I0116 13:04:55.139508 25452 net.cpp:122] Setting up loss
I0116 13:04:55.139556 25452 net.cpp:129] Top shape: (1)
I0116 13:04:55.139580 25452 net.cpp:132] with loss weight 1
I0116 13:04:55.139606 25452 net.cpp:137] Memory required for data: 31987608
I0116 13:04:55.139624 25452 net.cpp:198] loss needs backward computation.
I0116 13:04:55.139642 25452 net.cpp:200] accuracy does not need backward computation.
I0116 13:04:55.139658 25452 net.cpp:198] ip2_ip2_0_split needs backward computation.
I0116 13:04:55.139672 25452 net.cpp:198] ip2 needs backward computation.
I0116 13:04:55.139686 25452 net.cpp:198] ip1 needs backward computation.
I0116 13:04:55.139699 25452 net.cpp:198] pool3 needs backward computation.
I0116 13:04:55.139716 25452 net.cpp:198] relu3 needs backward computation.
I0116 13:04:55.139729 25452 net.cpp:198] conv3 needs backward computation.
I0116 13:04:55.139744 25452 net.cpp:198] pool2 needs backward computation.
I0116 13:04:55.139757 25452 net.cpp:198] relu2 needs backward computation.
I0116 13:04:55.139770 25452 net.cpp:198] conv2 needs backward computation.
I0116 13:04:55.139784 25452 net.cpp:198] relu1 needs backward computation.
I0116 13:04:55.139801 25452 net.cpp:198] pool1 needs backward computation.
I0116 13:04:55.139816 25452 net.cpp:198] conv1 needs backward computation.
I0116 13:04:55.139830 25452 net.cpp:200] label_cifar_1_split does not need backward computation.
I0116 13:04:55.139844 25452 net.cpp:200] cifar does not need backward computation.
I0116 13:04:55.139858 25452 net.cpp:242] This network produces output accuracy
I0116 13:04:55.139873 25452 net.cpp:242] This network produces output loss
I0116 13:04:55.139910 25452 net.cpp:255] Network initialization done.
I0116 13:04:55.140070 25452 solver.cpp:56] Solver scaffolding done.
I0116 13:04:55.141544 25452 caffe.cpp:248] Starting Optimization
I0116 13:04:55.141582 25452 solver.cpp:272] Solving CIFAR10_quick
I0116 13:04:55.141597 25452 solver.cpp:273] Learning Rate Policy: fixed
I0116 13:04:55.146293 25452 solver.cpp:330] Iteration 0, Testing net (#0)
I0116 13:04:56.560989 25456 data_layer.cpp:73] Restarting data prefetching from start.
I0116 13:04:56.592571 25452 solver.cpp:397] Test net output #0: accuracy = 0.0981
I0116 13:04:56.592634 25452 solver.cpp:397] Test net output #1: loss = 2.303 (* 1 = 2.303 loss)
```
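The iteration-0 test results make a useful sanity check. Before any training the network's 10 outputs are all close to zero, so the softmax is roughly uniform; the cross-entropy loss should then be about -ln(1/10) = ln 10, and accuracy should sit near chance. Both match the log (loss = 2.303, accuracy = 0.0981):

```python
import math

NUM_CLASSES = 10

# A near-uniform softmax assigns probability ~1/10 to the correct class,
# so each image contributes a cross-entropy of -ln(1/10) = ln(10).
expected_initial_loss = -math.log(1 / NUM_CLASSES)
# A classifier that has learned nothing is right about 1 time in 10.
chance_accuracy = 1 / NUM_CLASSES

print(round(expected_initial_loss, 3))  # 2.303
print(chance_accuracy)                  # 0.1
```

A starting loss far from 2.303 would suggest a problem with the data, the labels or the initialization.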

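1. The following error occurred while setting up CIFAR-10:

```
gwp@gwp:~/caffe/examples/cifar10$ ./create_cifar10.sh
Creating lmdb...
./create_cifar10.sh: 13: ./create_cifar10.sh: ./build/examples/cifar10/convert_cifar_data.bin: not found
```

Solution:

```
gwp@gwp:~/caffe$ ./examples/cifar10/create_cifar10.sh
```

Question: what is the difference between running the script from the Caffe root and running it from its own directory? The paths inside the script (./build/..., data/cifar10, examples/cifar10) are resolved relative to the current working directory, so they only point at the right files when that directory is the Caffe root.

Similar problems, for example when running the MNIST example, can be solved the same way.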

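Running Caffe on Windows:

cifar-10-binary.tar.gz was compressed on Linux. If it is extracted on Windows with the commonly used RAR tool, the result is a single file named cifar-10-binary, which is wrong. Extract it with 7-Zip instead; you should then get the individual data files. After that, running convert_cifar_data.exe input output succeeds.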
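As an alternative to 7-Zip (not the method the author used), Python's standard tarfile module removes both the gzip and the tar layer in one step and behaves the same on Windows and Linux. A minimal sketch; extract_cifar_archive is a hypothetical helper, and the archive and destination paths are assumptions.

```python
import tarfile

def extract_cifar_archive(archive_path: str, dest_dir: str) -> list:
    """Unpack a .tar.gz archive (gzip and tar layers) into dest_dir; return member names."""
    with tarfile.open(archive_path, mode="r:gz") as tar:
        names = tar.getnames()
        # Newer Python versions offer extraction filters; "data" rejects unsafe members.
        kwargs = {"filter": "data"} if hasattr(tarfile, "data_filter") else {}
        tar.extractall(dest_dir, **kwargs)
    return names

# Usage (paths assumed):
# extract_cifar_archive("cifar-10-binary.tar.gz", "data/cifar10")
```

The CIFAR-10 binary archive unpacks into a cifar-10-batches-bin folder holding the batch files, which is the input convert_cifar_data expects.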
