Reference: CUDA C Programming Guide

From the runtime's perspective

The CUDA runtime wraps a lower-level C API, the CUDA driver API (the driver layer). An application can call the runtime API (declared in cuda_runtime_api.h), the driver API (declared in cuda.h), or both.

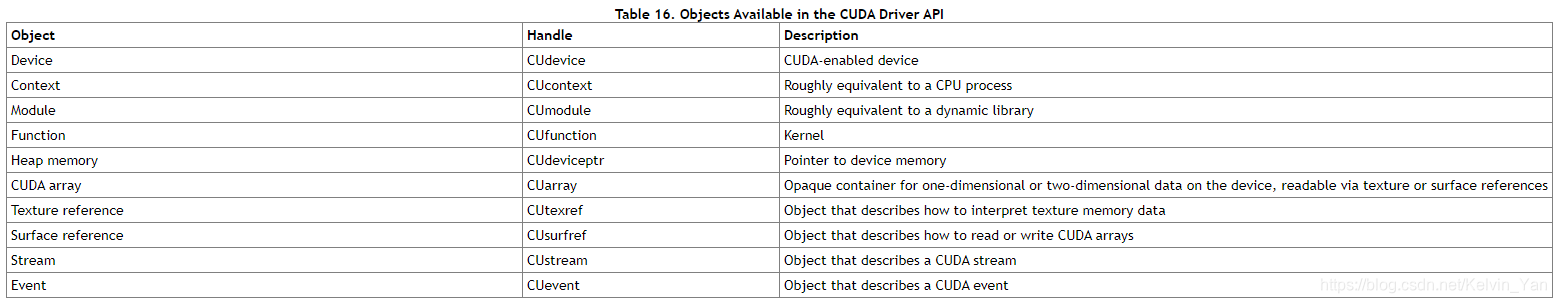

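Compared with the runtime API, the driver API exposes two additional objects:

(1) context: a context is to the device what a process is to the host (CPU)

(2) module: the device-side analogue of a dynamically loaded library

With the runtime API, both are managed implicitly; the user does not have to deal with them (and has no direct way to).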

From the driver API's perspective

Introduction to the driver API: https://docs.nvidia.com/cuda/cuda-c-programming-guide/index.html#driver-api

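Before a kernel can run, the following steps take place:

(1) cuInit() initializes the driver API; it must be called before any other driver API function

(2) cuCtxCreate() creates a context for the calling host thread, binds it to a device, and makes it the current context

(3) cuModuleLoad() loads the kernel into a module, as PTX or as a binary object, from host code; cuModuleGetFunction() then returns a handle to the kernel by name

(4) cuLaunchKernel() launches the kernel through that function handle

The key concept here is the context, which is covered in the Context section below.

The following example launches a kernel using only the driver API: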

    int main()
    {
        int N = ...;
        size_t size = N * sizeof(float);

        // Allocate input vectors h_A and h_B in host memory
        float* h_A = (float*)malloc(size);
        float* h_B = (float*)malloc(size);

        // Initialize input vectors
        ...

        // Initialize
        cuInit(0);

        // Get number of devices supporting CUDA
        int deviceCount = 0;
        cuDeviceGetCount(&deviceCount);
        if (deviceCount == 0) {
            printf("There is no device supporting CUDA.\n");
            exit(0);
        }

        // Get handle for device 0
        CUdevice cuDevice;
        cuDeviceGet(&cuDevice, 0);

        // Create context
        CUcontext cuContext;
        cuCtxCreate(&cuContext, 0, cuDevice);

        // Create module from binary file
        CUmodule cuModule;
        cuModuleLoad(&cuModule, "VecAdd.ptx");

        // Allocate vectors in device memory
        CUdeviceptr d_A;
        cuMemAlloc(&d_A, size);
        CUdeviceptr d_B;
        cuMemAlloc(&d_B, size);
        CUdeviceptr d_C;
        cuMemAlloc(&d_C, size);

        // Copy vectors from host memory to device memory
        cuMemcpyHtoD(d_A, h_A, size);
        cuMemcpyHtoD(d_B, h_B, size);

        // Get function handle from module
        CUfunction vecAdd;
        cuModuleGetFunction(&vecAdd, cuModule, "VecAdd");

        // Invoke kernel
        int threadsPerBlock = 256;
        int blocksPerGrid =
            (N + threadsPerBlock - 1) / threadsPerBlock;
        void* args[] = { &d_A, &d_B, &d_C, &N };
        cuLaunchKernel(vecAdd,
                       blocksPerGrid, 1, 1, threadsPerBlock, 1, 1,
                       0, 0, args, 0);

        ...
    }

Context

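Device context

A context is analogous to a CPU process. All resources and actions performed through the driver API are encapsulated in a context, and when a context is destroyed the resources it holds are released as well. Besides objects such as modules and texture or surface references, each context has its own address space, so CUdeviceptr values from different contexts refer to different device memory locations.

Some key points about contexts: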

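(1) Each CPU thread has a stack of contexts, and the context on top of the stack is the current context. cuCtxCreate() creates a new context and pushes it, so the new context becomes the top of the stack. cuCtxPopCurrent() removes the top context, so the previous context becomes current again.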

Each host thread has a stack of current contexts. cuCtxCreate() pushes the new context onto the top of the stack. cuCtxPopCurrent() may be called to detach the context from the host thread.

(2) A CPU thread has at most one current (that is, active) context at any given time

A host thread may have only one device context current at a time. When a context is created with cuCtxCreate(), it is made current to the calling host thread.

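(3) A kernel also belongs to a context: code running in context 1 cannot launch a kernel that belongs to context 2, and vice versa. A kernel belongs to the context that was current when its module was loaded.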

CUDA functions that operate in a context (most functions that do not involve device enumeration or context management) will return CUDA_ERROR_INVALID_CONTEXT if a valid context is not current to the thread.

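(4) A context is bound to exactly one device: cuCtxCreate() takes the device as an argument, so the binding is fixed at creation time

(5) Contexts have a usage count (reference count)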

A usage count is also maintained for each context. cuCtxCreate() creates a context with a usage count of 1. cuCtxAttach() increments the usage count and cuCtxDetach() decrements it. A context is destroyed when the usage count goes to 0 when calling cuCtxDetach() or cuCtxDestroy().

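cuCtxCreate() creates the context with a usage count of 1;

cuCtxAttach() and cuCtxDetach() are deprecated and should not be used;

cuCtxDestroy() destroys the context. In current CUDA versions it does so regardless of how many threads the context is current to, so the caller must make sure nothing is still using it.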

Primary context

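The runtime implicitly creates and initializes a context for a device the first time a runtime API call needs one. This context is the device's primary context, and it is shared by all host threads in the process that use the runtime API on that device. (If a context created through the driver API is already current on the calling thread, the runtime uses that context instead.) Initializing a context takes a noticeable amount of time.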

The runtime creates a CUDA context for each device in the system. This context is the primary context for this device and is initialized at the first runtime function which requires an active context on this device. It is shared among all the host threads of the application. As part of this context creation, the device code is just-in-time compiled if necessary (see Just-in-Time Compilation) and loaded into device memory. This all happens transparently.

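Calling cudaDeviceReset() destroys the primary context of the current device. The next runtime API call on that device then creates a new primary context, which means paying the context initialization cost again.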

When a host thread calls cudaDeviceReset(), this destroys the primary context of the device the host thread currently operates on (i.e., the current device as defined in Device Selection). The next runtime function call made by any host thread that has this device as current will create a new primary context for this device.

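When an application uses several libraries (static libraries or DLLs) that all call the CUDA runtime API, they share a single primary context per device. If one of them calls cudaDeviceReset(), the primary context is destroyed and the device resources allocated by the other libraries are released along with it. Those libraries will then fail when they keep running, most likely with device memory access errors.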

https://docs.nvidia.com/cuda/cuda-driver-api/driver-vs-runtime-api.html#driver-vs-runtime-api

Using the runtime API with primary contexts has its tradeoffs, however. It can cause trouble for users writing plug-ins for larger software packages, for example, because if all plug-ins run in the same process, they will all share a context but will likely have no way to communicate with each other. So, if one of them calls cudaDeviceReset() after finishing all its CUDA work, the other plug-ins will fail because the context they were using was destroyed without their knowledge. To avoid this issue, CUDA clients can use the driver API to create and set the current context, and then use the runtime API to work with it. However, contexts may consume significant resources, such as device memory, extra host threads, and performance costs of context switching on the device. This runtime-driver context sharing is important when using the driver API in conjunction with libraries built on the runtime API, such as cuBLAS or cuFFT.

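Do not call the runtime or driver API during program startup or shutdown (for example from static constructors or destructors that run before main() starts or after it returns), because the state of CUDA, including its contexts, is undefined at that point.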

Note: The CUDA interfaces use global state that is initialized during host program initiation and destroyed during host program termination. The CUDA runtime and driver cannot detect if this state is invalid, so using any of these interfaces (implicitly or explicitly) during program initiation or termination (after main) will result in undefined behavior.

Although the primary context is created implicitly by the runtime, the driver API can access it as well, through the following functions:

CUresult CUDAAPI cuDevicePrimaryCtxRelease(CUdevice dev); //Release the primary context on the GPU.

CUresult CUDAAPI cuDevicePrimaryCtxReset(CUdevice dev); //Destroy all allocations and reset all state on the primary context.

CUresult CUDAAPI cuDevicePrimaryCtxRetain(CUcontext *pctx, CUdevice dev); //Retain the primary context on the GPU.

CUresult CUDAAPI cuDevicePrimaryCtxSetFlags(CUdevice dev, unsigned int flags); //Set flags for the primary context.
