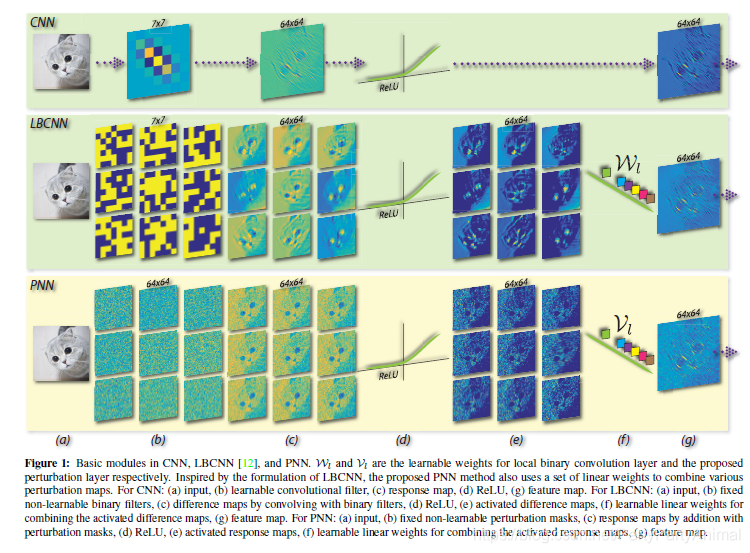

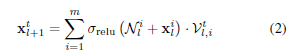

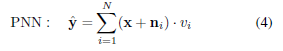

The perturbation layer does away with convolution in the traditional sense. Instead, it computes its response as a weighted linear combination of non-linearly activated, additive-noise-perturbed inputs.
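The sentence above can be written as a formula. The notation here is our own and may not match the paper's symbols in the formulation figures. Let $x_c$ be the input channel maps, and let $n_{c,m}$ be fixed noise masks, with $M$ masks per channel. Let $\sigma$ be a nonlinearity such as ReLU, and let $w_{o,c,m}$ be learnable weights. Under these assumptions, output channel $o$ at pixel $p$ would be:

```latex
y_o(p) = \sum_{c=1}^{C} \sum_{m=1}^{M} w_{o,c,m}\, \sigma\!\big( x_c(p) + n_{c,m}(p) \big)
```

Only the weights $w_{o,c,m}$ are learned. Each output pixel depends only on the input at the same pixel $p$, which is why the final combination step amounts to a 1 × 1 convolution.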

MobileNets [10] introduced an efficient reparameterization of standard 3 × 3 convolutional weights in terms of depth-wise convolutions and 1 × 1 convolutions.

While the proposed perturbation layer does offer computational benefits in terms of fewer parameters and more efficient inference, our aim in this paper is to motivate the need to rethink the premise and utility of a standard convolutional layer for image classification tasks.
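The parameter comparison above can be made concrete with a small calculation. The counts below are a sketch under stated assumptions: biases are ignored, and the perturbation layer is assumed to learn only its 1 × 1 combination weights, since its noise masks are fixed. The function names are hypothetical.

```python
def standard_conv_params(c_in, c_out, k=3):
    """Learnable weights of a k x k convolution (biases ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(c_in, c_out, k=3):
    """MobileNets-style factorization: one k x k depth-wise filter per input
    channel, followed by a 1 x 1 (point-wise) convolution."""
    return k * k * c_in + c_in * c_out


def perturbation_params(c_in, c_out, masks_per_channel=1):
    """Perturbation layer (assumed layout): the noise masks are fixed and not
    trained, so only the 1 x 1 combination weights count as parameters."""
    return c_in * masks_per_channel * c_out


if __name__ == "__main__":
    for name, count in [
        ("3x3 conv", standard_conv_params(64, 64)),
        ("depth-wise separable", depthwise_separable_params(64, 64)),
        ("perturbation, 1 mask/channel", perturbation_params(64, 64)),
    ]:
        print(f"{name}: {count}")
```

For 64 input and 64 output channels, this gives 36,864, 4,672 and 4,096 learnable weights. With M masks per channel, the perturbation layer learns 4,096 · M weights, which is still fewer than a 3 × 3 convolution whenever M < 9. The fixed masks still have to be stored.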


PNN formulation:

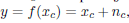

This is similar to:

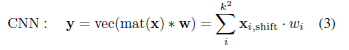

Relationship between PNN and CNN:

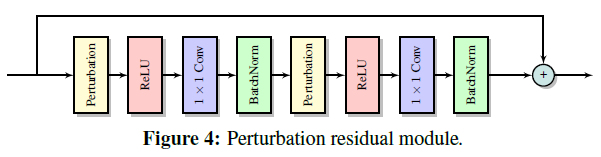

Convolution leverages two important ideas that can help improve a machine learning system: sparse interactions and parameter sharing.

PNN:

(1) Sparse interactions: only a single element of the input is needed to contribute to one element of the output perturbation map, and a 1 × 1 convolution provides the sparsest interactions possible. It is important to note that while a perturbation layer by itself has a receptive field of one pixel, the receptive field of a PNN would typically cover the entire image, given an appropriate size and number of pooling layers.

(2) Parameter sharing: parameter sharing is again carried out by the 1 × 1 convolution that linearly combines the various non-linearly activated perturbation masks.

(3) Multi-scale equivalent convolutions: adding different amounts of perturbation noise is equivalent to applying convolutions at different scales.

(4) Distance-preserving property:

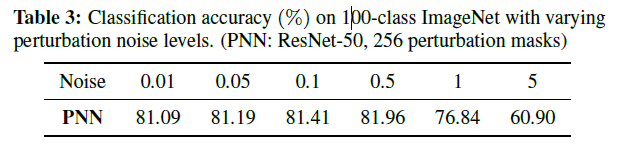
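Two of the properties listed above can be checked numerically. The layer stacks below are hypothetical.

For property (1), the first part computes receptive fields. It treats each perturbation layer as a kernel-1, stride-1 layer and assumes 2 × 2, stride-2 pooling. Under those assumptions, only the pooling layers grow the receptive field, doubling it each time. Eight of them already span 256 pixels.

The second part checks a simpler related fact. It is not the paper's statement or proof of property (4). Adding the same fixed noise mask to two inputs and then applying ReLU never increases the Euclidean distance between them. This shows only the upper half of a distance-preservation bound.

```python
import math
import random


def receptive_field(layers):
    """Receptive field (in input pixels, per side) of a stack of layers,
    each given as (kernel_size, stride)."""
    rf, jump = 1, 1
    for kernel, stride in layers:
        rf += (kernel - 1) * jump  # a k-wide window spans (k - 1) extra input steps
        jump *= stride             # input-pixel distance between adjacent outputs
    return rf


# Hypothetical stacks: a perturbation layer acts like a 1x1 layer (kernel 1, stride 1).
only_perturbation = [(1, 1)] * 10
perturbation_and_pooling = [(1, 1), (2, 2)] * 8  # 2x2 pooling with stride 2 after each


def perturb_relu(x, noise):
    """Add a fixed noise mask, then apply ReLU (one pixel per entry)."""
    return [max(v + n, 0.0) for v, n in zip(x, noise)]


def l2(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(a, b)))


def worst_distance_ratio(dim=64, trials=200, level=0.5, seed=0):
    """Largest observed ||f(x) - f(y)|| / ||x - y|| for f = ReLU(. + noise),
    with the same noise mask applied to both inputs."""
    rng = random.Random(seed)
    noise = [rng.uniform(-level, level) for _ in range(dim)]
    worst = 0.0
    for _ in range(trials):
        x = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        y = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        ratio = l2(perturb_relu(x, noise), perturb_relu(y, noise)) / l2(x, y)
        worst = max(worst, ratio)
    return worst


print(receptive_field(only_perturbation))         # 1
print(receptive_field(perturbation_and_pooling))  # 256
print(worst_distance_ratio() <= 1.0 + 1e-12)
```

The ratio stays at or below 1 because the shared noise cancels in the difference and ReLU is 1-Lipschitz. Real networks such as ResNets also typically end with global average pooling, which covers the whole feature map regardless of the earlier layers.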

Since the uniform distribution provides better control over the energy level of the noise, our main experiments are carried out using uniformly distributed noise in the perturbation masks.
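The snippet below illustrates one way to read the point about energy control. It is our illustration, not the paper's analysis. Uniform noise on [-a, a] has mean energy a²/3 and a hard cap of a on every sample. A Gaussian with the same variance has the same average energy but no cap. The level 0.1 is an arbitrary choice.

```python
import random


def energy_and_peak(samples):
    """Mean energy (mean of squares) and largest magnitude of noise samples."""
    energy = sum(s * s for s in samples) / len(samples)
    peak = max(abs(s) for s in samples)
    return energy, peak


def compare_noise(level=0.1, n=100_000, seed=0):
    rng = random.Random(seed)
    # Uniform on [-level, level]: variance level**2 / 3, magnitude never above level.
    uniform = [rng.uniform(-level, level) for _ in range(n)]
    # Gaussian with the same variance: same average energy, but unbounded tails.
    sigma = level / 3 ** 0.5
    gaussian = [rng.gauss(0.0, sigma) for _ in range(n)]
    return energy_and_peak(uniform), energy_and_peak(gaussian)


(u_energy, u_peak), (g_energy, g_peak) = compare_noise()
print(f"uniform : energy={u_energy:.5f} peak={u_peak:.3f}")
print(f"gaussian: energy={g_energy:.5f} peak={g_peak:.3f}")
```

Both energies come out close to 0.1²/3 ≈ 0.00333. Only the uniform samples are guaranteed to stay within ±0.1.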


Experiments:

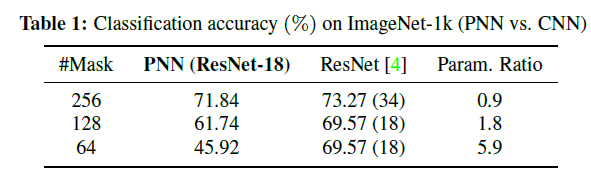

(1) ImageNet-1k

Training: All images are first resized so that the long edge is 256 pixels. A 224 × 224 crop is then randomly sampled from the image or its horizontal flip, and the per-pixel mean is subtracted.
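The augmentation steps above can be sketched on plain Python lists. The sketch handles a single channel for brevity. It assumes a per-pixel mean image of crop size, precomputed over the training set. All function names are hypothetical.

Resizing is only computed as a target size here. Note that, as written, scaling the long edge to 256 leaves a 4:3 image at 192 × 256, which is too small for a 224 × 224 crop. Many ResNet-style pipelines scale the short edge instead, so the sketch makes the edge configurable and raises an error when the crop does not fit.

```python
import random


def resized_size(h, w, target=256, edge="long"):
    """Output (height, width) after scaling so the chosen edge equals `target`."""
    ref = max(h, w) if edge == "long" else min(h, w)
    scale = target / ref
    return round(h * scale), round(w * scale)


def random_crop_flip_normalize(img, mean, size=224, rng=random):
    """Random size x size crop, random horizontal flip, per-pixel mean subtraction.

    img:  resized single-channel image as a list of rows ([H][W]); real images
          would repeat this per channel.
    mean: per-pixel mean image of shape [size][size] (assumed precomputed
          over the training set).
    """
    h, w = len(img), len(img[0])
    if h < size or w < size:
        raise ValueError(f"image {h}x{w} is smaller than the {size}x{size} crop")
    top = rng.randint(0, h - size)    # randint is inclusive on both ends
    left = rng.randint(0, w - size)
    crop = [row[left:left + size] for row in img[top:top + size]]
    if rng.random() < 0.5:            # horizontal flip with probability 1/2
        crop = [row[::-1] for row in crop]
    return [[p - m for p, m in zip(row, mrow)] for row, mrow in zip(crop, mean)]


print(resized_size(480, 640))                # (192, 256): long edge -> 256
print(resized_size(480, 640, edge="short"))  # (256, 341): short edge -> 256
```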

Each standard convolutional layer in a residual unit is replaced by the proposed perturbation layer.
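The replacement described above can be sketched as follows. This is a minimal illustration, not the paper's implementation. The design choices are assumptions:

- Pixels are flattened to a list, since the layer never mixes pixels.
- There is one uniform noise mask per input channel and copy.
- Batch normalization is omitted.
- The identity shortcut requires equal input and output channel counts.

`PerturbationLayer` and `residual_unit` are hypothetical names.

```python
import random


def relu(v):
    return max(v, 0.0)


class PerturbationLayer:
    """Hypothetical pixel-wise perturbation layer on x[C][N], where N = H*W
    pixels have been flattened (the layer never mixes pixels, so the
    spatial layout does not matter here)."""

    def __init__(self, c_in, c_out, n_pixels, masks_per_channel=1, level=0.1, seed=0):
        rng = random.Random(seed)
        self.m = masks_per_channel
        # Fixed uniform noise masks, one per (input channel, copy); never trained.
        self.noise = [[rng.uniform(-level, level) for _ in range(n_pixels)]
                      for _ in range(c_in * masks_per_channel)]
        # Learnable 1x1 weights: one row per output channel.
        self.weights = [[rng.gauss(0.0, 0.1) for _ in range(c_in * masks_per_channel)]
                        for _ in range(c_out)]

    def __call__(self, x):
        # Perturb input channel k // m with mask k, then apply ReLU.
        maps = [[relu(v + n) for v, n in zip(x[k // self.m], self.noise[k])]
                for k in range(len(self.noise))]
        n_pixels = len(maps[0])
        # 1x1 convolution: per-pixel weighted sum over all perturbed maps.
        return [[sum(w * mp[p] for w, mp in zip(row, maps)) for p in range(n_pixels)]
                for row in self.weights]


def residual_unit(x, first, second):
    """Basic residual unit with both 3x3 convolutions swapped for perturbation
    layers; batch normalization is omitted and the shortcut is the identity."""
    h = [[relu(v) for v in ch] for ch in first(x)]
    h = second(h)
    return [[relu(a + b) for a, b in zip(xc, hc)] for xc, hc in zip(x, h)]


x = [[1.0, -1.0, 2.0], [0.5, 0.0, -3.0]]  # 2 channels, 3 flattened pixels
y = residual_unit(x, PerturbationLayer(2, 2, 3, seed=0), PerturbationLayer(2, 2, 3, seed=1))
print(y)
```

If the second layer's weights are all zero, the unit reduces to `relu(x)`. This is the usual identity behavior of a residual branch that contributes nothing.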

(Why are no results reported for PNN in deeper networks? Is PNN suitable for deep networks?)

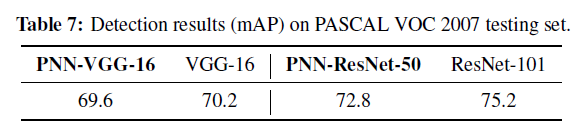

Our findings suggest that perhaps high-performance deep neural networks for image classification and detection can be designed without convolutional layers.

