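I. Introduction

MooseFS (Moose File System, MFS for short) is a fault-tolerant, networked distributed file system. It spreads data across multiple physical servers, or across separate disks or partitions, and keeps several replicas of each piece of data. To clients and users, the whole cluster looks like a single resource. Like a regular UNIX file system (compare ext3, ext4 or NFS), MooseFS presents a hierarchical directory tree.

MFS stores POSIX file attributes (permissions, last access and modification times) and supports special files: block devices, character devices, pipes, sockets and links (symbolic and hard links).

MFS clients mount the file system through FUSE (Filesystem in Userspace), so once mounted, MooseFS can be used like any ordinary UNIX file system.

MFS can replicate files automatically, which improves availability and scalability. By contrast, MogileFS does not support random or sequential reads and writes inside a file, so it only suits a subset of applications such as image serving, static HTML serving and file servers. Those applications rarely modify a file after writing it, although they can generate a new file to overwrite the old one.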

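II. MooseFS features

- Free software (GPL).

- General-purpose file system: upper-layer applications can use it without modification.

- Capacity can be expanded online; the architecture scales very well.

- Simple to deploy.

- Highly available, with a configurable redundancy level per file (higher redundancy than RAID 1+0 is possible, reportedly without hurting read or write performance).

- Deleted files can be recovered within a configurable time window (a system-level "recycle bin" that protects against accidental deletion, similar in spirit to the flashback feature of Oracle and other advanced DBMSs).

- Snapshots comparable to those of commercial storage from NetApp, EMC and IBM (a snapshot can be taken of a whole file, even one that is currently being written).

- A C implementation of the Google File System design.

- Web GUI for monitoring.

- Improved efficiency for random reads and writes.

- Improved read/write efficiency for huge numbers of small files.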

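III. Advantages of MooseFS

1) Simple to deploy, lightweight, easy to configure and maintain.

2) Easy to scale: capacity can be added online without affecting the business, and the architecture scales very well (the official case study grew to 70 servers).

3) General-purpose file system: upper-layer applications need no changes (much more convenient than a DFS that requires a dedicated API).

4) Data is presented as a file system. For example, stored images are kept as binary chunk files on the chunkservers, but on a client that mounts MFS they still appear as image files, which makes backups easy.

5) High disk utilization. Testing requires a fairly large amount of disk space.

6) The retention time for deleted data is configurable, so an accidentally deleted file can be restored before its loss affects the business.

7) Load is distributed: reads and writes are spread across all servers.

8) The number of replicas per file is configurable. Three copies are generally recommended, which means the raw disk capacity must be three times the size of a single copy.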

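IV. Possible bottlenecks

- The performance of the master itself. The master is also a single point of failure. One answer is moosefs + DRBD + heartbeat, but in practice shutdowns and intermittent network interruptions can never be ruled out completely.

- A foreseeable upper limit on the total number of files. MFS caches the file system structure in the master's memory, so the more files there are, the more memory the master consumes: 8 GB corresponds to about 25 million files, so 200 million files need about 64 GB. The master's CPU load depends on the number of operations; its memory usage depends on the number of files and directories.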
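The memory figures above can be turned into a rough sizing rule. The sketch below simply extrapolates the quoted ratio (8 GB per 25 million files) linearly; the function name `estimate_master_ram_gb` is made up for illustration, and real memory usage will vary with the MooseFS version and the shape of the directory tree.

```shell
#!/bin/bash
# estimate_master_ram_gb FILE_COUNT
# Hypothetical helper: linear extrapolation of the ratio quoted in the text
# (8 GB of master RAM per 25 million files), rounded up to a whole GB.
estimate_master_ram_gb() {
    local files=$1
    local files_per_8gb=25000000
    echo $(( (files * 8 + files_per_8gb - 1) / files_per_8gb ))
}
```

For example, `estimate_master_ram_gb 200000000` reproduces the 64 GB figure quoted for 200 million files.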

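V. MFS file system structure

There are four roles:

- Managing server (master)

- Metadata log server (metalogger)

- Data storage servers (chunkservers)

- Client computers that mount the file system

What each role does:

- Master: manages the chunkservers, schedules file reads and writes, reclaims and restores file space, and coordinates replication between nodes.

- Metalogger: backs up the master's change logs (files named changelog_ml.*.mfs) so that it can take over if the master server fails.

- Chunkserver: connects to the master, follows its scheduling, provides storage space and transfers data to and from clients.

- Client: mounts the storage managed by the remote master through the FUSE kernel interface, so the shared file system behaves just like a local UNIX file system.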

VI. MFS deployment

1. Host environment: RHEL 6.5

| ip | hostname | Role |
|---|---|---|
| 172.25.27.2 | server2 | MFS Master |
| 172.25.27.3 | server3 | MFS Metalogger |
| 172.25.27.4 | server4 | MFS Chunkserver1 |
| 172.25.27.5 | server5 | MFS Chunkserver2 |
| 172.25.27.6 | server6 | MFS Client |

2. Download the software and build RPM packages

Download page: https://moosefs.com/index.html

Building RPMs makes deployment easier:

[root@server2 ~]# yum install gcc make rpm-build fuse-devel zlib-devel -y

[root@server2 ~]# wget http://mirror.centos.org/centos/6/os/x86_64/Packages/libpcap-1.4.0-4.20130826git2dbcaa1.el6.x86_64.rpm

[root@server2 ~]# wget http://mirror.centos.org/centos/6/os/x86_64/Packages/libpcap-devel-1.4.0-4.20130826git2dbcaa1.el6.x86_64.rpm

[root@server2 ~]# yum install -y libpcap-1.4.0-4.20130826git2dbcaa1.el6.x86_64.rpm libpcap-devel-1.4.0-4.20130826git2dbcaa1.el6.x86_64.rpm

[root@server2 ~]# rpmbuild -tb moosefs-3.0.80.tar.gz

[root@server2 ~]# cd /root/rpmbuild/RPMS/x86_64/

[root@server2 x86_64]# ls

moosefs-cgi-3.0.80-1.x86_64.rpm moosefs-client-3.0.80-1.x86_64.rpm

moosefs-cgiserv-3.0.80-1.x86_64.rpm moosefs-master-3.0.80-1.x86_64.rpm

moosefs-chunkserver-3.0.80-1.x86_64.rpm moosefs-metalogger-3.0.80-1.x86_64.rpm

moosefs-cli-3.0.80-1.x86_64.rpm moosefs-netdump-3.0.80-1.x86_64.rpm

3. Installing the master server

[root@server2 x86_64]# yum install -y moosefs-master-3.0.80-1.x86_64.rpm moosefs-cgi-3.0.80-1.x86_64.rpm moosefs-cgiserv-3.0.80-1.x86_64.rpm

[root@server2 ~]# vim /etc/hosts

172.25.27.2 mfsmaster server2

[root@server2 x86_64]# cat /etc/mfs/mfsmaster.cfg ## main configuration file

[root@server2 ~]# mfsmaster start ## start the master server

open files limit has been set to: 16384

working directory: /var/lib/mfs

lockfile created and locked

initializing mfsmaster modules ...

exports file has been loaded

topology file has been loaded

loading metadata ...

metadata file has been loaded

no charts data file - initializing empty charts

master <-> metaloggers module: listen on *:9419

master <-> chunkservers module: listen on *:9420

main master server module: listen on *:9421

mfsmaster daemon initialized properly

[root@server2 ~]# ls -a /var/lib/mfs

. .. changelog.0.mfs metadata.mfs.back metadata.mfs.empty .mfsmaster.lock

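/var/lib/mfs now contains the data that MooseFS generates:

.mfsmaster.lock records the main process of the running mfsmaster

metadata.mfs and metadata.mfs.back are images of the MooseFS file system metadata

changelog.*.mfs are the metadata change logs (merged into metadata.mfs once an hour)

The size of the metadata file depends on the number of files, not on their sizes. The size of the changelog depends on the number of operations per hour; the merge interval (one hour by default) is configurable.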

[root@server2 x86_64]# mfscgiserv ## start the CGI monitoring service

lockfile created and locked

starting simple cgi server (host: any , port: 9425 , rootpath: /usr/share/mfscgi)

[root@server2 x86_64]# chmod +x /usr/share/mfscgi/mfs.cgi /usr/share/mfscgi/chart.cgi

[root@server2 x86_64]# mfscgiserv

sending SIGTERM to lock owner (pid:7735)

lockfile created and locked

starting simple cgi server (host: any , port: 9425 , rootpath: /usr/share/mfscgi)

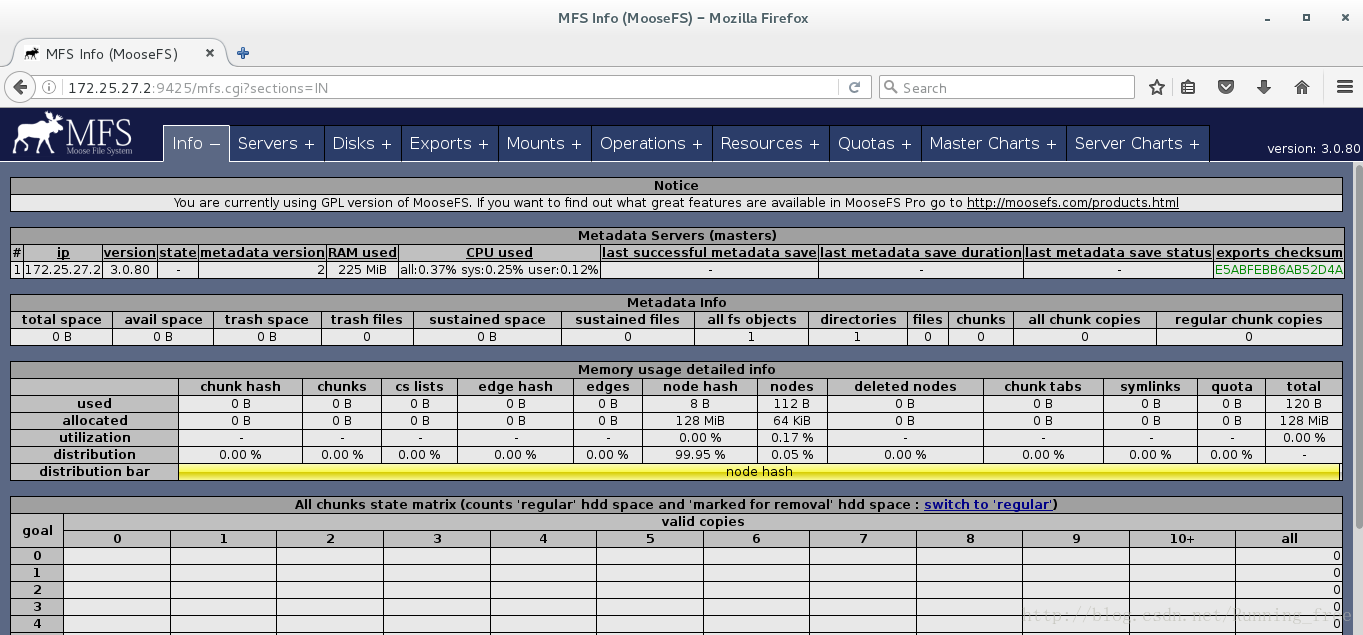

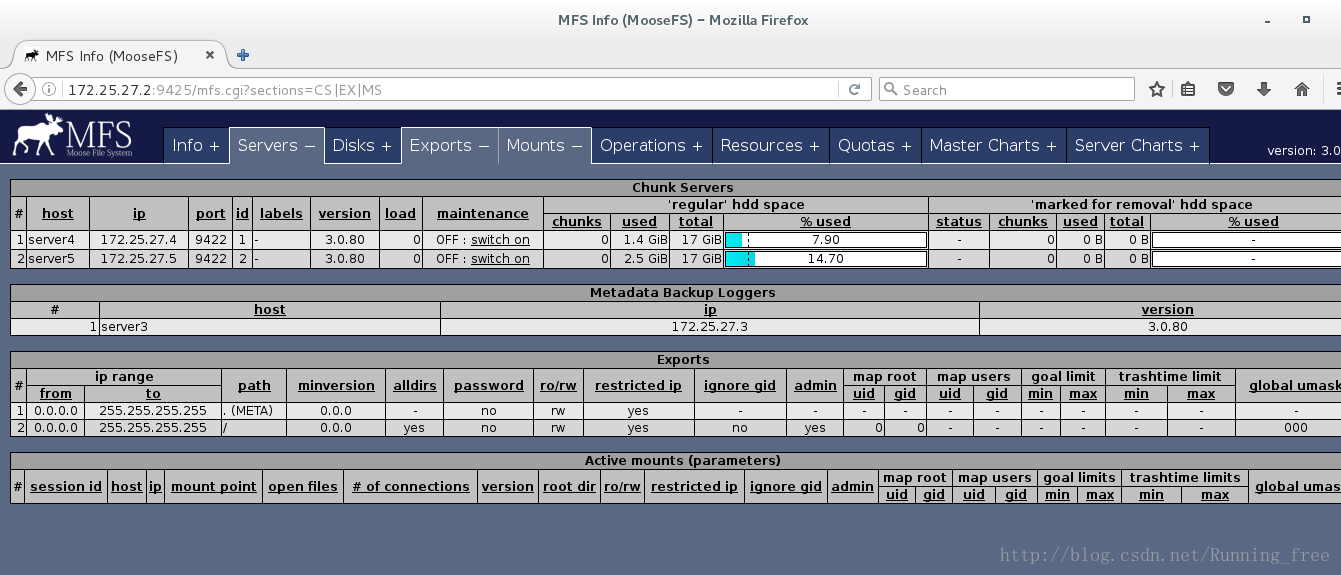

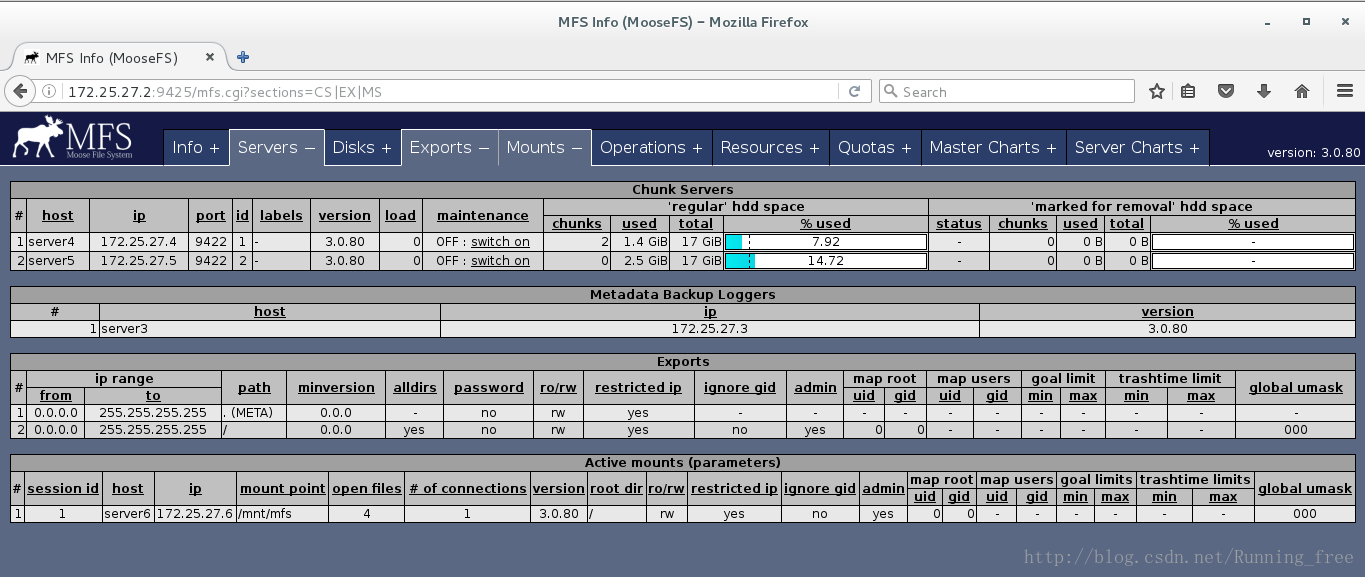

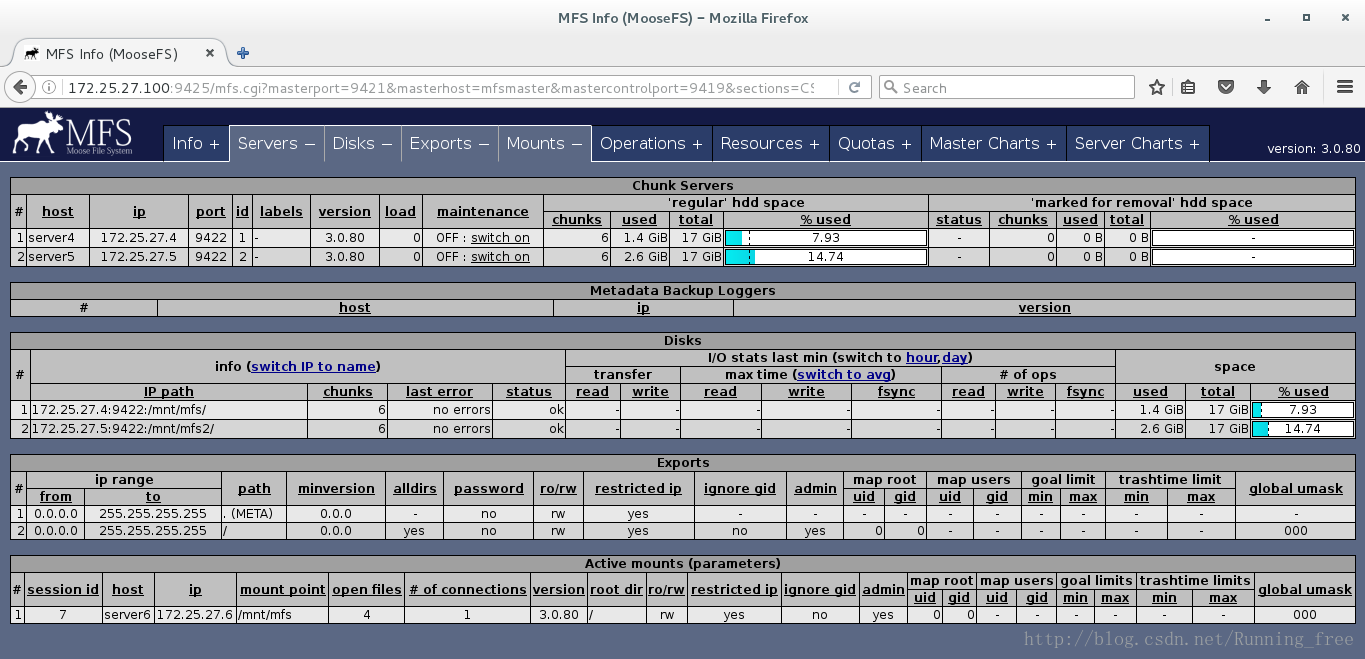

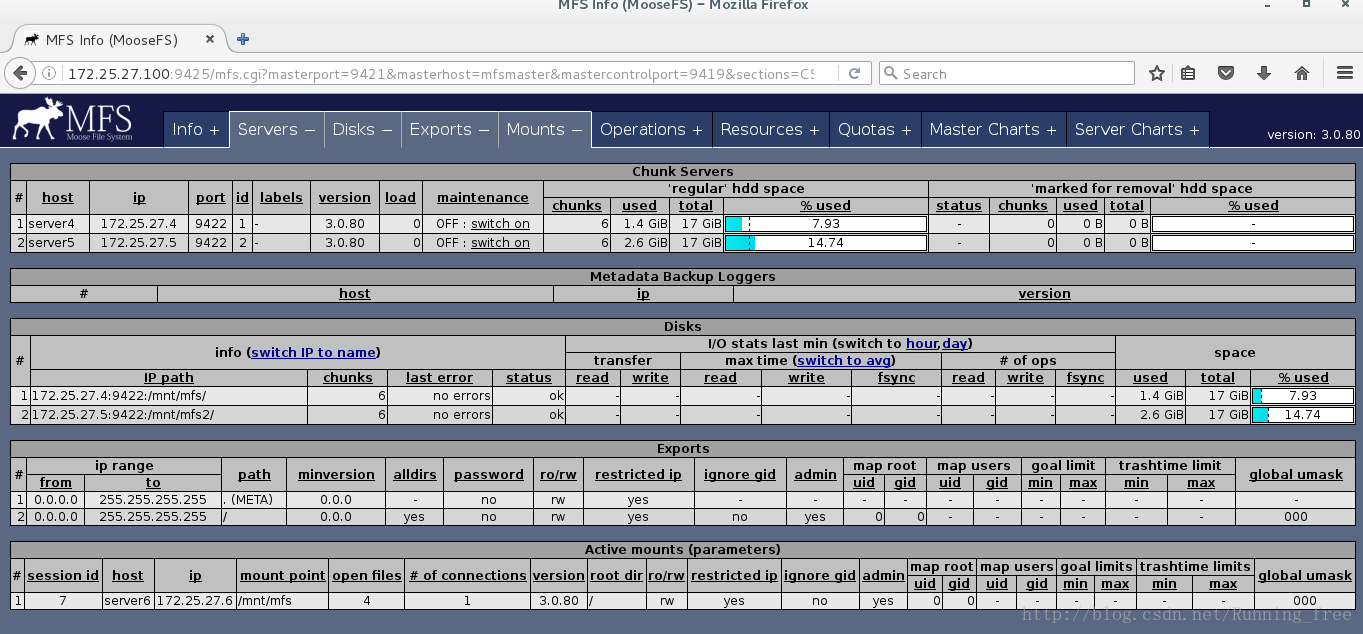

Open http://172.25.27.2:9425 in a browser to check the state of the master.
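The startup messages in this walkthrough mention several listening ports. As a quick reference, for example when writing firewall rules, they can be collected in one place. This is only a sketch: the port numbers are the ones printed by the daemons in this article (the chunkserver prints 9422 when it starts), while the function name `mfs_port` and the role labels are invented for illustration.

```shell
#!/bin/bash
# mfs_port ROLE
# Hypothetical lookup of the default ports that appear in the daemon
# startup messages in this article. Role names are made up for this sketch.
mfs_port() {
    case "$1" in
        metalogger)  echo 9419 ;;  # master <-> metaloggers module
        chunkserver) echo 9420 ;;  # master <-> chunkservers module
        client)      echo 9421 ;;  # main master server module (used by mfsmount)
        chunkdata)   echo 9422 ;;  # chunkserver "main server module"
        cgi)         echo 9425 ;;  # mfscgiserv web interface
        *)           return 1 ;;   # unknown role
    esac
}
```

With this in place, `mfs_port cgi` prints the port used in the URL above.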

4. Installing the metalogger server

[root@server2 x86_64]# scp moosefs-metalogger-3.0.80-1.x86_64.rpm server3: ## copy the RPM built earlier to the metalogger server

[root@server3 ~]# yum install -y moosefs-metalogger-3.0.80-1.x86_64.rpm

[root@server3 ~]# cat /etc/mfs/mfsmetalogger.cfg ## main configuration file

[root@server3 ~]# vim /etc/hosts

172.25.27.2 mfsmaster server2

[root@server3 ~]# mfsmetalogger start

open files limit has been set to: 4096

working directory: /var/lib/mfs

lockfile created and locked

initializing mfsmetalogger modules ...

mfsmetalogger daemon initialized properly

[root@server3 ~]# ll /var/lib/mfs ## the metadata copied from the master appears in /var/lib/mfs

total 8

-rw-r----- 1 mfs mfs 45 Oct 22 09:31 changelog_ml_back.0.mfs

-rw-r----- 1 mfs mfs 0 Oct 22 09:31 changelog_ml_back.1.mfs

-rw-r----- 1 mfs mfs 8 Oct 22 09:31 metadata_ml.tmp

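The metalogger cannot take over from the master seamlessly. In production, an HA setup is used to remove the master as a single point of failure; a later section shows how.

5. Installing the chunkservers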

[root@server2 x86_64]# scp moosefs-chunkserver-3.0.80-1.x86_64.rpm server4:

[root@server2 x86_64]# scp moosefs-chunkserver-3.0.80-1.x86_64.rpm server5:

[root@server4 ~]# yum install -y moosefs-chunkserver-3.0.80-1.x86_64.rpm

[root@server4 ~]# vim /etc/hosts

172.25.27.2 mfsmaster server2

[root@server4 ~]# mkdir /mnt/mfsdata1 ## the directory that actually holds the data (any name will do); it stores the chunk files

[root@server4 ~]# chown mfs.mfs /mnt/mfsdata1/

[root@server4 ~]# echo '/mnt/mfsdata1' >> /etc/mfs/mfshdd.cfg ## register the directory as MFS storage

[root@server4 ~]# mfschunkserver start ## start the chunkserver

open files limit has been set to: 16384

working directory: /var/lib/mfs

lockfile created and locked

setting glibc malloc arena max to 8

setting glibc malloc arena test to 1

initializing mfschunkserver modules ...

hdd space manager: path to scan: /mnt/mfsdata1/

hdd space manager: start background hdd scanning (searching for available chunks)

main server module: listen on *:9422

no charts data file - initializing empty charts

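mfschunkserver daemon initialized properly

Repeat exactly the same steps on the other chunkserver (not shown here).

After startup, open http://172.25.27.2:9425 in a browser again to see the full state of the MooseFS system, including the master and the chunkservers.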
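Because the chunkserver steps are repeated on every storage node, it can help to make the mfshdd.cfg edit idempotent, so that re-running the setup does not register the same directory twice. A minimal sketch, assuming the file holds one storage path per line as in the `echo ... >>` step above; `add_hdd_path` is a hypothetical helper, not a MooseFS tool.

```shell
#!/bin/bash
# add_hdd_path CFG_FILE STORAGE_DIR
# Hypothetical helper: append STORAGE_DIR to the config file only if no
# line in the file is exactly that path already.
add_hdd_path() {
    local cfg=$1 path=$2
    # -q quiet, -x match the whole line, -F treat the path as a fixed string
    grep -qxF -- "$path" "$cfg" 2>/dev/null || echo "$path" >> "$cfg"
}

# Example (run as root on a chunkserver):
#   add_hdd_path /etc/mfs/mfshdd.cfg /mnt/mfsdata1
```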

6. Installing the client

[root@server2 x86_64]# scp moosefs-client-3.0.80-1.x86_64.rpm server6:

[root@server6 ~]# yum install -y moosefs-client-3.0.80-1.x86_64.rpm

[root@server6 ~]# vim /etc/hosts

172.25.27.2 mfsmaster server2

[root@server6 ~]# vim /etc/mfs/mfsmount.cfg

mfsmaster=mfsmaster

/mnt/mfs ## default mount point for the client

[root@server6 ~]# mfsmount ## or: mfsmount /mnt/mfs

mfsmaster accepted connection with parameters: read-write,restricted_ip,admin ; root mapped to root:root

[root@server6 ~]# df -h

...

mfsmaster:9421 35G 4.0G 31G 12% /mnt/mfs

7. Testing MFS

- Creating files and directories

[root@server6 mfs]# mkdir dir{1..5}

[root@server6 mfs]# ls

dir1 dir2 dir3 dir4 dir5

[root@server6 mfs]# mfsdirinfo dir1

dir1:

inodes: 1

directories: 1

files: 0

chunks: 0

length: 0

size: 0

realsize: 0

[root@server6 mfs]# cp /etc/passwd .

[root@server6 mfs]# mfsfileinfo passwd

passwd:

chunk 0: 0000000000000001_00000001 / (id:1 ver:1)

copy 1: 172.25.27.4:9422 (status:VALID)

copy 2: 172.25.27.5:9422 (status:VALID)

[root@server6 mfs]# mfssetgoal -r 1 passwd ## store one copy of passwd; the default is two

passwd:

inodes with goal changed: 1

inodes with goal not changed: 0

inodes with permission denied: 0

[root@server6 mfs]# mfsfileinfo passwd

passwd:

chunk 0: 0000000000000001_00000001 / (id:1 ver:1)

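copy 1: 172.25.27.4:9422 (status:VALID)

When a goal is set on a directory, files and subdirectories created in it afterwards inherit the setting, but the number of copies of existing files and directories does not change. The -r option applies the new goal to existing content as well.

- Copying the same file into two directories with different goals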

[root@server6 mfs]# mfssetgoal -r 1 dir4/

dir4/:

inodes with goal changed: 1

inodes with goal not changed: 0

inodes with permission denied: 0

[root@server6 mfs]# cp /etc/passwd dir4/

[root@server6 mfs]# cp /etc/passwd dir5/

[root@server6 mfs]# mfsfileinfo dir4/passwd

dir4/passwd:

chunk 0: 0000000000000005_00000001 / (id:5 ver:1)

copy 1: 172.25.27.5:9422 (status:VALID)

[root@server6 mfs]# mfsfileinfo dir5/passwd

dir5/passwd:

chunk 0: 0000000000000006_00000001 / (id:6 ver:1)

copy 1: 172.25.27.4:9422 (status:VALID)

copy 2: 172.25.27.5:9422 (status:VALID)

Stop chunkserver 2 (server5), then check the file information again:

[root@server5 ~]# mfschunkserver stop

[root@server6 mfs]# mfsfileinfo dir4/passwd

dir4/passwd:

chunk 0: 0000000000000005_00000001 / (id:5 ver:1)

no valid copies !!!

[root@server6 mfs]# mfsfileinfo dir5/passwd

dir5/passwd:

chunk 0: 0000000000000006_00000001 / (id:6 ver:1)

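copy 1: 172.25.27.4:9422 (status:VALID)

The file stored as a single copy is unavailable, while the file with two copies can still be used. Once chunkserver 2 is started again, the file returns to normal.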
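The check performed by eye above can also be scripted. The sketch below reads `mfsfileinfo` output on standard input and prints the smallest number of VALID copies found for any chunk, so a result of 0 means at least one chunk is currently unreadable. It assumes the output layout shown in this article (a `chunk N:` line followed by `copy` lines); `min_valid_copies` is a made-up name.

```shell
#!/bin/bash
# min_valid_copies
# Hypothetical helper: reads mfsfileinfo output on stdin and prints the
# lowest count of "status:VALID" copies over all chunks (0 if no chunk
# lines are seen at all).
min_valid_copies() {
    awk '
        # Fold the count of the chunk just finished into the running minimum
        function close_chunk() {
            if (in_chunk && (!have_min || n < min)) { min = n; have_min = 1 }
        }
        /^[[:space:]]*chunk [0-9]+:/ { close_chunk(); in_chunk = 1; n = 0; next }
        /status:VALID/               { n++ }
        END { close_chunk(); print (have_min ? min : 0) }
    '
}

# Example:
#   mfsfileinfo dir4/passwd | min_valid_copies
```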

- Large file test

[root@server6 mfs]# dd if=/dev/zero of=/mnt/mfs/dir5/bigfile bs=1M count=500

500+0 records in

500+0 records out

524288000 bytes (524 MB) copied, 4.13419 s, 127 MB/s
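The file written by dd is larger than a single chunk. MooseFS splits files into chunks of at most 64 MiB, so the expected number of chunks is the file size divided by 64 MiB, rounded up. A small sketch of that arithmetic (the function name `chunk_count` is invented for illustration):

```shell
#!/bin/bash
# chunk_count SIZE_IN_BYTES
# Hypothetical helper: number of MooseFS chunks needed for a file of the
# given size, using the 64 MiB maximum chunk size and rounding up.
chunk_count() {
    local size_bytes=$1
    local chunk_bytes=$((64 * 1024 * 1024))
    echo $(( (size_bytes + chunk_bytes - 1) / chunk_bytes ))
}
```

For the 524288000 bytes written here, `chunk_count 524288000` gives 8, which matches chunk 0 to chunk 7 in the mfsfileinfo listing for bigfile.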

[root@server6 mfs]# mfsfileinfo dir5/bigfile

dir5/bigfile:

chunk 0: 000000000000000F_00000001 / (id:15 ver:1)

copy 1: 172.25.27.4:9422 (status:VALID)

copy 2: 172.25.27.5:9422 (status:VALID)

chunk 1: 0000000000000010_00000001 / (id:16 ver:1)

copy 1: 172.25.27.4:9422 (status:VALID)

copy 2: 172.25.27.5:9422 (status:VALID)

chunk 2: 0000000000000011_00000001 / (id:17 ver:1)

copy 1: 172.25.27.4:9422 (status:VALID)

copy 2: 172.25.27.5:9422 (status:VALID)

chunk 3: 0000000000000012_00000001 / (id:18 ver:1)

copy 1: 172.25.27.4:9422 (status:VALID)

copy 2: 172.25.27.5:9422 (status:VALID)

chunk 4: 0000000000000013_00000001 / (id:19 ver:1)

copy 1: 172.25.27.4:9422 (status:VALID)

copy 2: 172.25.27.5:9422 (status:VALID)

chunk 5: 0000000000000014_00000001 / (id:20 ver:1)

copy 1: 172.25.27.4:9422 (status:VALID)

copy 2: 172.25.27.5:9422 (status:VALID)

chunk 6: 0000000000000015_00000001 / (id:21 ver:1)

copy 1: 172.25.27.4:9422 (status:VALID)

copy 2: 172.25.27.5:9422 (status:VALID)

chunk 7: 0000000000000016_00000001 / (id:22 ver:1)

copy 1: 172.25.27.4:9422 (status:VALID)

copy 2: 172.25.27.5:9422 (status:VALID)

VII. MFS + Keepalived two-node high-availability hot standby

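1. Design considerations

MFS has a single point of failure and relies on manual backup. To improve availability, it can be combined with Keepalived. Building on the setup above, only the following changes are needed:

- Make the master server the Keepalived MASTER (running mfsmaster and mfscgiserv)

- Make the metalogger the Keepalived BACKUP (running mfsmaster and mfscgiserv)

- Change the MASTER_HOST parameter in the chunkserver configuration to the VIP address

- Change the master IP address that the client mounts to the VIP address

After these adjustments, the hosts entries on the Keepalived MASTER and BACKUP must be updated as well.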
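In this walkthrough the hosts change is made by hand with vim on each machine. As a rough sketch of the same edit in script form, the helper below removes the `mfsmaster` alias from any active line of a hosts file and appends a single entry that points `mfsmaster` at the VIP. The name `point_mfsmaster_to_vip` is hypothetical, and the function assumes plain `IP name [alias...]` lines without trailing comments.

```shell
#!/bin/bash
# point_mfsmaster_to_vip HOSTS_FILE VIP
# Hypothetical helper: make "mfsmaster" resolve to VIP in HOSTS_FILE.
# Commented-out lines are kept untouched; running it twice gives the same file.
point_mfsmaster_to_vip() {
    local hosts_file=$1 vip=$2 tmp
    tmp=$(mktemp) || return 1
    awk -v vip="$vip" '
        /^[[:space:]]*#/ { print; next }      # keep comment lines as they are
        {
            out = $1; kept = 0
            for (i = 2; i <= NF; i++)
                if ($i != "mfsmaster") { out = out " " $i; kept++ }
            # Drop a line whose only name was mfsmaster; keep everything else
            if (kept > 0 || NF < 2) print out
        }
        END { print vip " mfsmaster" }
    ' "$hosts_file" > "$tmp" || { rm -f "$tmp"; return 1; }
    # Overwrite in place so the owner and mode of the hosts file are preserved
    cat "$tmp" > "$hosts_file"
    rm -f "$tmp"
}

# Example (as root): point_mfsmaster_to_vip /etc/hosts 172.25.27.100
```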

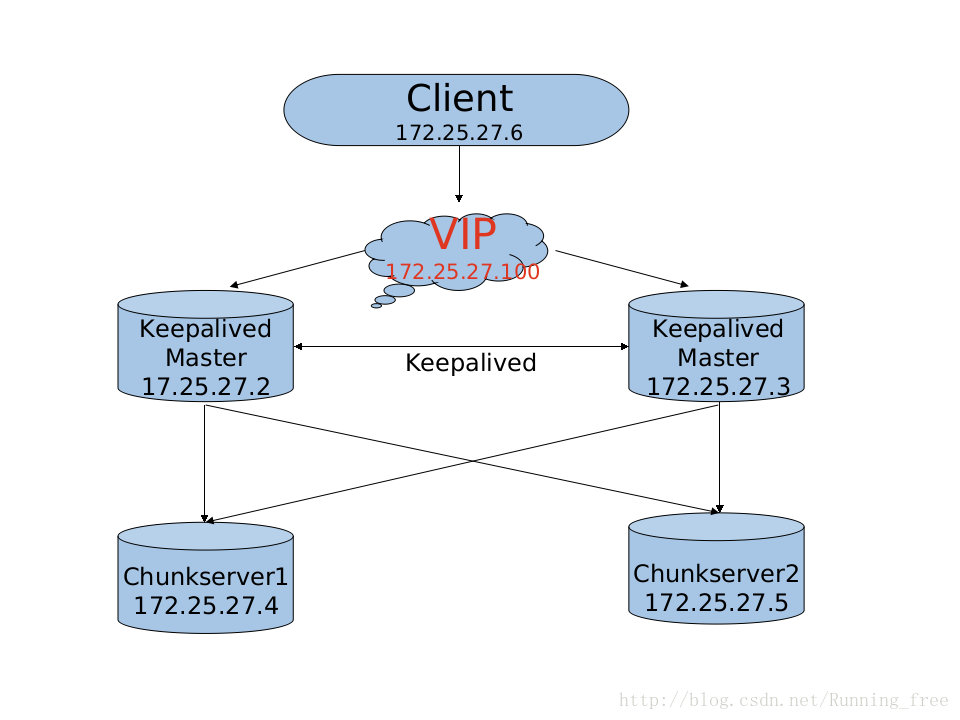

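2. How the solution works

- Since version 1.6.5, a failed mfsmaster can be recovered with the mfsmetarestore command from the changelog_ml.*.mfs logs produced by mfsmetalogger together with the metadata.mfs.back file.

- metadata.mfs.back is fetched from mfsmaster periodically for use in master recovery.

- When the Keepalived MASTER detects that the mfsmaster process is down, the monitoring script tries to start it again. If that fails, the script force-kills keepalived and mfscgiserv, which moves the VIP to the BACKUP.

- When the Keepalived MASTER recovers, it takes the VIP back from the BACKUP and resumes service.

- The whole switchover completes in 2 to 5 seconds, depending on the check interval.

3. Deployment plan

Host environment: RHEL 6.5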

| ip | hostname | Role |
|---|---|---|
| 172.25.27.2 | server2 | Keepalived Master |
| 172.25.27.3 | server3 | Keepalived Backup |
| 172.25.27.4 | server4 | MFS Chunkserver1 |
| 172.25.27.5 | server5 | MFS Chunkserver2 |
| 172.25.27.6 | server6 | MFS Client |
| 172.25.27.100 | - | VIP |

The architecture topology is the one shown in the diagram above.

4. Setting up the Keepalived MASTER

- Install keepalived

[root@server2 ~]# wget http://www.keepalived.org/software/keepalived-1.3.5.tar.gz

[root@server2 ~]# tar -zxf keepalived-1.3.5.tar.gz

[root@server2 ~]# cd keepalived-1.3.5

[root@server2 keepalived-1.3.5]# yum install -y openssl-devel

[root@server2 keepalived-1.3.5]# ./configure --prefix=/usr/local/keepalived --with-init=SYSV

[root@server2 keepalived-1.3.5]# make && make install

[root@server2 keepalived-1.3.5]# ln -s /usr/local/keepalived/etc/rc.d/init.d/keepalived /etc/init.d/

[root@server2 keepalived-1.3.5]# chmod +x /etc/init.d/keepalived

[root@server2 keepalived-1.3.5]# ln -s /usr/local/keepalived/etc/sysconfig/keepalived /etc/sysconfig/

[root@server2 keepalived-1.3.5]# ln -s /usr/local/keepalived/etc/keepalived/ /etc/

[root@server2 keepalived-1.3.5]# ln -s /usr/local/keepalived/sbin/keepalived /sbin/

[root@server2 keepalived-1.3.5]# scp -r /usr/local/keepalived/ server3:/usr/local/

[root@server2 keepalived-1.3.5]# chkconfig keepalived on

## Test

[root@server2 keepalived-1.3.5]# /etc/init.d/keepalived start

Starting keepalived: [ OK ]

[root@server2 keepalived-1.3.5]# /etc/init.d/keepalived restart

Stopping keepalived: [ OK ]

Starting keepalived: [ OK ]

[root@server2 keepalived-1.3.5]# /etc/init.d/keepalived stop

Stopping keepalived: [ OK ]

- Configure Keepalived

[root@server2 keepalived-1.3.5]# cp /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf.bak

[root@server2 keepalived-1.3.5]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {
    notification_email {
        root@localhost
    }
    notification_email_from keepalived@localhost
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    router_id MFS_HA_MASTER
    #router_id LVS_DEVEL
    #vrrp_skip_check_adv_addr
    #vrrp_strict
    #vrrp_garp_interval 0
    #vrrp_gna_interval 0
}

vrrp_script chk_mfs {
    script "/usr/local/mfs/keepalived_check_mfsmaster.sh" ## monitoring script
    interval 2 ## check interval in seconds
    weight 2
}

vrrp_instance VI_1 {
    state MASTER ## this node is the MASTER
    interface eth0 ## interface to monitor
    virtual_router_id 51 ## must be identical on both servers
    priority 100 ## priority; the MASTER's must be higher than the BACKUP's
    advert_int 1
    authentication {
        auth_type PASS ## authentication type
        auth_pass 1111 ## password; must be identical on both servers
    }
    track_script {
        chk_mfs ## the check defined above
    }
    virtual_ipaddress {
        172.25.27.100 ## VIP address
    }
    notify_master "/etc/keepalived/clean_arp.sh 172.25.27.100" ## run when this node becomes master
}
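The BACKUP node uses the same file with only three values changed: router_id, state and priority. Instead of editing the copy by hand, the differences could be applied with a small filter. This is a sketch under the assumption that the file follows the layout above; `make_backup_conf` is a hypothetical name, and the priority to use is passed as an argument.

```shell
#!/bin/bash
# make_backup_conf PRIORITY
# Hypothetical filter: reads the MASTER keepalived.conf on stdin and writes
# a BACKUP variant to stdout. Only lines whose first word is router_id,
# state or priority are changed; commented-out lines are left alone.
make_backup_conf() {
    awk -v prio="$1" '
        $1 == "router_id" { sub(/MFS_HA_MASTER/, "MFS_HA_BACKUP") }
        $1 == "state"     { gsub(/MASTER/, "BACKUP") }
        $1 == "priority"  { sub(/[0-9]+/, prio) }
        { print }
    '
}

# Example, run on the backup node after copying the file from server2:
#   make_backup_conf 50 < keepalived.conf.master > /etc/keepalived/keepalived.conf
```

The file name `keepalived.conf.master` in the example is only a placeholder for wherever the copied configuration is stored.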

- Write the monitoring script

[root@server2 keepalived-1.3.5]# mkdir -p /usr/local/mfs/

[root@server2 keepalived-1.3.5]# vim /usr/local/mfs/keepalived_check_mfsmaster.sh

#!/bin/bash
## Count the running mfsmaster processes and store the number in STATUS
STATUS=$(ps -C mfsmaster --no-header | wc -l)
if [ "$STATUS" -eq 0 ]; then ## no mfsmaster process: try to start it
    mfsmaster start
    sleep 3
    if [ "$(ps -C mfsmaster --no-header | wc -l)" -eq 0 ]; then
        /usr/bin/killall -9 mfscgiserv ## still not running: stop mfscgiserv
        /usr/bin/killall -9 keepalived ## and stop keepalived so that the VIP moves to the BACKUP
    fi
fi

[root@server2 keepalived-1.3.5]# chmod +x /usr/local/mfs/keepalived_check_mfsmaster.sh
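The check-and-restart logic of the monitoring script can also be factored into a small reusable function, which makes the decision easier to test in isolation. The following is only a sketch: `is_running`, `ensure_running` and the `WAIT_SECS` variable are names invented here, and `pgrep -x` is used in place of the `ps -C ... | wc -l` pipeline.

```shell
#!/bin/bash
# Seconds to wait after a restart attempt before checking again
WAIT_SECS=${WAIT_SECS:-3}

# is_running NAME: succeeds if a process with exactly this name exists
is_running() {
    pgrep -x "$1" >/dev/null 2>&1
}

# ensure_running NAME START_COMMAND [ARGS...]
# Succeeds if NAME is already running, or if it is running after one
# restart attempt; fails otherwise.
ensure_running() {
    local name=$1
    shift
    is_running "$name" && return 0
    "$@"                      # one restart attempt; its own exit code is ignored
    sleep "$WAIT_SECS"
    is_running "$name"
}

# Possible use inside the keepalived check script:
#   ensure_running mfsmaster mfsmaster start || {
#       /usr/bin/killall -9 mfscgiserv
#       /usr/bin/killall -9 keepalived
#   }
```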

- Write the script that refreshes the gateway's ARP entry for the virtual IP (VIP)

[root@server2 keepalived-1.3.5]# vim /etc/keepalived/clean_arp.sh

#!/bin/sh

VIP=$1

GATEWAY=172.25.27.250

/sbin/arping -I eth0 -c 5 -s $VIP $GATEWAY &>/dev/null

[root@server2 keepalived-1.3.5]# chmod +x /etc/keepalived/clean_arp.sh

Local name resolution:

[root@server2 ~]# vim /etc/hosts

172.25.27.100 mfsmaster

#172.25.27.2 mfsmaster server2

172.25.27.2 server2

- Start keepalived (both the mfsmaster service and the Keepalived service must be running on the Keepalived MASTER)

[root@server2 keepalived-1.3.5]# /etc/init.d/keepalived start

Starting keepalived: [ OK ]

[root@server2 keepalived-1.3.5]# ps -ef|grep keepalived

root 16038 1 0 11:32 ? 00:00:00 keepalived -D

root 16040 16038 0 11:32 ? 00:00:00 keepalived -D

root 16041 16038 0 11:32 ? 00:00:00 keepalived -D

root 16078 1027 0 11:32 pts/0 00:00:00 grep keepalived

[root@server2 keepalived-1.3.5]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:de:d6:14 brd ff:ff:ff:ff:ff:ff

inet 172.25.27.2/24 brd 172.25.27.255 scope global eth0

inet 172.25.27.100/32 scope global eth0

inet6 fe80::5054:ff:fede:d614/64 scope link

valid_lft forever preferred_lft forever

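5. Setting up the Keepalived BACKUP

- The environment must match the Keepalived MASTER, so moosefs-master has to be installed. Because keepalived now provides high availability, the metadata log server (moosefs-metalogger) can be stopped.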

[root@server3 ~]# mfsmetalogger stop

[root@server3 ~]# yum install moosefs-master-3.0.80-1.x86_64.rpm moosefs-cgiserv-3.0.80-1.x86_64.rpm -y

[root@server3 ~]# vim /etc/hosts

#172.25.27.2 mfsmaster server2

172.25.27.100 mfsmaster

172.25.27.2 server2

[root@server3 ~]# mfsmaster start

1. Installation

## Make sure the keepalived directory has already been copied over

[root@server3 ~]# ls /usr/local/keepalived/

bin etc sbin share

[root@server3 ~]# vim keepalive.sh

#!/bin/bash

ln -s /usr/local/keepalived/etc/rc.d/init.d/keepalived /etc/init.d/

chmod +x /etc/init.d/keepalived

ln -s /usr/local/keepalived/etc/sysconfig/keepalived /etc/sysconfig/

ln -s /usr/local/keepalived/etc/keepalived/ /etc/

ln -s /usr/local/keepalived/sbin/keepalived /sbin/

chkconfig keepalived on

[root@server3 ~]# chmod +x keepalive.sh

[root@server3 ~]# ./keepalive.sh

## Test

[root@server3 ~]# /etc/init.d/keepalived start

Starting keepalived: [ OK ]

[root@server3 ~]# /etc/init.d/keepalived restart

Stopping keepalived: [ OK ]

Starting keepalived: [ OK ]

[root@server3 ~]# /etc/init.d/keepalived stop

Stopping keepalived: [ OK ]

2. Configure Keepalived

[root@server3 ~]# scp server2:/etc/keepalived/keepalived.conf /etc/keepalived/ ## then change the following three settings

router_id MFS_HA_BACKUP

state BACKUP

priority 50

3. Write the monitoring script

[root@server3 ~]# mkdir -p /usr/local/mfs/

[root@server3 ~]# scp server2:/usr/local/mfs/keepalived_check_mfsmaster.sh /usr/local/mfs/

[root@server3 ~]# chmod +x /usr/local/mfs/keepalived_check_mfsmaster.sh

4. Write the script that refreshes the gateway's ARP entry for the virtual IP (VIP)

[root@server3 ~]# scp server2:/etc/keepalived/clean_arp.sh /etc/keepalived/clean_arp.sh

[root@server3 ~]# cat /etc/keepalived/clean_arp.sh

#!/bin/sh

VIP=$1

GATEWAY=172.25.27.250

/sbin/arping -I eth0 -c 5 -s $VIP $GATEWAY &>/dev/null

Local name resolution:

[root@server3 ~]# vim /etc/hosts

172.25.27.100 mfsmaster

#172.25.27.2 mfsmaster server2

172.25.27.2 server2

5. Start keepalived

[root@server3 ~]# /etc/init.d/keepalived start

Starting keepalived: [ OK ]

[root@server3 ~]# ps -ef|grep keepalived

root 1276 1 0 11:55 ? 00:00:00 keepalived -D

root 1278 1276 0 11:55 ? 00:00:00 keepalived -D

root 1279 1276 0 11:55 ? 00:00:00 keepalived -D

root 1307 1029 0 11:55 pts/0 00:00:00 grep keepalived

[root@server3 ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:95:91:c4 brd ff:ff:ff:ff:ff:ff

inet 172.25.27.3/24 brd 172.25.27.255 scope global eth0

inet6 fe80::5054:ff:fe95:91c4/64 scope link

valid_lft forever preferred_lft forever

6. Configuring the chunkservers

[root@server4 ~]# sed -i.bak 's/# MASTER_HOST = mfsmaster/MASTER_HOST = 172.25.27.100/g' /etc/mfs/mfschunkserver.cfg

[root@server4 ~]# cat /etc/mfs/mfschunkserver.cfg | grep MASTER_HOST

MASTER_HOST = 172.25.27.100

[root@server4 ~]# vim /etc/hosts

#172.25.27.2 mfsmaster server2

172.25.27.2 server2

172.25.27.100 mfsmaster

[root@server4 ~]# mfschunkserver restart

[root@server5 ~]# sed -i.bak 's/# MASTER_HOST = mfsmaster/MASTER_HOST = 172.25.27.100/g' /etc/mfs/mfschunkserver.cfg

[root@server5 ~]# vim /etc/hosts

#172.25.27.2 mfsmaster server2

172.25.27.2 server2

172.25.27.100 mfsmaster

[root@server5 ~]# mfschunkserver restart

7. Configuring the client

[root@server6 ~]# vim /etc/hosts

#172.25.27.2 mfsmaster server2

172.25.27.2 server2

172.25.27.100 mfsmaster

[root@server6 mnt]# umount -l /mnt/mfs

[root@server6 ~]# mfsmount /mnt/mfs -H 172.25.27.100 ## or simply run mfsmount

mfsmaster accepted connection with parameters: read-write,restricted_ip,admin ; root mapped to root:root

[root@server6 ~]# mfsmount -m /mnt/mfsmeta/ -H 172.25.27.100

mfsmaster accepted connection with parameters: read-write,restricted_ip

[root@server6 ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/VolGroup-lv_root 19G 914M 17G 6% /

tmpfs 939M 0 939M 0% /dev/shm

/dev/vda1 485M 33M 427M 8% /boot

mfsmaster:9421 35G 4.0G 31G 12% /mnt/mfs

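Verify that reads and writes work after the client has mounted the MFS file system (this step is omitted here).

8. Data synchronization script for use after a failover

With the configuration above, when the Keepalived MASTER fails (its keepalived service stops), the VIP moves to the Keepalived BACKUP.

When the Keepalived MASTER recovers (its keepalived service starts again), it takes the VIP back.

This only moves the VIP, however. How is the MFS file system data kept in sync?

A data synchronization script is therefore written on each of the two machines.

Passwordless SSH trust must be set up between the two machines beforehand, and rsync must be installed.

1. Keepalived_MASTER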

[root@server2 ~]# yum install -y rsync

[root@server2 keepalived-1.3.5]# vim /usr/local/mfs/MFS_DATA_Sync.sh

#!/bin/bash
## VIP is 172.25.27.100 if this node currently holds the address, otherwise empty
VIP=$(ip addr | grep 172.25.27.100 | awk '{print $2}' | cut -d"/" -f1)
if [ "$VIP" == "172.25.27.100" ]; then
    mfsmaster stop
    ## rm -rf /var/lib/mfs/* would also work; only some files are removed here so that a failed sync does not leave the service unable to start
    rm -rf /var/lib/mfs/metadata.mfs /var/lib/mfs/metadata.mfs.back /var/lib/mfs/changelog.*.mfs /var/lib/mfs/Metadata
    rsync -e "ssh -p22" -avpgolr 172.25.27.3:/var/lib/mfs/* /var/lib/mfs/
    mfsmetarestore -m
    mfsmaster -ai
    sleep 3
    mfsmaster restart
    echo "this server has become the master of MFS"
else
    echo "this server is still MFS's slave"
fi

[root@server2 keepalived-1.3.5]# chmod +x /usr/local/mfs/MFS_DATA_Sync.sh
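The synchronization script decides what to do by checking whether the local node currently holds the VIP. A plain `grep` for the address would also match longer addresses that merely start with the same digits, so a stricter check can be useful. The sketch below reads `ip addr` output on standard input and compares each `inet` address exactly; `holds_vip` is a hypothetical helper name.

```shell
#!/bin/bash
# holds_vip VIP
# Hypothetical helper: reads "ip addr" output on stdin and succeeds only if
# one of the IPv4 ("inet") addresses is exactly VIP, ignoring the prefix length.
holds_vip() {
    awk -v vip="$1" '
        {
            for (i = 1; i < NF; i++)
                if ($i == "inet") {
                    split($(i + 1), parts, "/")
                    if (parts[1] == vip) found = 1
                }
        }
        END { exit(found ? 0 : 1) }
    '
}

# Example:
#   if ip addr | holds_vip 172.25.27.100; then echo "this node holds the VIP"; fi
```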

2. Keepalived_BACKUP

[root@server3 ~]# yum install -y rsync

[root@server3 ~]# scp server2:/usr/local/mfs/MFS_DATA_Sync.sh /usr/local/mfs/

[root@server3 ~]# sed -i.bak 's/172.25.27.3/172.25.27.2/g' /usr/local/mfs/MFS_DATA_Sync.sh

When the VIP moves to a node, running this script on that node pulls the data over from the other node.

9. Failover test

1. Stop the mfsmaster service on the Keepalived MASTER

[root@server2 keepalived-1.3.5]# mfsmaster stop

sending SIGTERM to lock owner (pid:25365)

waiting for termination terminated

[root@server2 keepalived-1.3.5]# ps -ef|grep mfs

root 7739 1 0 09:21 ? 00:00:00 /usr/bin/python /usr/sbin/mfscgiserv

root 7849 7739 0 10:07 ? 00:00:00 [mfs.cgi] <defunct>

mfs 25755 1 20 12:27 ? 00:00:00 mfsmaster start

root 25763 1027 0 12:27 pts/0 00:00:00 grep mfs

After mfsmaster is stopped, it is restarted automatically.

2. Stop keepalived on the Keepalived MASTER

[root@server2 keepalived-1.3.5]# /etc/init.d/keepalived stop

Stopping keepalived: [ OK ]

[root@server2 keepalived-1.3.5]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:de:d6:14 brd ff:ff:ff:ff:ff:ff

inet 172.25.27.2/24 brd 172.25.27.255 scope global eth0

inet6 fe80::5054:ff:fede:d614/64 scope link

valid_lft forever preferred_lft forever

Once keepalived on the Keepalived MASTER is stopped, the VIP is no longer on that node.

On the Keepalived BACKUP, the VIP has arrived:

[root@server3 ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:95:91:c4 brd ff:ff:ff:ff:ff:ff

inet 172.25.27.3/24 brd 172.25.27.255 scope global eth0

inet 172.25.27.100/32 scope global eth0

inet6 fe80::5054:ff:fe95:91c4/64 scope link

valid_lft forever preferred_lft forever

The client now sees no data, so the synchronization script from above has to be run:

[root@server6 ~]# ls /mnt/mfs

[root@server6 ~]# umount -l /mnt/mfs

[root@server6 ~]# mfsmount /mnt/mfs -H 172.25.27.100

[root@server6 ~]# ls /mnt/mfs

[root@server3 ~]# sh -x /usr/local/mfs/MFS_DATA_Sync.sh

[root@server2 ~]# ip addr

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:de:d6:14 brd ff:ff:ff:ff:ff:ff

inet 172.25.27.2/24 brd 172.25.27.255 scope global eth0

inet 172.25.27.100/24 scope global secondary eth0

inet6 fe80::5054:ff:fede:d614/64 scope link

valid_lft forever preferred_lft forever

[root@server6 ~]# mfsmount

mfsmaster accepted connection with parameters: read-write,restricted_ip,admin ; root mapped to root:root

[root@server6 ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/VolGroup-lv_root 19G 913M 17G 6% /

tmpfs 939M 0 939M 0% /dev/shm

/dev/vda1 485M 33M 427M 8% /boot

mfsmaster:9421 35G 4.0G 31G 12% /mnt/mfs

[root@server6 ~]# cd /mnt/mfs

[root@server6 mfs]# ls

passwd

[root@server6 mfs]# tail -n 5 passwd

vcsa:x:69:69:virtual console memory owner:/dev:/sbin/nologin

saslauth:x:499:76:"Saslauthd user":/var/empty/saslauth:/sbin/nologin

postfix:x:89:89::/var/spool/postfix:/sbin/nologin

sshd:x:74:74:Privilege-separated SSH:/var/empty/sshd:/sbin/nologin

apache:x:48:48:Apache:/var/www:/sbin/nologin

Stop keepalived on server2:

[root@server2 ~]# /etc/init.d/keepalived stop

Stopping keepalived: [ OK ]

[root@server3 .ssh]# ip addr show eth0

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:95:91:c4 brd ff:ff:ff:ff:ff:ff

inet 172.25.27.3/24 brd 172.25.27.255 scope global eth0

inet 172.25.27.100/24 scope global secondary eth0

inet6 fe80::5054:ff:fe95:91c4/64 scope link

valid_lft forever preferred_lft forever

[root@server6 mfs]# ls

passwd

[root@server6 mfs]# tail -n 5 passwd

tail: cannot open `passwd' for reading: No such file or directory

[root@server3 ~]# sh -x /usr/local/mfs/MFS_DATA_Sync.sh

[root@server6 mnt]# ls

passwd

[root@server6 mfs]# tail -n 5 passwd

vcsa:x:69:69:virtual console memory owner:/dev:/sbin/nologin

saslauth:x:499:76:"Saslauthd user":/var/empty/saslauth:/sbin/nologin

postfix:x:89:89::/var/spool/postfix:/sbin/nologin

sshd:x:74:74:Privilege-separated SSH:/var/empty/sshd:/sbin/nologin

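apache:x:48:48:Apache:/var/www:/sbin/nologin

Having to start the script by hand every time is still inconvenient.

So one more change: call /usr/local/mfs/MFS_DATA_Sync.sh from /etc/keepalived/clean_arp.sh, so that the data is synchronized automatically whenever the VIP switches over.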

[root@server2 ~]# vim /etc/keepalived/clean_arp.sh

#!/bin/sh

VIP=$1

GATEWAY=172.25.27.250

/sbin/arping -I eth0 -c 5 -s $VIP $GATEWAY &>/dev/null

/bin/sh /usr/local/mfs/MFS_DATA_Sync.sh ## add this line

Do the same on server3: the synchronization script is called on every VIP switch, so the data is synchronized automatically.

[root@server3 ~]# vim /etc/keepalived/clean_arp.sh

#!/bin/sh

VIP=$1

GATEWAY=172.25.27.250

/sbin/arping -I eth0 -c 5 -s $VIP $GATEWAY &>/dev/null

/bin/sh /usr/local/mfs/MFS_DATA_Sync.sh

Test:

[root@server2 ~]# ip addr show eth0

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:de:d6:14 brd ff:ff:ff:ff:ff:ff

inet 172.25.27.2/24 brd 172.25.27.255 scope global eth0

inet 172.25.27.100/24 scope global secondary eth0

inet6 fe80::5054:ff:fede:d614/64 scope link

valid_lft forever preferred_lft forever

[root@server6 mfs]# cat file5

cat: file5: No such file or directory

[root@server6 mfs]# echo "test5">file5

[root@server6 mfs]# cat file5

test5

[root@server2 ~]# /etc/init.d/keepalived stop

Stopping keepalived: [ OK ]

[root@server2 ~]# ip addr show eth0

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:de:d6:14 brd ff:ff:ff:ff:ff:ff

inet 172.25.27.2/24 brd 172.25.27.255 scope global eth0

inet6 fe80::5054:ff:fede:d614/64 scope link

valid_lft forever preferred_lft forever

[root@server3 ~]# ip addr show eth0

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:95:91:c4 brd ff:ff:ff:ff:ff:ff

inet 172.25.27.3/24 brd 172.25.27.255 scope global eth0

inet 172.25.27.100/24 scope global secondary eth0

inet6 fe80::5054:ff:fe95:91c4/64 scope link

valid_lft forever preferred_lft forever

[root@server6 mfs]# cat file5

test5

The data was synchronized successfully, and the monitoring page shows a normal state as well.
