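In today's article, we describe how to install Elasticsearch and Kibana in Kubernetes and use them to store and visualize the monitored data.

Elasticsearch cluster

As described in the previous article, to set up a monitoring stack with Elastic technologies we first need to deploy Elasticsearch, which acts as the database that stores all the data (metrics, logs and traces). The database will consist of three scalable nodes connected into one cluster, as recommended for production.

In addition, we will enable authentication to make the stack more secure against potential attackers.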
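Every manifest in this article targets the monitoring namespace, so that namespace has to exist before anything is applied. If it was not already created while following the previous article, a minimal sketch like the one below creates it idempotently. The function name ensure_namespace is a hypothetical helper used for illustration, not part of kubectl.

```shell
# ensure_namespace is a hypothetical helper name used for illustration.
# It creates the namespace only when it does not exist yet, so it is
# safe to run repeatedly.
ensure_namespace() {
  ns="$1"
  if kubectl get namespace "$ns" >/dev/null 2>&1; then
    echo "namespace $ns already exists"
  else
    kubectl create namespace "$ns"
  fi
}

# Usage (requires access to a cluster):
#   ensure_namespace monitoring
```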

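Configuring the Elasticsearch master node

The first node of the cluster we set up is the master node, which is responsible for controlling the cluster.

The first k8s object we need is a ConfigMap. It holds a YAML file containing all the settings required to configure the Elasticsearch master node into the cluster and to enable security.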

elasticsearch-master.configmap.yaml

# elasticsearch-master.configmap.yaml
---
apiVersion: v1
kind: ConfigMap
metadata:
  namespace: monitoring
  name: elasticsearch-master-config
  labels:
    app: elasticsearch
    role: master
data:
  elasticsearch.yml: |-
    cluster.name: ${CLUSTER_NAME}
    node.name: ${NODE_NAME}
    discovery.seed_hosts: ${NODE_LIST}
    cluster.initial_master_nodes: ${MASTER_NODES}
    network.host: 0.0.0.0
    node:
      master: true
      data: false
      ingest: false
    xpack.security.enabled: true
    xpack.monitoring.collection.enabled: true
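---

Next, we deploy a Service, which defines network access to a set of Pods. The master node only needs to be reachable on port 9300, which is used for cluster (transport) communication.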

elasticsearch-master.service.yaml

# elasticsearch-master.service.yaml
---
apiVersion: v1
kind: Service
metadata:
  namespace: monitoring
  name: elasticsearch-master
  labels:
    app: elasticsearch
    role: master
spec:
  ports:
  - port: 9300
    name: transport
  selector:
    app: elasticsearch
    role: master
---

Finally, the last piece is the Deployment, which describes the running service (Docker image, number of replicas, environment variables and volumes).

elasticsearch-master.deployment.yaml

# elasticsearch-master.deployment.yaml
---
apiVersion: apps/v1
kind: Deployment
metadata:
  namespace: monitoring
  name: elasticsearch-master
  labels:
    app: elasticsearch
    role: master
spec:
  replicas: 1
  selector:
    matchLabels:
      app: elasticsearch
      role: master
  template:
    metadata:
      labels:
        app: elasticsearch
        role: master
    spec:
      containers:
      - name: elasticsearch-master
        image: docker.elastic.co/elasticsearch/elasticsearch:7.6.2
        env:
        - name: CLUSTER_NAME
          value: elasticsearch
        - name: NODE_NAME
          value: elasticsearch-master
        - name: NODE_LIST
          value: elasticsearch-master,elasticsearch-data,elasticsearch-client
        - name: MASTER_NODES
          value: elasticsearch-master
        - name: "ES_JAVA_OPTS"
          value: "-Xms256m -Xmx256m"
        ports:
        - containerPort: 9300
          name: transport
        volumeMounts:
        - name: config
          mountPath: /usr/share/elasticsearch/config/elasticsearch.yml
          readOnly: true
          subPath: elasticsearch.yml
        - name: storage
          mountPath: /data
      volumes:
      - name: config
        configMap:
          name: elasticsearch-master-config
      - name: "storage"
        emptyDir:
          medium: ""
---

Now apply the configuration to our k8s environment with the following command:

$ kubectl apply -f elasticsearch-master.configmap.yaml \
> -f elasticsearch-master.service.yaml \
> -f elasticsearch-master.deployment.yaml
configmap/elasticsearch-master-config created
service/elasticsearch-master created
deployment.apps/elasticsearch-master created

Check that everything is running with the following command:

$ kubectl get pods -n monitoring
NAME                                    READY   STATUS    RESTARTS   AGE
elasticsearch-master-7cb4f584cb-rbwv9   1/1     Running   2          95s

Configuring the Elasticsearch data node

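The second node of the cluster we set up is the data node, which is responsible for hosting the data and executing queries (CRUD, search, aggregations).

As with the master node, we need a ConfigMap to configure the node. It looks similar to the master node's ConfigMap, with a slight difference (see node.data: true):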

elasticsearch-data.configmap.yaml

# elasticsearch-data.configmap.yaml
---
apiVersion: v1
kind: ConfigMap
metadata:
  namespace: monitoring
  name: elasticsearch-data-config
  labels:
    app: elasticsearch
    role: data
data:
  elasticsearch.yml: |-
    cluster.name: ${CLUSTER_NAME}
    node.name: ${NODE_NAME}
    discovery.seed_hosts: ${NODE_LIST}
    cluster.initial_master_nodes: ${MASTER_NODES}
    network.host: 0.0.0.0
    node:
      master: false
      data: true
      ingest: false
    xpack.security.enabled: true
    xpack.monitoring.collection.enabled: true
---

The Service only exposes port 9300, for communication with the other members of the cluster.

elasticsearch-data.service.yaml

# elasticsearch-data.service.yaml
---
apiVersion: v1
kind: Service
metadata:
  namespace: monitoring
  name: elasticsearch-data
  labels:
    app: elasticsearch
    role: data
spec:
  ports:
  - port: 9300
    name: transport
  selector:
    app: elasticsearch
    role: data
---

Finally, the StatefulSet is similar to a Deployment but also handles storage: the volumeClaimTemplates section at the bottom of the file requests a 20Gi persistent volume.

elasticsearch-data.replicaset.yaml

# elasticsearch-data.replicaset.yaml
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  namespace: monitoring
  name: elasticsearch-data
  labels:
    app: elasticsearch
    role: data
spec:
  serviceName: "elasticsearch-data"
  replicas: 1
  selector:
    matchLabels:
      app: elasticsearch
      role: data
  template:
    metadata:
      labels:
        # Same labels as the selector of the elasticsearch-data Service.
        app: elasticsearch
        role: data
    spec:
      containers:
      - name: elasticsearch-data
        image: docker.elastic.co/elasticsearch/elasticsearch:7.6.2
        env:
        - name: CLUSTER_NAME
          value: elasticsearch
        - name: NODE_NAME
          value: elasticsearch-data
        - name: NODE_LIST
          value: elasticsearch-master,elasticsearch-data,elasticsearch-client
        - name: MASTER_NODES
          value: elasticsearch-master
        - name: "ES_JAVA_OPTS"
          value: "-Xms1024m -Xmx1024m"
        ports:
        - containerPort: 9300
          name: transport
        volumeMounts:
        - name: config
          mountPath: /usr/share/elasticsearch/config/elasticsearch.yml
          readOnly: true
          subPath: elasticsearch.yml
        - name: elasticsearch-data-persistent-storage
          mountPath: /data/db
      volumes:
      - name: config
        configMap:
          name: elasticsearch-data-config
  volumeClaimTemplates:
  - metadata:
      name: elasticsearch-data-persistent-storage
      annotations:
        volume.beta.kubernetes.io/storage-class: "standard"
    spec:
      accessModes: [ "ReadWriteOnce" ]
      storageClassName: standard
      resources:
        requests:
          storage: 20Gi
---

Now let's apply the configuration with the following command:

$ kubectl apply -f elasticsearch-data.configmap.yaml \
> -f elasticsearch-data.service.yaml \
> -f elasticsearch-data.replicaset.yaml
configmap/elasticsearch-data-config created
service/elasticsearch-data created
statefulset.apps/elasticsearch-data created

And check that everything is running:

$ kubectl get pods -n monitoring
NAME                                    READY   STATUS    RESTARTS   AGE
elasticsearch-data-0                    1/1     Running   0          87s
elasticsearch-master-7cb4f584cb-xndth   1/1     Running   0          112m

Configuring the Elasticsearch client node

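The last (but not least) node of the cluster is the client node, which is responsible for exposing the HTTP interface and passing queries on to the data node.

The ConfigMap is again very similar to the master node's: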

elasticsearch-client.configmap.yaml

# elasticsearch-client.configmap.yaml
---
apiVersion: v1
kind: ConfigMap
metadata:
  namespace: monitoring
  name: elasticsearch-client-config
  labels:
    app: elasticsearch
    role: client
data:
  elasticsearch.yml: |-
    cluster.name: ${CLUSTER_NAME}
    node.name: ${NODE_NAME}
    discovery.seed_hosts: ${NODE_LIST}
    cluster.initial_master_nodes: ${MASTER_NODES}
    network.host: 0.0.0.0
    node:
      master: false
      data: false
      ingest: true
    xpack.security.enabled: true
    xpack.monitoring.collection.enabled: true
---

The client node exposes two ports: 9300 to communicate with the other nodes of the cluster, and 9200 for the HTTP API.

elasticsearch-client.service.yaml

# elasticsearch-client.service.yaml
---
apiVersion: v1
kind: Service
metadata:
  namespace: monitoring
  name: elasticsearch-client
  labels:
    app: elasticsearch
    role: client
spec:
  ports:
  - port: 9200
    name: client
  - port: 9300
    name: transport
  selector:
    app: elasticsearch
    role: client
---

The Deployment describes the container of the client node:

elasticsearch-client.deployment.yaml

# elasticsearch-client.deployment.yaml
---
apiVersion: apps/v1
kind: Deployment
metadata:
  namespace: monitoring
  name: elasticsearch-client
  labels:
    app: elasticsearch
    role: client
spec:
  replicas: 1
  selector:
    matchLabels:
      app: elasticsearch
      role: client
  template:
    metadata:
      labels:
        app: elasticsearch
        role: client
    spec:
      containers:
      - name: elasticsearch-client
        image: docker.elastic.co/elasticsearch/elasticsearch:7.6.2
        env:
        - name: CLUSTER_NAME
          value: elasticsearch
        - name: NODE_NAME
          value: elasticsearch-client
        - name: NODE_LIST
          value: elasticsearch-master,elasticsearch-data,elasticsearch-client
        - name: MASTER_NODES
          value: elasticsearch-master
        - name: "ES_JAVA_OPTS"
          value: "-Xms256m -Xmx256m"
        ports:
        - containerPort: 9200
          name: client
        - containerPort: 9300
          name: transport
        volumeMounts:
        - name: config
          mountPath: /usr/share/elasticsearch/config/elasticsearch.yml
          readOnly: true
          subPath: elasticsearch.yml
        - name: storage
          mountPath: /data
      volumes:
      - name: config
        configMap:
          name: elasticsearch-client-config
      - name: "storage"
        emptyDir:
          medium: ""
---

Apply each file to deploy the client node:

$ kubectl apply -f elasticsearch-client.configmap.yaml \
> -f elasticsearch-client.service.yaml \
> -f elasticsearch-client.deployment.yaml
configmap/elasticsearch-client-config created
service/elasticsearch-client created
deployment.apps/elasticsearch-client created

And verify that everything is running:

$ kubectl get pods -n monitoring
NAME                                    READY   STATUS    RESTARTS   AGE
elasticsearch-client-55769c87ff-gbdcd   1/1     Running   0          53s
elasticsearch-data-0                    1/1     Running   0          9m31s
elasticsearch-master-7cb4f584cb-xndth   1/1     Running   0          120m

After a few minutes, every node of the cluster should have reconciled, and the master node should log the following statement: Cluster health status changed from [YELLOW] to [GREEN].

$ kubectl logs -f -n monitoring \
> $(kubectl get pods -n monitoring | grep elasticsearch-master | sed -n 1p | awk '{print $1}') \
> | grep "Cluster health status changed from \[YELLOW\] to \[GREEN\]"

{"type": "server", "timestamp": "2020-05-02T09:58:35,653Z", "level": "INFO", "component": "o.e.c.r.a.AllocationService", "cluster.name": "elasticsearch", "node.name": "elasticsearch-master", "message": "Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[.monitoring-es-7-2020.05.02][0]]]).", "cluster.uuid": "MM4ERegSQBS0NP-38oikxQ", "node.id": "PIVWXmPCQVWX9JwMNXBRGQ" }
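The grep/sed/awk pipeline above finds the pod by matching its name. Because the manifests in this article label every Elasticsearch pod with a role, the same lookup can also be sketched with a label selector and a JSONPath output, both standard kubectl options. The name es_pod_name is a hypothetical helper chosen for illustration.

```shell
# es_pod_name is a hypothetical helper name. It prints the name of the
# first pod carrying the given role label (master, data or client) in
# the monitoring namespace.
es_pod_name() {
  kubectl get pods -n monitoring -l "role=$1" \
    -o jsonpath='{.items[0].metadata.name}'
}

# Usage (requires access to a cluster):
#   kubectl logs -f -n monitoring "$(es_pod_name master)" \
#     | grep "Cluster health status changed from \[YELLOW\] to \[GREEN\]"
```

Selecting by label avoids accidental matches on pod names that merely contain the search string.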

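Generating passwords and storing them in a k8s secret

We enabled the xpack security module to secure our cluster, so we need to initialize the passwords. Run the following command, which executes the program bin/elasticsearch-setup-passwords inside the client node container (any node would work) to generate the default users and their passwords.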

$ kubectl exec $(kubectl get pods -n monitoring | grep elasticsearch-client | sed -n 1p | awk '{print $1}') \
> -n monitoring \
> -- bin/elasticsearch-setup-passwords auto -b
Changed password for user apm_system
PASSWORD apm_system = A7zRhBjDLZTSQo3D2hQN

Changed password for user kibana
PASSWORD kibana = fRWNTqNENUhZbmhddqsz

Changed password for user logstash_system
PASSWORD logstash_system = 8bDIJzF6fcnoNnEDaAmq

Changed password for user beats_system
PASSWORD beats_system = QhFlfb8q1MmC1ccn9aSx

Changed password for user remote_monitoring_user
PASSWORD remote_monitoring_user = k4l3YnFi0nIzlCS1F0rN

Changed password for user elastic
PASSWORD elastic = HvWqpeKSs6BznGG8aall

Note the password of the elastic user and add it to a k8s secret with the following command:

kubectl create secret generic elasticsearch-pw-elastic \
  -n monitoring \
  --from-literal password=HvWqpeKSs6BznGG8aall

To verify that Elasticsearch is working, we first list the services with the following command:

$ kubectl get svc -n monitoring
NAME                   TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)             AGE
elasticsearch-client   ClusterIP   10.111.73.231    <none>        9200/TCP,9300/TCP   9m12s
elasticsearch-data     ClusterIP   10.100.68.53     <none>        9300/TCP            9m46s
elasticsearch-master   ClusterIP   10.107.146.210   <none>        9300/TCP            12m

The output lists a service called elasticsearch-client. We can obtain its URL as follows:

minikube service elasticsearch-client --url -n monitoring$ minikube service elasticsearch-client --url -n monitoring

🏃 Starting tunnel for service elasticsearch-client.

|------------|----------------------|-------------|--------------------------------|

| NAMESPACE | NAME | TARGET PORT | URL |

|------------|----------------------|-------------|--------------------------------|

| monitoring | elasticsearch-client | | http://127.0.0.1:59481 |

| | | | http://127.0.0.1:59482 |

|------------|----------------------|-------------|--------------------------------|

http://127.0.0.1:59481

http://127.0.0.1:59482

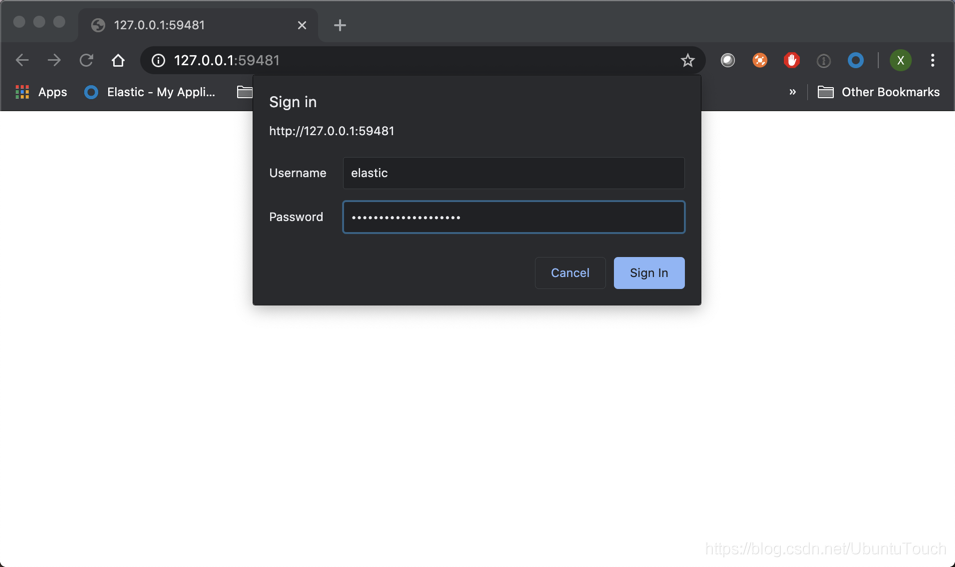

❗ Because you are using docker driver on Mac, the terminal needs to be open to run it.上面显示有两个 url 可以访问 Elasticsearch client。我们打开浏览器,并在浏览器中输入:http://127.0.0.1:59481

Enter elastic as the user name together with the password obtained above, then click Sign In:

The response shows that our Elasticsearch cluster is working.
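The same check can be scripted from a terminal. The sketch below reads the elastic password back from the elasticsearch-pw-elastic secret created earlier and queries the cluster health API (`_cluster/health`) over HTTP. Both function names are hypothetical helpers, and the base URL passed in is assumed to be the one printed by minikube service on your machine.

```shell
# Both function names are hypothetical helpers used for illustration.

# es_password reads the elastic password back from the secret created
# earlier. Secret values are stored base64-encoded, hence the decode step.
es_password() {
  kubectl get secret elasticsearch-pw-elastic -n monitoring \
    -o jsonpath='{.data.password}' | base64 --decode
}

# es_health queries the cluster health API through the given base URL,
# authenticating as the elastic user with HTTP basic auth.
es_health() {
  curl -s -u "elastic:$(es_password)" "$1/_cluster/health?pretty"
}

# Usage (requires the tunnel opened by "minikube service" to be running):
#   es_health http://127.0.0.1:59481
```

Reading the password from the secret keeps it out of the shell history, unlike typing it on the command line.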

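Installing Kibana

The second part of this article covers the deployment of Kibana, the data visualization tool for Elasticsearch, which provides features to manage the Elasticsearch cluster and visualize all the data.

In terms of k8s setup, this is very similar to Elasticsearch: we first use a ConfigMap to provide our deployment with a configuration file containing all the required properties. In particular, this includes the access to Elasticsearch (host, user name and password), which is configured through environment variables.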

kibana.configmap.yaml

# kibana.configmap.yaml
---
apiVersion: v1
kind: ConfigMap
metadata:
  namespace: monitoring
  name: kibana-config
  labels:
    app: kibana
data:
  kibana.yml: |-
    server.host: 0.0.0.0
    elasticsearch:
      hosts: ${ELASTICSEARCH_HOSTS}
      username: ${ELASTICSEARCH_USER}
      password: ${ELASTICSEARCH_PASSWORD}
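---

The Service exposes Kibana's default port 5601 to the environment and, by using NodePort, also exposes it directly on the static node IP so that we can access it from outside the cluster.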

kibana.service.yaml

# kibana.service.yaml
---
apiVersion: v1
kind: Service
metadata:
  namespace: monitoring
  name: kibana
  labels:
    app: kibana
spec:
  type: NodePort
  ports:
  - port: 5601
    name: webinterface
  selector:
    app: kibana
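---

Finally, the Deployment describes the container, the environment variables and the volumes. For the environment variable ELASTICSEARCH_PASSWORD, we use secretKeyRef to read the password from the secret.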

kibana.deployment.yaml

# kibana.deployment.yaml
---
apiVersion: apps/v1
kind: Deployment
metadata:
  namespace: monitoring
  name: kibana
  labels:
    app: kibana
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kibana
  template:
    metadata:
      labels:
        app: kibana
    spec:
      containers:
      - name: kibana
        image: docker.elastic.co/kibana/kibana:7.6.2
        ports:
        - containerPort: 5601
          name: webinterface
        env:
        - name: ELASTICSEARCH_HOSTS
          value: "http://elasticsearch-client.monitoring.svc.cluster.local:9200"
        - name: ELASTICSEARCH_USER
          value: "elastic"
        - name: ELASTICSEARCH_PASSWORD
          valueFrom:
            secretKeyRef:
              name: elasticsearch-pw-elastic
              key: password
        volumeMounts:
        - name: config
          mountPath: /usr/share/kibana/config/kibana.yml
          readOnly: true
          subPath: kibana.yml
      volumes:
      - name: config
        configMap:
          name: kibana-config
---

Now let's apply these files to deploy Kibana:

$ kubectl apply -f kibana.configmap.yaml \
> -f kibana.service.yaml \
> -f kibana.deployment.yaml
configmap/kibana-config created
service/kibana created
deployment.apps/kibana created

After a few minutes, check the logs for the message Status changed from yellow to green:

$ kubectl logs -f -n monitoring $(kubectl get pods -n monitoring | grep kibana | sed -n 1p | awk '{print $1}') \
> | grep "Status changed from yellow to green"

{"type":"log","@timestamp":"2020-05-02T10:23:26Z","tags":["status","plugin:elasticsearch@7.6.2","info"],"pid":6,"state":"green","message":"Status changed from yellow to green - Ready","prevState":"yellow","prevMsg":"Waiting for Elasticsearch"}

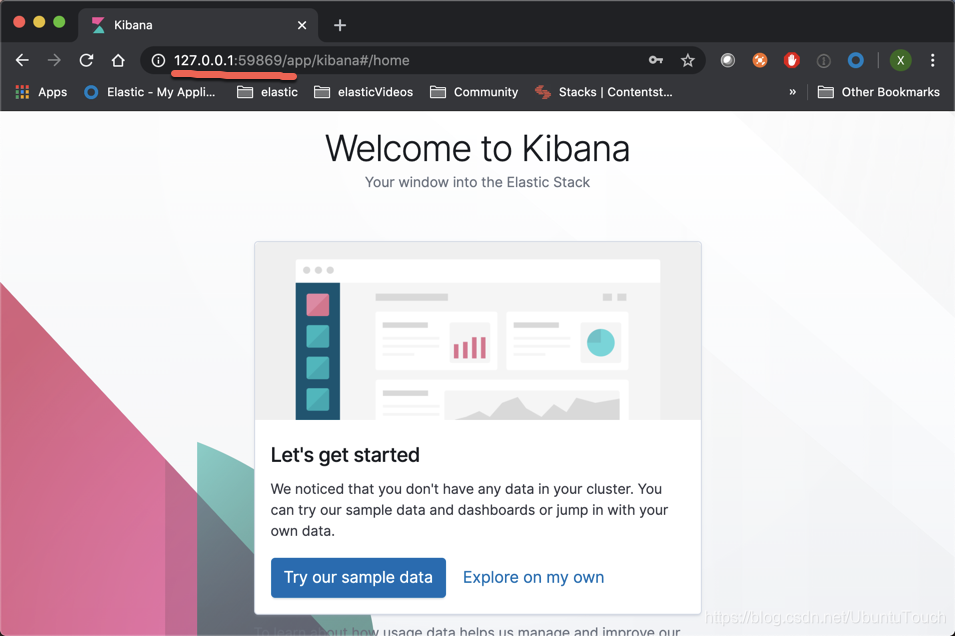

Once the logs show "green", you can access Kibana from a browser.
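The steps that follow rely on minikube. On a cluster without minikube, kubectl port-forward is a generic way to reach the same Service from a workstation. The sketch below wraps it in a hypothetical helper named kibana_forward; the default local port 5601 is an arbitrary choice.

```shell
# kibana_forward is a hypothetical helper name. It forwards a local port
# (default 5601) to port 5601 of the kibana Service and blocks until it
# is interrupted with Ctrl-C.
kibana_forward() {
  local_port="${1:-5601}"
  kubectl port-forward -n monitoring service/kibana "${local_port}:5601"
}

# Usage (requires access to a cluster), then browse to http://127.0.0.1:5601
#   kibana_forward
```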

First, find the IP address of the minikube node:

$ minikube ip
127.0.0.1

Also run the following command to find the external port to which Kibana's port 5601 is mapped:

$ kubectl get service kibana -n monitoring
NAME     TYPE       CLUSTER-IP       EXTERNAL-IP   PORT(S)          AGE
kibana   NodePort   10.108.208.162   <none>        5601:31285/TCP   4m25s

We find the external URL of the Kibana service as follows:

minikube service kibana --url -n monitoring$ minikube service kibana --url -n monitoring

🏃 Starting tunnel for service kibana.

|------------|--------|-------------|------------------------|

| NAMESPACE | NAME | TARGET PORT | URL |

|------------|--------|-------------|------------------------|

| monitoring | kibana | | http://127.0.0.1:59869 |

|------------|--------|-------------|------------------------|

http://127.0.0.1:59869

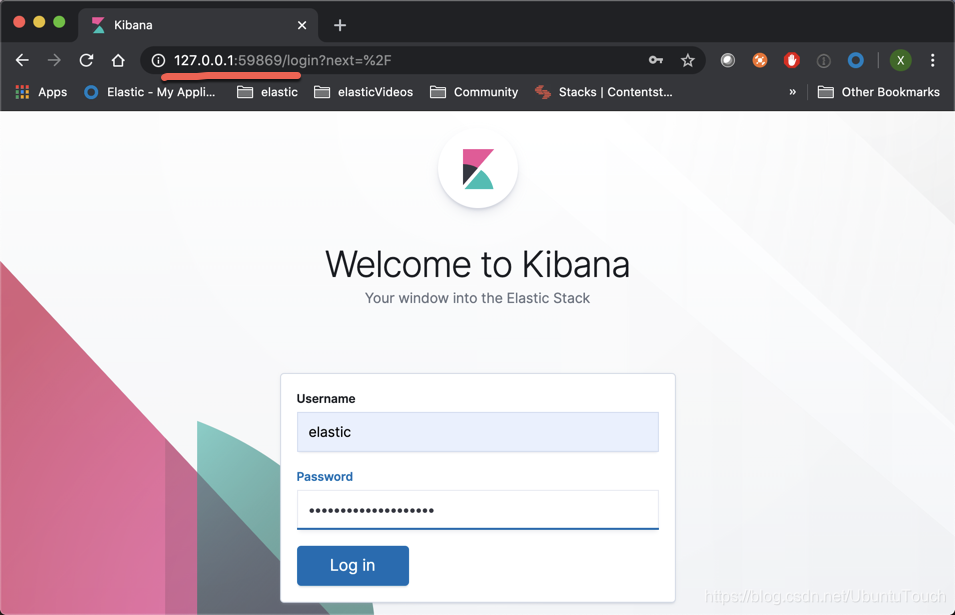

❗ Because you are using docker driver on Mac, the terminal needs to be open to run it.在浏览器中输入以上地址:http://127.0.0.1:59869

Log in as the elastic user with the password HvWqpeKSs6BznGG8aall generated in the previous section (it is the same password we used for Elasticsearch):

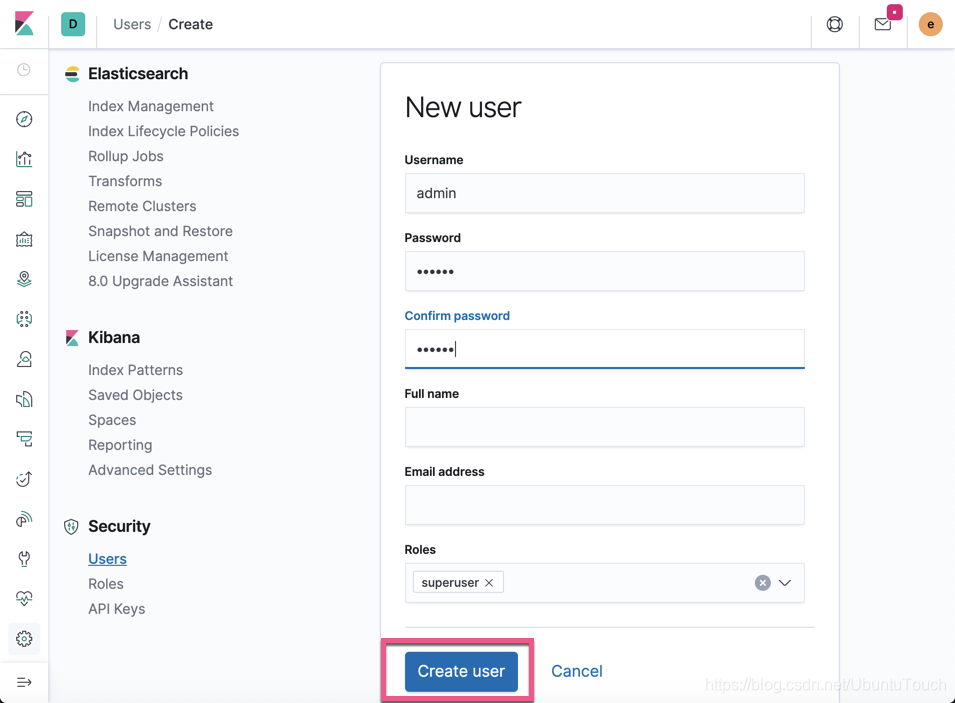

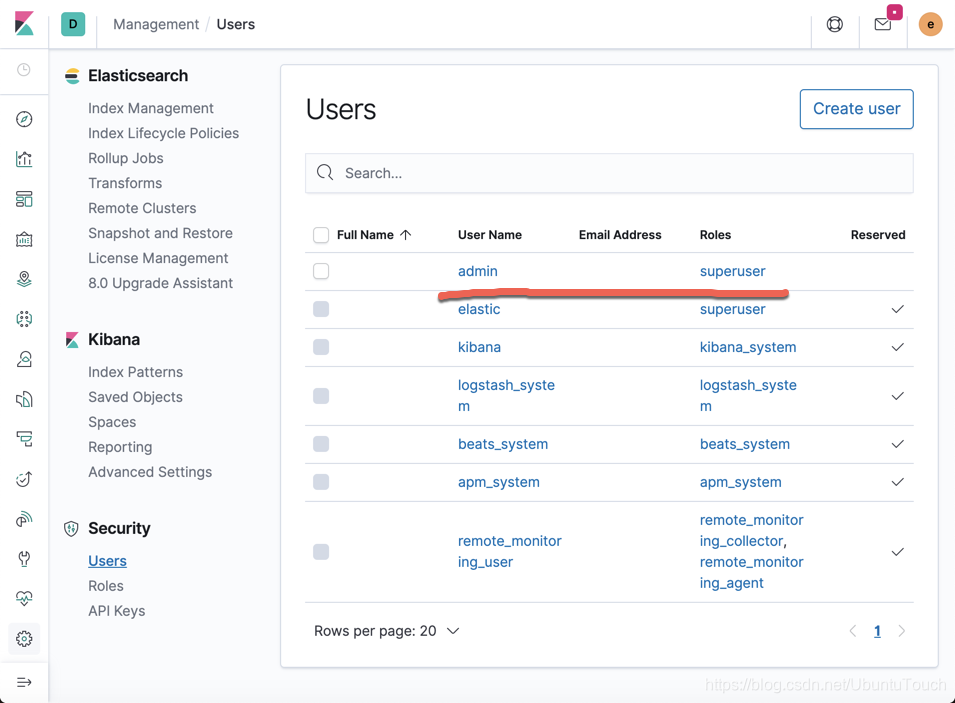

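Before continuing, I recommend that you stop using the elastic user (keep it for cross-service access only) and create a dedicated user for accessing Kibana. Go to Management > Security > Users, then click Create user:

Fill in the form, then click the Create user button to save: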
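The same user can also be created without the UI through the Elasticsearch create-user API (`POST /_security/user/<name>`). The following is only a sketch under stated assumptions: es_create_user is a hypothetical helper name, the base URL is the one printed by minikube service, and the role passed in (kibana_user in the usage line) is an assumption that should be checked against the built-in roles of your Elasticsearch version.

```shell
# es_create_user is a hypothetical helper name. It calls the Elasticsearch
# create-user API (POST /_security/user/<name>) as the elastic user.
# Arguments: base URL, elastic password, new user name, new password, role.
# The JSON body is built with printf, so values containing double quotes
# or backslashes are not supported by this sketch.
es_create_user() {
  body=$(printf '{"password":"%s","roles":["%s"]}' "$4" "$5")
  curl -s -u "elastic:$2" -X POST "$1/_security/user/$3" \
    -H 'Content-Type: application/json' -d "$body"
}

# Usage (URL, user name, password and role are placeholder values):
#   es_create_user http://127.0.0.1:59481 "$ELASTIC_PASSWORD" alice 'change-me-please' kibana_user
```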

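Next, click Stack Monitoring to check the health of our cluster:

In summary, we now have a ready-to-use Elasticsearch + Kibana stack that can store and visualize our infrastructure and application data (metrics, logs and traces).

Next steps

In the next article, we will learn how to install and configure Metricbeat in order to collect metrics and monitor Kubernetes. For details, see "运用 Elastic Stack 对 Kubernetes 进行监控 (三)" (Monitoring Kubernetes with the Elastic Stack, part 3).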
