原理

TF(Term Frequency):词频

T F = 该 词 频 数 文 档 词 语 总 数 TF = \frac{该词频数}{文档词语总数} TF=文档词语总数该词频数

IDF(Inverse Document Frequency):逆文本频率指数

I D F = log ( 文 档 总 数 出 现 该 词 文 档 数 + 1 ) IDF = \log(\frac{文档总数}{出现该词文档数+1}) IDF=log(出现该词文档数+1文档总数)

调用jieba(免训练)

from jieba.analyse import tfidf

sentence = '佛山市科技局发布关于发展佛山市人工智能项目的通知'

print(tfidf(sentence))

print(tfidf(sentence, allowPOS=('n', 'ns', 'v', 'vn'))) # 按词性筛选

print(tfidf(sentence, allowPOS=('n', 'ns', 'v', 'vn'), withFlag=True)) # 返回词性

print(tfidf(sentence, withWeight=True)) # 返回权重

打印结果

['佛山市', '科技局', '人工智能', '通知', '发布', '关于', '项目', '发展']

['佛山市', '科技局', '人工智能', '通知', '发布', '项目', '发展']

[pair('佛山市', 'ns'), pair('科技局', 'n'), pair('人工智能', 'n'), pair('通知', 'v'), pair('发布', 'v'), pair('项目', 'n'), pair('发展', 'vn')]

[('佛山市', 2.2638012411777777), ('科技局', 1.3454353536333334), ('人工智能', 1.0508918217211112), ('通知', 0.6714233436266667), ('发布', 0.5657954481322222), ('关于', 0.5532763439699999), ('项目', 0.5425367102355555), ('发展', 0.39722939449333333)]

Python手写

from collections import Counter

from math import log10

from re import split

from jieba.posseg import dt

FLAGS = set('a an b f i j l n nr nrfg nrt ns nt nz s t v vi vn z eng'.split())

def cut(text):

for sentence in split('[^a-zA-Z0-9\u4e00-\u9fa5]+', text.strip()):

for w in dt.cut(sentence):

if len(w.word) > 1 and w.flag in FLAGS:

yield w.word

class TFIDF:

def __init__(self):

self.idf = None

self.idf_max = None

def fit(self, texts):

texts = [set(cut(text)) for text in texts]

lent = len(texts)

words = set(w for t in texts for w in t)

self.idf = {w: log10(lent/(sum((w in t)for t in texts)+1)) for w in words}

self.idf_max = log10(lent)

return self

def get_idf(self, word):

return self.idf.get(word, self.idf_max)

def extract(self, text, top_n=10):

counter = Counter()

for w in cut(text):

counter[w] += self.get_idf(w)

return [i[0] for i in counter.most_common(top_n)]

tfidf = TFIDF().fit(['奶茶', '巧克力奶茶', '巧克力酸奶', '巧克力', '巧克力']*2)

print(tfidf.extract('酸奶巧克力奶茶'))

sklearn

from re import split

from jieba.posseg import dt

from sklearn.feature_extraction.text import TfidfVectorizer

from collections import Counter

FLAGS = set('a an b f i j l n nr nrfg nrt ns nt nz s t v vi vn z eng'.split())

def cut(text):

for sentence in split('[^a-zA-Z0-9\u4e00-\u9fa5]+', text.strip()):

for w in dt.cut(sentence):

if len(w.word) > 2 and w.flag in FLAGS:

yield w.word

class TFIDF:

def __init__(self, idf):

self.idf = idf

@classmethod

def train(cls, texts):

model = TfidfVectorizer(tokenizer=cut)

model.fit(texts)

idf = {w: model.idf_[i] for w, i in model.vocabulary_.items()}

return cls(idf)

def get_idf(self, word):

return self.idf.get(word, max(self.idf.values()))

def extract(self, text, top_n=10):

counter = Counter()

for w in cut(text):

counter[w] += self.get_idf(w)

return [i[0] for i in counter.most_common(top_n)]

gensim

from gensim.corpora import Dictionary

from gensim.models import TfidfModel

from re import split

from jieba.posseg import dt

FLAGS = set('a an b f i j l n nr nrfg nrt ns nt nz s t v vi vn z eng'.split())

def lcut(text):

return [

w.word for sentence in split('[^a-zA-Z0-9\u4e00-\u9fa5]+', text.strip())

for w in dt.cut(sentence)if len(w.word) > 2 and w.flag in FLAGS]

class TFIDF:

def __init__(self, dictionary, model):

self.model = model

self.doc2bow = dictionary.doc2bow

self.id2word = {i: w for w, i in dictionary.token2id.items()}

@classmethod

def train(cls, texts):

texts = [lcut(text) for text in texts]

dictionary = Dictionary(texts)

corpus = [dictionary.doc2bow(text) for text in texts]

model = TfidfModel(corpus)

return cls(dictionary, model)

def extract(self, text, top_n=10):

vector = self.doc2bow(lcut(text))

key_words = sorted(self.model[vector], key=lambda x: x[1], reverse=True)

return [self.id2word[i] for i, j in key_words][:top_n]

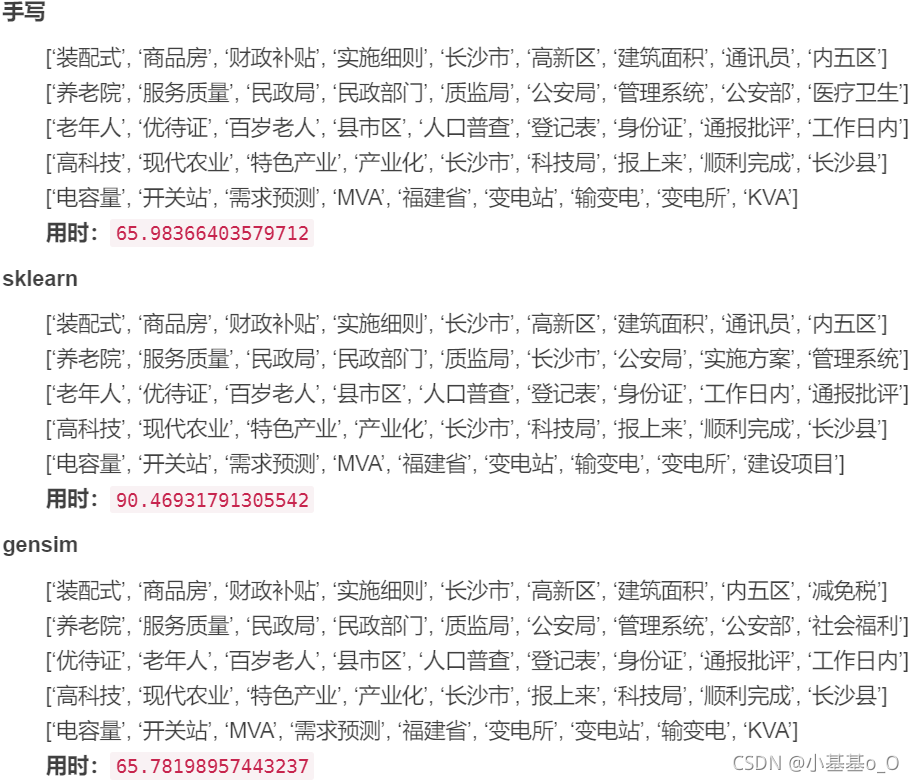

手写、sklearn、gensim结果比较

from time import time

t0 = time()

with open('policy.txt', encoding='utf-8')as f:

_texts = f.read().strip().split('\n\n')

tfidf = TFIDF.train(_texts)

for _text in _texts:

print(tfidf.extract(_text))

print(time() - t0)

结果打印:

速度:手写≈gensim>sklearn

关键词:三者Top2完全相同,之后不完全相同

1818

1818

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?