File block error in HDFS

HDFS path:

Suspected cause: a file lease that was never released.

Reference:

https://blog.csdn.net/qq_29992111/article/details/80533563

1. Run:

hdfs fsck /user/zhihui004/bjwx.db/dwd_d_yd_sms/dayid=08/ -openforwrite

2. Check the output:

[zhihui004@nlkfpt-xian-yh34 ~]$ hdfs fsck /user/zhihui004/bjwx.db/dwd_d_yd_sms/dayid=08/ -openforwrite

Connecting to namenode via http://nlkfpt-nf5280-hx71:50070/fsck?ugi=zhihui004&openforwrite=1&path=%2Fuser%2Fzhihui004%2Fbjwx.db%2Fdwd_d_yd_sms%2Fdayid%3D08

FSCK started by zhihui004 (auth:SIMPLE) from /10.236.10.124 for path /user/zhihui004/bjwx.db/dwd_d_yd_sms/dayid=08 at Fri Nov 08 17:41:27 CST 2019

/user/zhihui004/bjwx.db/dwd_d_yd_sms/dayid=08/CPHQ-20191029-124613_dwd_d_yd_sms_20191104_0000001.011 521838526464 bytes, 486 block(s), OPENFORWRITE: Status: HEALTHY

Total size: 521838526464 B

Total dirs: 1

Total files: 1

Total symlinks: 0

Total blocks (validated): 486 (avg. block size 1073741824 B)

Minimally replicated blocks: 486 (100.0 %)

Over-replicated blocks: 0 (0.0 %)

Under-replicated blocks: 0 (0.0 %)

Mis-replicated blocks: 0 (0.0 %)

Default replication factor: 3

Average block replication: 3.0

Corrupt blocks: 0

Missing replicas: 0 (0.0 %)

Number of data-nodes: 62

Number of racks: 4

FSCK ended at Fri Nov 08 17:41:27 CST 2019 in 5 milliseconds

The filesystem under path '/user/zhihui004/bjwx.db/dwd_d_yd_sms/dayid=08' is HEALTHY
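When many files need checking, the same fsck call can be scripted. Below is a minimal sketch that assumes bash and an `hdfs` client on the PATH. The function name `is_open_for_write` and the `HDFS_BIN` override (handy for testing with a stub) are hypothetical. The function only looks for the `OPENFORWRITE` marker that fsck prints beside a file still open for write, as in the report above.

```shell
# Hypothetical override for the client binary; defaults to the real hdfs CLI.
HDFS_BIN="${HDFS_BIN:-hdfs}"

# Return 0 if `hdfs fsck <path> -openforwrite` reports the path as OPENFORWRITE.
is_open_for_write() {
  local path="$1" report
  # fsck exits non-zero for unhealthy paths, so capture the report and ignore its status.
  report="$("$HDFS_BIN" fsck "$path" -openforwrite 2>/dev/null)" || true
  case "$report" in
    *OPENFORWRITE*) return 0 ;;
    *) return 1 ;;
  esac
}
```

Usage: `is_open_for_write /user/zhihui004/bjwx.db/dwd_d_yd_sms/dayid=08/CPHQ-20191029-124613_dwd_d_yd_sms_20191104_0000001.011 && echo "still open"`.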

3. The problem file:

/user/zhihui004/bjwx.db/dwd_d_yd_sms/dayid=08/CPHQ-20191029-124613_dwd_d_yd_sms_20191104_0000001.011

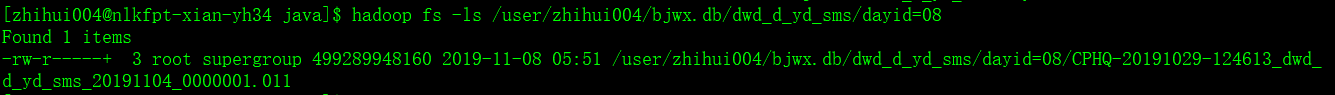

4. View the file:

hadoop fs -tail /user/zhihui004/bjwx.db/dwd_d_yd_sms/dayid=08/CPHQ-20191029-124613_dwd_d_yd_sms_20191104_0000001.011

Reading the file fails with the error: Error: java.io.IOException: Cannot obtain block length for LocatedBlock
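You can probe other files for the same symptom from a script by attempting the read and looking for that message. This is a sketch that assumes bash. `has_block_length_error` and the `HADOOP_BIN` override are hypothetical names. It reuses `hadoop fs -tail`, which reads the end of the file, where the unclosed last block sits.

```shell
# Hypothetical override for the client binary; defaults to the real hadoop CLI.
HADOOP_BIN="${HADOOP_BIN:-hadoop}"

# Return 0 if reading the file's tail fails with
# "Cannot obtain block length for LocatedBlock", 1 otherwise.
has_block_length_error() {
  local path="$1" err
  # Capture only stderr (file contents on stdout are discarded); ignore the exit status.
  err="$("$HADOOP_BIN" fs -tail "$path" 2>&1 >/dev/null)" || true
  case "$err" in
    *"Cannot obtain block length"*) return 0 ;;
    *) return 1 ;;
  esac
}
```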

5. Run the lease-recovery command on the file:

hdfs debug recoverLease -path /user/zhihui004/bjwx.db/dwd_d_yd_sms/dayid=08/CPHQ-20191029-124613_dwd_d_yd_sms_20191104_0000001.011 -retries 3

6. On re-checking, the problem was resolved.
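Steps 5 and 6 can be combined into one helper. It runs the recovery and then re-runs the step 1 fsck on the file. This is a sketch that assumes bash. `recover_and_verify` and `HDFS_BIN` are hypothetical names. The helper treats the file as fixed only when fsck no longer reports it as `OPENFORWRITE`. It does not trust the recovery command alone, because that command can give up while block recovery is still in progress.

```shell
# Hypothetical override for the client binary; defaults to the real hdfs CLI.
HDFS_BIN="${HDFS_BIN:-hdfs}"

# Recover the lease on one file, then confirm with fsck that it is closed.
# Prints "closed: <path>" and returns 0 on success; returns 1 if still open.
recover_and_verify() {
  local path="$1" report
  # Route recoverLease's own messages to stderr so stdout carries only the verdict.
  "$HDFS_BIN" debug recoverLease -path "$path" -retries 3 >&2 || true
  report="$("$HDFS_BIN" fsck "$path" -openforwrite 2>/dev/null)" || true
  case "$report" in
    *OPENFORWRITE*)
      echo "still open for write: $path" >&2
      return 1
      ;;
  esac
  echo "closed: $path"
}
```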

Batch troubleshooting:

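7. Later, similar problems turned up in directories holding large amounts of data, where the faulty files first have to be filtered out. Taking the root directory "/" as an example, the command is: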

hadoop fsck / -openforwrite | egrep -v '^\.+$' | egrep "MISSING|OPENFORWRITE" | grep -o "/[^ ]*" | sed -e "s/:$//"
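The filter can also be kept as a function that reads fsck output from stdin, so you can test it against a saved report. This is a sketch that assumes bash and GNU grep/sed, and `list_suspect_files` is a hypothetical name. It differs from the command above in two ways, both added for this sketch only. First, `egrep` is written as the equivalent `grep -E`. Second, `sort -u` is appended to drop duplicate paths.

```shell
# Read `hdfs fsck <dir> -openforwrite` output on stdin and print one
# suspect file path per line (open for write, or with missing blocks).
list_suspect_files() {
  grep -Ev '^\.+$' |                 # drop the progress lines made only of dots
    grep -E 'MISSING|OPENFORWRITE' | # keep lines that flag a problem
    grep -o '/[^ ]*' |               # extract the path (runs up to the first space)
    sed -e 's/:$//' |                # strip the trailing colon of "path: MISSING ..."
    sort -u                          # de-duplicate
}
```

As with the original command, a path containing spaces is cut off at the first space by the `grep -o` step.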

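8. Once the faulty files have been filtered out, repair each one with the recovery command from step 5. (The script for this is still being written.)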
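Here is a minimal sketch of such a script. It assumes bash and a list of paths arriving one per line on stdin, for example the step 7 output saved to a file. It also assumes that `hdfs debug recoverLease` exits non-zero when it gives up, so check this on your Hadoop version. `recover_all`, `HDFS_BIN` and `RETRIES` are hypothetical names. The `-path` and `-retries` options are the ones used in step 5.

```shell
# Hypothetical overrides: client binary and retry count passed to recoverLease.
HDFS_BIN="${HDFS_BIN:-hdfs}"
RETRIES="${RETRIES:-3}"

# Read HDFS paths (one per line) from stdin and run lease recovery on each.
# Prints "recovered <path>" or "FAILED <path>"; returns 1 if any path failed.
recover_all() {
  local path failed=0
  # "|| [ -n ... ]" also handles a last line without a trailing newline.
  while IFS= read -r path || [ -n "$path" ]; do
    [ -z "$path" ] && continue  # skip blank lines
    # </dev/null stops the client from consuming the remaining path list on stdin.
    if "$HDFS_BIN" debug recoverLease -path "$path" -retries "$RETRIES" \
        >/dev/null 2>&1 </dev/null; then
      echo "recovered $path"
    else
      echo "FAILED $path"
      failed=1
    fi
  done
  return "$failed"
}
```

For example, run `recover_all < suspect_files.txt`, where `suspect_files.txt` (a hypothetical name) holds the paths printed by the step 7 command. A `recovered` line only means the lease is no longer held. Files flagged as `MISSING` have lost blocks, and lease recovery is not expected to fix them.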
