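I. Preface:

Proxies and CAPTCHA recognition are unavoidable problems when scraping data. This post summarizes the JS-encrypted cookie problem I ran into while scraping free proxy IP data.

II. The problem:

For ordinary static pages, parsing with jsoup is the usual approach.

But fetching this site directly with jsoup throws an error: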

org.jsoup.HttpStatusException: HTTP error fetching URL. Status=521, URL=http://www.kuaidaili.com/ops/proxylist/1

at org.jsoup.helper.HttpConnection$Response.execute(HttpConnection.java:679)

at org.jsoup.helper.HttpConnection$Response.execute(HttpConnection.java:628)

at org.jsoup.helper.HttpConnection.execute(HttpConnection.java:260)

at org.jsoup.helper.HttpConnection.get(HttpConnection.java:249)

III. Analysis and solution:

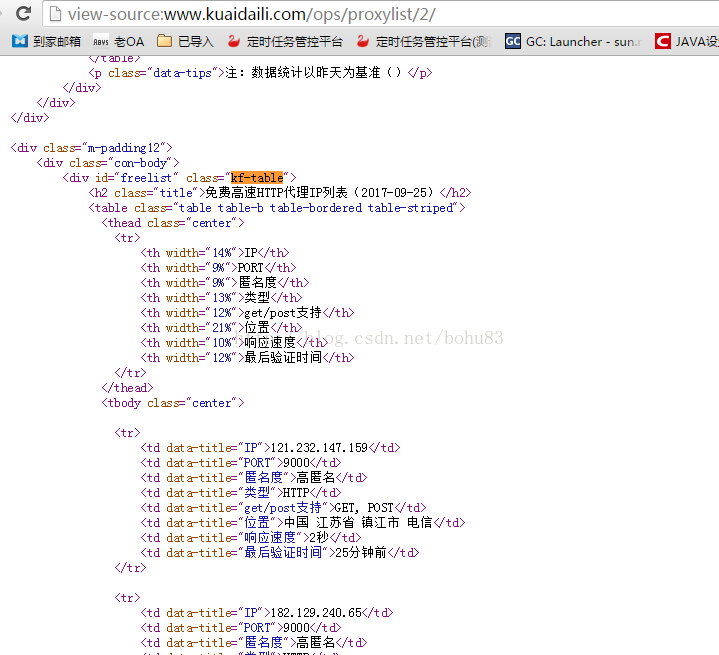

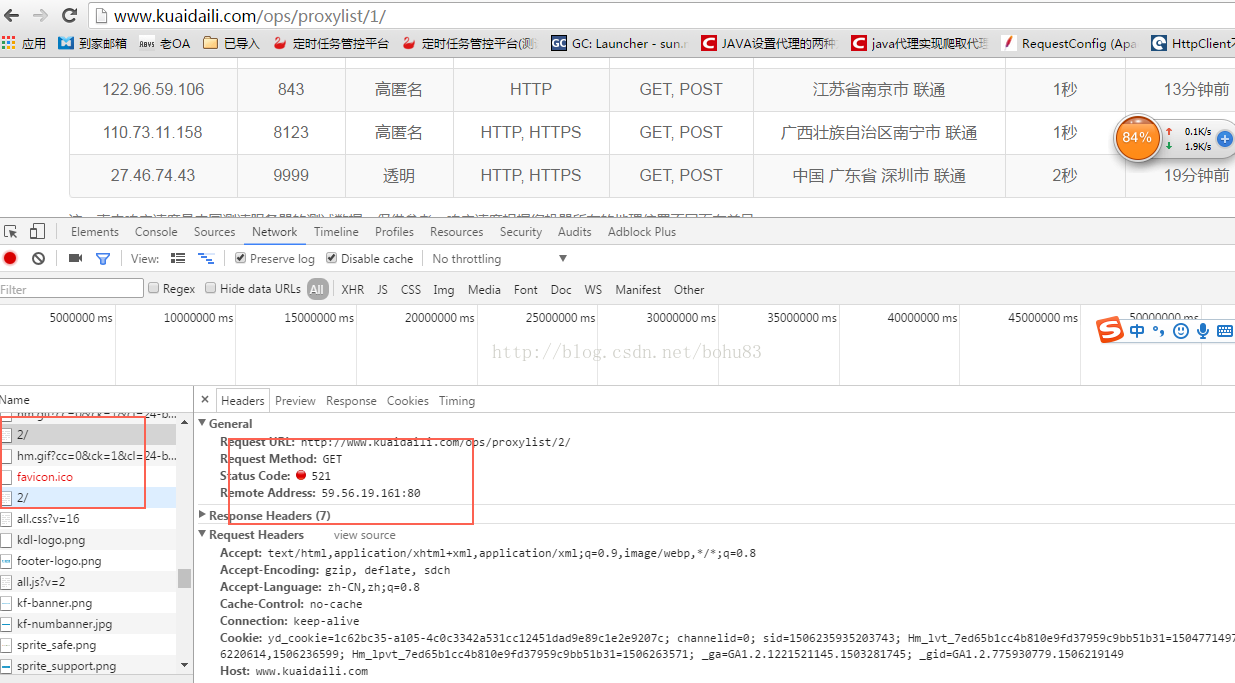

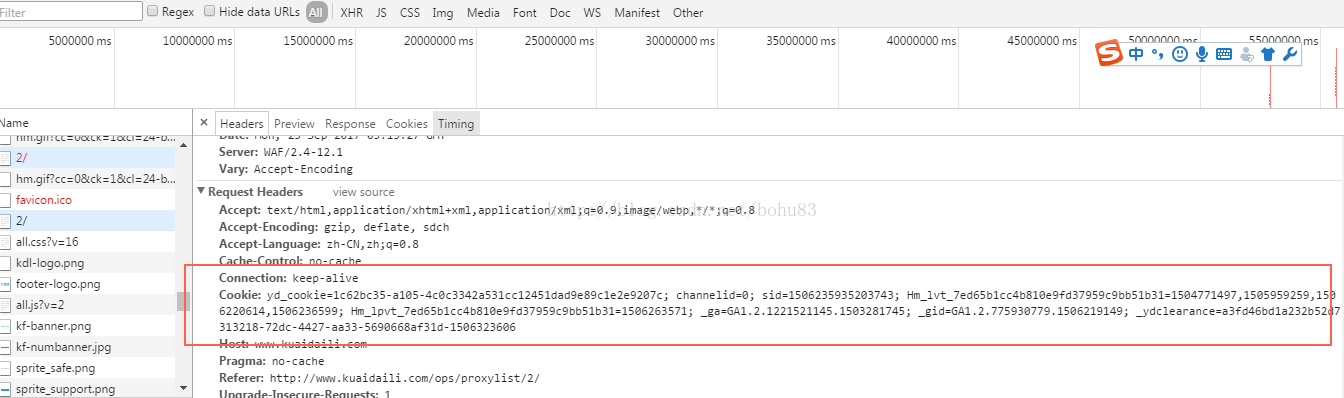

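A browser can in fact load the page normally, so let's open one and watch what happens, using Chrome as an example.

The first request clearly fails: the HTTP status code is 521, not 200.

The second request returns 200, but it carries an extra cookie: _ydclearance=a3fd46bd1a232b52d7313218-72dc-4427-aa33-5690668af31d-1506323606

That is the cause of the problem.

Let's simulate this in code and print the parameters: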

    // MyCookieSpec, getCookie, baseUrl and i are defined elsewhere (not shown in this post)
    CloseableHttpClient httpClient = HttpClients.createDefault();
    // Register the custom CookieSpec
    Registry<CookieSpecProvider> cookieSpecProviderRegistry = RegistryBuilder.<CookieSpecProvider>create()
            .register("myCookieSpec", context -> new MyCookieSpec()).build();
    String url = baseUrl + i;
    HttpGet get = new HttpGet(url);
    HttpClientContext context = HttpClientContext.create();
    context.setCookieSpecRegistry(cookieSpecProviderRegistry);
    get.setConfig(RequestConfig.custom().setCookieSpec("myCookieSpec").build());
    WebRequest request = null;
    WebClient wc = null;
    try {
        // 1. Get the Set-Cookie header returned with the 521 status
        CloseableHttpResponse response = httpClient.execute(get, context);
        // Response status
        System.out.println("status:" + response.getStatusLine());
        System.out.println(">>>>>>headers:");
        HeaderIterator iterator = response.headerIterator();
        while (iterator.hasNext()) {
            System.out.println("\t" + iterator.next());
        }
        System.out.println(">>>>>>cookies:");
        // context.getCookieStore().getCookies().forEach(System.out::println);
        String cookie = getCookie(context);
        System.out.println("cookie=" + cookie);
        response.close();

Output log:

status:HTTP/1.1 521

>>>>>>headers:

Date: Mon, 25 Sep 2017 07:10:25 GMT

Content-Type: text/html

Connection: keep-alive

Set-Cookie: yd_cookie=fa424be4-70a9-4478226851c4b3f3e8e031e4ed7860052980; Expires=1506330625; Path=/; HttpOnly

Cache-Control: no-cache, no-store

Server: WAF/2.4-12.1

>>>>>>cookies:

cookie=yd_cookie=fa424be4-70a9-4478226851c4b3f3e8e031e4ed7860052980;Expires=Mon Sep 25 17:10:25 CST 2017;Path=/

With this cookie in hand, request the target URL once more and look at the response:

HttpGet secGet = new HttpGet(url);

secGet.setHeader("Cookie",cookie);

//测试用,对比获取结果

CloseableHttpResponse secResponse = httpClient.execute(secGet, context);

System.out.println("secstatus:" + secResponse.getStatusLine());

String content = EntityUtils.toString(secResponse.getEntity());

System.out.println(content);

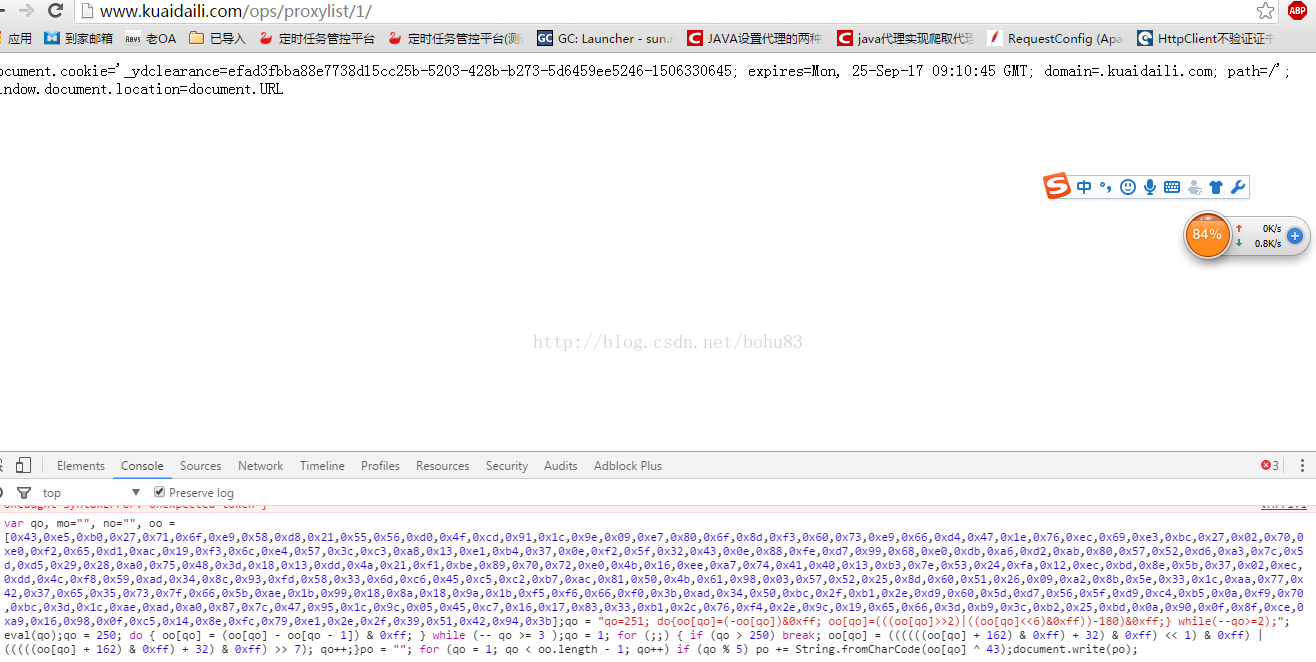

secResponse.close();<html><body><script language="javascript"> window.οnlοad=setTimeout("dv(43)", 200); function dv(VC) {var qo, mo="", no="", oo = [0x43,0xe5,0xb0,0x27,0x71,0x6f,0xe9,0x58,0xd8,0x21,0x55,0x56,0xd0,0x4f,0xcd,0x91,0x1c,0x9e,0x09,0xe7,0x80,0x6f,0x8d,0xf3,0x60,0x73,0xe9,0x66,0xd4,0x47,0x1e,0x76,0xec,0x69,0xe3,0xbc,0x27,0x02,0x70,0xe0,0xf2,0x65,0xd1,0xac,0x19,0xf3,0x6c,0xe4,0x57,0x3c,0xc3,0xa8,0x13,0xe1,0xb4,0x37,0x0e,0xf2,0x5f,0x32,0x43,0x0e,0x88,0xfe,0xd7,0x99,0x68,0xe0,0xdb,0xa6,0xd2,0xab,0x80,0x57,0x52,0xd6,0xa3,0x7c,0x5d,0xd5,0x29,0x28,0xa0,0x75,0x48,0x3d,0x18,0x13,0xdd,0x4a,0x21,0xf1,0xbe,0x89,0x70,0x72,0xe0,0x4b,0x16,0xee,0xa7,0x74,0x41,0x40,0x13,0xb3,0x7e,0x53,0x24,0xfa,0x12,0xec,0xbd,0x8e,0x5b,0x37,0x02,0xec,0xdd,0x4c,0xf8,0x59,0xad,0x34,0x8c,0x93,0xfd,0x58,0x33,0x6d,0xc6,0x45,0xc5,0xc2,0xb7,0xac,0x81,0x50,0x4b,0x61,0x98,0x03,0x57,0x52,0x25,0x8d,0x60,0x51,0x26,0x09,0xa2,0x8b,0x5e,0x33,0x1c,0xaa,0x77,0x42,0x37,0x65,0x35,0x73,0x7f,0x66,0x5b,0xae,0x1b,0x99,0x18,0x8a,0x18,0x9a,0x1b,0xf5,0xf6,0x66,0xf0,0x3b,0xad,0x34,0x50,0xbc,0x2f,0xb1,0x2e,0xd9,0x60,0x5d,0xd7,0x56,0x5f,0xd9,0xc4,0xb5,0x0a,0xf9,0x70,0xbc,0x3d,0x1c,0xae,0xad,0xa0,0x87,0x7c,0x47,0x95,0x1c,0x9c,0x05,0x45,0xc7,0x16,0x17,0x83,0x33,0xb1,0x2c,0x76,0xf4,0x2e,0x9c,0x19,0x65,0x66,0x3d,0xb9,0x3c,0xb2,0x25,0xbd,0x0a,0x90,0x0f,0x8f,0xce,0xa9,0x16,0x98,0x0f,0xc5,0x14,0x8e,0xfc,0x79,0xe1,0x2e,0x2f,0x39,0x51,0x42,0x94,0x3b];qo = "qo=251; do{oo[qo]=(-oo[qo])&0xff; oo[qo]=(((oo[qo]>>2)|((oo[qo]<<6)&0xff))-180)&0xff;} while(--qo>=2);"; eval(qo);qo = 250; do { oo[qo] = (oo[qo] - oo[qo - 1]) & 0xff; } while (-- qo >= 3 );qo = 1; for (;;) { if (qo > 250) break; oo[qo] = ((((((oo[qo] + 162) & 0xff) + 32) & 0xff) << 1) & 0xff) | (((((oo[qo] + 162) & 0xff) + 32) & 0xff) >> 7); qo++;}po = ""; for (qo = 1; qo < oo.length - 1; qo++) if (qo % 5) po += String.fromCharCode(oo[qo] ^ VC);eval("qo=eval;qo(po);");} </script> </body></html>好吧,我承认js不行。搞不懂其中的细节。

But we have the browser's web console, so we can see what this JS produces when it runs:

document.cookie='_ydclearance=efad3fbba88e7738d15cc25b-5203-428b-b273-5d6459ee5246-1506330645; expires=Mon, 25-Sep-17 09:10:45 GMT; domain=.kuaidaili.com; path=/'; window.document.location=document.URL

This value is the JS-encrypted cookie, which is exactly the data we want.
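One detail is visible in the console output above: the last dash-separated field of the `_ydclearance` value (1506330645), read as a Unix timestamp in seconds, equals the cookie's `expires` attribute (Mon, 25-Sep-17 09:10:45 GMT). This is only an observation from these logs, not documented behaviour of the WAF. Assuming the pattern holds, a crawler could check whether a stored cookie is still fresh before solving the challenge again. The sketch below illustrates that; `ClearanceExpiry`, `expiryOf` and `isFresh` are hypothetical names, not part of the original program.

```java
import java.time.Instant;

class ClearanceExpiry {
    /** Reads the trailing numeric field of a _ydclearance value as epoch seconds (assumed format). */
    static Instant expiryOf(String clearanceValue) {
        int lastDash = clearanceValue.lastIndexOf('-');
        if (lastDash < 0) {
            throw new IllegalArgumentException("no '-' separator in cookie value");
        }
        return Instant.ofEpochSecond(Long.parseLong(clearanceValue.substring(lastDash + 1)));
    }

    /** True while the assumed expiry time is still in the future relative to 'now'. */
    static boolean isFresh(String clearanceValue, Instant now) {
        return now.isBefore(expiryOf(clearanceValue));
    }

    public static void main(String[] args) {
        String value = "efad3fbba88e7738d15cc25b-5203-428b-b273-5d6459ee5246-1506330645";
        System.out.println(expiryOf(value));              // 2017-09-25T09:10:45Z
        System.out.println(isFresh(value, Instant.now())); // false for this old sample value
    }
}
```

If the assumption turns out to be wrong for other responses, the `expires` attribute in the script output is the safer source for the lifetime.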

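Roughly speaking, the JS above generates a new cookie and then reloads the page. The statement eval("qo=eval;qo(po);") is the one that performs the assignment: it evaluates the decoded string po, so it only takes effect in a browser.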

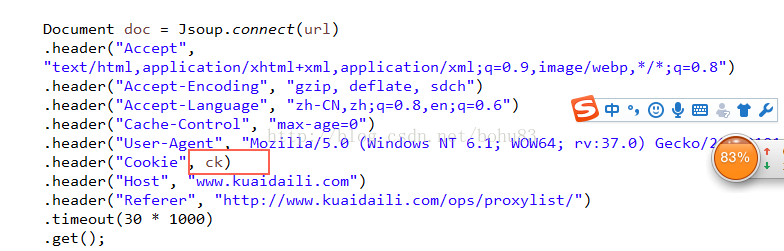

In other words, if we can obtain this new cookie, jsoup can do its job again and fetch the data.

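At this point the site's anti-scraping strategy is clear:

1. The first request returns status 521 and sets yd_cookie.

2. A request that carries yd_cookie gets the JS back.

3. Executing the JS yields the encrypted _ydclearance; with that cookie the page can be accessed normally.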
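The three steps can be read as a small state machine keyed on which cookies the client already holds. The sketch below is a simplified model of that flow, not code from the original crawler; `ChallengeFlow`, `Step` and `nextStep` are hypothetical names, and it assumes both cookies have to be sent together on the final request, as the browser does.

```java
class ChallengeFlow {
    enum Step {
        GET_YD_COOKIE,     // plain request; expect 521 plus Set-Cookie: yd_cookie
        RUN_JS_CHALLENGE,  // request with yd_cookie; execute the returned JS to obtain _ydclearance
        FETCH_PAGE         // request with both cookies; expect 200 and parse the page
    }

    /** Decides the next action from the cookies currently held. */
    static Step nextStep(boolean hasYdCookie, boolean hasClearance) {
        if (hasYdCookie && hasClearance) {
            return Step.FETCH_PAGE;
        }
        if (hasYdCookie) {
            return Step.RUN_JS_CHALLENGE;
        }
        return Step.GET_YD_COOKIE;
    }

    public static void main(String[] args) {
        System.out.println(nextStep(false, false)); // GET_YD_COOKIE
        System.out.println(nextStep(true, false));  // RUN_JS_CHALLENGE
        System.out.println(nextStep(true, true));   // FETCH_PAGE
    }
}
```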

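So the key question is how to execute this JS. HttpClient and jsoup no longer meet the need. Python has PyV8 as a JavaScript engine, so what should Java use?

A quick Google search shows HtmlUnit, Selenium and PhantomJS as the three popular JS rendering engines.

Selenium: requires an installed browser plus the matching WebDriver, with the WebDriver path configured correctly. Pros: supports complex functionality. Cons: slower and not well suited to running on a server; better for debugging with small amounts of data.

HtmlUnit: built on top of HttpClient, headless, mediocre performance, weak JS support.

PhantomJS: appears to be fairly mainstream, with acceptable performance. Worth studying in depth (I haven't used it yet).

All of these engines probably have a learning cost. The official documentation is incomplete, or I couldn't fully follow it, so you have to work things out against the API while debugging.

Put plainly, you keep stepping into pits until you finally realize how things actually work.

***********************************I am a divider*********************************************************

Enough talk. Weekend time is limited, so I went with HtmlUnit, which has the lowest learning cost.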

        URL link = new URL(url);
        request = new WebRequest(link);
        request.setCharset("UTF-8");
        // Set the Referer request header
        request.setAdditionalHeader("Referer", "http://www.kuaidaili.com/ops/proxylist/1/");
        // Set the User-Agent request header
        request.setAdditionalHeader("User-Agent", "Mozilla/5.0 (Windows NT 6.1; WOW64; rv:37.0) Gecko/20100101 Firefox/38.0");
        wc = new WebClient();
        wc.getCookieManager().setCookiesEnabled(true); // enable cookie management
        wc.getOptions().setJavaScriptEnabled(true); // enable JS; required for nasty pages like this one
        wc.getOptions().setCssEnabled(false); // disable CSS processing
        wc.getOptions().setThrowExceptionOnFailingStatusCode(false);
        wc.getOptions().setThrowExceptionOnScriptError(false);
        wc.getOptions().setRedirectEnabled(true);
        wc.getOptions().setTimeout(10000);
        wc.setJavaScriptTimeout(50000);
        // Copy the cookies collected by HttpClient into HtmlUnit
        List<Cookie> cList = context.getCookieStore().getCookies();
        if (cList != null && !cList.isEmpty()) {
            for (int j = 0; j < cList.size(); j++) {
                Cookie ck = cList.get(j);
                com.gargoylesoftware.htmlunit.util.Cookie ucookies = new com.gargoylesoftware.htmlunit.util.Cookie("www.kuaidaili.com", ck.getName(), ck.getValue());
                wc.getCookieManager().addCookie(ucookies);
            }
        }
        // Fetch the dynamic redirect page
        HtmlPage hp = wc.getPage(request);
        String body = hp.getBody().asXml();
        // Cut out the JS function
        String function = body.substring(body.indexOf("function"), body.lastIndexOf("}") + 1);
        // Replace the statement that sets the cookie with a return
        function = function.replace("eval(\"qo=eval;qo(po);\")", "return po");
        System.out.println("function:" + function);
        // Extract the JS function call and its argument (regex match)
        String regx = "setTimeout\\(\\\"\\D+\\((\\d+)\\)\"";
        Pattern pattern = Pattern.compile(regx);
        Matcher matcher = pattern.matcher(body);
        String call = "";
        String args = "";
        while (matcher.find()) {
            String group = matcher.group();
            args = group.substring(group.lastIndexOf("(") + 1, group.indexOf(")"));
            call = group.substring(group.indexOf("\"") + 1, group.lastIndexOf("\""));
            System.out.println(args + ":" + call);
        }
        // Execute the JS to get the encrypted data

After that, executing the JS yields the data: ScriptResult ckstr =hp.executeJavaScript
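The substring arithmetic in the matching loop can also be expressed with capture groups, which avoids counting quote and parenthesis positions by hand. The sketch below is an alternative under stated assumptions, not the author's code: `ChallengeCallExtractor` and `extract` are hypothetical names, and the pattern uses `\w+` for the function name (the original uses `\D+`) on the assumption that generated names may contain digits.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class ChallengeCallExtractor {
    // Matches setTimeout("name(123)" and captures the function name and its numeric argument.
    private static final Pattern CALL =
            Pattern.compile("setTimeout\\(\"(\\w+)\\((\\d+)\\)\"");

    /** Returns {functionName, argument}, or null when the page contains no such call. */
    static String[] extract(String html) {
        Matcher m = CALL.matcher(html);
        return m.find() ? new String[] { m.group(1), m.group(2) } : null;
    }

    public static void main(String[] args) {
        String html = "<script> window.onload=setTimeout(\"dv(43)\", 200); function dv(VC) {} </script>";
        String[] call = extract(html);
        System.out.println(call[0] + "(" + call[1] + ")"); // dv(43)
    }
}
```

Returning null for a page without the call makes it easy to tell a challenge page apart from a normal 200 response.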

We then pass the encrypted cookie we obtained to jsoup, and the data can be accessed normally.
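The string returned by the script is a `document.cookie='...'` assignment, and the `Set-Cookie` value from the first response also carries attributes such as Expires and Path. Only the leading name=value part belongs in a request's Cookie header. The helper below sketches that trimming step. `CookiePairs` and its methods are hypothetical names, and the input format is assumed to match the ScriptResult shown in the logs.

```java
class CookiePairs {
    /** Extracts "name=value" from a script like document.cookie='name=value; expires=...; path=/'; ... */
    static String pairFromScript(String script) {
        int start = script.indexOf('\'');
        int end = script.indexOf('\'', start + 1);
        if (start < 0 || end < 0) {
            throw new IllegalArgumentException("no quoted cookie string found");
        }
        return firstPair(script.substring(start + 1, end));
    }

    /** Keeps only the leading name=value, dropping attributes such as Expires, Domain and Path. */
    static String firstPair(String cookieString) {
        int semi = cookieString.indexOf(';');
        return (semi < 0 ? cookieString : cookieString.substring(0, semi)).trim();
    }

    /** Joins name=value pairs into a single Cookie request header value. */
    static String header(String... pairs) {
        return String.join("; ", pairs);
    }

    public static void main(String[] args) {
        String js = "document.cookie='_ydclearance=741fe8b32f4e2cc1692d597e-ff12-4627-a1a1-4200846cb27f-1506330626; "
                + "expires=Mon, 25-Sep-17 09:10:26 GMT; domain=.kuaidaili.com; path=/'; window.document.location=document.URL";
        String ydCookie = firstPair("yd_cookie=fa424be4-70a9-4478226851c4b3f3e8e031e4ed7860052980; Expires=1506330625; Path=/; HttpOnly");
        System.out.println(header(ydCookie, pairFromScript(js)));
    }
}
```

The resulting value could then be handed to jsoup, for example through `Jsoup.connect(url).header("Cookie", value)`, or per cookie through `Connection.cookie(name, value)`.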

As a test, take the two pages http://www.kuaidaili.com/ops/proxylist/1 and http://www.kuaidaili.com/ops/proxylist/2.

The log is as follows:

status:HTTP/1.1 521

>>>>>>headers:

Date: Mon, 25 Sep 2017 07:10:25 GMT

Content-Type: text/html

Connection: keep-alive

Set-Cookie: yd_cookie=fa424be4-70a9-4478226851c4b3f3e8e031e4ed7860052980; Expires=1506330625; Path=/; HttpOnly

Cache-Control: no-cache, no-store

Server: WAF/2.4-12.1

>>>>>>cookies:

cookie=yd_cookie=fa424be4-70a9-4478226851c4b3f3e8e031e4ed7860052980;Expires=Mon Sep 25 17:10:25 CST 2017;Path=/

15:10:27.023 [main] INFO c.g.htmlunit.WebClient - statusCode=[521] contentType=[text/html]

15:10:27.033 [main] INFO c.g.htmlunit.WebClient - <html><body><script language="javascript"> window.οnlοad=setTimeout("kt(180)", 200); function kt(OD) {var qo, mo="", no="", oo = [0xe7,0xd1,0x34,0xe1,0x60,0xfd,0x51,0x2f,0xc4,0x2b,0xc2,0x90,0xb5,0x43,0xf0,0x7e,0xfb,0xd9,0x22,0x42,0x32,0x5f,0x5d,0x23,0xe6,0xb4,0x5a,0x38,0xf5,0x2c,0xd6,0x94,0x2a,0xf7,0xd5,0xf5,0x99,0xe9,0x52,0x92,0x68,0x46,0x45,0x4d,0x03,0x53,0x8b,0x61,0xc6,0x2f,0x0d,0x45,0x13,0xe0,0xc7,0x08,0x80,0x80,0xb8,0x9e,0xf2,0x53,0x53,0xa3,0x81,0x21,0xdc,0xb2,0x88,0xc8,0x01,0xc0,0x68,0xd0,0x29,0x61,0xd1,0x71,0x01,0xde,0x1f,0xdc,0x1d,0xbc,0x04,0x6c,0xa4,0xec,0x35,0x3d,0x67,0xcf,0x28,0xf5,0xab,0xe3,0x08,0x78,0x4e,0xed,0x2e,0x8e,0x34,0x7c,0xd4,0x05,0x55,0x9d,0x32,0x8a,0xc2,0x3b,0x2b,0xf2,0x89,0x67,0x6d,0xb3,0x31,0x67,0x78,0x56,0x84,0xa4,0x41,0xee,0x7e,0x14,0xbb,0x63,0xbb,0x1c,0xd4,0x74,0xa1,0x9f,0xc5,0x65,0x25,0x65,0xd5,0x9d,0xc5,0xc5,0xa4,0xbc,0xfc,0x25,0x3d,0x75,0x50,0xa8,0x70,0x5d,0xf9,0x5f,0x37,0x27,0xee,0xd4,0x62,0x20,0xe7,0xa5,0x23,0xd8,0xf8,0x90,0xdd,0x4b,0xa9,0x67,0xe4,0xca,0xdd,0x9b,0x19,0xbe,0x3c,0xd3,0x9f,0x4d,0xfa,0x98,0x88,0x50,0x14,0x5a,0x18,0x7e,0xe3,0x04,0x0a,0xb9,0x89,0x99,0x41,0xaf,0x98,0x16,0xab,0x91,0x3f,0x8d,0x21,0xd8,0xbe,0x4c,0x1a,0x78,0x47,0xe4,0xc2,0x78,0xde,0x76,0xd2,0x78,0x06,0xd3,0x91,0xf7,0xf0,0x6e,0x1c,0xb1,0xd1,0xb7,0x3e,0xcb,0x99,0xf7,0x95,0x73,0x6e,0x04,0x6a,0x02,0x7f,0xb4,0x22,0x87,0x3b];qo = "qo=241; do{oo[qo]=(-oo[qo])&0xff; oo[qo]=(((oo[qo]>>5)|((oo[qo]<<3)&0xff))-211)&0xff;} while(--qo>=2);"; eval(qo);qo = 240; do { oo[qo] = (oo[qo] - oo[qo - 1]) & 0xff; } while (-- qo >= 3 );qo = 1; for (;;) { if (qo > 240) break; oo[qo] = ((((((oo[qo] + 44) & 0xff) + 55) & 0xff) << 2) & 0xff) | (((((oo[qo] + 44) & 0xff) + 55) & 0xff) >> 6); qo++;}po = ""; for (qo = 1; qo < oo.length - 1; qo++) if (qo % 6) po += String.fromCharCode(oo[qo] ^ OD);eval("qo=eval;qo(po);");} </script> </body></html>

function:function kt(OD) {var qo, mo="", no="", oo = [0xe7,0xd1,0x34,0xe1,0x60,0xfd,0x51,0x2f,0xc4,0x2b,0xc2,0x90,0xb5,0x43,0xf0,0x7e,0xfb,0xd9,0x22,0x42,0x32,0x5f,0x5d,0x23,0xe6,0xb4,0x5a,0x38,0xf5,0x2c,0xd6,0x94,0x2a,0xf7,0xd5,0xf5,0x99,0xe9,0x52,0x92,0x68,0x46,0x45,0x4d,0x03,0x53,0x8b,0x61,0xc6,0x2f,0x0d,0x45,0x13,0xe0,0xc7,0x08,0x80,0x80,0xb8,0x9e,0xf2,0x53,0x53,0xa3,0x81,0x21,0xdc,0xb2,0x88,0xc8,0x01,0xc0,0x68,0xd0,0x29,0x61,0xd1,0x71,0x01,0xde,0x1f,0xdc,0x1d,0xbc,0x04,0x6c,0xa4,0xec,0x35,0x3d,0x67,0xcf,0x28,0xf5,0xab,0xe3,0x08,0x78,0x4e,0xed,0x2e,0x8e,0x34,0x7c,0xd4,0x05,0x55,0x9d,0x32,0x8a,0xc2,0x3b,0x2b,0xf2,0x89,0x67,0x6d,0xb3,0x31,0x67,0x78,0x56,0x84,0xa4,0x41,0xee,0x7e,0x14,0xbb,0x63,0xbb,0x1c,0xd4,0x74,0xa1,0x9f,0xc5,0x65,0x25,0x65,0xd5,0x9d,0xc5,0xc5,0xa4,0xbc,0xfc,0x25,0x3d,0x75,0x50,0xa8,0x70,0x5d,0xf9,0x5f,0x37,0x27,0xee,0xd4,0x62,0x20,0xe7,0xa5,0x23,0xd8,0xf8,0x90,0xdd,0x4b,0xa9,0x67,0xe4,0xca,0xdd,0x9b,0x19,0xbe,0x3c,0xd3,0x9f,0x4d,0xfa,0x98,0x88,0x50,0x14,0x5a,0x18,0x7e,0xe3,0x04,0x0a,0xb9,0x89,0x99,0x41,0xaf,0x98,0x16,0xab,0x91,0x3f,0x8d,0x21,0xd8,0xbe,0x4c,0x1a,0x78,0x47,0xe4,0xc2,0x78,0xde,0x76,0xd2,0x78,0x06,0xd3,0x91,0xf7,0xf0,0x6e,0x1c,0xb1,0xd1,0xb7,0x3e,0xcb,0x99,0xf7,0x95,0x73,0x6e,0x04,0x6a,0x02,0x7f,0xb4,0x22,0x87,0x3b];qo = "qo=241; do{oo[qo]=(-oo[qo])&0xff; oo[qo]=(((oo[qo]>>5)|((oo[qo]<<3)&0xff))-211)&0xff;} while(--qo>=2);"; eval(qo);qo = 240; do { oo[qo] = (oo[qo] - oo[qo - 1]) & 0xff; } while (-- qo >= 3 );qo = 1; for (;;) { if (qo > 240) break; oo[qo] = ((((((oo[qo] + 44) & 0xff) + 55) & 0xff) << 2) & 0xff) | (((((oo[qo] + 44) & 0xff) + 55) & 0xff) >> 6); qo++;}po = ""; for (qo = 1; qo < oo.length - 1; qo++) if (qo % 6) po += String.fromCharCode(oo[qo] ^ OD);return po;}

180:kt(180)

ScriptResult[result=document.cookie='_ydclearance=741fe8b32f4e2cc1692d597e-ff12-4627-a1a1-4200846cb27f-1506330626; expires=Mon, 25-Sep-17 09:10:26 GMT; domain=.kuaidaili.com; path=/'; window.document.location=document.URL page=HtmlPage(http://www.kuaidaili.com/ops/proxylist/1)@1667534569]

_ydclearance=741fe8b32f4e2cc1692d597e-ff12-4627-a1a1-4200846cb27f-1506330626; expires=Mon, 25-Sep-17 09:10:26 GMT; domain=.kuaidaili.com; path=/

http://www.kuaidaili.com/ops/proxylist/1.shtml,正在解析ip:110.72.151.94port=8123

http://www.kuaidaili.com/ops/proxylist/1.shtml,正在解析ip:59.62.42.71port=808

http://www.kuaidaili.com/ops/proxylist/1.shtml,正在解析ip:121.232.147.106port=9000

http://www.kuaidaili.com/ops/proxylist/1.shtml,正在解析ip:182.129.241.103port=9000

http://www.kuaidaili.com/ops/proxylist/1.shtml,正在解析ip:117.158.1.210port=9797

http://www.kuaidaili.com/ops/proxylist/1.shtml,正在解析ip:182.129.241.48port=9000

http://www.kuaidaili.com/ops/proxylist/1.shtml,正在解析ip:121.232.146.181port=9000

http://www.kuaidaili.com/ops/proxylist/1.shtml,正在解析ip:117.90.5.104port=9000

http://www.kuaidaili.com/ops/proxylist/1.shtml,正在解析ip:122.96.59.99port=80

http://www.kuaidaili.com/ops/proxylist/1.shtml,正在解析ip:117.90.4.39port=9000

status:HTTP/1.1 521

>>>>>>headers:

Date: Mon, 25 Sep 2017 07:10:45 GMT

Content-Type: text/html

Connection: keep-alive

Set-Cookie: yd_cookie=bec9e152-9fef-4e1d7f68f1a0fe7c3550f7195af4f1450737; Expires=1506330645; Path=/; HttpOnly

Cache-Control: no-cache, no-store

Server: WAF/2.4-12.1

>>>>>>cookies:

cookie=yd_cookie=bec9e152-9fef-4e1d7f68f1a0fe7c3550f7195af4f1450737;Expires=Mon Sep 25 17:10:45 CST 2017;Path=/

15:10:46.176 [main] INFO c.g.htmlunit.WebClient - statusCode=[521] contentType=[text/html]

15:10:46.176 [main] INFO c.g.htmlunit.WebClient - <html><body><script language="javascript"> window.οnlοad=setTimeout("dv(43)", 200); function dv(VC) {var qo, mo="", no="", oo = [0x43,0xe5,0xb0,0x27,0x71,0x6f,0xe9,0x58,0xd8,0x21,0x55,0x56,0xd0,0x4f,0xcd,0x91,0x1c,0x9e,0x09,0xe7,0x80,0x6f,0x8d,0xf3,0x60,0x73,0xe9,0x66,0xd4,0x47,0x1e,0x76,0xec,0x69,0xe3,0xbc,0x27,0x02,0x70,0xe0,0xf2,0x65,0xd1,0xac,0x19,0xf3,0x6c,0xe4,0x57,0x3c,0xc3,0xa8,0x13,0xe1,0xb4,0x37,0x0e,0xf2,0x5f,0x32,0x43,0x0e,0x88,0xfe,0xd7,0x99,0x68,0xe0,0xdb,0xa6,0xd2,0xab,0x80,0x57,0x52,0xd6,0xa3,0x7c,0x5d,0xd5,0x29,0x28,0xa0,0x75,0x48,0x3d,0x18,0x13,0xdd,0x4a,0x21,0xf1,0xbe,0x89,0x70,0x72,0xe0,0x4b,0x16,0xee,0xa7,0x74,0x41,0x40,0x13,0xb3,0x7e,0x53,0x24,0xfa,0x12,0xec,0xbd,0x8e,0x5b,0x37,0x02,0xec,0xdd,0x4c,0xf8,0x59,0xad,0x34,0x8c,0x93,0xfd,0x58,0x33,0x6d,0xc6,0x45,0xc5,0xc2,0xb7,0xac,0x81,0x50,0x4b,0x61,0x98,0x03,0x57,0x52,0x25,0x8d,0x60,0x51,0x26,0x09,0xa2,0x8b,0x5e,0x33,0x1c,0xaa,0x77,0x42,0x37,0x65,0x35,0x73,0x7f,0x66,0x5b,0xae,0x1b,0x99,0x18,0x8a,0x18,0x9a,0x1b,0xf5,0xf6,0x66,0xf0,0x3b,0xad,0x34,0x50,0xbc,0x2f,0xb1,0x2e,0xd9,0x60,0x5d,0xd7,0x56,0x5f,0xd9,0xc4,0xb5,0x0a,0xf9,0x70,0xbc,0x3d,0x1c,0xae,0xad,0xa0,0x87,0x7c,0x47,0x95,0x1c,0x9c,0x05,0x45,0xc7,0x16,0x17,0x83,0x33,0xb1,0x2c,0x76,0xf4,0x2e,0x9c,0x19,0x65,0x66,0x3d,0xb9,0x3c,0xb2,0x25,0xbd,0x0a,0x90,0x0f,0x8f,0xce,0xa9,0x16,0x98,0x0f,0xc5,0x14,0x8e,0xfc,0x79,0xe1,0x2e,0x2f,0x39,0x51,0x42,0x94,0x3b];qo = "qo=251; do{oo[qo]=(-oo[qo])&0xff; oo[qo]=(((oo[qo]>>2)|((oo[qo]<<6)&0xff))-180)&0xff;} while(--qo>=2);"; eval(qo);qo = 250; do { oo[qo] = (oo[qo] - oo[qo - 1]) & 0xff; } while (-- qo >= 3 );qo = 1; for (;;) { if (qo > 250) break; oo[qo] = ((((((oo[qo] + 162) & 0xff) + 32) & 0xff) << 1) & 0xff) | (((((oo[qo] + 162) & 0xff) + 32) & 0xff) >> 7); qo++;}po = ""; for (qo = 1; qo < oo.length - 1; qo++) if (qo % 5) po += String.fromCharCode(oo[qo] ^ VC);eval("qo=eval;qo(po);");} </script> </body></html>

function:function dv(VC) {var qo, mo="", no="", oo = [0x43,0xe5,0xb0,0x27,0x71,0x6f,0xe9,0x58,0xd8,0x21,0x55,0x56,0xd0,0x4f,0xcd,0x91,0x1c,0x9e,0x09,0xe7,0x80,0x6f,0x8d,0xf3,0x60,0x73,0xe9,0x66,0xd4,0x47,0x1e,0x76,0xec,0x69,0xe3,0xbc,0x27,0x02,0x70,0xe0,0xf2,0x65,0xd1,0xac,0x19,0xf3,0x6c,0xe4,0x57,0x3c,0xc3,0xa8,0x13,0xe1,0xb4,0x37,0x0e,0xf2,0x5f,0x32,0x43,0x0e,0x88,0xfe,0xd7,0x99,0x68,0xe0,0xdb,0xa6,0xd2,0xab,0x80,0x57,0x52,0xd6,0xa3,0x7c,0x5d,0xd5,0x29,0x28,0xa0,0x75,0x48,0x3d,0x18,0x13,0xdd,0x4a,0x21,0xf1,0xbe,0x89,0x70,0x72,0xe0,0x4b,0x16,0xee,0xa7,0x74,0x41,0x40,0x13,0xb3,0x7e,0x53,0x24,0xfa,0x12,0xec,0xbd,0x8e,0x5b,0x37,0x02,0xec,0xdd,0x4c,0xf8,0x59,0xad,0x34,0x8c,0x93,0xfd,0x58,0x33,0x6d,0xc6,0x45,0xc5,0xc2,0xb7,0xac,0x81,0x50,0x4b,0x61,0x98,0x03,0x57,0x52,0x25,0x8d,0x60,0x51,0x26,0x09,0xa2,0x8b,0x5e,0x33,0x1c,0xaa,0x77,0x42,0x37,0x65,0x35,0x73,0x7f,0x66,0x5b,0xae,0x1b,0x99,0x18,0x8a,0x18,0x9a,0x1b,0xf5,0xf6,0x66,0xf0,0x3b,0xad,0x34,0x50,0xbc,0x2f,0xb1,0x2e,0xd9,0x60,0x5d,0xd7,0x56,0x5f,0xd9,0xc4,0xb5,0x0a,0xf9,0x70,0xbc,0x3d,0x1c,0xae,0xad,0xa0,0x87,0x7c,0x47,0x95,0x1c,0x9c,0x05,0x45,0xc7,0x16,0x17,0x83,0x33,0xb1,0x2c,0x76,0xf4,0x2e,0x9c,0x19,0x65,0x66,0x3d,0xb9,0x3c,0xb2,0x25,0xbd,0x0a,0x90,0x0f,0x8f,0xce,0xa9,0x16,0x98,0x0f,0xc5,0x14,0x8e,0xfc,0x79,0xe1,0x2e,0x2f,0x39,0x51,0x42,0x94,0x3b];qo = "qo=251; do{oo[qo]=(-oo[qo])&0xff; oo[qo]=(((oo[qo]>>2)|((oo[qo]<<6)&0xff))-180)&0xff;} while(--qo>=2);"; eval(qo);qo = 250; do { oo[qo] = (oo[qo] - oo[qo - 1]) & 0xff; } while (-- qo >= 3 );qo = 1; for (;;) { if (qo > 250) break; oo[qo] = ((((((oo[qo] + 162) & 0xff) + 32) & 0xff) << 1) & 0xff) | (((((oo[qo] + 162) & 0xff) + 32) & 0xff) >> 7); qo++;}po = ""; for (qo = 1; qo < oo.length - 1; qo++) if (qo % 5) po += String.fromCharCode(oo[qo] ^ VC);return po;}

43:dv(43)

ScriptResult[result=document.cookie='_ydclearance=efad3fbba88e7738d15cc25b-5203-428b-b273-5d6459ee5246-1506330645; expires=Mon, 25-Sep-17 09:10:45 GMT; domain=.kuaidaili.com; path=/'; window.document.location=document.URL page=HtmlPage(http://www.kuaidaili.com/ops/proxylist/2)@1410367298]

_ydclearance=efad3fbba88e7738d15cc25b-5203-428b-b273-5d6459ee5246-1506330645; expires=Mon, 25-Sep-17 09:10:45 GMT; domain=.kuaidaili.com; path=/

http://www.kuaidaili.com/ops/proxylist/2.shtml,正在解析ip:117.90.4.39port=9000

http://www.kuaidaili.com/ops/proxylist/2.shtml,正在解析ip:122.193.14.85port=83

http://www.kuaidaili.com/ops/proxylist/2.shtml,正在解析ip:118.117.137.188port=9000

http://www.kuaidaili.com/ops/proxylist/2.shtml,正在解析ip:163.125.66.201port=9797

http://www.kuaidaili.com/ops/proxylist/2.shtml,正在解析ip:60.178.170.141port=8081

http://www.kuaidaili.com/ops/proxylist/2.shtml,正在解析ip:120.199.64.163port=8081

http://www.kuaidaili.com/ops/proxylist/2.shtml,正在解析ip:182.92.207.196port=80

http://www.kuaidaili.com/ops/proxylist/2.shtml,正在解析ip:111.62.243.64port=80

http://www.kuaidaili.com/ops/proxylist/2.shtml,正在解析ip:121.232.144.221port=9000

http://www.kuaidaili.com/ops/proxylist/2.shtml,正在解析ip:116.214.32.51port=8080

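proxy list size=0

IV. Conclusion:

The data can be fetched, but the quality of kuaidaili's free data is very poor: across two pages of 10 proxies each, not a single one was valid.

From an economic point of view, obtaining proxy IPs this way isn't worth the cost; I debugged this at home over a weekend. If the business needs proxies, buying them from a reliable provider is the better option.

V. Pitfalls hit while debugging: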

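1. The newer HtmlUnit 2.27 has a bug. The page assigns the return value of setTimeout (an integer timer id) to window.onload, which is most likely what triggers the cast failure below: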

java.lang.ClassCastException: java.lang.Integer cannot be cast to net.sourceforge.htmlunit.corejs.javascript.Function

at com.gargoylesoftware.htmlunit.javascript.host.event.EventListenersContainer.getEventHandler(EventListenersContainer.java:343)

at com.gargoylesoftware.htmlunit.javascript.host.event.EventListenersContainer.executeEventHandler(EventListenersContainer.java:280)

at com.gargoylesoftware.htmlunit.javascript.host.event.EventListenersContainer.executeBubblingListeners(EventListenersContainer.java:309)

at com.gargoylesoftware.htmlunit.javascript.host.event.EventTarget.fireEvent(EventTarget.java:201)

at com.gargoylesoftware.htmlunit.html.DomElement$2.run(DomElement.java:1375)

at net.sourceforge.htmlunit.corejs.javascript.Context.call(Context.java:637)

at net.sourceforge.htmlunit.corejs.javascript.ContextFactory.call(ContextFactory.java:518)

at com.gargoylesoftware.htmlunit.html.DomElement.fireEvent(DomElement.java:1380)

at com.gargoylesoftware.htmlunit.html.HtmlPage.executeEventHandlersIfNeeded(HtmlPage.java:1208)

at com.gargoylesoftware.htmlunit.html.HtmlPage.initialize(HtmlPage.java:289)

at com.gargoylesoftware.htmlunit.WebClient.loadWebResponseInto(WebClient.java:529)

at com.gargoylesoftware.htmlunit.WebClient.getPage(WebClient.java:396)

at com.gargoylesoftware.htmlunit.WebClient.getPage(WebClient.java:313)

at com.gargoylesoftware.htmlunit.WebClient.getPage(WebClient.java:478)

2. Missing dependency when using 2.24:

Exception in thread "main" java.lang.NoClassDefFoundError: org/w3c/dom/ElementTraversal

at java.lang.ClassLoader.defineClass1(Native Method)

at java.lang.ClassLoader.defineClass(ClassLoader.java:763)

at java.security.SecureClassLoader.defineClass(SecureClassLoader.java:142)

at java.net.URLClassLoader.defineClass(URLClassLoader.java:467)

at java.net.URLClassLoader.access$100(URLClassLoader.java:73)

at java.net.URLClassLoader$1.run(URLClassLoader.java:368)

at java.net.URLClassLoader$1.run(URLClassLoader.java:362)

at java.security.AccessController.doPrivileged(Native Method)

at java.net.URLClassLoader.findClass(URLClassLoader.java:361)

at java.lang.ClassLoader.loadClass(ClassLoader.java:424)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:331)

at java.lang.ClassLoader.loadClass(ClassLoader.java:357)

Add the xml-apis dependency:

    <dependency>
        <groupId>xml-apis</groupId>
        <artifactId>xml-apis</artifactId>
        <version>1.4.01</version>
    </dependency>

Or try 2.26.

3. ScriptResult[result=null

No result: check the spelling of the JS function name.

...
