JPEG

A photo of a cat compressed with successively more lossy compression ratios from right to left

| Filename extension | .jpg, .jpeg, .jpe |
|---|---|
| Internet media type | image/jpeg |
| Type code | JPEG |
| Uniform Type Identifier | public.jpeg |
| Magic number | ff d8 |
| Developed by | Joint Photographic Experts Group |
| Type of format | lossy image format |
| Standard(s) | ISO/IEC 10918, ITU-T T.81, ITU-T T.83, ITU-T T.84, ITU-T T.86 |

In computing, JPEG (/ˈdʒeɪpɛɡ/, pronounced "jay-peg") is a commonly used method of lossy compression for digital images, particularly those produced by digital photography. The degree of compression can be adjusted, allowing a selectable tradeoff between storage size and image quality. JPEG typically achieves 10:1 compression with little perceptible loss in image quality.

JPEG compression is used in a number of image file formats. JPEG/Exif is the most common image format used by digital cameras and other photographic image capture devices; along with JPEG/JFIF, it is the most common format for storing and transmitting photographic images on the World Wide Web.[citation needed] These format variations are often not distinguished, and are simply called JPEG.

The term "JPEG" is an acronym for the Joint Photographic Experts Group which created the standard. The MIME media type for JPEG is image/jpeg (defined in RFC 1341), except in Internet Explorer, which provides a MIME type of image/pjpeg when uploading JPEG images.[1]

JPEG supports a maximum image size of 65535×65535 pixels.[2]


The JPEG standard

The name "JPEG" stands for Joint Photographic Experts Group, the committee that created the JPEG standard as well as other standards. It is one of two sub-groups of ISO/IEC Joint Technical Committee 1, Subcommittee 29, Working Group 1 (ISO/IEC JTC 1/SC 29/WG 1), titled Coding of still pictures.[3][4][5] The group was organized in 1986[6] and issued the first JPEG standard in 1992; it was approved in September 1992 as ITU-T Recommendation T.81[7] and in 1994 as ISO/IEC 10918-1.

The JPEG standard specifies the codec, which defines how an image is compressed into a stream of bytes and decompressed back into an image, but not the file format used to contain that stream.[8] The Exif and JFIF standards define the commonly used file formats for interchange of JPEG-compressed images.

JPEG standards are formally named as Information technology – Digital compression and coding of continuous-tone still images. ISO/IEC 10918 consists of the following parts:

| Part | ISO/IEC standard | ITU-T Rec. | First public release date | Latest amendment | Title | Description |
|---|---|---|---|---|---|---|
| Part 1 | ISO/IEC 10918-1:1994 | T.81 (09/92) | 1992 | | Requirements and guidelines | |
| Part 2 | ISO/IEC 10918-2:1995 | T.83 (11/94) | 1994 | | Compliance testing | rules and checks for software conformance (to Part 1) |
| Part 3 | ISO/IEC 10918-3:1997 | T.84 (07/96) | 1996 | 1999 | Extensions | set of extensions to improve the Part 1, including the SPIFF file format |
| Part 4 | ISO/IEC 10918-4:1999 | T.86 (06/98) | 1998 | | Registration of JPEG profiles, SPIFF profiles, SPIFF tags, SPIFF colour spaces, APPn markers, SPIFF compression types and Registration Authorities (REGAUT) | methods for registering some of the parameters used to extend JPEG |
| Part 5 | ISO/IEC FDIS 10918-5 | T.871 (05/11) | (under development since 2009)[10] | | JPEG File Interchange Format (JFIF) | A popular format which has been the de facto file format for images encoded by the JPEG standard. In 2009, the JPEG Committee formally established an Ad Hoc Group to standardize JFIF as JPEG Part 5.[10] |

Ecma International TR/98 specifies the JPEG File Interchange Format (JFIF); the first edition was published in June 2009.[11]

Typical usage

The JPEG compression algorithm is at its best on photographs and paintings of realistic scenes with smooth variations of tone and color. For web usage, where the amount of data used for an image is important, JPEG is very popular. JPEG/Exif is also the most common format saved by digital cameras.

On the other hand, JPEG may not be as well suited for line drawings and other textual or iconic graphics, where the sharp contrasts between adjacent pixels can cause noticeable artifacts. Such images may be better saved in a lossless graphics format such as TIFF, GIF, PNG, or a raw image format. The JPEG standard actually includes a lossless coding mode, but that mode is not supported in most products.

Because JPEG is typically used as a lossy compression method, which somewhat reduces image fidelity, it should not be used in scenarios where exact reproduction of the data is required (such as some scientific and medical imaging applications and certain technical image processing work).

JPEG is also not well suited to files that will undergo multiple edits, as some image quality will usually be lost each time the image is decompressed and recompressed, particularly if the image is cropped or shifted, or if encoding parameters are changed – see digital generation loss for details. To avoid this, an image that is being modified or may be modified in the future can be saved in a lossless format, with a copy exported as JPEG for distribution.

JPEG compression

The compression method is usually lossy, meaning that some original image information is lost and cannot be restored, possibly affecting image quality. There is an optional lossless mode defined in the JPEG standard; however, this mode is not widely supported in products.

There is also an interlaced "Progressive JPEG" format, in which data is compressed in multiple passes of progressively higher detail. This is ideal for large images that will be displayed while downloading over a slow connection, allowing a reasonable preview after receiving only a portion of the data. However, progressive JPEGs are not as widely supported,[citation needed] and even some software which does support them (such as versions of Internet Explorer before Windows 7)[12] only displays the image after it has been completely downloaded.

There are also many medical imaging and traffic systems that create and process 12-bit JPEG images, normally grayscale images. The 12-bit JPEG format has been part of the JPEG specification for some time, but again, this format is not as widely supported.

Lossless editing

A number of alterations to a JPEG image can be performed losslessly (that is, without recompression and the associated quality loss) as long as the image dimensions are multiples of the MCU (Minimum Coded Unit) block size (usually 16 pixels in both directions, for 4:2:0 chroma subsampling). Utilities that implement this include jpegtran, with its user interface Jpegcrop, and the JPG_TRANSFORM plugin for IrfanView.

Blocks can be rotated in 90 degree increments, flipped in the horizontal, vertical and diagonal axes and moved about in the image. Not all blocks from the original image need to be used in the modified one.

The top and left edge of a JPEG image must lie on a block boundary, but the bottom and right edge need not do so. This limits the possible lossless crop operations, and also prevents flips and rotations of an image whose bottom or right edge does not lie on a block boundary for all channels (because the edge would end up on top or left, where – as aforementioned – a block boundary is obligatory).

When using lossless cropping, if the bottom or right side of the crop region is not on a block boundary then the rest of the data from the partially used blocks will still be present in the cropped file and can be recovered.

It is also possible to transform between baseline and progressive formats without any loss of quality, since the only difference is the order in which the coefficients are placed in the file.
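The size condition for lossless transforms can be sketched as a simple divisibility test. This is only an illustration: the function names `is_mcu_aligned` and `largest_aligned_size` are hypothetical and are not taken from jpegtran or any other tool, and the 16×16 default assumes 4:2:0 chroma subsampling as described above.

```python
def is_mcu_aligned(width, height, mcu_width=16, mcu_height=16):
    """Return True if both dimensions are whole multiples of the MCU size."""
    return width % mcu_width == 0 and height % mcu_height == 0


def largest_aligned_size(width, height, mcu_width=16, mcu_height=16):
    """Largest size not exceeding (width, height) that ends on MCU boundaries."""
    return (width - width % mcu_width, height - height % mcu_height)
```

For a 1000×750 image with 16×16 MCUs the right and bottom edges do not fall on a block boundary, so a tool would have to trim to the aligned size before flipping or rotating without recompression.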

JPEG files

The file format known as "JPEG Interchange Format" (JIF) is specified in Annex B of the standard. However, this "pure" file format is rarely used, primarily because of the difficulty of programming encoders and decoders that fully implement all aspects of the standard and because of certain shortcomings of the standard:

- Color space definition

- Component sub-sampling registration

- Pixel aspect ratio definition.

Several additional standards have evolved to address these issues. The first of these, released in 1992, was JPEG File Interchange Format (or JFIF), followed in recent years by Exchangeable image file format (Exif) and ICC color profiles. Both JFIF and Exif use the actual JIF byte layout, consisting of different markers, but in addition employ one of the JIF standard's extension points, namely the application markers: JFIF uses APP0, while Exif uses APP1. Within these segments of the file, which the JIF standard left for future use and does not itself interpret, these standards add specific metadata.

Thus, in some ways JFIF is a cut-down version of the JIF standard in that it specifies certain constraints (such as not allowing all the different encoding modes), while in other ways it is an extension of JIF due to the added metadata. The documentation for the original JFIF standard states:[13]

- JPEG File Interchange Format is a minimal file format which enables JPEG bitstreams to be exchanged between a wide variety of platforms and applications. This minimal format does not include any of the advanced features found in the TIFF JPEG specification or any application specific file format. Nor should it, for the only purpose of this simplified format is to allow the exchange of JPEG compressed images.

Image files that employ JPEG compression are commonly called "JPEG files", and are stored in variants of the JIF image format. Most image capture devices (such as digital cameras) that output JPEG are actually creating files in the Exif format, the format that the camera industry has standardized on for metadata interchange. On the other hand, since the Exif standard does not allow color profiles, most image editing software stores JPEG in JFIF format, and also includes the APP1 segment from the Exif file to carry the metadata in an almost-compliant way; the JFIF standard is interpreted somewhat flexibly.[14]

Strictly speaking, the JFIF and Exif standards are incompatible because they each specify that their marker segment (APP0 or APP1, respectively) appears first. In practice, most JPEG files contain a JFIF marker segment that precedes the Exif header. This allows older readers to correctly handle the older format JFIF segment, while newer readers also decode the following Exif segment, being less strict about requiring it to appear first.

JPEG filename extensions

The most common filename extensions for files employing JPEG compression are .jpg and .jpeg, though .jpe, .jfif and .jif are also used. It is also possible for JPEG data to be embedded in other file types – TIFF encoded files often embed a JPEG image as a thumbnail of the main image; and MP3 files can contain a JPEG of cover art, in the ID3v2 tag.

Color profile

Many JPEG files embed an ICC color profile (color space). Commonly used color profiles include sRGB and Adobe RGB. Because these color spaces use a non-linear transformation, the dynamic range of an 8-bit JPEG file is about 11 stops; see gamma curve.

Syntax and structure

A JPEG image consists of a sequence of segments, each beginning with a marker, each of which begins with a 0xFF byte followed by a byte indicating what kind of marker it is. Some markers consist of just those two bytes; others are followed by two bytes indicating the length of marker-specific payload data that follows. (The length includes the two bytes for the length, but not the two bytes for the marker.) Some markers are followed by entropy-coded data; the length of such a marker does not include the entropy-coded data. Note that consecutive 0xFF bytes are used as fill bytes for padding purposes (see JPEG specification section B.1.1.2 for details).

Within the entropy-coded data, after any 0xFF byte, a 0x00 byte is inserted by the encoder before the next byte, so that there does not appear to be a marker where none is intended, preventing framing errors. Decoders must skip this 0x00 byte. This technique, called byte stuffing (see JPEG specification section F.1.2.3), is only applied to the entropy-coded data, not to marker payload data.
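Byte stuffing as described above can be sketched in a few lines. The function names are hypothetical, and the sketch assumes the input is pure entropy-coded data with no restart markers embedded in it.

```python
def stuff_bytes(entropy_data: bytes) -> bytes:
    """Encoder side: insert 0x00 after every 0xFF byte."""
    return entropy_data.replace(b"\xff", b"\xff\x00")


def unstuff_bytes(stuffed: bytes) -> bytes:
    """Decoder side: drop the 0x00 that follows each 0xFF byte."""
    return stuffed.replace(b"\xff\x00", b"\xff")
```

After stuffing, no 0xFF byte in the data is followed by a nonzero byte, so a reader scanning for markers cannot mistake compressed data for one.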

| Short name | Bytes | Payload | Name | Comments |
|---|---|---|---|---|
| SOI | 0xFF, 0xD8 | none | Start Of Image | |
| SOF0 | 0xFF, 0xC0 | variable size | Start Of Frame (Baseline DCT) | Indicates that this is a baseline DCT-based JPEG, and specifies the width, height, number of components, and component subsampling (e.g., 4:2:0). |
| SOF2 | 0xFF, 0xC2 | variable size | Start Of Frame (Progressive DCT) | Indicates that this is a progressive DCT-based JPEG, and specifies the width, height, number of components, and component subsampling (e.g., 4:2:0). |
| DHT | 0xFF, 0xC4 | variable size | Define Huffman Table(s) | Specifies one or more Huffman tables. |
| DQT | 0xFF, 0xDB | variable size | Define Quantization Table(s) | Specifies one or more quantization tables. |
| DRI | 0xFF, 0xDD | 2 bytes | Define Restart Interval | Specifies the interval between RSTn markers, in macroblocks. This marker is followed by two bytes indicating the fixed size so it can be treated like any other variable size segment. |
| SOS | 0xFF, 0xDA | variable size | Start Of Scan | Begins a top-to-bottom scan of the image. In baseline DCT JPEG images, there is generally a single scan. Progressive DCT JPEG images usually contain multiple scans. This marker specifies which slice of data it will contain, and is immediately followed by entropy-coded data. |
| RSTn | 0xFF, 0xD0 … 0xD7 | none | Restart | Inserted every r macroblocks, where r is the restart interval set by a DRI marker. Not used if there was no DRI marker. The low 3 bits of the marker code cycle in value from 0 to 7. |
| APPn | 0xFF, 0xEn | variable size | Application-specific | For example, an Exif JPEG file uses an APP1 marker to store metadata, laid out in a structure based closely on TIFF. |
| COM | 0xFF, 0xFE | variable size | Comment | Contains a text comment. |
| EOI | 0xFF, 0xD9 | none | End Of Image | |

There are other Start Of Frame markers that introduce other kinds of JPEG encodings.

Since several vendors might use the same APPn marker type, application-specific markers often begin with a standard or vendor name (e.g., "Exif" or "Adobe") or some other identifying string.

At a restart marker, block-to-block predictor variables are reset, and the bitstream is synchronized to a byte boundary. Restart markers provide means for recovery after bitstream error, such as transmission over an unreliable network or file corruption. Since the runs of macroblocks between restart markers may be independently decoded, these runs may be decoded in parallel.
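The segment layout described in this section can be walked with a short loop. The following is a minimal sketch rather than a complete parser: `list_segments` is a hypothetical name, only the markers from the table above are treated as having no payload, and the walk stops at SOS because entropy-coded data follows it.

```python
import struct

# Markers from the table above that carry no length field: SOI, EOI, RST0-RST7.
STANDALONE = {0xD8, 0xD9} | set(range(0xD0, 0xD8))


def list_segments(data: bytes):
    """Return [(marker_byte, payload), ...] up to EOI or the first SOS."""
    segments = []
    pos = 0
    while pos < len(data):
        if data[pos] != 0xFF:
            raise ValueError(f"expected a marker at offset {pos}")
        while pos < len(data) and data[pos] == 0xFF:
            pos += 1                      # skip the 0xFF and any fill bytes
        if pos >= len(data):
            raise ValueError("truncated marker")
        marker = data[pos]
        pos += 1
        if marker in STANDALONE:
            segments.append((marker, b""))
            if marker == 0xD9:            # EOI ends the image
                break
            continue
        # The 2-byte big-endian length counts itself but not the marker.
        (length,) = struct.unpack(">H", data[pos:pos + 2])
        segments.append((marker, data[pos + 2:pos + length]))
        pos += length
        if marker == 0xDA:                # SOS: entropy-coded data follows
            break
    return segments
```

Applied to the first bytes of a typical file, this would list SOI followed by APP0 or APP1 segments whose payloads begin with an identifying string such as "JFIF" or "Exif".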

JPEG codec example

Although a JPEG file can be encoded in various ways, most commonly it is done with JFIF encoding. The encoding process consists of several steps:

- The representation of the colors in the image is converted from RGB to Y′CBCR, consisting of one luma component (Y'), representing brightness, and two chroma components, (CB and CR), representing color. This step is sometimes skipped.

- The resolution of the chroma data is reduced, usually by a factor of 2. This reflects the fact that the eye is less sensitive to fine color details than to fine brightness details.

- The image is split into blocks of 8×8 pixels, and for each block, each of the Y, CB, and CR data undergoes a discrete cosine transform (DCT). A DCT is similar to a Fourier transform in the sense that it produces a kind of spatial frequency spectrum.

- The amplitudes of the frequency components are quantized. Human vision is much more sensitive to small variations in color or brightness over large areas than to the strength of high-frequency brightness variations. Therefore, the magnitudes of the high-frequency components are stored with a lower accuracy than the low-frequency components. The quality setting of the encoder (for example 50 or 95 on a scale of 0–100 in the Independent JPEG Group's library[16]) affects to what extent the resolution of each frequency component is reduced. If an excessively low quality setting is used, the high-frequency components are discarded altogether.

- The resulting data for all 8×8 blocks is further compressed with a lossless algorithm, a variant of Huffman encoding.

The decoding process reverses these steps, except the quantization because it is irreversible. In the remainder of this section, the encoding and decoding processes are described in more detail.

Encoding

Many of the options in the JPEG standard are not commonly used, and as mentioned above, most image software uses the simpler JFIF format when creating a JPEG file, which among other things specifies the encoding method. Here is a brief description of one of the more common methods of encoding when applied to an input that has 24 bits per pixel (eight each of red, green, and blue). This particular option is a lossy data compression method.[citation needed]

Color space transformation

First, the image should be converted from RGB into a different color space called Y′CBCR (or, informally, YCbCr). It has three components Y', CB and CR: the Y' component represents the brightness of a pixel, and the CB and CR components represent the chrominance (split into blue and red components). This is basically the same color space as used by digital color television as well as digital video including video DVDs, and is similar to the way color is represented in analog PAL video and MAC (but not by analog NTSC, which uses the YIQ color space). The Y′CBCR color space conversion allows greater compression without a significant effect on perceptual image quality (or greater perceptual image quality for the same compression). The compression is more efficient because the brightness information, which is more important to the eventual perceptual quality of the image, is confined to a single channel. This more closely corresponds to the perception of color in the human visual system. The color transformation also improves compression by statistical decorrelation.

A particular conversion to Y′CBCR is specified in the JFIF standard, and should be performed for the resulting JPEG file to have maximum compatibility. However, some JPEG implementations in "highest quality" mode do not apply this step and instead keep the color information in the RGB color model[citation needed], where the image is stored in separate channels for red, green and blue brightness components. This results in less efficient compression, and would not likely be used when file size is especially important.
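As an illustration, the conversion can be sketched per pixel using the coefficients commonly quoted from the JFIF specification for 8-bit samples. The function name `rgb_to_ycbcr` and the rounding and clamping behaviour are assumptions of this sketch, not requirements stated in this article.

```python
def rgb_to_ycbcr(r, g, b):
    """Convert one 8-bit RGB pixel to 8-bit Y'CbCr (JFIF-style full range)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = -0.1687 * r - 0.3313 * g + 0.5 * b + 128
    cr = 0.5 * r - 0.4187 * g - 0.0813 * b + 128
    # Round to integers and clamp to the 0..255 range of an 8-bit sample.
    return tuple(min(255, max(0, round(v))) for v in (y, cb, cr))
```

Neutral greys map to Cb = Cr = 128, which is why the chroma planes of a photograph tend to be smooth and tolerate the downsampling described next.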

Downsampling

Due to the densities of color- and brightness-sensitive receptors in the human eye, humans can see considerably more fine detail in the brightness of an image (the Y' component) than in the hue and color saturation of an image (the Cb and Cr components). Using this knowledge, encoders can be designed to compress images more efficiently.

The transformation into the Y′CBCR color model enables the next usual step, which is to reduce the spatial resolution of the Cb and Cr components (called "downsampling" or "chroma subsampling"). The ratios at which the downsampling is ordinarily done for JPEG images are 4:4:4 (no downsampling), 4:2:2 (reduction by a factor of 2 in the horizontal direction), or (most commonly) 4:2:0 (reduction by a factor of 2 in both the horizontal and vertical directions). For the rest of the compression process, Y', Cb and Cr are processed separately and in a very similar manner.
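A minimal sketch of 4:2:0 downsampling for one chroma plane follows. Averaging each 2×2 group is an assumption made here for simplicity; encoders are free to use other filters. The function name `subsample_420` is hypothetical, and the plane is assumed to have even width and height.

```python
def subsample_420(plane):
    """Halve a chroma plane in both directions by averaging 2x2 groups.

    `plane` is a list of rows of integer samples with even width and height.
    """
    out = []
    for y in range(0, len(plane), 2):
        row = []
        for x in range(0, len(plane[y]), 2):
            total = (plane[y][x] + plane[y][x + 1]
                     + plane[y + 1][x] + plane[y + 1][x + 1])
            row.append((total + 2) // 4)   # rounded integer average
        out.append(row)
    return out
```

The result has a quarter of the samples of the input plane, so the two chroma planes together shrink from two thirds of the image data to one third of the remaining data.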

Block splitting

After subsampling, each channel must be split into 8×8 blocks. Depending on chroma subsampling, this yields Minimum Coded Unit (MCU) blocks of size 8×8 (4:4:4, no subsampling), 16×8 (4:2:2), or most commonly 16×16 (4:2:0). In video compression MCUs are called macroblocks.

If the data for a channel does not represent an integer number of blocks then the encoder must fill the remaining area of the incomplete blocks with some form of dummy data. Filling the edges with a fixed color (for example, black) can create ringing artifacts along the visible part of the border; repeating the edge pixels is a common technique that reduces (but does not necessarily completely eliminate) such artifacts, and more sophisticated border filling techniques can also be applied.
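The edge-repetition technique mentioned above, followed by the split into 8×8 blocks, can be sketched as follows. Both function names are hypothetical, and a plane is represented as a list of rows.

```python
def pad_to_blocks(plane, block=8):
    """Extend a plane to a multiple of `block` by repeating edge pixels."""
    height, width = len(plane), len(plane[0])
    padded_w = -(-width // block) * block      # round up to a multiple
    padded_h = -(-height // block) * block
    rows = [row + [row[-1]] * (padded_w - width) for row in plane]
    rows += [list(rows[-1]) for _ in range(padded_h - height)]
    return rows


def split_blocks(plane, block=8):
    """Yield block x block tiles, left to right and top to bottom."""
    for by in range(0, len(plane), block):
        for bx in range(0, len(plane[0]), block):
            yield [row[bx:bx + block] for row in plane[by:by + block]]
```

A 10×9 plane, for example, is padded to 16×16 and yields four blocks; the decoder discards the padded samples because the true dimensions are stored in the frame header.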

Discrete cosine transform

Next, each 8×8 block of each component (Y, Cb, Cr) is converted to a frequency-domain representation, using a normalized, two-dimensional type-II discrete cosine transform (DCT).

As an example, one such 8×8 8-bit subimage might be:

Before computing the DCT of the 8×8 block, its values are shifted from a positive range to one centered around zero. For an 8-bit image, each entry in the original block falls in the range $[0, 255]$. The mid-point of the range (in this case, the value 128) is subtracted from each entry to produce a data range that is centered around zero, so that the modified range is $[-128, 127]$. This step reduces the dynamic range requirements in the DCT processing stage that follows. (Aside from the difference in dynamic range within the DCT stage, this step is mathematically equivalent to subtracting 1024 from the DC coefficient after performing the transform, which may be a better way to perform the operation on some architectures since it involves performing only one subtraction rather than 64 of them.)

This step results in the following values:

The next step is to take the two-dimensional DCT, which is given by:

$$G_{u,v} = \sum_{x=0}^7 \sum_{y=0}^7 \alpha(u) \alpha(v) g_{x,y} \cos \left[\frac{\pi}{8} \left(x+\frac{1}{2}\right) u \right] \cos \left[\frac{\pi}{8} \left(y+\frac{1}{2}\right) v \right]$$

where

- $u$ is the horizontal spatial frequency, for the integers $0 \le u < 8$.

- $v$ is the vertical spatial frequency, for the integers $0 \le v < 8$.

- $\alpha(n) = \sqrt{1/8}$ if $n = 0$ and $\sqrt{2/8}$ otherwise is a normalizing scale factor to make the transformation orthonormal.

- $g_{x,y}$ is the pixel value at coordinates $(x,y)$.

- $G_{u,v}$ is the DCT coefficient at coordinates $(u,v)$.

If we perform this transformation on our matrix above, we get the following (rounded to the nearest two digits beyond the decimal point):

Note the top-left corner entry with the rather large magnitude. This is the DC coefficient. The remaining 63 coefficients are called the AC coefficients. The advantage of the DCT is its tendency to aggregate most of the signal in one corner of the result, as may be seen above. The quantization step to follow accentuates this effect while simultaneously reducing the overall size of the DCT coefficients, resulting in a signal that is easy to compress efficiently in the entropy stage.

The DCT temporarily increases the bit-depth of the data, since the DCT coefficients of an 8-bit/component image take up to 11 or more bits (depending on fidelity of the DCT calculation) to store. This may force the codec to temporarily use 16-bit bins to hold these coefficients, doubling the size of the image representation at this point; they are typically reduced back to 8-bit values by the quantization step. The temporary increase in size at this stage is not a performance concern for most JPEG implementations, because typically only a very small part of the image is stored in full DCT form at any given time during the image encoding or decoding process.
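A direct, unoptimized sketch of the orthonormal 8×8 type-II DCT is given below, using the normalizing factor √(1/8) for index 0 and √(2/8) otherwise. Real codecs use fast factorizations instead of this quadruple loop; the names `alpha` and `dct_2d` are chosen for this sketch only.

```python
import math


def alpha(n):
    """Normalizing scale factor that makes the 8-point DCT orthonormal."""
    return math.sqrt(1 / 8) if n == 0 else math.sqrt(2 / 8)


def dct_2d(block):
    """Orthonormal 2D type-II DCT of an 8x8 block of level-shifted samples."""
    out = [[0.0] * 8 for _ in range(8)]
    for u in range(8):
        for v in range(8):
            total = 0.0
            for x in range(8):
                for y in range(8):
                    total += (block[x][y]
                              * math.cos(math.pi / 8 * (x + 0.5) * u)
                              * math.cos(math.pi / 8 * (y + 0.5) * v))
            out[u][v] = alpha(u) * alpha(v) * total
    return out
```

For a flat block in which every sample is 100, the level-shifted value is −28 and the only nonzero coefficient is the DC term, (1/8)·64·(−28) = −224. Transforming the unshifted block gives a DC term of 800, and 800 − 1024 = −224, which illustrates the equivalence between subtracting 128 from every sample and subtracting 1024 from the DC coefficient.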

Quantization

The human eye is good at seeing small differences in brightness over a relatively large area, but not so good at distinguishing the exact strength of a high frequency brightness variation. This allows one to greatly reduce the amount of information in the high frequency components. This is done by simply dividing each component in the frequency domain by a constant for that component, and then rounding to the nearest integer. This rounding operation is the only lossy operation in the whole process (other than chroma subsampling) if the DCT computation is performed with sufficiently high precision. As a result, many of the higher frequency components are typically rounded to zero, and many of the rest become small positive or negative numbers, which take many fewer bits to represent.

A typical quantization matrix, as specified in the original JPEG Standard, is as follows:

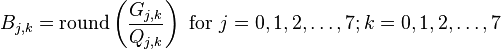

The quantized DCT coefficients are computed with

where  is the unquantized DCT coefficients;

is the unquantized DCT coefficients;  is the quantization matrix above; and

is the quantization matrix above; and  is the quantized DCT coefficients.

is the quantized DCT coefficients.

Using this quantization matrix with the DCT coefficient matrix from above results in:

For example, using −415 (the DC coefficient) and rounding to the nearest integer
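The division and rounding can be sketched as follows. `Q_LUMA` holds the values of the example luminance table from the JPEG standard as the author of this sketch understands them, and `quantize` is a hypothetical name. Note that Python's built-in `round` rounds exact halves to the nearest even integer, which may differ from a particular codec's rounding rule.

```python
# Example luminance quantization table (assumed to match the standard's example).
Q_LUMA = [
    [16, 11, 10, 16, 24, 40, 51, 61],
    [12, 12, 14, 19, 26, 58, 60, 55],
    [14, 13, 16, 24, 40, 57, 69, 56],
    [14, 17, 22, 29, 51, 87, 80, 62],
    [18, 22, 37, 56, 68, 109, 103, 77],
    [24, 35, 55, 64, 81, 104, 113, 92],
    [49, 64, 78, 87, 103, 121, 120, 101],
    [72, 92, 95, 98, 112, 100, 103, 99],
]


def quantize(coeffs, table=Q_LUMA):
    """Divide each DCT coefficient by its table entry and round to an integer."""
    return [[round(coeffs[j][k] / table[j][k]) for k in range(8)]
            for j in range(8)]
```

With a DC coefficient of −415 and a divisor of 16, the quantized value is −26; a high-frequency coefficient such as 30 in the bottom-right corner (divisor 99) rounds to 0 and is lost.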

Entropy coding

Entropy coding is a special form of lossless data compression. It involves arranging the image components in a "zigzag" order that groups similar frequencies together, applying a run-length encoding (RLE) algorithm to the runs of zeros that result, and then using Huffman coding on what is left.

The JPEG standard also allows, but does not require, decoders to support the use of arithmetic coding, which is mathematically superior to Huffman coding. However, this feature has rarely been used as it was historically covered by patents requiring royalty-bearing licenses, and because it is slower to encode and decode compared to Huffman coding. Arithmetic coding typically makes files about 5–7% smaller.

The previous quantized DC coefficient is used to predict the current quantized DC coefficient. The difference between the two is encoded rather than the actual value. The encoding of the 63 quantized AC coefficients does not use such prediction differencing.
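DC prediction can be sketched as a running difference. The function names are hypothetical, and the predictor is assumed to start at 0 for the first block, as it does at the start of a scan. Apart from −26, the DC values in the usage note are made up for illustration.

```python
def dc_differences(dc_values):
    """Encoder side: replace each quantized DC value by its difference
    from the previous block's DC value (the predictor starts at 0)."""
    diffs, previous = [], 0
    for dc in dc_values:
        diffs.append(dc - previous)
        previous = dc
    return diffs


def dc_from_differences(diffs):
    """Decoder side: accumulate the differences to recover the DC values."""
    values, previous = [], 0
    for d in diffs:
        previous += d
        values.append(previous)
    return values
```

For neighbouring blocks with DC values −26, −24, −25, −25 the encoded differences are −26, 2, −1, 0. Because adjacent blocks usually have similar average brightness, the differences are mostly small and cheap to encode.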

The zigzag sequence for the above quantized coefficients is shown below. (The format shown is just for ease of understanding/viewing.)

| | | | | | | | |
|---|---|---|---|---|---|---|---|
| −26 | | | | | | | |
| −3 | 0 | | | | | | |
| −3 | −2 | −6 | | | | | |
| 2 | −4 | 1 | −3 | | | | |
| 1 | 1 | 5 | 1 | 2 | | | |
| −1 | 1 | −1 | 2 | 0 | 0 | | |
| 0 | 0 | 0 | −1 | −1 | 0 | 0 | |
| 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 0 | 0 | 0 | 0 | 0 | 0 | 0 | |
| 0 | 0 | 0 | 0 | 0 | 0 | | |
| 0 | 0 | 0 | 0 | 0 | | | |
| 0 | 0 | 0 | 0 | | | | |
| 0 | 0 | 0 | | | | | |
| 0 | 0 | | | | | | |
| 0 | | | | | | | |

If the i-th block is represented by Bi and positions within each block are represented by (p,q) where p = 0, 1, ..., 7 and q = 0, 1, ..., 7, then any coefficient in the DCT image can be represented as Bi(p,q). Thus, in the above scheme, the order of encoding coefficients (for the i-th block) is Bi(0,0), Bi(0,1), Bi(1,0), Bi(2,0), Bi(1,1), Bi(0,2), Bi(0,3), Bi(1,2) and so on.
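The zigzag order can be generated by walking the anti-diagonals of the block and alternating direction. This is one possible way to build the order (many implementations simply use a precomputed table); the function names are hypothetical.

```python
def zigzag_order(n=8):
    """Return the (p, q) positions of an n x n block in zigzag order."""
    order = []
    for s in range(2 * n - 1):            # s = p + q selects one anti-diagonal
        cells = [(p, s - p) for p in range(n) if 0 <= s - p < n]
        # Even diagonals run from bottom-left to top-right, odd ones reverse.
        order.extend(reversed(cells) if s % 2 == 0 else cells)
    return order


def zigzag(block):
    """Flatten an 8x8 block into a 64-element list in zigzag order."""
    return [block[p][q] for p, q in zigzag_order(len(block))]
```

The first positions produced are (0,0), (0,1), (1,0), (2,0), (1,1), (0,2), (0,3), (1,2), matching the order given above.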

This encoding mode is called baseline sequential encoding. The JPEG standard also defines progressive encoding, which is not part of the baseline process. While sequential encoding encodes the coefficients of a single block at a time (in a zigzag manner), progressive encoding encodes similar-positioned coefficients of all blocks in one go, followed by the next positioned coefficients of all blocks, and so on. So, if the image is divided into N 8×8 blocks {B0, B1, B2, ..., BN−1}, then progressive encoding encodes Bi(0,0) for all blocks, i.e., for all i = 0, 1, 2, ..., N−1. This is followed by encoding the Bi(0,1) coefficient of all blocks, followed by the Bi(1,0) coefficient of all blocks, then the Bi(2,0) coefficient of all blocks, and so on. Once all similar-positioned coefficients have been encoded, the next position to be encoded is the one occurring next in the zigzag traversal as indicated in the figure above. Progressive JPEG encoding usually gives somewhat better compression than baseline sequential JPEG, because different Huffman tables (see below) can be tailored to the different frequencies in each "scan" or "pass" (which includes similar-positioned coefficients), though the difference is not large.

In the rest of the article, it is assumed that the coefficient pattern generated is due to sequential mode.

In order to encode the above generated coefficient pattern, JPEG uses Huffman encoding. JPEG has a special Huffman code word for ending the sequence prematurely when the remaining coefficients are zero.

Using this special code word: "EOB", the sequence becomes:

| | | | | | |
|---|---|---|---|---|---|
| −26 | | | | | |
| −3 | 0 | | | | |
| −3 | −2 | −6 | | | |
| 2 | −4 | 1 | −3 | | |
| 1 | 1 | 5 | 1 | 2 | |
| −1 | 1 | −1 | 2 | 0 | 0 |
| 0 | 0 | 0 | −1 | −1 | EOB |

JPEG's other code words represent combinations of (a) the number of significant bits of a coefficient, including sign, and (b) the number of consecutive zero coefficients that precede it. (Once you know how many bits to expect, it takes 1 bit to represent the choices {−1, +1}, 2 bits to represent the choices {−3, −2, +2, +3}, and so forth.) In our example block, most of the quantized coefficients are small numbers that are not preceded immediately by a zero coefficient. These more-frequent cases will be represented by shorter code words.

The JPEG standard provides general-purpose Huffman tables; encoders may also choose to generate Huffman tables optimized for the actual frequency distributions in images being encoded.
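The grouping of AC coefficients into (zero run, size) combinations can be sketched as follows. This is an illustration of the idea rather than a full encoder: it produces the symbols that would be Huffman coded but not the bit patterns themselves. The name `ac_symbols` is hypothetical, and the handling of runs longer than 15 zeros (a special "16 zeros" symbol) follows the standard rather than anything stated in this article.

```python
def ac_symbols(zigzag_coeffs):
    """Turn the 63 AC coefficients of a zigzag sequence into symbols.

    Each symbol is (run, size, value): `run` zeros precede `value`, and
    `size` is the number of bits needed for its magnitude.
    """
    symbols, run = [], 0
    for value in zigzag_coeffs[1:]:       # index 0 is the DC coefficient
        if value == 0:
            run += 1
            continue
        while run > 15:                   # emit "16 zeros" symbols as needed
            symbols.append((15, 0, 0))
            run -= 16
        symbols.append((run, abs(value).bit_length(), value))
        run = 0
    if run:                               # only zeros remain: end of block
        symbols.append("EOB")
    return symbols
```

For the example block, the sequence starts with (0, 2, −3) and (1, 2, −3), contains a single longer run, (5, 1, −1), and finishes with "EOB" in place of the 38 trailing zeros.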

Compression ratio and artifacts

The resulting compression ratio can be varied according to need by being more or less aggressive in the divisors used in the quantization phase. Ten to one compression usually results in an image that cannot be distinguished by eye from the original. 100 to one compression is usually possible, but will look distinctly artifacted compared to the original. The appropriate level of compression depends on the use to which the image will be put.

Illustration of edge busyness.[17]

Those who use the World Wide Web may be familiar with the irregularities known as compression artifacts that appear in JPEG images, which may take the form of noise around contrasting edges (especially curves and corners), or blocky images, commonly known as 'jaggies'. These are due to the quantization step of the JPEG algorithm. They are especially noticeable around sharp corners between contrasting colors (text is a good example as it contains many such corners). The analogous artifacts in MPEG video are referred to as mosquito noise, as the resulting "edge busyness" and spurious dots, which change over time, resemble mosquitoes swarming around the object.[17][18]

These artifacts can be reduced by choosing a lower level of compression; they may be eliminated by saving an image using a lossless file format, though for photographic images this will usually result in a larger file size. The images created with ray-tracing programs have noticeable blocky shapes on the terrain. Certain low-intensity compression artifacts might be acceptable when simply viewing the images, but can be emphasized if the image is subsequently processed, usually resulting in unacceptable quality. Consider the example below, demonstrating the effect of lossy compression on an edge detection processing step.

| Image | Lossless compression | Lossy compression |

|---|---|---|

| Original |  |  |

| Processed by Canny edge detector |  |  |

Some programs allow the user to vary the amount by which individual blocks are compressed. Stronger compression is applied to areas of the image that show fewer artifacts. This way it is possible to manually reduce JPEG file size with less loss of quality.

JPEG artifacts, like pixelation, are occasionally intentionally exploited for artistic purposes, as in Jpegs, by German photographer Thomas Ruff.[19][20]

Since the quantization stage always results in a loss of information, DCT-based JPEG compression is always lossy. (Information is lost both in quantizing and in rounding the floating-point numbers.) Even if the quantization matrix is a matrix of ones, information will still be lost in the rounding step.
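The point about rounding can be checked with a few lines of arithmetic. This is a minimal sketch, assuming a divisor of 1 for every coefficient; the four values are the first DCT coefficients of the worked example in this article, and the variable names are illustrative.

```python
# First four DCT coefficients of the worked example (floating point).
coefficients = [-415.38, -30.19, -61.20, 27.24]
divisor = 1  # a quantization matrix of ones

# Quantize (divide and round) and then dequantize (multiply back).
quantized = [round(c / divisor) for c in coefficients]
restored = [q * divisor for q in quantized]

# The fractional parts are gone, so the round trip is not exact.
errors = [c - r for c, r in zip(coefficients, restored)]
print(quantized)
print(errors)
```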

Decoding

Decoding to display the image consists of doing all the above in reverse.

Taking the DCT coefficient matrix (after adding the difference of the DC coefficient back in) and taking the entry-for-entry product with the quantization matrix from above results in a matrix that closely resembles the original DCT coefficient matrix in its top-left portion.
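The entry-for-entry product can be sketched as follows. The quantization matrix is assumed to be the example luminance table from the JPEG standard (the names `Q_LUMA` and `dequantize` are illustrative), and `B` holds the quantized coefficients of the worked example.

```python
# Assumed quantization matrix: the example luminance table from the JPEG
# standard, taken here to be the "quantization matrix from above".
Q_LUMA = [
    [16, 11, 10, 16, 24, 40, 51, 61],
    [12, 12, 14, 19, 26, 58, 60, 55],
    [14, 13, 16, 24, 40, 57, 69, 56],
    [14, 17, 22, 29, 51, 87, 80, 62],
    [18, 22, 37, 56, 68, 109, 103, 77],
    [24, 35, 55, 64, 81, 104, 113, 92],
    [49, 64, 78, 87, 103, 121, 120, 101],
    [72, 92, 95, 98, 112, 100, 103, 99],
]

# Quantized coefficients of the worked example.
B = [
    [-26, -3, -6, 2, 2, -1, 0, 0],
    [0, -2, -4, 1, 1, 0, 0, 0],
    [-3, 1, 5, -1, -1, 0, 0, 0],
    [-3, 1, 2, -1, 0, 0, 0, 0],
    [1, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0, 0],
]


def dequantize(quantized, table):
    """Multiply each quantized coefficient by its divisor."""
    return [
        [b * q for b, q in zip(b_row, q_row)]
        for b_row, q_row in zip(quantized, table)
    ]


F = dequantize(B, Q_LUMA)
for row in F:
    print(row)
```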

The next step is to take the two-dimensional inverse DCT (a 2D type-III DCT), which is given by:

$$
f_{x,y} = \sum_{u=0}^{7} \sum_{v=0}^{7} \alpha(u)\,\alpha(v)\,F_{u,v}
\cos\left[\frac{\pi}{8}\left(x+\frac{1}{2}\right)u\right]
\cos\left[\frac{\pi}{8}\left(y+\frac{1}{2}\right)v\right]
$$

where

- $x$ is the pixel row, for the integers $0 \le x < 8$.
- $y$ is the pixel column, for the integers $0 \le y < 8$.
- $\alpha(u)$ is defined as above, for the integers $0 \le u < 8$.
- $F_{u,v}$ is the reconstructed approximate coefficient at coordinates $(u,v)$.
- $f_{x,y}$ is the reconstructed pixel value at coordinates $(x,y)$.
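A direct, unoptimized evaluation of the inverse DCT formula might look like the sketch below. It assumes the normalization α(0) = √(1/8) and α(u) = √(2/8) for u > 0, which is consistent with the formula having no separate leading factor; the function names are illustrative. Real decoders use fast factorizations instead of this four-level loop.

```python
import math


def alpha(u):
    """Normalizing factor (assumed: sqrt(1/8) for u == 0, else sqrt(2/8))."""
    return math.sqrt(1 / 8) if u == 0 else math.sqrt(2 / 8)


def idct_8x8(F):
    """Evaluate the inverse DCT sum directly for an 8x8 block.

    F[u][v] holds the coefficients and the result f[x][y] the pixel values.
    The same cosine kernel is applied along both axes.
    """
    f = [[0.0] * 8 for _ in range(8)]
    for x in range(8):
        for y in range(8):
            total = 0.0
            for u in range(8):
                for v in range(8):
                    total += (
                        alpha(u) * alpha(v) * F[u][v]
                        * math.cos(math.pi / 8 * (x + 0.5) * u)
                        * math.cos(math.pi / 8 * (y + 0.5) * v)
                    )
            f[x][y] = total
    return f


# A block with only a DC coefficient decodes to a flat block.
dc_only = [[0.0] * 8 for _ in range(8)]
dc_only[0][0] = 8.0
flat = idct_8x8(dc_only)
print(flat[0][0], flat[7][7])
```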

Rounding the output to integer values (since the original had integer values) results in an image whose values are still shifted down by 128; adding 128 to each entry gives the decompressed subimage.

In general, the decompression process may produce values outside the original input range of $[0, 255]$. If this occurs, the decoder needs to clip the output values to keep them within that range, to prevent overflow when storing the decompressed image with the original bit depth.
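The rounding, level shift and clipping can be sketched in a few lines. The function name and the sample values are illustrative; note that Python's built-in `round` rounds exact halves to the nearest even integer, which a real decoder may handle differently.

```python
def level_shift_and_clip(block, offset=128, lo=0, hi=255):
    """Round each value, add the offset back, and clip to [lo, hi]."""
    return [
        [min(hi, max(lo, round(value) + offset)) for value in row]
        for row in block
    ]


# Illustrative inverse-DCT outputs: one in range, one too high, one too low.
samples = [[-66.2, 140.4, -130.2]]
print(level_shift_and_clip(samples))
```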

The decompressed subimage can be compared to the original subimage by taking the difference (original − decompressed). The resulting error values have an average absolute error of about 5 values per pixel (i.e., $\frac{1}{64}\sum_{x=0}^{7}\sum_{y=0}^{7} |e(x,y)| = 4.875$, where $e(x,y)$ is the error at pixel $(x,y)$).
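The average absolute error can be recomputed from the error values of the worked example. This is a small check rather than part of any decoder; the variable names are illustrative.

```python
# Error values (original minus decompressed) for the worked 8x8 example.
errors = [
    [-10, -10, 4, 6, -2, -2, 4, -9],
    [6, 4, -1, 8, 1, -2, 7, 1],
    [4, 9, 8, 2, -4, -10, -1, 8],
    [-2, 3, 5, 2, -1, -8, 2, -1],
    [-3, -2, 1, 3, 4, 0, 8, -8],
    [8, -6, -4, 0, -3, 6, 2, -6],
    [10, -11, -3, 5, -8, -4, -1, 0],
    [6, -15, -6, 14, -3, -5, -3, 7],
]

total_abs_error = sum(abs(e) for row in errors for e in row)
mean_abs_error = total_abs_error / 64
print(total_abs_error, mean_abs_error)
```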

The error is most noticeable in the bottom-left corner where the bottom-left pixel becomes darker than the pixel to its immediate right.

Required precision

The encoding description in the JPEG standard does not fix the precision needed for the output compressed image. However, the JPEG standard (and the similar MPEG standards) includes some precision requirements for the decoding, including all parts of the decoding process (variable length decoding, inverse DCT, dequantization, renormalization of outputs); the output from the reference algorithm must not exceed:

- a maximum of 1 bit of difference for each pixel component

- low mean square error over each 8×8-pixel block

- very low mean error over each 8×8-pixel block

- very low mean square error over the whole image

- extremely low mean error over the whole image

These assertions are tested on a large set of randomized input images, to handle the worst cases. The former IEEE 1180–1990 standard contained some similar precision requirements. The precision has a consequence on the implementation of decoders, and it is critical because some encoding processes (notably used for encoding sequences of images like MPEG) need to be able to construct, on the encoder side, a reference decoded image. In order to support 8-bit precision per pixel component output, dequantization and inverse DCT transforms are typically implemented with at least 14-bit precision in optimized decoders.

Effects of JPEG compression

JPEG compression artifacts blend well into photographs with detailed non-uniform textures, allowing higher compression ratios. Notice how a higher compression ratio first affects the high-frequency textures in the upper-left corner of the image, and how the contrasting lines become fuzzier. The very high compression ratio severely affects the quality of the image, although the overall colors and image form are still recognizable. However, to the human eye the precision of colors suffers less than the precision of contours (which are based on luminance). This is why images should first be transformed into a color model that separates the luminance from the chromatic information before the chromatic planes are subsampled (and possibly quantized at lower quality): the luminance plane keeps its precision and receives more of the information bits.

Sample photographs

For reference, the uncompressed 24-bit RGB bitmap image below (73,242 pixels) would require 219,726 bytes (excluding all other information headers). The file sizes indicated below include the internal JPEG information headers and some metadata. For the highest quality images (Q=100), about 8.25 bits per color pixel are required. For grayscale images, a minimum of 6.5 bits per pixel is enough (color information of comparable Q=100 quality requires about 25% more encoded bits). The highest quality image below (Q=100) is encoded at 9 bits per color pixel; the medium quality image (Q=25) uses 1 bit per color pixel. For most applications, the quality factor should not go below Q=12.5 (about 0.75 bit per pixel), as demonstrated by the low quality image. The image at the lowest quality uses only 0.13 bit per pixel and displays very poor color; it would be usable only after subsampling to a much smaller display size.

Note: The above images are not IEEE / CCIR / EBU test images, and the encoder settings are not specified or available.

| Quality | Size (bytes) | Compression ratio | Comment |
|---|---|---|---|
| Highest quality (Q = 100) | 83,261 | 2.6:1 | Extremely minor artifacts |
| High quality (Q = 50) | 15,138 | 15:1 | Initial signs of subimage artifacts |
| Medium quality (Q = 25) | 9,553 | 23:1 | Stronger artifacts; loss of high frequency information |
| Low quality (Q = 10) | 4,787 | 46:1 | Severe high frequency loss; artifacts on subimage boundaries ("macroblocking") are obvious |
| Lowest quality (Q = 1) | 1,523 | 144:1 | Extreme loss of color and detail; the leaves are nearly unrecognizable |

The medium quality photo uses only 4.3% of the storage space required for the uncompressed image, but has little noticeable loss of detail or visible artifacts. However, once a certain threshold of compression is passed, compressed images show increasingly visible defects. See the article on rate distortion theory for a mathematical explanation of this threshold effect. A particular limitation of JPEG in this regard is its non-overlapped 8×8 block transform structure. More modern designs such as JPEG 2000 and JPEG XR exhibit a more graceful degradation of quality as the bit usage decreases – by using transforms with a larger spatial extent for the lower frequency coefficients and by using overlapping transform basis functions.
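The compression ratios and bit rates quoted for the sample photographs follow from simple arithmetic on the file sizes. The sketch below recomputes them from the figures given above; the helper names are illustrative, and the file sizes include JPEG headers, so the bit rates are slight overestimates of the image data alone.

```python
PIXELS = 73_242
RAW_BYTES = PIXELS * 3  # 24-bit RGB: 3 bytes per pixel

# File sizes in bytes for each quality setting, as listed in the table.
JPEG_SIZES = {100: 83_261, 50: 15_138, 25: 9_553, 10: 4_787, 1: 1_523}


def compression_ratio(size_bytes):
    """Uncompressed size divided by compressed size."""
    return RAW_BYTES / size_bytes


def bits_per_pixel(size_bytes):
    """Average number of encoded bits spent on each pixel."""
    return size_bytes * 8 / PIXELS


for quality, size in JPEG_SIZES.items():
    print(
        f"Q={quality:>3}: {compression_ratio(size):6.1f}:1, "
        f"{bits_per_pixel(size):5.2f} bits/pixel, "
        f"{100 * size / RAW_BYTES:4.1f}% of raw size"
    )
```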

Lossless further compression

From 2004 to 2008, new research emerged on ways to further compress the data contained in JPEG images without modifying the represented image.[21][22][23][24] This has applications in scenarios where the original image is only available in JPEG format, and its size needs to be reduced for archival or transmission. Standard general-purpose compression tools cannot significantly compress JPEG files.

Typically, such schemes take advantage of improvements to the naive scheme for coding DCT coefficients, which fails to take into account:

- Correlations between magnitudes of adjacent coefficients in the same block;

- Correlations between magnitudes of the same coefficient in adjacent blocks;

- Correlations between magnitudes of the same coefficient/block in different channels;

- The DC coefficients, which taken together resemble a downscaled version of the original image multiplied by a scaling factor. Well-known schemes for lossless coding of continuous-tone images can be applied to them, achieving somewhat better compression than the Huffman-coded DPCM used in JPEG.
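For context, the DPCM step mentioned in the last item replaces each block's DC coefficient with its difference from the previous block's DC coefficient before entropy coding. The sketch below shows only that differencing step, not the Huffman coding; the DC values and function names are hypothetical.

```python
def dpcm_encode(dc_values):
    """Replace each DC value by its difference from the previous one."""
    previous = 0
    differences = []
    for value in dc_values:
        differences.append(value - previous)
        previous = value
    return differences


def dpcm_decode(differences):
    """Rebuild the DC values by accumulating the differences."""
    previous = 0
    values = []
    for diff in differences:
        previous += diff
        values.append(previous)
    return values


# Hypothetical DC coefficients of four neighboring blocks.
dc = [-26, -24, -27, -27]
encoded = dpcm_encode(dc)
print(encoded)
print(dpcm_decode(encoded))
```

Neighboring blocks tend to have similar averages, so the differences are small numbers that cost fewer bits; the schemes cited above go further by predicting from more than one neighbor.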

Some standard but rarely-used options already exist in JPEG to improve the efficiency of coding DCT coefficients: the arithmetic coding option, and the progressive coding option (which produces lower bitrates because values for each coefficient are coded independently, and each coefficient has a significantly different distribution). Modern methods have improved on these techniques by reordering coefficients to group coefficients of larger magnitude together;[21] using adjacent coefficients and blocks to predict new coefficient values;[23] dividing blocks or coefficients up among a small number of independently coded models based on their statistics and adjacent values;[22][23] and most recently, by decoding blocks, predicting subsequent blocks in the spatial domain, and then encoding these to generate predictions for DCT coefficients.[24]

Typically, such methods can compress existing JPEG files between 15 and 25 percent, and for JPEGs compressed at low-quality settings, can produce improvements of up to 65%.[23][24]

A freely-available tool called packJPG[25] is based on the 2007 paper "Improved Redundancy Reduction for JPEG Files." There are also at least two companies selling proprietary tools with similar capabilities, Infima's JPACK[26] and Smith Micro Software's StuffIt,[27] both of which claim to have pending patents on their respective technologies.[28][29]

Derived formats for stereoscopic 3D

JPEG Stereoscopic

JPEG Stereoscopic (JPS, extension .jps) is a JPEG-based format for stereoscopic images.[30][31] It has a range of configurations stored in the JPEG APP3 marker field, but usually contains one image of double width, representing two images of identical size in cross-eyed (i.e. left frame on the right half of the image and vice versa) side-by-side arrangement. This file format can be viewed as a JPEG without any special software, or can be processed for rendering in other modes.
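Once a JPS file has been decoded as an ordinary JPEG, separating the two views in the usual cross-eyed layout is a matter of cutting each row in half. The sketch below works on a decoded image represented as a list of pixel rows; the function name and this representation are assumptions, and it ignores the APP3 configuration data that may describe other layouts.

```python
def split_cross_eyed(rows):
    """Split a double-width image into (left_eye, right_eye) frames.

    In the cross-eyed arrangement the left-eye frame is stored in the
    right half of the image, and the right-eye frame in the left half.
    """
    half = len(rows[0]) // 2
    right_eye = [row[:half] for row in rows]
    left_eye = [row[half:] for row in rows]
    return left_eye, right_eye


# A tiny 2x4 stand-in for decoded pixel data.
image = [[1, 2, 3, 4], [5, 6, 7, 8]]
left, right = split_cross_eyed(image)
print(left, right)
```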

JPEG Multi-Picture Format

JPEG Multi-Picture Format (MPO, extension .mpo) is a JPEG-based format for multi-view images. It contains two or more JPEG files concatenated together.[32][33] There are also special EXIF fields describing its purpose. This is used by the Fujifilm FinePix Real 3D W1 camera, Panasonic Lumix DMC-TZ20, Sony DSC-HX7V, HTC Evo 3D, the JVC GY-HMZ1U AVCHD/MVC extension camcorder and by the Nintendo 3DS for its 3D Camera.

Patent issues

In 2002, Forgent Networks asserted that it owned and would enforce patent rights on the JPEG technology, arising from a patent that had been filed on October 27, 1986, and granted on October 6, 1987 (U.S. Patent 4,698,672). The announcement created a furor reminiscent of Unisys' attempts to assert its rights over the GIF image compression standard.

The JPEG committee investigated the patent claims in 2002 and were of the opinion that they were invalidated by prior art.[34] Others also concluded that Forgent did not have a patent that covered JPEG.[35] Nevertheless, between 2002 and 2004 Forgent was able to obtain about US$105 million by licensing their patent to some 30 companies. In April 2004, Forgent sued 31 other companies to enforce further license payments. In July of the same year, a consortium of 21 large computer companies filed a countersuit, with the goal of invalidating the patent. In addition, Microsoft launched a separate lawsuit against Forgent in April 2005.[36] In February 2006, the United States Patent and Trademark Office agreed to re-examine Forgent's JPEG patent at the request of the Public Patent Foundation.[37] On May 26, 2006 the USPTO found the patent invalid based on prior art. The USPTO also found that Forgent knew about the prior art, and did not tell the Patent Office, making any appeal to reinstate the patent highly unlikely to succeed.[38]

Forgent also possesses a similar patent granted by the European Patent Office in 1994, though it is unclear how enforceable it is.[39]

As of October 27, 2006, the U.S. patent's 20-year term appears to have expired, and in November 2006, Forgent agreed to abandon enforcement of patent claims against use of the JPEG standard.[40]

The JPEG committee has as one of its explicit goals that their standards (in particular their baseline methods) be implementable without payment of license fees, and they have secured appropriate license rights for their upcoming JPEG 2000 standard from over 20 large organizations.

Beginning in August 2007, another company, Global Patent Holdings, LLC, claimed that its patent (U.S. Patent 5,253,341), issued in 1993, is infringed by the downloading of JPEG images either on a website or through e-mail. If not invalidated, this patent could apply to any website that displays JPEG images. The patent emerged in July 2007 following a seven-year reexamination by the U.S. Patent and Trademark Office in which all of the original claims of the patent were revoked, but an additional claim (claim 17) was confirmed.[41]

In its first two lawsuits following the reexamination, both filed in Chicago, Illinois, Global Patent Holdings sued the Green Bay Packers, CDW, Motorola, Apple, Orbitz, Officemax, Caterpillar, Kraft and Peapod as defendants. A third lawsuit was filed on December 5, 2007 in South Florida against ADT Security Services, AutoNation, Florida Crystals Corp., HearUSA, MovieTickets.com, Ocwen Financial Corp. and Tire Kingdom, and a fourth lawsuit on January 8, 2008 in South Florida against the Boca Raton Resort & Club. A fifth lawsuit was filed against Global Patent Holdings in Nevada. That lawsuit was filed by Zappos.com, Inc., which was allegedly threatened by Global Patent Holdings, and seeks a judicial declaration that the '341 patent is invalid and not infringed.

Global Patent Holdings had also used the '341 patent to sue or threaten outspoken critics of broad software patents, including Gregory Aharonian[42] and the anonymous operator of a website blog known as the "Patent Troll Tracker."[43] On December 21, 2007, patent lawyer Vernon Francissen of Chicago asked the U.S. Patent and Trademark Office to reexamine the sole remaining claim of the '341 patent on the basis of new prior art.[44]

On March 5, 2008, the U.S. Patent and Trademark Office agreed to reexamine the '341 patent, finding that the new prior art raised substantial new questions regarding the patent's validity.[45] In light of the reexamination, the accused infringers in four of the five pending lawsuits have filed motions to suspend (stay) their cases until completion of the U.S. Patent and Trademark Office's review of the '341 patent. On April 23, 2008, a judge presiding over the two lawsuits in Chicago, Illinois granted the motions in those cases.[46] On July 22, 2008, the Patent Office issued the first "Office Action" of the second reexamination, finding the claim invalid based on nineteen separate grounds.[47] On Nov. 24, 2009, a Reexamination Certificate was issued cancelling all claims.

Standards

Here are some examples of standards created by ISO/IEC JTC1 SC29 Working Group 1 (WG 1), which includes the Joint Photographic Experts Group and the Joint Bi-level Image Experts Group:

- JPEG (lossy and lossless): ITU-T T.81, ISO/IEC 10918-1

- JPEG extensions: ITU-T T.84

- JPEG-LS (lossless, improved): ITU-T T.87, ISO/IEC 14495-1

- JBIG (lossless, bi-level pictures, fax): ITU-T T.82, ISO/IEC 11544

- JBIG2 (bi-level pictures): ITU-T T.88, ISO/IEC 14492

- JPEG 2000: ITU-T T.800, ISO/IEC 15444-1

- JPEG 2000 extensions: ITU-T T.801

- JPEG XR (called HD Photo prior to standardization): ITU-T T.832, ISO/IEC 29199-2

See also

- C-Cube, an early implementer of JPEG in chip form

- Comparison of graphics file formats

- Comparison of layout engines (graphics)

- Deblocking filter (video); similar deblocking methods can be applied to JPEG

- Design rule for Camera File system (DCF)

- Exchangeable image file format (Exif)

- File extensions

- Generation loss

- Graphics editing program

- Image compression

- Image file formats

- JPEG 2000

- JPEG File Interchange Format (JFIF)

- JPEG XR

- Lenna, the traditional standard image used to test image processing algorithms

- Libjpeg of Independent JPEG Group

- Lossless Image Codec FELICS

- Motion JPEG

- PGF

- PNG

References

- ^ MIME Type Detection in Internet Explorer: Uploaded MIME Types (msdn.microsoft.com)

- ^ JPEG File Layout and Format

- ^ ISO/IEC JTC 1/SC 29 (2009-05-07). "ISO/IEC JTC 1/SC 29/WG 1 – Coding of Still Pictures (SC 29/WG 1 Structure)". http://www.itscj.ipsj.or.jp/sc29/29w12901.htm. Retrieved 2009-11-11.

- ^ a b ISO/IEC JTC 1/SC 29. "Programme of Work, (Allocated to SC 29/WG 1)". http://www.itscj.ipsj.or.jp/sc29/29w42901.htm. Retrieved 2009-11-07.

- ^ ISO. "JTC 1/SC 29 – Coding of audio, picture, multimedia and hypermedia information". http://www.iso.org/iso/standards_development/technical_committees/list_of_iso_technical_committees/iso_technical_committee.htm?commid=45316. Retrieved 2009-11-11.

- ^ a b JPEG. "Joint Photographic Experts Group, JPEG Homepage". http://www.jpeg.org/jpeg/index.html. Retrieved 2009-11-08.

- ^ "T.81 : Information technology – Digital compression and coding of continuous-tone still images – Requirements and guidelines". http://www.itu.int/rec/T-REC-T.81. Retrieved 2009-11-07.

- ^ William B. Pennebaker and Joan L. Mitchell (1993). JPEG still image data compression standard (3rd ed.). Springer. p. 291. ISBN 9780442012724. http://books.google.com/books?id=AepB_PZ_WMkC&pg=PA291&dq=JPEG+%22did+not+specify+a+file+format%22&lr=&num=20&as_brr=0&ei=VHXySui8JYqukASSssWzAw#v=onepage&q=JPEG%20%22did%20not%20specify%20a%20file%20format%22&f=false.

- ^ ISO. "JTC 1/SC 29 – Coding of audio, picture, multimedia and hypermedia information". http://www.iso.org/iso/iso_catalogue/catalogue_tc/catalogue_tc_browse.htm?commid=45316. Retrieved 2009-11-07.

- ^ a b JPEG (2009-04-24). "Press Release – 48th WG1 meeting, Maui, USA – JPEG XR enters FDIS status, JPEG File Interchange Format (JFIF) to be standardized as JPEG Part 5". http://www.jpeg.org/newsrel25.html. Retrieved 2009-11-09.

- ^ "JPEG File Interchange Format (JFIF)". ECMA TR/98 1st ed.. Ecma International. 2009. http://www.ecma-international.org/publications/techreports/E-TR-098.htm. Retrieved 2011-08-01.

- ^ "Progressive Decoding Overview". Microsoft Developer Network. Microsoft. http://msdn.microsoft.com/en-us/library/ee720036(v=vs.85).aspx. Retrieved 2012-03-23.

- ^ "JFIF File Format as PDF" (PDF). http://www.w3.org/Graphics/JPEG/jfif3.pdf.

- ^ Tom Lane (1999-03-29). "JPEG image compression FAQ". http://www.faqs.org/faqs/jpeg-faq/part1/. Retrieved 2007-09-11. (q. 14: "Why all the argument about file formats?")

- ^ "ISO/IEC 10918-1 : 1993(E) p.36". http://www.digicamsoft.com/itu/itu-t81-36.html.

- ^ Thomas G. Lane.. "Advanced Features: Compression parameter selection". Using the IJG JPEG Library. http://apodeline.free.fr/DOC/libjpeg/libjpeg-3.html.

- ^ a b Phuc-Tue Le Dinh and Jacques Patry. Video compression artifacts and MPEG noise reduction. Video Imaging DesignLine. February 24, 2006. Retrieved May 28, 2009.

- ^ "3.9 mosquito noise: Form of edge busyness distortion sometimes associated with movement, characterized by moving artifacts and/or blotchy noise patterns superimposed over the objects (resembling a mosquito flying around a person's head and shoulders)." ITU-T Rec. P.930 (08/96) Principles of a reference impairment system for video

- ^ jpegs, Thomas Ruff, Aperture, May 31, 2009, 132 pp., ISBN 978-1-59711093-8

- ^ Review: jpegs by Thomas Ruff, by Joerg Colberg, Apr 17, 2009

- ^ a b I. Bauermann and E. Steinbach. Further Lossless Compression of JPEG Images. Proc. of Picture Coding Symposium (PCS 2004), San Francisco, USA, December 15–17, 2004.

- ^ a b N. Ponomarenko, K. Egiazarian, V. Lukin and J. Astola. Additional Lossless Compression of JPEG Images, Proc. of the 4th Intl. Symposium on Image and Signal Processing and Analysis (ISPA 2005), Zagreb, Croatia, pp.117–120, September 15–17, 2005.

- ^ a b c d M. Stirner and G. Seelmann. Improved Redundancy Reduction for JPEG Files. Proc. of Picture Coding Symposium (PCS 2007), Lisbon, Portugal, November 7–9, 2007

- ^ a b c Ichiro Matsuda, Yukio Nomoto, Kei Wakabayashi and Susumu Itoh. Lossless Re-encoding of JPEG images using block-adaptive intra prediction. Proceedings of the 16th European Signal Processing Conference (EUSIPCO 2008).

- ^ "Latest Binary Releases of packJPG: V2.3a". January 3, 2008. http://www.elektronik.htw-aalen.de/packjpg/.

- ^ "Reduce Your JPEG Storage and Bandwidth Cost by up to 80% While Enhancing User's Experience With Infima's JPACK(TM) Compression Solution". Infima Technologies. November 17, 2008. http://www.marketwatch.com/news/story/Reduce-Your-JPEG-Storage-Bandwidth/story.aspx?guid=%7B924ADA85-3E91-40FE-B0A1-162EC91A9467%7D.

- ^ "StuffIt Image Compression White Paper rev. 2.1". 1/5/2006. http://my.smithmicro.com/stuffitcompression/wp_stuffit_imgcomp.pdf.

- ^ "Infima Ultimate Compression". Infima Technologies. http://www.myinfima.com/images/.

- ^ "Stuffit Image". Smith Micro Software. http://my.smithmicro.com/stuffitcompression/imagecompression.html.

- ^ J. Siragusa, D. C. Swift, “General Purpose Stereoscopic Data Descriptor”, VRex, Inc., Elmsford, New York, USA, 1997.

- ^ Tim Kemp, JPS files

- ^ "Multi-Picture Format" (PDF). http://www.cipa.jp/english/hyoujunka/kikaku/pdf/DC-007_E.pdf. Retrieved 2011-05-29.

- ^ cybereality, MPO2Stereo: Convert Fujifilm MPO files to JPEG stereo pairs, mtbs3d, http://www.mtbs3d.com/phpbb/viewtopic.php?f=3&t=4124&start=15, retrieved 12 January 2010

- ^ "Concerning recent patent claims". Jpeg.org. 2002-07-19. http://www.jpeg.org/newsrel1.html. Retrieved 2011-05-29.

- ^ JPEG and JPEG2000 – Between Patent Quarrel and Change of Technology (Archive)

- ^ Kawamoto, Dawn (April 22, 2005). "Graphics patent suit fires back at Microsoft". CNET News. http://news.cnet.com/2100-1025_3-5681112.html. Retrieved 2009-01-28.

- ^ "Trademark Office Re-examines Forgent JPEG Patent". Publish.com. February 3, 2006. http://www.publish.com/c/a/Graphics-Tools/Trademark-Office-Reexamines-Forgent-JPEG-Patent/. Retrieved 2009-01-28.

- ^ "USPTO: Broadest Claims Forgent Asserts Against JPEG Standard Invalid". Groklaw.net. May 26, 2006. http://www.groklaw.net/article.php?story=20060526105754880. Retrieved 2007-07-21.

- ^ "Coding System for Reducing Redundancy". Gauss.ffii.org. http://gauss.ffii.org/PatentView/EP266049. Retrieved 2011-05-29.

- ^ "JPEG Patent Claim Surrendered". Public Patent Foundation. November 2, 2006. http://www.pubpat.org/jpegsurrendered.htm. Retrieved 2006-11-03.

- ^ Ex Parte Reexamination Certificate for U.S. Patent No. 5,253,341[dead link]

- ^ Workgroup. "Rozmanith: Using Software Patents to Silence Critics". Eupat.ffii.org. http://eupat.ffii.org/pikta/xrani/rozmanith/index.en.html. Retrieved 2011-05-29.

- ^ "A Bounty of $5,000 to Name Troll Tracker: Ray Niro Wants To Know Who Is saying All Those Nasty Things About Him". Law.com. http://www.law.com/jsp/article.jsp?id=1196762670106. Retrieved 2011-05-29.

- ^ Reimer, Jeremy (2008-02-05). "Hunting trolls: USPTO asked to reexamine broad image patent". Arstechnica.com. http://arstechnica.com/news.ars/post/20080205-hunting-trolls-uspto-asked-to-reexamine-broad-image-patent.html. Retrieved 2011-05-29.

- ^ U.S. Patent Office – Granting Reexamination on 5,253,341 C1

- ^ "Judge Puts JPEG Patent On Ice". Techdirt.com. 2008-04-30. http://www.techdirt.com/articles/20080427/143205960.shtml. Retrieved 2011-05-29.

- ^ "JPEG Patent's Single Claim Rejected (And Smacked Down For Good Measure)". Techdirt.com. 2008-08-01. http://techdirt.com/articles/20080731/0337491852.shtml. Retrieved 2011-05-29.

External links


- JPEG Standard (JPEG ISO/IEC 10918-1 ITU-T Recommendation T.81) at W3.org

- Official Joint Photographic Experts Group site

- JFIF File Format at W3.org

- JPEG File Size Calculator

- JPEG viewer in 250 lines of easy to understand python code

- Wotsit.org's entry on the JPEG format

- Example images over the full range of quantization levels from 1 to 100 at visengi.com

- Public domain JPEG compressor in a single C++ source file, along with a matching decompressor at code.google.com

- Example of .JPG file decoding

- Jpeg Decoder Open Source Code , Copyright (C) 1995–1997, Thomas G. Lane.

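JPG format

SOI: Start of Image.

JPG is short for JPEG. A JPEG picture stores a single raster image in 24-bit color. JPEG is a platform-independent format that supports the highest levels of compression; however, this compression is lossy. Progressive JPEG files support interlacing.

JPG features

The compression level of a JPEG file can be raised or lowered, but a smaller file comes at the expense of image quality. Compression ratios can reach 100:1. (JPEG can easily compress files at ratios of 10:1 to 20:1 without noticeable loss of picture quality.) JPEG compression handles photorealistic work well. However, for simpler artwork with few colors, strong contrast, solid borders, or large areas of solid color, JPEG compression does not give ideal results. Sometimes the compression ratio drops as low as 5:1 and the integrity of the picture is seriously compromised. The reason for this loss is that the JPEG compression scheme compresses similar tones well but does not handle sharp differences in brightness or areas of solid color well.

Advantages and disadvantages of JPG

Advantages:

- High compression is supported for photographic or photorealistic work.
- A variable compression ratio makes it possible to control file size.
- Interlacing is supported (for progressive JPEG files).
- It is widely supported as an Internet standard.
- Because of its small file size, JPG is used on the World Wide Web as a format for storing and transmitting photographs.

Disadvantages:

- Lossy compression degrades the quality of the original picture data.
- When a JPEG file is edited and saved again, the degradation of the original picture data is compounded. This degradation is cumulative.
- JPEG is unsuited to simpler pictures that contain few colors, large areas of similar color, or very pronounced differences in brightness.

Where JPG is used

JPG is a common picture format. A picture in another format can be converted to it as follows:

- In Photoshop, open the picture, choose Save As, and select JPG in the format list. This method is fairly simple and suits high-quality pictures with demanding requirements.
- If requirements are not demanding, the picture can be saved as JPG directly with the Paint program bundled with Windows. This conversion gives lower picture quality.

The same methods also apply when converting JPG to other formats.

JPG compression modes

JPEG (Joint Photographic Experts Group) is the first international digital image compression standard for still images, established by the International Organization for Standardization (ISO) and the International Telegraph and Telephone Consultative Committee (CCITT). It is also the most widely applied image compression standard still in use. Because JPEG can provide lossy compression, its compression ratios can reach levels that other traditional compression algorithms cannot match.

JPEG has the following compression modes:

- Sequential encoding: the image is processed in a single pass, from left to right and top to bottom.
- Progressive encoding: when transmitting the image takes a long time, it can be processed in several passes and sent from blurry to sharp (the effect resembles GIF transmission over a network).
- Lossless encoding.
- Hierarchical encoding: the image is compressed at several resolutions, so that a high-resolution image can also be displayed on lower-resolution devices.

JPG compression steps

Because JPEG's lossless compression mode is no better than other compression methods, the focus here is on its lossy compression. Taking a 24-bit color image as an example, JPEG compression consists of the following steps.

1. Color conversion

Because JPEG operates on data in the YUV color model rather than on RGB image data, the color model must be converted before a color image is compressed. The values can be computed with the following conversion formulas:

Y = 0.299R + 0.587G + 0.114B
U = -0.169R - 0.3313G + 0.5B
V = 0.5R - 0.4187G - 0.0813B

where Y represents luminance, and U and V represent color.

After the conversion, the data must also be subsampled. The sampling ratios generally used are 4:1:1 or 4:2:2. Because only one of every two rows of data is kept after this step, the amount of image data after sampling is reduced to half of the original.

2. DCT

The DCT (Discrete Cosine Transform) transforms the image signal into the frequency domain, separating high-frequency and low-frequency information. The high-frequency part of the image (that is, image detail) is then compressed in order to reduce the image data.

The image is first divided into 8×8 blocks, and the DCT is applied to each block (the transform formula is omitted here). The result is a matrix of frequency coefficients, all of which are floating-point numbers.

3. Quantization

Because the codebooks used in the later encoding stage are all integer-based, the transformed frequency coefficients must be quantized and converted to integers.

After quantization, the values in the matrix are approximations that differ from the original image data; this difference is the main cause of distortion in the compressed image. The choice of quality factor is crucial in this step. If the value is too large, the compression ratio rises sharply but image quality is poor; conversely, the smaller the quality factor (the minimum is 1), the better the reconstructed image but the lower the compression ratio. ISO has defined a set of standard quantization values for JPEG implementers.

4. Encoding

As the preceding steps show, the image is not compressed any further between the color conversion and the encoding stage; the DCT and quantization can be regarded as preparation for encoding.

Encoding uses two mechanisms: run-length coding of zero values, and entropy coding.

JPEG uses a zigzag sequence: the matrix elements are arranged in a zigzag along the direction normal to the matrix diagonal. The advantage is that the larger-valued elements near the top-left corner of the matrix come first in the run, while the elements at the end of the run are mostly zero. Run-length coding is a very simple and common coding method and is not described further here. Entropy coding is a coding method based on statistical properties. JPEG allows either Huffman coding or arithmetic coding.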
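The color conversion formulas quoted above can be written out directly. This is a minimal sketch using exactly those coefficients; the function name is illustrative, and the U and V values are left signed (no offset is added, since the formulas as given do not include one).

```python
def rgb_to_yuv(r, g, b):
    """Convert one RGB pixel with the coefficients quoted in the text."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    u = -0.169 * r - 0.3313 * g + 0.5 * b
    v = 0.5 * r - 0.4187 * g - 0.0813 * b
    return y, u, v


# Neutral colors carry almost no chroma; saturated colors do.
for name, pixel in [("white", (255, 255, 255)), ("red", (255, 0, 0))]:
    y, u, v = rgb_to_yuv(*pixel)
    print(f"{name}: Y={y:.2f} U={u:.2f} V={v:.2f}")
```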

Original 8×8 subimage:

$$
\left[
\begin{array}{rrrrrrrr}
52 & 55 & 61 & 66 & 70 & 61 & 64 & 73 \\
63 & 59 & 55 & 90 & 109 & 85 & 69 & 72 \\
62 & 59 & 68 & 113 & 144 & 104 & 66 & 73 \\
63 & 58 & 71 & 122 & 154 & 106 & 70 & 69 \\
67 & 61 & 68 & 104 & 126 & 88 & 68 & 70 \\
79 & 65 & 60 & 70 & 77 & 68 & 58 & 75 \\
85 & 71 & 64 & 59 & 55 & 61 & 65 & 83 \\
87 & 79 & 69 & 68 & 65 & 76 & 78 & 94
\end{array}
\right].
$$

Subimage after subtracting 128 from each entry:

$$
g =
\begin{array}{c}
x \\
\longrightarrow \\
\left[
\begin{array}{rrrrrrrr}
-76 & -73 & -67 & -62 & -58 & -67 & -64 & -55 \\
-65 & -69 & -73 & -38 & -19 & -43 & -59 & -56 \\
-66 & -69 & -60 & -15 & 16 & -24 & -62 & -55 \\
-65 & -70 & -57 & -6 & 26 & -22 & -58 & -59 \\
-61 & -67 & -60 & -24 & -2 & -40 & -60 & -58 \\
-49 & -63 & -68 & -58 & -51 & -60 & -70 & -53 \\
-43 & -57 & -64 & -69 & -73 & -67 & -63 & -45 \\
-41 & -49 & -59 & -60 & -63 & -52 & -50 & -34
\end{array}
\right]
\end{array}
\Bigg\downarrow y.
$$

Two-dimensional DCT:

$$
G_{u,v} = \sum_{x=0}^{7} \sum_{y=0}^{7} \alpha(u)\,\alpha(v)\,g_{x,y}
\cos\left[\frac{\pi}{8}\left(x+\frac{1}{2}\right)u\right]
\cos\left[\frac{\pi}{8}\left(y+\frac{1}{2}\right)v\right]
$$

DCT coefficients of the subimage (rounded to two decimal places):

$$
G =
\begin{array}{c}
u \\
\longrightarrow \\
\left[
\begin{array}{rrrrrrrr}
-415.38 & -30.19 & -61.20 & 27.24 & 56.13 & -20.10 & -2.39 & 0.46 \\
4.47 & -21.86 & -60.76 & 10.25 & 13.15 & -7.09 & -8.54 & 4.88 \\
-46.83 & 7.37 & 77.13 & -24.56 & -28.91 & 9.93 & 5.42 & -5.65 \\
-48.53 & 12.07 & 34.10 & -14.76 & -10.24 & 6.30 & 1.83 & 1.95 \\
12.12 & -6.55 & -13.20 & -3.95 & -1.88 & 1.75 & -2.79 & 3.14 \\
-7.73 & 2.91 & 2.38 & -5.94 & -2.38 & 0.94 & 4.30 & 1.85 \\
-1.03 & 0.18 & 0.42 & -2.42 & -0.88 & -3.02 & 4.12 & -0.66 \\
-0.17 & 0.14 & -1.07 & -4.19 & -1.17 & -0.10 & 0.50 & 1.68
\end{array}
\right]
\end{array}
\Bigg\downarrow v.
$$

Quantized DCT coefficients:

$$
B =
\left[
\begin{array}{rrrrrrrr}
-26 & -3 & -6 & 2 & 2 & -1 & 0 & 0 \\
0 & -2 & -4 & 1 & 1 & 0 & 0 & 0 \\
-3 & 1 & 5 & -1 & -1 & 0 & 0 & 0 \\
-3 & 1 & 2 & -1 & 0 & 0 & 0 & 0 \\
1 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\
0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\
0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\
0 & 0 & 0 & 0 & 0 & 0 & 0 & 0
\end{array}
\right].
$$
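Before entropy coding, a quantized block such as B is read out in zigzag order so that the trailing zeros end up together. The sketch below generates the zigzag order for an 8×8 block and applies it to B; the function names are illustrative, and the scan is indexed as `block[row][column]`.

```python
def zigzag_indices(n=8):
    """Return (row, column) pairs of an n x n block in zigzag order."""
    order = []
    for s in range(2 * n - 1):
        # All positions on the anti-diagonal row + column == s.
        diagonal = [(i, s - i) for i in range(n) if 0 <= s - i < n]
        # Even diagonals are walked upwards (row decreasing), odd ones downwards.
        order.extend(diagonal[::-1] if s % 2 == 0 else diagonal)
    return order


def zigzag_scan(block):
    """Flatten a square block into a list following the zigzag order."""
    return [block[r][c] for r, c in zigzag_indices(len(block))]


B = [
    [-26, -3, -6, 2, 2, -1, 0, 0],
    [0, -2, -4, 1, 1, 0, 0, 0],
    [-3, 1, 5, -1, -1, 0, 0, 0],
    [-3, 1, 2, -1, 0, 0, 0, 0],
    [1, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0, 0],
]

scanned = zigzag_scan(B)
print(scanned[:26])
print(set(scanned[26:]))
```

For this block everything after the 26th value is zero, which is what makes the run-length step effective.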

Quantized coefficient matrix at the start of decoding (after the DC difference has been added back in):

$$
\left[
\begin{array}{rrrrrrrr}
-26 & -3 & -6 & 2 & 2 & -1 & 0 & 0 \\
0 & -2 & -4 & 1 & 1 & 0 & 0 & 0 \\
-3 & 1 & 5 & -1 & -1 & 0 & 0 & 0 \\
-3 & 1 & 2 & -1 & 0 & 0 & 0 & 0 \\
1 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\
0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\
0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\
0 & 0 & 0 & 0 & 0 & 0 & 0 & 0
\end{array}
\right]
$$

Dequantized coefficients (entry-for-entry product with the quantization matrix):

$$
\left[
\begin{array}{rrrrrrrr}
-416 & -33 & -60 & 32 & 48 & -40 & 0 & 0 \\
0 & -24 & -56 & 19 & 26 & 0 & 0 & 0 \\
-42 & 13 & 80 & -24 & -40 & 0 & 0 & 0 \\
-42 & 17 & 44 & -29 & 0 & 0 & 0 & 0 \\
18 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\
0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\
0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\
0 & 0 & 0 & 0 & 0 & 0 & 0 & 0
\end{array}
\right]
$$

Inverse DCT output rounded to integers (still shifted down by 128):

$$
\left[
\begin{array}{rrrrrrrr}
-66 & -63 & -71 & -68 & -56 & -65 & -68 & -46 \\
-71 & -73 & -72 & -46 & -20 & -41 & -66 & -57 \\
-70 & -78 & -68 & -17 & 20 & -14 & -61 & -63 \\
-63 & -73 & -62 & -8 & 27 & -14 & -60 & -58 \\
-58 & -65 & -61 & -27 & -6 & -40 & -68 & -50 \\
-57 & -57 & -64 & -58 & -48 & -66 & -72 & -47 \\
-53 & -46 & -61 & -74 & -65 & -63 & -62 & -45 \\
-47 & -34 & -53 & -74 & -60 & -47 & -47 & -41
\end{array}
\right]
$$

Decompressed subimage (after adding 128 to each entry):

$$
\left[
\begin{array}{rrrrrrrr}
62 & 65 & 57 & 60 & 72 & 63 & 60 & 82 \\
57 & 55 & 56 & 82 & 108 & 87 & 62 & 71 \\
58 & 50 & 60 & 111 & 148 & 114 & 67 & 65 \\
65 & 55 & 66 & 120 & 155 & 114 & 68 & 70 \\
70 & 63 & 67 & 101 & 122 & 88 & 60 & 78 \\
71 & 71 & 64 & 70 & 80 & 62 & 56 & 81 \\
75 & 82 & 67 & 54 & 63 & 65 & 66 & 83 \\
81 & 94 & 75 & 54 & 68 & 81 & 81 & 87
\end{array}
\right].
$$

Error values (original − decompressed):

$$
\left[
\begin{array}{rrrrrrrr}
-10 & -10 & 4 & 6 & -2 & -2 & 4 & -9 \\
6 & 4 & -1 & 8 & 1 & -2 & 7 & 1 \\
4 & 9 & 8 & 2 & -4 & -10 & -1 & 8 \\
-2 & 3 & 5 & 2 & -1 & -8 & 2 & -1 \\
-3 & -2 & 1 & 3 & 4 & 0 & 8 & -8 \\
8 & -6 & -4 & 0 & -3 & 6 & 2 & -6 \\
10 & -11 & -3 & 5 & -8 & -4 & -1 & 0 \\
6 & -15 & -6 & 14 & -3 & -5 & -3 & 7
\end{array}
\right]
$$
