
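Preface

The previous post covered installing primary and secondary KDCs. This one records how to integrate Kerberos with a Hadoop cluster. The following layout is assumed:

Hadoop configuration directory: /export/common/hadoop/conf/

Hadoop installation directory: /export/hadoop/

I. Configure the certificates for SASL authentication

Once Kerberos authentication is enabled, the web endpoints are served over HTTPS, so certificates are required. On any one server, for example v0106-c0a8000e.4e462.c.local, run: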

$ openssl req -newkey rsa:2048 -keyout rsa_private.key -x509 -days 365 -out cert.crt -subj /C=CN/ST=BJ/L=BJ/O=test/OU=dev/CN=jd.com/emailAddress=test@126.com

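Copy the generated cert.crt and rsa_private.key into the Hadoop configuration directory on every server (every node needs both files):

$ scp cert.crt rsa_private.key 192.168.0.25:/export/common/hadoop/conf/

Then run the following on every server, entering the password 123456 whenever prompted. Each node needs a Java environment, because keytool ships with Java.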

$ cd /export/common/hadoop/conf/

$ keytool -keystore keystore -alias localhost -validity 9999 -genkey -keyalg RSA -keysize 2048 -dname "CN=v0106-c0a8000e.4e462.c.local, OU=dev, O=test, L=BJ, ST=BJ, C=CN"

$ keytool -keystore truststore -alias CARoot -import -file cert.crt;

$ keytool -certreq -alias localhost -keystore keystore -file cert;

$ openssl x509 -req -CA cert.crt -CAkey rsa_private.key -in cert -out cert_signed -days 9999 -CAcreateserial;

$ keytool -keystore keystore -alias CARoot -import -file cert.crt ;

$ keytool -keystore keystore -alias localhost -import -file cert_signed ;
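The sequence above has to be repeated on every node. A minimal Python sketch follows, assuming each node should use its own hostname as the certificate CN (the original commands use one fixed CN). It only builds the same command lines as argument lists, so they can be reviewed before being passed to subprocess.run. The function name keystore_commands is hypothetical, and the -storepass, -keypass and -noprompt options are additions for non-interactive use that the original commands do not include.

```python
import shlex
import socket


def keystore_commands(hostname, password="123456"):
    """Return the keytool/openssl steps from this section as argv lists."""
    dname = f"CN={hostname}, OU=dev, O=test, L=BJ, ST=BJ, C=CN"
    store = ["-storepass", password]  # assumption: avoid the interactive prompt
    return [
        # 1. Generate the node's key pair in the keystore.
        ["keytool", "-keystore", "keystore", "-alias", "localhost",
         "-validity", "9999", "-genkey", "-keyalg", "RSA", "-keysize", "2048",
         "-dname", dname, "-keypass", password] + store,
        # 2. Trust the CA certificate in the truststore.
        ["keytool", "-keystore", "truststore", "-alias", "CARoot",
         "-import", "-file", "cert.crt", "-noprompt"] + store,
        # 3. Create a certificate signing request.
        ["keytool", "-certreq", "-alias", "localhost",
         "-keystore", "keystore", "-file", "cert"] + store,
        # 4. Sign the request with the CA key (may prompt for its passphrase).
        ["openssl", "x509", "-req", "-CA", "cert.crt", "-CAkey",
         "rsa_private.key", "-in", "cert", "-out", "cert_signed",
         "-days", "9999", "-CAcreateserial"],
        # 5. Import the CA certificate, then the signed certificate.
        ["keytool", "-keystore", "keystore", "-alias", "CARoot",
         "-import", "-file", "cert.crt", "-noprompt"] + store,
        ["keytool", "-keystore", "keystore", "-alias", "localhost",
         "-import", "-file", "cert_signed"] + store,
    ]


if __name__ == "__main__":
    # Print the commands for this host instead of executing them.
    for argv in keystore_commands(socket.getfqdn()):
        print(shlex.join(argv))
```

Printing rather than executing keeps the sketch safe to run anywhere; on a real node the lists could be executed in order from /export/common/hadoop/conf/.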

II. Modify the cluster configuration files

1. HDFS: add the following configuration

core-site.xml

<property>

<name>hadoop.security.authorization</name>

<value>true</value>

</property>

<property>

<name>hadoop.security.authentication</name>

<value>kerberos</value>

</property>

hdfs-site.xml

<!-- kerberos start -->

<!-- namenode -->

<property>

<name>dfs.namenode.keytab.file</name>

<value>/export/common/kerberos5/hdfs.keytab</value>

</property>

<property>

<name>dfs.namenode.kerberos.principal</name>

<value>hdfs/_HOST@HADOOP.COM</value>

</property>

<property>

<name>dfs.namenode.kerberos.internal.spnego.principal</name>

<value>HTTP/_HOST@HADOOP.COM</value>

</property>

<property>

<name>dfs.web.authentication.kerberos.principal</name>

<value>HTTP/_HOST@HADOOP.COM</value>

</property>

<property>

<name>dfs.web.authentication.kerberos.keytab</name>

<value>/export/common/kerberos5/hdfs.keytab</value>

</property>

<!-- datanode -->

<property>

<name>dfs.datanode.keytab.file</name>

<value>/export/common/kerberos5/hdfs.keytab</value>

</property>

<property>

<name>dfs.datanode.kerberos.principal</name>

<value>hdfs/_HOST@HADOOP.COM</value>

</property>

<property>

<name>dfs.http.policy</name>

<value>HTTPS_ONLY</value>

</property>

<!-- <property>

<name>dfs.https.port</name>

<value>50470</value>

</property> -->

<property>

<name>dfs.data.transfer.protection</name>

<value>integrity</value>

</property>

<property>

<name>dfs.block.access.token.enable</name>

<value>true</value>

</property>

<property>

<name>dfs.datanode.data.dir.perm</name>

<value>700</value>

</property>

<!--

<property>

<name>dfs.datanode.https.address</name>

<value>0.0.0.0:50475</value>

</property> -->

<!-- journalnode -->

<property>

<name>dfs.journalnode.keytab.file</name>

<value>/export/common/kerberos5/hdfs.keytab</value>

</property>

<property>

<name>dfs.journalnode.kerberos.principal</name>

<value>hdfs/_HOST@HADOOP.COM</value>

</property>

<property>

<name>dfs.journalnode.kerberos.internal.spnego.principal</name>

<value>HTTP/_HOST@HADOOP.COM</value>

</property>

<!-- kerberos end-->

hadoop-env.sh

export HADOOP_OPTS="$HADOOP_OPTS -Djava.library.path=${JAVA_HOME}/lib -Djava.security.krb5.conf=/etc/krb5.conf -Djava.security.krb5.realm=HADOOP.COM -Djava.security.krb5.kdc=192.168.0.49:88"

ssl-server.xml (place it in the Hadoop configuration directory /export/common/hadoop/conf, owned by hdfs:hadoop)

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed to the Apache Software Foundation (ASF) under one or more

contributor license agreements. See the NOTICE file distributed with

this work for additional information regarding copyright ownership.

The ASF licenses this file to You under the Apache License, Version 2.0

(the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

-->

<configuration>

<property>

<name>ssl.server.truststore.location</name>

<value>/export/common/hadoop/conf/truststore</value>

<description>Truststore to be used by NN and DN. Must be specified.

</description>

</property>

<property>

<name>ssl.server.truststore.password</name>

<value>123456</value>

<description>Optional. Default value is "".

</description>

</property>

<property>

<name>ssl.server.truststore.type</name>

<value>jks</value>

<description>Optional. The keystore file format, default value is "jks".

</description>

</property>

<property>

<name>ssl.server.truststore.reload.interval</name>

<value>10000</value>

<description>Truststore reload check interval, in milliseconds.

Default value is 10000 (10 seconds).

</description>

</property>

<property>

<name>ssl.server.keystore.location</name>

<value>/export/common/hadoop/conf/keystore</value>

<description>Keystore to be used by NN and DN. Must be specified.

</description>

</property>

<property>

<name>ssl.server.keystore.password</name>

<value>123456</value>

<description>Must be specified.

</description>

</property>

<property>

<name>ssl.server.keystore.keypassword</name>

<value>123456</value>

<description>Must be specified.

</description>

</property>

<property>

<name>ssl.server.keystore.type</name>

<value>jks</value>

<description>Optional. The keystore file format, default value is "jks".

</description>

</property>

<property>

<name>ssl.server.exclude.cipher.list</name>

<value>TLS_ECDHE_RSA_WITH_RC4_128_SHA,SSL_DHE_RSA_EXPORT_WITH_DES40_CBC_SHA,

SSL_RSA_WITH_DES_CBC_SHA,SSL_DHE_RSA_WITH_DES_CBC_SHA,

SSL_RSA_EXPORT_WITH_RC4_40_MD5,SSL_RSA_EXPORT_WITH_DES40_CBC_SHA,

SSL_RSA_WITH_RC4_128_MD5</value>

<description>Optional. The weak security cipher suites that you want excluded

from SSL communication.</description>

</property>

</configuration>
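A typo in a property name or an empty value in this file can easily go unnoticed. As a hedged sketch, the Python below parses a Hadoop-style configuration document and reports which of the properties marked "Must be specified" above are missing or empty. The helper names read_properties and missing_properties are hypothetical, and the REQUIRED list is only my reading of the descriptions in this file.

```python
import xml.etree.ElementTree as ET

# Properties described as mandatory in the ssl-server.xml shown above.
REQUIRED = [
    "ssl.server.truststore.location",
    "ssl.server.keystore.location",
    "ssl.server.keystore.password",
    "ssl.server.keystore.keypassword",
]


def read_properties(xml_text):
    """Map each <property> name to its value in a <configuration> document."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.findall("property"):
        name = prop.findtext("name", default="").strip()
        value = prop.findtext("value", default="").strip()
        if name:
            props[name] = value
    return props


def missing_properties(xml_text, required=REQUIRED):
    """Return the required property names that are absent or empty."""
    props = read_properties(xml_text)
    return [key for key in required if not props.get(key)]
```

For a file on disk, the text can be read with open(path, "rb").read() and passed to missing_properties; an empty list means all four properties are set.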

ssl-client.xml (place it in the Hadoop configuration directory /export/common/hadoop/conf, owned by hdfs:hadoop)

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed to the Apache Software Foundation (ASF) under one or more

contributor license agreements. See the NOTICE file distributed with

this work for additional information regarding copyright ownership.

The ASF licenses this file to You under the Apache License, Version 2.0

(the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

-->

<configuration>

<property>

<name>ssl.client.truststore.location</name>

<value>/export/common/hadoop/conf/truststore</value>

<description>Truststore to be used by clients like distcp. Must be

specified.

</description>

</property>

<property>

<name>ssl.client.truststore.password</name>

<value>123456</value>

<description>Optional. Default value is "".

</description>

</property>

<property>

<name>ssl.client.truststore.type</name>

<value>jks</value>

<description>Optional. The keystore file format, default value is "jks".

</description>

</property>

<property>

<name>ssl.client.truststore.reload.interval</name>

<value>10000</value>

<description>Truststore reload check interval, in milliseconds.

Default value is 10000 (10 seconds).

</description>

</property>

<property>

<name>ssl.client.keystore.location</name>

<value>/export/common/hadoop/conf/keystore</value>

<description>Keystore to be used by clients like distcp. Must be

specified.

</description>

</property>

<property>

<name>ssl.client.keystore.password</name>

<value>123456</value>

<description>Optional. Default value is "".

</description>

</property>

<property>

<name>ssl.client.keystore.keypassword</name>

<value>123456</value>

<description>Optional. Default value is "".

</description>

</property>

<property>

<name>ssl.client.keystore.type</name>

<value>jks</value>

<description>Optional. The keystore file format, default value is "jks".

</description>

</property>

</configuration>

2. YARN: add the following configuration

yarn-site.xml

<!-- resourcemanager -->

<property>

<name>yarn.web-proxy.principal</name>

<value>HTTP/_HOST@HADOOP.COM</value>

</property>

<property>

<name>yarn.web-proxy.keytab</name>

<value>/export/common/kerberos5/hdfs.keytab</value>

</property>

<property>

<name>yarn.resourcemanager.principal</name>

<value>hdfs/_HOST@HADOOP.COM</value>

</property>

<property>

<name>yarn.resourcemanager.keytab</name>

<value>/export/common/kerberos5/hdfs.keytab</value>

</property>

<!-- nodemanager -->

<property>

<name>yarn.nodemanager.principal</name>

<value>hdfs/_HOST@HADOOP.COM</value>

</property>

<property>

<name>yarn.nodemanager.keytab</name>

<value>/export/common/kerberos5/hdfs.keytab</value>

</property>

<property>

<name>yarn.nodemanager.container-executor.class</name>

<value>org.apache.hadoop.yarn.server.nodemanager.LinuxContainerExecutor</value>

</property>

<property>

<name>yarn.nodemanager.linux-container-executor.group</name>

<value>hdfs</value>

</property>

<!-- timeline kerberos -->

<property>

<name>yarn.timeline-service.http-authentication.type</name>

<value>kerberos</value>

<description>Defines authentication used for the timeline server HTTP endpoint. Supported values are: simple | kerberos | #AUTHENTICATION_HANDLER_CLASSNAME#</description>

</property>

<property>

<name>yarn.timeline-service.principal</name>

<value>hdfs/_HOST@HADOOP.COM</value>

</property>

<property>

<name>yarn.timeline-service.keytab</name>

<value>/export/common/kerberos5/hdfs.keytab</value>

</property>

<property>

<name>yarn.timeline-service.http-authentication.kerberos.principal</name>

<value>HTTP/_HOST@HADOOP.COM</value>

</property>

<property>

<name>yarn.timeline-service.http-authentication.kerberos.keytab</name>

<value>/export/common/kerberos5/hdfs.keytab</value>

</property>

<property>

<name>yarn.nodemanager.container-localizer.java.opts</name>

<value>-Djava.security.krb5.conf=/etc/krb5.conf -Djava.security.krb5.realm=HADOOP.COM -Djava.security.krb5.kdc=192.168.0.49:88</value>

</property>

<property>

<name>yarn.nodemanager.health-checker.script.opts</name>

<value>-Djava.security.krb5.conf=/etc/krb5.conf -Djava.security.krb5.realm=HADOOP.COM -Djava.security.krb5.kdc=192.168.0.49:88</value>

</property>

mapred-site.xml

<property>

<name>mapreduce.map.java.opts</name>

<value>-Xmx1638M -Djava.security.krb5.conf=/etc/krb5.conf -Djava.security.krb5.realm=HADOOP.COM -Djava.security.krb5.kdc=192.168.0.49:88</value>

</property>

<property>

<name>mapreduce.reduce.java.opts</name>

<value>-Xmx3276M -Djava.security.krb5.conf=/etc/krb5.conf -Djava.security.krb5.realm=HADOOP.COM -Djava.security.krb5.kdc=192.168.0.49:88</value>

</property>

<property>

<name>mapreduce.jobhistory.keytab</name>

<value>/export/common/kerberos5/hdfs.keytab</value>

</property>

<property>

<name>mapreduce.jobhistory.principal</name>

<value>hdfs/_HOST@HADOOP.COM</value>

</property>

<property>

<name>mapreduce.jobhistory.webapp.spnego-keytab-file</name>

<value>/export/common/kerberos5/hdfs.keytab</value>

</property>

<property>

<name>mapreduce.jobhistory.webapp.spnego-principal</name>

<value>HTTP/_HOST@HADOOP.COM</value>

</property>

<property>

<name>mapred.child.java.opts</name>

<value>-Xmx1024m -Djava.security.krb5.conf=/etc/krb5.conf -Djava.security.krb5.realm=HADOOP.COM -Djava.security.krb5.kdc=192.168.0.49:88</value>

</property>

<property>

<name>yarn.app.mapreduce.am.command-opts</name>

<value>-Xmx3276m -Djava.security.krb5.conf=/etc/krb5.conf -Djava.security.krb5.realm=HADOOP.COM -Djava.security.krb5.kdc=192.168.0.49:88</value>

</property>

3. Hive: add the following configuration

hive-site.xml

<!--hiveserver2-->

<property>

<name>hive.server2.authentication</name>

<value>KERBEROS</value>

</property>

<property>

<name>hive.server2.authentication.kerberos.principal</name>

<value>hdfs/_HOST@HADOOP.COM</value>

</property>

<property>

<name>hive.server2.authentication.kerberos.keytab</name>

<value>/export/common/kerberos5/hdfs.keytab</value>

</property>

<!-- metastore -->

<property>

<name>hive.metastore.sasl.enabled</name>

<value>true</value>

</property>

<property>

<name>hive.metastore.kerberos.keytab.file</name>

<value>/export/common/kerberos5/hdfs.keytab</value>

</property>

<property>

<name>hive.metastore.kerberos.principal</name>

<value>hdfs/_HOST@HADOOP.COM</value>

</property>

4. HBase: add the following configuration

hbase-site.xml

<!-- HBase Kerberos authentication: start -->

<property>

<name>hbase.security.authentication</name>

<value>kerberos</value>

</property>

<!-- secure HBase RPC -->

<property>

<name>hbase.rpc.engine</name>

<value>org.apache.hadoop.hbase.ipc.SecureRpcEngine</value>

</property>

<!-- HMaster Kerberos principal -->

<property>

<name>hbase.master.kerberos.principal</name>

<value>hdfs/_HOST@HADOOP.COM</value>

</property>

<!-- HMaster keytab file location -->

<property>

<name>hbase.master.keytab.file</name>

<value>/export/common/kerberos5/hdfs.keytab</value>

</property>

<!-- RegionServer Kerberos principal -->

<property>

<name>hbase.regionserver.kerberos.principal</name>

<value>hdfs/_HOST@HADOOP.COM</value>

</property>

<!-- RegionServer keytab file location -->

<property>

<name>hbase.regionserver.keytab.file</name>

<value>/export/common/kerberos5/hdfs.keytab</value>

</property>

<!--

<property>

<name>hbase.thrift.keytab.file</name>

<value>/soft/conf/hadoop/hdfs.keytab</value>

</property>

<property>

<name>hbase.thrift.kerberos.principal</name>

<value>hdfs/_HOST@HADOOP.COM</value>

</property>

<property>

<name>hbase.rest.keytab.file</name>

<value>/soft/conf/hadoop/hdfs.keytab</value>

</property>

<property>

<name>hbase.rest.kerberos.principal</name>

<value>hdfs/_HOST@HADOOP.COM</value>

</property>

<property>

<name>hbase.rest.authentication.type</name>

<value>kerberos</value>

</property>

<property>

<name>hbase.rest.authentication.kerberos.principal</name>

<value>HTTP/_HOST@HADOOP.COM</value>

</property>

<property>

<name>hbase.rest.authentication.kerberos.keytab</name>

<value>/soft/conf/hadoop/hdfs.keytab</value>

</property>

-->

<!-- HBase Kerberos authentication: end -->

III. Common Kerberos commands

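Destroy the current credentials (log out): kdestroy

Open the kadmin console on the primary KDC: kadmin.local

Show the Kerberos account currently in use: klist

Obtain credentials from a keytab: kinit -kt /export/common/kerberos5/kadm5.keytab admin/admin@HADOOP.COM

List the contents of a keytab: klist -k -e /export/common/kerberos5/hdfs.keytab

Generate a keytab file: kadmin.local -q "xst -k /export/common/kerberos5/hdfs.keytab admin/admin@HADOOP.COM"

Renew the current ticket to extend its lifetime: kinit -R

Delete the KDC database: rm -rf /export/common/kerberos5/principal (this is the database path chosen when the database was created)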

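IV. Quick test

Testing HDFS: switch to the hdfs user and run hdfs dfs -ls /. The command now fails because authentication is required. Then run "kinit -kt /export/common/kerberos5/hdfs.keytab hdfs/`hostname | awk '{print tolower($0)}'`" and repeat the listing. If it returns results, the HDFS integration works.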
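The kinit call above derives the principal from the lowercased hostname. The same construction can be sketched in Python, which is handy when the login has to be scripted for several services. The function names are hypothetical, and appending the realm explicitly is an assumption (the command above relies on the default realm instead).

```python
import socket


def service_principal(service, hostname, realm="HADOOP.COM"):
    """Build service/host@REALM with the host part lowercased."""
    return f"{service}/{hostname.lower()}@{realm}"


def kinit_command(keytab, principal):
    """Return the kinit invocation as an argv list for subprocess.run."""
    return ["kinit", "-kt", keytab, principal]


if __name__ == "__main__":
    principal = service_principal("hdfs", socket.gethostname())
    print(" ".join(kinit_command("/export/common/kerberos5/hdfs.keytab", principal)))
```

Lowercasing matters because the principal stored in the KDC must match the name the client requests exactly.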

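Some notable changes are worth keeping in mind. 1. Tasks can now be launched under the operating-system account of the user who submitted the job, rather than under the account that runs the NodeManager. The operating system can therefore isolate running tasks from one another so that they cannot send signals to each other, and local information such as task data is protected by the permissions of the local file system. (This requires setting yarn.nodemanager.container-executor.class to org.apache.hadoop.yarn.server.nodemanager.LinuxContainerExecutor.)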

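V. Troubleshooting

1. Caused by: java.io.IOException: Failed on local exception: java.io.IOException: Server asks us to fall back to SIMPLE auth, but this client is configured to only allow secure connections.; Host Details : local host is: "v0106-c0a8003a.4eb06.c.local/192.168.0.58"; destination host is: "v0106-c0a80019.4eb06.c.local":8020;

Analysis: after configuring one Hadoop node, I switched to the hdfs user and ran the hive command, which failed with the error above. My first guess was that the keys on the target machine and the requesting machine did not match, and that every Hadoop node had to become a KDC client, but running hive on a client produced the same error. Further reading showed the real cause: the client code logs in through Kerberos while HDFS itself does not have Kerberos enabled, so the thing to check is whether the HDFS configuration has actually taken effect.

Fix: restarting the cluster made the configuration take effect.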

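2. bash: keytools: command not found

Fix: the shell cannot find the command. The tool is named keytool (not keytools); if keytool itself is not found, set JAVA_HOME and make sure $JAVA_HOME/bin is on PATH.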

3. java.io.IOException: javax.security.sasl.SaslException: GSS initiate failed [Caused by GSSException: No valid credentials provided (Mechanism level: Failed to find any Kerberos tgt)]; Host Details : local host is: "v0106-c0a8003a.4eb06.c.local/192.168.0.58"; destination host is: "v0106-c0a80019.4eb06.c.local":8020;

Analysis: after the cluster restart, running "hdfs dfs -ls /" as the hdfs user failed with the error above. Assuming that no ticket had been obtained, I ran kinit -kt /export/common/kerberos5/hdfs.keytab hdfs/v0106-c0a8003a.4eb06.c.local@HADOOP.COM, but the same error came back, so I turned to debugging.

Debugging: first enable debug output: export HADOOP_OPTS="-Djava.net.preferIPv4Stack=true -Dlog.enable-console=true -Dsun.security.krb5.debug=true ${HADOOP_OPTS}"

export HADOOP_ROOT_LOGGER=DEBUG,console

Then run a Hadoop command such as hadoop dfs -ls /; the debug output appears on the console. In this case it did not reveal anything useful.

Fix 1: kdc.conf had been left with master_key_type and supported_enctypes at their default, aes256-cts. According to several reports this is a JDK issue: because of export restrictions, Java 8 needs additional policy jars before it can use aes256-cts. I downloaded "JCE Unlimited Strength Jurisdiction Policy Files for JDK/JRE 8" (I am on JDK 8) and copied local_policy.jar and US_export_policy.jar over the ones in $JAVA_HOME/jre/lib/security. (JDK 8u161 and later enable the unlimited policy by default, so this step applies to older builds.)

Fix 2: replacing JDK jars is cumbersome. The alternative is to avoid AES-256 altogether: when installing the KDC, edit the default kdc.conf and remove aes256-cts from master_key_type and supported_enctypes.
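Before choosing between the two fixes, it helps to know whether the keytab actually contains AES-256 keys. The sketch below scans the text printed by klist -k -e for such entries. The function name is hypothetical, and the matching is an assumption: the exact wording of the encryption type differs between krb5 versions, so the check simply looks for "aes256" or "aes-256" in each line.

```python
def aes256_entries(klist_output):
    """Return the lines of `klist -k -e` output that mention an AES-256 key."""
    hits = []
    for line in klist_output.splitlines():
        lowered = line.lower()
        # Assumption: the enctype is printed somewhere on the entry's line.
        if "aes256" in lowered or "aes-256" in lowered:
            hits.append(line.strip())
    return hits
```

The output of klist -k -e /export/common/kerberos5/hdfs.keytab can be captured with subprocess.run(..., capture_output=True, text=True) and passed in as a string. A non-empty result on a JDK without the unlimited policy points to the situation described in fix 1.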

4. retry.RetryInvocationHandler: Exception while invoking getFileInfo of class ClientNamenodeProtocolTranslatorPB over /192.168.0.92:8020 after 4 fail over attempts.

Description: after fixing problem 3, "hdfs dfs -ls /" failed with the error above.

Analysis: hdfs haadmin -getServiceState, run for nn1 and for nn2, showed that both NameNodes were in standby state, even though zkfc was running. (The names nn1 and nn2 come from hdfs-site.xml.)

Fix: 1) If zkfc is not running, start it: go to /export/hadoop/sbin and run ./hadoop-daemon.sh start zkfc

2) Otherwise switch the state manually: hdfs haadmin -transitionToActive nn1 --forcemanual (to switch a node to standby: hdfs haadmin -transitionToStandby nn2)

5. Caused by: GSSException: No valid credentials provided (Mechanism level: Ticket expired (32) - PROCESS_TGS). Caused by: KrbException: Identifier doesn't match expected value (906). Failed on local exception: java.io.IOException: Couldn't setup connection for hdfs/v0106-c0a8005c.4eb06.c.local@HADOOP.COM to /192.168.0.25:8485; Host Details : local host is: "v0106-c0a8005c.4eb06.c.local/192.168.0.92"; destination host is: "v0106-c0a80019.4eb06.c.local":8485;

Analysis: 1) Check default_tgs_enctypes, default_tkt_enctypes and permitted_enctypes in /etc/krb5.conf. I removed arcfour-hmac-md5 from /etc/krb5.conf because kdc.conf has no matching encryption type.

2) Check HDFS safe mode with hdfs dfsadmin -safemode get. The NameNode was in safe mode; hdfs dfsadmin -safemode leave took it out, but after a NameNode restart it entered safe mode again.
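The mismatch described in step 1 can be found mechanically. The following is a rough sketch, assuming the enctype settings have already been copied out of /etc/krb5.conf and kdc.conf as plain strings. The function names are hypothetical, and the comparison is purely textual: it does not know that some encryption types have several alias names.

```python
def parse_enctypes(value):
    """Split an enctypes setting into bare names, dropping ':salt' suffixes."""
    return {item.split(":", 1)[0] for item in value.replace(",", " ").split()}


def unsupported_enctypes(client_value, kdc_supported_value):
    """Return client enctypes that the KDC's supported_enctypes lacks.

    Caveat: names are compared as plain strings, so aliases of the same
    encryption type are reported as a mismatch.
    """
    client = parse_enctypes(client_value)
    supported = parse_enctypes(kdc_supported_value)
    return sorted(client - supported)
```

For example, with the hypothetical values "aes128-cts arcfour-hmac-md5" on the client and "aes128-cts:normal des3-hmac-sha1:normal" on the KDC, the function reports arcfour-hmac-md5 as the entry to remove or to add on the KDC side.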

Fix: I then tried upgrading the krb5 packages:

1) Remove the old version: yum remove -y libkadm5-1.15.1-18.el7.x86_64; rpm -e --nodeps krb5-libs-1.15.1-18.el7.x86_64

(Note: when yum cannot remove a package, use rpm and ignore dependencies. rpm -qa | grep krb5 or yum list installed | grep krb5 shows which packages are installed.)

2) Install the new version:

rpm -ivh krb5-libs-1.15.1-37.el7_6.x86_64.rpm

rpm -ivh libkadm5-1.15.1-37.el7_6.x86_64.rpm

rpm -ivh krb5-server-1.15.1-37.el7_6.x86_64.rpm (not needed on clients)

rpm -ivh krb5-workstation-1.15.1-37.el7_6.x86_64.rpm

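6. java.net.ConnectException: Call From xxx to localhost:8020 failed on connection exception: java.net.ConnectException: Connection refused

Description: after fixing problem 5, starting the NameNode failed with the error above.

Fix: reformat the NameNode with hadoop namenode -format (note that formatting erases the existing namespace metadata), then restart both NameNodes. Both were still in standby state, and the log showed the error in problem 7.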

7. WARN org.apache.hadoop.hdfs.server.namenode.ha.EditLogTailer: Unable to trigger a roll of the active NN

org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.ipc.StandbyException): Operation category JOURNAL is not supported in state standby.

Description: this appeared after fixing problem 6. Forcing nn1 to active with hdfs haadmin -transitionToActive --forcemanual nn1 killed the NameNode process, and the log showed: Incompatible namespaceID for journal Storage Directory /export/data/hadoop/journal/yinheforyanghang: NameNode has nsId 420017674 but storage has nsId 1693249738

Fix: edit /export/data/hadoop/namenode/current/VERSION with vim and change 420017674 to 1693249738. After a restart both NameNodes were still in standby state.

8. Incompatible clusterID for journal Storage Directory /export/data/hadoop/journal/yinheforyanghang: NameNode has clusterId 'CID-7c4fa72e-afa0-4334-bafd-68af133d4ffe' but storage has clusterId 'CID-52b9fb86-24b4-43a3-96d3-faa584e96d69'

Description: after fixing problem 7 and restarting the NameNodes, both were still standby. Forcing nn1 to active again with hdfs haadmin -transitionToActive --forcemanual nn1 killed the NameNode process with the error above.

Fix: edit /export/data/hadoop/namenode/current/VERSION with vim and change clusterID to the value reported for the storage.
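Problems 7 and 8 are the same kind of mismatch, found one field at a time. As a sketch, both IDs can be compared in one pass by parsing the two VERSION files, which are plain key=value text. The function names are hypothetical; the field names namespaceID and clusterID are the keys as they appear in Hadoop's VERSION files.

```python
def read_version(text):
    """Parse the key=value lines of a Hadoop VERSION file into a dict."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines and the leading '#timestamp' comment.
        if line and not line.startswith("#") and "=" in line:
            key, value = line.split("=", 1)
            props[key.strip()] = value.strip()
    return props


def id_mismatches(namenode_text, journal_text, keys=("namespaceID", "clusterID")):
    """Return {key: (namenode_value, journal_value)} for every differing ID."""
    nn = read_version(namenode_text)
    jn = read_version(journal_text)
    return {k: (nn.get(k), jn.get(k)) for k in keys if nn.get(k) != jn.get(k)}
```

Reading both files and printing id_mismatches before editing anything shows every field that differs, so the VERSION file only has to be edited once. Keeping a backup copy of VERSION before changing it is a sensible precaution.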

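9. There appears to be a gap in the edit log. We expected txid 1, but got txid 2941500.

Description: after fixing problem 8, nn1 became active, but the NameNode process on nn2 exited and logged the error above. The error means that the NameNode metadata on that node is damaged and has to be recovered before the NameNode can start.

Fix: cd $HADOOP_HOME/bin && hadoop namenode -recover, answering c (continue) at every prompt to recover the metadata. Once recovery has finished, restart the NameNode.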

10、Caused by: ExitCodeException exitCode=24: File /home/hadoop/core/hadoop-2.7.6/etc/hadoop/container-executor.cfg must be owned by root, but is owned by 1000

描述:启动nodemanager节点时,报的错

解决:1)hadoop版本2.7.6时,这个问题的原因是 LinuxContainerExecutor 通过container-executor来启动容器,但是出于安全的考虑,要求其所依赖的配置文件container-executor.cfg及其各级父路径owner必须是root用户。命令格式为:chown root 每个层级目录名(云环境目录层次为:/export/server/hadoop-2.7.6/etc/hadoop/container-executor.cfg)

2)设置了之后又出现别的问题,如下:

Caused by: ExitCodeException exitCode=22: Invalid permissions on container-executor binary.

这里确实是权限问题,我们还需要执行以下命令修改$HADOOP_HOME/bin/container-executor权限:

chown root:hadoop $HADOOP_HOME/bin/container-executor

chmod 6050 $HADOOP_HOME/bin/container-executor

然后再启动nodemanager节点,又出现下面问题:

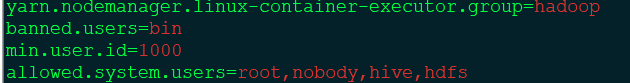

3)Caused by: ExitCodeException exitCode=24: Can't get configured value for yarn.nodemanager.linux-container-executor.group

vim /export/server/hadoop-2.7.6/etc/hadoop/container-executor.cfg

然后再启动nodemanager节点:cd /export/hadoop/sbin && ./yarn-daemon.sh start nodemanager,发现已经成功启动了。
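Step 1 requires checking every directory level by hand. A small sketch of that check in Python follows: it walks from a path up to the filesystem root and lists every level whose owner is not the expected uid (0 for root). The function name is hypothetical, and the sketch only reports ownership; it does not check the permission bits that container-executor also verifies.

```python
import os


def paths_not_owned_by(path, uid=0):
    """Return `path` and each ancestor directory whose owner differs from `uid`."""
    current = os.path.abspath(path)
    offenders = []
    while True:
        if os.stat(current).st_uid != uid:
            offenders.append(current)
        parent = os.path.dirname(current)
        if parent == current:  # reached the filesystem root
            break
        current = parent
    return offenders


if __name__ == "__main__":
    cfg = "/export/server/hadoop-2.7.6/etc/hadoop/container-executor.cfg"
    if os.path.exists(cfg):
        for offender in paths_not_owned_by(cfg):
            print("needs chown root:", offender)
```

Each path printed is one level that still needs chown root before the NodeManager will accept the configuration file.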

11. ulimit -a for user hdfs

Analysis: the DataNode failed to start after a restart. The file /export/server/hadoop_home/logs/hadoop-hdfs-datanode-v0106-c0a80049.4eb06.c.local.out contained only "ulimit -a for user hdfs". The file /export/server/hadoop_home/logs/hadoop-hdfs-datanode-v0106-c0a80049.4eb06.c.local.log showed "Address already in use;", which means that port 50070 was occupied.

Fix: netstat -anlp | grep 50070 printed:

tcp 0 0 192.168.0.73:50070 192.168.0.73:50070 ESTABLISHED 2931329/python2

kill -9 2931329, then restart the DataNode.
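Whether a port is free can also be checked before restarting the daemon. The sketch below tries to bind a throwaway socket to the address and treats a failure as "in use". The function name is hypothetical. Note that bind can also fail for other reasons, such as missing privileges on ports below 1024, so a True result is a hint to investigate with netstat rather than proof.

```python
import socket


def port_in_use(host, port):
    """Return True if binding to (host, port) fails, i.e. something holds it."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as probe:
        try:
            probe.bind((host, port))
        except OSError:
            # Typically EADDRINUSE; other bind errors are reported the same way.
            return True
    return False


if __name__ == "__main__":
    print("50070 in use:", port_in_use("0.0.0.0", 50070))
```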

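12. Caused by: MetaException(message:Version information not found in metastore. )

Description: this appeared after starting the metastore with nohup hive --service metastore &. hive-site.xml already had hive.metastore.schema.verification=false, so that setting was not the problem.

Fix: su - hdfs

vim ~/.bashrc and add the following environment variables (PATH keeps its existing entries so that system commands remain available):

export HIVE_CONF_DIR=/export/common/hive/conf

export HIVE_HOME=/export/hive

export PATH=".:$HIVE_HOME/bin:$HIVE_HOME/sbin:$PATH"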

13. Caused by: java.lang.IllegalStateException: Cannot skip to less than the current value (=492685), where newValue=16385.

Description: after running for a while, the standby NameNode died and failed on startup with the error above. I formatted that NameNode and restarted it. It came up, but every DataNode went down with: java.io.IOException: Incompatible clusterIDs in /home/hduser/mydata/hdfs/datanode: namenode clusterID = CID-8e09ff25-80fb-4834-878b-f23b3deb62d0; datanode clusterID = CID-cd85e59a-ed4a-4516-b2ef-67e213cfa2a1. I then edited /export/data/hadoop/namenode/current/VERSION with vim and set clusterID to the DataNode's value. The next NameNode start failed with: got premature end-of-file at txid 0; expected file to go up to 4. I then ran hdfs namenode -bootstrapStandby.

14. This node has namespaceId '279103593 and clusterId 'xxx' but the requesting node expected '279103593' and 'xxx'.

Fix: this appeared after fixing problem 13. Edit /export/data/hadoop/journal/<cluster name>/current/VERSION with vim and change clusterID to match the NameNode's value.

15. FAILED: SemanticException org.apache.hadoop.hive.ql.metadata.HiveException: java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient.

Description: after starting hive and entering the Hive CLI, running a Hive SQL statement failed with the error above. Starting the CLI with hive --hiveconf hive.root.logger=DEBUG,console showed the following in the debug log: Caused by: org.ietf.jgss.GSSException: No valid credentials provided (Mechanism level: Server not found in Kerberos database (7) - LOOKING_UP_SERVER). Caused by: org.apache.hadoop.hive.metastore.api.MetaException: Could not connect to meta store using any of the URIs provided. Most recent failure: org.apache.thrift.transport.TTransportException: GSS initiate failed.

Fix: 1) I first emptied (or dropped) the metastore database tables and re-initialized the Hive schema with /export/hive/bin/schematool -dbType mysql -initSchema. This did not help. (It is not the root cause and can be skipped.)

2) The logs showed that the client could not connect to the metastore. In hive-site.xml, hive.metastore.uris was set to ip:9083, but Kerberos authenticates against hostnames, so replacing the IP with the hostname fixed the problem. Example configuration:

<property>

<name>hive.metastore.uris</name>

<value>thrift://v0106-c0a800cb.4eb06.c.local:9083,thrift://v0106-c0a800b3.4eb06.c.local:9083</value>

</property>
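Since an IP address in hive.metastore.uris breaks Kerberos authentication without any obvious hint, the value is worth checking automatically. A minimal sketch, with a hypothetical function name, flags every URI whose host part is an IP literal:

```python
import ipaddress
from urllib.parse import urlparse


def uris_with_ip_hosts(uris_value):
    """Return the URIs in a comma-separated list whose host is an IP address."""
    flagged = []
    for uri in uris_value.split(","):
        uri = uri.strip()
        host = urlparse(uri).hostname
        if not host:
            continue
        try:
            ipaddress.ip_address(host)
        except ValueError:
            continue  # not an IP literal, so it is a hostname
        flagged.append(uri)
    return flagged
```

Passing the value of hive.metastore.uris to this function should return an empty list on a correctly configured cluster; anything returned needs to be rewritten with the host's fully qualified name.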

16. WARN hdfs.DFSClient DataStreamer Exception java.lang.IllegalArgumentException: null at javax.security.auth.callback.NameCallback.<init>(NameCallback.java:90)...

Description: running "hdfs dfs -put test.txt /tmp" as the hdfs user failed with the error above.

Fix: add the following to hdfs-site.xml:

<property>

<name>dfs.block.access.token.enable</name>

<value>true</value>

</property>

17. Failed to become active master.org.apache.hadoop.security.AccessControlException: Permission denied. user=hbase is not the owner of inode=/hbase.

Description: the HMaster reports the error above at startup while trying to become the active master.

Fix: add the following to hbase-site.xml:

<property>

<name>hbase.rootdir.perms</name>

<value>777</value>

</property>

<property>

<name>hbase.wal.dir.perms</name>

<value>777</value>

</property>
