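I kept hearing people talk about Hadoop, and it always seemed rather mysterious. After reading an introduction I learned that it is a framework for distributed computing. Distributed systems and big data are both hot topics right now, so I could not resist joining in.

First, my environment: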

test1@test1-virtual:~/Downloads$ uname -a

Linux test1-virtual 3.11.0-17-generic #31~precise1-Ubuntu SMP Tue Feb 4 21:29:23 UTC 2014 i686 i686 i386 GNU/Linux

Preparation: Hadoop is an Apache project, so it naturally depends on Java. You need a JDK installed, whether OpenJDK or Oracle JDK.

For details, see another article of mine: http://my.oschina.net/robinsonlu/blog/170365

Now, on to the main topic.

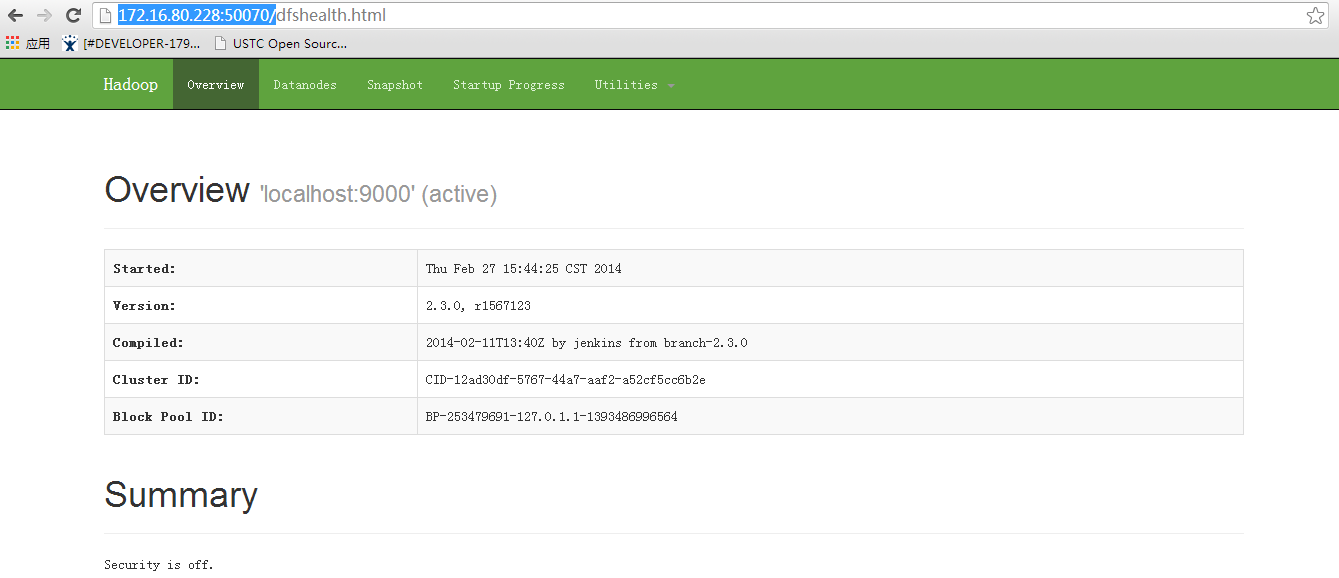

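1. Download Hadoop from the official site. I chose hadoop-2.3.0, the latest release at the time of writing. If you use another version, check how it differs; since this is a test environment, the latest release is the obvious choice.

Extract the archive and copy it to the target location.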

test1@test1-virtual:~/Downloads$ tar -xvf hadoop-2.3.0.tar.gz

test1@test1-virtual:~/Downloads$ sudo cp -r hadoop-2.3.0 /usr/local/hadoop/
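One detail about the copy above: the result of `cp -r` depends on whether /usr/local/hadoop already exists. If it does, the tree ends up in /usr/local/hadoop/hadoop-2.3.0 instead. A minimal sketch that sidesteps this by extracting straight into the target, assuming a tar that supports `--strip-components` (GNU tar does); `install_tarball` is a hypothetical helper name, not part of Hadoop:

```shell
# install_tarball ARCHIVE TARGET_DIR
# Unpack a gzip-compressed ARCHIVE so that the contents of its single
# top-level directory land directly in TARGET_DIR.
install_tarball() {
    archive=$1
    target=$2
    mkdir -p "$target" || return 1
    # --strip-components=1 drops the leading "hadoop-2.3.0/" path element.
    tar -xzf "$archive" -C "$target" --strip-components=1
}

# Usage (run with sudo if the target is not writable by your user):
#   install_tarball hadoop-2.3.0.tar.gz /usr/local/hadoop
```

With this layout the configuration directory is /usr/local/hadoop/etc/hadoop, which is the path used in the rest of this post.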

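2. Why do I bring up the version? Because the location of the configuration files differs considerably between earlier versions and the current one.

In the current version, the configuration files are located in: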

test1@test1-virtual:/usr/local/hadoop/etc/hadoop$ ls -al

total 128

drwxr-xr-x 2 test1 test1 4096 Feb 27 15:09 .

drwxr-xr-x 3 test1 test1 4096 Feb 27 15:09 ..

-rw-r--r-- 1 test1 test1 3589 Feb 27 15:09 capacity-scheduler.xml

-rw-r--r-- 1 test1 test1 1335 Feb 27 15:09 configuration.xsl

-rw-r--r-- 1 test1 test1 318 Feb 27 15:09 container-executor.cfg

-rw-r--r-- 1 test1 test1 860 Feb 27 15:23 core-site.xml

-rw-r--r-- 1 test1 test1 3589 Feb 27 15:09 hadoop-env.cmd

-rw-r--r-- 1 test1 test1 3402 Feb 27 15:42 hadoop-env.sh

-rw-r--r-- 1 test1 test1 1774 Feb 27 15:09 hadoop-metrics2.properties

-rw-r--r-- 1 test1 test1 2490 Feb 27 15:09 hadoop-metrics.properties

-rw-r--r-- 1 test1 test1 9257 Feb 27 15:09 hadoop-policy.xml

-rw-r--r-- 1 test1 test1 984 Feb 27 15:27 hdfs-site.xml

-rw-r--r-- 1 test1 test1 1449 Feb 27 15:09 httpfs-env.sh

-rw-r--r-- 1 test1 test1 1657 Feb 27 15:09 httpfs-log4j.properties

-rw-r--r-- 1 test1 test1 21 Feb 27 15:09 httpfs-signature.secret

-rw-r--r-- 1 test1 test1 620 Feb 27 15:09 httpfs-site.xml

-rw-r--r-- 1 test1 test1 11169 Feb 27 15:09 log4j.properties

-rw-r--r-- 1 test1 test1 918 Feb 27 15:09 mapred-env.cmd

-rw-r--r-- 1 test1 test1 1383 Feb 27 15:09 mapred-env.sh

-rw-r--r-- 1 test1 test1 4113 Feb 27 15:09 mapred-queues.xml.template

-rw-r--r-- 1 test1 test1 758 Feb 27 15:09 mapred-site.xml.template

-rw-r--r-- 1 test1 test1 10 Feb 27 15:09 slaves

-rw-r--r-- 1 test1 test1 2316 Feb 27 15:09 ssl-client.xml.example

-rw-r--r-- 1 test1 test1 2268 Feb 27 15:09 ssl-server.xml.example

-rw-r--r-- 1 test1 test1 2178 Feb 27 15:09 yarn-env.cmd

-rw-r--r-- 1 test1 test1 4084 Feb 27 15:09 yarn-env.sh

-rw-r--r-- 1 test1 test1 772 Feb 27 15:30 yarn-site.xml

The configuration files we need to modify are:

hadoop-env.sh: find JAVA_HOME and change it as follows.

# The java implementation to use.

export JAVA_HOME=/usr/lib/jvm/jdk1.7.0_45
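The path above is specific to my machine. If you are unsure where your JDK lives, it can usually be derived from the resolved location of the `java` binary. A small sketch, assuming the usual `<JDK root>/bin/java` or `<JDK root>/jre/bin/java` layout; `java_home_from_binary` is a hypothetical helper name:

```shell
# java_home_from_binary PATH_TO_JAVA
# Print the JDK root for a resolved java binary path by stripping the
# trailing /bin/java and, if present, a trailing /jre component.
java_home_from_binary() {
    home=${1%/bin/java}
    home=${home%/jre}
    printf '%s\n' "$home"
}

# Usage on a live system (readlink -f is from GNU coreutils):
#   java_home_from_binary "$(readlink -f "$(command -v java)")"
```

The printed directory is what goes after `export JAVA_HOME=` in hadoop-env.sh.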

core-site.xml

test1@test1-virtual:/usr/local/hadoop/etc/hadoop$ cat core-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://127.0.0.1:9000</value>

</property>

</configuration>
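To double-check what a site file actually sets, a property can be pulled out with a few lines of awk. This is a line-based sketch rather than a real XML parser: it assumes each `<name>` and `<value>` element sits on its own single line, as in the file above, and `get_property` is a hypothetical helper name:

```shell
# get_property FILE NAME
# Print the <value> that follows <name>NAME</name> in a Hadoop *-site.xml.
get_property() {
    awk -v want="$2" '
        /<name>/ {
            n = $0
            sub(/.*<name>/, "", n); sub(/<\/name>.*/, "", n)
            found = (n == want)
        }
        /<value>/ && found {
            v = $0
            sub(/.*<value>/, "", v); sub(/<\/value>.*/, "", v)
            print v
            exit
        }
    ' "$1"
}

# Usage:
#   get_property /usr/local/hadoop/etc/hadoop/core-site.xml fs.defaultFS
```

On a working installation, `hdfs getconf -confKey fs.defaultFS` reports the value Hadoop itself resolves, which is the more reliable check once the daemons can start.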

hdfs-site.xml

test1@test1-virtual:/usr/local/hadoop/etc/hadoop$ cat hdfs-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/usr/local/hadoop/dfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/usr/local/hadoop/dfs/data</value>

</property>

</configuration>
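Both values above are `file:` URIs pointing at local directories. Hadoop normally creates them itself when their parent is writable, so creating them beforehand is optional; doing so mainly surfaces permission problems early. A sketch, with `make_local_dir` as a hypothetical helper name:

```shell
# make_local_dir URI
# Create the local directory named by a "file:/path" style value, as used
# for dfs.namenode.name.dir and dfs.datanode.data.dir, and print its path.
make_local_dir() {
    path=${1#file:}
    mkdir -p "$path" && printf '%s\n' "$path"
}

# Usage:
#   make_local_dir file:/usr/local/hadoop/dfs/name
#   make_local_dir file:/usr/local/hadoop/dfs/data
```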

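yarn-site.xml (this file is new in Hadoop 2.x and holds the YARN settings. Note that mapred-site.xml has not gone away: it ships as mapred-site.xml.template, and mapreduce.framework.name is normally set there rather than here.)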

test1@test1-virtual:/usr/local/hadoop/etc/hadoop$ cat yarn-site.xml

<?xml version="1.0"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<configuration>

<!-- Site specific YARN configuration properties -->

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>
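In the stock Hadoop 2.x layout, `mapreduce.framework.name` is a MapReduce setting and is read from mapred-site.xml, which ships only as mapred-site.xml.template (see the directory listing earlier), while yarn-site.xml is meant for YARN properties such as `yarn.nodemanager.aux-services`. If you intend to run MapReduce jobs on YARN, a sketch like the following writes the property where MapReduce looks for it; `write_mapred_site` is a hypothetical helper name, and the file content is a minimal assumption rather than a complete configuration:

```shell
# write_mapred_site CONF_DIR
# Write a minimal mapred-site.xml that selects YARN as the MapReduce
# framework. Overwrites any existing mapred-site.xml in CONF_DIR.
write_mapred_site() {
    cat > "$1/mapred-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
</configuration>
EOF
}

# Usage:
#   write_mapred_site /usr/local/hadoop/etc/hadoop
```

HDFS itself starts without this file, so the steps below work either way.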

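3. Change the owner of the Hadoop directory. This step is required: the start scripts ask for the password of the user who launches them, and so far the files have been handled as root (via sudo), which will not work.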

test1@test1-virtual:/usr/local/hadoop/etc/hadoop$ sudo chown -R test1:test1 /usr/local/hadoop/
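A quick way to confirm that the ownership change covered the whole tree is to list anything still owned by someone else. A sketch, with `not_owned_by_me` as a hypothetical helper name:

```shell
# not_owned_by_me DIR
# List entries under DIR whose owner is not the current user.
# No output means every file and directory belongs to you.
not_owned_by_me() {
    find "$1" ! -user "$(id -u)"
}

# Usage:
#   not_owned_by_me /usr/local/hadoop
```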

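4. The basic configuration is now complete, so we can start things up and see how it looks.

Go to the bin directory under the Hadoop home directory and format the namenode.

test1@test1-virtual:/usr/local/hadoop/bin$ ./hadoop namenode -format

In sbin, start the namenode and datanode.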

test1@test1-virtual:/usr/local/hadoop/sbin$ ./hadoop-daemon.sh start namenode

test1@test1-virtual:/usr/local/hadoop/sbin$ ./hadoop-daemon.sh start datanode

Start Hadoop.

test1@test1-virtual:/usr/local/hadoop/sbin$ ./start-all.sh

To see the result, visit http://172.16.80.228:50070/ (the NameNode web page).
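Besides the web page, the JDK's `jps` tool lists the running Java processes, which makes it easy to see whether every daemon came up. The sketch below reads `jps` output and prints the daemons that are missing; the list of five names is what `start-all.sh` is expected to launch on a single-node Hadoop 2.x setup, and `missing_daemons` is a hypothetical helper name:

```shell
# missing_daemons
# Read `jps` output ("PID ClassName" per line) on stdin and print each
# expected Hadoop daemon that does not appear in it.
missing_daemons() {
    running=$(awk '{ print $2 }')
    for d in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
        # -x matches whole lines, so NameNode does not match SecondaryNameNode.
        printf '%s\n' "$running" | grep -qx "$d" || printf '%s\n' "$d"
    done
}

# Usage:
#   jps | missing_daemons
```

If a name is printed, the matching log file under the logs directory of the Hadoop installation is the first place to look.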

What gets started should also be shut down:

test1@test1-virtual:/usr/local/hadoop/sbin$ ./stop-all.sh
